APPENDIX A

Answers to Sample Questions

Chapter 1—Security Management Practices

- Which choice below most accurately reflects the goals of risk mitigation?

- Defining the acceptable level of risk the organization can tolerate, and reducing risk to that level

- Analyzing and removing all vulnerabilities and threats to security within the organization

- Defining the acceptable level of risk the organization can tolerate, and assigning any costs associated with loss or disruption to a third party, such as an insurance carrier

- Analyzing the effects of a business disruption and preparing the company's response

Answer: a

The correct answer is a. The goal of risk mitigation is to reduce risk to a level acceptable to the organization. Therefore, risk needs to be defined for the organization through risk analysis, business impact assessment, and/or vulnerability assessment.

Answer b is not possible. Answer c is called risk transference. Answer d is a distracter.

- Which answer below is the BEST description of a Single Loss Expectancy (SLE)?

- An algorithm that represents the magnitude of a loss to an asset from a threat

- An algorithm that expresses the annual frequency with which a threat is expected to occur

- An algorithm used to determine the monetary impact of each occurrence of a threat

- An algorithm that determines the expected annual loss to an organization from a threat

Answer: c

The correct answer is c. The Single Loss Expectancy (or Exposure) figure may be created as a result of a Business Impact Assessment (BIA). The SLE represents only the estimated monetary loss of a single occurrence of a specified threat event. The SLE is determined by multiplying the value of the asset by its exposure factor. This gives the expected loss the threat will cause for one occurrence.

Answer a describes the Exposure Factor (EF). The EF is expressed as the percentage of the asset's value or functionality expected to be lost due to the realized threat event. This figure is used to calculate the SLE.

Answer b describes the Annualized Rate of Occurrence (ARO). This is an estimate of how often a given threat event may occur annually. For example, a threat expected to occur weekly would have an ARO of 52. A threat expected to occur once every five years has an ARO of 1/5, or 0.2. This figure is used to determine the ALE.

Answer d describes the Annualized Loss Expectancy (ALE). The ALE is derived by multiplying the SLE by its ARO. This value represents the expected risk factor of an annual threat event. This figure is then integrated into the risk management process.
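The relationships among these four quantities can be sketched as a short calculation. The asset value and exposure factor below are hypothetical figures chosen only for illustration, and the function names are assumptions of this sketch; the two ARO values are the ones used in the example above.

```python
def single_loss_expectancy(asset_value: float, exposure_factor: float) -> float:
    """SLE = asset value x exposure factor (EF given as a fraction from 0 to 1)."""
    if not 0.0 <= exposure_factor <= 1.0:
        raise ValueError("exposure factor must be between 0 and 1")
    return asset_value * exposure_factor


def annualized_loss_expectancy(sle: float, aro: float) -> float:
    """ALE = SLE x annualized rate of occurrence (ARO)."""
    return sle * aro


# Hypothetical asset: worth $200,000, losing 25% of its value per occurrence.
sle = single_loss_expectancy(200_000, 0.25)

# ARO values from the text: weekly (52) and once every five years (1/5).
ale_weekly = annualized_loss_expectancy(sle, 52)
ale_five_years = annualized_loss_expectancy(sle, 1 / 5)

print(f"SLE: ${sle:,.0f}")
print(f"ALE at ARO 52: ${ale_weekly:,.0f}")
print(f"ALE at ARO 0.2: ${ale_five_years:,.0f}")
```

The same single-occurrence loss produces very different annual figures depending on the ARO, which is why both the SLE and the ARO are needed before the ALE can be fed into the risk management process.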

- Which choice below is the BEST description of an Annualized Loss Expectancy (ALE)?

- The expected risk factor of an annual threat event, derived by multiplying the SLE by its ARO

- An estimate of how often a given threat event may occur annually

- The percentage of the value of the asset expected to be lost, used to calculate the SLE

- A value determined by multiplying the value of the asset by its exposure factor

Answer: a

Answer b describes the Annualized Rate of Occurrence (ARO).

Answer c describes the Exposure Factor (EF).

Answer d describes the algorithm to determine the Single Loss Expectancy (SLE) of a threat.

- Which choice below is NOT an example of appropriate security management practice?

- Reviewing access logs for unauthorized behavior

- Monitoring employee performance in the workplace

- Researching information on new intrusion exploits

- Promoting and implementing security awareness programs

Answer: b

Monitoring employee performance is not an example of security management, or a job function of the Information Security Officer. Employee performance issues are the domain of human resources and the employee's manager. The other three choices are appropriate practice for the information security area.

- Which choice below is an accurate statement about standards?

- Standards are the high-level statements made by senior management in support of information systems security.

- Standards are the first element created in an effective security policy program.

- Standards are used to describe how policies will be implemented within an organization.

- Standards are senior management's directives to create a computer security program.

Answer: c

Answers a, b, and d describe policies. Guidelines, standards, and procedures often accompany policy, but always follow the senior level management's statement of policy. Procedures, standards, and guidelines are used to describe how these policies will be implemented within an organization. Simply put, the three break down as follows:

- Which choice below is a role of the Information Systems Security Officer?

- The ISO establishes the overall goals of the organization's computer security program.

- The ISO is responsible for day-to-day security administration.

- The ISO is responsible for examining systems to see whether they are meeting stated security requirements.

- The ISO is responsible for following security procedures and reporting security problems.

Answer: b

Answer a is a responsibility of senior management. Answer c is a description of the role of auditing. Answer d is the role of the user, or consumer, of security in an organization.

- Which statement below is NOT true about security awareness, training, and educational programs?

- Awareness and training help users become more accountable for their actions.

- Security education assists management in determining who should be promoted.

- Security improves the users' awareness of the need to protect information resources.

- Security education assists management in developing the in-house expertise to manage security programs.

Answer: b

The purpose of computer security awareness, training, and education is to enhance security by:

- Improving awareness of the need to protect system resources

- Developing skills and knowledge so computer users can perform their jobs more securely

- Building in-depth knowledge, as needed, to design, implement, or operate security programs for organizations and systems

Making computer system users aware of their security responsibilities and teaching them correct practices helps users change their behavior. It also supports individual accountability, because without knowledge of the necessary security measures and how to use them, users cannot be truly accountable for their actions. Source: National Institute of Standards and Technology, An Introduction to Computer Security: The NIST Handbook Special Publication 800-12.

- Which choice below is NOT an accurate description of an information policy?

- Information policy is senior management's directive to create a computer security program.

- An information policy could be a decision pertaining to use of the organization's fax.

- Information policy is a documentation of computer security decisions.

- Information policies are created after the system's infrastructure has been designed and built.

Answer: d

Computer security policy is often defined as the “documentation of computer security decisions.” The term “policy” has more than one meaning. Policy is senior management's directives to create a computer security program, establish its goals, and assign responsibilities. The term “policy” is also used to refer to the specific security rules for particular systems. Additionally, policy may refer to entirely different matters, such as the specific managerial decisions setting an organization's e-mail privacy policy or fax security policy.

A security policy is an important document to develop while designing an information system, early in the System Development Life Cycle (SDLC). The security policy begins with the organization's basic commitment to information security formulated as a general policy statement. The policy is then applied to all aspects of the system design or security solution. Source: NIST Special Publication 800–27, Engineering Principles for Information Technology Security (A Baseline for Achieving Security).

- Which choice below MOST accurately describes the organization's responsibilities during an unfriendly termination?

- System access should be removed as quickly as possible after termination.

- The employee should be given time to remove whatever files he needs from the network.

- Cryptographic keys can remain the employee's property.

- Physical removal from the offices would never be necessary.

Answer: a

Friendly terminations should be accomplished by implementing a standard set of procedures for outgoing or transferring employees. This normally includes:

- Removal of access privileges, computer accounts, authentication tokens.

- The control of keys.

- The briefing on the continuing responsibilities for confidentiality and privacy.

- Return of property.

- Continued availability of data. In both the manual and the electronic worlds this may involve documenting procedures or filing schemes, such as how documents are stored on the hard disk, and how they are backed up. Employees should be instructed whether or not to “clean up” their PC before leaving.

- If cryptography is used to protect data, the availability of cryptographic keys to management personnel must be ensured.

Given the potential for adverse consequences during an unfriendly termination, organizations should do the following:

- System access should be terminated as quickly as possible when an employee is leaving a position under less-than-friendly terms. If employees are to be fired, system access should be removed at the same time (or just before) the employees are notified of their dismissal.

- When an employee notifies an organization of the resignation and it can be reasonably expected that it is on unfriendly terms, system access should be immediately terminated.

- During the “notice of termination” period, it may be necessary to assign the individual to a restricted area and function. This may be particularly true for employees capable of changing programs or modifying the system or applications.

- In some cases, physical removal from the offices may be necessary.

Source: NIST Special Publication 800-14 Generally Accepted Principles and Practices for Securing Information Technology Systems.

- Which choice below is NOT an example of an issue-specific policy?

- E-mail privacy policy

- Virus-checking disk policy

- Defined router ACLs

- Unfriendly employee termination policy

Answer: c

Answer c is an example of a system-specific policy, in this case the router's access control lists. The other three answers are examples of issue-specific policy, as defined by NIST. Issue-specific policies are similar to program policies, in that they are not technically focused. While program policy is traditionally more general and strategic (the organization's computer security program, for example), issue-specific policy is a nontechnical policy addressing a single or specific issue of concern to the organization, such as the procedural guidelines for checking disks brought to work or e-mail privacy concerns. System-specific policy is technically focused and addresses only one computer system or device type. Source: National Institute of Standards and Technology, An Introduction to Computer Security: The NIST Handbook Special Publication 800-12.

- Who has the final responsibility for the preservation of the organization's information?

- Technology providers

- Senior management

- Users

- Application owners

Answer: b

Various officials and organizational offices are typically involved with computer security. They include the following groups:

- Senior management

- Program/functional managers/application owners

- Computer security management

- Technology providers

- Supporting organizations

- Users

Senior management has the final responsibility through due care and due diligence to preserve the capital of the organization and further its business model through the implementation of a security program. While senior management does not have the functional role of managing security procedures, it has the ultimate responsibility to see that business continuity is preserved.

- Which choice below is NOT a generally accepted benefit of security awareness, training, and education?

- A security awareness program can help operators understand the value of the information.

- A security education program can help system administrators recognize unauthorized intrusion attempts.

- A security awareness and training program will help prevent natural disasters from occurring.

- A security awareness and training program can help an organization reduce the number and severity of errors and omissions.

Answer: c

An effective computer security awareness and training program requires proper planning, implementation, maintenance, and periodic evaluation.

In general, a computer security awareness and training program should encompass the following seven steps:

- Identify program scope, goals, and objectives.

- Identify training staff.

- Identify target audiences.

- Motivate management and employees.

- Administer the program.

- Maintain the program.

- Evaluate the program.

Source: NIST Special Publication 800-14, Generally Accepted Principles and Practices for Securing Information Technology Systems.

- Which choice below is NOT a common information-gathering technique when performing a risk analysis?

- Distributing a questionnaire

- Employing automated risk assessment tools

- Reviewing existing policy documents

- Interviewing terminated employees

Answer: d

Any combination of the following techniques can be used in gathering information relevant to the IT system within its operational boundary:

Questionnaire. The questionnaire should be distributed to the applicable technical and nontechnical management personnel who are designing or supporting the IT system.

On-site Interviews. On-site visits also allow risk assessment personnel to observe and gather information about the physical, environmental, and operational security of the IT system.

Document Review. Policy documents, system documentation, and security-related documentation can provide good information about the security controls used by and planned for the IT system.

Use of Automated Scanning Tools. Proactive technical methods can be used to collect system information efficiently.

Source: NIST Special Publication 800-30, Risk Management Guide for Information Technology Systems.

- Which choice below is an incorrect description of a control?

- Detective controls discover attacks and trigger preventative or corrective controls.

- Corrective controls reduce the likelihood of a deliberate attack.

- Corrective controls reduce the effect of an attack.

- Controls are the countermeasures for vulnerabilities.

Answer: b

Controls are the countermeasures for vulnerabilities. There are many kinds, but generally they are categorized into four types:

- Deterrent controls reduce the likelihood of a deliberate attack.

- Preventative controls protect vulnerabilities and make an attack unsuccessful or reduce its impact. Preventative controls inhibit attempts to violate security policy.

- Corrective controls reduce the effect of an attack.

- Detective controls discover attacks and trigger preventative or corrective controls. Detective controls warn of violations or attempted violations of security policy and include such controls as audit trails, intrusion detection methods, and checksums.

Source: Introduction to Risk Analysis, C & A Security Risk Analysis Group and NIST Special Publication 800–30, Risk Management Guide for Information Technology Systems.

- Which statement below is accurate about the reasons to implement a layered security architecture?

- A layered security approach is not necessary when using COTS products.

- A good packet-filtering router will eliminate the need to implement a layered security architecture.

- A layered security approach is intended to increase the work-factor for an attacker.

- A layered approach doesn't really improve the security posture of the organization.

Answer: c

Security designs should consider a layered approach to address or protect against a specific threat or to reduce a vulnerability. For example, the use of a packet-filtering router in conjunction with an application gateway and an intrusion detection system combines to increase the work-factor an attacker must expend to successfully attack the system. The need for layered protections is important when commercial-off-the-shelf (COTS) products are used. The current state of the art for security quality in COTS products does not provide a high degree of protection against sophisticated attacks. It is possible to help mitigate this situation by placing several controls in levels, requiring additional work by attackers to accomplish their goals.

Source: NIST Special Publication 800-27, Engineering Principles for Information Technology Security (A Baseline for Achieving Security).

- Which choice below represents an application or system demonstrating a need for a high level of confidentiality protection and controls?

- Unavailability of the system could result in inability to meet payroll obligations and could cause work stoppage and failure of user organizations to meet critical mission requirements. The system requires 24-hour access.

- The application contains proprietary business information and other financial information, which if disclosed to unauthorized sources, could cause an unfair advantage for vendors, contractors, or individuals and could result in financial loss or adverse legal action to user organizations.

- Destruction of the information would require significant expenditures of time and effort to replace. Although corrupted information would present an inconvenience to the staff, most information, and all vital information, is backed up by either paper documentation or on disk.

- The mission of this system is to produce local weather forecast information that is made available to the news media forecasters and the general public at all times. None of the information requires protection against disclosure.

Answer: b

Although elements of all of the systems described could require specific controls for confidentiality, system b, as described above, most closely fits the definition of a system requiring a very high level of confidentiality. Answer a is an example of a system requiring high availability. Answer c is an example of a system that requires medium integrity controls. Answer d is a system that requires only a low level of confidentiality.

A system may need protection for one or more of the following reasons:

Confidentiality. The system contains information that requires protection from unauthorized disclosure.

Integrity. The system contains information that must be protected from unauthorized, unanticipated, or unintentional modification.

Availability. The system contains information or provides services which must be available on a timely basis to meet mission requirements or to avoid substantial losses.

Source: NIST Special Publication 800-18, Guide for Developing Security Plans for Information Technology Systems.

- Which choice below is an accurate statement about the difference between monitoring and auditing?

- Monitoring is a one-time event to evaluate security.

- A system audit is an ongoing “real-time” activity that examines a system.

- A system audit cannot be automated.

- Monitoring is an ongoing activity that examines either the system or the users.

Answer: d

System audits and monitoring are the two methods organizations use to maintain operational assurance. Although the terms are used loosely within the computer security community, a system audit is a one-time or periodic event to evaluate security, whereas monitoring refers to an ongoing activity that examines either the system or the users. In general, the more “real-time” an activity is, the more it falls into the category of monitoring. Source: NIST Special Publication 800–14, Generally Accepted Principles and Practices for Securing Information Technology Systems.

- Which statement below is accurate about the difference between issue-specific and system-specific policies?

- Issue-specific policy is much more technically focused.

- System-specific policy is much more technically focused.

- System-specific policy is similar to program policy.

- Issue-specific policy commonly addresses only one system.

Answer: b

Often, managerial computer system security policies are categorized into three basic types:

- Program policy—used to create an organization's computer security program

- Issue-specific policies—used to address specific issues of concern to the organization

- System-specific policies—technical directives taken by management to protect a particular system

Program policy and issue-specific policy both address policy from a broad level, usually encompassing the entire organization. However, they do not provide sufficient information or direction, for example, to be used in establishing an access control list or in training users on what actions are permitted. System-specific policy fills this need. System-specific policy is much more focused, since it addresses only one system.

Table A.1 helps illustrate the difference between these three types of policies. Source: National Institute of Standards and Technology, An Introduction to Computer Security: The NIST Handbook Special Publication 800-12.

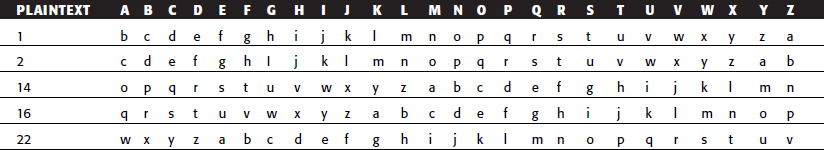

Table A.1 Security Policy Types

- Which statement below most accurately describes the difference between security awareness, security training, and security education?

- Security training teaches the skills that will help employees to perform their jobs more securely.

- Security education is required for all system operators.

- Security awareness is not necessary for high-level senior executives.

- Security training is more in depth than security education.

Answer: a

Awareness is used to reinforce the fact that security supports the mission of the organization by protecting valuable resources. The purpose of training is to teach people the skills that will enable them to perform their jobs more securely. Security education is more in depth than security training and is targeted for security professionals and those whose jobs require expertise in security. Management commitment is necessary because of the resources used in developing and implementing the program and also because the program affects their staff. Source: National Institute of Standards and Technology, An Introduction to Computer Security: The NIST Handbook Special Publication 800–12.

- Which choice below BEST describes the difference between the System Owner and the Information Owner?

- There is a one-to-one relationship between system owners and information owners.

- One system could have multiple information owners.

- The Information Owner is responsible for defining the system's operating parameters.

- The System Owner is responsible for establishing the rules for appropriate use of the information.

Answer: b

The System Owner is responsible for ensuring that the security plan is prepared and for implementing the plan and monitoring its effectiveness. The System Owner is responsible for defining the system's operating parameters, authorized functions, and security requirements. The information owner for information stored within, processed by, or transmitted by a system may or may not be the same as the System Owner. Also, a single system may utilize information from multiple Information Owners.

The Information Owner is responsible for establishing the rules for appropriate use and protection of the subject data/information (rules of behavior). The Information Owner retains that responsibility even when the data/information are shared with other organizations. Source: NIST Special Publication 800-18, Guide for Developing Security Plans for Information Technology Systems.

- Which choice below is NOT an accurate statement about an organization's incident-handling capability?

- The organization's incident-handling capability should be used to detect and punish senior-level executive wrong-doing.

- It should be used to prevent future damage from incidents.

- It should be used to provide the ability to respond quickly and effectively to an incident.

- The organization's incident-handling capability should be used to contain and repair damage done from incidents.

Answer: a

An organization should address computer security incidents by developing an incident-handling capability. The incident-handling capability should be used to:

- Provide the ability to respond quickly and effectively.

- Contain and repair the damage from incidents. When left unchecked, malicious software can significantly harm an organization's computing, depending on the technology and its connectivity. Containing the incident should include an assessment of whether the incident is part of a targeted attack on the organization or an isolated incident.

- Prevent future damage. An incident-handling capability should assist an organization in preventing (or at least minimizing) damage from future incidents. Incidents can be studied internally to gain a better understanding of the organization's threats and vulnerabilities.

Source: NIST Special Publication 800-14, Generally Accepted Principles and Practices for Securing Information Technology Systems.

- Place the data classification scheme in order, from the least secure to the most:

- Sensitive

- Public

- Private

- Confidential

Answer: b, c, a, and d

Various formats for categorizing the sensitivity of data exist. Although originally implemented in government systems, data classification is very useful in determining the sensitivity of business information to threats to confidentiality, integrity, or availability. Often an organization would use the high, medium, or low categories. This simple classification scheme rates each system by its need for protection based upon its C.I.A. needs, and whether it requires high, medium, or low protective controls. For example, a system and its information may require a high degree of integrity and availability, yet have no need for confidentiality.

Table A.2 A Sample H/M/L Data Classification

| Category | Description |
| --- | --- |
| High | Could cause loss of life, imprisonment, major financial loss, or require legal action for correction if the information is compromised. |
| Medium | Could cause significant financial loss or require legal action for correction if the information is compromised. |
| Low | Would cause only minor financial loss or require only administrative action for correction if the information is compromised. |

Or organizations may categorize data into four sensitivity classifications with separate handling requirements, such as Sensitive, Confidential, Private, and Public.

This system would define the categories as follows:

Sensitive. This classification applies to information that requires special precautions to assure the integrity of the information, by protecting it from unauthorized modification or deletion. It is information that requires a higher-than-normal assurance of accuracy and completeness.

Confidential. This classification applies to the most sensitive business information that is intended strictly for use within the organization. Its unauthorized disclosure could seriously and adversely impact the organization, its stockholders, its business partners, and/or its customers. This information is exempt from disclosure under the provisions of the Freedom of Information Act or other applicable federal laws or regulations.

Private. This classification applies to personal information that is intended for use within the organization. Its unauthorized disclosure could seriously and adversely impact the organization and/or its employees.

Public. This classification applies to all other information that does not clearly fit into any of the preceding three classifications. While its unauthorized disclosure is against policy, it is not expected to impact seriously or adversely the organization, its employees, and/or its customers.

The designated owners of information are responsible for determining data classification levels, subject to executive management review. Table A.2 shows a sample H/M/L data classification for sensitive information. Source: NIST Special Publication 800-26, Security Self-Assessment Guide for Information Technology Systems.
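As an illustration only, the four-level scheme can be modeled as an ordered type so that labels can be compared and sorted. The numeric ranks follow the ordering given in the answer (Public, Private, Sensitive, Confidential); the class name and the rank values are assumptions made for this sketch and are not part of the NIST guidance.

```python
from enum import IntEnum


class Classification(IntEnum):
    """Hypothetical ranks; a higher value means more protection is required."""
    PUBLIC = 1
    PRIVATE = 2
    SENSITIVE = 3
    CONFIDENTIAL = 4


labels = [
    Classification.SENSITIVE,
    Classification.PUBLIC,
    Classification.PRIVATE,
    Classification.CONFIDENTIAL,
]

# Sorting by rank reproduces the least-to-most-secure ordering.
ordered = sorted(labels)
print([label.name for label in ordered])
```

Using an ordered type lets handling rules be written as comparisons, for example applying a control to everything ranked at or above `Classification.SENSITIVE`.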

- Place the five system security life-cycle phases in order:

- ____ a. Implementation phase

- ____ b. Development/acquisition phase

- ____ c. Disposal phase

- ____ d. Operation/maintenance phase

- ____ e. Initiation phase

Answer: e, b, a, d, c

Security, like other aspects of an IT system, is best managed if planned for throughout the IT system life cycle. There are many models for the IT system life cycle, but most contain five basic phases: initiation, development/acquisition, implementation, operation, and disposal.

The order of these phases is:

- Initiation phase—During the initiation phase, the need for a system is expressed and the purpose of the system is documented.

- Development/acquisition phase—During this phase, the system is designed, purchased, programmed, developed, or otherwise constructed.

- Implementation phase—During implementation, the system is tested and installed or fielded.

- Operation/maintenance phase—During this phase, the system performs its work. The system is almost always being continuously modified by the addition of hardware and software and by numerous other events.

- Disposal phase—The disposal phase of the IT system life cycle involves the disposition of information, hardware, and software.

Source: NIST Special Publication 800-14, Generally Accepted Principles and Practices for Securing Information Technology Systems.

- How often should an independent review of the security controls be performed, according to OMB Circular A-130?

Answer: b

The correct answer is b. OMB Circular A-130 requires that a review of the security controls for each major government application be performed at least every three years. For general support systems, OMB Circular A-130 requires that the security controls be reviewed by either an independent audit or a self-review. Audits can be self-administered or independent (either internal or external). The essential difference between a self-audit and an independent audit is objectivity; however, some systems may require a fully independent review. Source: Office of Management and Budget Circular A-130, revised November 30, 2000.

- Which choice below is NOT one of NIST's 33 IT security principles?

- Implement least privilege.

- Assume that external systems are insecure.

- Totally eliminate any level of risk.

- Minimize the system elements to be trusted.

Answer: c

Risk can never be totally eliminated. NIST IT security principle #4 states: “Reduce risk to an acceptable level.” The National Institute of Standards and Technology's (NIST) Information Technology Laboratory (ITL) released NIST Special Publication (SP) 800-27, “Engineering Principles for Information Technology Security (EP-ITS)” in June 2001 to assist in the secure design, development, deployment, and life-cycle of information systems. It presents 33 security principles which start at the design phase of the information system or application and continue until the system's retirement and secure disposal. Some of the other 33 principles are:

Principle 1. Establish a sound security policy as the “foundation” for design.

Principle 2. Treat security as an integral part of the overall system design.

Principle 5. Assume that external systems are insecure.

Principle 6. Identify potential trade-offs between reducing risk and increased costs and decrease in other aspects of operational effectiveness.

Principle 7. Implement layered security (ensure no single point of vulnerability).

Principle 11. Minimize the system elements to be trusted.

Principle 16. Isolate public access systems from mission critical resources (e.g., data, processes, etc.).

Principle 17. Use boundary mechanisms to separate computing systems and network infrastructures.

Principle 22. Authenticate users and processes to ensure appropriate access control decisions both within and across domains.

Principle 23. Use unique identities to ensure accountability.

Principle 24. Implement least privilege.

Source: NIST Special Publication 800-27, Engineering Principles for Information Technology Security (A Baseline for Achieving Security), and “Federal Systems Level Guidance for Securing Information Systems,” James Corrie, August 16, 2001.

- Which choice below would NOT be considered an element of proper user account management?

- Users should never be rotated out of their current duties.

- The users' accounts should be reviewed periodically.

- A process for tracking access authorizations should be implemented.

- Periodically re-screen personnel in sensitive positions.

Answer: a

Organizations should ensure effective administration of users' computer access to maintain system security, including user account management, auditing, and the timely modification or removal of access. This includes:

User Account Management. Organizations should have a process for requesting, establishing, issuing, and closing user accounts, tracking users and their respective access authorizations, and managing these functions.

Management Reviews. It is necessary to periodically review user accounts. Reviews should examine the levels of access each individual has, conformity with the concept of least privilege, whether all accounts are still active, whether management authorizations are up-to-date, and whether required training has been completed.

Detecting Unauthorized/Illegal Activities. Mechanisms besides auditing and analysis of audit trails should be used to detect unauthorized and illegal acts, such as rotating employees in sensitive positions, which could expose a scam that required an employee's presence, or periodic re-screening of personnel.

Source: NIST Special Publication 800-14, Generally Accepted Principles and Practices for Securing Information Technology Systems.

- Which statement below is NOT accurate regarding the process of risk assessment?

- The likelihood of a threat must be determined as an element of the risk assessment.

- The level of impact of a threat must be determined as an element of the risk assessment.

- Risk assessment is the first process in the risk management methodology.

- Risk assessment is the final result of the risk management methodology.

Answer: d

Risk is a function of the likelihood of a given threat-source's exercising a particular potential vulnerability, and the resulting impact of that adverse event on the organization. Risk assessment is the first process in the risk management methodology. The risk assessment process helps organizations identify appropriate controls for reducing or eliminating risk during the risk mitigation process.

To determine the likelihood of a future adverse event, threats to an IT system must be analyzed in conjunction with the potential vulnerabilities and the controls in place for the IT system. The likelihood that a potential vulnerability could be exercised by a given threat-source can be described as high, medium, or low. Impact refers to the magnitude of harm that could be caused by a threat's exploitation of a vulnerability. The determination of the level of impact produces a relative value for the IT assets and resources affected. Source: NIST Special Publication 800-30, Risk Management Guide for Information Technology Systems.
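The way likelihood and impact combine into a risk rating can be sketched in a few lines. This is a minimal illustration, not a prescribed method: the numeric weights, the thresholds, and the function name `risk_level` are assumptions chosen for the example, and an organization may well use a different scale.

```python
# Illustrative weights (assumptions for this sketch, not taken from the text).
# Likelihood is held in tenths so that all arithmetic stays in integers.
LIKELIHOOD_TENTHS = {"low": 1, "medium": 5, "high": 10}
IMPACT = {"low": 10, "medium": 50, "high": 100}


def risk_level(likelihood: str, impact: str) -> str:
    """Rate risk as the product of a likelihood weight and an impact weight."""
    score_tenths = LIKELIHOOD_TENTHS[likelihood] * IMPACT[impact]
    if score_tenths > 500:   # score above 50
        return "high"
    if score_tenths > 100:   # score above 10, up to 50
        return "medium"
    return "low"
```

With these assumed weights, only a high-likelihood, high-impact threat is rated high; a highly likely threat with low impact still rates low.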

- Which choice below is NOT an accurate statement about the visibility of IT security policy?

- The IT security policy should not be afforded high visibility.

- The IT security policy could be visible through panel discussions with guest speakers.

- The IT security policy should be afforded high visibility.

- Include the IT security policy as a regular topic at staff meetings at all levels of the organization.

Answer: a

Especially high visibility should be afforded the formal issuance of IT security policy. This is because nearly all employees at all levels will in some way be affected, major organizational resources are being addressed, and many new terms, procedures, and activities will be introduced.

Including IT security as a regular topic at staff meetings at all levels of the organization can be helpful. Also, providing visibility through such avenues as management presentations, panel discussions, guest speakers, question/answer forums, and newsletters can be beneficial.

- According to NIST, which choice below is NOT an accepted security self-testing technique?

- War Dialing

- Virus Distribution

- Password Cracking

- Virus Detection

Answer: b

Common types of self-testing techniques include:

- Network Mapping

- Vulnerability Scanning

- Penetration Testing

- Password Cracking

- Log Review

- Virus Detection

- War Dialing

Some testing techniques are predominantly human-initiated and conducted, while other tests are highly automated and require less human involvement. The staff that initiates and implements in-house security testing should have significant security and networking knowledge. These testing techniques are often combined to gain a more comprehensive assessment of the overall network security posture. For example, penetration testing almost always includes network mapping and vulnerability scanning to identify vulnerable hosts and services that may be targeted for later penetration. None of these tests by themselves will provide a complete picture of the network or its security posture. Source: NIST Special Publication 800-42, DRAFT Guideline on Network Security Testing.

- Which choice below is NOT a concern of policy development at the high level?

- Identifying the key business resources

- Identifying the type of firewalls to be used for perimeter security

- Defining roles in the organization

- Determining the capability and functionality of each role

Answer: b

Answers a, c, and d are elements of policy development at the highest level. Key business resources would have been identified during the risk assessment process. The various roles are then defined to determine the various levels of access to those resources. Answer d is the final step in the policy creation process and combines steps a and c. It determines which group gets access to each resource and what access privileges its members are assigned. Access to resources should be based on roles, not on individual identity. Source: Surviving Security: How to Integrate People, Process, and Technology by Mandy Andress (Sams Publishing, 2001).

Chapter 2—Access Control Systems and Methodology

- The concept of limiting the routes that can be taken between a workstation and a computer resource on a network is called:

- Path limitation

- An enforced path

- A security perimeter

- A trusted path

Answer: b

Individuals are authorized access to resources on a network through specific paths and the enforced path prohibits the user from accessing a resource through a different route than is authorized to that particular user. This prevents the individual from having unauthorized access to sensitive information in areas off limits to that individual. Examples of controls to implement an enforced path include establishing virtual private networks (VPNs) for specific groups within an organization, using firewalls with access control lists, restricting user menu options, and providing specific phone numbers or dedicated lines for remote access. Answer a is a distracter. Answer c, security perimeter, refers to the boundary where security controls are in effect to protect assets. This is a general definition and can apply to physical and technical (logical) access controls. In physical security, a fence may define the security perimeter. In technical access control, a security perimeter can be defined in terms of a Trusted Computing Base (TCB). A TCB is the total combination of protection mechanisms within a computer system. These mechanisms include the firmware, hardware, and software that enforce the system security policy. The security perimeter is the boundary that separates the TCB from the remainder of the system. In answer d, a trusted path is a path that exists to permit the user to access the TCB without being compromised by other processes or users.

- An important control that should be in place for external connections to a network that uses call-back schemes is:

- Breaking of a dial-up connection at the remote user's side of the line

- Call forwarding

- Call enhancement

- Breaking of a dial-up connection at the organization's computing resource side of the line

Answer: d

One attack that can be applied when call back is used for remote, dial-up connections is that the caller may not hang up. If the caller had been previously authenticated and has completed his/her session, a “live” connection into the remote network will still be maintained. Also, an unauthenticated remote user may hold the line open, acting as if call-back authentication has taken place. Thus, an active disconnect should be effected at the computing resource's side of the line. Answer a is not correct since it involves the caller hanging up. Answer b, call forwarding, is a feature that should be disabled, if possible, when used with call-back schemes. With call back, a cracker can have a call forwarded from a valid phone number to an invalid phone number during the call-back process. Answer c is a distracter.

- When logging on to a workstation, the log-on process should:

- Validate the log-on only after all input data has been supplied.

- Provide a Help mechanism that provides log-on assistance.

- Place no limits on the time allotted for log-on or on the number of unsuccessful log-on attempts.

- Not provide information on the previous successful log-on and on previous unsuccessful log-on attempts.

Answer: a

This approach is necessary to ensure that all the information required for a log-on has been submitted and to avoid providing information that would aid a cracker in trying to gain unauthorized access to the workstation or network. If a log-on attempt fails, information as to which part of the requested log-on information was incorrect should not be supplied to the user. Answer b is incorrect since a Help utility would provide help to a cracker trying to gain unauthorized access to the network. For answer c, maximum and minimum time limits should be placed on the log-on process. Also, the log-on process should limit the number of unsuccessful log-on attempts and temporarily suspend the log-on capability if that number is exceeded. One approach is to progressively increase the time interval allowed between unsuccessful log-on attempts. Answer d is incorrect since providing such information will alert an authorized user if someone has been attempting to gain unauthorized access to the network from the user's workstation.

- A group of processes that share access to the same resources is called:

- An access control list

- An access control triple

- A protection domain

- A Trusted Computing Base (TCB)

Answer: c

In answer a, an access control list (ACL) is a list denoting which users have what privileges to a particular resource. Table A.3 illustrates an ACL. The table shows the subjects or users that have access to the object, FILE X and what privileges they have with respect to that file.

For answer b, an access control triple consists of the user, program, and file with the corresponding access privileges noted for each user. The TCB, of answer d, is defined in the answers to Question 1 as the total combination of protection mechanisms within a computer system. These mechanisms include the firmware, hardware, and software that enforce the system security policy.

- What part of an access control matrix shows capabilities that one user has to multiple resources?

- Columns

- Rows

- Rows and columns

- Access control list

Answer: b

The rows of an access control matrix indicate the capabilities that users have to a number of resources. An example of a row in the access control matrix showing the capabilities of user JIM is given in Table A.4.

Answer a, columns in the access control matrix, define the access control list described in question 4. Answer c is incorrect since capabilities involve only the rows of the access control matrix. Answer d is incorrect since an ACL, again, is a column in the access control matrix.

| USER | FILE X |
| --- | --- |
| JIM | READ |
| PROGRAM Y | READ/WRITE |
| GAIL | READ/WRITE |
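The relationship between rows (capabilities) and columns (access control lists) can be made concrete with a small sketch. The FILE X entries follow the ACL table above; the FILE Y entries and the function names `capabilities` and `acl` are hypothetical, added only so that a row has more than one object to show.

```python
# Access control matrix: outer keys are subjects (rows), inner keys are
# objects (columns). FILE Y and its rights are hypothetical.
matrix = {
    "JIM": {"FILE X": {"read"}, "FILE Y": {"read", "write"}},
    "PROGRAM Y": {"FILE X": {"read", "write"}},
    "GAIL": {"FILE X": {"read", "write"}, "FILE Y": {"read"}},
}


def capabilities(subject):
    """Row view: the rights one subject holds across all objects."""
    return matrix.get(subject, {})


def acl(obj):
    """Column view: every subject that holds rights to one object."""
    return {s: rights[obj] for s, rights in matrix.items() if obj in rights}
```

Calling `capabilities("JIM")` reads across a row, while `acl("FILE X")` reads down a column and reproduces the ACL for FILE X.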

- A type of preventive/physical access control is:

- Biometrics for authentication

- Motion detectors

- Biometrics for identification

- An intrusion detection system

Answer: c

Biometrics applied to identification of an individual is a “one-to-many” search where an individual's physiological or behavioral characteristics are compared to a database of stored information. An example would be trying to match a person's fingerprints to a set in a national database of fingerprints. This search differs from the biometrics search for authentication in answer a. That search would be a “one-to-one” comparison of a person's physiological or behavioral characteristics with their corresponding entry in an authentication database. Answer b, motion detectors, is a type of detective physical control and answer d is a detective/technical control.

- In addition to accuracy, a biometric system has additional factors that determine its effectiveness. Which one of the following listed items is NOT one of these additional factors?

- Throughput rate

- Acceptability

- Corpus

- Enrollment time

Answer: c

A corpus is a biometric term that refers to collected biometric images. The corpus is stored in a database of images. Potential sources of error are the corruption of images during collection and mislabeling or other transcription problems associated with the database. Therefore, the image collection, process and storage must be performed carefully with constant checking. These images are collected during the enrollment process and thus, are critical to the correct operation of the biometric device. In enrollment, images are collected and features are extracted, but no comparison occurs. The information is stored for use in future comparison steps. Answer a, the throughput rate, refers to the rate at which individuals, once enrolled, can be processed by a biometric system. If an individual is being authenticated, the biometric system will take a sample of the individual's characteristic to be evaluated and compare it to a template. A metric called distance is used to determine if the sample matches the template. Distance is the difference between the quantitative measure of the sample and the template. If the distance falls within a threshold value, a match is declared. If not, there is no match. Answer b, acceptability, is determined by privacy issues, invasiveness, and psychological and physical comfort when using the biometric system. Enrollment time, answer d, is the time it takes to initially “register” with a system by providing samples of the biometric characteristic to be evaluated.
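The distance test described above can be sketched as follows. The use of Euclidean distance over numeric feature vectors is an assumption made for illustration; real biometric systems use measures specific to the characteristic being evaluated, and the names `distance` and `matches` are hypothetical.

```python
import math


def distance(sample, template):
    """Euclidean distance between two equal-length feature vectors."""
    return math.dist(sample, template)


def matches(sample, template, threshold):
    """Declare a match when the distance falls within the threshold."""
    return distance(sample, template) <= threshold
```

Raising the threshold accepts more samples as matches, and lowering it rejects more, which is the trade-off that the system's accuracy settings control.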

- Access control that is a function of factors such as location, time of day, and previous access history is called:

- Positive

- Content-dependent

- Context-dependent

- Information flow

Answer: c

In answer c, access is determined by the context of the decision as opposed to the information contained in the item being accessed. The latter is referred to as content-dependent access control (answer b). In content-dependent access control, for example, the manager of a department may be authorized to access employment records of a department employee, but may not be permitted to view the health records of the employee. In answer a, the term “positive” in access control refers to positive access rights, such as read or write. Denial rights, such as denial to write to a file, can also be conferred upon a subject. Information flow, cited in answer d, describes a class of access control models. An information flow model is described by the set consisting of object, flow policy, states, and rules describing the transitions among states.

- A persistent collection of data items that form relations among each other is called a:

- Database management system (DBMS)

- Data description language (DDL)

- Schema

- Database

Answer: d

For a database to be viable, the data items must be stored on nonvolatile media and be protected from unauthorized modification. For answer a, a DBMS provides access to the items in the database and maintains the information in the database. The Data description language (DDL) in answer b provides the means to define the database and answer c, schema, is the description of the database.

- A relational database can provide security through view relations. Views enforce what information security principle?

- Aggregation

- Least privilege

- Separation of duties

- Inference

Answer: b

The principle of least privilege states that a subject is permitted to have access to the minimum amount of information required to perform an authorized task. When related to government security clearances, it is referred to as “need-to-know.” Answer a, aggregation, is defined as assembling or compiling units of information at one sensitivity level and having the resultant totality of data being of a higher sensitivity level than the individual components. Separation of duties, answer c, requires that two or more subjects are necessary to authorize an activity or task. Answer d, inference, refers to the ability of a subject to deduce information that is not authorized to be accessed by that subject from information that is authorized to that subject.

- A software interface to the operating system that implements access control by limiting the system commands that are available to a user is called a(n):

- Restricted shell

- Interrupt

- Physically constrained user interface

- View

Answer: a

Answer b refers to a software or hardware interrupt to a processor that causes the program to jump to another program to handle the interrupt request. Before leaving the program that was being executed at the time of the interrupt, the CPU must save the state of the computer so that the original program can continue after the interrupt has been serviced. A physically constrained user interface, answer c, is one in which a user's operations are limited by the physical characteristics of the interface device. An example would be a keypad with the choices limited to the operations permitted by each key. Answer d refers to database views, which restrict access to information contained in a database through content-dependent access control.

- Controlling access to information systems and associated networks is necessary for the preservation of their confidentiality, integrity, and availability. Which of the following is NOT a goal of integrity?

- Prevention of the modification of information by unauthorized users

- Prevention of the unauthorized or unintentional modification of information by authorized users

- Prevention of authorized modifications by unauthorized users

- Preservation of the internal and external consistency of the information

Answer: c

Answers a, b, and d are the three principles of integrity. Answer c is a distracter and does not make sense. In answer d, internal consistency ensures that internal data correlate. For example, the total number of a particular data item in the database should be the sum of all the individual, non-identical occurrences of that data item in the database. External consistency requires that the database content be consistent with the real world items that it represents.

- In a Kerberos exchange involving a message with an authenticator, the authenticator contains the client ID and which of the following?

- Ticket Granting Ticket (TGT)

- Timestamp

- Client/TGS session key

- Client network address

Answer: b

A timestamp, t, is used to check the validity of the accompanying request since a Kerberos ticket is valid for some time window, v, after it is issued. The timestamp indicates when the ticket was issued. Answer a, the TGT, is comprised of the client ID, the client network address, the starting and ending time the ticket is valid (v), and the client/TGS session key. This ticket is used by the client to request the service of a resource on the network from the TGS. In answer c, the client/TGS session key, Kc,tgs, is the symmetric key used for encrypted communication between the client and TGS for this particular session. For answer d, the client network address is included in the TGT and not in the authenticator.

- Which one of the following security areas is directly addressed by Kerberos?

- Confidentiality

- Frequency analysis

- Availability

- Physical attacks

Answer: a

Kerberos directly addresses the confidentiality and also the integrity of information. For answer b, attacks such as frequency analysis are not considered in the basic Kerberos implementation. In addition, the Kerberos protocol does not directly address availability issues (answer c). For answer d, since the Kerberos TGS and the authentication servers hold all the secret keys, these servers are vulnerable to both physical attacks and attacks from malicious code. In the Kerberos exchange, the client workstation temporarily holds the client's secret key, and this key is vulnerable to compromise at the workstation.

- The Secure European System for Applications in a Multivendor Environment (SESAME) implements a Kerberos-like distribution of secret keys. Which of the following is NOT a characteristic of SESAME?

- Uses a trusted authentication server at each host

- Uses secret key cryptography for the distribution of secret keys

- Incorporates two certificates or tickets, one for authentication and one defining access privileges

- Uses public key cryptography for the distribution of secret keys

Answer: b

SESAME uses public key cryptography for the distribution of secret keys. In addition, SESAME employs the MD5 and crc32 one-way hash functions. A weakness in SESAME is that, similar to Kerberos, it is subject to password guessing.

- Windows 2000 uses which of the following as the primary mechanism for authenticating users requesting access to a network?

- Hash functions

- Kerberos

- SESAME

- Public key certificates

Answer: b

While Kerberos is the primary mechanism, system administrators may also use alternative authentication services running under the Security Support Provider Interface (SSPI). Answer a, hash functions, are used for digital signature implementations. Answer c, SESAME, is incorrect. It is the Secure European System for Applications in a Multivendor Environment. SESAME performs similar functions to Kerberos, but uses public key cryptography to distribute the secret keys. Answer d is incorrect, since public key certificates are not used in the Windows 2000 primary authentication approach.

- A protection mechanism to limit inferencing of information in statistical database queries is:

- Specifying a maximum query set size

- Specifying a minimum query set size

- Specifying a minimum query set size, but prohibiting the querying of all but one of the records in the database

- Specifying a maximum query set size, but prohibiting the querying of all but one of the records in the database

Answer: c

When querying a database for statistical information, individually identifiable information should be protected. Thus, requiring a minimum size for the query set (greater than one) offers protection against gathering information on one individual. However, an attack may consist of gathering statistics on a query set size M, equal to or greater than the minimum query set size, and then requesting the same statistics on a query set size of M + 1. The second query set would be designed to include the individual whose information is being sought surreptitiously. Thus with answer c, this type of attack could not take place. Answer b is, therefore, incorrect since it leaves open the loophole of the M+1 set size query. Answers a and d are incorrect since the critical metric is the minimum query set size and not the maximum size. Obviously, the maximum query set size cannot be set to a value less than the minimum set size.
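The control named in answer c can be sketched as a check applied before any statistic is released. This is a minimal illustration under stated assumptions: the record values, the function names, and the choice of a sum as the statistic are all hypothetical.

```python
def query_allowed(query_set_size, total_records, min_size):
    """Apply the two restrictions from answer c to a statistical query."""
    if query_set_size < min_size:
        return False  # too few records: could isolate an individual
    if query_set_size == total_records - 1:
        return False  # all but one record: exposes the excluded individual
    return True


def stat_sum(records, selected_ids, min_size):
    """Return the sum over the selected records, or None if refused."""
    if not query_allowed(len(selected_ids), len(records), min_size):
        return None
    return sum(records[i] for i in selected_ids)
```

Without the second restriction, a sum over all records minus a sum over all but one record would reveal the excluded person's value. The sketch covers only that specific case; other differencing attacks may call for further controls.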

- In SQL, a relation that is actually existent in the database is called a(n):

- Base relation

- View

- Attribute

- Domain

Answer: a

A base relation exists in the database while a view, answer b, is a virtual relation that is not stored in the database. A view is derived by the SQL definition and is developed from base relations or, possibly, other views. Answer c, an attribute, is a column in a relation table and answer d, a domain, is the set of permissible values of an attribute.
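The distinction between a base relation and a view can be seen in a small sketch using Python's built-in `sqlite3` module. The table, view, and column names are hypothetical, and SQLite stands in here for any SQL database.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Base relation: rows are physically stored in the database.
conn.execute("CREATE TABLE employee (name TEXT, dept TEXT, salary INTEGER)")
conn.executemany(
    "INSERT INTO employee VALUES (?, ?, ?)",
    [("Ann", "Sales", 50000), ("Bob", "IT", 60000)],
)

# View: a virtual relation derived from the base relation by its SQL
# definition. It exposes names and departments but not salaries.
conn.execute("CREATE VIEW employee_public AS SELECT name, dept FROM employee")

# The catalog records which object is a table and which is a view.
kinds = dict(conn.execute(
    "SELECT name, type FROM sqlite_master WHERE name LIKE 'employee%'"
))
rows = conn.execute("SELECT * FROM employee_public ORDER BY name").fetchall()
```

A user who is granted access only to `employee_public` never sees the salary column, which is how a view supports least privilege.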

- A type of access control that supports the management of access rights for groups of subjects is:

- Role-based

- Discretionary

- Mandatory

- Rule-based

Answer: a

Role-based access control assigns identical privileges to groups of users. This approach simplifies the management of access rights, particularly when members of the group change. Thus, access rights are assigned to a role, not to an individual. Individuals are entered as members of specific groups and are assigned the access privileges of that group. In answer b, the access rights to an object are assigned by the owner at the owner's discretion. For large numbers of people whose duties and participation may change frequently, this type of access control can become unwieldy. Mandatory access control, answer c, uses security labels or classifications assigned to data items and clearances assigned to users. A user has access rights to data items with a classification equal to or less than the user's clearance. Another restriction is that the user must have a “need to know” for the information; this requirement is identical to the principle of least privilege. Answer d, rule-based access control, assigns access rights based on stated rules. An example of a rule is “Access to trade-secret data is restricted to corporate officers, the data owner and the legal department.”
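The idea that rights attach to a role rather than to an individual can be sketched as two lookup tables. The role names, permission names, and users below are hypothetical.

```python
# Permissions are granted to roles, never directly to users.
role_permissions = {
    "clerk": {"read_invoice"},
    "manager": {"read_invoice", "approve_invoice"},
}

# Users acquire rights only through role membership.
user_roles = {"alice": {"manager"}, "bob": {"clerk"}}


def is_permitted(user, permission):
    """True if any of the user's roles carries the permission."""
    return any(
        permission in role_permissions.get(role, set())
        for role in user_roles.get(user, set())
    )
```

When a person changes duties, only the membership table changes; adding `"manager"` to Bob's roles grants him approval rights without touching any permission entry.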

- The Simple Security Property and the Star Property are key principles in which type of access control?

- Role-based

- Rule-based

- Discretionary

- Mandatory

Answer: d

Two properties define fundamental principles of mandatory access control. These properties are:

Simple Security Property. A user at one clearance level cannot read data from a higher classification level.

Star Property. A user at one clearance level cannot write data to a lower classification level.

Answers a, b, and c are discussed in Question 19.
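The two properties reduce to a pair of comparisons between a user's clearance and a data item's classification. The sketch below assumes a simple four-level ordering; the level names and function names are illustrative.

```python
# Hypothetical ordering of sensitivity levels, lowest to highest.
LEVELS = {"unclassified": 0, "confidential": 1, "secret": 2, "top secret": 3}


def can_read(clearance, classification):
    """Simple Security Property: no reading from a higher level."""
    return LEVELS[clearance] >= LEVELS[classification]


def can_write(clearance, classification):
    """Star Property: no writing to a lower level."""
    return LEVELS[clearance] <= LEVELS[classification]
```

A user cleared to secret may read confidential data but may not write to it, so information cannot flow downward through that user.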

- Which of the following items is NOT used to determine the types of access controls to be applied in an organization?

- Least privilege

- Separation of duties

- Relational categories

- Organizational policies

Answer: c

The item, relational categories, is a distracter. Answers a, b, and d are important determinants of access control implementations in an organization.

- Kerberos provides an integrity check service for messages between two entities through the use of:

- A checksum

- Credentials

- Tickets

- A trusted, third-party authentication server

Answer: a

A checksum that is derived from a Kerberos message is used to verify the integrity of the message. This checksum may be a message digest resulting from the application of a hash function to the message. At the receiving end of the transmission, the receiving party can calculate the message digest of the received message using the identical hash algorithm as the sender. Then the message digest calculated by the receiver can be compared with the message digest appended to the message by the sender. If the two message digests match, the message has not been modified en route, and its integrity has been preserved. For answers b and c, credentials and tickets are authenticators used in the process of granting user access to services on the network. Answer d is the AS or authentication server that conducts the ticket-granting process.
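The compare-the-digests step described above can be sketched with Python's `hashlib`. SHA-256 is an assumption made for the example; the checksum types that Kerberos actually uses are defined by the protocol and are not reproduced here. The function names are hypothetical.

```python
import hashlib


def digest(message: bytes) -> str:
    """Message digest from a hash function (SHA-256 assumed here)."""
    return hashlib.sha256(message).hexdigest()


def send(message: bytes):
    """Sender appends the digest to the message."""
    return message, digest(message)


def verify(message: bytes, received_digest: str) -> bool:
    """Receiver recomputes the digest with the same algorithm and compares."""
    return digest(message) == received_digest
```

If even one byte of the message changes in transit, the recomputed digest no longer matches the one the sender attached.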

- The Open Group has defined functional objectives in support of a user single sign-on (SSO) interface. Which of the following is NOT one of those objectives and would possibly represent a vulnerability?

- The interface shall be independent of the type of authentication information handled.

- Provision for user-initiated change of nonuser-configured authentication information.

- It shall not predefine the timing of secondary sign-on operations.

- Support shall be provided for a subject to establish a default user profile.

Answer: b

User configuration of nonuser-configured authentication mechanisms is not supported by the Open Group SSO interface objectives. Authentication mechanisms include items such as smart cards and magnetic badges. Strict controls must be placed to prevent a user from changing configurations that are set by another authority. Objective a supports the incorporation of a variety of authentication schemes and technologies. Answer c states that the interface functional objectives do not require that all sign-on operations be performed at the same time as the primary sign on. This prevents the creation of user sessions with all the available services even though these services are not needed by the user. For answer d, the creation of a default user profile will make the sign-on more efficient and less time-consuming.

In summary, the scope of the Open Group Single Sign-On Standards is to define services in support of:

- “The development of applications to provide a common, single end-user sign-on interface for an enterprise.

- The development of applications for the coordinated management of multiple user account management information bases maintained by an enterprise.”

- There are some correlations between relational database terminology and object-oriented database terminology. Which of the following relational model terms, respectively, correspond to the object model terms of class, attribute and instance object?

- Domain, relation, and column

- Relation, domain, and column

- Relation, tuple, and column

- Relation, column, and tuple

Answer: d

Table A.5 shows the correspondence between the two models.

In comparing the two models, a class is similar to a relation; however, a relation does not have the inheritance property of a class. An attribute in the object model is similar to the column of a relational table. The column has limitations on the data types it can hold while an attribute in the object model can use all data types that are supported by the Java and C++ languages. An instance object in the object model corresponds to a tuple in the relational model. Again the data structures of the tuple are limited while those of the instance object can use data structures of Java and C++.

Table A.5 Object and Relational Model Correspondence

| OBJECT MODEL | RELATIONAL MODEL |
| --- | --- |
| CLASS | RELATION |
| ATTRIBUTE | COLUMN |
| INSTANCE OBJECT | TUPLE |

- A reference monitor is a system component that enforces access controls on an object. Specifically, the reference monitor concept is an abstract machine that mediates all access of subjects to objects. The hardware, firmware, and software elements of a trusted computing base that implement the reference monitor concept are called:

- The authorization database

- Identification and authentication (I & A) mechanisms

- The auditing subsystem

- The security kernel

Answer: d

The security kernel implements the reference monitor concept. The reference monitor must have the following characteristics:

- It must mediate all accesses.

- It must be protected from modification.

- It must be verifiable as correct.

Answer a, the authorization database, is used by the reference monitor to mediate accesses by subjects to objects. When a request for access is received, the reference monitor refers to entries in the authorization database to verify that the operation requested by a subject for application to an object is permitted. The authorization database has entries or authorizations of the form subject, object, access mode. In answer b, the I & A operation is separate from the reference monitor. The user enters his/her identification to the I & A function. Then the user must be authenticated. Authentication is verification that the user's claimed identity is valid. Authentication is based on the following three factor types:

Type 1. Something you know, such as a PIN or password

Type 2. Something you have, such as an ATM card or smart card

Type 3. Something you are (physically), such as a fingerprint or retina scan

Answer c, the auditing subsystem, is a key complement to the reference monitor. The auditing subsystem is used by the reference monitor to keep track of the reference monitor's activities. Examples of such activities include the date and time of an access request, identification of the subject and objects involved, the access privileges requested and the result of the request.
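The interplay of the reference monitor, the authorization database, and the auditing subsystem can be sketched as follows. This is a toy illustration under stated assumptions, not a security kernel: the subjects, objects, and the `mediate` function are hypothetical, and a real implementation would also have to be tamper-proof and verifiable.

```python
from datetime import datetime, timezone

# Authorization database: entries of the form (subject, object, access mode).
AUTHORIZATIONS = {
    ("alice", "payroll.db", "read"),
    ("alice", "payroll.db", "write"),
    ("bob", "payroll.db", "read"),
}

# Audit trail kept by the auditing subsystem.
audit_log = []


def mediate(subject, obj, mode):
    """Decide one access request and record the decision in the audit log."""
    granted = (subject, obj, mode) in AUTHORIZATIONS
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "subject": subject,
        "object": obj,
        "mode": mode,
        "granted": granted,
    })
    return granted
```

Every request passes through `mediate`, so each access is both checked against the authorization database and logged with its time, subject, object, requested privilege, and result.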

- Authentication in which a random value is presented to a user, who then returns a calculated number based on that random value is called:

- Man-in-the-middle

- Challenge-response

- One-time password

- Personal identification number (PIN) protocol

Answer: b

In challenge-response authentication, the user enters a random value (the challenge) sent by the authentication server into a token device. The token device shares a cryptographic secret key with the authentication server and calculates a response based on the challenge value and the secret key. This response is entered into the authentication server, which authenticates the identity of the user by performing the same calculation and comparing the results. Answer a, man-in-the-middle, is a type of attack in which a cracker is interposed between the user and the authentication server and attempts to gain access to packets for replay in order to impersonate a valid user. A one-time password, answer c, is a password that is used only once to gain access to a network or computer system. A typical implementation uses a token that generates a number based on the time of day. The user reads this number and enters it into the authenticating device. The authenticating device calculates the same number based on the time of day, using the same algorithm as the token. If the token's number matches that of the authentication server, the identity of the user is validated. The token and the authentication server must be time-synchronized for this approach to work, although a small amount of time skew between the authenticating device and the token is allowed. Answer d refers to a PIN, which is something you know that is used with something you have, such as an ATM card.
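Both mechanisms described above can be sketched in a few lines. This is a minimal illustration, assuming HMAC-SHA-256 as the shared calculation and a 30-second time step; neither choice comes from the text, and all function names are hypothetical.

```python
import hashlib
import hmac
import struct


def response_for(secret_key: bytes, challenge: bytes) -> str:
    """Token side: compute the response from the challenge and shared key."""
    return hmac.new(secret_key, challenge, hashlib.sha256).hexdigest()


def verify_response(secret_key: bytes, challenge: bytes, response: str) -> bool:
    """Server side: repeat the calculation and compare the results."""
    expected = response_for(secret_key, challenge)
    return hmac.compare_digest(expected, response)


def time_code(secret_key: bytes, now: float, step: int = 30) -> str:
    """Time-based one-time password: a 6-digit code for the current time step."""
    counter = int(now // step)
    mac = hmac.new(secret_key, struct.pack(">Q", counter), hashlib.sha256).digest()
    return f"{int.from_bytes(mac[:4], 'big') % 1_000_000:06d}"


def verify_time_code(secret_key: bytes, code: str, now: float,
                     step: int = 30, skew_steps: int = 1) -> bool:
    """Accept codes from adjacent time steps to allow for small clock skew."""
    return any(
        hmac.compare_digest(time_code(secret_key, now + k * step, step), code)
        for k in range(-skew_steps, skew_steps + 1)
    )
```

In the challenge-response case the server would generate a fresh random challenge for each attempt (for example with `secrets.token_bytes(16)`), so a captured response cannot simply be replayed.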

- Which of the following is NOT a criterion for access control?

- Identity

- Roles

- Keystroke monitoring

- Transactions

Answer: c

Keystroke monitoring is associated with the auditing function and not access control. For answer a, the identity of the user is a criterion for access control. The identity must be authenticated as part of the I & A process. Answer b refers to role-based access control where access to information is determined by the user's job function or role in the organization. Transactions, answer d, refer to access control through entering an account number or a transaction number, as may be required for bill payments by telephone, for example.

- Which of the following is typically NOT a consideration in the design of passwords?

- Lifetime

- Composition

- Authentication period

- Electronic monitoring

Answer: d

Electronic monitoring is eavesdropping on passwords as they are transmitted to the authenticating device. This issue is a technical one and is not a consideration in designing passwords. The other answers relate to very important password characteristics that must be taken into account when developing passwords. Password lifetime, in answer a, refers to the maximum period of time that a password is valid. Ideally, a password should be used only once. This approach can be implemented by token password generators and challenge-response schemes. However, as a practical matter, passwords on most PCs and workstations are used repeatedly. The time period after which passwords should be changed is a function of the level of protection required for the information being accessed. In typical organizations, passwords may be changed every three to six months. Obviously, passwords should be changed when employees leave an organization or in a situation where a password may have been compromised. Answer b, the composition of a password, defines the characters that can be used in the password. The characters may be letters, numbers, or special symbols. The authentication period in answer c defines the maximum acceptable period between the initial authentication of a user and any subsequent reauthentication process. For example, users may be asked to authenticate themselves again after a specified period of time of being logged on to a server containing critical information.
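The three design considerations (lifetime, composition, and authentication period) could be expressed as simple policy checks. The sketch below is illustrative only: the 90-day lifetime, the 30-minute authentication period, and the allowed character set are assumed values, not recommendations from the text.

```python
import string
from datetime import datetime, timedelta

# Hypothetical policy values chosen for illustration.
ALLOWED_CHARS = set(string.ascii_letters + string.digits + string.punctuation)
MAX_LIFETIME = timedelta(days=90)      # password lifetime
AUTH_PERIOD = timedelta(minutes=30)    # authentication period


def composition_ok(password: str) -> bool:
    """Composition: every character must come from the allowed set."""
    return bool(password) and all(ch in ALLOWED_CHARS for ch in password)


def password_expired(last_changed: datetime, now: datetime) -> bool:
    """Lifetime: the password is no longer valid after MAX_LIFETIME."""
    return now - last_changed > MAX_LIFETIME


def reauth_required(last_authenticated: datetime, now: datetime) -> bool:
    """Authentication period: ask the user to authenticate again."""
    return now - last_authenticated > AUTH_PERIOD
```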

- A distributed system using passwords as the authentication means can use a number of techniques to make the password system stronger. Which of the following is NOT one of these techniques?

- Password generators

- Regular password reuse

- Password file protection

- Limiting the number or frequency of log-on attempts

Answer: b

Passwords should never be reused after the time limit on their use has expired. Answer a, password generators, supply passwords upon request. These passwords are usually composed of numbers, characters, and sometimes symbols. Passwords provided by password generators are usually not easy to remember. For answer c, password file protection may consist of transforming the password with a one-way hash function and storing the result in a password file. A typical brute force attack against this type of protection is to hash trial password guesses using the same hash function and to compare the results with the hashed passwords stored in the password file. Answer d provides protection in that, after a specified number of unsuccessful log-on attempts, a user may be locked out of trying to log on for a period of time. An alternative is to progressively increase the time between permitted log-on tries after each unsuccessful log-on attempt.
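The password file protection, the guessing attack against it, and the progressive log-on delay can be sketched as below. This is a simplified illustration: plain unsalted SHA-256 is an assumption made to keep the example short, and the user names, passwords, and delay parameters are hypothetical.

```python
import hashlib


def one_way(password: str) -> str:
    """One-way hash of a password (unsalted SHA-256, for illustration only)."""
    return hashlib.sha256(password.encode("utf-8")).hexdigest()


# The password file stores only the hashed form of each password.
password_file = {
    "alice": one_way("correct horse"),
    "bob": one_way("letmein"),
}


def guess_attack(stored, candidates):
    """Hash each trial guess and compare it with the stored hashes."""
    lookup = {one_way(guess): guess for guess in candidates}
    return {user: lookup[h] for user, h in stored.items() if h in lookup}


def lockout_delay(failed_attempts: int, base_seconds: int = 1,
                  cap_seconds: int = 300) -> int:
    """Progressively longer wait after each unsuccessful log-on attempt."""
    if failed_attempts <= 0:
        return 0
    return min(base_seconds * 2 ** (failed_attempts - 1), cap_seconds)
```

The attack succeeds only against passwords that appear in the guess list, which is why limiting the number or frequency of log-on attempts and protecting the password file complement each other.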

- Enterprise Access Management (EAM) provides access control management services to Web-based enterprise systems. Which of the following functions is NOT normally provided by extant EAM approaches?

- Single sign-on

- Accommodation of a variety of authentication mechanisms

- Role-based access control

- Interoperability among EAM implementations

Answer: d

In general, security credentials produced by one EAM solution are not recognized by another implementation. Thus, reauthentication is required when linking from one Web site to another related Web site if the sites have different EAM implementations. Single sign-on (SSO), answer a, is approached in a number of ways. For example, SSO can be implemented on Web applications in the same domain residing on different servers by using nonpersistent, encrypted cookies on the client interface. This is accomplished by providing a cookie to each application that the user wishes to access. Another solution is to build a secure credential for each user on a reverse proxy that is situated in front of the Web server. The credential is then presented each time the user attempts to access a protected Web application. For answer b, most EAM solutions accommodate a variety of authentication technologies, including tokens, ID/passwords, and digital certificates. Similarly, for answer c, EAM solutions support role-based access controls, although they may be implemented in different ways. Enterprise-level roles should be defined in terms that are universally accepted across most e-commerce applications.

- The main approach to obtaining the true biometric information from a collected sample of an individual's physiological or behavioral characteristics is:

- Feature extraction

- Enrollment

- False rejection

- Digraphs

Answer: a

Feature extraction algorithms are a subset of signal/image processing and are used to extract the key biometric information from a sample that has been taken from an individual. Usually, the sample is taken in an environment that may have “noise” and other conditions that may affect the raw sample image. Neural networks are an example of a feature extraction approach. Answer b, enrollment, refers to the process of collecting samples that are averaged and then stored to use as a reference base against which future samples are compared. False rejection, answer c, is the rejection of an authorized user because of a mismatch between the sample and the reference template. Conversely, false acceptance is the acceptance of an unauthorized user because of an incorrect match to the template of an authorized user. The corresponding measures in percentage are the False Rejection Rate (FRR) and False Acceptance Rate (FAR). For answer d, digraphs refer to sets of average values compiled in the biometrics area of keystroke dynamics. Keystroke dynamics involves analyzing the characteristics of a user typing on a keyboard. Keystroke duration samples as well as measures of the latency between keystrokes are taken and averaged. These averages for all pairs of keys are called digraphs. Trigraphs, sample sets for all key triples, can also be used as biometric samples.
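The digraph averages used in keystroke dynamics can be computed with a short routine. This is a minimal sketch that covers only the latency between consecutive key presses; the input format (a list of key and press-time pairs in milliseconds) is an assumption made for illustration.

```python
from collections import defaultdict
from statistics import mean


def digraph_latencies(events):
    """Average press-to-press latency for every pair of consecutive keys.

    events: list of (key, press_time_ms) tuples in typing order.
    """
    samples = defaultdict(list)
    for (key1, t1), (key2, t2) in zip(events, events[1:]):
        samples[(key1, key2)].append(t2 - t1)
    return {pair: mean(values) for pair, values in samples.items()}


# Hypothetical sample: the user types "theth".
typed = [("t", 0), ("h", 100), ("e", 180), ("t", 400), ("h", 520)]
profile = digraph_latencies(typed)
```

During enrollment, averages like these would be stored as the reference template; later samples are compared against them, and a mismatch for a legitimate user counts toward the FRR.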

- In a wireless General Packet Radio Services (GPRS) Virtual Private Network (VPN) application, which of the following security protocols is commonly used?

- SSL

- IPSEC

- TLS

- WTP

Answer: b

An example is the use of a GPRS-enabled laptop that connects to a corporate intranet via a VPN. The laptop is given an IP address and a RADIUS server authenticates the user. IPSEC is used to create the VPN. As background, GPRS is a second-generation (2G) packet data technology that is overlaid on existing Global System for Mobile communications (GSM). GSM is the wireless analog of the ISDN landline system. The key features of GPRS are that it is always on line (no dial-up needed), existing GSM networks can be upgraded with GPRS, and it can serve as the packet data core of third generation (3G) systems. Answers a and c, SSL and TLS, are similar security protocols that are used on the Internet side of the Wireless Application Protocol (WAP) Gateway. For answer d, WTP is the Wireless Transaction Protocol that is part of the WAP suite of protocols. WTP is a lightweight, message-oriented, transaction protocol that provides more reliable connections than UDP, but does not have the robustness of TCP.

- How is authentication implemented in GSM?

- Using public key cryptography

- It is not implemented in GSM

- Using secret key cryptography

- Out-of-band verification

Answer: c

Authentication is effected in GSM through the use of a common secret key, Ks, that is stored in the network operator's Authentication Center (AuC) and in the subscriber's SIM card. The SIM card may be in the subscriber's laptop, and the subscriber is not privy to Ks. To begin the authentication exchange, the home location of the subscriber's mobile station (MS) generates a 128-bit random number (RAND) and sends it to the MS. Using an algorithm that is known to both the AuC and the MS, the RAND is encrypted by both parties using the secret key, Ks. The ciphertext generated at the MS is then sent to the AuC and compared with the ciphertext generated by the AuC. If the two results match, the MS is authenticated and the access request is granted. If they do not match, the access request is denied. Answers a, b, and d are, therefore, incorrect.
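The exchange described above can be sketched as follows. HMAC-SHA-256 is used here purely as a stand-in for the shared algorithm, which the text does not specify; it is not the algorithm GSM uses. The class and function names are hypothetical.

```python
import hashlib
import hmac
import secrets


def keyed_result(ks: bytes, rand: bytes) -> bytes:
    """Shared calculation over RAND using the secret key Ks.

    HMAC-SHA-256 is an assumed stand-in for the real GSM algorithm.
    """
    return hmac.new(ks, rand, hashlib.sha256).digest()


class AuthenticationCenter:
    """Network side: holds a copy of each subscriber's secret key."""

    def __init__(self):
        self._keys = {}

    def provision(self, subscriber_id: str) -> bytes:
        """Create Ks, keep one copy, and return the copy stored on the SIM."""
        ks = secrets.token_bytes(16)
        self._keys[subscriber_id] = ks
        return ks

    def challenge(self) -> bytes:
        """Generate the 128-bit random number RAND."""
        return secrets.token_bytes(16)

    def verify(self, subscriber_id: str, rand: bytes, response: bytes) -> bool:
        """Repeat the calculation and compare it with the MS's result."""
        expected = keyed_result(self._keys[subscriber_id], rand)
        return hmac.compare_digest(expected, response)
```

A usage sketch: the AuC provisions a SIM, sends `rand = auc.challenge()` to the MS, the SIM computes `keyed_result(ks, rand)`, and the AuC grants access only if `auc.verify(...)` returns `True`. The key Ks never crosses the air interface.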

Chapter 3—Telecommunications and Network Security

- Which of the choices below is NOT an OSI reference model Session Layer protocol, standard, or interface?

- SQL

- RPC

- MIDI

- ASP

- DNA SCP

Answer: c

The Musical Instrument Digital Interface (MIDI) standard is a Presentation Layer standard for digitized music. The other answers are all Session Layer protocols or standards. Answer a, SQL, refers to the Structured Query Language database standard originally developed by IBM. Answer b, RPC, refers to the Remote Procedure Call redirection mechanism for remote clients. Answer d, ASP, is the AppleTalk Session Protocol; and answer e, DNA SCP, refers to DECnet's Digital Network Architecture Session Control Protocol. Source: Introduction to Cisco Router Configuration edited by Laura Chappell (Cisco Press, 1999).