MACHINE LEARNING (often abbreviated ML, though in this book I will simply call it ‘learning’) is a hot topic in technology and business, and that excitement has filtered into games. In principle, learning AI has the potential to adapt to each player, learning their tricks and techniques and providing a consistent challenge. It has the potential to produce more believable characters: characters that can learn about their environment and use it to the best effect. It also has the potential to reduce the effort needed to create game-specific AI: characters should be able to learn about their surroundings and the tactical options that they provide.

In practice, it hasn’t yet fulfilled its promise, and not for want of trying. Applying learning to your game requires careful planning and an understanding of the pitfalls. There have been some impressive successes in building learning AI that learns to play games, but less in providing compelling characters or enemies. The potential is sometimes more attractive than the reality, but if you understand the quirks of each technique and are realistic about how you apply them, there is no reason why you can’t take advantage of learning in your game.

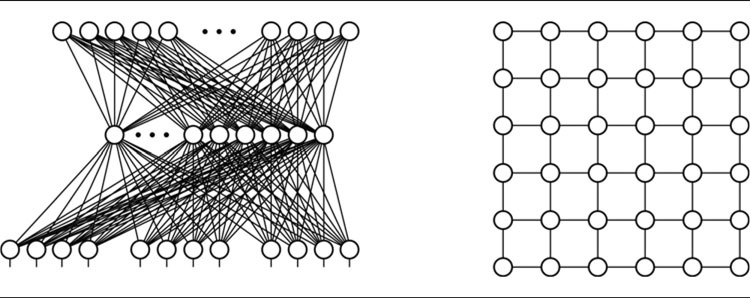

There is a whole range of different learning techniques, from very simple number tweaking through to complex neural networks. While most of the attention in the last few years has been focused on ‘deep learning’ (a form of neural networks) there are many other practical approaches. Each has its own idiosyncrasies that need to be understood before they can be used in real games.

We can classify learning techniques into several groups depending on when the learning occurs, what is being learned, and what effects the learning has on a character’s behavior.

7.1.1 ONLINE OR OFFLINE LEARNING

Learning can be performed during the game, while the player is playing. This is online learning, and it allows the characters to adapt dynamically to the player’s style and provides more consistent challenges. As a player plays more, their characteristic traits can be better anticipated by the computer, and the behavior of characters can be tuned to playing styles. This might be used to make enemies pose an ongoing challenge, or it could be used to offer the player more story lines of the kind they enjoy playing.

Unfortunately, online learning also produces problems with predictability and testing. If the game is constantly changing, it can be difficult to replicate bugs and problems. If an enemy character decides that the best way to tackle the player is to run into a wall, then it can be a nightmare to replicate the behavior (at worst you’d have to play through the whole same sequence of games, doing exactly the same thing each time as the player). I’ll return to this issue later in this section.

The majority of learning in game AI is done offline, either between levels of the game or more often at the development studio before the game leaves the building. This is performed by processing data about real games and trying to calculate strategies or parameters from them.

This allows more unpredictable learning algorithms to be tried out and their results to be tested exhaustively. The learning algorithms in games are usually applied offline; it is rare to find games that use any kind of online learning. Learning algorithms are increasingly being used offline to learn tactical features of multi-player maps, to produce accurate pathfinding and movement data, and to bootstrap interaction with physics engines. These are very constrained applications. Studios are experimenting with using deep learning in a broader way, having characters learn higher behavior from scratch. It remains to be seen whether this is successful enough to make major inroads into the way AI is created.

Applying learning in the pause when loading the next level of the game is a kind of offline learning: characters aren’t learning as they are acting. But it has many of the same downsides as online learning. We need to keep it short (load times for levels are usually part of a publisher or console manufacturer’s acceptance criteria for a game and certainly affects player satisfaction). We need to take care that bugs and problems can be replicated without replaying tens of games. We need to make sure that the data from the game are easily available in a suitable format (we can’t use long post-processing steps to dig data out of a huge log file, for example).

Most of the techniques in this chapter can be applied either online or offline. They aren’t limited to one or the other. If they are to be applied online, then the data they will learn from are presented as they are generated by the game. If it is used offline, then the data are stored and pulled in as a whole later.

The simplest kinds of learning are those that change a small area of a character’s behavior. They don’t change the whole quality of the behavior, but simply tweak it a little. These intra-behavior learning techniques are easy to control and can be easy to test.

Examples include learning to target correctly when projectiles are modeled by accurate physics, learning the best patrol routes around a level, learning where cover points are in a room, and learning how to chase an evading character successfully. Most of the learning examples in this chapter will illustrate intra-behavior learning.

An intra-behavior learning algorithm doesn’t help a character work out that it needs to do something very different (if a character is trying to reach a high ledge by learning to run and jump, it won’t tell the character to simply use the stairs instead, for example).

The frontier for learning AI in games is learning of behavior. What I mean by behavior is a qualitatively different mode of action—for example, a character that learns the best way to kill an enemy is to lay an ambush or a character that learns to tie a rope across a backstreet to stop an escaping motorbiker. Characters that can learn from scratch how to act in the game provide a challenging opposition for even the best human players.

Unfortunately, this kind of AI is on the limit of what might be possible.

Over time, an increasing amount of character behavior may be learned, either online or offline. Some of this may be to learn how to choose between a range of different behaviors (although the atomic behaviors will still need to be implemented by the developer). It is doubtful that it will be economical to learn everything. The basic movement systems, decision making tools, suites of available behaviors, and high-level decision making will almost certainly be easier and faster to implement directly. They can then be augmented with intra-behavior learning to tweak parameters.

The frontier for learning AI is decision making. Developers are increasingly experimenting with replacing the techniques discussed in Chapter 5 with learning systems, in particular deep learning. This is the only kind of inter-behavior learning I will look at in detail in this chapter: making decisions between fixed sets of (possibly parameterized) behaviors.

In reality, learning is not as widely used as you might think. Some of this is due to the relative complexity of learning techniques (in comparison with pathfinding and movement algorithms , at least). But games developers master far more complex techniques all the time, for graphics, network and physics simulation. The biggest problems with learning are not difficulty, but reproducibility and quality control.

Imagine a game in which the enemy characters learn their environment and the player’s actions over the course of several hours of gameplay. While playing one level, the QA team notices that a group of enemies is stuck in one cavern, not moving around the whole map. It is possible that this condition occurs only as a result of the particular set of things they have learned. In this case, finding the bug and later testing if it has been fixed involves replaying the same learning experiences. This is often impossible.

It is this kind of unpredictability that is the most often cited reason for severely curbing the learning ability of game characters. As companies developing industrial learning AI have often found, it is impossible to avoid the AI learning the “wrong” thing.

When you read academic papers about learning and games, they often use dramatic scenarios to illustrate the potential of a learning character on gameplay. You need to ask yourself, if the character can learn such dramatic changes of behavior then can it also learn dramatically poor behavior: behavior that might fulfill its own goals but will produce terrible gameplay? You can’t have your cake and eat it. The more flexible your learning is, the less control you have on gameplay.

The normal solution to this problem is to constrain the kinds of things that can be learned in a game. It is sensible to limit a particular learning system to working out places to take cover, for example. This learning system can then be tested by making sure that the cover points it is identifying look right. The learning will have difficulty getting carried away; it has a single task that can be easily visualized and checked.

Under this modular approach there is nothing to stop several different learning systems from being applied (one for cover points, another to learn accurate targeting, and so on). Care must be taken to ensure that they can’t interact in nasty ways. The targeting AI may learn to shoot in such a way that it often accidentally hits the cover that the cover-learning AI is selecting, for example.

A common problem identified in much of the AI learning literature is over-fitting, or over-learning. This means that if a learning AI is exposed to a number of experiences and learns from them, it may learn the response to only those situations. We normally want the learning AI to be able to generalize from the limited number of experiences it has to be able to cope with a wide range of new situations.

Different algorithms have different susceptibilities to over-fitting. Neural networks particularly can over-fit during learning if they are wrongly parameterized or if the network is too large for the learning task at hand. We’ll return to these issues as we consider each learning algorithm in turn.

7.1.6 THE ZOO OF LEARNING ALGORITHMS

In this chapter we’ll look at learning algorithms that gradually increase in complexity and sophistication. The most basic algorithms, such as the various parameter modification techniques in the next section, are often not thought of as learning at all.

At the other extreme we will look at reinforcement learning and neural networks, both fields of active AI research that are huge in their own right. The chapter ends with a overview of deep learning. But I will not be able to do more than scratch the surface of these advanced techniques. Hopefully there will be enough information to get the algorithms running. More importantly, I hope it will be clear why they are not yet ubiquitous in game AI.

The key thing to remember in all learning algorithms is the balance of effort. Learning algorithms are attractive because you can do less implementation work. You don’t need to anticipate every eventuality or make the character AI particularly good. Instead, you create a general-purpose learning tool and allow that to find the really tricky solutions to the problem. The balance of effort should be that it is less work to get the same result by creating a learning algorithm to do some of the work.

Unfortunately, it is often not possible. Learning algorithms can require a lot of hand-holding: presenting data in the correct way, parameterizing the learning system, making sure their results are valid, and testing them to avoid them learning the wrong thing.

I advise developers to consider carefully the balance of effort involved in learning. If a technique is very tricky for a human being to solve and implement, then it is likely to be tricky for the computer, too. If a human being can’t reliably learn to keep a car cornering on the limit of its tire’s grip, then a computer is unlikely to suddenly find it easy when equipped with a vanilla learning algorithm. To get the result you likely have to do a lot of additional work. Great results in academic papers can be achieved by the researchers carefully selecting the problem they need to solve, and spending a lot of time fine tuning the solution. Both are luxuries a studio AI developer cannot afford.

The simplest learning algorithms are those that calculate the value of one or more parameters. Numerical parameters are used throughout AI development: magic numbers that are used in steering calculations, cost functions for pathfinding, weights for blending tactical concerns, probabilities in decision making, and many other areas.

These values can often have a large effect on the behavior of a character. A small change in a decision making probability, for example, can lead an AI into a very different style of play.

Parameters such as these are good candidates for learning. Most commonly, this is done offline, but can usually be controlled when performed online.

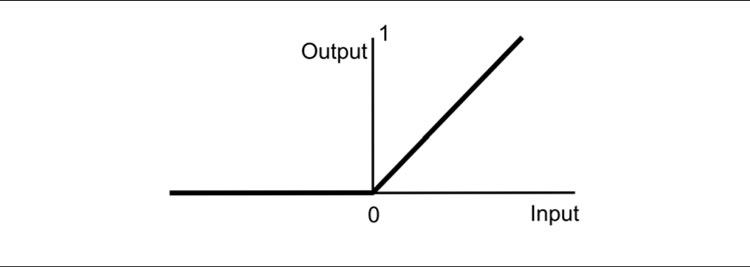

Figure 7.1: The energy landscape of a one-dimensional problem

A common way of understanding parameter learning is the “fitness landscape” or “energy landscape.” Imagine the value of the parameter as specifying a location. In the case of a single parameter this is a location somewhere along a line. For two parameters it is the location on a plane.

For each location (i.e., for each value of the parameter) there is some energy value. This energy value (often called a “fitness value” in some learning techniques) represents how good the value of the parameter is for the game. You can think of it as a score.

We can visualize the energy values by plotting them against the parameter values (see Figure 7.1).

For many problems the crinkled nature of this graph is reminiscent of a landscape, especially when the problem has two parameters to optimize (i.e., it forms a three-dimensional structure). For this reason it is usually called an energy or fitness landscape.

The aim of a parameter learning system is to find the best values of the parameter. The energy landscape model usually assumes that low energies are better, so we try to find the valleys in the landscape. Fitness landscapes are usually the opposite, so they try to find the peaks.

The difference between energy and fitness landscapes is a matter of terminology only: the same techniques apply to both. You simply swap searching for maximum (fitness) or minimum (energy). Often, you will find that different techniques favor different terminologies. In this section, for example, hill climbing is usually discussed in terms of fitness landscapes, and simulated annealing is discussed in terms of energy landscapes.

Energy and Fitness Values

It is possible for the energy and fitness values to be generated from some function or formula. If the formula is a simple mathematical formula, we may be able to differentiate it. If the formula is differentiable, then its best values can be found explicitly. In this case, there is no need for parameter optimization. We can simply find and use the best values.

In most cases, however, no such formula exists. The only way to find out the suitability of a parameter value is to try it out in the game and see how well it performs. In this case, there needs to be some code that monitors the performance of the parameter and provides a fitness or energy score. The techniques in this section all rely on having such an output value.

If we are trying to generate the correct parameters for decision making probabilities, for example, then we might have the character play a couple of games and see how it scores. The fitness value would be the score, with a high score indicating a good result.

In each technique we will look at several different sets of parameters that need to be tried. If we have to have a five-minute game for each set, then learning could take too long. There usually has to be some mechanism for determining the value for a set of parameters quickly. This might involve allowing the game to run at many times normal speed, without rendering the screen, for example. Or, we could use a set of heuristics that generate a value based on some assessment criteria, without ever running the game. If there is no way to perform the check other than running the game with the player, then the techniques in this chapter are unlikely to be practical.

There is nothing to stop the energy or fitness value from changing over time or containing some degree of guesswork. Often, the performance of the AI depends on what the player is doing. For online learning, this is exactly what we want. The best parameter value will change over time as the player behaves differently in the game. The algorithms in this section cope well with this kind of uncertain and changing fitness or energy score.

In all cases we will assume that we have some function that we can give a set of parameter values and it will return the fitness or energy value for those parameters. This might be a fast process (using heuristics) or it might involve running the game and testing the result. For the sake of parameter modification algorithms, however, it can be treated as a black box: in goes the parameters and out comes the score.

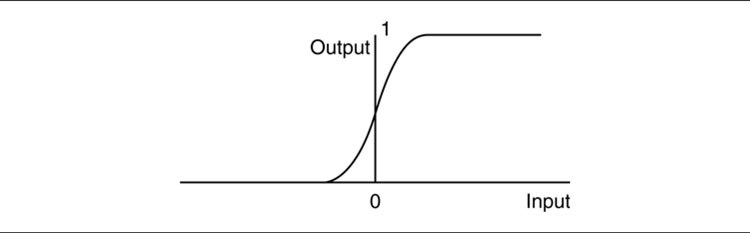

Initially, a guess is made as to the best parameter value. This can be completely random; it can be based on the programmer’s intuition or even on the results from a previous run of the algorithm. This parameter value is evaluated to get a score.

The algorithm then tries to work out in what direction to change the parameter in order to improve its score. It does this by looking at nearby values for each parameter. It changes each parameter in turn, keeping the others constant, and checks the score for each one. If it sees that the score increases in one or more directions, then it moves up the steepest gradient. Figure 7.2 shows the hill climbing algorithm scaling a fitness landscape.

Figure 7.2: Hill climbing ascends a fitness landscape

In the single parameter case, two neighboring values are sufficient, one on each side of the current value. For two parameters four samples are used, although more samples in a circle around the current value can provide better results at the cost of more evaluation time.

Hill climbing is a very simple parametric optimization technique. It is fast to run and can often give very good results.

Pseudo-Code

One step of the algorithm can be run using the following implementation:

The STEP constant in this function dictates the size of each tweak that can be made. We could replace this with an array, with one value per parameter if parameters required different step sizes.

The optimizeParameters function can then be called multiple times in a row to give the hill climbing algorithm. At each iteration the parameters given are the results from the previous call to optimizeParameters.

Data Structures and Interfaces

The list of parameters has its number of elements accessed with the size method. Other than this, there are no special interfaces or data structures required.

Implementation Notes

In the implementation above I evaluate the function on the same set of parameters inside both the driver and the optimization functions. This is wasteful, especially if the evaluation function is complex or time-consuming.

We should allow the same value to be shared, either by caching it (so it isn’t re-evaluated when the evaluation function is called again) or by passing both the value and the parameters back from optimizeParameters.

Performance

Each iteration of the algorithm is O(n) in time, where n is the number of parameters. It is O(1) in memory. The number of iterations is controlled by the steps parameter. If the steps parameter is sufficiently large, then the algorithm will return when it has found a solution (i.e., it has a set of parameters that it cannot improve further).

7.2.3 EXTENSIONS TO BASIC HILL CLIMBING

The hill climbing problem given in the algorithm description above is very easy to solve. It has a single slope in each direction from the highest fitness value. Following the slope will always lead you to the top. The fitness landscape in Figure 7.3 is more complex. The hill climbing algorithm shows that the best parameter value is never found. It gets stuck on a small sub-peak on the way to the main peak.

This sub-peak is called a local maximum (or a local minimum if we are using an energy landscape). The more local maxima there are in a problem, the more difficult it is for any algorithm to solve. At worst, every fitness or energy value could be random and not correlated to the nearby values at all. This is shown in Figure 7.4, and, in this case, no systematic search mechanism will be able to solve the problem.

Figure 7.3: Non-monotonic fitness landscape with sub-optimal hill climbing

Figure 7.4: Random fitness landscape

The basic hill climbing algorithm has several extensions that can be used to improve performance when there are local maxima. None of them forms a complete solution, and none works when the landscape is near to random, but they can help if the problem isn’t overwhelmed by sub-optima.

Figure 7.5: Non-monotonic fitness landscape solved by momentum hill climbing

Momentum

In the case of Figure 7.3 (and many others), we can solve the problem by introducing momentum. If the search is consistently improving in one direction, then it should continue in that direction for a little while, even when it seems that things aren’t improving any more.

This can be implemented using a momentum term. When the hill climber moves in a direction, it keeps a record of the score improvement it achieved at that step. At the next step it adds a proportion of that improvement to the fitness score for moving in the same direction again, which then biases the algorithm to move in the same direction again.

This approach will deliberately overshoot the target, take a couple of steps to work out that it is getting worse, and then reverse. Figure 7.5 shows the previous fitness landscape with momentum in the hill climbing algorithm. Notice that it takes much longer to reach the best parameter value, but it doesn’t get stuck so easily on the way to the main peak.

Adaptive Resolution

So far we have assumed that the parameter is changed by the same amount at each step of the algorithm. When the parameter is a long way from the best value, taking small steps means that the learning is slow (especially if it takes a while to generate a score by having the AI play the game). On the other hand, if the steps are large, then the optimization may always overshoot and never reach the best value.

Adaptive resolution is often used to make long jumps early in the search and smaller jumps later on. As long as the hill climbing algorithm is successfully improving, it will increase the length of its jumps somewhat. When it stops improving, it assumes that the jumps are overshooting the best value and reduces their size. This approach can be combined with a momentum term or used on its own in a regular hill climber.

Figure 7.6: Hill climbing multiple trials

Multiple Trials

Hill climbing is very much dependent on the initial guess. If the initial guess isn’t on the slope toward the best parameter value, then the hill climber may move off completely in the wrong direction and climb a smaller peak. Figure 7.6 shows this situation.

Most hill climbing algorithms use multiple different start values distributed across the whole landscape. In Figure 7.6, the correct optimum is found on the third attempt.

In cases where the learning is being performed online and the player expects the AI not to suddenly get worse (because it starts the hill climbing again with a new parameter value), this may not be a suitable technique.

Finding the Global Optimum

So far we’ve talked as if the goal is to find the best possible solution. This is undoubtedly our ultimate aspiration, but we are faced with a problem. In most problems we not only have no idea what the best solution is but we can’t even recognize it when we find it.

Let’s say in an RTS game we are trying to optimize the best use of resources into construction or research, for example. We may run 200 trials and find that one set of parameters is clearly the best. We can’t guarantee it is the best of all possible sets, however. Even if the last 50 trials all come up with the same value, we can’t guarantee that we won’t find a better set of parameters on the next go. There is no formula we can work out that lets us tell if the solution we have is the best possible one.

Extensions to hill climbing such as momentum, adaptive resolution, and multiple trials don’t guarantee that we get the best solution, but compared to the simple hill climbing algorithm they will almost always find better solutions more quickly. In a game we need to balance the time spent looking with the quality of solution. Eventually, the game needs to stop looking and conclude that the solution it has will be the one it uses, regardless if there is a better one out there.

This is sometimes called “satisficing” (although that term has different meanings for different people): we are optimizing to get a satisfactory result, rather than to find the best result.

Annealing is a physical process where the temperature of a molten metal is slowly reduced, allowing it to solidify in a highly ordered way. Reducing the temperature suddenly leads to internal stresses, weaknesses, and other undesired effects. Slow cooling allows the metal to find its lowest energy configuration.

As a parameter optimization technique, annealing uses a random term to represent the temperature. Initially, it is high, making the behavior of the algorithm very random. Over time it reduces, and the algorithm becomes more predictable.

It is based on the standard hill climbing algorithm, although it is customary to think in terms of energy landscapes rather than fitness landscapes (hence hill climbing becomes hill descent).

There are many ways to introduce the randomness into the hill descent algorithm. The original method uses a calculated Boltzmann probability coefficient. We’ll look at this later in this section. A simpler method is more commonly implemented, however, for simple parameter learning applications.

Direct Method

At each hill climbing step, a random number is added to the evaluation for each neighbor of the current value. In this way the best neighbor is still more likely to be chosen, but it can be overridden by a large random number. The range of the random number is initially large, but is reduced over time.

For example, the random range is ±10, the evaluation of the current value is 0, and its neighbors have evaluations of 20 and 39. A random number is added from the range ±10 to each evaluation. It is possible that the first value (scoring 20) will be chosen over the second, but only if the first gets a random number of +10 and the second gets a random number of -10. In the vast majority of cases, the second value will be chosen.

Several steps later, the random range might be ±1, in which case the first neighbor could never be chosen. On the other hand, at the start of the annealing, the random range might be ±100, where the first neighbor has a very good chance of being chosen.

Pseudo-Code

We can apply this directly to our previous hill climbing algorithm. The optimizeParameters function is replaced by annealParameters.

The randomBinomial function is implemented as

as in previous chapters.

The main hill climbing function should now call annealParameters rather than optimizeParameters.

Implementation Notes

I have changed the direction of the comparison operation in the middle of the algorithm. Because annealing algorithms are normally written based on energy landscapes, I have changed the implementation so that it now looks for a lower function value.

Performance

The performance characteristics of the algorithm are as before: O(n) in time and O(1) in memory.

Boltzmann Probabilities

Motivated by the physical annealing process, the original simulated annealing algorithm used a more complex method of introducing the random factor to hill climbing. It was based on a slightly less complex hill climbing algorithm.

In our hill climbing algorithm we evaluate all neighbors of the current value and work out which is the best one to move to. This is often called “steepest gradient” hill climbing, because it moves in the direction that will bring the best results. A simpler hill climbing algorithm will simply move as soon as it finds the first neighbor with a better score. It may not be the best direction to move in, but is an improvement nonetheless.

We can combine annealing with this simpler hill climbing algorithm as follows. If we find a neighbor that has a lower (better) score, we select it as normal. If the neighbor has a worse score, then we calculate the energy we’ll be gaining by moving there, ΔE. We make this move with a probability proportional to

where T is the current temperature of the simulation (corresponding to the amount of randomness). In the same way as previously, the T value is lowered over the course of the process.

Pseudo-Code

We can implement a Boltzmann optimization step in the following way:

The exp function returns the value of e raised to the power of its argument. It is a standard function in most math libraries.

The driver function is as before, but now calls boltzmannAnnealParameters rather than optimizeParameters.

Performance

The performance characteristics of the algorithm are as before: O(n) in time and O(1) in memory.

Optimizations

Just like regular hill climbing, annealing algorithms can be combined with momentum and adaptive resolution techniques for further optimization. Combining all these techniques is often a matter of trial and error, however. Tuning the amount of momentum, changing the step size, and annealing temperature so they work in harmony can be tricky.

In my experience I’ve rarely been able to make reliable improvements to annealing by adding in momentum, although adaptive step sizes are useful.

It is often useful to be able to guess what players will do next. Whether it is guessing which passage they are going to take, which weapon they will select, or which route they will attack from, a game that can predict a player’s actions can mount a more challenging opposition.

Humans are notoriously bad at behaving randomly. Psychological research has been carried out over decades and shows that we cannot accurately randomize our responses, even if we specifically try. Mind magicians and expert poker players make use of this. They can often easily work out what we’ll do or think next based on a relatively small amount of experience of what we’ve done in the past.

Often, it isn’t even necessary to observe the actions of the same player. We have shared characteristics that run so deep that learning to anticipate one player’s actions can often lead to better play against a completely different player.

A simple prediction game is “left or right.” One person holds a coin in either the left or right hand. The other person then attempts to guess which hand the person has hidden it in.

Although there are complex physical giveaways (called “tells”) which indicate a person’s choice, it turns out that a computer can score reasonably well at this game also. We will use it as the prototype action prediction task.

In a game context, this may apply to the choice of any item from a set of options: the choice of passageway, weapon, tactic, or cover point.

The simplest way to predict the choice of a player is to keep a tally of the number of times they choose each option. This will then form a raw probability of that player choosing that action again.

For example, after 20 times through a level, if the first passage has been chosen 72 times, and the second passage has been chosen 28 times, then the AI will be able to predict that a player will choose the first route.

Of course, if the AI then always lays in wait for the player in the first route, the player will very quickly learn to use the second route.

This kind of raw probability prediction is very easy to implement, but it gives a lot of feedback to the player, who can use the feedback to make their decisions more random.

In the above example, the character will position itself on the most likely route. The player will only fall foul of this once and then will use the other route. The character will continue standing where the player isn’t until the probabilities balance. Eventually, the player will learn to simply alternate different routes and always miss the character.

When the choice is made only once, then this kind of prediction may be all that is possible. If the probabilities are gained from many different players, then it can be a good indicator of which way a new player will go.

Often, a series of choices must be made, either repeats of the same choice or a series of different choices. The early choices can have good predictive power over the later choices. We can do much better than using raw probabilities.

When a choice is repeated several times (the selection of cover points or weapons when enemies attack, for example), a simple string matching algorithm can provide good prediction.

The sequence of choices made is stored as a string (it can be a string of numbers or objects, not just a string of characters). In the left-and-right game this may look like “LRRLRLLLRRLRLRR,” for example. To predict the next choice, the last few choices are searched for in the string, and the choice that normally follows is used as the prediction.

In the example above the last two moves were “RR.” Looking back over the sequence, two right-hand choices are always followed by a left, so we predict that the player will go for the left hand next time. In this case we have looked up the last two moves. This is called the “window size”: we are using a window size of two.

The string matching technique is rarely implemented by matching against a string. It is more common to use a set of probabilities similar to the raw probability in the previous section. This is known as an N-Gram predictor (where N is one greater than the window size parameter, so 3-Gram would be a predictor with a window size of two).

In an N-Gram we keep a record of the probabilities of making each move given all combinations of choices for the previous N moves. So in a 3-Gram for the left-and-right game we keep track of probability for left and right given four different sequences: “LL,” “LR,” “RL,” and “RR.” That is eight probabilities in all, but each pair must add up to one.

The sequence of moves above reduces to the following probabilities:

..R |

..L |

|

LR |

|

|

LR |

|

|

RL |

|

|

RR |

|

|

The raw probability method is equivalent to the string matching algorithm, with a zero window size.

N-Grams in Computer Science

N-Grams are used in various statistical analysis techniques and are not limited to prediction. They have applications particularly in analysis of human languages.

Strictly, an N-Gram algorithm keeps track of the frequency of each sequence, rather than the probability. In other words, a 3-Gram will keep track of the number of times each sequence of three choices is seen. For prediction, the first two choices form the window, and the probability is calculated by looking at the proportion of times each option is taken for the third choice.

In the implementation I will follow this pattern by storing frequencies rather than probabilities (they also have the advantage of being easier to update), although we will optimize the data structures for prediction by allowing lookup using the window choices only.

Pseudo-Code

We can implement the N-Gram predictor in the following way:

Each time an action occurs, the game registers the last n actions using the registerActions method. This updates the counts for the N-Gram. When the game needs to predict what will happen next, it feeds only the window actions into the getMostLikely method, which returns the most likely action or none if no data has ever been seen for the given action.

Data Structures and Interfaces

In the pseudo-code I have used a hash table to store count data. Each entry in the data hash is a key data record, which has the following structure:

There is one KeyDataRecord instance for each set of window actions. It contains counts for how often each following action is seen and a total member that keeps track of the total number of times the window has been seen.

We can calculate the probability of any following action by dividing its count by the total. This isn’t used in the algorithm above, but it can be used to determine how accurate the prediction is likely to be. A character may only lay an ambush in a dangerous location, for example, if it is very sure the player will come its way.

Within the record, the counts member is also a hash table indexed by the predicted action. In the getMostLikely function we need to be able to find all the keys in the counts hash table. This is done using the getKeys method.

Implementation Notes

The implementation above will work with any window size and can support more than two actions. It uses hash tables to avoid growing too large when most combinations of actions are never seen.

If there are only a small number of actions, and all possible sequences can be visited, then it will be more efficient to replace the nested hash tables with a single array. As in the table example at the start of this section, the array is indexed by the window actions and the predicted action. Values in the array initialized to zero are simply incremented when a sequence is registered. One row of the array can then be searched to find the highest value and, therefore, the most likely action.

Performance

Assuming that the hash tables are not full (i.e., that hash assignment and retrieval are constant time processes), the registerActions function is O(1) in time. The getMostLikely function is O(m) in time, where m is the number of possible actions (since we need to search each possible follow-on action to find the best). We can swap this over by keeping the counts hash table sorted by value. In this case, registerActions will be O(m) and getMostLikely will be O(1).

In most cases, however, actions will need to be registered much more often than they are predicted, so the balance as given is optimum.

The algorithm is O(mn) in memory, where n is the N value. The N value is the number of actions in the window, plus one.

Increasing the window size initially increases the performance of the prediction algorithm. For each additional action in the window, the improvement reduces until there is no benefit to having a larger window, and eventually the prediction gets worse with a larger window until we end up making worse predictions than we would if we simply guessed at random.

This is because, while our future actions are predicted by our preceding actions, this is rarely a long causal process. We are drawn toward certain actions and short sequences of actions, but longer sequences only occur because they are made up of the shorter sequences. If there is a certain degree of randomness in our actions, then a very long sequence will likely have a fair degree of randomness in it. The very large window size is likely to include more randomness and, therefore, be a poor predictor. There is a balance in having a large enough window to accurately capture the way our actions influence each other, without being so long that it gets foiled by our randomness. As the sequence of actions gets more random, the window size needs to be reduced.

Figure 7.7 shows the accuracy of an N-Gram for different window sizes on a sequence of 1,000 trials (for the left-or-right game). You’ll notice that we get greatest predictive power in the 5-Gram, and higher window sizes provide worse performance. But the majority of the power of the 5-Gram is present in the 3-Gram. If we use just a 3-Gram, we’ll get almost optimum performance, and we won’t have to train on so many samples. Once we get beyond the 10-Gram, prediction performance is very poor. Even on this very predictable sequence, we get worse performance than we’d expect if we guessed at random. This graph was produced using an N-Gram implementation which follows the algorithm given above.

In predictions where there are more than two possible choices, the minimum window size needs to be increased a little. Figure 7.8 shows results for the predictive power in a five choice game. In this case the 3-Gram does have noticeably less power than the 4-Gram.

We can also see in this example that the falloff is faster for higher window sizes: large window sizes get poorer more quickly than before.

There are mathematical models that can tell you how well an N-Gram predictor will predict a sequence. They are sometimes used to tune the optimal window size. I’ve never seen this done in games, however, and because they rely on being able to find certain inconvenient statistical properties of the input sequence, I suggest it is simpler to just start at a 4-Gram and use trial and error.

Figure 7.7: Different window sizes

Figure 7.8: Different windows in a five choice game

Memory Concerns

Counterbalanced against the improvement in predictive power are the memory and data requirements of the algorithm. For the left-and-right game, each additional move in the window doubles the number of probabilities that need to be stored (if there are three choices rather than two it triples the number, and so on). This increase in storage requirements can often get out of hand, although “sparse” data structures such as a hash table (where not every value needs to have storage assigned) can help.

Sequence Length

The larger number of probabilities requires more sample data to fill. If most of the sequences have never been seen before, then the predictor will not be very powerful. To reach the optimal prediction performance, all the likely window sequences need to have been visited several times. This means that learning takes much longer, and the performance of the predictor can appear quite poor. This final issue can be solved to some extent using a variation on the N-Gram algorithm: hierarchical N-Grams.

When an N-Gram algorithm is used for online learning, there is a balance between the maximum predictive power and the performance of the algorithm during the initial stages of learning. A larger window size may improve the potential performance, but will mean that the algorithm takes longer to get to a reasonable performance level.

The hierarchical N-Gram algorithm effectively has several N-Gram algorithms working in parallel, each with increasingly large window sizes. A hierarchical 3-Gram will have regular 1-Gram (i.e., the raw probability approach), 2-Gram, and 3-Gram algorithms working on the same data.

When a series of actions are provided, it is registered in all the N-Grams. A sequence of “LRR” passed to a hierarchical 3-Gram, for example, gets registered as normal in the 3-Gram, the “RR” portion gets registered in the 2-Gram, and “R” gets registered in the 1-Gram.

When a prediction is requested, the algorithm first looks up the window actions in the 3-Gram. If there have been sufficient examples of the window, then it uses the 3-Gram to generate its prediction. If there haven’t been enough, then it looks at the 2-Gram. If that likewise hasn’t had enough examples, then it takes its prediction from the 1-Gram. If none of the N-Grams has sufficient examples, then the algorithm returns no prediction or just a random prediction.

How many constitutes “enough” depends on the application. If a 3-Gram has only one entry for the sequence “LRL,” for example, then it will not be confident in making a prediction based on one occurrence. If the 2-Gram has four entries for the sequence “RL,” then it may be more confident. The more possible actions there are, the more examples are needed for an accurate prediction.

There is no single correct threshold value for the number of entries required for confidence. To some extent it needs to be found by trial and error. In online learning, however, it is common for the AI to make decisions based on very sketchy information, so the confidence threshold can be small (say, 3 or 4). In some of the literature on N-Gram learning, confidence values are much higher. As in many areas of AI, game AI can afford to take more risks.

Pseudo-Code

The hierarchical N-Gram system uses the original N-Gram predictor and can be implemented like the following:

I have added an explicit constructor in the algorithm to show how the array of N-Grams is structured.

Data Structures and Implementation

The algorithm uses the same data structures as previously and has the same implementation caveats: its constituent N-Grams can be implemented in whatever way is best for your application, as long as a count variable is available for each possible set of window actions.

Performance

The algorithm is O(n) in memory and O(n) in time, where n is the highest numbered N-Gram used.

The registerSequence method uses the O(1) registerSequence method of the N-Gram class, so it is O(n) overall. The getMostLikely method uses the O(n) getMostLikely method of the N-Gram class once, so it is O(n) overall.

Confidence

The code above used the number of samples to decide whether to use one level of N-Gram or to look at lower levels. While this gives good behavior in practice, it is strictly only an approximation. What we are interested in is the confidence that an N-Gram has in the prediction it will make. Confidence is a formal quantity defined in probability theory, although it has several different versions with their own characteristics. The number of samples is just one element that affects confidence.

In general, confidence measures the likelihood of a situation being arrived at by chance. If the probability of a situation being arrived at by chance is low, then the confidence is high.

For example, if we have four occurrences of “RL,” and all of them are followed by “R,” then there is a good chance that RL is normally followed by R, and our confidence in choosing R next is high. If we have 1000 “RL” occurrences followed always by “R,” then the confidence in predicting an “R” would be much higher. If, on the other hand, the four occurrences are followed by “R” in two cases and by “L” in two cases, then we’ll have no idea which one is more likely.

Actual confidence values are more complex than this. They need to take into account the probability that a smaller window size will have captured the correct data, while the more accurate N-Gram will have been fooled by random variation.

The math involved in all this isn’t concise and doesn’t buy any performance increase. I’ve only ever used a simple count cut-off in this kind of algorithm. In preparing for this book I experimented and changed implementation to take into account more complex confidence values, but there was no measurable improvement in performance.

By far the most widespread game application of N-Gram prediction is in combat games. Beat-em-ups, sword combat games, and any other combo-based melee games involve timed sequences of moves. Using an N-Gram predictor allows the AI to predict what the player is trying to do as they start their sequence of moves. It can then select an appropriate rebuttal.

This approach is so powerful, however, that it can provide unbeatable AI. A common requirement in this kind of game is to remove competency from the AI so that the player has a sporting chance.

This application is so deeply associated with the technique that many developers don’t give it a second thought in other situations. Predicting where players will be, what weapons they will use, or how they will attack are all areas to which N-Gram prediction can be applied.

So far I have described learning algorithms that operate on relatively restricted domains: the value of a parameter and predicting a series of player choices from a limited set of options.

To realize the potential of learning AI, we would need to allow the AI to learn to make decisions. Chapter 5 outlined several methods for making decisions; the following sections look at decision makers that choose based on their experience.

These approaches cannot replace the basic decision making tools. State machines, for example, explicitly limit the ability of a character to make decisions that are not applicable in a situation (no point choosing to fire if your weapon has no ammo, for example). Learning is probabilistic; you will usually have some probability (however small) of carrying out each possible action. Learning hard constraints is notoriously difficult to combine with learning general patterns of behavior suitable for outwitting human opponents.

7.4.1 THE STRUCTURE OF DECISION LEARNING

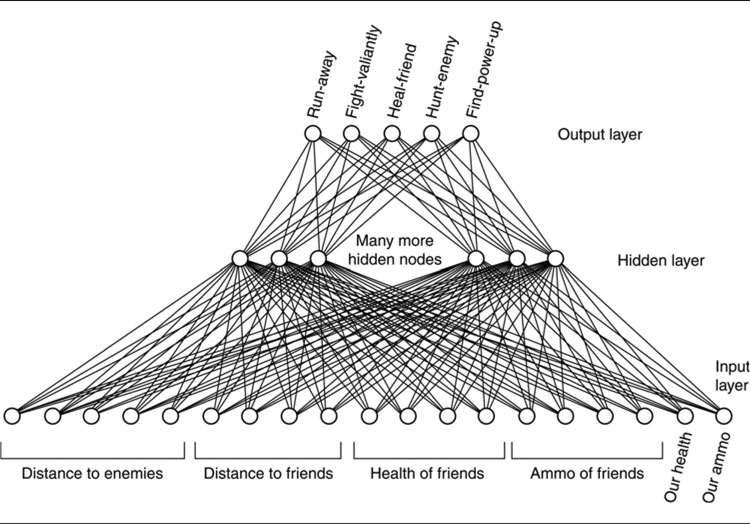

We can simplify the decision learning process into an easy to understand model. Our learning character has some set of behavior options that it can choose from. These may be steering behaviors, animations, or high-level strategies in a war game. In addition, it has some set of observable values that it can get from the game level. These may include the distance to the nearest enemy, the amount of ammo left, the relative size of each player’s army, and so on.

We need to learn to associate decisions (in the form of a single behavior option to choose) with observations. Over time, the AI can learn which decisions fit with which observations and can improve its performance.

Weak or Strong Supervision

In order to improve performance, we need to provide feedback to the learning algorithm. This feedback is called “supervision,” and there are two varieties of supervision used by different learning algorithms or by different flavors of the same algorithm.

Strong supervision takes the form of a set of correct answers. A series of observations are each associated with the behavior that should be chosen. The learning algorithm learns to choose the correct behavior given the observation inputs. These correct answers are often provided by a human player. The developer may play the game for a while and have the AI watch. The AI keeps track of the sets of observations and the decisions that the human player makes. It can then learn to act in the same way.

Weak supervision doesn’t require a set of correct answers. Instead, some feedback is given as to how good its action choices are. This can be feedback given by a developer, but more commonly it is provided by an algorithm that monitors the AI’s performance in the game. If the AI gets shot, then the performance monitor will provide negative feedback. If the AI consistently beats its enemies, then feedback will be positive.

Strong supervision is easier to implement and get right, but it is less flexible: it requires somebody to teach the algorithm what is right and wrong. Weak supervision can learn right and wrong for itself, but is much more difficult to get right.

Each of the remaining learning algorithms in this chapter works with this kind of model. It has access to observations, and it returns a single action to take next. It is supervised either weakly or strongly.

For any realistic size of game, the number of observable items of data will be huge and the range of actions will normally be fairly restricted. It is possible to learn very complex rules for actions in very specific circumstances.

This detailed learning is required for characters to perform at a high level of competency. It is characteristic of human behavior: a small change in our circumstances can dramatically affect our actions. As an extreme example, it makes a lot of difference if a barricade is made out of solid steel or cardboard boxes if we are going to use it as cover from incoming fire.

On the other hand, as we are in the process of learning, it will take a long time to learn the nuances of every specific situation. We would like to lay down some general rules for behavior fairly quickly. They will often be wrong (and we will need to be more specific), but overall they will at least look sensible.

Especially for online learning, it is essential to use learning algorithms that work from general principles to specifics, filling in the broad brush strokes of what is sensible before trying to be too clever. Often, the “clever” stage is so difficult to learn that AI algorithms never get there. They will have to rely on the general behaviors.

We’ll look at four decision learning techniques in the remainder of this chapter. All four have been used to some extent in games, but their adoption has not been overwhelming. The first technique, Naive Bayes classification, is what you should always try first. It is simple to implement and provides a good baseline for any more complicated techniques. For that reason, even academics who do research into new learning algorithms usually use Naive Bayes as a sanity check. In fact, much seemingly promising research in machine learning has foundered on the inability to do much better on a problem than Naive Bayes.

The second technique, decision tree learning, is also very practicable. It also has the important property than you can look at the output of the learning to see if it makes sense. The final two techniques, reinforcement learning and neural networks, have some potential for game AI, but are huge fields that I will only be able to overview here.

There are also many other learning techniques that you can read about in the literature. Modern machine learning is strongly grounded in Bayesian statistics and probability theory, so in that regard an introduction to Naive Bayes has the additional benefit of providing an introduction to the field.

The easiest way to explain Naive Bayes classifiers is with an example. Suppose we are writing a racing game and we want an AI character to learn a player’s style of going around corners. There are many factors that determine cornering style, but for simplicity let’s look at when the player decides to slow down based only on their speed and distance to a corner. To get started we can record some gameplay data to learn from. Here is a table that shows what a small subset of such data might look like:

brake? |

distance |

speed |

Y |

2.4 |

11.3 |

Y |

3.2 |

70.2 |

N |

75.7 |

72.7 |

Y |

80.6 |

89.4 |

N |

2.8 |

15.2 |

Y |

82.1 |

8.6 |

Y |

3.8 |

69.4 |

It is important to make the patterns in the data as obvious as possible; otherwise, the learning algorithm will require so much time and data that it will be impractical. So the first thing you need to do when thinking about applying learning to any problem is to look at your data. When we look at the data in the table we see some clear patterns emerging. Players are either near or far from the corner and are either going fast or slow. We will codify this by labeling distances below 20.0 as “near” and “far” otherwise. Similarly, we are going to say that speeds below 10.0 are considered “slow,” otherwise they are “fast.” This gives us the following table of binary discrete attributes:

brake? |

distance |

speed |

Y |

near |

slow |

Y |

near |

fast |

N |

far |

fast |

Y |

far |

fast |

N |

near |

slow |

Y |

far |

slow |

Y |

near |

fast |

Even for a human it is now easier to see connections between the attribute values and action choices. This is exactly what we were hoping for as it will make the learning fast and not require too much data.

In a real example there will obviously be a lot more to consider and the patterns might not be so obvious. But often knowledge of the game makes it fairly easy to know how to simplify things. For example, most human players will categorize objects as “in front,” “to the left,” “to the right,” or “behind.” So a similar categorization, instead of using precise angles, probably makes sense for the learning, too.

There are also statistical tools that can help. These tools can find clusters and can identify statistically significant combinations of attributes. But they are no match for common sense and practice. Making sure the learning has sensible attributes is part of the art of applying machine learning and getting it wrong is one of the main reasons for failure.

Now we need to specify precisely what it is that we would like to learn. We want to learn the conditional probability that a player would decide to brake given their distance and speed to a corner. The formula for this is

The next step is to apply Bayes rule:

The important point about Bayes rule is that it allows us to express the conditional probability of A given B, in terms of the conditional probability of B given A. We’ll see why this is important when we try to apply it. But first we’re going to re-state Bayes rule slightly as:

where

Here is the re-stated version of Bayes rule applied to our example:

Next we’ll apply a naive assumption of conditional independence to give:

If you remember any probability theory, then you’ve probably seen a formula a bit like this one before (but without the conditioning part) in the definition of independence.

Putting the application of Bayes rule and the naive assumption of conditional independence altogether gives the following final formula:

(7.1) |

The great thing about this final version is that we can use the table of values we generated earlier to look up various probabilities. To see how let’s consider the case when we have an AI character trying to decide whether to brake, or not, in a situation where the distance to a corner is 79.2 and its speed is 12.1. We want to calculate the conditional probability that a human player would brake in the same situation and use that to make our decision.

There are only two possibilities, either we brake or we don’t. So we will consider each one in turn. First, let’s calculate the probability of braking:

We begin by discretizing these new values to give:

Now we use the formula we derived in Equation 7.1, above, to give:

From the table of values we can count that for the 5 cases when people were braking, there are 2 cases when they were far away. So we estimate:

Similarly, we can count 2 out of 5 cases when people braked while traveling at slow speed to give:

Again from the table, in total there were 5 cases out of 7 when people were braking at all, to give:

This value is known as the prior since it represents the probability of braking prior to any knowledge about the current situation. An important point about the prior is that if an event is inherently unlikely, then the prior will be low. Therefore, the overall probability, given what we know about the current situation, can still be low. For example, Ebola is (thankfully) a rare disease so the prior that you have the disease is almost zero. So even if you have one of the symptoms, multiplying by the prior still makes it very unlikely that you actually have the disease.

Going back to our braking example, we can now put all these calculations together to compute the conditional probability a human player would brake in the current situation:

But what about the value of α? It turns out not to be important. To see why, let’s now calculate the probability of not braking:

The reason we don’t need a is because it cancels out (it has to be positive because probabilities can never be less than 0):

So the probability of braking is greater than that of not braking. If the AI character wants to behave like the humans from which we collected the data, then it should also brake.

The simplest implementation of a NaiveBayesClassifier class assumes we only have binary discrete attributes.

It’s not hard to extend the algorithm to non-binary discrete labels and non-binary discrete attributes. We also usually want to optimize the speed of the predict method. This is especially true in offline learning applications. In such cases you should pre-compute as many probabilities as possible in the update method.

One of the problems with multiplying small numbers together (like probabilities) is that, with the finite precision of floating point, they very quickly lose precision and eventually become zero. The usual way to solve this problem is to represent all probabilities as logarithms and then, instead of multiplying, we add. That is one of the reasons in the literature that you will often see people writing about the “log-likelihood.”

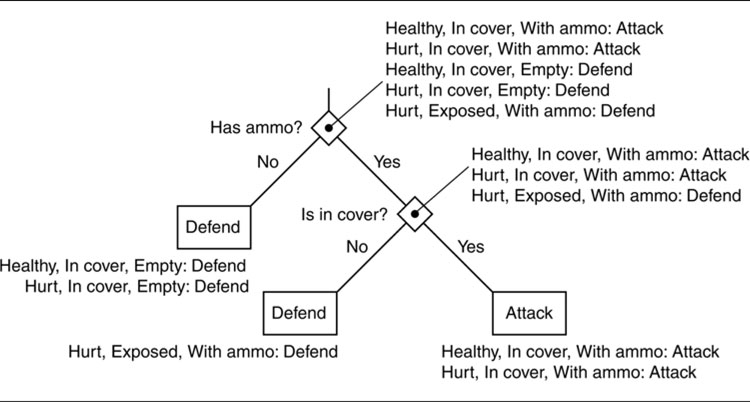

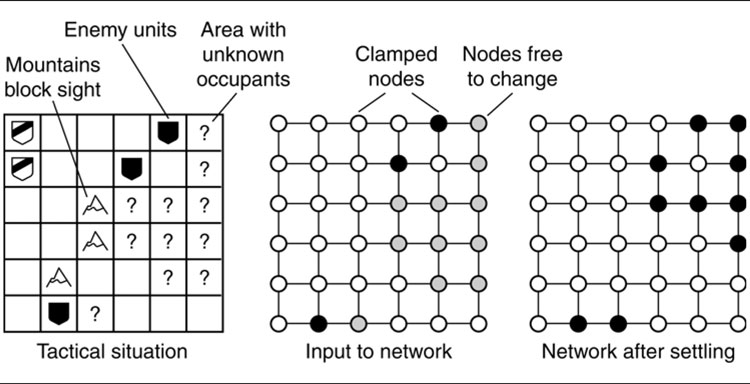

In Chapter 5 I described decision trees: a series of decisions that generate an action to take based on a set of observations. At each branch of the tree some aspect of the game world was considered and a different branch was chosen. Eventually, the series of branches lead to an action (Figure 7.9).

Trees with many branch points can be very specific and make decisions based on the intricate detail of their observations. Shallow trees, with only a few branches, give broad and general behaviors.

Decision trees can be efficiently learned: constructed dynamically from sets of observations and actions provided through strong supervision. The constructed trees can then be used in the normal way to make decisions during gameplay.

There are a range of different decision tree learning algorithms used for classification, prediction, and statistical analysis. Those used in game AI are typically based on Quinlan’s ID3 algorithm, which we will examine in this section.

Figure 7.9: A decision tree

Depending on whom you believe, ID3 stands for “Inductive Decision tree algorithm 3” or “Iterative Dichotomizer 3.” It is a simple to implement, relatively efficient decision tree learning algorithm.

Like any other algorithm it has a whole host of optimizations useful in different situations. It has been largely replaced in industrial AI use by optimized versions of the algorithm: C4, C4.5, and C5. In this book we’ll concentrate on the basic ID3 algorithm, which provides the foundation for these optimizations.

Algorithm

The basic ID3 algorithm uses the set of observation–action examples. Observations in ID3 are usually called “attributes.” The algorithm starts with a single leaf node in a decision tree and assigns a set of examples to the leaf node.

It then splits its current node (initially the single start node) so that it divides the examples into two groups. The division is chosen based on an attribute, and the division chosen is the one that is likely to produce the most efficient tree. When the division is made, each of the two new nodes is given the subset of examples that applies to them, and the algorithm repeats for each of them.

This algorithm is recursive: starting from a single node it replaces them with decisions until the whole decision tree has been created. At each branch creation it divides up the set of examples among its daughters, until all the examples agree on the same action. At that point the action can be carried out; there is no need for further branches.

The split process looks at each attribute in turn (i.e., each possible way to make a decision) and calculates the information gain for each possible division. The division with the highest information gain is chosen as the decision for this node. Information gain is a mathematical property, which we’ll need to look at in a little depth.

Entropy and Information Gain

In order to work out which attribute should be considered at each step, ID3 uses the entropy of the actions in the set. Entropy is a measure of the information in a set of examples. In our case, it measures the degree to which the actions in an example set agree with each other. If all the examples have the same action, the entropy will be 0. If the actions are distributed evenly, then the entropy will be 1. Information gain is simply the reduction in overall entropy.

You can think of the information in a set as being the degree to which membership of the set determines the output. If we have a set of examples with all different actions, then being in the set doesn’t tell us much about what action to take. Ideally, we want to reach a situation where being in a set tells us exactly which action to take.

This will be clearly demonstrated with an example. Let’s assume that we have two possible actions: attack and defend. We have three attributes: health, cover, and ammo. For simplicity, we’ll assume that we can divide each attribute into true or false: healthy or hurt, in cover or exposed, and with ammo or an empty gun. We’ll return later to situations with attributes that aren’t simply true or false.

Our set of examples might look like the following:

Healthy |

In Cover |

With Ammo |

Attack |

Hurt |

In Cover |

With Ammo |

Attack |

Healthy |

In Cover |

Empty |

Defend |

Hurt |

In Cover |

Empty |

Defend |

Hurt |

Exposed |

With Ammo |

Defend |

For two possible outcomes, attack and defend, the entropy of a set of actions is given by:

where pA is the proportion of attack actions in the example set, and pD is the proportion of defend actions. In our case, this means that the entropy of the whole set is 0.971.

At the first node the algorithm looks at each possible attribute in turn, divides the example set, and calculates the entropy associated with each division.

Divided by:

Health |

Ehealthy= 1.000 |

Ehurt= 0.918 |

Cover |

Ecover= 1.000 |

Eexposed= 0.000 |

Ammo |

Eammo= 0.918 |

Eempty= 0.000 |

Figure 7.10: The decision tree constructed from a simple example

The information gain for each division is the reduction in entropy from the current example set (0.971) to the entropies of the daughter sets. It is given by the formula:

where p⊤ is the proportion of examples for which the attribute is true, E⊤ is the entropy of those examples; similarly p⊥ and E⊥ refer to the examples for which the attribute is false. The equation shows that the entropies are multiplied by the proportion of examples in each category. This biases the search toward balanced branches where a similar number of examples get moved into each category.

In our example we can now calculate the information gained by dividing by each attribute:

So, of our three attributes, ammo is by far the best indicator of what action we need to take (this makes sense, since we cannot possibly attack without ammo). By the principle of learning the most general stuff first, we use ammo as our first branch in the decision tree.

If we continue in this manner, we will build the decision tree shown in Figure 7.10.

Notice that the health of the character doesn’t feature at all in this tree; from the examples we were given, it simply isn’t relevant to the decision. If we had more examples, then we might find situations in which it is relevant, and the decision tree would use it.

More than Two Actions

The same process works with more than two actions. In this case the entropy calculation generalizes to:

where n is the number of actions, and pi is the proportion of each action in the example set.

Most systems don’t have a dedicated base 2 logarithm. The logarithm for a particular base, lognx, is given by:

where the logarithms can be in any base (typically, base e is fastest, but it may be base 10 if you have an optimized implementation of that). So simply divide the result of whichever log you use by log(2) to give the logarithm to base 2.

Non-Binary Discrete Attributes

When there are more than two categories, there will be more than two daughter nodes for a decision.

The formula for information gained generalizes to:

where Si are the sets of examples for each of the n values of an attribute.

The listing below handles this situation naturally. It makes no assumptions about the number of values an attribute can have. Unfortunately, as we saw in Chapter 5, the flexibility of having more than two branches per decision isn’t too useful.

This still does not cover the majority of applications, however. The majority of attributes in a game either will be continuous or will have so many different possible values that having a separate branch for each is wasteful. We’ll need to extend the basic algorithm to cope with continuous attributes. We’ll return to this extension later in the section.

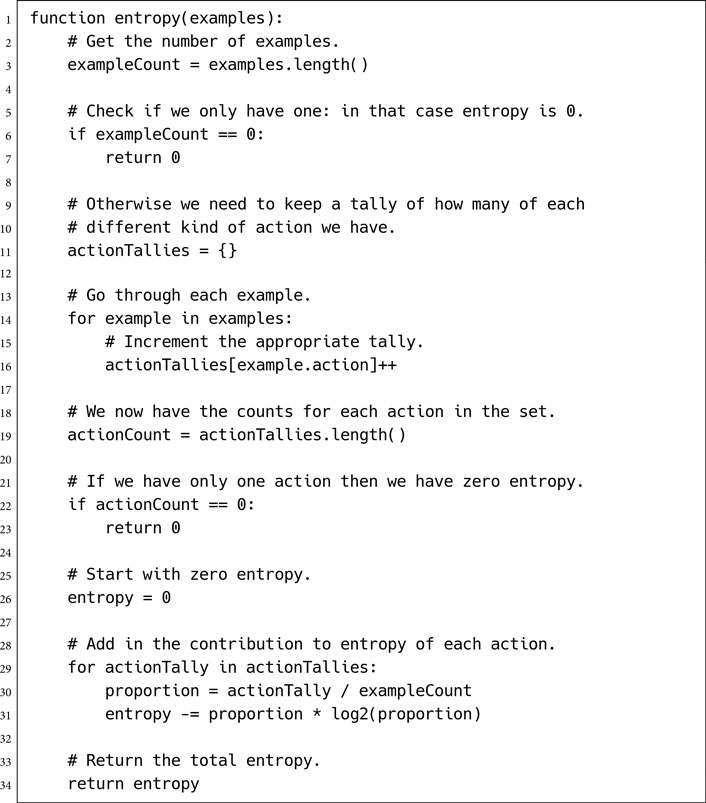

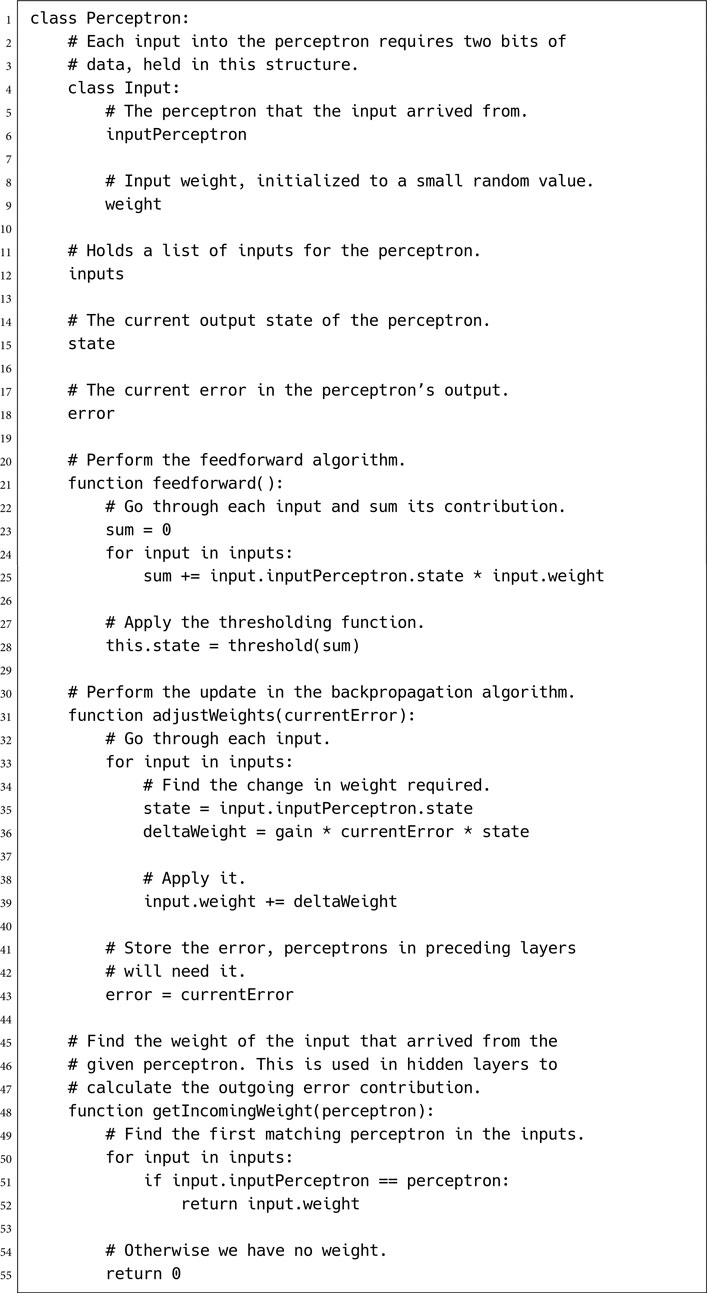

Pseudo-Code

The simplest implementation of makeTree is recursive. It performs a single split of a set of examples and then applies itself on each of the subsets to form the branches.

This pseudo-code relies on three key functions: splitByAttribute takes a list of examples and an attribute and divides them up into several subsets so that each of the examples in a subset share the same value for that attribute; entropy returns the entropy of a list of examples; and entropyOfSets returns the entropy of a list of lists (using the basic entropy function). The entropyOfSets method has the total number of examples passed to it to avoid having to sum up the sizes of each list in the list of lists. As we’ll see below, this makes implementation significantly easier.

Split by Attribute

The splitByAttribute function has the following form:

This pseudo-code treats the sets variable as both a dictionary of lists (when it adds examples based on their value) and a list of lists (when it returns the variable at the end). When it is used as a dictionary, care needs to be taken to initialize previously unused entries to be an empty list before trying to add the current example to it.

This duality is not a commonly supported requirement for a data structure, although the need for it occurs quite regularly. It is fairly easy to implement, however.

Entropy

The entropy function has the following form:

In this pseudo-code I have used the log2 function which gives the logarithm to base 2. As we saw earlier, this can be implemented as:

Although this is strictly correct, it isn’t necessary. We aren’t interested in finding the exact information gain. We are only interested in finding the greatest information gain. Because logarithms to any positive power retain the same order (i.e., if log2 x > log2 y then loge x > loge y), we can simply use the basic log in place of log2, and save on the floating point division.

The actionTallies variable acts both as a dictionary indexed by the action (we increment its values) and as a list (we iterate through its values). This can be implemented as a basic hash map, although care needs to be taken to initialize a previously unused entry to zero before trying to increment it.

Entropy of Sets

Finally, we can implement the function to find the entropy of a list of lists:

Data Structures and Interfaces

In addition to the unusual data structures used to accumulate subsets and keep a count of actions in the functions above, the algorithm only uses simple lists of examples. These do not change size after they have been created, so they can be implemented as arrays. Additional sets are created as the examples are divided into smaller groups. In C or C++, it is sensible to have the arrays refer by pointer to a single set of examples, rather than copying example data around constantly.

The pseudo-code assumes that examples have the following interface:

where getValue returns the value of a given attribute.

The ID3 algorithm does not depend on the number of attributes. action, not surprisingly, holds the action that should be taken given the attribute values.

Starting the Algorithm

The algorithm begins with a set of examples. Before we can call makeTree, we need to get a list of attributes and an initial decision tree node. The list of attributes is usually consistent over all examples and fixed in advance (i.e., we’ll know the attributes we’ll be choosing from); otherwise, we may need an additional application-dependent algorithm to work out the attributes that are used.

The initial decision node can simply be created empty. So the call may look something like:

![]()

Performance

The algorithm is O(a logv n) in memory and O(avn logv n) in time, where a is the number of attributes, v is the number of values for each attribute, and n is the number of examples in the initial set.

7.6.2 ID3 WITH CONTINUOUS ATTRIBUTES

ID3-based algorithms cannot operate directly with continuous attributes, and they are impractical when there are many possible values for each attribute. In either case the attribute values must be divided into a small number of discrete categories (usually two). This division can be performed automatically as an independent process, and with the categories in place the rest of the decision tree learning algorithm remains identical.

Single Splits

Continuous attributes can be used as the basis of binary decisions by selecting a threshold level. Values below the level are in one category, and values above the level are in another category. A continuous health value, for example, can be split into healthy and hurt categories with a single threshold value.

We can dynamically calculate the best threshold value to use with a process similar to that used to determine which attribute to use in a branch.

We sort the examples using the attribute we are interested in. We place the first element from the ordered list into category A and the remaining elements into category B. We now have a division, so we can perform the split and calculate information gained, as before.

We repeat the process by moving the lowest valued example from category B into category A and calculating the information gained in the same way. Whichever division gave the greatest information gained is used as the division. To enable future examples not in the set to be correctly classified by the resulting tree, we need a numeric threshold value. This is calculated by finding the average of the highest value in category A and the lowest value in category B.

This process works by trying every possible position to place the threshold that will give different daughter sets of examples. It finds the split with the best information gain and uses that.

The final step constructs a threshold value that would have correctly divided the examples into its daughter sets. This value is required, because when the decision tree is used to make decisions, we aren’t guaranteed to get the same values as we had in our examples: the threshold is used to place all possible values into a category.

As an example, consider a situation similar to that in the previous section. We have a health attribute, which can take any value between 0 and 200. We will ignore other observations and consider a set of examples with just this attribute.

50 |

Defend |

25 |

Defend |

39 |

Attack |

17 |

Defend |

We start by ordering the examples, placing them into the two categories, and calculating the information gained.

Category |

Attribute Value |

Action |

Information Gain |

A |

17 |

Defend |

|

B |

25 |

Defend |

|

39 |

Attack |

||

50 |

Defend |

0.12 |

|

Category |

Attribute Value |

Action |

Information Gain |

A |

17 |

Defend |

|

25 |

Defend |

||

B |

39 |

Attack |

|

50 |

Defend |

0.31 |

|

Category |

Attribute Value |

Action |

Information Gain |

A |

17 |

Defend |

|

25 |

Defend |

||

39 |

Attack |

||

B |

50 |

Defend |

0.12 |

We can see that the most information is gained if we put the threshold between 25 and 39. The midpoint between these values is 32, so 32 becomes our threshold value. Notice that the threshold value depends on the examples in the set. Because the set of examples gets smaller at each branch in the tree, we can get different threshold values at different places in the tree. This means that there is no set dividing line. It depends on the context. As more examples are available, the threshold value can be fine-tuned and made more accurate.

Determining where to split a continuous attribute can be incorporated into the entropy checks for determining which attribute to split on. In this form our algorithm is very similar to the C4.5 decision tree algorithm.

Pseudo-Code

We can incorporate this threshold step in the splitByAttribute function from the previous pseudo-code.

The sortReversed function takes a list of examples and returns a list of examples in order of decreasing value for the given attribute.

In the framework I described previously for makeTree, there was no facility for using a threshold value (it wasn’t appropriate if every different attribute value was sent to a different branch). In this case we would need to extend makeTree so that it receives the calculated threshold value and creates a decision node for the tree that could use it. In Chapter 5, Section 5.2 I described a FloatDecision class that would be suitable.

Data Structures and Interfaces

In the code above the list of examples is held in a stack. An object is removed from one list and added to another list using push and pop. Many collection data structures have these fundamental operations. If you are implementing your own lists, using a linked list, for example, this can be simply achieved by moving the “next“ pointer from one list to another.

Performance

The attribute splitting algorithm is O(n) in both memory and time, where n is the number of examples. Note that this is O(n) per attribute. If you are using it within ID3, it will be called once for each attribute.