14

Avoiding Pitfalls and Diving into the Future

The DevOps movement has evolved and changed in many ways since the Flickr talk at the 2009 Velocity Conference. New advances in technology, such as containers and Kubernetes, have changed many of the core practices. New ways of doing things such as site reliability engineering (SRE) have changed the roles people play.

In this chapter, we will examine how to start incorporating the principles and practices discussed throughout the book so that you can successfully move toward a DevOps transformation or move to SAFe®.

We will look at some emerging trends that may play a major role in the evolution of DevOps. We will look at XOps, which seeks to incorporate more parts of the organization into the collaborative approach seen in DevOps and DevSecOps. We will also look at an alternative to the standard linear value stream.

We will also examine new technologies that promise to change how we work. With AI for IT Operations, also called AIOps, we will examine the growing role Artificial Intelligence (AI) and Machine Learning (ML) play in predicting problems and vulnerabilities. We will look at the merging of version control and Continuous Integration/Continuous Deployment (CI/CD) when we examine GitOps.

Finally, we will look at common questions that may come up, along with some answers.

Avoiding pitfalls

In the journey toward a DevOps approach, it only makes sense that not everyone will follow the same path. Different organizations are at different stages of readiness to accept the mindset change needed to allow for a successful DevOps journey.

That being said, we will look at a set of steps for starting or restarting a DevOps approach based on what we have learned in the previous chapters of this book. You will see that there is frequently no direct path from one step to the next.

Let’s view the following steps for our DevOps journey:

- Understand the system

- Start small

- Start automating

- Measure, learn, and pivot

Let’s start on our journey now.

Understand the system

Whether we are starting fresh or restarting after stumbling, we need to take a step from the Improvement Kata from Lean thinking and evaluate where we are. This evaluation should be a holistic look at the existing processes, the people behind the processes, and whether the tools are enabling those processes.

One way of looking at all these facets is to do a value stream mapping, as first discussed in Chapter 7, Mapping Your Value Streams. As we originally spoke about in that chapter, performing a value stream mapping workshop allows you to discover the following things:

- The process steps in your workflow from beginning to end

- The people that perform those process steps

- Possible areas for improvement

The value stream mapping workshop also lets you view the entire development effort from the broader perspective of a system. This systemic view covers not just a series of process steps and improvements, but also how tools work in the process, or could work in it if they are not yet incorporated.

A value stream mapping workshop produces two value streams: the current value stream and an ideal value stream that contains enhancements and improvements to lower cycle time and lead time and increase Percent Complete and Accurate (%C&A). Record these enhancements so that the people in the value stream will work on them as part of continuous learning (CL).
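To make these measurements concrete, here is a minimal Python sketch of how a mapped value stream could be summarized. The step names and numbers are hypothetical, and the sketch assumes that cycle time means the active working time of a step, that lead time adds the waiting time in front of each step, and that the overall %C&A is the product of the per-step values.

```python
from dataclasses import dataclass
from math import prod


@dataclass
class Step:
    name: str
    cycle_time_hours: float       # time spent actively working (assumed meaning)
    wait_time_hours: float        # time the work waits before this step starts
    pct_complete_accurate: float  # %C&A as a fraction between 0.0 and 1.0


def total_lead_time(steps):
    """Lead time of the whole stream: active time plus waiting time."""
    return sum(s.cycle_time_hours + s.wait_time_hours for s in steps)


def total_cycle_time(steps):
    """Sum of the active working time of every step."""
    return sum(s.cycle_time_hours for s in steps)


def rolled_pct_complete_accurate(steps):
    """Chance that work passes every step without rework."""
    return prod(s.pct_complete_accurate for s in steps)


# Hypothetical current-state value stream
current = [
    Step("Design", 8, 40, 0.90),
    Step("Build", 16, 24, 0.80),
    Step("Test", 8, 72, 0.95),
    Step("Deploy", 2, 48, 0.90),
]

print("Lead time (h):", total_lead_time(current))
print("Cycle time (h):", total_cycle_time(current))
print("Rolled %C&A:", round(rolled_pct_complete_accurate(current), 4))
```

Running the same functions against the ideal value stream gives a simple way to compare the two maps and to see which enhancement would move the numbers the most.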

Start small

After the value stream mapping workshop, look at the list of improvements and enhancements from the ideal value stream. From the list of enhancements, the people in the value stream should select what they consider the most important enhancement to work on.

Allowing only one enhancement at a time promotes focus. We’ve seen this in Chapter 4, Leveraging Lean Flow to Keep the Work Moving, where working in small batch sizes avoids the problems of multitasking, which can prevent work from being completed.

One such enhancement may be to decrease cycle and lead time in developing your products by increasing flow. The practices in Chapter 4—such as small batch sizes, monitoring queues, and limiting WIP—can also help with this step. Following these practices may lead to increased agility that gets better with a DevOps approach.
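One way to see why limiting WIP shortens lead time is Little’s Law, which states that, for a stable system measured over the long run, average lead time equals average WIP divided by average throughput. The following is a small sketch with made-up numbers, not a measurement from a real team:

```python
def average_lead_time(avg_wip, throughput_per_day):
    """Little's Law: average lead time = average WIP / average throughput."""
    if throughput_per_day <= 0:
        raise ValueError("throughput must be positive")
    return avg_wip / throughput_per_day


# Hypothetical team that finishes 2 items per day on average
before = average_lead_time(avg_wip=20, throughput_per_day=2)
after = average_lead_time(avg_wip=8, throughput_per_day=2)

print(f"Lead time with 20 items in progress: {before} days")
print(f"Lead time with 8 items in progress: {after} days")
```

With throughput unchanged, lowering the number of items in progress from 20 to 8 lowers the average lead time in the same proportion.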

Another area of improvement the people of the value stream can look at is the use of their tooling. The first foundational tool to consider is version control. The teams in the value stream should evaluate putting all their files in a version control system. If they are already using version control, they should ensure that they are using a common version control system and that everyone has the necessary access.

In addition to selecting a version control system, the people of the value stream should select a branching model that outlines how they will branch from the trunk and when to merge changes back to the main branch or trunk.

Start automating

A key step toward improving the value stream is establishing a CI/CD pipeline. If the organization has not established a CI/CD pipeline, it may use the one that comes included with many version control systems. Other organizations may want the flexibility that comes from installing a separate CI/CD pipeline tool. Either approach works, as the most important thing is the creation of such a pipeline.

The CI/CD pipeline can then be triggered from version control when a commit occurs. Add the necessary actions that occur after the commit, one action at a time. These actions may start with building or compiling the changes that come from the commit. A completed build can then allow the merge into the higher-level branch and possibly save the build artifacts into an artifact repository.
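The idea of adding actions one at a time can be sketched in a few lines of Python. This is an illustration only and not the configuration format of any real CI/CD tool; the stage functions and the shape of the commit dictionary are hypothetical.

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, Callable[[dict], bool]]


def run_pipeline(commit: dict, stages: List[Stage]) -> List[str]:
    """Run the stages in order and stop at the first one that fails."""
    log = []
    for name, action in stages:
        ok = action(commit)
        log.append(f"{name}: {'ok' if ok else 'FAILED'}")
        if not ok:
            break
    return log


def build(commit: dict) -> bool:
    # Hypothetical build: succeeds when the commit changed at least one file
    return bool(commit["files"])


def merge_to_main(commit: dict) -> bool:
    commit["merged"] = True
    return True


def store_artifact(commit: dict) -> bool:
    commit["artifact"] = f"app-{commit['sha']}.tar.gz"
    return True


# Start with the build, then add further actions to the list one at a time
stages = [("build", build), ("merge", merge_to_main), ("store artifact", store_artifact)]

good = {"sha": "abc123", "files": ["app.py"]}
bad = {"sha": "def456", "files": []}

print(run_pipeline(good, stages))
print(run_pipeline(bad, stages))
```

Because the pipeline stops at the first failing stage, a broken build never reaches the merge or the artifact repository.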

Start automating the testing

Once the endpoints of the CI/CD pipeline are defined, you can fill other intermediate steps of the pipeline with automated testing. You can create tests that will automatically run and find problems with functionality and security.

Start by creating, one by one, automated tests that comprise the following categories:

- Unit testing

- Functional testing

- System testing

- Security testing (static application security testing (SAST), dynamic application security testing (DAST), and other scans)
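As a minimal sketch of the first category, the following Python example uses the standard `unittest` module to test a small function. The `apply_discount` function is hypothetical and stands in for any unit of product code.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Return the price after a percentage discount (hypothetical product code)."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    def test_typical_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_discount_keeps_price(self):
        self.assertEqual(apply_discount(99.99, 0), 99.99)

    def test_rejects_invalid_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(100.0, 120)


# Run the tests programmatically, as a pipeline step might
suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
print("All tests passed:", result.wasSuccessful())
```

A pipeline step can use the success flag of the result to decide whether the commit may move on to the next stage.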

Developing automated tests encourages the creation of multiple environments for deployment, such as test, staging, and production. Passing unit tests can allow deployment to a test environment for the other levels of testing.

Create environments, deployments, and monitoring

The establishment of testing allows us to establish the different pre-production environments, all of them equivalent as far as practicality will allow.

Introducing a new pre-production environment also allows us to introduce monitoring for these pre-production environments. Monitoring can start on the test environment until we have high enough confidence to deploy the monitoring on staging environments, and ultimately on production itself.

Constructing the pre-production environments allows us to establish the tooling to do the construction. Configuration management tooling and Infrastructure-as-Code (IaC) can be added to define and configure the environments. Establishing standard configurations allows capabilities such as rollback to occur.
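To illustrate why standard, recorded configurations make rollback possible, here is a small Python sketch. It is not the syntax of any IaC or configuration management tool; the `EnvironmentConfig` fields and the `Environment` class are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class EnvironmentConfig:
    """A declarative description of an environment (hypothetical fields)."""
    app_version: str
    instance_count: int
    instance_size: str


class Environment:
    def __init__(self, name: str, initial: EnvironmentConfig):
        self.name = name
        self._history: List[EnvironmentConfig] = [initial]

    @property
    def current(self) -> EnvironmentConfig:
        return self._history[-1]

    def apply(self, config: EnvironmentConfig) -> None:
        """Record and apply a new configuration."""
        self._history.append(config)

    def rollback(self) -> EnvironmentConfig:
        """Return to the previously applied configuration."""
        if len(self._history) < 2:
            raise RuntimeError("no earlier configuration to roll back to")
        self._history.pop()
        return self.current


baseline = EnvironmentConfig(app_version="1.4.0", instance_count=2, instance_size="small")
staging = Environment("staging", baseline)

staging.apply(EnvironmentConfig(app_version="1.5.0", instance_count=2, instance_size="small"))
staging.rollback()  # the new version misbehaved, so return to the baseline
print(staging.current)
```

Because every configuration is a complete, versioned description, rolling back is just a matter of reapplying the previous description.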

Measure, learn, and pivot

At every step, we should evaluate whether each additional step we add is working to bring us closer to the ideal future state value stream. Make adjustments as needed. These tiny course corrections allow for CL.

There may be questions about whether the timing is right to pivot. The justification is always to avoid the sunk cost fallacy, where people hold on, avoiding change even in the face of overwhelming evidence that change should happen.

One final note: an apocryphal story holds that John F. Kennedy noted that the Chinese term for crisis was made up of the Chinese words for danger and opportunity. While it is true that the first part of the Chinese term for crisis on its own equals danger, the second part of the term on its own translates to point of change or turning point.

Adopting SAFe or adopting DevOps is a lot like watching the characters that make up a crisis. Watching the danger and watching the turning points will keep us away from the crisis.

Throughout this book, we’ve talked about the evolution of DevOps from its beginnings in 2009 to the present day. Let’s now talk about trends that are starting to emerge.

Emerging trends in DevOps

One of the themes that contributed to the success of the DevOps movement was the collaboration between two separate functions of an organization, Development and Operations, to achieve the greater goal of releasing products frequently while maintaining stability in the production environment.

As the movement grew, its success inspired more imaginative efforts at collaboration to improve other aspects besides deployment frequency and stability. Some of the reimagined efforts we will examine include the following:

- XOps

- The Revolution model

- Platform engineering

Let’s begin our exploration into the potential future of DevOps.

XOps

The success of the DevOps movement has encouraged the inclusion of other parts of the organization alongside Development and Operations to improve other qualities in addition to deployment frequency and stability. Notable movements include the following:

- DevSecOps: The incorporation of security throughout the stages of the pipeline to ensure that security is not left behind. Currently, many people consider the practices performed in a DevSecOps approach to be fully absorbed into the DevOps movement.

- BizDevOps: Hailed as DevOps 2.0, this movement adds collaboration between business teams, developers, and operations personnel for the added goal of maximizing business revenue.

In addition, other engineering disciplines have found success in employing the same practices first promoted in DevOps. These DevOps-based movements for adjacent disciplines include DataOps and ModelOps.

Let’s look at how a DevOps approach works in these engineering disciplines.

DataOps

DataOps is the incorporation of Agile development principles, DevOps practices, and Lean thinking into the field of data analysis and data analytics.

Data analysis follows a process flow similar to product development. The following steps are part of a typical process for data analysis:

- Specification of the requirements for the data by those directing the analysis or by customers

- Collection of the data

- Processing data using statistical software or spreadsheets

- Data cleaning to remove duplicates or errors

- Exploratory data analysis (EDA)

- Data modeling to find relationships

- Creating outputs by using a data product application

- Reporting

As the volume of data increases exponentially, new methods are called into service to allow insights to be quickly obtained. The following methods resemble the steps seen in DevOps for product development:

- The establishment of a data pipeline that allows for the processing of data into reports and analytics using the data analysis process

- Verification of the data flowing through the data pipeline through Statistical Process Controls (SPC)

- Adding automated testing to ensure the data is correct in the data cleaning, data analysis, and data modeling stages

- Measurement of the data flow through the data pipeline

- Separation of data into development, test, and production environments to ensure the correct function of automation for the data pipeline

- Collaboration between data scientists, analysts, data engineers, IT, and quality assurance/governance
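As a simple illustration of verifying data with SPC, the following Python sketch derives control limits from a baseline and flags batches that fall outside them. The row counts are invented, and the three-sigma limits are one common convention rather than a rule prescribed by DataOps.

```python
from statistics import mean, stdev


def control_limits(baseline, sigmas=3.0):
    """Lower and upper control limits: mean plus or minus `sigmas` standard deviations."""
    center = mean(baseline)
    spread = stdev(baseline)
    return center - sigmas * spread, center + sigmas * spread


def out_of_control(values, limits):
    """Return the values that fall outside the control limits."""
    low, high = limits
    return [v for v in values if v < low or v > high]


# Hypothetical row counts from earlier, healthy runs of the data pipeline
baseline_row_counts = [1000, 1010, 990, 1005, 995, 1002, 998]
limits = control_limits(baseline_row_counts)

# Hypothetical row counts from today's batches
todays_batches = [1003, 640, 997]
flagged = out_of_control(todays_batches, limits)

print("Control limits:", tuple(round(x, 1) for x in limits))
print("Batches needing attention:", flagged)
```

A check like this can run as an automated test inside the data pipeline, stopping a suspicious batch before it reaches reports and analytics.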

The ability to easily find relationships based on voluminous amounts of data provides a competitive advantage. Using DataOps practices and principles may allow those creating insights from data to achieve greater deployment rates of reliable and error-free data analytics.

ModelOps

One of the largest trends in technology today is AI. This growing trend sets up ML and decision models that leverage large amounts of data to progressively improve performance. The development of these ML models can give a competitive advantage to any organization looking to understand more about their customers and how their products can help.

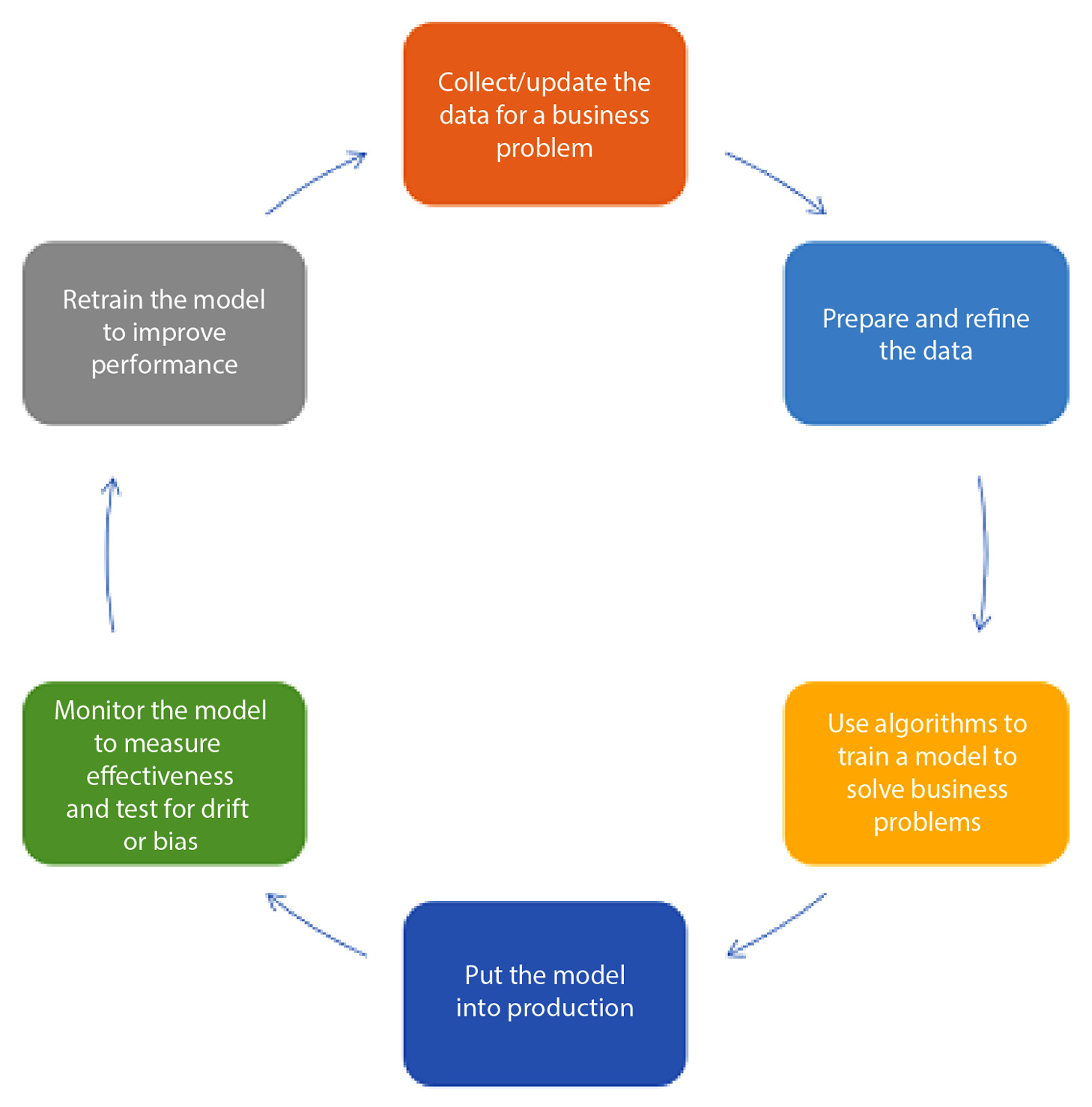

Developing effective models now goes through a development cycle, similar to the software development life cycle (SDLC) process. In December 2018, Waldemar Hummer and Vinod Muthusamy of IBM Research AI proposed the initial concept of ModelOps, where AI workflows were made more reusable, platform-independent, and composable using techniques derived from the DevOps approach.

The model life cycle covers how to create, train, deploy, monitor, and, based on feedback from the data, retrain the AI model for an enterprise. The following diagram demonstrates the path of the model life cycle:

Figure 14.1 – ModelOps life cycle (By Jforgo1 – Own work, CC BY-SA 4.0, https://commons.wikimedia.org/w/index.php?curid=99598780)

The model life cycle and the SDLC are similar, and both value deploying changes rapidly, monitoring their effects on the environment, and learning in order to improve. As a result, AI models developed using ModelOps can align with AI applications created using DevOps, and both can rely on data analytics developed using DataOps.
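The train, deploy, monitor, and retrain loop of the model life cycle can be sketched with a deliberately trivial model. Everything here is a toy assumption: `MeanModel` simply predicts the mean of its training data, and the error threshold that triggers retraining is arbitrary.

```python
from statistics import mean


class MeanModel:
    """Toy model that predicts the mean of its training data."""

    def __init__(self):
        self.prediction = None

    def train(self, data):
        self.prediction = mean(data)

    def error(self, data):
        """Mean absolute error of the constant prediction on new data."""
        return mean(abs(x - self.prediction) for x in data)


def life_cycle(model, batches, max_error):
    """Train on the first batch, then monitor later batches and retrain on drift."""
    events = []
    model.train(batches[0])
    events.append("train+deploy")
    for batch in batches[1:]:
        if model.error(batch) > max_error:
            model.train(batch)
            events.append("retrain+redeploy")
        else:
            events.append("monitor: ok")
    return events


# Hypothetical data where the third batch drifts away from the first two
batches = [[10, 12, 11], [11, 10, 12], [20, 22, 21], [21, 20, 22]]
events = life_cycle(MeanModel(), batches, max_error=3.0)
print(events)
```

The point of the sketch is the feedback loop: monitoring the deployed model against fresh data is what decides when the cycle starts again.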

The Revolution model

The concept of value streams for software development takes as a model the traditional SDLC process. This process, a remnant from Waterfall development, assumes that development happens in a step-by-step linear motion from one activity to the next. As products become more complex and DevOps expands the areas of responsibility, does a linear one-way progression of activities make sense?

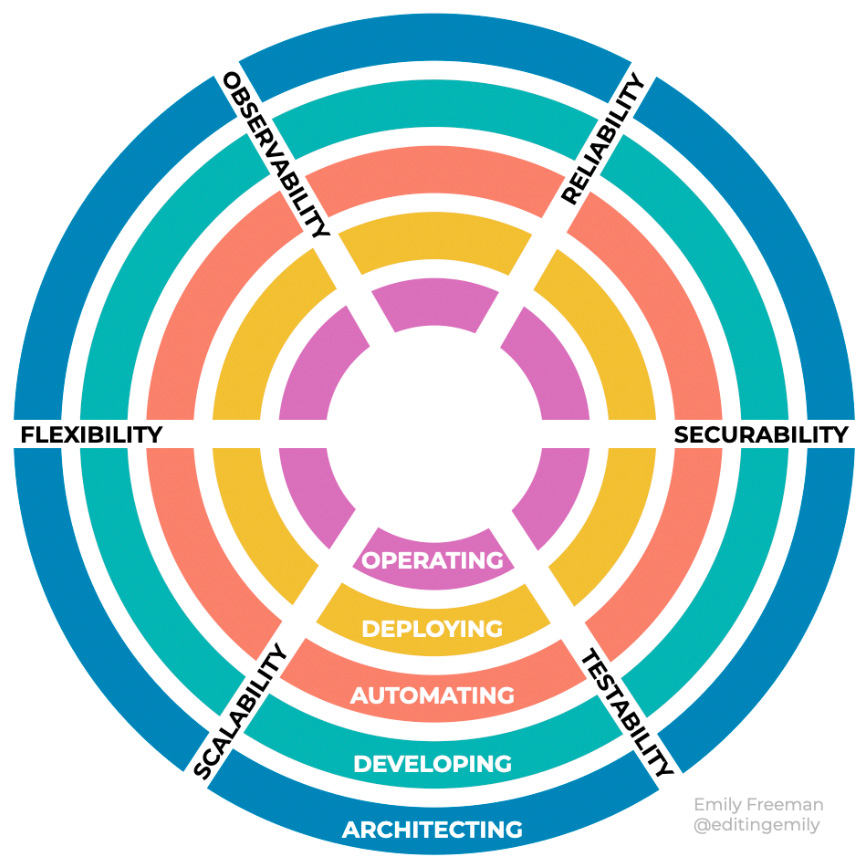

Emily Freeman, Head of Community Engagement at Amazon Web Services (AWS) and the author of DevOps for Dummies, has proposed a different way of looking at the development process. Instead of a straight-line approach, she outlines a model where priorities move forward and back, along circles of activity. She calls this model Revolution. Let’s take a closer look.

Freeman outlines the following five software development roles as concentric circles radiating out:

- Operating

- Deploying

- Automating

- Developing

- Architecting

Intersecting those circles are six qualities that engineers must consider in each activity. These qualities, listed here, are drawn as spokes against the concentric circles:

- Testability

- Securability

- Reliability

- Observability

- Flexibility

- Scalability

With the parts in place, let’s present the following diagram of the model:

Figure 14.2 – Revolution model

To ensure the six qualities are adequately met, an engineer or team member would jump from one role (or circle) to the next, starting from the outside in. Roles are meant to be adopted temporarily by the engineer and set aside once they are no longer needed. In an incident management scenario that Freeman illustrates in a keynote (https://www.youtube.com/watch?v=rNBXfgWcy5Q), the team has members assume roles in operating, developing, and deploying to investigate whether adequate reliability, observability, flexibility, scalability, and testability occur.
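One way to picture the model is as a grid of roles and qualities. The following Python sketch is our own illustration, not part of Freeman’s material; it encodes the roles and qualities listed above and builds the checklist of role and quality pairs for the incident scenario just described.

```python
from enum import Enum


class Role(Enum):
    OPERATING = "operating"
    DEPLOYING = "deploying"
    AUTOMATING = "automating"
    DEVELOPING = "developing"
    ARCHITECTING = "architecting"


class Quality(Enum):
    TESTABILITY = "testability"
    SECURABILITY = "securability"
    RELIABILITY = "reliability"
    OBSERVABILITY = "observability"
    FLEXIBILITY = "flexibility"
    SCALABILITY = "scalability"


# Roles and qualities named in the incident management scenario
incident_roles = [Role.OPERATING, Role.DEVELOPING, Role.DEPLOYING]
incident_qualities = [
    Quality.RELIABILITY,
    Quality.OBSERVABILITY,
    Quality.FLEXIBILITY,
    Quality.SCALABILITY,
    Quality.TESTABILITY,
]

# Every role taken on during the incident considers every quality in question
checklist = [(role, quality) for role in incident_roles for quality in incident_qualities]

# Qualities of the model that this particular scenario does not examine
not_examined = set(Quality) - set(incident_qualities)

print(len(checklist), "role/quality checks")
print("Not examined:", sorted(q.value for q in not_examined))
```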

Platform engineering

The amount of tooling and technology involved in cloud-native environments has grown rapidly in the past few years. The level of knowledge needed to set up and maintain the test, staging, and production environments on which products reside has overwhelmed those responsible for their operation.

Historically, DevOps started with the inclusion of Operations in an Agile development process so that stability and development speed are equally maintained. Over time, Operations has embraced using an Agile development mindset to solve reliability problems of large systems with SRE. Charity Majors, the CTO of honeycomb.io, a leading company in the development of a monitoring and observability platform, writes in a blog article (https://www.honeycomb.io/blog/future-ops-platform-engineering) that the next step is to look at the environments that are the responsibility of Operations as products themselves that can be developed using an Agile development mindset.

Platform engineering, as defined by Luca Galante of Humanitec (https://platformengineering.org/blog/what-is-platform-engineering), designs toolchains and workflows to allow self-service capabilities using this Agile development mindset. The product developed through platform engineering is an Internal Developer Platform (IDP), an abstraction of the technology used in operational environments for developers to build their products and solutions.

Developers will use the following five components of an IDP to establish the capabilities required by their product or solution:

- Application Configuration Management (ACM): This includes the automatic creation of manifest files used by configuration management tools to deploy application changes

- Infrastructure Orchestration: This establishes integration between the CI pipeline and the environments for deployment, including possible cluster creation/updates, IaC, and image registries

- Environment Management: This integrates the ACM of the IDP with the underlying infrastructure of the environments to allow developers to create fully provisioned environments when needed

- Deployment Management: This sets up the CD pipeline

- Role-Based Access Control (RBAC): This allows granular access to the environment and its resources
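To illustrate the last component, here is a minimal sketch of an RBAC check in Python. The role names, permission strings, and users are hypothetical and do not come from any particular IDP product.

```python
# Each role grants a set of permissions (hypothetical names)
ROLE_PERMISSIONS = {
    "developer": {"deploy:test", "read:logs"},
    "release-manager": {"deploy:test", "deploy:production", "read:logs"},
    "viewer": {"read:logs"},
}

# Each user is assigned one or more roles (hypothetical users)
USER_ROLES = {
    "ana": {"developer"},
    "raj": {"release-manager"},
    "kim": {"viewer"},
}


def is_allowed(user: str, permission: str) -> bool:
    """A user may act if any of their roles grants the permission."""
    roles = USER_ROLES.get(user, set())
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in roles)


print(is_allowed("ana", "deploy:test"))
print(is_allowed("ana", "deploy:production"))
print(is_allowed("raj", "deploy:production"))
```

Permissions attach to roles rather than to individuals, so granting or revoking access is a matter of changing a role assignment.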

We have seen trends emerge as people look at applying changes to processes, but DevOps will continue to change as technology changes. Let’s look at trends in DevOps that are driven by advances in technology.

New technologies in DevOps

DevOps today has expanded beyond what was originally envisioned in 2009, in part due to advances in technology that encouraged deployment into cloud-native environments. Advances today promise to add new capabilities never imagined.

The technologies we will examine for changing DevOps come from both refinements in deploying to cloud-native environments and applications of exciting new technology. We will take a look at these technology-based DevOps trends: AIOps and GitOps.

Let’s start out by looking to the future with AIOps.

AIOps

We discussed in the previous section the rise of the use of AI to create applications that can learn from the large amounts of data presented to AI models. One area that can benefit from improved insights based on large amounts of data may be product development that uses a DevOps approach and collects the data through full-stack telemetry. This is different from our previous discussions of DataOps or ModelOps because this time, we are applying ML and data visualization to our actual development process.

AI for IT Operations (or AIOps) looks to enhance traditional practices we previously identified in the CI, CD, and Release on Demand stages of the CD pipeline to incorporate tools that are based on ML to process the amount of data that comes from our full-stack telemetry. Incorporation of AIOps tools may assist in making sense of the following areas of the IT environment:

- Systems: Testing, staging, and production environments may be a complex mix of cloud-native and on-premises resources. Cloud resources may come from one or more vendors. The resources may be a mix of physical computing servers (“bare-metal”) or use a form of virtualization such as virtual machines (VMs) or containers. With such a large mix of resources, it becomes harder to ensure the environment is stable and reliable.

- Data: Full-stack telemetry creates mountains of data. Some of this data may be key to making crucial decisions, while some may not. How can we determine which data matters when the amount of data is so voluminous?

- Tools: To acquire full-stack telemetry, a wide variety of tools may be employed to collect the data and manage the systems. These tools may not interoperate with one another or may be limited in functionality, creating silos of data.

To answer this challenge, tools incorporating ML may adopt the following types of AI algorithms:

- Data selection: This analyzes data to remove redundant and non-relevant information

- Pattern discovery: This examines the data to determine relationships

- Inference: This looks at the insights to identify root causes

- Collaboration: This enables automation of notifications to teams of problems discovered

- Automation: This looks to automate a response to recurring problems
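The first three types of algorithm can be sketched with plain Python to show how they build on one another. Real AIOps tools rely on ML for these steps; the rules below (exact-duplicate removal, grouping by time window, and treating the earliest event as the candidate cause) are simplifying assumptions, and the events are invented.

```python
# Hypothetical telemetry events; "t" is a timestamp in seconds
events = [
    {"t": 100, "service": "db", "msg": "connection pool exhausted"},
    {"t": 101, "service": "db", "msg": "connection pool exhausted"},
    {"t": 103, "service": "api", "msg": "timeout calling db"},
    {"t": 104, "service": "web", "msg": "HTTP 502"},
    {"t": 900, "service": "batch", "msg": "job finished late"},
]


def select(events):
    """Data selection: keep the first occurrence of each (service, msg) pair."""
    seen, kept = set(), []
    for event in sorted(events, key=lambda e: e["t"]):
        key = (event["service"], event["msg"])
        if key not in seen:
            seen.add(key)
            kept.append(event)
    return kept


def discover_patterns(events, window=30):
    """Pattern discovery: group time-sorted events that occur within
    `window` seconds of the previous event."""
    groups = []
    for event in events:
        if groups and event["t"] - groups[-1][-1]["t"] <= window:
            groups[-1].append(event)
        else:
            groups.append([event])
    return groups


def infer_root_cause(group):
    """Inference (naive): treat the earliest event in a group as the candidate cause."""
    return group[0]["service"]


groups = discover_patterns(select(events))
candidates = [infer_root_cause(g) for g in groups if len(g) > 1]
print("Candidate root causes:", candidates)
```

The collaboration and automation types would then act on this output, for example by notifying the team that owns the candidate service.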

These tools have applications in terms of identifying security vulnerabilities, finding root causes of production failures, or even identifying trends that may predict impending problems in your production environments. As the technology improves, AIOps looks increasingly viable as a standard part of DevOps and IT operations.

GitOps

As acceptance of CI/CD pipelines grew, many wondered how to establish these pipelines to deploy features into cloud-native testing, staging, and production environments. In 2017, Weaveworks coined the term GitOps, suggesting a CI/CD approach that is triggered from a commit in Git, a popular version control program.

GitOps starts with version control. There are separate repositories for the application and the environment configuration. The application repository will contain the code for the product, including how to build the product as a container in a Dockerfile. The environment repository will contain configuration files and scripts for a CI/CD pipeline tool, as well as deployment records for the environments.

Deployments in GitOps can either be push-based or pull-based. A push-based deployment uses the customary CI/CD pipeline tools to move from CI to CD. Pull-based deployment employs an operator that observes changes to the environment repository. When those changes occur, the operator deploys the changes to the environment according to the environment repository changes.

The following diagram shows the progression from the Git repository to the CI pipeline to the operator and deployment pipeline:

Figure 14.3 – GitOps pull-based deployment
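The work of the operator in a pull-based deployment can be pictured as a reconciliation step that compares the desired state from the environment repository with what is actually running. The following Python sketch models both states as plain dictionaries of service names and versions; the names and the `reconcile` function are hypothetical and far simpler than a real operator.

```python
def reconcile(desired: dict, actual: dict) -> list:
    """Change `actual` to match `desired` and return the actions taken."""
    actions = []
    for name, version in desired.items():
        if name not in actual:
            actions.append(f"create {name}@{version}")
        elif actual[name] != version:
            actions.append(f"update {name} {actual[name]} -> {version}")
        actual[name] = version
    for name in list(actual):
        if name not in desired:
            actions.append(f"delete {name}")
            del actual[name]
    return actions


# Desired state, as it would be read from the environment repository
desired_state = {"web": "1.5.0", "api": "2.1.0"}

# What is currently running in the environment
cluster_state = {"web": "1.4.0", "worker": "0.9.0"}

actions = reconcile(desired_state, cluster_state)
print(actions)
```

An operator would repeat this step whenever the environment repository changes. Running it again with no new commits produces no actions, because the environment already matches the repository.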

GitOps has gained a large following among those looking to set up deployment using Kubernetes, a technology that orchestrates containers, such as those built with Docker, across clusters of machines to run microservices. As Kubernetes becomes more popular, GitOps has become an established practice for continuously deploying to Kubernetes clusters in cloud-based environments.

Frequently asked questions

We now take a look at some questions that may come up, even after reading the earlier parts of this book.

What is DevOps?

DevOps is a technical movement for developing and maintaining products. It incorporates Lean thinking and Agile development principles and practices to extend focus beyond development to the deployment, release, and maintenance of new products and their features. DevOps promotes the use of automation and tooling to allow frequent testing for proper functionality and security and allow for consistent deployments and releases. As products move to release, DevOps models operational practices, carried out by developers and operations personnel in a mission-based generative culture, that allow for rapid recovery should problems appear in production environments.

What is the Scaled Agile Framework®?

The Scaled Agile Framework, or SAFe, is a set of values, principles, and practices developed by Scaled Agile, Inc. SAFe is primarily adopted by medium and large-size organizations working to introduce Lean and Agile ways of working for teams of teams and portfolios in enterprises. Scaled Agile, Inc.’s own definition can be found in the article listed under Further reading.

Do I need to adopt SAFe to move to DevOps?

No. We look at SAFe while talking about DevOps because a key perspective of working in SAFe is the focus on creating and organizing value streams for its team of teams, known as an Agile Release Train (ART). SAFe also focuses on process and automation through its CD pipeline. Both of these are in line with successful DevOps approaches.

Adopting SAFe is best suited to cases where your value stream can be accomplished by a team of 5-12 regular-sized teams and this team of teams is working together on a single product or solution. This is known as the Essential SAFe configuration.

Is DevOps only for those companies that develop for cloud environments?

While many of the recent technical strides in DevOps were developed to help those who develop, test, deploy, release, and maintain on cloud environments, DevOps as a technical movement is agnostic to whichever technology your product is based on.

DevOps principles and practices have been successful in organizations that develop and maintain products on cloud, embedded hardware, mainframe, and physical server environments. Practices such as the adoption of Lean thinking, value stream management, and SRE do not rely on the technology used by the end product.

What’s the best tool for doing ___________?

A common question is to ask what the most preferred tool is to perform a specific function such as CI/CD, automated testing, configuration management, or security testing. I always refrain from picking an individual tool for several reasons.

The primary reason is that every industry and organization is different. A tool that works for one industry or organization may not work for a different industry or organization.

Another reason is that technology moves onward, releasing new tools that may now be considered “best.”

How does DevSecOps fit into DevOps?

Earlier in this chapter, we saw that DevSecOps was one of the first models of XOps, and that its security mindset and practices were eventually incorporated into the broader definition of DevOps. With DevSecOps, we are adding security as a focus in addition to the desired foci of development speed and operational stability in vanilla DevOps. In this book, we outlined several places where including security practices moves us from DevOps to DevSecOps.

In Chapter 6, Recovering from Production Failures, we saw that Chaos Engineering could be used to simulate disasters such as a security vulnerability and evaluate potential responses to such security failures.

In Chapter 10, Continuous Exploration and Finding New Features, we discovered that security was one of the primary considerations when trying to create new features for products. This consideration is handled by the system architect, often consulting with the organization’s security team.

We extended security further in Chapter 11, Continuous Integration of Solution Development, and Chapter 12, Continuous Deployment to Production, where we looked at adding security testing in various forms to detect potential vulnerabilities. During CI, we performed threat modeling to identify any potential attack vectors.

Finally, in Chapter 13, Releasing on Demand to Realize Value, we looked at continuous security monitoring and continuous security practices in the production environment to remain vigilant and fix environments when vulnerabilities do get exploited.

Summary

In this final chapter, we looked to the future. We first saw the emerging trends happening to DevOps from the product development side. New advances incorporating AI and data engineering have added new components to products under development. Development of artifacts such as AI models and data pipelines shows great benefits using a DevOps approach in new fields such as DataOps and ModelOps. We also examined a new look at software development and maintenance that may influence DevOps and value stream management.

Changes in technology have changed DevOps as well. We looked at incorporating AIOps products that include AI for analyzing the data collected in testing and maintenance to find vulnerabilities and potential failures. We explored the GitOps movement that unites version control with CD.

We then moved from the future of DevOps to making DevOps part of your organization’s future. We looked at adopting DevOps practices, including mapping your value stream and iteratively employing automation in different areas of your development process. We then finished with some questions you may have and their answers.

We have explored the different aspects of DevOps, from looking at the motivations of people to understanding the processes at play and examining the tools that can help achieve higher levels of performance. All three aspects are adopted in SAFe so that teams of teams can perform together as a value stream to develop, deploy, and maintain products that provide value to the customer. It is our hope that this exploration helps guide your thoughts as you continue your DevOps journey.

Further reading

For more information, refer to the following resources:

- Blog article talking about XOps and its various components: https://www.expressanalytics.com/blog/everything-you-need-to-know-about-xops/

- Description of XOps, its main components, and its popularity: https://datakitchen.io/gartner-top-trends-in-data-and-analytics-for-2021-xops/

- Guidance article from Scaled Agile, Inc. that details how to work with DataOps in your value stream: https://www.scaledagileframework.com/an-agile-approach-to-big-data-in-safe/

- An initial whitepaper from Waldemar Hummer and Vinod Muthusamy of IBM Research AI detailing their ModelOps process: https://s3.us.cloud-object-storage.appdomain.cloud/res-files/3842-plday18-hummer.pdf

- An essential guide to ModelOps, created by ModelOp.com: https://www.modelop.com/wp-content/uploads/2020/05/ModelOps_Essential_Guide.pdf

- Guidance article from Scaled Agile, Inc. on working with ModelOps on your value stream: https://www.scaledagileframework.com/succeeding-with-ai-in-safe/

- The official documentation for Emily Freeman’s Revolution model: https://github.com/revolution-model

- Keynote address that features Emily Freeman discussing the Revolution model: https://www.youtube.com/watch?v=rNBXfgWcy5Q

- Blog article from Charity Majors, CTO of honeycomb.io, introducing platform engineering: https://www.honeycomb.io/blog/future-ops-platform-engineering

- Article from Luca Galante of Humanitec, describing platform engineering: https://platformengineering.org/blog/what-is-platform-engineering

- Definition of an IDP and its components: https://internaldeveloperplatform.org

- Blog article from Moogsoft, a DevOps tool vendor, detailing the uses of AIOps: https://www.moogsoft.com/resources/aiops/guide/everything-aiops/

- A primer on GitOps: https://www.gitops.tech

- A blog article from Warren Marusiak of Atlassian, outlining an approach to adopting DevOps: https://community.atlassian.com/t5/DevOps-articles/How-to-do-DevOps/ba-p/2137695

- Article from Scaled Agile, Inc. defining the Scaled Agile Framework: https://www.scaledagileframework.com/safe-for-lean-enterprises/