Chapter 12

CCAF and Interactive Instruction

We come now to the truly fun and exciting point at which everything comes together as a creative blend of instructional design, subject matter, management vision and objectives, multimedia treatments, and technology. It comes together for the purpose of enabling specified behavioral changes to happen—the changes needed for performance success.

In this chapter, I have attempted to get at the heart of instructional interactivity. I've tried to separate the wheat from the chaff, as they say:

- To explore the differences between mechanical interactivity and instructional interactivity

- To provide a framework for thinking about instructional interactivity

- To help designers create the kind of interactivity that works to engage learners, builds needed competencies, and provides value in e-learning that fully justifies the investment.

Throughout this book, I've minimized theoretical discourse in favor of plain talk and practical prescriptions. It is especially appropriate to continue this approach here, as it seems to me that theory-based approaches, as much as I respect them and find them valuable, have not resonated effectively with many designers. My attempt here is to clearly identify the essential components of instructional interactivity, flag some frequent misconceptions, and provide a number of successful models—all in a way that you will find meaningful and useful, whatever your goals for e-learning may be.

Supernatural Powers

Interactivity is the supernatural power of e-learning. It has dual powers that together are capable of achieving the ultimate goal of success through performance improvement:

- Instructional interactivity invokes thinking. Designed and used properly, interactivity makes us think. Thinking can lead to understanding, and understanding can lead to increased capabilities and a readiness for better performance.

- Instructional interactivity requires doing: learners must perform. Rehearsed performance leads to skill development. Improved skills permit better performance.

Natural Learning Environments

From the moment we are born, we are learners. As infants, we love learning because almost everything we learn gives us a bit more power or comfort. Even before we can actually do anything to improve our lot, we gain satisfaction from recognizing signs and predicting. We predict that food is coming, that we are going to be picked up, or that we are about to hear an amusing sound. And we are happy to be right.

Effective learning environments employ interactivity. Just as we have done from infancy on, we acquire knowledge and skills from attempting various solutions and observing their results. It's evident in children as they undertake the myriad skills they must conquer, from signaling whether they need nourishment or a diaper change to holding up their heads and figuring out how to roll over. As we grow older and acquire the ability to understand language, we can be told how to do things and when to do them.

But verbal learning dissipates quickly and easily until we've actually performed successful behaviors ourselves and seen the results. Even then, effective rehearsal is important to integrate new behaviors fully into our repertoire of abilities.

e-Learning Environments and Rehearsal

Interactivity in e-learning provides an important opportunity for rehearsal without the risk of damaging equipment, hurting people's feelings, running up waste costs, or burning down buildings. Behaviors that help the learner avoid danger cannot be learned safely through experimental means except through very close mentoring or in simulated environments, such as those made possible in e-learning. Even if they could be learned in some kind of real-world environment, setup time and costs would prevent needed repetition, and instructing a number of people, who might be in many different locations, would present onerous problems.

Whether we are guided by a mentor or prompted by e-learning, interactivity is vital to effective learning events. As important as interactivity is for learning, however, instructional interactivity is an easily and often misunderstood concept.

Instructional Interactivity Defined

It is difficult to define and distinguish the salient attributes of instructional interactivity. Perhaps because of this, instructional interactivity is often thought to be present when, in reality, it is not. We can be deluded by all the buttons, graphics, and animated effects of today's multimedia or distracted by visual design and presentation technologies. Believing instructional interactivity is present when it is not, people expect more impact from an application than it can deliver. The confusion is damaging not only because minimal learning occurs, but also because people may conclude instructional interactivity isn't effective (even though there wasn't any).

Instructional interactivity is much more than a multimedia response to a learner's gesture. Playing a sound or displaying more information when the mouse button is pressed is just plain old interactivity. To be sure, however, instructional interactivity in e-learning definitely does involve multimedia responses to learner gestures. Here's a working definition:

instructional interactivity: Interaction that actively stimulates the learner's mind to do those things that improve ability and readiness to perform effectively.

This is a functional and useful definition, but we need to articulate further what instructional interactivity is—of what it is composed and how to create it.

CCAF

Thinking about all the e-learning we may have seen, we soon realize there are many varieties of instructional interactivity. Sometimes interactivity simply entreats us to rehearse fledgling skills. At other times, it puts forth a perplexity for us to untangle. It may even lead us to new levels of curiosity and to profound and original discoveries, not just to the identification of “correct” answers.

The many types and purposes of instructional interactivity compound the problem of definition, but in each case, there are four essential components, integrated and interlocked like a jigsaw puzzle in instructionally purposeful ways (see Figure 12.1):

Figure 12.1 Components of instructional interactivity.

- Context—the framework and conditions

- Challenge—a stimulus to action within the context

- Activity—a physical response to the challenge

- Feedback—reflection of the effectiveness of the learner's action
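For designers who also write specifications or prototypes, it can help to record the four components of each interaction side by side. What follows is only a minimal sketch in Python, not a feature of any authoring tool: the `Interaction` class, its field names, and the `missing_components` helper are all hypothetical names chosen for illustration. The sample text comes from the supervisor training example discussed in this chapter.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass
class Interaction:
    """Hypothetical design record: one text note per CCAF component."""
    context: str = ""
    challenge: str = ""
    activity: str = ""
    feedback: str = ""

    def missing_components(self) -> List[str]:
        """Return the names of components that have been left blank."""
        return [f.name for f in fields(self)
                if not getattr(self, f.name).strip()]


# A draft that has not yet described its feedback.
draft = Interaction(
    context="Aerial view of a populated office work area",
    challenge="Identify the most immediate safety threat",
    activity="Listen to conversations, then drag the employee into your office",
)
print(draft.missing_components())  # ['feedback']
```

A record with all four fields filled in says nothing about the quality of the design. It only flags an omission early, before effort goes into media and programming.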

Examples

Let's look at a couple of e-learning designs, identify the components of instructional interactivity, and observe how they create effective instruction.

Context

An aerial view of a populated office work area

The depiction immediately provides an interesting visual context and creates an identifiable, real-world setting (see Figure 12.2). It gives both visual and cognitive perspective to the subject, saying, in effect, “Let's step back and look at a typical work environment without being too involved in everyday business operations to think about what's really happening.”

Figure 12.2 Office context in Supervisor Effectiveness training for employee security.

The context provides both an immediate sense of situational familiarity for the supervisors being trained and a vantage point for objective and thoughtful decision making. No large blocks of text are needed to build this valuable rapport with the learner.

This is a permissive context. Learners can jump right into the activity without any guidance or background information, or they can first request information on assessing threats.

Challenge

Identify the most immediate safety threat

Employees make many comments during the day that reflect their frustrations, both trivial and serious, and they imagine remedies of all sorts (see Figure 12.3). The solutions they contemplate may range from silly pranks to devastating violence. Most such expressions of frustration are harmless hyperbole. They provide a means of blowing off steam. But some reflect building hostility and forewarn the person's aggression and willingness to do harm to self or others.

Figure 12.3 Viewing thoughts of employees.

The challenge for learners is to recognize potentially serious concerns among harmless exasperations.

Activity

Listen to conversations, and decide who's making the most concerning comments. Then move (drag) that employee into your office.

Learners roll the mouse pointer over the image of each person appearing in the office complex to listen to what employees are saying to each other. As the learner moves the pointer over speaking employees (indicated by waves animating around them), their comments appear in caption bubbles. Learners, all supervisors in training, select the employee making the most concerning comments by dragging the person's image to the area labeled “Your Office” (see Figure 12.4).

Figure 12.4 Learners drag the selected employee's icon into their office.

Feedback

At the gesture level, pop-up captions reward pointer placement by providing needed information. At the decision level, correct/incorrect judgment is augmented with action-consequence information.

Selecting and placing an employee in “your office” represents a decision made by the learner. In this application, feedback at this point also includes an instructive evaluation (see Figure 12.5).

Figure 12.5 Feedback with instruction.

When the person who is the highest-level concern is identified, confirming feedback is given (see Figure 12.6).

Figure 12.6 Feedback after correct threat identification.

Identifying high-risk comments is only the first step of this learning sequence, of course. Learners are then asked what steps they would take next, such as talking to Security or EAP. They then question the distressed employee to further determine the probability of risk and finally decide what to do about it (see Figure 12.7).

Figure 12.7 Multistep activity continues with questioning the employee to gather more information.

Analysis of Example 1

There is a lot to learn in this application, and it is fun and engaging to learn this way. In contrast, imagine a typical e-learning design instead of the one being used here: Lists of danger signs would be presented. After reading them, learners would be given a list and an explanation of appropriate preventative actions to read. Then learners would be given scenario questions that would require them to select the right action from a multiple-choice list. No context, weak challenge, abstract activity, extrinsic feedback—boring.

Look again at how well the components in the preceding example integrate to make the learning experience effective:

- Learners not only go through comparative steps to rank the levels of concern but also symbolically move around the office listening to employees. Good supervisors are good, active listeners. They must circulate through work areas, and they must spend time listening to their employees. The interaction leads learners to envision gathering information that is critical to their responsibility for ensuring safety.

- When a security problem is identified, face-to-face encounters with employees are necessary in order to probe further. Symbolically moving the image of an employee into the learner's office helps supervisors to imagine this encounter and to realize that preparation for it will make the task less daunting and more productive.

- When the supervisor asks probing questions, troubled employees may feel relieved by the attention and therefore speak freely, but they may just as well feel uneasy, embarrassed, and defensive. It may be difficult to get information from them. The interactions in this example are designed so that employees will sometimes answer only a few questions and then get worried and stop. Experiencing this likely occurrence not only makes the learning more engaging but also continues to reveal the value of the learning experience to participants in preparing them to handle real-world situations.

- The focus is on actions and consequences. Although factual and conceptual information must be learned, it is offered within the context of task performance, stressing its relevance and making it more interesting, easier to learn, and easier to remember.

No Design Is Perfect

The feedback could be more intrinsic and possibly more effective. For example, if the learner mistakenly identified a lower-risk comment and took that individual to the office for investigation, the person representing a greater safety threat might actually carry out a violent act. Such a design might increase the learning impact and make it easier for supervisors to remember the consequences of certain decisions, but the design would have to be effected carefully so as not to trivialize an important responsibility and concern.

Operation Lifesaver, Inc., is a national, nonprofit safety education group whose goal is to eliminate deaths and injuries at railroad crossings and along railroad rights-of-way. It has programs in all 50 states with trained and certified presenters who provide free safety talks to raise alertness around tracks and trains.

Performance Goal

Operation Lifesaver wanted to address the unique challenges in training drivers of all types of trucks to apply safety procedures around railroad tracks and to help them make sound decisions at railroad crossings. They wanted to maximize learner participation by building an appealing online learning experience because statistics show approximately one out of four railroad-crossing crashes involves vehicles that require a Commercial Driver's License (CDL) to operate; this includes all sizes of trucks from large over-the-road vehicles to local delivery trucks.

Target Audience

Drivers of all types of trucks with a CDL.

Training Solution

The solution for Operation Lifesaver consisted of multiple instructional modalities, including a promotional video trailer, the interactive e-learning course, a traffic signs gamelette, and an integrated marketing plan incorporating various tactics across social and traditional media.

The e-learning course focuses on truck driver safety and employs a simulation with a game-like driving environment in which drivers are exposed to worst-case scenarios that require quick thinking and critical decision making. Drivers work independently, so that they can freely make mistakes and witness the (dire) consequences. The game-like characteristics push almost everyone who tries the e-learning to achieve successful excursions, through repetitive tries if necessary (see Figure 12.8). More than 15,000 truck drivers have taken the e-learning course.

Figure 12.8 The setup for OLI's Railroad Safety for Professional Drivers

Let's take a look.

Context

On a road, in the cab of several different trucks

The student is put into a driving simulation, going down a road with railroad-related signs and hazards that must be dealt with as they are encountered (see Figure 12.9).

Figure 12.9 Animation provides an authentic context by making learners feel as though they are moving down the road.

Figure 12.10 Distractions, such as a helpful bystander, tempt drivers to abandon safety procedures.

Challenge

Take the appropriate action for each hazard as it is encountered, following applicable laws, maximizing safety, and minimizing risk (see Figure 12.9).

Activity

Given accelerator and braking controls, and the ability to interact with distracters, such as a radio, a cell phone, and so forth, the student must use the proper approach techniques for each hazard (see Figure 12.10).

Every time a truck driver approaches a railroad crossing in the e-learning course, he or she must:

- Slow down and/or stop immediately as directed by the signs and signals

- Eliminate distractions

- Roll down the window and listen

- Look both ways, using what truck drivers call “rock and roll” to look around the large side mirrors

The user interface makes these actions easy to perform so learner focus remains on making good decisions and performing procedures rather than learning an artificial interface (see Figure 12.11).

Figure 12.11 Running a stop sign and other driving transgressions require, as in many games, the learner/player to start over.

Feedback

Because drivers have opportunities to correct some problems and perform some tasks in slightly different sequences, feedback is shown as the consequence of the performance (see Figure 12.12).

Figure 12.12 As happens far too often on the road, learners in this simulation are reminded of severe consequences possible for poor performance.

Anatomy of Good Interactions

Let's now reexamine each component of instructional interactivity.

Context

Context provides the framework and conditions that make the interaction relevant and meaningful to the learner. The media available in e-learning provide an important opportunity to make interactions much more than rhetorical or abstract events. Because we are often working to maximize transfer of learning to performance on the job, the more realistic the context can be, the more likely the learner will be to imagine the proposed situation actually occurring. With a context in mind, the learner is more likely to visualize taking alternative actions as well as the outcomes—a mental exercise that will increase the probability of transferring learning to real behavior.

Some researchers believe humans do much, if not all, of their reasoning through the use of mental images. Images can communicate to us more rapidly and with more fidelity than verbal descriptions, while animations can impart a sense of urgency with unequaled force. Presenting traditional academic questions, such as multiple-choice, fill-in-the-blank, true/false, and matching questions without illustrations, takes little advantage of the instructional capabilities present in e-learning and is quite the opposite of working within a truly instructional context.

Challenge

Challenge is a stimulus to exhibit effective behavior. Challenge focuses learners on specific aspects of the instructional context and calls learners to action.

In its simplest (and often least effective) form, the challenge is a question the learner is to answer. In more complex and usually more effective settings, the stimulus may be such things as:

- Indications of a problem on a control panel

- Customer service complaints

- A spreadsheet showing an unreconciled difference

- An animated production line producing poor quality

- Increasing business losses

- A simulated customer call (via audio delivery)

- A medical prescription to be filled

- A simulated electrical fault

- A client record to be updated

“Click Next to go on” is not a stimulus for instructional interactivity unless you are trying to teach people how to advance to the next screen. But “You have 10 minutes before the patient's heart will give out” can be quite an effective stimulus.

Activity

Activity is the physical gesture and learner response to the context and challenge.

Whether learning physical skills (such as typing) or mental skills (such as sentence construction), learners need ways to communicate decisions, demonstrate abilities, ask for assistance, test solutions, and state answers. The gestures learners can use for input to the computer are often physically quite different from the actions learners are being trained to perform, but they should correspond and be natural and easy to use; they should readily and quickly represent the learner's intentions. The mechanisms with which learners express their questions, decisions, answers, and so on should not impede or bias the learner's ability to communicate nor be so complex as to defocus learners by asking them to deal with user interface artifacts.

Just making the learner do something, however, doesn't comprise an instructional interaction. Even if the computer responds specifically to what the learner does, a sequence of input and response doesn't necessarily constitute an instructional event.

The structure of the input tools (i.e., the recognized learner gestures) is a major design consideration. In many cases, they determine the extent of the cognitive processing the learner must perform. They may determine, for example, whether the learner needs to recognize an appropriate solution, recall it, or apply it. These are all very different cognitive processes.

Poorly constructed interactions require learners to translate what they know or can do into complex, artificial gestures. The task may be so difficult that fully proficient learners fail to respond “correctly,” not because they are unable to perform the targeted tasks but because they have trouble communicating their knowledge within the imposed constraints of the interface. For example, learners may be able to perform long division on a notepad but be unable to do it via keyboard input.

Very good interfaces are themselves a delight to use; their value to the learning process goes beyond just efficiency and machine-recognized gestures. Not only can they create a more memorable experience, perhaps just by providing a happily recalled event; they also help learners see and understand more fully many kinds of relationships, such as “a part of,” “an example of,” or “a complement to.”

Assembling glassware for a chemistry experiment and sorting fruits, vegetables, dairy products, grains, and meats into appropriate storage containers involve procedures and concepts. Learners might very well remember the process of moving displayed objects as a cognitive handle for recalling underlying relationships and concepts needed in on-the-job performance. Such kinesthetic association can enrich both understanding and recall, whereas having answered multiple-choice questions would give few lasting clues as to the correct on-the-job actions to take.

Feedback

Feedback acknowledges learner activity and provides information about the effectiveness of learner decisions and actions.

When learning, we seek to make cognitive connections and construct relationships—a neurological cognitive map, if you will, that might be represented like the one in Figure 12.13. The formation of meaning—creating understanding—is this very process of drawing relationships. The process of learning uses rehearsal to strengthen the forming relationships for later recall and application.

Figure 12.13 A cognitive map.

Feedback is essential in these processes to make sure learners construct effective relationships and deconstruct erroneous relationships. The instructional designer's challenge is therefore to devise feedback that will prove the most helpful to learners in their efforts to identify, to clarify, and to strengthen functional relationships.

It is often easier for learners to link specific actions and consequences than it is to associate two objects with each other, which might seem the simpler task. For example, it's quite easy to recall that sticking your finger in a live electrical outlet is likely to result in a shock, even though you may never have done it. But it is harder to recall the capital city of Maine (Augusta) or the name of an enclosed object with seven equal sides (heptagon) unless you have already created a cognitive structure to aid recall, such as from frequent travel to Augusta or doing design work with heptagons. Why? Well, in part because of repeatedly hearing the names and also because it's likely you would associate those names with actions and consequences. You like to go to Augusta perhaps because you enjoy the views of the Kennebec River and the shops in the Water Street area. Positive emotional responses arise from experiencing actions that produce pleasant consequences, and they strengthen associations that would otherwise be just learning a name.

It is extremely fortunate for us in training that action-consequence learning is easiest, because the objective of training is to improve performance. Whereas performance is based on factual, conceptual, and procedural knowledge, learning the necessary facts, concepts, and procedures is easier if done in the context of building relationships between actions and their consequences (not the other way around, as is more traditional).

High-impact feedback allows learners to determine the effectiveness of their actions, not just whether an answer was right or wrong. In fact, good feedback doesn't necessarily tell learners directly whether their actions are correct or incorrect; rather, it helps learners determine this for themselves.

Good feedback, therefore, is not necessarily judgment or evaluation, as most people think of it (although at times offering such judgment is critical). Rather, good feedback reflects the different outcomes of specific actions.

Strong and Weak Interactivity Components

Perhaps the primary challenge of creating effective learning experiences is designing great interactions. The simple presence of the four essential components (context, challenge, activity, and feedback) doesn't create effective learning interactions. It is the specific nuances of each component individually, and the way they integrate with and support the effectiveness of the other components, that lead to success.

Table 12.1 contrasts the characteristics of strong and weak interaction components.

Table 12.1 Characteristics of Good and Poor Interaction Components

| Component | Strong | Weak |

| Context | Focuses on applicable action/performance relationships | Focuses on learning abstract bodies of knowledge |

| | Reinforces the relationship of subtasks to target outcomes | Uses a generalized, content-independent screen layout |

| | Simulates performance environments | Simulates a traditional classroom environment or no identifiable environment at all |

| Challenge | Requires learner to apply information and skills to meaningful and interesting problems | Presents traditional questions (multiple choice, true/false, matching), which at best require cognitive processing but not in an applied setting, and typically require little cognitive processing beyond simple recall |

| | Progresses from single-step performance to requiring learners to perform multiple steps | Requires (and allows) separate, single-step performance only |

| | Puts learners at some risk, such as having to start over if they make too many mistakes | Presents little or no risk by either revealing the correct answer after a mistake is made or using such structures as, “No. Try again.” |

| Activity | Builds on the context to stimulate meaningful performance | Uses artificial, question-answering activities, such as choosing a, b, c, none of the above, all of the above |

| | Provides an opportunity to back up and correct suspected mistakes or explore alternatives | Allows only one chance to answer |

| | Asks learners to justify their decisions before feedback is given; learners are allowed to back up and change responses at any point | Facilitates lucky guesses (and makes no effort to differentiate between lucky guesses and well-reasoned decisions) |

| Feedback | Provides instructive information in response to either learner requests for it or repeated learner errors | Relies on content presentations (given prior to performance opportunities) |

| | Helps learner see the negative consequences of poor performance and the positive consequences of good performance | Immediately judges every response as correct or incorrect |

| | Delays judgment, giving learners information needed to determine for themselves whether they are performing well | Focuses learners on earning points or passing tests, rather than on building proficiencies |

| | Provides frank and honest assessments; says so if and when learners begin making thoughtless errors | Babies learners; always assumes learners are doing their best |

The Elusive Essence of Good Interactivity

It's clear that not all interactions are of equal value. Nobody wants bad interactions; they're boring, ineffective, and wasteful of everyone's time, interest, and energy. Unfortunately, they're quite easy to build. Good interactions are, of course, just the opposite. They intrigue, involve, challenge, inform, reward, and provide recognizable value to the learner. And they require more planning and instructional design effort.

The essence of good interactivity seems quite elusive. It would be helpful if all the necessary attributes of good interactions could be boiled down to a single guiding principle, or even a single checklist. Even if imperfect, if the essence were easily communicated and widely understood, e-learning would be far better than it is today. For this reason, I have kept searching for that kind of guidance for quite some time. I offer the checklist in Table 12.2 as a basic tool that's surely better than nothing.

Table 12.2 Good Instructional Interactions—A Three-Point Checklist

| ✓ | Good interactions are purposeful in the mind of the learner. | Learners understand what they can accomplish through participation in the interaction. To be of greatest value for an individual, the learner needs to see value in the potential accomplishment and each learning step. |

| ✓ | The learner must apply genuine, authentic skills and knowledge. | It should not be possible to feign proficiency through good guesses. Challenges must be appropriately calibrated to the learner's abilities, readiness, and needs. Activities should become as similar to needed on-the-job performance as possible. |

| ✓ | Feedback is intrinsic. | The feedback demonstrates to learners the ineffectiveness (even risks) of poor responses and the value of good responses. |

Pseudo Interactivity

Misconceptions abound in e-learning. They derive from the many experiences we've had as students. And they have been exacerbated by the great enthusiasm for e-learning and fanaticism about all things related to the Internet, with the result that form is often prized over function. Identified delivery technologies, such as which development tools and learning management systems to use, are often mandated, whereas instructional effectiveness is not defined, required, or even measured. Although there are signs of a backlash effect, there has been a tendency to believe that instruction delivered via technology has credibility just because of the delivery medium. This surely is as wrong as believing that all things in print are true or that because a course of instruction is delivered by a living, breathing instructor, it is better than the alternatives.

Nothing in e-learning has been more confused than the design and application of interactivity. Its nature is sufficiently multifaceted to be inherently confusing; yet, we've been investigating technology-supported instructional interactivity far too long to continue such confusion without embarrassment and impatience.

People confuse instructional interactivity with various multimedia components at both the detail and aggregate levels. At the detail level, very important design nuances separate highly effective interactivity from that which just goes through the motions. At the aggregate level, presentations, navigation, and questioning are wholly mistaken for instructional interactivity. To be clear that these are quite different entities with very different applications and outcomes, let's round out our examination of instructional interactivity with some final differentiations—that is, classifications of what is not instructional interactivity.

Presentation versus Instruction

It's easy to be deceived by appealing design. From book covers to automobile fenders, designers work endlessly to attract our attention, provide appeal, and paint a fantasy. The appeal they strive for is one that will create enthusiasm and a desire to buy, regardless of other factors that should be taken into account. They are often successful in getting us to disregard practicality, quality, and expense and to blindly pursue a desire, to buy into a dream. Just because it's attractive, of course, doesn't mean it is a quality product, good for us, or a smart buy.

A beautiful presentation is certainly worth more than an ugly one, but even superb presentation aesthetics don't convert a presentation into instruction or pronouncements into interactivity. These very different solutions should not be confused, just as the differing needs they address should not be confused.

Sometimes merely the presentation of needed information is sufficient. Those who are capable of the desired performance with only guiding information neither need nor benefit from instruction. They need only the information. Because instruction takes time and is much more expensive to prepare and deliver, it is important not to confuse a need for information with a need for instruction. (See Table 12.3.)

Table 12.3 Presentations versus Interactivity

| Choose Presentations When… | Choose Interactivity When… |
| --- | --- |
| Content is readily understood by targeted learners. | Content is complex and takes considerable thought to comprehend. |
| Learner differences are minimal. | Learners are diverse in their ability to understand the content. |
| Errors are harmless. | Errors are injurious, costly, or difficult to remedy. |
| Information is readily available for later retrieval and reference. | Information needs to be internalized. |
| Desired change to existing skill is minor and can be achieved without practice. | Behavioral changes will require practice. |
| Learners can easily differentiate between good and inadequate performance. | Learners need guidance to differentiate between good and poor performance. |
| Mentorship is inexpensive and will follow. | Mentorship is costly, limited, or unavailable. |

Navigation versus Interactivity

Navigation is the means learners have of getting from place to place—of getting to information. It includes such simple things as controls to back up, go forward, replay, pause, quit, and bring up tools such as a glossary, help function, calculator, hints, and progress records.

Because navigation requires input from the learner and provides a response from the computer, people frequently confuse navigation with interactivity. Further, interactive systems need good navigation capabilities. They depend on well-executed, supportive, integrated navigation and suffer when it's weak. But navigation and instructional interactivity are very different in terms of what they are, the learning support each provides, and where they are appropriate. As before, good navigation doesn't turn presentations into interactivity, although it can certainly improve the ability of learners to retrieve and review information.

Good navigation systems are valuable. They are neither easy to design nor easy to build. Being highly responsive to user requests and providing intuitive controls is much more difficult than it would seem, and failed attempts repeatedly attest to the level of challenge involved.

Nevertheless, with good navigation, content, and presentation components, performers can be aided such that their performance is hard to differentiate from that of an expert.

Electronic Performance Support Systems (EPSSs) versus Instructional Interactivity

Closely related to navigation are applications that provide prompts, guidelines, questions, and information in real time. They depend on very responsive navigation to keep in step with people throughout the performance of their tasks.

Electronic performance support systems (EPSSs) attempt to skip over the expensive, time-consuming efforts of taking people to high levels of proficiency. The EPSS takes advantage of circumstances in which computers can communicate with people as they perform, prompting many tasks in real time without the need of prior or extensive training. There are many benefits, even beyond the obvious benefit of avoided training costs:

- People can rotate into different positions on demand as workloads change from season to season, day to day, or even hour to hour.

- New hires can become productive very quickly.

- Errors caused by memory lapses, poor habits, and distractions can be greatly reduced, possibly even eliminated.

- Infrequently performed tasks can be performed with the same level of thoroughness and competency as those performed frequently.

In short, an EPSS is an economical solution when training is not needed. It is an inappropriate and ineffective solution, however, when training is needed. We need to be careful to be clear about both the need and the fitting solution.

The navigational components of an EPSS can look much like instructional interactivity when they are designed to respond to observations made by users. If, for example, in making a payment-collection call to a person leasing a sports car, it is discovered that the payments have ceased because the car has been stolen, the EPSS's prompts on what to say will instantly change to address the unexpected context appropriately. The caller might not have learned how to handle such situations and might not have any background in stolen property circumstances, but the caller can still work through an instance successfully. The caller doesn't know—and doesn't need to know—what different behaviors might have been appropriate if the leased vehicle had been stolen before the payments fell behind rather than afterward. With the computer's support present, it doesn't matter. The employee isn't really a learner, just a performer successfully carrying out on-screen instructions.
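The branching behavior in the collection-call example can be sketched in a few lines of Python. This is only an illustration of the idea, not a description of any real EPSS product: the task name, the observation label, the prompt wording, and the `next_prompt` function are all hypothetical.

```python
# Hypothetical prompt table for one supported task. Keys pair the task
# with an observation the performer reports; None means "nothing unusual".
PROMPTS = {
    ("payment_overdue", None):
        "Ask when the customer expects to make the missed payment.",
    ("payment_overdue", "vehicle_stolen"):
        "Express concern, then ask whether the theft has been reported.",
}


def next_prompt(task, observation=None):
    """Return the on-screen prompt for the task, given what the performer observed.

    Unknown observations fall back to the task's default prompt, so the
    performer always has something to follow.
    """
    return PROMPTS.get((task, observation), PROMPTS[(task, None)])


if __name__ == "__main__":
    print(next_prompt("payment_overdue"))
    print(next_prompt("payment_overdue", "vehicle_stolen"))
```

Note what the sketch leaves out: nothing in it checks whether the performer understands why the prompt changed. The logic routes the performer to the right words, which is navigation, not instruction.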

Although the conditional programming necessary to help performers in real time can range from quite simple to extremely complex, even including components of artificial intelligence, the interactions built into these applications serve navigational needs more than instructional ones. Repetitive performance with EPSS aid should impart some useful internalized knowledge and skills, but EPSS applications are designed to optimize the effectiveness of the supported transactions rather than to build new cognitive and performance skills (see Table 12.4).

Table 12.4 EPSS versus Interactivity

| Choose EPSS When… | Choose Interactivity When… |
| --- | --- |
| The task or job changes often. | The tasks are relatively stable. |
| Staff turnover is high. | Workers hold same responsibilities for a long time. |
| Performers do not need to know why each task step is important and whether it is appropriate in a specific circumstance. | Performers need to evaluate the appropriateness of each step and vigilantly monitor whether the process as a whole continues to be appropriate. |
| Tasks are systematic but complex and difficult to learn or remember. | Tasks may require unique, resourceful, and imaginative approaches. |
| Tasks are performed infrequently. | Tasks are performed frequently. |
| Tasks allow time for performance support. | Tasks are time critical and prohibit consulting a performance guide. |
| Supervision of employees on the job is limited or unavailable. | Supervision is expensive or impractical. |
| Mistakes in performance are costly. | Mistakes are easily rectified. |
| Learners are motivated to seek a solution. | Learners don't appreciate the value of good performance. |

Hybrid Applications: Using an EPSS for Instruction

Some applications both assist new learners to perform well immediately and teach operations so that they can be done either without computer support or more quickly with reduced support. That is, the techniques used for teaching and those that support performance are not mutually exclusive.

In fact, the early work of B. F. Skinner (1968) used a technique of fading. After learners read a poem to be memorized, some words were deleted from the text of the poem. Learners were generally able to continue reciting the poem, filling in the missing words as they read. Successively, more and more words were deleted until the whole poem could be recited with no assistance.

A similar approach can be used with EPSS applications, although considerably more learning support can be provided to learners than simply removing support steps. Instructive feedback, for example, can show learners the potential consequences of an error, while the EPSS application intervenes to prevent that error from actually occurring.
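The fading procedure described above is simple enough to sketch in Python. The following is a minimal illustration under stated assumptions, not a reconstruction of Skinner's actual materials: the function name `fading_passes`, the random choice of which words to remove, and the sample text are all assumptions made for the example.

```python
import random


def fading_passes(text, steps=4, seed=0):
    """Yield successive versions of `text`, each hiding more words than the last.

    The removal order is fixed once, so a word that has been hidden stays
    hidden in every later pass. The final pass hides every word.
    """
    words = text.split()
    order = list(range(len(words)))
    random.Random(seed).shuffle(order)  # assumed: words are removed in random order
    for step in range(steps + 1):
        n_hidden = round(len(words) * step / steps)
        hidden = set(order[:n_hidden])
        yield " ".join(
            "_" * len(word) if i in hidden else word
            for i, word in enumerate(words)
        )


if __name__ == "__main__":
    # Arbitrary sample text, chosen only for illustration.
    for line in fading_passes("Twinkle twinkle little star how I wonder what you are"):
        print(line)
```

An EPSS could fade its prompts on the same schedule, withdrawing a little more support on each repetition of a task while still stepping in to prevent costly errors.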

Questioning versus Interactivity

Question-answering activity is just question-answering activity, regardless of whether it is done with paper and pencil or a computer. If the learner doesn't have to apply higher-order mental processes, the learning impact is the same, regardless of whether learners drag objects, type letters, or click numbers. Just because some gestures are more sophisticated in terms of user interface, it doesn't mean that more learning is going on when they're employed.

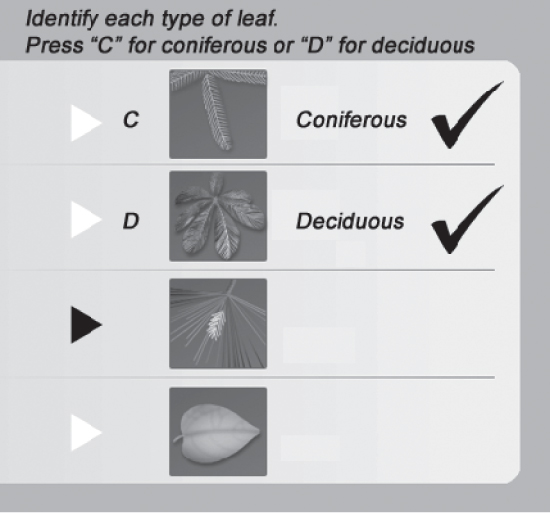

The screens in Figures 12.14 to 12.18 show five interaction styles, but the differences in instructional impact among the styles are insignificant.

Figure 12.14 Binary choice via key press.

Figure 12.15 The same binary choice via drag and drop.

Figure 12.16 The same binary choice via text entry.

Figure 12.17 The same binary choice via buttons.

Figure 12.18 Again, the same binary choice via clickable objects.

There's no doubt that some learning can occur from repeatedly answering questions and checking the answers. A big advantage of e-learning delivery is that it can require learners to commit to answers before checking them. With a book, by contrast, learners can easily look at a question at the end of a chapter, such as one on identifying trees, and think to themselves, “That willow leaf must be from a conifer.” But then, on seeing from listed correct answers that it was actually from a deciduous broadleaf, they'll think “Oh right. I knew that. Should be more careful,” and go on without adequate practice.

Questioning can build some skills, at least the skills needed to answer questions correctly, but it isn't an efficient means of doing so, and it unfortunately takes minimal advantage of the more effective instructional capabilities available in e-learning.

At the very low end of the interactivity scale, e-learning relies on questioning. If learners casually guess at answers just to reveal correct answers the application readily yields, the learning event is no more effective than the book's end-of-chapter questions with upside-down answers. This structure does not create memorable events and is most likely to result in test learning, which is quickly forgotten after the examination.

Of course, a continuum of questioning paradigms builds from this low-end base toward true instructional interactivity. Some enhancements on basic questioning include:

- Not providing correct answers

- Not providing correct answers until after several tries

- Not providing correct answers right away, but providing hints first

- Not providing correct answers right away, but describing the fault with the learner's answer, then providing hints if necessary

- Giving credit only if the question is answered correctly on the first try

- Drawing randomly from a pool of questions to be sure learners aren't just memorizing answers to specific questions

- Selecting questions based on previous faults

And so on. There's no doubt that these techniques improve the value of questioning for instruction to a degree, but CCAF structures and instructional interactivity are much more effective.
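Several of the enhancements listed above can be combined in a short Python sketch: withholding the correct answer until the tries run out, offering hints first, giving credit only for a first-try success, and drawing from a pool with previously missed questions first. The names `Question`, `ask`, and `draw`, the three-try limit, and the exact-match answer check are assumptions made for illustration.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Question:
    prompt: str
    answer: str
    hints: list = field(default_factory=list)


def ask(question, get_response, max_tries=3):
    """Pose one question. Return (credit, messages shown to the learner).

    Credit is given only for a correct first try. After a wrong answer the
    learner sees a hint, never the answer, until the tries are used up.
    """
    messages = []
    for attempt in range(1, max_tries + 1):
        response = get_response(question.prompt)
        if response.strip().lower() == question.answer.lower():
            messages.append("Correct.")
            return attempt == 1, messages
        if attempt < max_tries:
            hint_index = attempt - 1
            if hint_index < len(question.hints):
                messages.append("Hint: " + question.hints[hint_index])
            else:
                messages.append("Try again.")
    messages.append("Answer: " + question.answer)
    return False, messages


def draw(pool, k, missed_prompts=(), seed=None):
    """Pick k questions: previously missed ones first, the rest at random."""
    rng = random.Random(seed)
    review = [q for q in pool if q.prompt in missed_prompts]
    fresh = [q for q in pool if q.prompt not in missed_prompts]
    rng.shuffle(fresh)
    return (review + fresh)[:k]
```

Even with all of these refinements in place, the learner is still only answering questions. The sketch shows how little machinery the enhancements require, which is part of why they are so common and why they should not be mistaken for instructional interactivity.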

Remember, to hit an instructional home run we need to create meaningful and memorable experiences. Unless the training is specifically designed just to teach learners to pass a test, there are much more efficient and effective methods than drilling through sample questions.

The Takeaways

Instructional interactivity and mechanical interactivity are two very different things. While mechanical interactivity involves input gestures, such as clicking the mouse button or pressing Enter, and a computer response, such as displaying the next page or playing a video, instructional interactivity can be defined as follows:

instructional interactivity: Interaction that actively stimulates the learner's mind to do those things that improve ability and readiness to perform effectively.

Although instructional designs that elicit effective instructional interactivity involve successful integration of a great many factors, all build on just four elements:

- Context—the framework or situation and conditions which make content relevant and interesting to the learner

- Challenge—a stimulus to action within the context, such as a problem to solve

- Activity—a physical response to the challenge that represents a real-world action a person might really take

- Feedback—reflection of the effectiveness of the learner's action, most often best presented as consequences of the action the learner took

When these components are used effectively, instructional interactions are characterized by:

- Being purposeful in the mind of the learner. Learners can see value in what they're doing.

- Requiring the learner to perform authentic skills as they would expect to do after training is complete.

- Providing intrinsic feedback, often in the form of consequences of actions taken.

Presentations, navigation, EPSS, and more complex user interfaces can appear to substitute for well-designed instructional interactions, but they are quite different things and are insufficient on their own when learners are building most performance skills.