Chapter 3

What You Don't Know Can Kill (Your e-Learning)

Of course, e-learning isn't more effective than other forms of instruction just because it's delivered via computer. The quality and effectiveness of e-learning are outcomes of its design, just as the quality and value of books, television, and film vary with the particular script, actors, episode, and movie. e-Learning can be very good, very bad, or anything in between. The way e-learning's capabilities are used makes all the difference.

One cause of frequent instructional failures is that the actual reasons for undertaking e-learning projects are not defined, are not conveyed, get lost, or become upstaged. Most often, the success-related performance goal of enabling new behaviors is overtaken by the pragmatics of simply putting something in place that could be mistaken for a good training program.

When executives and other reviewers are not attuned to the criteria against which e-learning solutions should be evaluated, the focus of development teams turns to what those reviewers will appreciate: mastering technology, overcoming production hurdles, and just getting something that looks good up and running within budget and on schedule. These latter two criteria, budget and schedule, become the operative goals, stealing focus from the original reason for building an e-learning project. (See Figure 3.1.)

Figure 3.1 Transformed goals.

Surely performance success is the primary reason most e-learning projects are undertaken. Success comes from more responsive customer service, increased throughput, reduced accidents and errors, better-engineered designs, and consistent sales. It comes from good decision making, careful listening, and skillful performance. Remember: Success for organizations and individuals alike requires doing the right things at the right times.

How do things in e-learning run amok so easily? Two reasons: counterfeit successes (a.k.a., to-do-list projects) and undercover operations (a.k.a., on-the-job training [OJT]).

To-Do-List Projects

Unfortunately, many e-learning projects are to-do-list projects. The typical scenario: People aren't doing what they need to be doing. Someone in the organization is given the assignment to get training in place. A budget is set (based on who knows what?), and the clock starts ticking down to the target rollout date. The objective is set: Get something done—and, by all means, get it done on time and within budget. Announce the availability of training, cross the assignment off the to-do list, and move on to something else. Goal accomplished.

For the project manager given the assignment, the driver for implementing e-learning easily transforms from the instigating business need (determining what people need to do and when, and finding the best way of assuring that performance can and will happen) to the pressing challenge of getting a training project done. This is likely because expenditures for training development and delivery are calculated easily, but training effectiveness is not quantified so easily and is, therefore, rarely measured at all. Any project manager knows how the success of the project will be assessed: by timeliness, by cost control, and by whether learners like it and report positive things about it. It will be measured by how good it looks, how quickly it performs, and whether it's easy enough to use. Complaints aren't good, so safeguards are taken to make sure the training isn't too challenging and doesn't generate a lot of extra work for administrative staff or others. The absence of complaints is a win.

Again, the original, driving goal of the project gets swept away and no longer determines priorities. The project transitions into somebody's assignment to get something done (another project on the to-do list). It will be a success because every effort and sacrifice will be made just to get it done. Success under these criteria, however, will contribute little to the organization or individual learners.

Let's Not Measure Outcomes

Indeed, many of the e-learning developers I know commiserate that no indicators of behavior change are likely ever to be measured and no assessment of either the business impact or the return on investment (ROI) is likely to be performed. In one study, for example (Bonk, 2002), nearly 60 percent of more than 200 survey respondents noted their organizations did not conduct formal evaluations of their e-learning. It would be very surprising if even 10 percent of organizations using e-learning actually conducted well-structured, valid evaluations. As Will Thalheimer (2016), a research-to-practice expert on learning, notes:

We as workplace-learning professionals often work in darkness. We get most of our feedback from smile sheets—also known as happy sheets, postcourse evaluations, student-response forms, training-reaction surveys, and so on. We also get feedback from knowledge tests. Unfortunately, both smile sheets and knowledge tests are often flawed in their execution, providing dangerously misleading information. Yet, without valid feedback, it is impossible for us to know how successful we've been in our learning designs. (p. xix)

Many organizations prefer to use available training funds for the development of additional courseware rather than for evaluation of completed programs. This prevents everyone from learning about what works and doesn't; what's a smart investment and what isn't. I guess ignorance truly is bliss.

What Really Matters

Do people have such confidence their training will attain its goals that no measurement is necessary to confirm it? Or, conversely, could people think it's so unlikely that any training program is going to be effective, that it doesn't matter what is done as long as it wraps up on time and budget? Another possibility: Do people want to avoid the risks in having the impact of their training exposed? If nobody asks or complains, it may be better not to know.

Perhaps the real project goal isn't to achieve desired learning and performance outcomes. Some sort of formal training, regardless of its effectiveness, will be better than nothing. Perhaps the real reason for implementing the training program is to give the appearance of concern and of taking action. Otherwise, employees would complain about the lack of support and clarity of what they're supposed to do. They'd even have justifiable cause to complain. But once a training program, any training program, is offered, the burden shifts to the employee. Whew!

“What do you mean you don't know how? Didn't you learn anything in the training we provided? You must not have been paying attention. We go to all the expense of providing you training, and you're still not getting it? Better get on board fast!”

The likelihood of hearing such a comment is low because an employee would have to be caught not knowing what to do, or would have to admit voluntarily to not knowing what to do, even after taking training. Most employees are adept at avoiding such exposure. Instead of speaking up, admitting lack of readiness, and enduring the consequences, they duck observation, quietly observe others, and, if all else fails, surreptitiously interrupt coworkers to learn what's necessary: just enough, at least, to get by and avoid censure. Poor training is let off the hook.

Informal On-the-Job Training: A Toxic Elixir for Poor Training

Under the scenario I just presented, formal training is delivered, employees appear able to perform, and no one is complaining. Success! Or maybe not. Unplanned, possibly even unobserved, informal on-the-job training may be at work here, not just the gratuitous, boring, and ultimately ineffectual e-learning.

Who's more eager to learn than people trying to perform a skill, finding they can't do it, and fearing exposure? The helpful guidance of coworkers is gratefully received in this worrisome situation. It is often effective, at least in terms of assisting with the specific barrier at hand. Unfortunately, providing poor e-learning and then letting haphazard knowledge sharing deal with its ineffectiveness is expensive, slow, and potentially counterproductive—even dangerous. Let's see why this is so:

It's Expensive

On-the-job training (OJT) is expensive because you have the costs of two training systems: the e-learning system and the all-too-often ad hoc, unseen, and unmeasured on-the-job training system. While the costs of e-learning are rather easily identified, including design, development, distribution, and learner time, only distribution costs and learner time are recurring costs. Distribution has become very inexpensive and broadly practical, whether access to e-learning is provided through local area networks or cloud services; over Wi-Fi, hotspots, or wired connections; or on personal computers, laptops, tablets, or even smartphones.

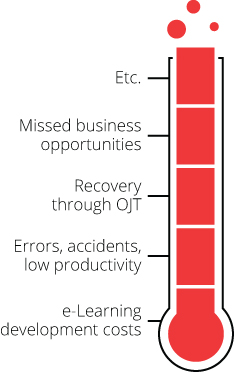

Conversely, unplanned, unstructured on-the-job training doesn't cost anything for design and development but carries high continuing costs that include coworker disruption and resulting loss of productivity. The learning worker probably receives an incomplete tutorial as well and will have to continue to interrupt others as he or she becomes aware of additional incompetencies. Performance errors and employee frustration are likely and contribute to the high cost of this strategy, which can cause domino effects of unhappy customers, missed business opportunities, disrespect for management, employee attrition, and so on. (See Figure 3.2.)

Figure 3.2 Stacked costs of poor training.
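The way these costs stack can be made concrete with a rough sketch. The Python below is only an illustration: the function names, the parameters, and every number in the example call are hypothetical placeholders, not figures from any study. The model assumes each interruption occupies both the learner and one coworker at the same hourly wage, and it deliberately leaves out the harder-to-price effects such as errors, unhappy customers, and attrition.

```python
def elearning_cost(design, development, delivery_per_learner,
                   learner_hours, hourly_wage, learners):
    """One-time design and development plus recurring per-learner costs."""
    one_time = design + development
    recurring = learners * (delivery_per_learner + learner_hours * hourly_wage)
    return one_time + recurring


def informal_ojt_cost(interruptions_per_learner, minutes_per_interruption,
                      hourly_wage, learners):
    """No design or development cost, only recurring lost time.

    Assumption: each interruption ties up the learner and one coworker,
    so the lost hours are counted twice.
    """
    hours = interruptions_per_learner * minutes_per_interruption / 60
    return learners * hours * hourly_wage * 2


def stacked_cost(elearning, informal_ojt):
    """Poor e-learning does not replace informal OJT; the two costs add up."""
    return elearning + informal_ojt


if __name__ == "__main__":
    # All figures below are made-up placeholders for illustration only.
    e_cost = elearning_cost(design=10_000, development=20_000,
                            delivery_per_learner=5, learner_hours=2,
                            hourly_wage=30, learners=100)
    ojt_cost = informal_ojt_cost(interruptions_per_learner=10,
                                 minutes_per_interruption=15,
                                 hourly_wage=30, learners=100)
    print("e-learning:", e_cost)
    print("informal OJT:", ojt_cost)
    print("stacked:", stacked_cost(e_cost, ojt_cost))
```

Note that the informal OJT term grows with every new learner and every interruption, while the design and development terms are paid once. That is the structural difference the stacked-cost picture is meant to convey.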

It's Slow

Surreptitious on-the-job training can be timely but is often inconvenient and slow. It could be that the knowledgeable individual needs to finish a task before providing help on another. Perhaps a machine has to be shut down and restarted each time a procedure is demonstrated. The learner stands and waits, perhaps even being distracted by something to be done elsewhere and then being detained when learning could resume. The coworker then stands and waits. It's easy to imagine a multitude of realistic, probable causes of scheduling difficulties and inefficiencies. Even when on-the-job training is planned, many companies look for alternatives because of the scheduling difficulties, costs, quality-control problems, lack of scalability, delays in updating, and other problems inherent in on-the-job training.

It's Risky

If all these problems weren't bad enough, there are dangers that could lead to devastating situations. The learner might not realize he or she needs help or might not want to admit needing it. Keeping incompetency hidden can lead to severe consequences. You don't want your doctor, for example, getting by on hunches while caring for you, then discovering her errors and her need for training after the damage has been done. You don't want an unsupervised first-time Bobcat operator working next to your house or an auto mechanic guessing what's wrong with your brakes because he doesn't want to admit his lack of readiness. Trial and error may be a good teacher, but there are costs. If errors are necessary before a worker can get help, errors will have to be made and damages incurred. Even further, if the consulted coworker misunderstands the procedure he or she is helping another with, it's quite possible misconceptions will be perpetuated, compounding error upon error. That's how bad work habits become part of a corporate culture, and the result is the proverbial blind leading the blind. Unstructured informal training is a risky remedy for poor training.

Don't Drink the Kool-Aid

We've seen increased interest in learner-constructed content. And I do appreciate instructional strategies that give learners the task of preparing to teach what they've learned. But mostly I think this is yet another product of hoping that the hard work of good instructional design can be avoided. Certainly, modern communications capabilities and concurrent authoring tools facilitate collaborative design and development. And as long as a process is provided to validate the subject matter, in some contexts, learner-constructed content might indeed be more affordable than engaging genuine subject-matter experts and skilled instructional designers (although it seems likely to be more expensive and less impactful in the end).

While recent learners and prospective learners are exceptionally valuable in the process of creating effective learning experiences (something I will detail later with the SAM approach to courseware development), it doesn't make sense to turn over the reins to those with minimal knowledge of instructional design. We see today the results of too many people working very hard but having no idea how to create performance-changing e-learning. Learner-constructed content shouldn't take the place of properly executed instructional design.

An Example

Here is a quick example to show that the value of good e-learning instruction can be instantly apparent, while also fun and relatively easy to develop. The content in this example may be far different from what you need to teach, but consider the instructional strategy for a moment anyway.

Experience versus Presentation

Suppose you were teaching the fundamentals of finding the epicenter of an earthquake with seismographs. This topic could be taught through some pages of text and graphics—literally a presentation—as shown in Figure 3.3.

Figure 3.3 Page turner.

But add a question as in Figure 3.4, and it becomes interactive learning, right? Barely.

Figure 3.4 Page turner with question.

This interaction invites guessing. There are so many sets of three on the screen that it's very likely learners would guess correctly without understanding any of the underlying concepts. Truly interactive learning creates an experience that facilitates deeper thinking.

In the design shown in Figures 3.5 to 3.8, learners place seismographic stations on a map, one station at a time, take a reading, and determine from distance data only (with no directionality) why more than one reading is necessary. You can easily see how this would be a better learning experience.

Figure 3.5 Learner activity in an authentic context.

Figure 3.6 Let the learner play.

Figure 3.7 Learner experience teaches the principle.

Figure 3.8 Confirming feedback is almost unnecessary.

As shown in Figure 3.6, the learner adjusts the radius of circles dynamically to match computer-generated readings. This activity reinforces the concept that the seismograph reveals only the distance and not the direction of the epicenter.

In the pedantic approaches we want to avoid, learners would be told outright that three seismographs are usually needed and then asked to remember the number. But in interactive learning, learners can attempt to find the epicenter with just one or two readings and see why another is needed. With that approach, the complete concept is learned and is remembered more easily, as well.

Once the learner has placed and measured all three seismic stations, it is clear how to identify the epicenter.

It shouldn't be surprising that the most common learner response to completing this sequence is to try it again. It fosters no end of curiosity: What if I put the stations really close together? What if I put them in a straight line? What if I place one exactly on the distance circle of another station? The interface allows exploration of all these questions, and each answer develops a richer understanding in the learner's mind.
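For readers curious about the geometry behind the exercise, here is a minimal Python sketch. It is not the code used to build the example; the function names, the flat x-y map, and the sample coordinates are all assumptions made for illustration. Each reading defines a circle around its station. One circle gives endlessly many candidate points, two circles usually cross at two points, and a third reading selects between them.

```python
import math


def circle_intersections(c1, r1, c2, r2):
    """Return the points where two circles meet (a list of 0, 1, or 2 points)."""
    (x1, y1), (x2, y2) = c1, c2
    d = math.hypot(x2 - x1, y2 - y1)
    # No usable intersection: same center, too far apart, or one inside the other.
    if d == 0 or d > r1 + r2 or d < abs(r1 - r2):
        return []
    a = (r1**2 - r2**2 + d**2) / (2 * d)      # distance from c1 to the chord
    h = math.sqrt(max(r1**2 - a**2, 0.0))     # half the chord length
    mx = x1 + a * (x2 - x1) / d
    my = y1 + a * (y2 - y1) / d
    p1 = (mx + h * (y2 - y1) / d, my - h * (x2 - x1) / d)
    p2 = (mx - h * (y2 - y1) / d, my + h * (x2 - x1) / d)
    return [p1] if h == 0 else [p1, p2]


def locate_epicenter(stations, readings, tol=1e-6):
    """Keep the two-station candidates that also match the third reading."""
    candidates = circle_intersections(stations[0], readings[0],
                                      stations[1], readings[1])
    x3, y3 = stations[2]
    return [p for p in candidates
            if abs(math.hypot(p[0] - x3, p[1] - y3) - readings[2]) < tol]


if __name__ == "__main__":
    # Hypothetical map coordinates; the "true" epicenter is used only to
    # generate distance readings, as the computer does in the exercise.
    epicenter = (3.0, 4.0)
    stations = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
    readings = [math.hypot(epicenter[0] - x, epicenter[1] - y)
                for x, y in stations]

    print("two stations:", circle_intersections(stations[0], readings[0],
                                                stations[1], readings[1]))
    print("three stations:", locate_epicenter(stations, readings))
```

The sketch also answers one of the learner's questions. If all three stations sit on a straight line, the two candidate points are mirror images across that line and are equally far from the third station, so `locate_epicenter` returns both and the ambiguity remains.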

With capable authoring tools, such as Authorware or ZebraZapps, which were used to create this example, active e-learning like this is easy and inexpensive to create; yet the impact is far greater than that of a mere presentation, with or without the ubiquitous follow-up questions.

The Takeaways

It is the intent of this book to share some of the methods, tips, and tricks of accomplishing the primary success goal of all training: getting people to do the right thing at the right time. Just knowing what to do isn't sufficient and isn't success unless employees know how and when to do it.

Because so few organizations measure the impact of their training, regardless of whether it's delivered by e-learning, they get into a cycle of cutting training costs, not realizing the potentially deleterious effects (unless disaster strikes), and may continue to cut costs when no apparent harm is caused. With so little time and money to work with, training developers feel they can do little but prepare presentation-based instruction that's admittedly boring and ineffective. The cycle is perpetuated.

Boring instruction is ineffective and ultimately far more expensive than impactful instruction that achieves the success criterion of getting people to do the right thing at the right time. If proper measures aren't going to be put in place, then courseware at least should be designed with the characteristics that have been proven effective.

There are many proven benefits of good e-learning. They have been measured and are reliably reproducible. While we may try to avoid the work of good instructional design, it is clear that alternatives have major, costly risks, such as being slow, expensive, and inaccurate. Effective instruction is always less expensive than instruction that doesn't work.