Enhancements for the System z file system

The z/OS Distributed File Service System z® File System (zFS) is a z/OS UNIX file system that is used in parallel with the hierarchical file system (HFS).

This chapter describes new support and enhancements for zFS in z/OS V2R2.

4.1 zFS 64-bit Support

z/OS V2R2 zFS provides the following enhancements for storage limitations, cache structures, and CPU usage:

•64-bit addressability

•A new log method

•Elimination of the metadata backing cache, so that only one metadata cache is used

•Running zFS in the OMVS address space

These enhancements result in the following benefits:

•Elimination of issues with running out of storage below the bar

•Use of bigger caches and a larger trace history

•Improved metadata performance, especially for parallel updates to the same v5 directory

•Improved vnode operations

4.1.1 zFS cache enhancements

In z/OS V2R2, a new log caching facility is used and statistics are available in a new format.

The log cache statistics are available through the zFS API service command ZFSCALL_STATS (0x40000007), opcode 247 (STATOP_LOG_CACHE). Consider the following points:

•Specifying Version 1 returns the old structure API_LOG_STAT data.

•Specifying Version 2 returns the new structure API_NL_STATS data.

•The z/OS UNIX command zfsadm query -logcache and the MVS system command MODIFY ZFS,QUERY,LOG support the new statistical data.

The following types of caches are no longer available or used:

•The Transaction cache was removed.

With the improved logging method, it is no longer needed.

•The Client cache was removed.

Because z/OS V1R12 cannot coexist with z/OS V2R2, it is no longer needed.

Elimination of the metadata backing cache

Because 64-bit support allows zFS to obtain caches above the bar, there is no longer a need to define a metaback cache in data spaces.

Consider the following points:

•The zFS parmlib member option metaback_cache_size is still accepted for compatibility.

•zFS internally combines the meta cache and the metaback cache and allocates one cache in zFS address space storage.

•Where appropriate, we suggest that you remove the metaback_cache_size option from your zFS parmlib members and add its value to the meta_cache_size option.

4.1.2 Health check for zFS cache removals

A health check named ZFS_CACHE_REMOVALS monitors the zFS cache removals. Consider the following points:

•The health check determines whether zFS is running with any of the parmlib configuration options metaback_cache_size, client_cache_size, and tran_cache_size.

•Specifying any of these options causes an exception. Therefore, we suggest that you do not specify these options.

The following check parameter keywords are available for overriding the defaults:

•METABACK

•CLIENT

•TRANS

The possible values are ABSENCE or EXISTENCE. Example 4-1 shows a sample parameter setting for this health check.

Example 4-1 Sample parameter setting for the ZFS_CACHE_REMOVALS health checker

```
PARM('METABACK(EXISTENCE), CLIENT(EXISTENCE), TRANS(EXISTENCE)')
```

If the check is active, its severity is set to low.

4.1.3 Statistics Storage Information API

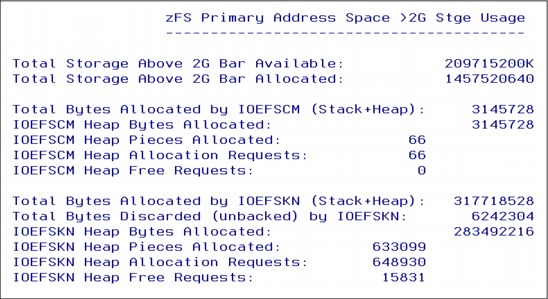

In z/OS V2R2, a new Statistics Above 2G Storage Information API, named STATOP_STORAGE_ABOVE, was introduced. It is available through the zFS API service command ZFSCALL_STATS (0x40000007), opcode 255.

Note: The Statistics Storage Information API STATOP_STORAGE (opcode 241) uses the API_STOR_STATS2 structure for Version 2 requests.

The MODIFY ZFS,QUERY,STORAGE,DETAILS command displays information about many free lists for serviceability purposes. An example is shown in Figure 4-1.

Figure 4-1 zFS storage information above 2 GB

4.1.4 Running zFS within the OMVS address space

In z/OS V2R2, zFS can run in the OMVS address space. Depending on the workload, this configuration might reduce CPU usage because of shorter path lengths.

Consider the following points if you want to move zFS into the OMVS address space:

•You must remove the ASNAME keyword in the FILESYSTYPE statement for zFS in the BPXPRMxx parmlib member.

•If you still use the IOEZPRM DD statement in the zFS STC to point to the zFS configuration parameters, this DD statement should be added to the OMVS STC.

•If OMVS does not use the values that are defined in the IBM-supplied program properties table (PPT), ensure that the OMVS user ID has the same privileges that the zFS STC user ID had.

This requirement might not be a problem, because the OMVS user ID, like the zFS user ID, is usually set up with high authority.

•You must use the new MODIFY OMVS,PFS=ZFS command to address MODIFY commands to zFS, because no separate zFS STC is active.

Note: For more information about this new command interface, see “MODIFY OMVS command enhancement” on page 2 and “Addressing PFS commands to zFS and TFS” on page 3.

4.1.5 Specifying larger values with the 64-bit zFS support

The new 64-bit support allows larger caches and trace tables; the old and new value ranges are listed in Table 4-1.

Table 4-1 Old and new cache range values

| zFS configuration option | Old range | New range |
| --- | --- | --- |
| vnode_cache_size | 32 – 500,000 | 1000 – 10,000,000 |
| meta_cache_size | 1 M – 1024 M | 1 M – 64 G |
| token_cache_size | 20480 – 2,621,440 | 20480 – 20,000,000 |
| trace_table_size | 1 M – 2048 M | 1 M – 65535 M |
| xcf_trace_table_size | 1 M – 2048 M | 1 M – 65535 M |

The larger numbers use the following suffixes for counters and storage sizes:

•For counters:

– t: Units of 1,000

– m: Units of 1,000,000

– b: Units of 1,000,000,000

– tr: Units of 1,000,000,000,000

•For storage sizes:

– K: Units of 1,024

– M: Units of 1,048,576

– G: Units of 1,073,741,824

– T: Units of 1,099,511,627,776

4.1.6 Migration and coexistence considerations

This section describes several required actions and options that are based on the conditions and software levels that were available at the time of this writing. We suggest that you research the listed APAR numbers for any other related information.

Toleration APAR OA46026

Toleration APAR OA46026 must be installed and active on all z/OS V1R13 and z/OS V2R1 systems before z/OS V2R2 is introduced.

Consider the following points regarding the new format of the log cache statistics:

•Down-level systems can recognize the new logging method, run the new log recovery, and return Version 1 output, although most of the API_LOG_STAT values are 0.

•Applications that use STATOP_LOG_CACHE (opcode 247) to request Version 1 output must be updated to use Version 2 output.

•The z/OS UNIX command zfsadm query -logcache and the MVS system command MODIFY ZFS,QUERY,LOG return the new statistics.

Removing transaction cache and client cache

If the zFS parmlib configuration option tran_cache_size or client_cache_size is specified, the specification is ignored.

Use of Statistics APIs

Consider the following points regarding the use of the Statistics APIs:

•When STATOP_USER_CACHE (opcode 242) is used, the remote VM_STATS are shown as all zeros for Version 1 requests, and no remote VM_STATS are provided for Version 2 requests.

Version 1 requests should be updated to Version 2 to receive the new output.

•When STATOP_TRAN_CACHE (opcode 250) is used, all zeros are returned for Version 1 requests and nothing is returned for Version 2 requests.

You should use STATOP_LOG_CACHE (opcode 247) with a Version 2 request to get the new output.

•Using the Query Config Option APIs for the tran_cache_size setting (opcode 208) or the client_cache_size setting (opcode 231), or the Set Config Option APIs for tran_cache_size (opcode 160) or client_cache_size (opcode 230), has no effect.

Using commands

Consider the following points regarding the use of commands:

•The zfsadm config and zfsadm configquery commands with the -tran_cache_size or -client_cache_size option have no effect, as shown in Example 4-2.

Example 4-2 Output of command zfsadm configquery -client_cache_size

```
$> zfsadm configquery -client_cache_size
IOEZ00317I The value for configuration option -client_cache_size is 32M.
IOEZ00668I zFS Configuration option -client_cache_size is obsolete and is not used.
```

•The zfsadm query -trancache command now displays all zeros. We suggest that you stop using this command.

•Transaction cache data was removed from the MODIFY ZFS,QUERY,LFS command report.

4.2 zFS enhanced and new functions

The following main enhancements were added in z/OS V2R2:

•The 4-byte counters (version 1) are replaced by 8-byte counters (version 2).

•Three new sysplex-related APIs are provided.

•A powerful new FSINFO function was introduced to obtain detailed file system information.

These enhanced new functions provide the following benefits:

•Monitoring statistics over a much longer period is possible.

•Improved performance in the use of API services.

•FSINFO provides more detailed information for single and multiple file systems in a faster and more flexible manner, including sysplex-wide information.

•More detailed statistics per file system.

4.2.1 New 8-byte counter support

The following APIs that manage statistic numbers now support 8-byte counters:

•STATOP_LOCKING (opcode 240)

•STATOP_STORAGE (opcode 241)

•STATOP_USER_CACHE (opcode 242)

•STATOP_IOCOUNTS (opcode 243)

•STATOP_IOBYAGGR (opcode 244)

•STATOP_IOBYDASD (opcode 245)

•STATOP_KNPFS (opcode 246)

•STATOP_META_CACHE (opcode 248)

•STATOP_VNODE_CACHE (opcode 251)

The output of the zfsadm query and MODIFY ZFS,QUERY commands is affected by the new 8-byte counters; therefore, we suggest that you review any automation or scripts that parse or reference the output from these commands.

4.2.2 New sysplex-related APIs

The following new sysplex-related APIs are available:

•Statistics Sysplex Client Operation Info, named STATOP_CTKC (opcode 253)

This API returns information about the number of local operations that required sending a message to another system.

•Server Token Management Info, named STATOP_STKM (opcode 252)

This API returns the server token manager statistics.

•Statistics Sysplex Owner Operation, named STATOP_SVI (opcode 254)

This API returns information about the number of calls that were processed on the local system as a result of a message that was sent from another system.

zfsadm query commands

These APIs are used by the following new zfsadm query options:

•zfsadm query -ctkc

•zfsadm query -stkm

•zfsadm query -svi

zFS MODIFY commands

The following MODIFY ZFS,QUERY commands now support 8-byte counters:

•MODIFY ZFS,QUERY,CTKC

•MODIFY ZFS,QUERY,STKM

•MODIFY ZFS,QUERY,SVI

New FSINFO interface

The new and powerful FSINFO interface provides the following enhancements:

•A zfsadm command (zfsadm fsinfo).

•A detailed file system API command that is named ZFSCALL_FSINFO (0x40000013).

•A zFS MODIFY command.

•Support for 8-byte counters.

Tip: We recommend that you use FSINFO instead of List Aggregate Status (opcode 135 or 140) or List File System Status (opcode 142).

4.2.3 z/OS UNIX command zfsadm fsinfo

Figure 4-2 shows syntax information for the zfsadm fsinfo command.

```
zfsadm fsinfo [-aggregate name | -path path_name | -all]
              [-basic | -owner | -full | -reset]
              [-select criteria | -exceptions]
              [-sort sort_name] [-level] [-help]
```

Figure 4-2 zfsadm fsinfo syntax

Available fsinfo options

The fsinfo command features the following options:

•-aggregate name

This option specifies the name of the aggregate. Use an asterisk (*) at the beginning, at the end, or at both ends of the name as a wildcard. When wildcards are used, the default display mode is -basic; otherwise, the default display mode is -owner.

•-path path_name

This option specifies the path name of a file or directory that is contained in the file system. The default information display is -owner.

•-all

This option displays information for all aggregates in the sysplex. The default information display is -owner.

•-basic

This option displays a line of basic file system information for each specified file system.

•-owner

This option displays only information that is maintained by the system that owns each specified file system.

•-full

This option displays information that is maintained by the system that owns each specified file system. It also displays information that is locally maintained by each system in the sysplex that has each specified file system locally mounted.

•-reset

This option resets zFS statistics that relate to each specified file system. This option requires system administrator authority.

Displaying information about aggregates with exceptional conditions

Use the -exceptions option to display information about aggregates with exception conditions. Table 4-2 on page 23 lists the available exceptions.

Table 4-2 Available exceptions

| Exception | Description |
| --- | --- |
| CE | XCF communication failures between client systems and owning systems |
| DA | Marked damaged by the zFS salvager |
| DI | Disabled for reading and writing |
| GD | Disabled for dynamic grow |
| GF | Failures on dynamic grow attempts |
| IE | Disk I/O errors |
| L | Less than 1 MB of free space; forces increased XCF traffic for writing files |
| Q | Currently quiesced |
| SE | Returned ENOSPC errors to applications |
| V5D | Disabled for conversion to version 1.5 |

Specifying select criteria

Use the -select option to display only those specified file systems that match the criteria. Multiple criteria are separated by commas, such as -select Q,DI,L.

Note: This option cannot be specified with -exceptions, -reset, or -path.

To use this select option, specify one or more select criteria that are listed in Table 4-3.

Table 4-3 Selection criteria

| Criteria | Description |
| --- | --- |
| CE | XCF communication failures between client systems and owning systems |
| DA | Marked damaged by the zFS salvager |
| DI | Disabled for reading and writing |
| GD | Disabled for dynamic grow |
| GF | Failures on dynamic grow attempts |
| GR | Currently being grown |
| IE | Disk I/O errors |
| L | Less than 1 MB of free space; forces increased XCF traffic for writing files |
| NS | Mounted NORWSHARE |
| OV | Contain extended (v5) directories that are using overflow pages |
| Q | Currently quiesced |
| RQ | Had application activity |
| RO | Mounted read-only |
| RW | Mounted read/write |
| RS | Mounted RWSHARE (sysplex-aware) |
| SE | Returned ENOSPC errors to applications |
| TH | Contain sysplex thrashing objects |
| V4 | Aggregates that are version 1.4 |
| V5 | Aggregates that are version 1.5 |
| V5D | Aggregates that are disabled for conversion to version 1.5 |
| WR | Had application write activity |

Requesting sorted display data

Use the -sort sort_name option to sort the displayed information as specified by the value of sort_name, as listed in Table 4-4.

Table 4-4 Sort names for sorting information that is displayed

| sort_name | Function |
| --- | --- |
| Name | Sort by file system name, in ascending order. This option is the default. |
| Requests | Sort by the number of external requests that are made to the file system by user applications, in descending order. The most actively requested file systems are listed first. |
| Response | Sort by response time of requests to the file system, in descending order. The slower responding file systems are listed first. |

Note: This option cannot be specified with -reset.

General zfsadm options

As with other zfsadm commands, fsinfo supports the following options:

•-level

This option prints the level of the zfsadm command. Except for -help, all valid options that are specified with -level are ignored.

•-help

This option prints the online help for this command. All other valid options that are specified with this option are ignored.

4.2.4 Displaying zfsadm fsinfo examples

An example of how to use an asterisk (*) as a wildcard is shown in Figure 4-3.

```
$> zfsadm fsinfo hering*
HERING.TEST.DUMMY.ZFS SC74 RW,RS,Q,L
HERING.TEST.ZFS SC74 RW,NS,L
HERING.ZFS SC74 RW,RS
Legend: RW=Read-write,Q=Quiesced,L=Low on space,RS=Mounted RWSHARE
NS=Mounted NORWSHARE
$>
```

Figure 4-3 Use of an asterisk to list all file systems starting with string “hering”

An example that provides a path name is shown in Figure 4-4.

```
$> zfsadm fsinfo -path test -basic
HERING.TEST.ZFS SC74 RW,NS,L
Legend: RW=Read-write, L=Low on space, NS=Mounted NORWSHARE
$>
```

Figure 4-4 Listing basic information for the zFS to which the specific path belongs

More information about the same path and zFS file system is shown in Figure 4-5.

```
$> zfsadm fsinfo -path test
File System Name: HERING.TEST.ZFS
*** owner information ***
Owner: SC74 Converttov5: OFF,n/a
Size: 36000K Free 8K Blocks: 88
Free 1K Fragments: 46 Log File Size: 112K
Bitmap Size: 8K Anode Table Size: 80K
File System Objects: 257 Version: 1.5
Overflow Pages: 0 Overflow HighWater: 0
Thrashing Objects: 0 Thrashing Resolution: 0
Token Revocations: 0 Revocation Wait Time: 0.000
Devno: 54 Space Monitoring: 0,0
Quiescing System: n/a Quiescing Job Name: n/a
Quiescor ASID: n/a File System Grow: ON,0
Status: RW,NS,L
Audit Fid: C2C8F5E2 E3F20184 0000
File System Creation Time: Sep 8 09:38:25 2006
Time of Ownership: Jul 31 11:57:53 2015
Statistics Reset Time: Jul 31 11:57:53 2015
Quiesce Time: n/a
Last Grow Time: n/a
Connected Clients: n/a
Legend: RW=Read-write, L=Low on space, NS=Mounted NORWSHARE
$>
```

Figure 4-5 Listing more information about the zFS to which the specific path belongs

All zFS aggregates that are quiesced or not mounted sysplex-aware are shown in Figure 4-6.

```
$> zfsadm fsinfo -select q,ns
HERING.TEST.DUMMY.ZFS SC74 RW,RS,Q,L
HERING.TEST.ZFS SC74 RW,NS,L
Legend: RW=Read-write,Q=Quiesced,L=Low on space,RS=Mounted RWSHARE
NS=Mounted NORWSHARE
$>
```

Figure 4-6 Listing all quiesced or not sysplex-aware mounted zFS aggregates

You can also retrieve information about zFS aggregates that are not attached, as shown in Figure 4-7 on page 27.

```
$> rxdowner -a hering.largedir.v4
RXDWN004E Aggregate HERING.LARGEDIR.V4 cannot be found.
$> zfsadm fsinfo hering.largedir.v4
File System Name: HERING.LARGEDIR.V4
*** owner information ***
Owner: n/a Converttov5: OFF,n/a
Size: 360000K Free 8K Blocks: 9152
Free 1K Fragments: 7 Log File Size: 3600K
Bitmap Size: 56K Anode Table Size: 250264K
File System Objects: 1000003 Version: 1.5
Overflow Pages: 0 Overflow HighWater: 0
Thrashing Objects: 0 Thrashing Resolution: 0
Token Revocations: 0 Revocation Wait Time: 0.000
Devno: 0 Space Monitoring: 0,0
Quiescing System: n/a Quiescing Job Name: n/a
Quiescor ASID: n/a File System Grow: OFF,0
Status: NM
Audit Fid: C2C8F5D6 C5F1000A 0000
File System Creation Time: Jun 16 00:48:25 2013
Time of Ownership: Aug 12 22:38:19 2015
Statistics Reset Time: Aug 12 22:38:19 2015
Quiesce Time: n/a
Last Grow Time: n/a
Connected Clients: n/a
Legend: NM=Not mounted
$>
```

Figure 4-7 Listing information about a zFS aggregate that is not mounted and not attached

4.2.5 New zFS API ZFSCALL_FSINFO (0x40000013)

As with most zFS API calls, the pfsctl (BPX1PCT) application programming interface is used to send requests to the zFS physical file system. The simplified format for FSINFO is shown in Figure 4-8.

```c
BPX1PCT("ZFS     ",   /* File system type followed by 5 blanks */
        0x40000013,   /* ZFSCALL_FSINFO - fsinfo operation */
        parmlen,      /* Length of parameter buffer */
        parmbuf,      /* Address of parameter buffer */
        &rv,          /* Return value */
        &rc,          /* Return code */
        &rsn);        /* Reason code */
```

Figure 4-8 Format of the fsinfo pfsctl() interface call

FSINFO features the following subcommands:

•Query file system info (opcode 153)

This subcommand requires a minimum buffer size of 10 K for a single-aggregate query and 64 K for a multi-aggregate query.

•Reset file system statistics (opcode 154)

This subcommand requires a minimum buffer size of 10 K.

4.2.6 REXX example that uses the new ZFSCALL_FSINFO API

A sample REXX program named rxlstqsd, which uses the new fsinfo API, was created for this book for demonstration and reference. Consider the following points:

•Sample rxlstqsd uses the new pfsctl() command ZFSCALL_FSINFO to list all quiesced zFS aggregates in a sysplex sharing environment.

•It was created to run in z/OS UNIX, in TSO, and as a SYSREXX routine.

•The utility is provided in ASCII text format as additional material for this IBM Redbooks publication. When you transfer the utility from your workstation to z/OS through FTP, we suggest that you perform the following tasks:

– Transfer the rxlstqsd.txt file in text mode (not binary) to z/OS UNIX first.

– Before you run the transfer, use the FTP quote site sbd=(1047,819) subcommand, and rename the file to rxlstqsd.

– From z/OS UNIX, you can then copy it to a TSO REXX library and a SYSREXX library.

How to use the utility from different environments is shown in Figure 4-9.

```
$> rxlstqsd
HERING.TEST.PRELE.ZFS
HERING.TEST.RW.ZFS
HERING.TEST.ZFS
$> cn "f axr,rxlstqsd"
ZFSQS004I RXLSTQSD on SC74 -
HERING.TEST.PRELE.ZFS
HERING.TEST.RW.ZFS
HERING.TEST.ZFS
$> sudo zfsadm unquiesce HERING.TEST.PRELE.ZFS
IOEZ00166I Aggregate HERING.TEST.PRELE.ZFS successfully unquiesced
$> sudo zfsadm unquiesce HERING.TEST.RW.ZFS
IOEZ00166I Aggregate HERING.TEST.RW.ZFS successfully unquiesced
$> sudo zfsadm unquiesce HERING.TEST.ZFS
IOEZ00166I Aggregate HERING.TEST.ZFS successfully unquiesced
$> rxlstqsd
ZFSQS006I There are no quiesced aggregates.
$> tsocmd "rxlstqsd"
rxlstqsd
ZFSQS006I There are no quiesced aggregates.
$>
```

Figure 4-9 Use of rxlstqsd from z/OS UNIX, TSO, and as SYSREXX routine

Note: On a down-level system, you receive a message stating that z/OS V2R2 or later is required to use the utility.

4.2.7 FSINFO zFS Modify interface command

The syntax of the FSINFO zFS Modify interface command is similar to the corresponding zfsadm command. The syntax is shown in Figure 4-10.

```
modify zFS_procname,fsinfo[,{aggrname | all}
       [,{full | basic | owner | reset} [,{select=criteria | exceptions}]
       [,sort=sort_name]]]
```

Figure 4-10 FSINFO zFS Modify interface command

Consider the following points regarding the command:

•Multiple selection criteria are separated by blanks.

•Parameters are positional.

4.2.8 Removing two zFS health checks

In z/OS V2R2, the following zFS health checks were removed because they are no longer needed:

•ZOSMIGV1R13_ZFS_FILESYS

•ZOSMIGREC_ZFS_RM_MULTIFS

4.3 Moving zFS into the OMVS address space

In this section, we describe how to move zFS into the OMVS address space.

4.3.1 Move preparation

First, we ensure that we are running with KERNELSTACKS above the bar, or that we will be after the next IPL. The current setting is displayed as shown in Figure 4-11.

```
$> cn "d omvs,o" | grep KERNELSTACKS
KERNELSTACKS = ABOVE
$>
```

Figure 4-11 Displaying the OMVS KERNELSTACKS setting

Make sure that at least one BPXPRMxx member that is processed on the local system at the next IPL contains the new setup, as shown in Figure 4-12.

```
$> echo "The local sysclone value is:" $(sysvar SYSCLONE)
The local sysclone value is: 74
$> cat "//'SYS1.PARMLIB(IEASYS00)'" | grep OMVS
OMVS=(&SYSCLONE.,&OMVSPARM.),
$> cat "//'SYS1.PARMLIB(BPXPRM74)'"
KERNELSTACKS(ABOVE)
FILESYSTYPE TYPE(ZFS)
ENTRYPOINT(IOEFSCM)
PARM('PRM=(&SYSCLONE.,00)')
$>
```

Figure 4-12 Showing the BPXPRMxx parmlib settings for KERNELSTACKS and zFS

4.3.2 Moving and running zFS commands

If an IPL was needed for the move, check whether the move was successful.

Displaying zFS related information and running zFS commands

Because zFS now runs in the OMVS address space, the MODIFY ZFS command is no longer available. You must use the new MODIFY OMVS,PFS=ZFS interface, as shown in Figure 4-13.

```
$> cn "d omvs,p" | grep ZFS
ZFS IOEFSCM
ZFS PRM=(74,00)
$> cn "f zfs,query,level"
IEE341I ZFS NOT ACTIVE
$> cn "f omvs,pfs=zfs,query,level"
IOEZ00639I zFS kernel: z/OS zFS
Version 02.02.00 Service Level OA47915 - HZFS420.
Created on Fri May 29 13:31:44 EDT 2015.
sysplex(filesys,rwshare) interface(4)
IOEZ00025I zFS kernel: MODIFY command - QUERY,LEVEL completed successfully.
$>
```

Figure 4-13 Displaying information about zFS by using the new MODIFY interface
