JES3 examples and information

This appendix presents configuration examples and other JES3 considerations. It describes the necessary parameters and the considerations if more than one device type is installed in a library in a JES3 environment.

It provides two examples:

•Two libraries with an intermix of native drives of 3592-J1A and 3592-E05 installed

•Three libraries with an intermix of 3592-J1A, 3592-E05, 3592-E06/EU6, stand-alone cluster IBM Virtualization Engine TS7700 Grid, and multicluster TS7700 Virtualization Engine Grid installed

Together, these examples provide the information that you need to define the possible configurations in an IBM System Storage TS3500 Tape Library. For more basic information about the products in these scenarios, see the following publications:

•z/OS JES3 Initialization and Tuning Guide, SA22-7549

•z/OS JES3 Initialization and Tuning Reference, SA22-7550


JES3 support for system-managed tape

This section describes JES3 support for the TS3500 Tape Library with the Data Facility Storage Management Subsystem (DFSMS). The primary purpose of this support is to maintain JES3 resource allocation and to share tape allocations. For detailed information, see z/OS JES3 Initialization and Tuning Reference, SA22-7550.

DFSMS has support that provides JES3 allocation with the appropriate information to select a TS3500 Tape Library device by referencing device strings with a common name among systems within a JES3 complex.

To set up a TS3500 Tape Library in a JES3 environment, perform the following steps:

1. Define library device groups (LDGs). Decide on the naming conventions in advance, and determine all of the library device group names that you need.

2. Include the esoteric names from step 1 in the hardware configuration definition (HCD) and activate the new Esoteric Device Table (EDT).

3. Update the JES3 INISH deck:

a. Define all devices in the TS3500 Tape Library through DEVICE statements.

b. Set JES3 device names through the SETNAME statement.

c. Define which device names are subsets of other device names through the high-watermark setup name (HWSNAME) statement.

All TS3500 Tape Library units can be shared between processors in a JES3 complex. They must also be shared among systems within the same SMSplex.

|

Restriction: Tape drives in the TS3500 Tape Library cannot be used by JES3 dynamic support programs (DSPs).

|

Define all devices in the libraries through DEVICE statements. All TS3500 Tape Library tape drives within a complex must be either JES3-managed or non-JES3-managed. Do not mix managed and non-managed devices. Mixing them might prevent the non-managed devices from being used for new data set allocations and might reduce device eligibility for existing data sets. The result is allocation failures or delays in job setup.

Neither JES3 nor DFSMS verifies that a complete and accurate set of initialization statements is defined to the system. Incomplete or inaccurate TS3500 Tape Library definitions can cause job allocations to fail.

Library device groups

Library device groups (LDGs) isolate the TS3500 Tape Library drives from other tape drives in the complex. They allow JES3 main device scheduler (MDS) allocation to select an appropriate set of library-resident tape drives.

The DFSMS JES3 support requires LDGs to be defined to JES3 for SETNAME groups and HWSNAME names in the JES3 initialization statements. During converter/interpreter (C/I) processing for a job, the LDG names are passed to JES3 by DFSMS for use by MDS in selecting library tape drives for the job. Unlike a JES2 environment, a JES3 operating environment requires the specification of esoteric unit names for the devices within a library. These unit names are used in the required JES3 initialization statements.

|

Important: Even if the LDG definitions are defined as esoterics in HCD, they are not used in the JCL. There is no need for any UNIT parameter in JES3 JCL for libraries. The allocation goes through the automatic class selection (ACS) routines. Coding a UNIT parameter might cause problems.

The only reason to define the LDG names as esoterics in HCD is that the HWSNAME definitions in the JES3 INISH deck refer to them.

|

Each device within a library must have exactly four special esoteric names associated with it:

•The complex-wide name is always LDGW3495. It allows you to address every device and device type in every library.

•The library-specific name is an eight-character string that consists of the prefix LDG followed by the five-digit library identification number (LIBRARY-ID), for example, LDGF4001. It allows you to address every device and device type in that specific library.

•The complex-wide device type, shown in Table D-1, defines the various device types that are used. It contains a prefix of LDG and a device type identifier. It allows you to address a specific device type in every tape library.

Table D-1 Library device groups: Complex-wide device type specifications

| Device type | Complex-wide device type definition |
|---|---|
| 3490E | LDG3490E |
| 3592-J1A | LDG359J |
| 3592-E05 | LDG359K |
| 3592-E05 encryption-enabled | LDG359L |
| 3592-E06 encryption-enabled | LDG359M |
| 3592-E07 encryption-enabled | LDG359N |

•The library-specific device type name is an eight-character string. It starts with a different prefix for each device type, followed by the five-digit LIBRARY-ID, as shown in Table D-2.

Table D-2 Library device groups: Library-specific device types

| Device type | Library-specific device type | Content |
|---|---|---|
| 3490E | LDE + LIBRARY-ID | All 3490E in library xx |
| 3592-J1A | LDJ + LIBRARY-ID | All 3592 Model J1A in library xx |
| 3592-E05 | LDK + LIBRARY-ID | All 3592 Model E05 in library xx |
| 3592-E05 encryption-enabled | LDL + LIBRARY-ID | 3592 Model E05 encryption-enabled in library xx |
| 3592-E06 encryption-enabled | LDM + LIBRARY-ID | 3592 Model E06 encryption-enabled in library xx |
| 3592-E07 encryption-enabled | LDN + LIBRARY-ID | 3592 Model E07 encryption-enabled in library xx |

The library-specific device type name allows you to address a specific device type in a specific tape library. A stand-alone TS7700 Virtualization Engine grid still has only one library-specific device type name. The same is true of a multicluster TS7700 Virtualization Engine grid installed in two physical libraries. In both cases, the LIBRARY-ID of the composite library is used.
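The four naming rules are simple enough to express as a short function. The following Python sketch is illustrative only. The function `ldg_names` and the device-type labels used as dictionary keys are hypothetical. Only the name prefixes come from Tables D-1 and D-2.

```python
from __future__ import annotations

# Complex-wide device type name and library-specific prefix per device type,
# taken from Tables D-1 and D-2. The dictionary keys are illustrative labels.
DEVICE_TYPES = {
    "3490E": ("LDG3490E", "LDE"),
    "3592-J1A": ("LDG359J", "LDJ"),
    "3592-E05": ("LDG359K", "LDK"),
    "3592-E05 encryption-enabled": ("LDG359L", "LDL"),
    "3592-E06 encryption-enabled": ("LDG359M", "LDM"),
    "3592-E07 encryption-enabled": ("LDG359N", "LDN"),
}


def ldg_names(library_id: str, device_type: str) -> tuple[str, str, str, str]:
    """Return the four LDG esoteric names for one device type in one library.

    For a TS7700 grid, pass the composite library LIBRARY-ID.
    """
    if len(library_id) != 5:
        raise ValueError("a LIBRARY-ID has five characters, for example F4001")
    complex_wide_type, prefix = DEVICE_TYPES[device_type]
    return (
        "LDGW3495",           # complex-wide name
        "LDG" + library_id,   # library-specific name
        complex_wide_type,    # complex-wide device type name
        prefix + library_id,  # library-specific device type name
    )


print(ldg_names("F4001", "3592-E05"))
# ('LDGW3495', 'LDGF4001', 'LDG359K', 'LDKF4001')
```

For a TS7700 Virtualization Engine grid, the call would use the composite library ID, for example `ldg_names("47110", "3490E")`.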

Updating the JES3 INISH deck

To allow JES3 to allocate the appropriate device, you must code several definitions:

•DEVICE statements

•SETNAME statements

•HWSNAME (high-watermark setup) statements

The following sections describe these statements in detail.

DEVICE statement: Defining I/O devices for TS3500 Tape Libraries

Use the DEVICE statement to define a device so that JES3 can use it. A DEVICE statement (Figure D-1) must be defined for each string of TS3500 Tape Library drives in the complex. XTYPE specifies a user-defined name of one to eight characters. There is no default or required naming convention for this name. The name is used in other JES3 initialization statements to group the devices together for certain JES3 processes (for example, allocation). Therefore, all the devices with the same XTYPE must belong to the same library and be of the same device type.

The letters CA in the XTYPE definition indicate that this is a cartridge device.

|

*/ Devices 3592-J1A, 3592-E05, 3592-E06, and 3592-E07 in Library 1 ............/*

DEVICE,XTYPE=(LB13592J,CA),XUNIT=(1100,*ALL,,OFF),numdev=4

DEVICE,XTYPE=(LB13592K,CA),XUNIT=(1104,*ALL,,OFF),numdev=4

DEVICE,XTYPE=(LB13592M,CA),XUNIT=(0200,*ALL,,OFF),numdev=4

DEVICE,XTYPE=(LB13592N,CA),XUNIT=(0204,*ALL,,OFF),numdev=4

|

Figure D-1 DEVICE statement sample

|

Restriction: TS3500 Tape Library tape drives cannot be used as support units by JES3 dynamic support programs (DSPs). Therefore, do not specify the DTYPE, JUNIT, and JNAME parameters on the DEVICE statements. No check is made during initialization to prevent TS3500 Tape Library drives from being defined as support units. If they are defined as support units, no check is made to prevent the drives from being allocated to a DSP. Any attempt to call a tape DSP by requesting a TS3500 Tape Library drive fails, because the DSP is unable to allocate a TS3500 Tape Library drive.

|
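Every DEVICE statement that codes NUMDEV covers a range of device numbers, so a draft INISH deck can be checked for addresses that are claimed twice. The following Python sketch is a hypothetical helper, not part of JES3. It assumes that NUMDEV=n stands for n consecutive hexadecimal device numbers starting at the XUNIT address.

```python
from __future__ import annotations


def device_numbers(start: str, numdev: int = 1) -> list[int]:
    """Expand an XUNIT starting address (hex) and a NUMDEV count into device numbers."""
    first = int(start, 16)
    return list(range(first, first + numdev))


def overlaps(statements: list[tuple[str, str, int]]) -> list[tuple[str, str, str]]:
    """Report device numbers that more than one DEVICE statement claims.

    Each entry is (xtype, XUNIT start address, numdev). Each clash is returned
    as (device number, xtype that claimed it first, xtype that claimed it again).
    """
    owner: dict[int, str] = {}
    clashes = []
    for xtype, start, numdev in statements:
        for number in device_numbers(start, numdev):
            if number in owner:
                clashes.append((f"{number:04X}", owner[number], xtype))
            else:
                owner[number] = xtype
    return clashes


# The four strings in Figure D-1 do not overlap.
figure_d1 = [
    ("LB13592J", "1100", 4),
    ("LB13592K", "1104", 4),
    ("LB13592M", "0200", 4),
    ("LB13592N", "0204", 4),
]
print(overlaps(figure_d1))  # []

# Hypothetical overlap: eight devices from 1100 end at 1107.
print(overlaps([("LBXA", "1100", 8), ("LBXB", "1107", 8)]))
# [('1107', 'LBXA', 'LBXB')]
```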

SETNAME statement

The SETNAME statement is used for proper allocation in a JES3 environment. For tape devices, it tells JES3 which tape device belongs to which library. The SETNAME statement specifies the relationships between the XTYPE values (coded in the DEVICE statement) and the LDG names (Figure D-2). A SETNAME statement must be defined for each unique XTYPE in the DEVICE statements.

|

SETNAME,XTYPE=LB1359K,

NAMES=(LDGW3495,LDGF4001,LDG359K,LDKF4001)

(LDGW3495 = complex-wide library name, LDGF4001 = library-specific library name, LDG359K = complex-wide device name, LDKF4001 = library-specific device name)

|

Figure D-2 SETNAME rules

The SETNAME statement has these rules:

•Each SETNAME statement has one entry from each LDG category.

•The complex-wide library name must be included in all statements.

•A library-specific name must be included for XTYPEs within the referenced library.

•The complex-wide device type name must be included for all XTYPEs of the corresponding device type in the complex.

•A library-specific device type name must be included for the XTYPE associated with the devices within the library.
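The SETNAME rules can also be checked mechanically. In the following Python sketch, `category` and `check_setname` are hypothetical helpers. They classify names only by the naming conventions in Tables D-1 and D-2, so they recognize only the device types listed there. They also assume that the library-specific name and the library-specific device type name in one statement refer to the same LIBRARY-ID.

```python
from __future__ import annotations

COMPLEX_WIDE = "LDGW3495"
# Complex-wide device type names (Table D-1) mapped to the prefix of the
# corresponding library-specific device type names (Table D-2).
TYPE_TO_PREFIX = {
    "LDG3490E": "LDE", "LDG359J": "LDJ", "LDG359K": "LDK",
    "LDG359L": "LDL", "LDG359M": "LDM", "LDG359N": "LDN",
}
CATEGORIES = ("complex-wide", "library-specific",
              "complex-wide device type", "library-specific device type")


def category(name: str) -> str:
    """Classify an LDG name by the naming conventions in Tables D-1 and D-2."""
    if name == COMPLEX_WIDE:
        return "complex-wide"
    if name in TYPE_TO_PREFIX:
        return "complex-wide device type"
    if len(name) == 8 and name.startswith("LDG"):
        return "library-specific"
    if len(name) == 8 and name[:3] in TYPE_TO_PREFIX.values():
        return "library-specific device type"
    raise ValueError(f"{name} does not follow an LDG naming convention")


def check_setname(names: list[str]) -> list[str]:
    """Return the rule violations for one NAMES= list; an empty list means none."""
    found: dict[str, list[str]] = {kind: [] for kind in CATEGORIES}
    for name in names:
        found[category(name)].append(name)
    # Rule: exactly one entry from each LDG category.
    problems = [f"expected one {kind} name, found {found[kind]}"
                for kind in CATEGORIES if len(found[kind]) != 1]
    if problems:
        return problems
    library = found["library-specific"][0]
    device_type = found["complex-wide device type"][0]
    library_device = found["library-specific device type"][0]
    # The library-specific device type name must belong to the same library...
    if library_device[3:] != library[3:]:
        problems.append(f"{library_device} is not in library {library[3:]}")
    # ...and to the same device type as the complex-wide device type name.
    if library_device[:3] != TYPE_TO_PREFIX[device_type]:
        problems.append(f"{library_device} does not match {device_type}")
    return problems


print(check_setname(["LDGW3495", "LDGF4001", "LDG359K", "LDKF4001"]))  # []
print(check_setname(["LDGW3495", "LDGF4006", "LDG359L", "LDKF4006"]))
# ['LDKF4006 does not match LDG359L']
```

Suppose a statement lists two library-specific names, or two library-specific device type names. The first rule check reports each of these duplicates separately.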

|

Tip: Do not specify esoteric and generic unit names, such as 3492, SYS3480R, and SYS348XR. Also, never use esoteric names, such as TAPE and CART.

|

High watermark setup names (HWSNAME)

Use the HWSNAME statement to define which device names are subsets of other device names. You must specify all applicable varieties. The HWSNAME statement has this syntax:

HWSNAME,TYPE=(groupname,{altname})

The variables specify the following information:

•groupname: Specifies a device type valid for a high watermark setup.

•altname: Specifies a list of valid user-supplied or IBM-supplied device names. These are alternate units to be used in device selection.

Consider the following example:

HWSNAME,TYPE=(LDGW3495,LDGF4001,LDGF4006,LDG359J,LDG359K,LDG359M,LDG359N,LDJF4001,LDKF4001,LDKF4006)

The LDG HWSNAME statements have these rules:

•The complex-wide library name, LDGW3495, must include all other LDG names as alternates.

•The library-specific name must include all LDG names for the corresponding library as alternates. When all tape devices of a type within the complex are within a single TS3500 Tape Library, the complex-wide device type name must also be included as an alternate name.

•The complex-wide device type name must include all library-specific device type names of that type. When a TS3500 Tape Library contains only devices of that type, the library name is a subset of the complex-wide device type name. In this case, you also need to specify the library name as an alternate.

•The library-specific device type name must be included. Alternate names can be specified in the following manner:

– When all drives within the TS3500 Tape Library have the same device type, the library-specific device type name is equivalent to the library name. In this case, you need to specify the library-specific name as an alternate.

– When these drives are the only drives of this type in the complex, the complex-wide device type name is equivalent to the library-specific device type name. In this case, you need to specify the complex-wide device type name as an alternate.

Ensure that all valid alternate names are specified.

Example with two separate Tape Libraries

The first example includes different native tape drives in two separate Tape Libraries. Figure D-3 shows a JES3 complex with two TS3500 Tape Libraries attached to it. Library 1 has a LIBRARY-ID of F4001 and a mix of 3592-J1A and 3592-E05 drives installed. Library 2 has a LIBRARY-ID of F4006 and only 3592-E05 models installed. The 3592-E05 drives are not encryption-enabled.

Figure D-3 First JES3 configuration example

LDG definitions necessary for the first example

Table D-3 shows all the LDG definitions needed in HCD. There are a total of eight esoterics to define.

Table D-3 LDG definitions for the first configuration example

| LDG definition | Value of LDG | Explanation |
|---|---|---|
| Complex-wide name | LDGW3495 | Standard name, appears once |
| Library-specific name | LDGF4001, LDGF4006 | One definition for each library |
| Complex-wide device type | LDG359J, LDG359K | One definition for each installed device type: LDG359J represents the 3592-J1A devices; LDG359K represents the 3592-E05 devices |
| Library-specific device type | LDJF4001, LDKF4001, LDKF4006 | One definition for each device type in each library: LDJF4001 represents the 3592-J1A in library F4001; LDKF4001 represents the 3592-E05 in library F4001; LDKF4006 represents the 3592-E05 in library F4006 |
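The total of eight esoterics follows directly from the naming rules. There is one complex-wide name, one name per library, one name per installed device type, and one name per device type in each library: 1 + 2 + 2 + 3 = 8. The following Python sketch reproduces Table D-3. The function `esoterics` is hypothetical, and the inventory dictionary describes this example.

```python
from __future__ import annotations

# Complex-wide device type name and library-specific prefix (Tables D-1, D-2),
# limited to the device types installed in this example.
DEVICE_TYPES = {"3592-J1A": ("LDG359J", "LDJ"), "3592-E05": ("LDG359K", "LDK")}


def esoterics(inventory: dict[str, list[str]]) -> list[str]:
    """Collect every LDG esoteric name needed for LIBRARY-ID -> device types."""
    names = {"LDGW3495"}                      # complex-wide name
    for library_id, device_types in inventory.items():
        names.add("LDG" + library_id)         # library-specific name
        for device_type in device_types:
            complex_wide_type, prefix = DEVICE_TYPES[device_type]
            names.add(complex_wide_type)      # complex-wide device type
            names.add(prefix + library_id)    # library-specific device type
    return sorted(names)


first_example = {"F4001": ["3592-J1A", "3592-E05"], "F4006": ["3592-E05"]}
names = esoterics(first_example)
print(len(names))  # 8
print(names)
# ['LDG359J', 'LDG359K', 'LDGF4001', 'LDGF4006', 'LDGW3495', 'LDJF4001', 'LDKF4001', 'LDKF4006']
```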

Device statements needed for this configuration

These examples use a naming convention for XTYPE in which the first three characters identify the library (LB1, LB2) and the remaining characters identify the device type (Figure D-4). A naming convention for XTYPE is not mandatory, but it makes the JES3 INISH deck easier to read and maintain.

|

*/ Devices 3592-J1A and 3592-E05 in Library 1 ............................/*

DEVICE,XTYPE=(LB13592J,CA),XUNIT=(1000,*ALL,,OFF),numdev=4

DEVICE,XTYPE=(LB13592K,CA),XUNIT=(1104,*ALL,,OFF),numdev=4

*/ Devices 3592-E05 in Library 2 ...................../*

DEVICE,XTYPE=(LB23592L,CA),XUNIT=(2000,*ALL,,OFF),numdev=8

|

Figure D-4 First configuration example: Device-type definition sample

SETNAME statements needed for this configuration

Figure D-5 includes all the SETNAME statements for the first configuration example.

|

SETNAME,XTYPE=(LB13592J,CA),NAMES=(LDGW3495,LDGF4001,LDG359J,LDJF4001)

SETNAME,XTYPE=(LB13592K,CA),NAMES=(LDGW3495,LDGF4001,LDG359K,LDKF4001)

SETNAME,XTYPE=(LB23592L,CA),NAMES=(LDGW3495,LDGF4006,LDG359K,LDKF4006)

|

Figure D-5 First configuration example: SETNAME definition sample

You need three SETNAME statements, one for each unique XTYPE:

•Library F4001 has two different device types, so it needs two SETNAME statements.

•Library F4006 has one device type, so it needs one SETNAME statement.

|

Tip: For definition purposes, encryption-enabled and non-encryption-enabled drives are considered two different device types. In the first example, none of the 3592 tape drives are encryption-enabled.

|
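Each SETNAME statement combines the four LDG names for one XTYPE, so the statements in Figure D-5 can be generated from a list of XTYPEs. In the following Python sketch, the `setname` function and the tuple layout are hypothetical. The statement format is copied from Figure D-5.

```python
# Complex-wide device type name and library-specific prefix (Tables D-1, D-2).
DEVICE_TYPES = {"3592-J1A": ("LDG359J", "LDJ"), "3592-E05": ("LDG359K", "LDK")}


def setname(xtype: str, library_id: str, device_type: str) -> str:
    """Build one SETNAME statement with one name from each LDG category."""
    complex_wide_type, prefix = DEVICE_TYPES[device_type]
    names = ["LDGW3495", "LDG" + library_id, complex_wide_type, prefix + library_id]
    return f"SETNAME,XTYPE=({xtype},CA),NAMES=({','.join(names)})"


# One entry per unique XTYPE from the DEVICE statements: (XTYPE, LIBRARY-ID, device type).
xtypes = [
    ("LB13592J", "F4001", "3592-J1A"),
    ("LB13592K", "F4001", "3592-E05"),
    ("LB23592L", "F4006", "3592-E05"),
]
for entry in xtypes:
    print(setname(*entry))
```

The loop prints the three statements shown in Figure D-5, one for each unique XTYPE.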

HWSNAME statement needed for this configuration

The HWSNAME definitions are the most difficult part of the setup, so each statement in Figure D-6 is explained. If you are not experienced with JES3, read the explanation carefully.

|

HWSNAME,TYPE=(LDGW3495,LDGF4001,LDGF4006,LDG359J,LDG359K,LDJF4001,LDKF4001,LDKF4006)   (1)

HWSNAME,TYPE=(LDGF4001,LDJF4001,LDKF4001,LDG359J)   (2)

HWSNAME,TYPE=(LDGF4006,LDKF4006)   (3)

HWSNAME,TYPE=(LDJF4001,LDG359J)   (4)

HWSNAME,TYPE=(LDG359J,LDJF4001)   (5)

HWSNAME,TYPE=(LDG359K,LDKF4001,LDGF4006,LDKF4006)   (6)

|

Figure D-6 HWSNAME definition sample

The numbers correspond to the numbers in Figure D-6:

1. All LDG definitions are a subset of the complex-wide name.

2. LDG359J is a subset of library F4001 (LDGF4001) because the other library only has 3592-E05s installed.

3. All 3592-E05s in library F4006 (LDKF4006) are a subset of library F4006. LDG359K is not specified, because 3592-E05s are also installed in the other library.

4. All 3592-J1As (LDG359J) are a subset of the 3592-J1A in library F4001 because no other 3592-J1As are installed.

5. All 3592-J1As in library F4001 (LDJF4001) are a subset of 3592-J1A because no other 3592-J1As are installed.

6. All 3592-E05s in library F4001 (LDKF4001) are a subset of 3592-E05. LDGF4006 (the entire library with the ID F4006) is a subset of 3592-E05, because only 3592-E05s are installed in this library.
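The HWSNAME rules amount to this: for each LDG name, list every other LDG name whose devices form a subset of its devices. The numbered explanation of Figure D-6 describes the statements in the same way. The following Python sketch derives the statements under that assumption from a hypothetical inventory dictionary.

For the first example, the sketch produces the same name sets as Figure D-6. Statements 2 and 6 list their alternates in a different order. The sketch also produces one additional statement, HWSNAME,TYPE=(LDKF4006,LDGF4006). It follows from the rule for library-specific device type names in a library that contains only one device type. Compare the output with the rules for your own configuration before using it.

```python
from __future__ import annotations

# Complex-wide device type name and library-specific prefix (Tables D-1, D-2).
DEVICE_TYPES = {"3592-J1A": ("LDG359J", "LDJ"), "3592-E05": ("LDG359K", "LDK")}
COMPLEX_WIDE = "LDGW3495"


def ldg_members(inventory: dict[str, list[str]]) -> dict[str, set[tuple[str, str]]]:
    """Map each LDG name to the (LIBRARY-ID, device type) strings it covers."""
    members: dict[str, set[tuple[str, str]]] = {COMPLEX_WIDE: set()}
    for library_id, device_types in inventory.items():
        for device_type in device_types:
            complex_wide_type, prefix = DEVICE_TYPES[device_type]
            unit = (library_id, device_type)
            for name in (COMPLEX_WIDE, "LDG" + library_id,
                         complex_wide_type, prefix + library_id):
                members.setdefault(name, set()).add(unit)
    return members


def rank(name: str) -> int:
    """Order: complex-wide, library, complex-wide device type, library device type."""
    if name == COMPLEX_WIDE:
        return 0
    if name in {t for t, _ in DEVICE_TYPES.values()}:
        return 2
    return 1 if name.startswith("LDG") else 3


def hwsname_statements(inventory: dict[str, list[str]]) -> list[str]:
    members = ldg_members(inventory)
    names = sorted(members, key=lambda n: (rank(n), n))
    statements = []
    for group in names:
        # An alternate is any other name whose devices are a subset of the group's.
        alternates = [n for n in names if n != group and members[n] <= members[group]]
        if alternates:
            statements.append("HWSNAME,TYPE=(" + ",".join([group] + alternates) + ")")
    return statements


first_example = {"F4001": ["3592-J1A", "3592-E05"], "F4006": ["3592-E05"]}
for statement in hwsname_statements(first_example):
    print(statement)
```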

Example with three Tape Libraries

Figure D-7 shows a JES3 configuration with three TS3500 Tape Libraries attached to it. Library 1 has a LIBRARY-ID of F4001. It has a mix of 3592-J1A and 3592-E05 drives that are not encryption-enabled. It also has one TS7700 Virtualization Engine cluster of a multicluster TS7700 Virtualization Engine grid (distributed library) installed. The multicluster TS7700 Virtualization Engine grid has a composite library LIBRARY-ID of 47110.

Library 2 has a LIBRARY-ID of F4006 and a mix of encryption-enabled and non-encryption-enabled 3592-E05 drives installed, which is also the reason why you might need to split a string of 3592-E05 drives. Library 2 is also the second distributed library for the multicluster grid with composite library LIBRARY-ID 47110.

Library 3 has a LIBRARY-ID of 22051 and only a TS7700 Virtualization Engine installed with a composite library LIBRARY-ID of 13001.

Figure D-7 does not show the actual configuration for the TS7700 Virtualization Engine configurations regarding the numbers of frames, controllers, and the back-end drives. Only the drives and frames actually needed for the host definitions are displayed.

Figure D-7 Second JES3 configuration example

LDG definitions needed for the second configuration example

Table D-4 shows all the LDG definitions needed in the hardware configuration definition of the second configuration example.

Table D-4 LDG definitions for the second configuration example

| LDG definition | Value for LDG | Explanation |
|---|---|---|
| Complex-wide name | LDGW3495 | Standard name, which appears once. |
| Library-specific name | LDGF4001, LDGF4006, LDG13001, LDG47110 | One definition for each library and for each stand-alone cluster TS7700 Virtualization Engine grid. For a single cluster or multiple cluster TS7700 Virtualization Engine grid, only the composite library LIBRARY-ID is specified. |
| Complex-wide device type | LDG3490E, LDG359J, LDG359K, LDG359L, LDG359M | One definition for each installed device type: LDG3490E represents the virtual devices in the TS7700 Virtualization Engine grids; LDG359J represents the 3592-J1A; LDG359K represents the 3592-E05; LDG359L represents the 3592-E05 with encryption; LDG359M represents the 3592-E06 |
| Library-specific device type | LDE13001, LDE47110, LDJF4001, LDKF4001, LDLF4006, LDMF4006 | One definition for each device type in each library, except for the multicluster TS7700 Virtualization Engine grid, which has a single definition: LDE13001 represents the virtual drives in the stand-alone cluster TS7700 Virtualization Engine grid in library 22051; LDE47110 represents the virtual drives in the multicluster TS7700 Virtualization Engine grid in libraries F4001 and F4006; LDJF4001 represents the 3592-J1A in library F4001; LDKF4001 represents the 3592-E05 in library F4001; LDLF4006 represents the encryption-enabled 3592-E05 in library F4006; LDMF4006 represents the 3592-E06 in library F4006 |

DEVICE statements needed

Figure D-8 shows all of the DEVICE statements for the second configuration example. The comment statements identify the library to which the devices belong.

|

*/ Devices 3592-J1A and 3592-E05 in Library F4001 .............................../*

DEVICE,XTYPE=(LB13592J,CA),XUNIT=(1100,*ALL,,OFF),numdev=8

DEVICE,XTYPE=(LB13592K,CA),XUNIT=(1108,*ALL,,OFF),numdev=8

*/ Devices 3592-E06 and 3592-E05 in Library F4006................................/*

DEVICE,XTYPE=(LB2359M,CA),XUNIT=(4000,*ALL,,OFF),numdev=8

DEVICE,XTYPE=(LB2359L,CA),XUNIT=(2000,*ALL,,OFF),numdev=8

*/ Devices Stand-alone Cluster TS7700 Grid in library 22051 ........................../*

DEVICE,XTYPE=(LB3GRD1,CA),XUNIT=(3000,*ALL,,OFF),numdev=256

*/ Devices Multi Cluster TS7700 grid in libraries F4001 and F4006................/*

ADDRSORT=NO

DEVICE,XTYPE=(LB12GRD,CA),XUNIT=(0110,*ALL,S3,OFF)

DEVICE,XTYPE=(LB12GRD,CA),XUNIT=(0120,*ALL,S3,OFF)

DEVICE,XTYPE=(LB12GRD,CA),XUNIT=(0130,*ALL,S3,OFF)

DEVICE,XTYPE=(LB12GRD,CA),XUNIT=(0140,*ALL,S3,OFF)

DEVICE,XTYPE=(LB12GRD,CA),XUNIT=(0111,*ALL,S3,OFF)

DEVICE,XTYPE=(LB12GRD,CA),XUNIT=(0121,*ALL,S3,OFF)

DEVICE,XTYPE=(LB12GRD,CA),XUNIT=(0131,*ALL,S3,OFF)

DEVICE,XTYPE=(LB12GRD,CA),XUNIT=(0141,*ALL,S3,OFF)

;;;;;;;

DEVICE,XTYPE=(LB12GRD,CA),XUNIT=(01FF,*ALL,S3,OFF)

|

Figure D-8 DEVICE statements for the second configuration example

|

Consideration: If you code NUMDEV in a peer-to-peer (PTP) virtual tape server (VTS) environment, the workload balancing from the CX1 controllers does not work. Therefore, you must specify each device as a single statement and specify ADDRSORT=NO to prevent JES3 from sorting them.

The same restriction applies to the virtual devices of the clusters of a multicluster grid configuration. If you want to balance the workload across the virtual devices of all clusters, do not code the NUMDEV parameter.

|
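Coding the grid devices one per statement makes the DEVICE list long. Figure D-8 lists them interleaved across address groups (0110, 0120, 0130, 0140, 0111, and so on). The following Python sketch generates statements in that order. The function, the group base addresses, and the number of devices per group are illustrative assumptions. The third XUNIT value, S3, is copied from Figure D-8. ADDRSORT=NO must still be coded as shown in the figure so that JES3 does not sort the devices.

```python
from __future__ import annotations


def interleaved_devices(xtype: str, group_bases: list[int], per_group: int,
                        xunit_field3: str = "S3") -> list[str]:
    """Emit one DEVICE statement per device (no NUMDEV).

    Takes one address from each group in turn. The group layout is a
    hypothetical input; xunit_field3 is copied verbatim from Figure D-8.
    """
    statements = []
    for offset in range(per_group):
        for base in group_bases:
            statements.append(
                f"DEVICE,XTYPE=({xtype},CA),"
                f"XUNIT=({base + offset:04X},*ALL,{xunit_field3},OFF)"
            )
    return statements


for line in interleaved_devices("LB12GRD", [0x0110, 0x0120, 0x0130, 0x0140], 2):
    print(line)
```

With these inputs, the eight statements appear in the same order as the first eight grid devices in Figure D-8.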

SETNAME statements needed

Figure D-9 includes all the necessary SETNAME statements for the second configuration example.

|

SETNAME,XTYPE=LB13592J,NAMES=(LDGW3495,LDGF4001,LDG359J,LDJF4001)

SETNAME,XTYPE=LB13592K,NAMES=(LDGW3495,LDGF4001,LDG359K,LDKF4001)

SETNAME,XTYPE=LB2359L,NAMES=(LDGW3495,LDGF4006,LDG359L,LDLF4006)

SETNAME,XTYPE=LB2359M,NAMES=(LDGW3495,LDGF4006,LDG359M,LDMF4006)

SETNAME,XTYPE=LB3GRD1,NAMES=(LDGW3495,LDG13001,LDG3490E,LDE13001)

SETNAME,XTYPE=LB12GRD,NAMES=(LDGW3495,LDG47110,LDG3490E,LDE47110)

|

Figure D-9 SETNAME statement values for the second example

High watermark setup name statements

Figure D-10 shows the high watermark setup name statements for the second configuration example.

|

HWSNAME,TYPE=(LDGW3495,LDGF4001,LDGF4006,LDG13001,LDG47110,LDG3490E,
LDG359J,LDG359K,LDG359L,LDG359M,LDE13001,LDE47110,LDJF4001,LDKF4001,LDLF4006,LDMF4006)

HWSNAME,TYPE=(LDGF4001,LDJF4001,LDKF4001)

HWSNAME,TYPE=(LDGF4006,LDLF4006,LDMF4006)

HWSNAME,TYPE=(LDG47110,LDE47110)

HWSNAME,TYPE=(LDG13001,LDE13001)

HWSNAME,TYPE=(LDG3490E,LDE47110,LDE13001)

HWSNAME,TYPE=(LDG359J,LDJF4001)

HWSNAME,TYPE=(LDG359K,LDKF4001)

HWSNAME,TYPE=(LDG359L,LDLF4006)

HWSNAME,TYPE=(LDG359M,LDMF4006)

|

Figure D-10 High watermark setup statements for the second example

Processing changes

Although no JCL changes are required, a few processing restrictions and limitations are associated with using the TS3500 Tape Library in a JES3 environment:

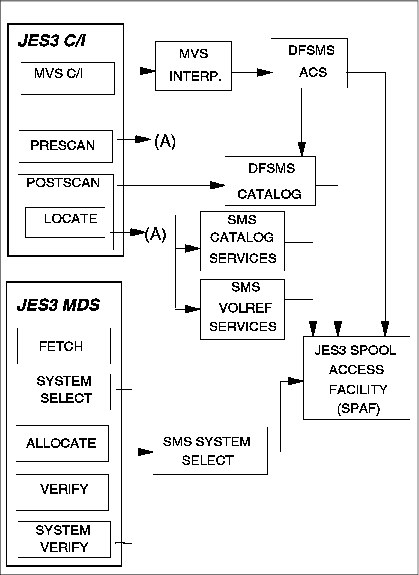

•JES3 spool access facility (SPAF) calls are not used.

•Two calls are made to the new DFSMS/MVS support module, one from the prescan phase and one from the locate processing phase, as shown in Figure D-11.

•The main device scheduler (MDS) processing phases system select and system verify are not performed for tape data sets.

•The MDS verify phase is bypassed for TS3500 Tape Library mounts, and mount processing is deferred until job execution.

Figure D-11 shows the JES3 processing phases for C/I and MDS. The processing phases include the support for system-managed direct access storage device (DASD) data sets.

The following major differences exist between TS3500 Tape Library deferred mounting and tape mounts for non-library drives:

•For non-library drives, JES3 issues mounts only for the first use of a drive. Later mounts on the same unit for the job are issued by z/OS. All mounts for TS3500 Tape Library drives are issued by z/OS.

•If all mounts within a job are deferred because there are no non-library tape mounts, that job is not included in the setup depth parameter (SDEPTH).

•MDS mount messages are suppressed for the TS3500 Tape Library.

Figure D-11 JES3 C/I and MDS processing phases

JES3/DFSMS processing

DFSMS is called by the z/OS interpreter to perform these functions:

•Update the scheduler work area (SWA) for DFSMS tape requests

•Call automatic class selection (ACS) exits for construct defaults

DFSMS/MVS system-managed tape devices are not selected by using the UNIT parameter in the JCL. For each data definition (DD) request requiring a TS3500 Tape Library unit, a list of device pool names is passed, and from that list, an LDG name is assigned to the DD request. This LDG name is then passed to JES3 MDS for that request. Device pool names are never known externally.

Selecting UNITNAMEs

For a DD request, the LDG selection is based on the following conditions:

•When all devices in the complex are eligible to satisfy the request, the complex-wide LDGW3495 name is used.

•When the list of names contains the names of all devices of one device type in the complex, the corresponding complex-wide device type name (for example, LDG3490E) is used.

•When the list of names contains all subsystems in one TS3500 Tape Library, the library-specific LDG name (in the examples, LDGF4001, LDGF4006, and so on) is used.

•When the list contains only subsystems for a specific device type within one TS3500 Tape Library, the library-specific device type name (in the examples, LDKF4001, and so on) is used.
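The four conditions form an ordered decision. The following Python sketch applies them in the order listed, using a hypothetical inventory. It models the eligible device list as a set of (LIBRARY-ID, device type) pairs rather than as DFSMS device pool names, which are never known externally. It returns None when no rule applies, because this section does not describe that case.

```python
from __future__ import annotations

# Complex-wide device type name and library-specific prefix (Tables D-1, D-2).
DEVICE_TYPES = {"3592-J1A": ("LDG359J", "LDJ"), "3592-E05": ("LDG359K", "LDK")}


def select_ldg(inventory: dict[str, list[str]],
               eligible: set[tuple[str, str]]) -> str | None:
    """Pick an LDG name for one DD request by testing the four rules in order."""
    every = {(lib, dt) for lib, dts in inventory.items() for dt in dts}
    device_types = {dt for _, dt in eligible}
    libraries = {lib for lib, _ in eligible}
    # Rule 1: every device in the complex is eligible.
    if eligible == every:
        return "LDGW3495"
    # Rule 2: all devices of one device type in the complex.
    if len(device_types) == 1:
        (dt,) = device_types
        if eligible == {unit for unit in every if unit[1] == dt}:
            return DEVICE_TYPES[dt][0]
    # Rules 3 and 4: devices within one library.
    if len(libraries) == 1:
        (lib,) = libraries
        if eligible == {unit for unit in every if unit[0] == lib}:
            return "LDG" + lib
        if len(device_types) == 1:
            (dt,) = device_types
            return DEVICE_TYPES[dt][1] + lib
    return None  # not covered by the rules in this section


inventory = {"F4001": ["3592-J1A", "3592-E05"], "F4006": ["3592-E05"]}
print(select_ldg(inventory, {("F4001", "3592-E05")}))  # LDKF4001
print(select_ldg(inventory, {("F4006", "3592-E05")}))  # LDGF4006
```

In the second call, library F4006 contains only 3592-E05 drives, so the library-specific name satisfies the third rule before the fourth rule is tested.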

New or modified data sets

For new data sets, ACS directs the allocation by providing Storage Group, Storage Class, and Data Class. When the Storage Group specified by ACS is defined in the active DFSMS configuration as a tape Storage Group, the request is allocated to a TS3500 Tape Library tape drive.

DFSMS-managed DISP=MOD data sets are assumed to be new during locate processing. If a catalog locate determines from the specified VOLSER that the data set is old, a new LDG name is determined based on the rules for old data sets.

Old data sets

Old data set allocations are directed to a specific TS3500 Tape Library when the volumes containing the data set are located within that TS3500 Tape Library. For old data sets, the list is restricted to the TS3500 Tape Library that contains the volumes.

DFSMS catalog processing

JES3 catalog processing determines all of the catalogs required by a job and divides them into two categories:

•DFSMS-managed user catalogs

•JES3-managed user catalogs

DFSMS catalog services, a subsystem interface call to catalog locate processing, is used for normal locate requests and is invoked during locate processing. It invokes SVC 26 for all existing data sets when DFSMS is active. Locates are required for all existing data sets to determine whether they are DFSMS-managed, even if VOL=SER= is present in the DD statement. If the request is for an old data set, catalog services determines whether it is for a library volume. For system-managed multivolume requests, a check is made to determine whether all volumes are in the same library.

DFSMS VOLREF processing

DFSMS VOLREF services are invoked during locate processing for each DD statement that contains a VOL=REF= volume reference to a cataloged data set. DFSMS VOLREF services determine whether the data set referenced by the VOL=REF= parameter is DFSMS-managed. Note that VOL=REF= now maps to the same Storage Group for a DFSMS-managed data set, but not necessarily to the same volume. DFSMS VOLREF services also collect information about the job’s resource requirements.

The TS3500 Tape Library support performs the following functions:

•Identifies the DDs that are TS3500 Tape Library-managed mountable entries

•Obtains the associated device pool names list

•Selects the LDG that best matches the names list

•Provides the LDG name to JES3 for setup

•Indicates to JES3 that the mount is deferred until execution

Fetch messages

Because TS3500 Tape Library cartridges are mounted and demounted by the library, fetch messages to an operator are unnecessary and can be confusing. With this support, all fetch messages (IAT5110) for TS3500 Tape Library requests are changed to the non-action informational USES form of the message. These messages are routed, based on the UNITNAME, to the same console destination as other USES fetch messages.

JES3 allocation and mounting

JES3 MDS controls the fetching, allocation, and mounting of the tape volumes requested in the JCL for each job to be executed on a processor. The scope of MDS tape device support is complex-wide, unlike z/OS job resource allocation, whose scope is limited to one processor. Another difference between JES3 MDS allocation and z/OS allocation is that MDS considers the resource requirements for all the steps in a job for all processors in a loosely coupled complex. z/OS allocation considers job resource requirements one step at a time in the executing processor.

MDS processing also determines which processors are eligible to execute a job based on resource availability and connectivity in the complex.

z/OS allocation interfaces with JES3 MDS during step allocation and dynamic allocation to get the JES3 device allocation information and to inform MDS of resource deallocations. z/OS allocation is enhanced by reducing the allocation path for mountable volumes. JES3 supplies the device address for the TS3500 Tape Library allocation request through a subsystem interface (SSI) request to JES3 during step initiation when the job is executing under the initiator. This support is not changed from previous releases.

DFSMS/MVS and z/OS provide all of the TS3500 Tape Library support except for the interfaces to JES3 for MDS allocation and processor selection.

JES3 MDS continues to select tape units for the TS3500 Tape Library. MDS no longer uses the UNIT parameter for allocation of tape requests for TS3500 Tape Library requests. DFSMS/MVS determines the appropriate LDG name for the JES3 setup from the Storage Group and Data Class assigned to the data set, and replaces the UNITNAME from the JCL with that LDG name. Because this action is done after the ACS routine, the JCL-specified UNITNAME is available to the ACS routine. This capability is used to disallow JCL-specified LDG names. If LDG names are permitted in the JCL, the associated data sets must be in a DFSMS tape environment. Otherwise, the allocation fails, because an LDG name restricts allocation to TS3500 Tape Library drives that can be used only for system-managed volumes.

|

Restriction: An LDG name specified as a UNITNAME in JCL can be used only to filter requests within the ACS routine. Because DFSMS/MVS replaces the externally specified UNITNAME, it cannot be used to direct allocation to a specific library or library device type.

|

All components within z/OS and DFSMS/MVS that request tape mounting and demounting inside a TS3500 Tape Library call a Data Facility Product (DFP) service, library automation communication services (LACS). They do this instead of issuing a write to operator (WTO) message as z/OS allocation does. As a result, all mounts are deferred until job execution, when LACS is called.

MDS allocates an available drive from the available unit addresses for LDGW3495. It passes that device address to z/OS allocation through the JES3 allocation SSI. At data set OPEN time, LACS is used to mount and verify a scratch tape. When the job finishes with the tape, either CLOSE or deallocation issues a demount request through LACS, which removes the tape from the drive. MDS does normal breakdown processing and does not need to communicate with the TS7700.

Multicluster grid considerations

With JES3, the scratch allocation assistance (SAA) and device allocation assistance (DAA) functions are not applicable because JES3 handles tape device allocation itself and does not use standard MVS allocation algorithms. In a multicluster grid configuration, careful planning of the Copy Consistency Points and the Copy Override settings can help avoid unnecessary copies in the grid and unnecessary traffic on the grid links.

Consider the following aspects, especially if you are using a multicluster grid with more than two clusters and not all clusters contain copies of all logical volumes:

•Retain Copy mode setting

If you do not copy logical volumes to all of the clusters in the grid, JES3 might, for a specific mount, select a drive that does not have a copy of the logical volume. If Retain Copy mode is not enabled on the mounting cluster, an unnecessary copy might be forced according to the Copy Consistency Points that are defined for this cluster in the Management Class.

•Copy Consistency Point

Copy Consistency Point has one of the largest influences on which cluster’s cache is used for a mount. The Copy Consistency Point of Rewind Unload (R) takes precedence over a Copy Consistency Point of Deferred (D). For example, assuming that each cluster has a consistent copy of the data, if a virtual device on Cluster 0 is selected for a mount and the Copy Consistency Point is [R,D], the CL0 cache will be selected for the mount. However, if the Copy Consistency Point is [D,R], CL1’s cache will be selected.

For workload balancing, consider specifying [D,D] rather than [R,D]. This setting distributes the workload more evenly across both clusters in the grid.

If the Copy Consistency Points for a cluster are [D,D], other factors are used to determine which cluster’s cache to use. The “Prefer local cache for fast ready mount requests” and “Prefer local cache for non-fast ready mounts” overrides will cause the cluster that received the mount request to be the cache used to access the volume.

•Cluster families

If there are more than two clusters in the grid, consider defining cluster families. Especially in multi-site configurations with larger distances between the sites, defining one cluster family per site can help reduce the grid link traffic between both sites.

•Copy Override settings

Remember that all Copy Override settings, such as “Prefer local cache for fast ready mount requests” and “Prefer local cache for non-fast ready mounts”, always apply to the entire cluster. The Copy Consistency Points defined in the Management Class, by contrast, can differ and can be tailored according to workload requirements.
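The Copy Consistency Point precedence described in the list above can be summarized as a toy model. The following Python sketch is not the TS7700 cache selection algorithm, which weighs more factors than this section describes. The model makes these assumptions:

•Every cluster has a consistent copy of the volume.

•Only the precedence of R over D is modeled.

•Both "prefer local cache" overrides are treated as a single hypothetical flag.

•When the mounting cluster is not an R cluster, the lowest-numbered R cluster is chosen. This is an explicit assumption.

```python
from __future__ import annotations


def cache_for_mount(ccp: list[str], mount_cluster: int, prefer_local: bool) -> int | None:
    """Toy model of the precedence described above; not the TS7700 algorithm.

    ccp: Copy Consistency Point per cluster, "R" (Rewind Unload) or
         "D" (Deferred), from the Management Class.
    Returns the cluster whose cache is used, or None when factors that are
    not modeled here would decide.
    """
    rewind_unload = [i for i, point in enumerate(ccp) if point == "R"]
    if mount_cluster in rewind_unload:
        return mount_cluster
    if rewind_unload:
        return rewind_unload[0]  # assumption: lowest-numbered R cluster
    # All clusters are D: only the "prefer local cache" overrides are modeled.
    return mount_cluster if prefer_local else None


print(cache_for_mount(["R", "D"], mount_cluster=0, prefer_local=False))  # 0
print(cache_for_mount(["D", "R"], mount_cluster=0, prefer_local=False))  # 1
print(cache_for_mount(["D", "D"], mount_cluster=1, prefer_local=True))   # 1
```

The first two calls reproduce the [R,D] and [D,R] examples for a mount on Cluster 0.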

You can find detailed information about these settings and other workload considerations in Chapter 5, “Hardware implementation” and Chapter 10, “Performance and monitoring”.
