Student feedback in engineering: a discipline-specific overview and background

Abstract:

This chapter reviews the need to improve key learning outcomes of engineering education, among them conceptual understanding, solving real problems in context, and enabling skills for engineering such as communication and teamwork. At the same time it is necessary to improve both the attractiveness of engineering to prospective students and retention in engineering programmes. Research suggests that to address these problems the full student learning experience needs to better affirm students’ identity formation. Student feedback is identified as a key source of intelligence to inform curriculum and course development. An argument is made for clarifying the purpose of any student feedback system, as there is an inherent tension between utilising it for accountability or for enhancement. An example shows how enhancement is best supported by a rich qualitative investigation of how the learning experience is perceived by the learner. Further, a tension between student satisfaction and quality learning is identified, suggesting that to usefully inform improvement, feedback must always be interpreted using theory on teaching and learning. Finally, a few examples are provided to show various ways to collect, interpret and use student feedback.

Introduction

The aim of this book is to provide inspiration for the enhancement of engineering education using student feedback as a means. It is important to recognise that enhancement is a value-laden term, and the course we set must balance the legitimate claims of all stakeholders, among them students, society, employers and faculty. As external stakeholders, society and employers are mainly interested in the outcomes of engineering education, such as the competences and characteristics of graduates, the supply of graduates and the cost-effectiveness of education. As internal stakeholders, students and faculty share an additional interest in the teaching and learning processes. Here, student feedback will be discussed as a source of information which can be productively used to improve engineering education, both its outcomes and its processes, in the interest of all stakeholders. As will be shown, the focus on enhancement has far-reaching implications for shaping an approach to collecting, interpreting and utilising student feedback: the keyword is usefulness to inform improvement.

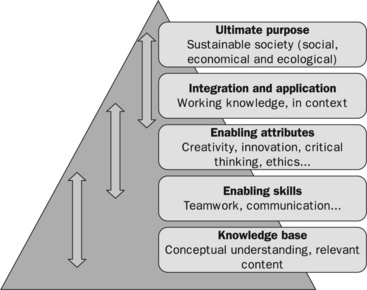

Improving the outcomes of engineering education

The desired outcomes of engineering education are shown in Figure 1.1, categorised into several layers. Each of these aspects can be the focus for improvement, and thus the underlying rationale for engineering education enhancement. The layers are nested in the sense that quality at any level depends on the levels below it, and the interrelations between levels are crucial. An intervention to improve any one of the aspects therefore needs to be seen in this full context. Ultimately, all outcomes of engineering education should be discussed in relation to the highest aim, which is to produce graduate engineers capable of purposeful professional practice in society.

The target for improvement can range from a detail, such as students’ conceptual understanding of a single concept in the subject, to a much more complex outcome such as their overall ability to contribute to a sustainable society. Nevertheless, whatever aspect one wishes to enhance, it is always within the context of the full curriculum, and singling out one aspect and addressing it with an isolated intervention will therefore have only a limited impact. For instance, if the aim is to develop graduates who are more innovative engineers, it is not enough to insert a single ‘innovation learning activity’, such as asking the students to brainstorm 50 ways to use a brick. This is indeed an enjoyable activity, but as a bolt-on intervention it will have a limited impact and fail to truly foster innovative engineers. A successful endeavour must address relevant aspects of the whole curriculum: selection of content, required conceptual understanding, integration and application of knowledge, and the enabling skills and attributes needed for innovation. At the same time, innovation must be seen in a purposeful context: innovation for what and for whom?

Improving problem-solving skills

The rhetoric in engineering education is that engineers are problem solvers, and therefore much effort in education is devoted to developing students’ proficiency in problem solving. In lectures, tutorials and textbooks, students encounter numerous problems, and they quickly develop the habit of plugging numbers into equations and arriving at a correct answer, remarkably often a neat expression like π/2. However, it is not unusual to discover that a significant proportion of students, even many of those who have successfully passed an exam, display poor understanding whenever they are required to do anything outside reproducing known manipulations to known types of problems.

Educators are often surprised and disappointed by this, because the intention was that students should be able to explain matters in their own words, interpret results, integrate knowledge from different courses and apply it to new problems. In short, an important outcome of engineering education is that students acquire the conceptual understanding necessary for problem solving.

But if problem-solving skills are important outcomes of engineering education, it is necessary to widen the understanding of what should constitute the problems. While students indeed encounter many problems in courses, an overwhelming majority are pure and clear-cut, with one right answer: they are textbook problems. In fact, they have been artificially created by teachers to illustrate a single aspect of theory in a course. Thus, much of students’ knowledge can be accessed only when the problems look very similar to those in the textbook or exam: what they have learned often seems inert in relation to real life. This is because real life consists mainly of situations that are markedly different from those students are drilled to handle. Real-life problems can be complex and ill-defined and contain contradictions. Interpretations, estimations and approximations are necessary, and ‘one right answer’ cases are therefore exceptions. Solving real problems often requires a systems view. In the typical engineering curriculum, students seldom practise identifying and formulating problems themselves, and they rarely practise and test their own judgement. Students are simply not comfortable translating between physical reality and models, or understanding the implications of manipulations. As engineering is fundamentally based on this relationship, it certainly seems as if engineering educators have some problems to solve in engineering education.

Real problems also have the troublesome character that they do not fit the structure of engineering programmes. Real problems cannot easily be fitted into any one subject because they do not have the courtesy to respect (the socially constructed) disciplinary boundaries. As both modules and faculty are organised into disciplinary silos, at least in the research-intensive universities, real problems seem to fall outside everyone’s responsibility, and the consequence is that students very rarely meet any problem that cuts across disciplines. To make matters worse, real problems are not only cross-disciplinary within engineering: because they are often rich in contextual factors, such as user needs and societal, environmental and business aspects, they also cross over into subjects outside engineering. While many students’ first response is to define away all factors that are not purely technical and then give the remainder of the problem a purely technical solution, such a solution is probably not adequate to address the original problem. Engineers also need to be able to address problems that have a real context.

If this list of shortcomings seems overwhelming, the good news is that the situation certainly can be improved, as the fault lies primarily in how engineering is taught and, not least, in what is assessed and how, because ‘what we assess is what we get’. When exams constantly reward students for merely reproducing known solutions to recurring standard problems, this is what engineering education is reduced to. Enabling students to achieve better and more worthwhile learning outcomes is necessary, and rethinking the design of programmes and courses makes it possible.

Improving the enabling skills and attributes for engineering

While conceptual understanding and individual problem-solving skills are necessary outcomes of engineering education, they are not sufficient. As engineers, graduates must also be able to apply their understanding and problem-solving skills in a professional context. Because the aim is to prepare students for engineering, education should be better aligned with the actual modes of professional practice (Crawley et al., 2007). This means that students need to develop the enabling skills for engineering, such as communication and teamwork, and attributes such as creative and critical thinking.

It is important to think of these skills and attributes not as ‘soft’ additions to the otherwise ‘hard core’ technical knowledge of engineering, but as engineering skills and legitimate outcomes of engineering education. For instance, the ability to communicate in engineering can be expressed in many different ways. Some indicators that students have acquired communication skills are that they can:

use the technical concepts comfortably;

bring up what is relevant to the situation;

argue for or against concepts and solutions;

develop ideas through discussion;

present ideas, arguments and solutions clearly in speech, in writing, in sketches and other graphic representations.

When observing these kinds of learning outcomes in students, it should be impossible to distinguish the communication skills from their expression of technical knowledge, as these are integrated. In short, students’ technical understanding is transformed by the enabling skill into working knowledge (Barrie, 2004). There is nothing ‘soft’ or easy about engineering communication skills. In fact, they should be regarded as a whole range of necessary engineering competences embedded in, and inseparable from, students’ application of technical knowledge. The same kind of reasoning can be applied to other enabling skills such as teamwork, ethics and critical thinking. A consequence is that these competences should be learned and assessed in the technical context, supported by engineering faculty; they cannot be taught in separate classes by someone else. A synergy effect is that the learning activities in which students practise communication in the subject will simultaneously help to reinforce their technical understanding. Thus, developing these enabling skills and attributes is fundamentally about students becoming engineers.

Understanding and improving the full student learning experience

Improving the outcomes of engineering education and the effectiveness of its teaching and learning processes could be characterised as doing what educators already set out to do, only better. That does not imply that the task is easy or trivial: it is an ambitious undertaking, and one well worth the effort. Investigations of teaching and learning processes will help identify discrepancies between educational intentions and what actually happens; such gaps indicate room for improvement. How the learning experience is perceived by the learner is probably the best source of intelligence on how education can be improved, and it is therefore crucial to seek and interpret student feedback on their concrete experiences. Student feedback will help inform the development of teaching and learning so that it contributes more effectively to the intended educational gains. As will be demonstrated later in this chapter, this is not at all the same as giving students what they want: their feedback must always be interpreted.

However, improving engineering education within the framework of present thinking is not enough. The effectiveness of engineering education in meeting society’s need for engineering graduates is seriously reduced by two problems. The first problem is the weak recruitment of students to engineering programmes. At least in the industrialised world, engineering education faces considerable problems with attractiveness to prospective students, both in general and, particularly, in relation to gender. Engineering, as an education and career, is perceived not to accommodate personal development or being passionate about one’s work. The ROSE study (Schreiner and Sjøberg, 2007) shows that while secondary school students rate it highly important to work with ‘something I find important and meaningful’ (females more than males) and definitely agree that science and technology are ‘important for society’, they give very low ratings to ‘I would like to get a job in technology’ (females less than males). In less developed countries the picture is somewhat different, as young people there associate engineering to a greater extent with growth and building the country. There is increasing evidence that the problem lies in the experience of being an engineering student in relation to students’ personal identity formation. Interviews with 134 students (Holmegaard et al., 2010) showed that students who choose engineering are interested in doing engineering themselves: solving real problems in an innovative and creative atmosphere. What they often encounter when starting engineering education is not what they sought, but courses focused on textbook examples in disciplinary silos, with very little project-based, cross-disciplinary, real-world innovative work. Holmegaard et al. (2010) put it bluntly:

The conclusion is that engineering to a large extent matches the expectations of those who do not choose engineering.

In fact, engineering programmes can be built so strictly from the bottom up that it literally takes years to reach the courses which could remind students why they wanted to study engineering in the first place. By that time, many of them will have left the programme.

The second problem is the high drop-out rate in engineering programmes. This is often believed to be inevitable, even desirable: a weeding-out process in which the less able students drop out while the more able persist. In fact, there is not much difference between those who leave and those who stay, either in interest in the subject or in their ability to do well in the courses. The students who persist report having the same problems and disappointments with the curriculum that made their friends leave, and thus the students who leave are only the visible tip of the iceberg (Seymour and Hewitt, 1997). Recent research (Ulriksen et al., 2010) shows that a predictor of persistence is a sense of belonging: the degree to which students’ identity formation finds a match between who they are, or want to be, and the educational and social experience in the programme. It is as if students have a sensor constantly probing ‘Is this me?’ For prospective students, if the answer is ‘no’, they will probably not consider engineering education. If they choose engineering and the answer turns into a ‘no’ during the programme, they are at risk of leaving.

The two problems, lack of attractiveness and low retention, are two sides of the same coin: they constitute the external and internal symptoms of the same identity mismatch. In order to attract students to engineering education, and to retain them until they have successfully completed their degrees, the full learning experience in engineering curricula must support student learning and personal development by providing a meaningful and motivating context. It is hard to see a way to meaningfully tackle this task without the use of student feedback. Because there is a need to understand the relationship between student identity work and the design of engineering programmes, courses and learning activities, student feedback is the best available source for truly understanding how to educate engineers in a way that is aligned with student motivation. It is necessary to adopt an open, sensitive and listening mindset, something of an ethnographic approach to studying and understanding the student life world. Again, this is not the same as giving students exactly what they say they want. To address the double-sided problem of attractiveness and persistence, educators must better understand how the full educational experience can match students’ identity work, so that the reading on ‘Is this me?’ can become and remain positive. It is therefore not enough to consider how to improve the outcomes of education; the task has to be approached in a way that simultaneously, and even more fundamentally, addresses the student learning experience. ‘More of the same’ will not tackle the problem: rethinking and action are needed, in an iterative development process.

The task for educators is to engage students in learning experiences which support them in achieving the intended outcomes, as discussed above, while at the same time making the full learning experience affirm their identity formation. Improving the outcomes of engineering education and improving the full student learning experience should not be seen as two separate tasks because, fortunately, the two issues are related in such a way that they share, to some extent, the same solutions. The overemphasis on reproducing solutions to artificial and de-contextualised problems is just as inadequate in relation to student motivation as it is in relation to their understanding. Students will more easily find meaning, motivation and personal development in learning experiences which result in conceptual understanding, in developing engineering skills and attributes, in working with real problems in context, in aligning education with professional practice, and in a purposeful approach to engineering in society.

Clarifying the purpose of collecting student feedback

The two classic purposes of evaluation are quality assurance and quality enhancement (Biggs and Tang, 2007). Other terms used for the same dichotomy are accountability and improvement (Bowden and Marton, 1998); appraisal and developmental purpose (Kember et al., 2002); and judgemental and developmental purpose (Hounsell, 2003). It is important to decide on the purpose from the outset, for two reasons which will be outlined below. As the title of this book suggests, the focus here is the enhancement of engineering education. Unfortunately, the purpose for which student feedback is being collected is very often never made clear, and this confusion propagates to every issue surrounding student feedback, including how to collect, interpret and use it. Here an attempt will be made to sort out the different possible purposes and their implications, in order to adopt a truly enhancement-led approach.

Accountability or improvement: a fundamental tension

The first reason for settling the purpose is that there is a tension between accountability and improvement: they follow different logics. This can shed some light on why student feedback is often a very sensitive issue. When the purpose is not made clear and allowed to guide every aspect of the system, the confusion can lead to conflict between stakeholders, most often around the methods for collecting and using student feedback. In fact, student feedback is an issue full of power and politics, and different stakeholders manoeuvre around it to further their own positions. The cause of this is a change in the forms of management in the public sector over the last few decades, not only in higher education. Management takes place less through planning processes, rules and regulations, and more through objectives and follow-up (Power, 1999; Dahler-Larsen, 2005). The result is increasing pressure for evaluation and transparency, and ever more, and ever more sophisticated, systems for evaluation.

The pressure to evaluate and collect student feedback seems to concern mostly that it is done. There is considerably less pressure to show any real results from it. But it is necessary to start by clarifying the purpose and how the results will be used:

In principle, evaluation should not be made at all unless those making or requiring the evaluation are sure how they are going to benefit from it. (Kogan, 1990)

Evaluation is often loosely coupled (Weick, 1976) to improvements. It is not unusual that the official discourse around a student feedback system states that the purpose is to improve education, while in reality it is being used to audit teachers or, indeed, only as a ritual intended to create a facade of rationality and accountability (Dahler-Larsen, 2005; Edström, 2008). Evaluation is demanded despite its lack of actual effects, mainly because it seems appropriate, especially when it involves student feedback. The suggestion here is not to do away with evaluation and student feedback, but that evaluation and the collection of student feedback will potentially have a much greater impact on the enhancement of teaching and learning if their purpose is made clear. A purely enhancement-led approach to student feedback can liberate teachers from feeling that they themselves are under scrutiny, and may therefore better lead to improvement.

It may be worth noting here that accountability is also a legitimate purpose. This is not an argument for a system where educators are not held accountable for the quality of their work. Educators should be accountable, but so should evaluators and anyone arguing for evaluation. If the espoused purpose is enhancement, the system must be designed, all the way through, so that it can indeed serve this purpose. One fundamental problem is that it is difficult to bring about enhancement of teaching and learning through an evaluation system which is accountability-led, as it can be perceived as a threat by teachers, who may react by watching their backs and trying to gloss over any problems. It takes a non-threatening, enhancement-led system to liberate teachers and open the way for a genuine focus on improving education. A survey system alone will not improve education (Kember et al., 2002; Edström, 2008), but student feedback can lead to improvement of education if data is purposefully collected, interpreted and analysed, and turned into action plans coupled with resources, support and leadership.

Accountability or improvement – implications for methodology

The second reason why it is important to decide from the beginning whether the purpose is improvement or accountability is that different data collection methods are appropriate depending on which purpose they are supposed to serve. Indeed, it is very common in universities to have heated debates on the technicalities of evaluation methods (for instance, the items in a survey instrument) without even having discussed the purpose of evaluation and how it is to be used. There is often reason to note (Ramsden, 1992) that:

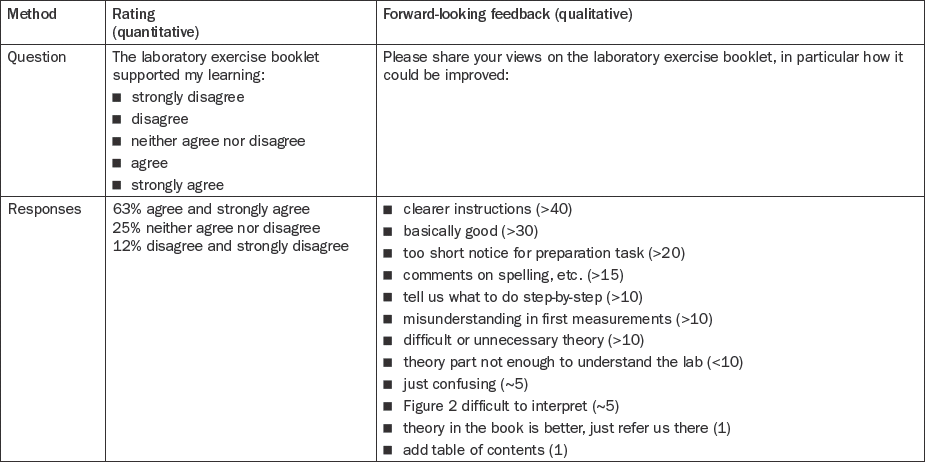

Most survey instruments used for collecting student feedback are based on quantitative ratings (Richardson, 2005) and therefore seem designed with an accountability purpose in mind or, at least, have very limited value for informing improvement. If the purpose is improvement, a qualitative, ‘open’ approach is better suited to investigating student experience of the teaching and learning processes. With richer descriptions, the analysis of what is going on will be better informed, as will the design of interventions. Table 1.1 illustrates two different ways to ask questions, with completely different potential for analysis and action.

Using student feedback to improve engineering education

Do not give the students what they want – give them something better!

So how is it possible to go beyond simply collecting data – and use student feedback to actually improve courses and programmes? Interpretation of student comments, and subsequent course development, must be done with knowledge of the context and theories on student learning. In particular, it is important to note that the purpose of higher education is learning, not student satisfaction. This is not to say that an educator’s job is to keep students unhappy either, but the relation between learning and satisfaction is not straightforward and there is definitely a tension between them. This is aptly put by Gibbs (2010):

What [students] may want teachers to do may be known from research evidence to be unlikely to result in educational gains.

Take for instance this e-mail, which a professor received from a student in the first-year mechanics course:

After looking through all the exams written by you, it feels like you have a much higher standard than the other examiners. The biggest problem, as I see it, is that I cannot learn all your questions because they don’t seem to recur. When the questions are always different it is very difficult to learn all the solutions. Please could you make an exam for next week more like those by the other examiners.

Of course, this professor wants students to achieve conceptual understanding, and therefore avoids assessing mere reproduction of standard solutions to known problems. It would be devastating to accommodate this student’s wish for more predictable assessment, which would allow for, and even invite, learning by rote. But knowing that this attitude exists is still very valuable information, because it can then be addressed in many ways. While there may be limited room to help this particular student effectively in the remaining week before the exam, it is certainly possible to help future students become familiar with the required level, and with this way of expressing understanding, early on in the course. The professor can discuss different qualities of understanding, make clear what the course sets out to achieve, and give short quizzes regularly during the course so that the required level does not come as a shock at the end, when it is too late. Further, the e-mail can spark discussions about the quality of learning outcomes in the department, because the other examiners would probably benefit from a more sophisticated understanding of the relationship between how assessment is designed and its effects on learning.

In relation to this example, a final observation is that this professor evidently comes across as rather odd to this student, who, if asked, may well give the professor a low rating. Some institutions have student rating systems in which teachers are rewarded for high ratings, or even depend on them for tenure. That creates incentives to do whatever it takes to keep students happy, even when it means sacrificing the quality of learning outcomes. But a better principle is: Do not give the students what they want – give them something better! Students are learners, not consumers. If what really matters most is the quality of learning and the improvement of learning processes, then student feedback must be a much broader concept than student ratings of teaching. But educators must learn how to use student feedback productively; to become useful, it must be interpreted:

To be effective in quality improvement, data […] must be transformed into information that can be used within an institution to effect change. (Harvey, 2003)

A framework for interpreting student feedback

Student feedback shows how the learning process is experienced by the learner, and the main reason to collect it is to be able to improve the effectiveness of course design. Naturally, as was argued above, students will always give their feedback from within their own frames of reference. Students’ experience of their learning processes, and thus the feedback they give, depends to a great extent on how the learning activity, course or programme is designed. In particular, design will influence the extent to which students adopt a deep or surface approach to learning (Marton and Säljö, 1984; Gibbs, 1992; Biggs and Tang, 2007).

A deep approach to learning is when the student’s intention is to find meaning, to understand: the result is well-structured and lasting knowledge. Course characteristics associated with a deep approach are (Biggs and Tang, 2007):

learner activity, including interaction with others;

well-structured knowledge base;

self-monitoring (including awareness of one’s own learning processes, self-assessment and reflection exercises).

These should be guiding principles for enhancing engineering education. On the other hand, a surface approach to learning is associated with an intention to complete the task as quickly as possible. The focus is on being able to produce the required signs of knowledge, not the underlying meaning. In anticipation of the assessment, students focus on being able to reproduce the subject matter – but since they do not seek meaning, they will not find it. It is inefficient to study using a surface approach, because the result is disastrous. Although the focus was on passing the course, the resulting learning is poorly structured and easily forgotten, so in fact, passing the course may sometimes be hard – unless assessment allows for this poor quality of understanding. Course characteristics associated with a surface approach are (Gibbs, 1992):

relatively high class contact hours;

excessive amount of course material;

lack of opportunity to pursue subjects in depth;

lack of choice over subjects and lack of choice over the method of study.

Many engineering programmes exhibit several of these characteristics, and these ought to be reduced.

Most students are capable of adopting either approach, and the purpose of course design is thus to encourage them to adopt a deep approach to learning. Consider the following quote from a third-year engineering student:

The things I remember from a course are the parts we had assignments on. Then I really sat down with the problems and worked out the solutions myself. When I study old exams, I check up the correct answer right away, and then move on without really learning. (Edström et al., 2003)

This shows that the same student can use both deep and surface approaches, within the same course. Here, working on assignments is associated with a deep approach, while studying for the exam is associated with a surface approach.

In addition to course-related factors, students’ expectations are also shaped by factors preceding the particular course: their previous experiences and their conceptions of learning (Prosser and Trigwell, 1999), as well as their attitude to knowledge (Perry, 1970). These factors are therefore also present in their learning experience and thus in their feedback. While these factors may predispose a student to adopt a deep or surface approach spontaneously, the approach is not a fixed and inherent trait but one that can be influenced. When students’ expectations of their role as learners differ from what the course requires, faculty can actively support their transition into a more appropriate role by increasing students’ awareness of their own learning processes, by confronting the symptoms of the surface approach, and by creating trust in the learning model.

Collecting student feedback – a student learning focus

When setting out to collect student feedback, a useful principle is to ‘begin with the end in mind’. What data can actually inform educational enhancement; in other words, what needs to be investigated about the learning process in order to develop it? Educators’ own views on teaching and learning will influence which variables they perceive as possible, acceptable and desirable to manipulate in course development. This may partly explain why student feedback is so often solicited with a focus on teaching and the teacher. But if the aim is to improve learning, the guiding question for the investigation should be ‘How are the students doing?’ rather than ‘How is the teacher doing?’ The suggestions below illustrate some of the implications for student feedback of adopting a genuine student learning focus.

Assessment data as student feedback on their learning

If the aim is to improve student learning, then an appraisal of actual student learning outcomes is probably the best input to inform course development. Surely, how well students learned is always a more relevant variable to pursue than how well they liked the teaching or the teachers. Although it is seldom thought of as such, assessment data is an excellent form of student feedback on learning. Even in a seemingly well-designed and popular course, any teacher who goes through the assessments will be able to identify urgent and relevant targets for improvement. And since teachers have to go through assessments anyway, why not make full use of this source of intelligence? A simple approach is to keep a notepad handy when marking student work and jot down troublesome issues, analysis and possible actions. An added advantage is that assessment data is conveniently available: it has already been collected and has probably been archived over several years. The value of assessment data, however, still depends on the validity of the assessment: does it really measure the intended learning outcomes? Do the exams require the level of conceptual understanding that students were intended to reach, or is it possible to do well merely by demonstrating lower-level outcomes, such as pattern-matching recurring types of problems? It can be illuminating to triangulate, for instance through oral discussions, which more readily reveal misconceptions that can hide behind mere reproduction.

Student feedback as a way of directly improving learning

Soliciting feedback can serve as a means to facilitate student awareness of their own understanding and learning processes. In this case, the chief aim is to directly improve learning through reflection. Two examples are given below.

Eric Mazur (1997) developed a method, Peer Instruction, in which student feedback is used to expose student understanding as it develops in real time and to improve it immediately. During a lecture, students are given a multiple-choice question in which the wrong alternatives reflect common misconceptions. After a first vote (by show of hands, coloured slips or an electronic response system), students spend a few minutes trying to convince their neighbours of their answers, after which the class votes again. The discussion is a very effective learning activity, as students examine the different assumptions and compare the arguments for and against them, and the class typically converges towards the correct answer.

The one-minute paper (Angelo and Cross, 1993) is a simple method for soliciting feedback. At the end of any learning activity, students answer one or more questions on a sheet of paper. A question such as ‘What are the three most useful things you have learned?’ can be asked in relation either to the learning activity or to the whole course so far. This invites student reflection and also reveals to the teacher how the students are getting along, thereby showing where there is potential for improvement. A common variant asks about the muddiest point of a lecture: ‘What point remains least clear to you?’ How this feedback is used depends on the purpose and the available resources. Selected muddy points can be addressed in the next lecture, by e-mail or on the course homepage. Alternatively, the sheets can be stored and read only when preparing the same lecture the following year.

Finding out what students do out of class

While teachers often solicit student feedback on what they, the teachers, do during class, it is much less common to find out what the students themselves do outside scheduled hours. This neglect is remarkable, since the volume and quality of independent study are of utmost importance for the quality of student learning. It is therefore useful to know how much time students spend studying (time on task), how their effort is distributed over the course, and whether the teaching generates appropriate learning activity outside class (Gibbs, 1999). Even if it comes as an unpleasant surprise, discovering that most students have not yet opened the course book five weeks into the course is a precious gift: such a situation is low-hanging fruit, with great potential for major improvement. Finding out can be as simple as asking students to draw a graph of how much time they have spent studying in each week of the course, or posing an open-text question asking how they went about their studies in the course. To gain deeper insights, it is useful to conduct in-depth interviews with students to investigate their lived world as students. If the interviews are recorded, and perhaps even transcribed, the students’ narratives can be analysed just like any other research material.

A case of effective learning – finding out why it worked

An innovative learning activity used at the Royal Institute of Technology in Stockholm is the student problem-solving session, which replaces the traditional weekly tutorial in which a teacher solves problems on the board. Instead, all students prepare to present solutions to the weekly problem sets. On arriving at the session, students tick on a list the problems they are prepared to present. For each problem, a different student is picked at random to present at the board, followed by a class discussion of alternative solutions, critical aspects and inherent difficulties. Students must tick a set proportion of the problems (say, 75 per cent), but as the purpose is purely formative, the quality of the presentation does not affect the grade. Students must, however, demonstrate that they have prepared, and must at least be able to lead a classroom discussion towards a satisfactory treatment of the problem. The teachers who first implemented this format were surprised by the extraordinary results. There were substantial improvements in understanding and in exam results, with the pass rate rising from below 60 per cent to a consistent 70–85 per cent. The activity was popular with the students (rated well over 4 on a 1–5 Likert scale). Teachers also appreciated the improved cost-effectiveness (not least because there was less tedious work marking poor exam papers), and they found themselves in a far more stimulating role: discussing the subject with students who were prepared and active was much more enjoyable than presenting solutions to a silent room of students taking notes.

But it was student feedback that helped the teachers find out exactly why this process worked so well. Students revealed in interviews that it felt like an effective way to study, not least because the problem-solving was aligned with the performance expected in the assessment. Students actually spent 6–7 hours preparing for each session, forming groups and running practice sessions in empty classrooms, taking turns to present and discussing each problem together. The student problem-solving sessions generated a great deal of time on task, well distributed over the length of the course, and the learning activity was entirely appropriate (Gibbs, 1999). In fact, the scheduled sessions were just the tip of an iceberg, driving a large volume of high-quality study. In stark contrast, when asked about preparations for traditional teacher-led sessions, a typical student reply was:

At the student problem-solving sessions, all students could easily follow the solution, as they were already familiar with the problem even if they had not succeeded in solving it. They also received feedback on their own efforts through the class discussion of alternative solutions and critical aspects of the problem. To someone who happens to open the classroom door, it may seem to make little difference whether a student or a teacher is standing at the board, but student feedback revealed the difference in the volume and quality of work that the two learning activities generate outside class. Student-led and teacher-led tutorials turned out to be worlds apart: the learning process is fundamentally changed. The student problem-solving sessions evidently improved students’ conceptual understanding of the subject, and the format also helps develop communication skills, an important enabling skill for engineers.

Furthermore, it is an active and more social learning format in which both engineering students and teachers enjoy much more stimulating roles than in the traditional routine. The evidence gained through student feedback played an important role in understanding exactly how this particular intervention changed the learning process. With this knowledge and evidence it is possible to spread, if not the exact format of this particular activity, then at least its fundamental principles, to benefit other courses in engineering education.

Conclusions

This chapter has argued that student feedback is a crucial source of intelligence, revealing clues about the inner workings of the teaching and learning processes and thus enabling educators to better understand and improve them. Engineering education needs to enhance not only the quality of learning outcomes but also the full learning experience, in order to address the issues of attractiveness and retention. An enhancement-led approach to collecting student feedback should be non-threatening to teaching staff and should collect rich qualitative descriptions that can be used to inform development. Finally, since there is a tension between student satisfaction and learning, student feedback must always be interpreted using theory on teaching and learning. The principle is: do not give students what they want; give them something better!

References

Angelo, T.A., Cross, K.P. Classroom Assessment Techniques. San Francisco, CA: Jossey-Bass; 1993.

Barrie, S. A research-based approach to generic graduate attributes policy. Higher Education Research and Development. 2004; 23(3):261–275.

Biggs, J., Tang, C. Teaching for Quality Learning at University: What the Student Does. Buckingham, UK: Society for Research into Higher Education and Open University Press; 2007.

Bowden, J., Marton, F. The University of Learning: Beyond Quality and Competence in Higher Education. London: Kogan Page; 1998.

Crawley, E.F., Malmqvist, J., Östlund, S., Brodeur, D.R. Rethinking Engineering Education: The CDIO Approach. New York: Springer; 2007.

Dahler-Larsen, P. Den rituelle reflektion – om evaluering i organisationer. Odense, Denmark: Syddansk Universitetsforlag; 2005.

Edström, K. Doing course evaluation as if learning matters most. Higher Education Research and Development. 2008; 27(2):95–106.

Edström, K., Törnevik, J., Engström, M., Wiklund, Å. Student involvement in principled change: understanding the student experience. In: Rust C., ed. Improving Student Learning: Theory, Research and Practice. Proceedings of the 11th International Symposium, 2003. Oxford, UK: OCSLD; 2003:158–170.

Gibbs, G. Improving the Quality of Student Learning. Bristol, UK: Technical and Educational Services; 1992.

Gibbs, G. Using assessment strategically to change the way students learn. In: Brown S., Glasner A., eds. Assessment Matters in Higher Education. Buckingham, UK: Society for Research into Higher Education and Open University Press, 1999.

Gibbs, G. Dimensions of Quality. York, UK: The Higher Education Academy; 2010.

Handal, G. Studentevaluering av undervisning: Håndbok for lærere og studenter i høyere utdanning. Oslo, Norway: Cappelen Akademisk Forlag; 1996.

Harvey, L. Student feedback. Quality in Higher Education. 2003; 9(1):3–20.

Holmegaard, H.T., Ulriksen, L., Madsen, L.M. Why students choose (not) to study engineering. Proceedings of the Joint International IGIP-SEFI Annual Conference 2010, Trnava, Slovakia, 19–22 September 2010.

Hounsell, D. The evaluation of teaching. In: Fry H., Ketteridge S., Marshall S., eds. A Handbook for Teaching and Learning in Higher Education: Enhancing Academic Practice. London: Kogan Page, 2003.

Kember, D., Leung, D.Y.P., Kwan, K.P. Does the use of student feedback questionnaires improve the overall quality of teaching? Assessment and Evaluation in Higher Education. 2002; 27(5):411–425.

Kogan, M. Fitting evaluation within the governance of education. In: Granheim M., Kogan M., Lundgren U.P., eds. Evaluation as Policy Making: Introducing Evaluation into a National Decentralised Educational System. London: Jessica Kingsley Publishing, 1990.

Marton, F., Säljö, R. Approaches to learning. In: Marton F., Hounsell D., Entwistle N., eds. The Experience of Learning. Edinburgh, UK: Scottish Academic Press, 1984.

Mazur, E. Peer Instruction: A User’s Manual. Upper Saddle River, NJ: Prentice Hall; 1997.

Perry, W.G. Forms of Intellectual and Ethical Development in the College Years: A Scheme. New York: Holt, Rinehart and Winston; 1970.

Power, M. The Audit Society: Rituals of Verification. Oxford, UK: Oxford University Press; 1999.

Prosser, M., Trigwell, K. Understanding Learning and Teaching: The Experience in Higher Education. Buckingham, UK: Society for Research into Higher Education and Open University Press; 1999.

Ramsden, P. Learning to Teach in Higher Education. London: Routledge; 1992.

Schreiner, C., Sjøberg, S. Science education and youth’s identity construction – two incompatible projects? In: Corrigan D., Dillon J., Gunstone R., eds. The Re-emergence of Values in the Science Curriculum. Rotterdam, The Netherlands: Sense Publishers, 2007.

Seymour, E., Hewitt, N.M. Talking About Leaving: Why Undergraduates Leave the Sciences. Boulder, CO: Westview Press; 1997.

Ulriksen, L., Madsen, L.M., Holmegaard, H.T. What do we know about explanations for drop out/opt out among young people from STM higher education programmes? Studies in Science Education. 2010; 46(2):209–244.

Weick, K. Educational organisations as loosely coupled systems. Administrative Science Quarterly. 1976; 21(1):1–19.