There are two dimensions to correct practice for data analysts: compliance with legal and regulatory requirements, which forms the first part of this chapter; and giving themselves the best chance of success by looking at data quality and thinking about the data community, which is covered in the second part.

WORKING WITH OTHER PEOPLE’S DATA

Nowadays, businesses are able to link their customer information together with that from third parties, such as social media sites, to create a rich picture of what motivates their customers. Some will have this expertise in-house; others will be supported by specialist marketing agencies or analytics companies.

Many users are unaware that the provision of a service free at the point of consumption, such as Facebook, is funded by the business making use of their data. When this comes to light, the response can be extremely negative – particularly when the service and the use to which it puts data do not have an obvious link.

EXAMPLE

unroll.me is a web service that promises to help users manage large amounts of received marketing emails. Once given access to a user’s mailbox, it can create a digest email for them – and also make it easy to unsubscribe (‘unroll’) from these emails.

What users were not aware of was how unroll.me would sell this data on, which only came to public attention through a New York Times investigation into Uber.

This report identified that Slice Intelligence (the owners of unroll.me) collected emailed receipts from Lyft, a ride-hailing rival of Uber, and sold the information, which was anonymised, to Uber. Uber then used the data as a proxy for the health of Lyft’s business.

(www.nytimes.com/2017/04/24/technology/personal-data-firm-slice-unroll-me-backlash-uber.html)

Is this good for us?

Developments in data usage often lead towards increased personalisation; advertising is more targeted to what the user is interested in, and websites may change what they display to prioritise certain offerings. In an area such as insurance, enhanced data and analytics may enable more personalised pricing, so those with healthier lifestyles or who drive more carefully may get cheaper cover than those who are more sedentary or drive more erratically.

But where there are winners, there are also losers. Do we want a world where it is impossible to find insurance because you are considered a high risk? Do you want to find that airline tickets cost more because the airline thinks that you are richer than another customer? Indeed, a travel service, orbitz.com, experimented with showing more expensive hotel rooms to those using Apple devices, reasoning that Apple users have more expensive tastes than PC users.1

In October 2017 Wired magazine published an article by Rachel Botsman (2017b) – an extract from her book (2017a) – describing a Chinese government scheme that analyses data on its citizens, planned to go live in 2020:

Imagine a world where many of your daily activities were constantly monitored and evaluated: what you buy at the shops and online; where you are at any given time; who your friends are and how you interact with them; how many hours you spend watching content or playing video games; and what bills and taxes you pay (or not).

… Now imagine a system where all these behaviours are rated as either positive or negative and distilled into a single number, according to rules set by the government. That would create your Citizen Score and it would tell everyone whether or not you were trustworthy. Plus, your rating would be publicly ranked against that of the entire population and used to determine your eligibility for a mortgage or a job, where your children can go to school – or even just your chances of getting a date.

Possibilities such as these have led some governments to step in and create laws around what can and can’t be done.

THE REGULATORY RESPONSE

This section reviews the laws and regulations that have been introduced over time to respond to the changing use of data.

Data Protection Act (DPA)

The increasing use of computers in the 1970s first prompted concerns about the risks they posed to privacy. In 1981 the Council of Europe Convention established standards in member countries to ensure the free flow of information among them without infringing personal privacy. This was followed by the UK’s Data Protection Acts in 1984 and 1998.

However, these Acts pre-dated the development of the internet, and do not directly address some of the challenges to privacy in the internet age. To give some context: although Amazon.com did operate in 1998, Google only launched in September of that year, and there was no YouTube, no iPhone and no Facebook.

The DPA (1984) introduced the government role of Data Protection Registrar. That role grew over time and, in 2001, became the Information Commissioner’s Office (ICO). The ICO describes itself as: ‘The UK’s independent authority set up to uphold information rights in the public interest, promoting openness by public bodies and data privacy for individuals.’2

Privacy and Electronic Communications Regulations (PECR)

The arrival of the internet and email was partially addressed by the PECR in 2003, which was later amended in 2004, 2011, 2015 and 2016. PECR gives people specific privacy rights in relation to electronic communications.

There are specific rules on:

• marketing calls, emails, texts and faxes;

• cookies (and similar technologies);

• keeping communications services secure; and

• customer privacy regarding traffic and location data, itemised billing, phone number identification and directory listings.

PECR continues to apply alongside the GDPR.

GENERAL DATA PROTECTION REGULATION (GDPR)

The GDPR was created by the EU to bring the laws on data privacy into the current age. It entered into force on 24 May 2016 and has applied, with enforcement, since 25 May 2018.

GDPR applies to all EU member states, but also applies to any businesses worldwide doing business in the EU or with an EU customer. It became law before ‘Brexit’, but in any event, the British government has adopted the GDPR (and, in fact, strengthened it in some respects) as British law – the DPA 2018.3

The main responsibilities for organisations come from the six data protection principles, set out in Article 5,4 which require that personal data shall be:

1. processed lawfully, fairly and in a transparent manner in relation to individuals;

2. collected for specified, explicit and legitimate purposes and not further processed in a manner that is incompatible with those purposes; further processing for archiving purposes in the public interest, scientific or historical research purposes or statistical purposes shall not be considered to be incompatible with the initial purposes;

3. adequate, relevant and limited to what is necessary in relation to the purposes for which they are processed;

4. accurate and, where necessary, kept up to date; every reasonable step must be taken to ensure that personal data that are inaccurate, having regard to the purposes for which they are processed, are erased or rectified without delay;

5. kept in a form which permits identification of data subjects for no longer than is necessary for the purposes for which the personal data are processed; personal data may be stored for longer periods insofar as the personal data will be processed solely for archiving purposes in the public interest, scientific or historical research purposes or statistical purposes subject to implementation of the appropriate technical and organisational measures required by the GDPR in order to safeguard the rights and freedoms of individuals;

6. processed in a manner that ensures appropriate security of the personal data, including protection against unauthorised or unlawful processing and against accidental loss, destruction or damage, using appropriate technical or organisational measures.

In the next section, we’ll explain some of the terms and principles in the GDPR, and how they affect data analysts.

Understanding the GDPR

GDPR only applies to personal data so, before we go further, let’s consider what that means.

Data classification

Looking after data incurs costs. These can relate to activities such as its storage, archive, backup, encryption, monitoring, profiling and cleansing.

As data volumes increase, and these costs rise, it makes sense to only do these activities on data that requires it. It may be easy to say that ‘all of the files on the corporate network belonging to Finance should be encrypted and backed up offsite’, but is it sensible to do this for the spreadsheet of the team tea rota; or the ‘secret Santa’?

The solution comes in the form of data classification. Three standard levels are:

• Public: there is no restriction on sharing this data, such as financial figures in the published annual accounts that are freely available to anyone.

• Confidential: data that the organisation doesn’t want to share, but it wouldn’t be the end of the world if it happened, such as footfall in different stores for a retailer.

• Highly confidential: data that would have a significant adverse effect on the organisation if it became public, such as details of profit margins or employee salaries.
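As a rough illustration of how classification can drive handling, the sketch below maps the three levels to a set of controls. The control names, the choices made for each level and the file names are all hypothetical; a real policy would be set by the organisation.

```python
from enum import Enum


class Classification(Enum):
    PUBLIC = "public"
    CONFIDENTIAL = "confidential"
    HIGHLY_CONFIDENTIAL = "highly confidential"


# Hypothetical handling rules: an organisation would define its own.
HANDLING = {
    Classification.PUBLIC: {"encrypt": False, "offsite_backup": False},
    Classification.CONFIDENTIAL: {"encrypt": False, "offsite_backup": True},
    Classification.HIGHLY_CONFIDENTIAL: {"encrypt": True, "offsite_backup": True},
}


def controls_for(level: Classification) -> dict:
    """Return the handling controls that apply to a classification level."""
    return HANDLING[level]


# Illustrative files only.
files = {
    "annual_accounts.pdf": Classification.PUBLIC,
    "store_footfall.xlsx": Classification.CONFIDENTIAL,
    "salaries.xlsx": Classification.HIGHLY_CONFIDENTIAL,
}
for name, level in files.items():
    print(name, level.value, controls_for(level))
```

Keeping the rules in one table means the costly activities are applied only where the classification calls for them.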

GDPR leads us to data classifications for personal data, specifically personal data and sensitive personal data.

NOTE

Some texts will refer to personally identifiable information (PII). PII is a term more commonly used in North America, and covers data items that could be used to identify an individual, such as name, address and birth date. Personal data, as defined by the GDPR, has a broader scope, including data such as postings on social media and Internet Protocol (IP) addresses.

PII data is personal data; but not all personal data is PII.

However, be aware that this distinction is not widely observed, and your organisation may be using the terms interchangeably. If in doubt, ask!

Personal data

The ICO website states:5

Personal data only includes information relating to natural persons who:

• can be identified or who are identifiable directly from the information in question; or

• who can be indirectly identified from that information in combination with other information.

There is no complete list of types of personal data – much depends on context and what other data is available. Some data items, such as name, address and phone number, may not identify an individual in every case, but are usually treated as personal data regardless. For example, David Smith is not a unique name; a house may have multiple tenants; and a family home with a landline phone links one phone number to multiple people. Examples of personal data might be:

• name;

• address;

• gender;

• marital status;

• contact details (for example, phone numbers);

• email address;

• national insurance number (or local equivalent in other countries);

• date of birth;

• bank account details;

• credit card details;

• job title;

• images, video or voice recordings (for example, phone calls or CCTV footage);

• cookies and IP addresses.

Although the above data types can be considered as personal data, there are simple changes that can render them non-personal.

• Instead of a specific date of birth, store and use the month and year.

• Instead of a full address, store and use the first part of the postcode.

What other simple changes can you come up with?
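The two changes listed above can be sketched in a few lines of Python. The function names are our own, and the postcode helper assumes a UK postcode written with a space between its two parts. Whether the result is truly non-personal still depends on context and on what other data is held alongside it.

```python
from datetime import date


def month_and_year(dob: date) -> str:
    """Keep only the year and month of a date of birth."""
    return dob.strftime("%Y-%m")


def outward_code(postcode: str) -> str:
    """Keep only the first part of a UK postcode (the part before the space)."""
    return postcode.upper().split()[0]


print(month_and_year(date(1985, 3, 17)))  # 1985-03
print(outward_code("sw1a 1aa"))           # SW1A
```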

Sensitive personal data

Although sensitive personal data is harder to look after, it’s easier to define! The GDPR sets it out in Article 9:6

1. racial or ethnic origin;

2. political opinions;

3. religious or philosophical beliefs;

4. trade union membership;

5. genetic data;

6. biometric data;

7. data concerning health (both physical and mental health are included);

8. data concerning a natural person’s sex life or sexual orientation.

Why might you, as an individual, be more concerned about these types of information than other information about you?

Elements of GDPR relevant to the data analyst

The full text of the GDPR drives activity across many parts of an organisation, so in this section, we’ll pick out elements relevant to the data analyst and their role.

Principle 1 – Lawfulness, fairness and transparency

Under this principle, data subjects should be told how their personal data will be used, and told if that data has been breached.

Where the processing of personal data is based on consent, this will need to be demonstrable. Consent is one of the lawful bases for processing data, set out in Article 6. We will come back to this in the next section.

The principle also supports the rights of access, rectification, erasure, restriction of processing, portability and the right to object – collectively known as Data Subject Rights. These rights are a significant feature of the GDPR from the consumer perspective, but of less relevance to the data analyst.

Principle 2 – Purpose limitation

This principle prevents you from collecting data for one purpose and using it for another. For example, if you collected people’s email addresses for a prize draw, you would not be allowed to add them to your mailing list unless that had been made clear to them (note that there are other restrictions on this as well).

Principle 3 – Data minimisation

Data minimisation means that you can’t ask for lots and lots of data in the hope that some may be interesting. For example, a retailer may have valid reason to record if a customer is under or over the age of 21, but not the exact date of birth.

Adherence to this principle has caused changes to documents such as application forms and online registration.

Principle 4 – Accuracy

Accurate data is no longer a ‘nice to have’, but has become a legal requirement for personal data. Associated with this is the obligation that inaccurate personal data is rectified without ‘undue delay’.7

Principle 5 – Storage limitation

Storage limitation is otherwise known as retention periods. These must be appropriate; for example, your dentist may keep your X-ray records for a few years, but do they really need to keep them back to childhood? On the other hand, insurers may legitimately keep some policy records for 50 years or more, to cover cases of illnesses resulting from exposure to toxic materials going back decades.

Some organisations are applying retention periods to different uses and roles. For example, the marketing team may only see personal data within a retention period of 18 months, whereas the legal team can see personal data stretching back seven years or more. This can be enforced by applying user profiles when logging into systems.
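One way to picture role-based retention is as a date filter applied on behalf of the user. This is a minimal sketch, assuming records are dictionaries with a recorded_on date; the role names, day counts and sample records are illustrative and are not taken from any particular system.

```python
from datetime import date, timedelta

# Hypothetical retention windows per role, loosely following the example above.
RETENTION_DAYS = {
    "marketing": 548,   # roughly 18 months
    "legal": 7 * 365,   # roughly seven years
}


def visible_records(records, role, today):
    """Return the records whose date falls inside the role's retention window."""
    cutoff = today - timedelta(days=RETENTION_DAYS[role])
    return [r for r in records if r["recorded_on"] >= cutoff]


records = [
    {"customer": "A", "recorded_on": date(2018, 1, 10)},
    {"customer": "B", "recorded_on": date(2016, 6, 1)},
    {"customer": "C", "recorded_on": date(2010, 3, 5)},
]
today = date(2018, 6, 1)
print([r["customer"] for r in visible_records(records, "marketing", today)])  # ['A']
print([r["customer"] for r in visible_records(records, "legal", today)])      # ['A', 'B']
```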

Principle 6 – Integrity and confidentiality

We have all seen media reports of confidential information being lost – not just by falling into the hands of hackers, but also in occurrences such as losing a laptop or USB stick. This has always been a risk for organisations, but it now carries a regulatory impact as well.

This principle is also behind the requirement to report a personal data breach if it’s likely to result in a risk to people’s rights and freedoms. Reporting must be done ‘without undue delay and, where feasible, not later than 72 hours after having become aware of it’.8
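The 72-hour window is simple date arithmetic, counted from the moment the organisation becomes aware of the breach. The sketch below assumes that moment is recorded as a timezone-aware timestamp; the function names are our own.

```python
from datetime import datetime, timedelta, timezone

REPORTING_WINDOW = timedelta(hours=72)


def reporting_deadline(became_aware_at: datetime) -> datetime:
    """Latest time for reporting, counted from when the breach became known."""
    return became_aware_at + REPORTING_WINDOW


def hours_remaining(became_aware_at: datetime, now: datetime) -> float:
    """Hours left before the deadline (negative once it has passed)."""
    return (reporting_deadline(became_aware_at) - now).total_seconds() / 3600


aware = datetime(2018, 6, 1, 9, 30, tzinfo=timezone.utc)
print(reporting_deadline(aware))  # 2018-06-04 09:30:00+00:00
print(hours_remaining(aware, datetime(2018, 6, 2, 9, 30, tzinfo=timezone.utc)))  # 48.0
```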

Lawful bases for processing personal data

Article 6 of the GDPR also sets out the lawful bases for processing personal data:

a. Consent of the data subject

The ICO describe data subject consent for GDPR nicely:9

Consent must be freely given; this means giving people genuine ongoing choice and control over how you use their data.

Consent should be obvious and require a positive action to opt in. Consent requests must be prominent, unbundled from other terms and conditions, concise and easy to understand, and user-friendly.

Consent must specifically cover the controller’s name, the purposes of the processing and the types of processing activity.

Explicit consent must be expressly confirmed in words, rather than by any other positive action.

There is no set time limit for consent. How long it lasts will depend on the context. You should review and refresh consent as appropriate.

This requirement for consent has caused problems for organisations with large mailing lists when the original sign up was:

• not recorded by the organisation, or can’t be found; or

• done on an opt-out, rather than an opt-in, basis.10

In June 2017, the pub chain, Wetherspoons, advised all customers on its database that it would cease sending them emails and would be deleting all the details that it held about them. The company also advised that it would instead use its website and social media to share news of promotions and special offers.

At the time, it was widely reported that this was a pre-emptive step to avoid the effort required in meeting the GDPR requirements (which were then almost a year away from enforcement).

b. Necessary for the performance of a contract

The data processing is necessary:

• in relation to a contract which the data subject has entered into; or

• because the individual has asked for something to be done so they can enter into a contract.

This ties in with rights such as the ‘right to be forgotten’. Individuals can request that an organisation deletes the information that it holds about them, but the organisation may have reason to decline this: for example, a mobile phone company whose customer is still in contract needs the data to perform that contract.

c. Necessary for compliance with a legal obligation

If data processing must occur in order to comply with a legal obligation, it is considered lawful. For example, when employers deduct income tax and National Insurance payments from employees (a legal obligation), they will also need to provide personal details of who they relate to.

Legal obligations can come from the law of any EU country.

d. To protect vital interests of a data subject or another person

Data processing to protect the vital interests of a data subject or another person only applies in cases of life and death, such as when an individual’s medical history is disclosed to a hospital’s A&E department treating them after a serious road accident.

e. Necessary for the performance of a task carried out in the public interest or in the exercise of official authority vested in the controller

Examples of data processing necessary for the performance of a task carried out in the public interest or in the exercise of official authority by the controller include crime reporting, preventive or occupational medicine and social care.

f. Legitimate interests

The category of legitimate interests covers cases where you need to be able to ‘do your job’, but the rationale isn’t based on a specific purpose and doesn’t fit into any of the other categories.

Helpfully, the ICO have taken the text of the regulation and broken it down into a three-part test,11 to be applied in order:

• Purpose test – is there a legitimate interest behind the processing?

• Necessity test – is the processing necessary for that purpose?

• Balancing test – is the legitimate interest overridden by the individual’s interests, rights or freedoms?

The ‘purpose test’ may seem to be redundant, as the test of ‘legitimate interests’ begins by asking if there is ‘legitimate interest’! However, what it does is make you work out exactly why you want to do the activity, which then makes it easier to carry out the other two tests.

There are further conditions for special categories of data, detailed in Article 9, which are outside the scope of this book.

DATA SECURITY

Data analysts are entrusted with data – some of which will be personal data, but all of which must be looked after. This section looks at two ways data analysts mitigate the risk of losing data. The first way looks at changing data so that they are not holding so much (or any) personal data. The other is more general guidance about reducing the chance of that data being taken.

Rendering a data subject no longer identifiable

The concept of personally identifying information lies at the core of the GDPR, but the regulation does not apply to data that ‘does not relate to an identified or identifiable natural person or to data rendered anonymous in such a way that the data subject is no longer identifiable’.12 Where a data subject can no longer be identified, controllers do not need to provide those data subjects with access, rectification, erasure or data portability.

On a related note, GDPR does not apply to personal data of the deceased, although some countries, notably Denmark, use local law to include this.13

There are three main ways of rendering a data subject no longer identifiable:

• data anonymisation (also known as data masking or data obfuscation);

• data encryption;

• data pseudonymisation.

Data anonymisation

There are several techniques for data anonymisation. The challenge is in retaining the informational value of the data for analysis. Techniques include:

Substitution Replacing data with random but authentic text. For example, we have a list of first names by gender, and replace the first names of policy holders with a name taken from the list. This is helpful to analysts who retain an understanding of the type of data in a field. One possible issue is that, in the event of a breach, it could create an impression that personal data has been lost.
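A minimal sketch of substitution follows. The replacement lists, field names and sample records are made up for illustration; a random generator is passed in so that a run can be repeated with the same seed.

```python
import random

# Hypothetical replacement lists: any list of plausible names would do.
REPLACEMENT_NAMES = {
    "F": ["Alice", "Priya", "Maria", "Chloe"],
    "M": ["David", "Omar", "James", "Wei"],
}


def substitute_first_names(policy_holders, rng):
    """Return copies of the records with first names swapped for random ones."""
    masked = []
    for holder in policy_holders:
        new_name = rng.choice(REPLACEMENT_NAMES[holder["gender"]])
        masked.append({**holder, "first_name": new_name})
    return masked


holders = [
    {"policy": "P001", "first_name": "Susan", "gender": "F"},
    {"policy": "P002", "first_name": "Robert", "gender": "M"},
]
for row in substitute_first_names(holders, random.Random(42)):
    print(row)
```

The masked rows still look like policy holder records, which is the benefit described above, and the original list is left untouched.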

Aggregation This reduces the level of detail in data to a level where the data is no longer able to uniquely identify a person, but can still provide valuable analysis. For example, when analysing customers in a shop, the exact date of birth could be generalised to age bands (for example, age 18–24, 25–29, 30–34, etc.). When considering addresses, the first part of the postcode alone (for example, SW1) could be used to identify where your customers live, without the need for a full address.
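Generalising dates of birth to age bands might look like the sketch below. The band edges, the reference date and the sample dates are assumptions; choose bands that suit the analysis.

```python
from collections import Counter
from datetime import date

# Band edges are an assumption for illustration.
AGE_BANDS = [(18, 24), (25, 29), (30, 34), (35, 44), (45, 64)]


def age_on(dob: date, today: date) -> int:
    """Age in whole years on the given day."""
    had_birthday = (today.month, today.day) >= (dob.month, dob.day)
    return today.year - dob.year - (0 if had_birthday else 1)


def age_band(age: int) -> str:
    """Map an exact age to a band label."""
    for low, high in AGE_BANDS:
        if low <= age <= high:
            return f"{low}-{high}"
    return "under 18" if age < AGE_BANDS[0][0] else "65+"


today = date(2018, 6, 1)
dobs = [date(1995, 7, 2), date(1990, 1, 15), date(1986, 6, 1), date(1950, 12, 25)]
print(Counter(age_band(age_on(d, today)) for d in dobs))
```

Only the band counts leave the function chain, so the analysis can proceed without the exact dates.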

EXAMPLE OF DATA ANONYMISATION

Premium Bonds are issued by the NS&I, a government body. The monthly lists of high value prize winners include information about where they live.

To protect winners’ anonymity and help to keep their personal details confidential, they only list an area when there are at least 100,000 Premium Bond holders living there.

If this is not the case, the following hierarchy is used until there is a level with at least 100,000 Premium Bond holders:

• Level 1: Royal Mail postcode address file (PAF) town;

• Level 2: county or local authority;

• Level 3: government standard region;

• Level 4: country.

(Information from: www.nsandi.com/prize-checker)
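The hierarchy above amounts to walking up the levels until one is large enough. This is a sketch of that idea only, not NS&I’s actual implementation: the level keys, place names and holder counts are invented.

```python
THRESHOLD = 100_000

# Levels in the order given above, most specific first.
LEVELS = ["town", "county", "region", "country"]


def area_to_publish(winner_location, holders_by_area):
    """Return the first area in the hierarchy with at least THRESHOLD holders."""
    for level in LEVELS:
        area = winner_location[level]
        if holders_by_area.get((level, area), 0) >= THRESHOLD:
            return area
    return None  # no level is large enough, so publish no area


# Made-up holder counts, for illustration only.
holders_by_area = {
    ("town", "Smalltown"): 4_200,
    ("county", "Exampleshire"): 85_000,
    ("region", "South West"): 1_900_000,
    ("country", "England"): 18_000_000,
}
winner = {"town": "Smalltown", "county": "Exampleshire",
          "region": "South West", "country": "England"}
print(area_to_publish(winner, holders_by_area))  # South West
```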

Shuffling Data could be randomly shuffled within a column (for example, the dates of birth across all policy holders are swapped). However, if used in isolation, anyone with any knowledge of the original data can then apply a ‘What if’ scenario to the data set and then piece back together a real identity. The shuffling method is also open to being reversed if the shuffling algorithm can be deciphered. The fact that data in this case is no longer accurate is not a problem because the data is no longer considered to be personal data.
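Shuffling within a column can be sketched as follows, assuming each row is a dictionary. Every original value still appears somewhere in the column, which is why the weaknesses described above apply.

```python
import random


def shuffle_column(rows, column, rng):
    """Return copies of the rows with one column's values randomly reassigned."""
    values = [row[column] for row in rows]
    rng.shuffle(values)
    return [{**row, column: value} for row, value in zip(rows, values)]


# Illustrative policy holder records.
rows = [
    {"policy": "P001", "dob": "1980-02-11"},
    {"policy": "P002", "dob": "1975-09-30"},
    {"policy": "P003", "dob": "1992-12-05"},
]
for row in shuffle_column(rows, "dob", random.Random(7)):
    print(row)
```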

Number and date variance This is appropriate for financial and date related fields. For example, applying a variance of around +/− 10 per cent may still provide meaningful salary data. Dates of birth could be shifted by a number of days, masking the true dates but keeping the same (although shifted) distribution patterns. As with shuffling, we need not worry that this makes the data inaccurate.
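Both kinds of variance are sketched below. Applying one shared offset to every date is our reading of ‘keeping the same (although shifted) distribution patterns’; the 30-day limit and the function names are assumptions.

```python
import random
from datetime import date, timedelta


def vary_number(value, rng, max_fraction=0.10):
    """Scale a number by a random factor within +/- max_fraction."""
    return round(value * (1 + rng.uniform(-max_fraction, max_fraction)), 2)


def shift_dates(dates, rng, max_days=30):
    """Shift every date by the same random number of days (never zero)."""
    offset = rng.choice([d for d in range(-max_days, max_days + 1) if d != 0])
    return [d + timedelta(days=offset) for d in dates]


rng = random.Random(2018)
print(vary_number(32_000, rng))
print(shift_dates([date(1980, 1, 1), date(1990, 6, 15)], rng))
```

Because every date moves by the same amount, the gaps between dates are preserved while the true values are hidden.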

Nulling out or deletion This approach is simple, but destroys the informational value of data and also makes it very obvious that the data has been masked. Dynamic data masking is where only some of the details are hidden, so that a customer service person sees the last four digits of a credit card number; but the system itself retains all the digits so that the transaction can be made.
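The display side of dynamic data masking can be sketched as a function that hides all but the last few digits. The full number would remain in the underlying system; the card number here is made up.

```python
def mask_card_number(card_number: str, visible: int = 4) -> str:
    """Hide all but the last `visible` digits of a card number for display."""
    digits = [c for c in card_number if c.isdigit()]
    hidden = max(len(digits) - visible, 0)
    return "*" * hidden + "".join(digits[hidden:])


print(mask_card_number("1234 5678 9012 3456"))  # ************3456
```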

Data encryption

This is often seen by business users as the ‘easiest approach’ to managing personal data, as security is added without losing any data, but encryption is a complex approach technically. Usually, a ‘key’ is required to view the data, but this raises the challenge of the key being obtained by unauthorised users, and those with access making unencrypted copies of the data.

Encryption usually affects the performance of the database or system that it is applied to, so organisations will generally identify specific data items to encrypt, rather than everything. Under GDPR, if there is a breach, but the data was encrypted, then there is no regulatory requirement to inform the data subjects. This is seen as a strong incentive for encryption, but there could remain a reputational impact if an unreported breach became public knowledge.

Data pseudonymisation

Pseudonymisation is a technique where directly identifying data is held separately and securely from processed data to ensure non-attribution. It can significantly reduce the risks associated with data processing while also maintaining the data’s utility. As with encryption, pseudonymised data that is breached would not require a notification to the data subjects, but the same reputational risk noted above applies.
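A minimal sketch of the separation described above: identifying fields move to a lookup that would be stored separately and securely, and the analyst’s data set keeps only a random token. The field names are hypothetical, and the standard library’s secrets module is simply one way of generating tokens.

```python
import secrets


def pseudonymise(records, identifying_fields):
    """Split records into a lookup of identities and a de-identified data set."""
    identity_lookup = {}   # to be stored separately and securely
    processed = []         # what the analyst works with
    for record in records:
        token = secrets.token_hex(8)  # random, so not derived from the person
        identity_lookup[token] = {f: record[f] for f in identifying_fields}
        rest = {k: v for k, v in record.items() if k not in identifying_fields}
        processed.append({"token": token, **rest})
    return identity_lookup, processed


customers = [{"name": "A. Person", "email": "a@example.com", "spend": 120}]
lookup, data = pseudonymise(customers, ["name", "email"])
print(data)
```

Anyone holding both outputs can rejoin them on the token, which is why the lookup needs stronger protection than the processed data.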

There are two ways in which pseudonymised data could be re-identified:

• The identifying data and the processed data are both obtained through a data breach.

• The processed data could be combined with other available information to identify the individuals.

The key distinction between pseudonymous data, which is regulated by the GDPR, and anonymous data, which is not, is whether the data can be re-identified with reasonable effort. GDPR considers pseudonymised data to be personal data if it could be attributed to a natural person by the reasonable use of additional information.

EXAMPLE OF RE-IDENTIFICATION

Consider flags such as gender, job title and salary band. By themselves, none of these could identify an individual in the UK:

• Knowing if a person is male or female gives around 30 million possibilities.

• Defining a job title as ‘Member of Parliament’ gives more than 600 possibilities.

• The number of people with annual salaries above £140,000 per year is more than 100,000.

However, if we consider female Members of Parliament earning more than £140,000 per year, we have only one possibility: the Prime Minister at the time of writing, Theresa May.

GDPR would consider this grouping as personal data because the identification does not require a great amount of effort. Ministerial salaries are not confidential and can be accessed freely on the internet. The name of the Prime Minister is also publicly available information!
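A quick way to spot this kind of risk is to count how many rows share each combination of flags and list the combinations that are too rare. The sketch below uses a handful of invented rows; the function name and the minimum group size of two are assumptions.

```python
from collections import Counter


def risky_combinations(rows, flags, min_size=2):
    """Return combinations of flag values shared by fewer than min_size rows."""
    counts = Counter(tuple(row[f] for f in flags) for row in rows)
    return {combo: n for combo, n in counts.items() if n < min_size}


# Invented rows, for illustration only.
mp = "Member of Parliament"
rows = [
    {"gender": "F", "job_title": mp, "salary_band": "over 140k"},
    {"gender": "F", "job_title": mp, "salary_band": "under 140k"},
    {"gender": "F", "job_title": mp, "salary_band": "under 140k"},
    {"gender": "M", "job_title": mp, "salary_band": "under 140k"},
    {"gender": "M", "job_title": mp, "salary_band": "under 140k"},
]
print(risky_combinations(rows, ["gender"]))
print(risky_combinations(rows, ["gender", "job_title", "salary_band"]))
```

No single flag isolates anyone in this sample, but the three flags together single out one row.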

The UK implementation of GDPR adds an offence of intentionally or recklessly re-identifying individuals from anonymised or pseudonymised data. Anyone who knowingly handles or processes data that has been re-identified in this way is also guilty of an offence, and the maximum penalty is an unlimited fine.

Data breaches

Once data analysts have been entrusted with other people’s data, there is an obligation to take care of it. A scan of the news media will reveal many high-profile data breaches from large and respected companies; indeed, you may yourself have received an email or letter advising you that your account details might have been breached. Even the most competent organisations, in this respect, talk about when a breach happens rather than if.

Breaches can occur accidentally through social means, as well as by malicious attack. Consider the following scenarios – maybe you have seen these yourself?

• A person making a purchase over the phone in a public place, giving out their credit card numbers (front and back) and the other associated data to make a valid transaction.

• The conscientious worker using their train journey home to get some work done, and not realising that the person next to them can read the document they are working on.

• Your email software’s autocomplete function causing you to send a work email to a personal contact with the same first name.

Consider steps you could take to minimise the chance of being caught out in these ways:

• If you must give your details out over the phone, do it somewhere private.

• Screen filters are available for laptops and tablets, so you can only see the screen if you are looking straight at it.

• The traditional approach is to check and check again before you hit send for an email. Some companies have configured their email tools to generate a warning if the email is going to an address outside the corporate domain.
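The email warning in the last point boils down to comparing each recipient’s domain with the corporate one. This is a sketch only, with a hypothetical domain and addresses; real email tools implement this in their own configuration.

```python
CORPORATE_DOMAIN = "example.com"  # hypothetical corporate domain


def external_recipients(recipients):
    """Return the addresses that fall outside the corporate domain."""
    return [r for r in recipients
            if r.rsplit("@", 1)[-1].lower() != CORPORATE_DOMAIN]


to = ["sam.jones@example.com", "sam.jones@personal.example.org"]
outside = external_recipients(to)
if outside:
    print("Warning: external recipients:", outside)
```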

The frequency of data breaches means that public tolerance is beginning to increase in this regard, particularly where the organisation had good defences in place, reporting of the breach is prompt and support is given to those who are affected. For example, it is common for the breached company to provide an identity monitoring service, which will flag any indication that an individual is a victim of identity theft.

On the other hand, organisations that do not handle the situation well can find that their reputations are shattered in a very short time. Organisations adversely affected by a data breach may well decide that some of their data analysis falls outside their risk appetite (that is, the risk of something negative happening is considered to be higher than the benefit they get from the analysis) and they decide to hold less data and/or reduce their use of it.

Find out what you should do if you were to lose your organisation’s laptop or mobile phone. Is there a phone number that you should call or an address that should be emailed? If you had lost one or both devices, would you still be able to find those details?

What if you lost your own phone? Could the person with your phone get access to your Facebook, Twitter, Instagram or LinkedIn apps? Could they get access to your online banking? Could they even spend your money if you’ve set up phone-based payments? Most modern phones allow remote wiping of data, but you would need to know how to do this and sometimes the method needs to be set up in advance.

DATA GOVERNANCE

A Data Analyst function is to leverage data to take a nebulous question and refine it down to an exact one.

(James Londal, Chief Data Officer, Hearts & Science)

For both the data analyst and their organisation, the best use of time is to analyse data. However, as discussed in the previous chapters, data often requires extensive preparation before use.

The concept of data governance is to put in place people, processes and tools to ensure that there are people who are responsible, accountable, consulted and informed about data issues. Well-governed data is helpful to the data analyst if:

• It is documented, the source(s) of the data is identified and the data item is defined.

• The quality of the data item is known over a period of time.

• The journey of the data item from source to target is known.

• The people involved in the data (such as owners, stewards, subject matter experts) are identified.

Data governance is typically driven by a policy document, which will set out the organisation’s approach to data. The activity to achieve the position described in the policy is then set out in more detailed policy documents, or through specific standards or controls.

A data management team

Historically, responsibility for data sat within the IT function because data issues would be considered as part of the systems and technologies where the data was found. Over time, organisations have set up dedicated data management functions, which often incorporate legacy management information (MI) and business intelligence (BI) teams alongside those responsible for building out data governance activities. More mature setups will have a central ‘data office’ under a Chief Data Officer (CDO).

Whatever the name, central data functions are often very small relative to the size of the organisation – having just one or two people is common. An exception is where data quality checking or a similar activity is centralised under the data function; but, even in these cases, there will be a small number of people focusing on data governance. Successful data offices can function with a small headcount because they work with many other people around the organisation.

Before we talk about working with other people, let’s find out more about the problems that a data management team looks to solve.

DATA QUALITY

In general language, we associate ‘quality’ with ideas such as being ‘good’, ‘right’ or ‘correct’. Data analysts need to be more careful how they define things, so let’s consider what ‘data quality’ means.

What does ‘good’ data quality mean and when is good, good enough?

‘Good data quality’ is a phrase often used, and most business stakeholders will make statements around ‘the importance of good quality data’, ‘Six Sigma quality’14 or ‘everything must be 100 per cent accurate’. Conversely, ‘bad data’ is an easy thing to blame when business decisions have led to poor outcomes. Moreover, many people who spend their time working with data will have a natural desire to have all the data ‘right’. This can cause such people to invest a great deal of time in fixing relatively minor issues.

The time and resources that are spent fixing ‘small’ data issues are the time and resources that are not spent on fixing major issues and managing root causes.

Trying to fix every issue as it is found starts well, but ultimately fails due to the amount of effort required as more and more data issues are identified. A more structured approach to data quality considers these three steps:

1. define;

2. measure;

3. monitor.

Defining data quality

We can describe how good data needs to be by using data quality dimensions.

WHAT ARE QUALITY DIMENSIONS?

In the context of data quality, dimensions refer to the attribute of the data that we are considering.

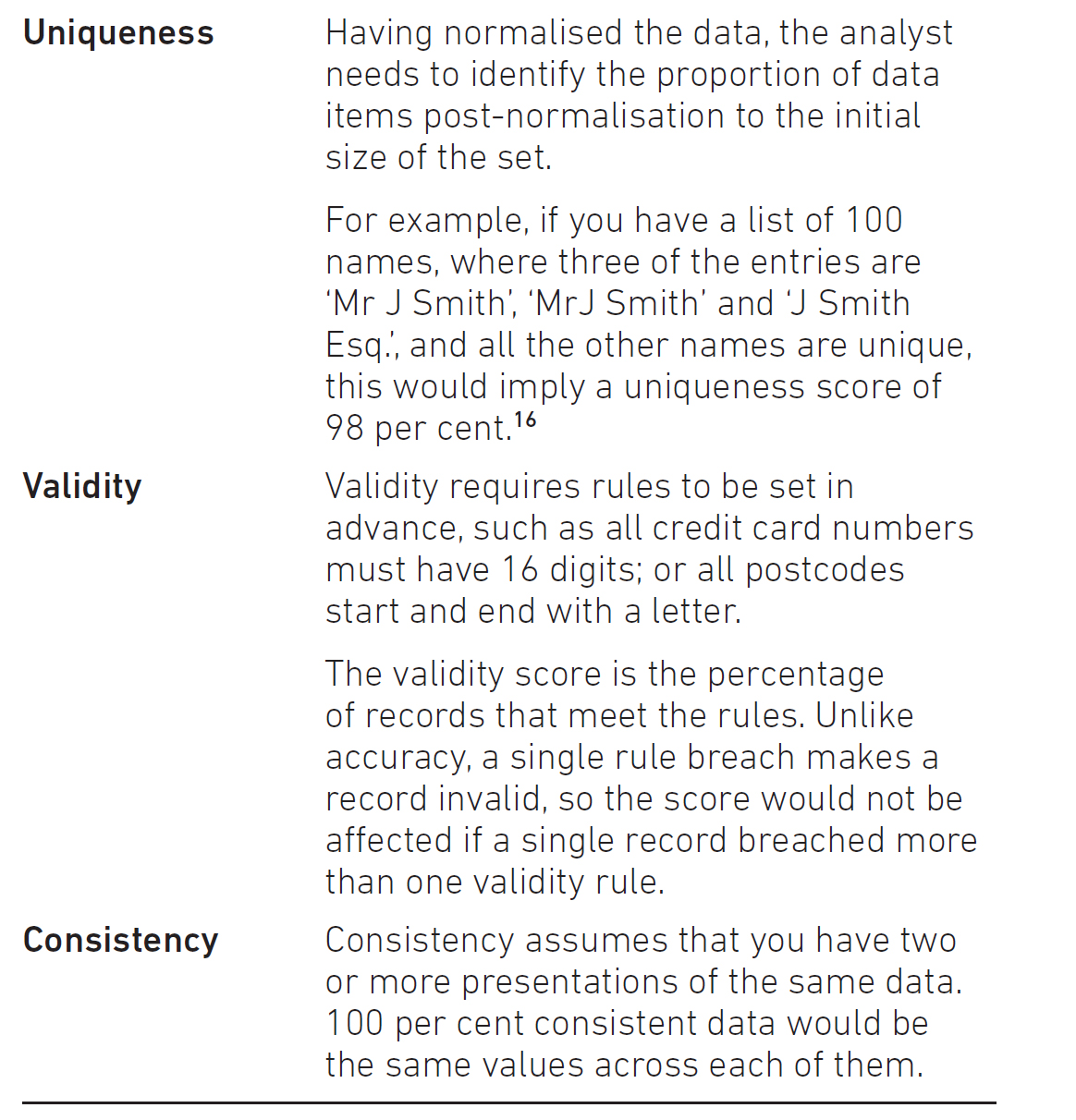

Typical data quality dimensions include:

• accuracy;

• appropriateness;

• timeliness (sometimes incorporated within appropriateness);

• completeness;

• uniqueness;

• validity;

• consistency.

Let’s work through what they mean.

Accuracy This is what most people mean when talking about data quality. Accuracy can be thought of as ‘correctness’.

Getting a single character error in an email address will likely cause the email not to go to the right place.15 For example, it would be bizarre to consider that 15 out of 16 characters are correct, so it is 94 per cent accurate. Rather, we would say that the email address in this case is inaccurate.
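This all-or-nothing view of accuracy can be sketched in Python. The email addresses below are made up for illustration and the function name is hypothetical; the sketch assumes that a trusted reference value is available for each record, which in practice is often the hard part.

```python
def accuracy_rate(recorded, reference):
    """Share of recorded values that exactly match the trusted reference."""
    if len(recorded) != len(reference):
        raise ValueError("recorded and reference must be the same length")
    if not recorded:
        return 0.0
    # A value is either right or wrong; partial character matches earn nothing.
    matches = sum(1 for r, t in zip(recorded, reference) if r == t)
    return matches / len(recorded)


# Hypothetical data: the second recorded address has one wrong character.
reference = ["ann@example.com", "bob@example.com"]
recorded = ["ann@example.com", "bob@exemple.com"]
rate = accuracy_rate(recorded, reference)
```

Here one of the two addresses is counted as inaccurate, even though almost all of its characters are correct.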

Appropriateness This considers if data analysts are looking at data relevant to the problem they are trying to solve.

If a shop owner wants to measure customers that enter the shop, they could monitor the colour of customers’ socks. This information could be 100 per cent accurate, but also entirely useless. Such data would be considered inappropriate.

When looking at large data sets, it is likely that much of the data will be useless for the task in hand. Being able to identify and extract useful data is one of the most challenging, and rewarding, parts of being a data analyst.

Timeliness Imagine that an ice cream seller is trying to estimate how much ice cream to purchase before a sunny day at the beach. It would be sensible to look at how much ice cream they had bought on previous occasions, and how much remained unsold. But if that ice cream seller looked at sales figures from six months earlier – an entirely different season – then the analysis would be misleading. This would be the case even if the data was highly accurate. This is an example of the timeliness dimension. Are we considering data in a relevant and useful timeframe?

At the extreme end of the timeliness spectrum, financial institutions involved in algorithmic and high-speed trading will require some data points to be within fractions of a second of the current time.
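A timeliness check can be as simple as keeping only the records that fall inside a relevant window. The sketch below is illustrative: the sales figures are invented, the field names are hypothetical, and the 30-day window is an arbitrary assumption. A seasonal business might prefer to compare the same weeks in previous years.

```python
from datetime import date, timedelta


def within_window(records, as_of, max_age_days):
    """Keep records dated within max_age_days before (and up to) as_of."""
    cutoff = as_of - timedelta(days=max_age_days)
    return [r for r in records if cutoff <= r["sale_date"] <= as_of]


# Hypothetical ice cream sales; the January figure is from a different season.
sales = [
    {"sale_date": date(2023, 1, 15), "units_sold": 12},
    {"sale_date": date(2023, 7, 8), "units_sold": 180},
    {"sale_date": date(2023, 7, 15), "units_sold": 210},
]
recent = within_window(sales, as_of=date(2023, 7, 20), max_age_days=30)
```

Only the two July records survive the filter, so the accurate but out-of-season January figure does not distort the estimate.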

Completeness Completeness considers whether we have all the data that we need. This does not mean taking all the data available; as discussed for appropriateness, it is a key skill to pick out the useful data.

Some of the incorrect predictions made in recent general elections have been blamed on incomplete data, such as not considering voter patterns in remote areas of the country. In a corporate context, common causes of incomplete data are when data has been copied from a spreadsheet (missing some rows or columns) and pasted elsewhere; or when a data transfer between systems has stopped before completion, so that not all of the data is found in the recipient system.
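Two simple completeness checks are sketched below, under the assumption that each record carries an identifier that can be compared between the sending and receiving systems. The identifiers, field names and function names are hypothetical.

```python
def missing_from_target(source_ids, target_ids):
    """Identifiers present in the source but absent from the target."""
    return sorted(set(source_ids) - set(target_ids))


def fill_rate(rows, field):
    """Share of rows where the given field is populated."""
    if not rows:
        return 0.0
    populated = sum(1 for row in rows if row.get(field) not in (None, ""))
    return populated / len(rows)


# Hypothetical transfer that stopped before the last-but-one record arrived.
missing = missing_from_target([101, 102, 103, 104], [101, 102, 104])

# Hypothetical customer rows, one of which has lost its postcode.
rows = [
    {"id": 101, "postcode": "AB1 2CD"},
    {"id": 102, "postcode": ""},
    {"id": 103, "postcode": "EF3 4GH"},
    {"id": 104, "postcode": "IJ5 6KL"},
]
postcode_fill = fill_rate(rows, "postcode")
```

The first check catches rows lost in a transfer; the second catches columns lost or left blank, such as after a partial copy and paste.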

On the night of 18 September 2008, a first-year law associate supporting the Barclays acquisition of assets from the stricken Lehman Brothers company reformatted a Microsoft Excel spreadsheet of critical contracts to be assumed and assigned in bankruptcy on the closing date of the Lehman/Barclays sale. This work was done long after normal business hours, just after 11.30 p.m.

On 19 September, the law firm produced the list of contracts based on the associate’s work the night before. There was a problem. The list contained 179 contracts that should not have been included. The sale closed on 22 September, with the overinclusive list of contracts.

The mistake was caught on 1 October. According to the various affidavits posted at court, the associate did not notice that the 179 contracts were marked as ‘hidden’ in Excel, and did not realise that those entries became ‘un-hidden’ when he globally reformatted the document.

The law firm had to file a motion before the bankruptcy court asking for relief from the final sale order due to mistake or excusable neglect.

(This account is based on Mystal 2008)

Uniqueness This refers to having records corresponding to ‘real world’ items. This itself can be subjective.

The Office for National Statistics (ONS) data for the most popular boys’ baby names in 2016 listed Muhammad as the eighth most popular name. However, this methodology does not include other spellings of what is effectively the same name, so Mohammed appeared in 31st place, Mohammad was 68th and other variants were also counted separately.

Combining these versions would take the name into first place. The ONS provide their own response to the question: https://visual.ons.gov.uk/the-popularity-of-the-name-muhammadmohammedmohammad/
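The effect of spelling variants on a uniqueness measure can be sketched as follows. The mapping of variants to a single canonical spelling is an assumption made for illustration, and deciding what belongs in that mapping is exactly the subjective part; the function name is hypothetical.

```python
def uniqueness_rate(values, canonical=None):
    """Distinct real-world items divided by the number of records."""
    canonical = canonical or {}
    if not values:
        return 1.0
    # Map each value to its canonical form before counting distinct items.
    distinct = {canonical.get(v, v) for v in values}
    return len(distinct) / len(values)


names = ["Muhammad", "Mohammed", "Mohammad", "Oliver"]

# Treating every spelling as its own name, all four records look unique.
naive = uniqueness_rate(names)

# Assumed mapping: the three spellings are treated as one name.
variants = {"Mohammed": "Muhammad", "Mohammad": "Muhammad"}
consolidated = uniqueness_rate(names, canonical=variants)
```

The same four records score as fully unique or only half unique depending on the mapping chosen, which is why the definition needs to be agreed with stakeholders before it is measured.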

Validity Validity considers whether the data meets some predefined criteria, but does not consider if the data is ‘correct’ or ‘accurate’. It is possible for data to be both valid and inaccurate.

The benefit of considering validity is that it is much easier to test for validity than for correctness. Validity can be expressed by some simple rules or constraints, whereas accuracy requires us to have the ‘correct answer’ to be checked against. This means that validity is also useful as a pre-check of data.

Recall when you last made an online purchase using a credit card. If you made an error in entering the credit card number, so there were too few or too many digits, the website would normally give you an error message – without checking the actual credit card number. The validity test of number of digits is enough for this first pass.
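A first-pass validity rule of this kind might look like the sketch below. The permitted range of 13 to 19 digits is an assumption for illustration, the function name is hypothetical, and the card numbers are made-up test values. Note that the check says nothing about whether the card actually exists.

```python
def has_valid_card_length(card_number, min_digits=13, max_digits=19):
    """True if the input is all digits and has a plausible length."""
    # Ignore the spaces and hyphens that people commonly type.
    digits = card_number.replace(" ", "").replace("-", "")
    return (
        digits.isascii()
        and digits.isdigit()
        and min_digits <= len(digits) <= max_digits
    )


ok = has_valid_card_length("4111 1111 1111 1111")       # 16 digits
too_short = has_valid_card_length("4111 1111 1111")     # 12 digits
not_numeric = has_valid_card_length("4111-1111-1111-111x")
```

A number can pass this rule and still be wrong, which is the difference between valid and accurate.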

Consistency This uses logic across multiple sources of the same or equivalent data to identify possible errors.

A retailer may compare a customer’s billing and delivery addresses. These do not have to be the same, but, where they are not, there could be an extra control put in place to ensure that the delivery address is legitimate. Taking this a step further, if we store people’s dates of birth in one place and their ages in another, then – assuming we have the correct current date – we should be able to test that the dates of birth and ages are consistent.

Another instance of consistency is where data is moved or copied from one place to another. For example, a system outputs data to a spreadsheet, which is merged with another spreadsheet. Each of these ‘jumps’ is known as a transformation, and each transformation carries a risk of introducing errors to the data. Consistency is when errors are not introduced.
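The date of birth and age comparison described above can be sketched in Python. The records and field names are hypothetical, and the sketch assumes the supplied current date is correct.

```python
from datetime import date


def age_on(date_of_birth, today):
    """Age in whole years on the given date."""
    years = today.year - date_of_birth.year
    # Subtract one if this year's birthday has not happened yet.
    if (today.month, today.day) < (date_of_birth.month, date_of_birth.day):
        years -= 1
    return years


def inconsistent_ages(records, today):
    """Identifiers of records whose stored age disagrees with the date of birth."""
    return [
        r["id"] for r in records
        if age_on(r["date_of_birth"], today) != r["age"]
    ]


# Hypothetical customer records.
customers = [
    {"id": 1, "date_of_birth": date(1990, 5, 10), "age": 34},
    {"id": 2, "date_of_birth": date(1985, 12, 25), "age": 39},
]
flagged = inconsistent_ages(customers, today=date(2024, 6, 1))
```

The second customer is flagged because their birthday has not yet occurred in the year being tested. The check shows that the two values disagree, but not which of them is wrong.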

Putting it all together The relevant data quality dimensions (or a combination of them) will change depending on the nature of the data and what the analyst wants to achieve with it.

It is vital that data analysts engage with stakeholders to understand what they require from their data. This may not be immediately apparent, and can often require some extended conversation or questioning to tease out what is truly of interest.

Measuring data quality

Once data analysts know what they are looking for, they can think about measuring it. Each dimension requires a different approach for measurement – and some of them can be difficult to measure.

Table 4.1 suggests approaches for each of the dimensions just discussed, but this is not an exhaustive list and there may be specific factors that apply to particular use cases.

Monitoring data quality

Having defined and measured our data quality, we now move to the third step: monitoring the outputs of the data quality measurement activity.

Sharing the raw data quality measurements may expose the correct and relevant numbers, but the real purpose of monitoring is to assess where action needs to be taken and to drive activity that mitigates or resolves the issue. If no action is to be taken, monitoring ensures that this is acknowledged as a conscious decision (for example, choosing to allocate resources to other activities).

Table 4.1 Suggested approaches for data quality dimensions

Data quality scores or measurements provide useful insight when measured over a period of time. For example:

• A small but steady deterioration in quality may not be noticed from month to month, but the trend can be observed (and so acted upon) over several months.

• A large change in the quality score may indicate a new issue (perhaps an underlying system has stopped working).
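Both patterns can be detected with very little code. The sketch below is illustrative only: the scores are invented, the function names are hypothetical, and the thresholds (a move of more than five points, or three consecutive falls) are arbitrary assumptions that would need agreeing with stakeholders.

```python
def sudden_changes(scores, threshold=5.0):
    """Positions where the score moved by more than threshold in one period."""
    return [
        i for i in range(1, len(scores))
        if abs(scores[i] - scores[i - 1]) > threshold
    ]


def steady_decline(scores, periods=3):
    """True if the score has fallen in each of the last `periods` periods."""
    if len(scores) < periods + 1:
        return False
    recent = scores[-(periods + 1):]
    return all(later < earlier for earlier, later in zip(recent, recent[1:]))


# Hypothetical monthly quality scores (per cent).
drifting = [98.0, 97.6, 97.1, 96.7]
outage = [98.0, 97.9, 85.0]

drift_flag = steady_decline(drifting)
drift_jumps = sudden_changes(drifting)
outage_jumps = sudden_changes(outage)
```

The drifting series never triggers the sudden-change rule, yet the trend rule catches it; the outage series is caught immediately by the sudden-change rule.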

Showing your outputs through a dashboard is a powerful method of generating buy-in from the data users and management. Dashboard design is outside the scope of this book, but a fundamental principle is that it must be designed to be meaningful to the audience, even if this is at the expense of sharing all the information you would like to share.

Improving data quality

The steps taken so far expose data issues; some are more important than others, and some are more complex or expensive to fix than others. The people who help data analysts to deal with these issues are discussed in the next section, but analysts need to give those people something to work with.

Issue trackers An issue tracker is used to record data issues and their implications, together with progress towards remediation. As usual, there are commercial tools available, such as the workflow tools commonly used by IT support desks and more focused data governance tools, but a basic tracker can be operated successfully in Microsoft Excel.

An issue tracker should include:

• A unique identifier for the issue.

• A description of the issue (data analysts may wish to additionally have a shorter ‘headline’).

• The impacts of the issue: people, process, cost (these may change over time as more is discovered about the issue and its resolution). Being able to put a financial impact on an issue is an extremely powerful way of driving a resolution – and of demonstrating the value of the analyst who resolved it.

• Who and when: who raised the issue, who last updated it, who closed it and who the key stakeholders are, with the relevant dates.

• Target date(s) for remediation of the issue (for example, it may be necessary to fix a report by a specific due date).

• The status of the issue (open, resolved, mitigated, dropped).

• A risk rating for the issue (red, amber, green).

It is good practice not to delete items from the tracker. Sometimes issues may be closed on the grounds of being immaterial, but then, when another event arises at a later time, these can be linked when considering what action to take.
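For analysts who prefer code to a spreadsheet, the fields listed above could be held in a structure like the one sketched below. This is one possible layout under stated assumptions, not a standard: the class, field names and example values are all hypothetical. Issues are closed by changing their status, never by removing them.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional

STATUSES = {"open", "resolved", "mitigated", "dropped"}
RISK_RATINGS = {"red", "amber", "green"}


@dataclass
class DataIssue:
    issue_id: str
    headline: str
    description: str
    impact: str
    raised_by: str
    raised_on: date
    stakeholders: list = field(default_factory=list)
    target_date: Optional[date] = None
    status: str = "open"
    risk_rating: str = "amber"
    last_updated_by: Optional[str] = None
    closed_by: Optional[str] = None
    linked_issue_ids: list = field(default_factory=list)

    def __post_init__(self):
        if self.status not in STATUSES:
            raise ValueError(f"unknown status: {self.status}")
        if self.risk_rating not in RISK_RATINGS:
            raise ValueError(f"unknown risk rating: {self.risk_rating}")

    def close(self, status, closed_by):
        """Close the issue; the record stays in the tracker."""
        if status not in STATUSES - {"open"}:
            raise ValueError(f"not a closing status: {status}")
        self.status = status
        self.closed_by = closed_by
        self.last_updated_by = closed_by


# The tracker is a dictionary keyed by identifier; entries are never deleted.
tracker = {}
issue = DataIssue(
    issue_id="DQ-001",
    headline="Missing postcodes",
    description="Some customer records were exported without a postcode.",
    impact="Deliveries delayed; cost not yet estimated.",
    raised_by="A. Analyst",
    raised_on=date(2024, 3, 1),
)
tracker[issue.issue_id] = issue
issue.close("mitigated", closed_by="B. Steward")
```

The `linked_issue_ids` field is there so that a later event can be tied back to an issue that was previously closed as immaterial.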

Linking the improvement of data quality to its monitoring, data analysts can create a dashboard from the issue tracker: this could demonstrate their success at closing data issues, and the financial benefits (money saved, or money made) from doing so.

ENGAGING WITH THE ORGANISATION – THE DATA COMMUNITY

Many data quality issues can be categorised as ‘problem exists between chair and keyboard’ (PEBCAK), but while people may often be the cause of issues, they will also lead you to solutions.

Even where there is no formal data governance in place in an organisation, there are often pockets of conscientious good practice. This may take the form of a post-data entry review or reconciliation, sometimes known as ‘four eyes testing’. The flaw here is that the remediation effort is unlikely to have considered the risk, impact and cost of resolution – so resources may not be used in an optimal way.

To engage people, they have to be given defined roles – roles that are viable alongside their existing day jobs. Putting too much work onto individuals will simply lead to work not being done, and data governance work, which may not yet have established its value, will be the first not to get done.

We will now define these roles together with some ways to help individuals fulfil them, and some reasons why this might not be happening. The roles may have different names in different organisations, but it should still be possible for the data analyst to identify them through their activities.

This is a story about four people named Everybody, Somebody, Anybody and Nobody.

There was an important job to be done and Everybody was sure that Somebody would do it.

Anybody could have done it, but Nobody did it.

Somebody got angry about that, because it was Everybody’s job.

Everybody thought Anybody could do it, but Nobody realized that Everybody wouldn’t do it.

It ended up that Everybody blamed Somebody when Nobody did what Anybody could have.

(Attributed to Charles Osgood’s poem, A Poem About Responsibility)

Data user

The first business role is that of a data user: anyone making use of data in a business. Data analysts can’t give specific responsibilities to data users in the organisation because they usually won’t have the resources to do so. They can, however, create an environment where data users are able to highlight data issues, and analysts are likely to find that data users around the business will have a backlog of issues to share.

What can help a data user to succeed?

A clear and non-complex way for data users to share knowledge of issues and be informed about progress of their issue and, where appropriate, mitigation or resolution.

What can prevent a data user from helping?

A complex or little-known process to flag data issues. A lack of response and, even where a mitigation or resolution has been found, poor communication of this.

Data steward

The next role is commonly referred to as a data steward. These are some of the most important allies that data analysts will have in the data community. These people understand the systems and processes that support data inside out, back to front and in reverse.

The data stewards will be the ones who can advise whether a problem is truly complex, and may also know the underlying issues. Where data flows between multiple sources, bringing together the relevant data stewards will greatly help the process of root cause analysis.

WHAT IS ROOT CAUSE ANALYSIS?

Root cause analysis is a problem-solving approach that traces a problem all the way back to its source.

The major benefit of this approach is that the root cause may have triggered other issues – as yet unidentified – and so fixing the problem at its root will resolve those issues as well, improving quality more widely.

Indicators that a person is likely to be a strong data steward

People known in the business for their technical or subject matter expertise to whom others will go for support. They will often have their own list of data quality issues that they would like to address.

What can go wrong when working with a data steward?

The data function not engaging with data stewards; or inability to get some quick wins (these are needed to gain the stewards’ confidence).

Data owner

The third role is that of the data owner. The data owner must be someone of sufficient seniority to make decisions and authorise budget or resource as required. Data owners may delegate much of their activity to their stewards, but they cannot pass on the responsibility or accountability.

Data owners are sometimes referred to as business owners, or even business data owners, to differentiate them from the IT professionals who have some level of system ownership.

Indicators of a successful data owner

A senior individual who is engaged and can advocate for good data practice with their peer group; someone who knows the business politics well and can help to navigate it.

Indicators of an ineffective data owner

A role holder who is there in name only; no budget or influence to be able to drive or sign off resolution activity; anyone who uses the phrases ‘gold plating’17 or ‘boiling the ocean’18 when it is proposed to enhance processes or control activities.

Governance committees

Engaging with multiple data owners and data stewards will quickly become very time-consuming, and prioritising the resolution of data issues will also be challenging unless you can bring the stakeholders together.

The senior governance committee for data should consist of the most significant data owners. An associated working group would comprise the most significant data stewards. In both cases, other attendees can attend by invitation. However, the working group should not be put in place until the senior governance committee agrees that it is needed. If not, then there is a risk that the working group has little to do and it fizzles out.

Terms of reference will need to be set out for each group, which include:

• committee purpose;

• membership and quorum;

• meeting frequency;

• lines of escalation and delegation;

• budget responsibility (if there is any);

• standing agenda.

It is critical that these committees are able to manage real issues early on so that credibility is established. The majority of failures in this respect are when the committee members do not feel that the group is adding value.

Given that the ownership of data lies in the business, a successful committee will have input from its business members. A meeting must not consist only of the central data function describing what they have, or haven’t, done.

DATA PROVENANCE

Where does data come from, and how does a data analyst know that it is any good, and that it hasn’t been corrupted on the way in? In all but the most simple organisations, data will enter from many sources, and move through many other data sources before being consumed. For example, customer data could be entered by shop staff into one system, by the call centre team into another one and directly by the customers themselves when shopping online. Changes to data (for example, resolving complaints) could subsequently be made by another team entirely.

Data could then flow out of those data entry systems into a data warehouse. Some may have a direct feed; others may need to be exported into a database or spreadsheet before being imported to the warehouse; yet others may require manual rekey into another system before import. Once extracted from the warehouse into a spreadsheet, that spreadsheet could be manipulated in all sorts of ways before being used to make a business decision.

Data lineage mappings

A solution to this uncertainty is the creation of a data lineage map. These provide a visual representation of how data gets from source to its usage. Mapping all data flows in an organisation can be a mammoth task, and the map produced will be so complex as to be unusable. Instead, data analysts can create mappings that relate to particular business activities.

The simple lineage in Figure 4.1 shows what happens when a customer buys an item online. Having ordered through the website, their data will go into a customer order database; however, some of this data goes direct (for example, what they ordered and when), while their billing data could go via a credit card company,19 and the address data also needs to go to the firm carrying out the delivery.
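A lineage like this can also be held as a simple graph, which makes it possible to ask where a given data store gets its data from. The sketch below is one reading of the online order example, not a transcription of the figure: the node names are hypothetical, and it assumes that the delivery firm receives the address from the customer order database.

```python
from collections import deque

# Each key feeds data to the places listed against it (assumed flows).
LINEAGE = {
    "website": ["customer_order_database", "credit_card_company"],
    "credit_card_company": ["customer_order_database"],
    "customer_order_database": ["delivery_firm"],
    "delivery_firm": [],
}


def upstream_sources(lineage, target):
    """Every place that data passes through on its way to the target."""
    # Invert the map so each node knows what feeds it.
    parents = {}
    for source, targets in lineage.items():
        for t in targets:
            parents.setdefault(t, set()).add(source)

    seen = set()
    queue = deque([target])
    while queue:
        node = queue.popleft()
        for parent in parents.get(node, ()):
            if parent not in seen:
                seen.add(parent)
                queue.append(parent)
    return seen


feeds_delivery = upstream_sources(LINEAGE, "delivery_firm")
feeds_website = upstream_sources(LINEAGE, "website")
```

If the delivery firm reports bad addresses, the upstream set is the list of places where the error could have been introduced.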

Finding and documenting the content

This can be the most interesting part of the process. Around your organisation, people know how to do their own jobs; engaging with these people enables you to understand the processes and join them up into a ‘big picture’. Frequently, no one in the business will have seen how complex the entire process is from end to end.

Figure 4.1 A simple data lineage map

This author was involved in a data lineage exercise relating to a particular business process. The initial response was that the lineage must have got something wrong as it was too big. Once satisfied that it was accurate, the team looked at each component of the process and found ways to simplify them. A positive result of this activity was the retirement of several spreadsheets; and doing so reduced the number of opportunities to introduce errors.

This role is best suited to a trained business analyst, who will have specific skills in terms of eliciting this information and documenting it properly. Some tips for data analysts doing this themselves include:

• Take the time to work with the business users – you want to extract all the detail.

• There is almost always a ‘right way’ of doing a process and the ‘real way’ that things get done, especially when there are exceptions. A good trigger question is to ask: ‘Do you always do things this way?’

Tools such as Microsoft Visio are often used to build data lineages. These outputs are frozen at a period in time, so it is important to have a regular review cycle. More recently, commercial tools have been developed that support collaboration; for example, any business user can update the data lineage and, once approved, the revised lineage is available to all. Some tools are also able to be linked directly to systems and can hence generate part of the data flows for analysts.

SUMMARY

In this chapter, we have seen how interest in and use of data and analytics has increased over past years, and how this has both positive and negative effects on the business. Governments have introduced legal and regulatory responses, most recently the GDPR, which has been in force since 25 May 2018. GDPR does not stop organisations working with personal data, but does require that care is taken as to why, where and how it is used.

This chapter also covered best practice considerations for data analysts: how to define, measure and monitor data quality, and why communicating and tracking issues matters so that required actions are taken.

1 See https://business.time.com/2012/06/26/orbitz-shows-higher-prices-to-mac-users/

2 See https://ico.org.uk/

3 See https://ico.org.uk/for-organisations/data-protection-act-2018/

4 From GDPR, see https://gdpr-info.eu/art-5-gdpr/ and https://eur-lex.europa.eu/eli/reg/2016/679/oj

5 See https://ico.org.uk/for-organisations/guide-to-the-general-data-protection-regulation-gdpr/key-definitions/what-is-personal-data/

6 See https://gdpr-info.eu/art-9-gdpr/

7 See https://gdpr-info.eu/art-16-gdpr/

8 See https://gdpr-info.eu/art-33-gdpr/

9 Information Commissioner’s Office, ‘Consent’ (as of April 2018), licenced under the Open Government Licence: https://ico.org.uk/for-organisations/guide-to-the-general-data-protection-regulation-gdpr/lawful-basis-for-processing/consent/

10 Opt-out is when your agreement is assumed, unless you say that you don’t agree. Opt-in is where you only receive material if you asked for it.

11 Article 6(1)(f) of the GDPR; Information Commissioner’s Office, ‘What is the “legitimate interests” basis?’ (as of October 2018), licenced under the Open Government Licence: https://ico.org.uk/for-organisations/guide-to-the-general-data-protection-regulation-gdpr/legitimate-interests/what-is-the-legitimate-interests-basis/

12 Recital 26 of GDPR: www.privacy-regulation.eu/en/recital-26-GDPR.htm

13 See www.twobirds.com/en/in-focus/general-data-protection-regulation/gdpr-tracker/deceased-persons

14 Six Sigma is a widely used process improvement methodology. The term comes from statistics, and represents near-perfection. In a Six Sigma process, there are just 3.4 defective features per million opportunities!

15 An exception being Gmail, which ignores full stops before the @; so [email protected] would get to the same mailbox as [email protected].

16 If you are wondering why this is 98 per cent and not 97 per cent, then consider that the three names consolidate to one, so only two terms are duplicates or non-unique.

17 Translation: things work well enough as they are, and you are trying to spend resources making them better than they need to be.

18 Translation: this sounds like a lot of work, so I’m going to say it’s impossible.

19 The Payment Card Industry Data Security Standard (PCI DSS) is a very stringent standard for organisations working with credit card data.