Chapter 7

Infrastructure Security and Controls

A well-designed security architecture seeks to eliminate, or at least minimize, the number of points where a single failure in infrastructure security and controls can lead to a breach. The controls that make up each of the layers of security for an organization can include technical controls, administrative controls, and physical controls that prevent, detect, or correct issues.

In the first half of this chapter, we will explore defense-in-depth designs and layered security concepts. We will then look at how those concepts are implemented via network architecture designs, asset and change management, segmentation, and a variety of other methods. Together, these techniques and design elements can create a complete layered security design, resulting in an effective defense-in-depth strategy.

In the second half of this chapter, we will explore operational security controls ranging from permissions to technical controls like network access control and malware signature development.

Understanding Defense-in-Depth

The foundation of most security designs relies on the concept of defense-in-depth. In other words, a single defensive measure should not be the only control preventing an attacker (or a mistake!) from creating a problem. Since there are many potential ways for a security breach to occur, a wide range of defenses must be layered together to ensure that a failure in one does not endanger sensitive data, systems, or networks.

Layered Security

One of the most important concepts for defense-in-depth is the idea of layered security. This means that each layer of security includes additional protections that help prevent a hole or flaw in another layer from allowing an attacker in. Figure 7.1 shows a high-level conceptual diagram of a sample layered security approach. In this design, data security is at the core, where policies, practices, and data classification would be implemented. Each additional layer adds protections, from application layer security, which protects the methods used to access the data, to endpoint system security like data loss prevention software.

As you can see, a layered approach can combine technologies that are specifically appropriate to the layer where they reside with technologies that are a fit for multiple layers. Configurations may differ based on the specific needs found at that layer in the security model. For example, data security might require full disk encryption for laptops and mobile devices, whereas desktops might only leverage data loss prevention software.

FIGURE 7.1 Layered security network design

Layered security can be complex to design. The interactions between security controls, organizational business needs, and usability all require careful attention during the design of a layered security approach.

Zero Trust

One major concept in modern security architecture design is the idea of zero trust. The zero trust concept removes the trust that used to be placed in systems, services, and individuals inside security boundaries. In a zero trust environment, each requested action must be verified and validated before it is allowed to occur.

Zero trust moves away from the strong perimeter as the primary security layer and instead moves even further toward a deeply layered security model where individual devices and applications, as well as user accounts, are part of the security design. As perimeters have become increasingly difficult to define, zero trust designs have become more common to address the changing security environment that modern organizations exist in.

Implementing zero trust designs requires a blend of technologies, processes, and policies to manage, monitor, assess, and maintain a complex environment.

Segmentation

Providing a layered defense often involves the use of segmentation, or separation. Physical segmentation involves running on separate physical infrastructure or networks. System isolation is handled by ensuring that the infrastructure is separated, and can go as far as using an air gap, which ensures that there is no connection at all between the infrastructures.

Virtual segmentation takes advantage of virtualization capabilities to separate functions to virtual machines or containers, although some implementations of segmentation for virtualization also run on separate physical servers in addition to running separate virtual machines.

Network segmentation or compartmentalization is a common element of network design. It provides a number of advantages:

- The number of systems that are exposed to attackers (commonly called the organization's attack surface) can be reduced by compartmentalizing systems and networks.

- It can help to limit the scope of regulatory compliance efforts by placing the systems, data, or unit that must be compliant in a more easily maintained environment separate from the rest of the organization.

- In some cases, segmentation can help increase availability by limiting the impact of an issue or attack.

- Segmentation is used to increase the efficiency of a network. Larger numbers of systems in a single segment can lead to network congestion, making segmentation attractive as networks increase in size.

Network segmentation can be accomplished in many ways, but for security reasons, a firewall with a carefully designed ruleset is typically used between network segments with different levels of trust or functional requirements. Network segmentation also frequently relies on routers and switches that support VLAN tagging. In some cases where segmentation is desired and more nuanced controls are not necessary, segmentation is handled using only routers or switches.
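To make the idea of a carefully designed ruleset between segments more concrete, the following is a minimal sketch of first-match rule evaluation with a default deny. The segment addresses, the rule list, and the names Rule and evaluate are all hypothetical and chosen only for illustration; real firewalls offer far richer matching than this.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import Optional


@dataclass(frozen=True)
class Rule:
    action: str           # "allow" or "deny"
    source: str           # source network in CIDR notation
    destination: str      # destination network in CIDR notation
    port: Optional[int]   # destination port, or None for any port


# Hypothetical segments: 10.0.10.0/24 (users), 10.0.20.0/24 (protected
# application segment), and 10.0.99.5 (a jump box).
RULES = [
    Rule("allow", "10.0.10.0/24", "10.0.20.0/24", 443),
    Rule("allow", "10.0.99.5/32", "10.0.20.0/24", 22),
]


def evaluate(rules, src, dst, port):
    """Return the action of the first matching rule, or "deny" if none match."""
    for rule in rules:
        if (ip_address(src) in ip_network(rule.source)
                and ip_address(dst) in ip_network(rule.destination)
                and rule.port in (None, port)):
            return rule.action
    return "deny"  # default deny: traffic that is not explicitly allowed is dropped
```

In this sketch, a user workstation can reach the protected segment on port 443 but not on port 22, while the jump box can reach it on port 22. Anything not listed falls through to the default deny.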

One common solution for access into segmented environments like these is the use of a jump box (sometimes called a jump server), which is a system that resides in a segmented environment and is used to access and manage the devices in the segment where it resides. Jump boxes span two different security zones and should thus be carefully secured, managed, and monitored.

In addition to jump boxes, another common means of providing remote access as well as access into segmented networks from different security zones is through a virtual private network (VPN). Although VPNs do not technically have to provide an encryption layer to protect the traffic they carry, almost all modern implementations will use encryption while providing a secure connection that makes a remote network available to a system or device.

Figure 7.2 shows an example of a segmented network with a protected network behind security devices, with a VPN connection to a jump box allowing access to the protected segment.

FIGURE 7.2 Network segmentation with a protected network

Network Architecture

Understanding how your network is designed, what devices exist on it and what their purposes and capabilities are, and what controls you can apply using the devices is critical to securing a network. Traditional physical networks, software-defined networks, and virtual networks exist in parallel in many organizations, and you will need to understand how each of these technologies can impact the architecture and security posture of your organization.

Physical Network Architectures

Physical network architecture is composed of the routers, switches, security devices, cabling, and all the other network components that make up a traditional network. You can leverage a wide range of security solutions on a physical network, but common elements of a security design include the following:

- Firewalls that control traffic flow between networks or systems.

- Intrusion prevention systems (IPSs), which can detect and stop attacks, and intrusion detection systems (IDSs), which only alarm or notify when attacks are detected.

- Content filtering and caching devices that are used to control what information passes through to protected devices.

- Network access control (NAC) technology that controls which devices are able to connect to the network and which may assess the security state of devices or require other information before allowing a connection.

- Network scanners that can identify systems and gather information about them, including the services they are running, patch levels, and other details about the systems.

- Unified threat management (UTM) devices that combine a number of these services, often including firewalls, IDSs/IPSs, content filtering, and other security features.

As you prepare for the CySA+ exam, you should familiarize yourself with these common devices and their basic functions so that you can apply them in a network security design.

Software-Defined Networks

Software-defined networking (SDN) makes networks programmable. Using SDN, you can control networks centrally, which allows management of network resources and traffic with more intelligence than a traditional physical network infrastructure. Software-defined networks provide information and control via APIs (application programming interfaces) like OpenFlow, which means that network monitoring and management can be done across disparate hardware and software vendors.

Since SDN allows control via APIs, API security as well as secure code development practices are both important elements of an SDN implementation.

In addition to organizationally controlled SDN implementations, software-defined network wide area networks (SDN-WANs) are an SDN-driven service model where providers use SDN technology to provide network services. They allow blended infrastructures that may combine a variety of technologies behind the scenes to deliver network connectivity to customers. SDN-WAN implementations often provide encryption but introduce risks, including vulnerabilities of the SDN orchestration platform, risks related to multivendor network paths and control, and of course, availability and integrity risks as traffic flows through multiple paths.

Virtualization

Virtualization uses software to run virtual computers on underlying real hardware. This means that you can run multiple systems, running multiple operating systems, all of which act like they are on their own hardware. This approach provides additional control of things like resource usage and what hardware is presented to the guest operating systems, and it allows efficient use of the underlying hardware because you can leverage shared resources.

Virtualization is used in many ways. It is used to implement virtual desktop infrastructure (VDI), which runs desktop operating systems like Windows 10 on central hardware and streams the desktops across the network to systems. Many organizations virtualize almost all their servers, running clusters of virtualization hosts that host all their infrastructure. Virtual security appliances and other vendor-provided virtual solutions are also part of the virtualization ecosystem.

The advantages of virtualization also come with some challenges for security professionals who must now determine how to monitor, secure, and respond to issues in a virtual environment. Much like the other elements of a security design that we have discussed, this requires preplanning and effort to understand the architecture and implementation that your organization will use.

Containerization provides an alternative to virtualizing an entire system, and instead permits applications to be run in their own environment with their own required components, such as libraries, configuration files, and other dependencies, in a dedicated container. Kubernetes and Docker are examples of containerization technologies.

Containerization allows for a high level of portability, but it also creates new security challenges. Traditional host-based security may work for the underlying containerization server, but the containers themselves need to be addressed differently. At the same time, since many containers run on the same server, threats to the host OS can impact many containerized services. Fortunately, tools exist to sign container images and to monitor and patch containers. Beyond these tools, traditional hardening, application and service monitoring, and auditing tools can be useful.

When addressing containerized systems, bear in mind the shared underlying host as well as the rapid deployment models typically used with containers. Security must be baked into the service and software development life cycle as well as the system maintenance and management process.

In a broad sense, serverless computing describes cloud computing, but the term is currently used most often for technology sometimes called function as a service (FaaS). In essence, serverless computing relies on a system that executes functions as they are called. Amazon's AWS Lambda, Google's Cloud Functions, and Azure Functions are all examples of serverless computing FaaS implementations. In these cases, security models typically address the functions like other code, meaning that the same types of controls used for software development need to be applied to the function-as-a-service environment. In addition, controls appropriate to cloud computing environments such as access controls and rights, as well as monitoring and resource management capabilities, are necessary to ensure a secure deployment.

Networking in virtual environments may combine traditional physical networks between virtualization or containerization hosts, and then virtual networking that runs inside the virtual environment. If you don't plan ahead, this can make segments of your network essentially invisible to your monitoring tools and security systems. As you design virtualized environments, make sure that you consider who controls the virtual network connectivity and design, how you will monitor it, and use the same set of security controls that you would need to apply on a physical network.

Asset and Change Management

Securing assets effectively requires knowing what assets you have and what their current state is. Those paired requirements drive organizations to implement asset management tools and processes, and to apply change management methodology and tools to the software and systems that they have in their asset inventory.

In addition to having an inventory of what devices, systems, and software they have, organizations often use asset tagging to ensure that their assets can be inventoried and, in some cases, returned if they are misplaced or stolen. Asset tagging can help discourage theft, help identify systems more easily, and make day-to-day support work easier for support staff, who can quickly look up a system or device in an asset inventory list to determine what it is, who the device was issued to, and what its current state should be.

Logging, Monitoring, and Validation

Layered security requires appropriate monitoring, alerting, and validation. Logging systems in a well-designed layered security architecture should ensure that logs are secure and are available centrally for monitoring. Monitoring systems need to have appropriate alert thresholds set and notification capabilities that match the needs of the organization.

Most security designs implement a separate log server, either in the form of a security information and event management (SIEM) device or as a specialized log server. This design allows the remote log server to act as a bastion host, preserving unmodified copies of logs in case of compromise and allowing for central analysis of the log data.
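As a rough illustration of sending logs to a separate log server, the sketch below uses Python's standard logging module to forward an application's events to a remote syslog listener. The function name build_forwarding_logger, the logger name, and the host you pass in are assumptions for the example; your SIEM or log server will have its own supported ingestion methods.

```python
import logging
import logging.handlers


def build_forwarding_logger(name, host, port=514):
    """Return a logger that forwards records to a remote syslog server.

    SysLogHandler sends over UDP by default. The host and port are
    placeholders for wherever your central log server listens.
    """
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    handler = logging.handlers.SysLogHandler(address=(host, port))
    handler.setFormatter(logging.Formatter("%(name)s: %(levelname)s %(message)s"))
    logger.addHandler(handler)
    return logger


# Example use (hypothetical server address):
#   log = build_forwarding_logger("auth-service", "127.0.0.1")
#   log.warning("failed login for user %s", "alice")
```

Because the copy on the log server is outside the reach of an attacker who controls only the originating host, it can still be analyzed after a compromise. Plain UDP syslog is neither encrypted nor authenticated, so a production design would likely add transport protection.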

In addition to monitoring and logging capabilities, configuration management and validation capabilities are important. Tools like Microsoft's Endpoint Manager or Jamf's Jamf Pro offer the ability to set and validate system settings across networks of varying types of devices, thus ensuring that their configurations and installed software match institutional expectations.

Encryption

Both encryption and hashing are critical to many of the controls found at each of the layers we have discussed. They play roles in network security, host security, and data security, and they are embedded in many of the applications and systems that each layer depends on.

This makes using current, secure encryption techniques and ensuring that proper key management occurs critical to a layered security design. When reviewing security designs, it is important to identify where encryption (and hashing) are used, how they are used, and how both the encryption keys and their passphrases are stored. It is also important to understand when data is encrypted and when it is unencrypted—security designs can fail because the carefully encrypted data that was sent securely is unencrypted and stored in a cache or by a local user, removing the protection it relied on during transit.

Certificate Management

Managing digital security certificates used to mostly be an issue for system and application administrators who were responsible for the SSL certificates that their web and other services used. As digital certificates have become more deeply integrated into service architectures, including the use of certificates for workstations and other endpoints and for access to cloud service providers, certificate management has become a broader issue.

The basic concepts behind certificate management remain the same, however:

- Keeping private keys and passphrases secure is of the utmost importance.

- Ensuring that systems use and respect certificate revocations is necessary.

- Managing certificate life cycles helps to prevent expired certificates from causing issues and ensures that they are replaced when necessary.

- Central management makes responses to events like compromised keys or changes in certificate root vendors less problematic.

If your organization uses certificates, you should ensure that they are tracked and managed, and that you have a plan in place for what would happen if you needed to change them or if they were compromised.
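One small piece of life cycle management, checking how close a certificate is to expiring, can be sketched as follows. The example assumes you already have the certificate's notAfter value as a string in the format that Python's ssl module reports (for instance, from the dictionary returned by getpeercert()). The function names and the 30-day warning threshold are assumptions for illustration.

```python
import ssl

SECONDS_PER_DAY = 86400


def days_until_expiry(not_after, now_epoch):
    """Whole days between now_epoch and the certificate's notAfter time.

    not_after uses the ssl module's certificate time format, for example
    "Jan  5 09:34:43 2018 GMT". A negative result means the certificate
    has already expired.
    """
    expires_epoch = ssl.cert_time_to_seconds(not_after)
    return (expires_epoch - now_epoch) // SECONDS_PER_DAY


def needs_renewal(not_after, now_epoch, warn_days=30):
    """True if the certificate expires within warn_days or has expired."""
    return days_until_expiry(not_after, now_epoch) <= warn_days
```

A check like this would typically run on a schedule against an inventory of certificates, passing time.time() as now_epoch, so that replacements can be planned before anything expires.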

Active Defense

Active defense describes offensive actions taken to counter adversaries. These might include active responses to incursions to limit damage by shutting down systems, removing accounts, or otherwise responding to attacks and compromises. They can also include incident response and forensic activities that can provide additional information about how the attackers breached your defenses.

Threat intelligence, threat hunting, and countermeasure design and implementation are also part of active defenses. Continuously evaluating your security posture and controls and adjusting them to meet the threats that your organization faces over time is the best approach to building a strong foundation for active defense.

Honeypots

One technology used in some active defenses is a honeypot. Honeypots are systems that are designed to look like an attractive target for an attacker. They capture attack traffic, techniques, and other information as attackers attempt to compromise them. This provides both insight into attacker tools and methodologies and clues to what attackers and threats might target your organization.

Infrastructure Security and the Cloud

The shift to use of cloud services throughout organizations has not only shifted the perimeter and driven the need for zero trust environments, but has also created a need for organizations to update their security practices for a more porous, more diverse operating environment.

Unlike on-premises systems, the underlying environment provided by cloud service providers is not typically accessible to security practitioners to configure, test, or otherwise control. That means that securing cloud services requires a different approach.

With most software as a service (SaaS) and platform as a service (PaaS) vendors, security will primarily be tackled via contractual obligations. Configurations and implementation options can create security challenges, and identity and access management is also important in these environments.

Infrastructure as a service (IaaS) vendors like AWS, Azure, Google, and others provide more access to infrastructure, and thus some of the traditional security concerns around operating system configuration, management, and patching will apply. Similarly, services and applications need to be installed, configured, and maintained in a secure manner. Cloud providers often provide additional security-oriented services that may be useful in their environment and which may replace or supplement the tools that you might use on-premises.

Many cloud vendors will provide audit and assessment results upon request, often with a required nondisclosure agreement before they will provide the information.

When considering on-premises versus cloud security, you should review what options exist in each environment, which threats apply to both environments, and which, if any, differ. Assessing the security capabilities, posture, threats, and risks that apply to or are specific to your cloud service provider is also an important task.

Virtual private cloud (VPC) is an option delivered by cloud service providers that builds an on-demand semi-isolated environment. A VPC typically exists on a private subnet and may have additional security to ensure that intersystem communications remain secure.

Another cloud security tool is a cloud access security broker (CASB). CASB tools are policy enforcement points that can exist either locally or in the cloud, and enforce security policies when cloud resources and services are used. CASBs can help with data security, antimalware functionality, service usage and access visibility, and risk management. As you might expect with powerful tools, a CASB can be very helpful but requires careful configuration and continued maintenance.

Improving Security by Improving Controls

Security designs rely on controls that can help prevent, detect, counteract, or limit the impact of security risks. Controls are typically classified based on two categorization schemes: how they are implemented, or when they react relative to the security incident or threat. Classifying controls based on implementation type is done using the following model:

- Technical controls include firewalls, intrusion detection and prevention systems, network segmentation, authentication and authorization systems, and a variety of other systems and technical capabilities designed to provide security through technical means.

- Administrative controls (sometimes called procedural controls) involve processes and procedures like those found in incident response plans, account creation and management, as well as awareness and training efforts.

- Physical controls include locks, fences, and other controls that control or limit physical access, as well as controls like fire extinguishers that can help prevent physical harm to property.

Classification by when the control acts uses the following classification scheme:

- Preventive controls are intended to stop an incident from occurring by taking proactive measures to stop the threat. Preventive controls include firewalls, training, and security guards.

- Detective controls work to detect an incident and to capture information about it, allowing a response. Alarms and notifications are examples of detective controls.

- Corrective controls either remediate an incident or act to limit how much damage can result from an incident. Corrective controls are often used as part of an incident response process. Examples of corrective controls include patching, antimalware software, and system restores from backups.

Layered Host Security

Endpoint systems, whether they are laptops, desktop PCs, or mobile devices, can also use a layered security approach. Since individual systems are used by individual users for their day-to-day work, they are often one of the most at-risk parts of your infrastructure and can create a significant threat if they are compromised.

Layered security at the individual host level typically relies on a number of common security controls:

- Passwords or other strong authentication help ensure that only authorized users access hosts.

- Host firewalls and host intrusion prevention software limit the network attack surface of hosts and can also help prevent undesired outbound communication in the event of a system compromise.

- Data loss prevention software monitors and manages protected data.

- Whitelisting or blacklisting software can allow or prevent specific software packages and applications from being installed or run on the system.

- Antimalware and antivirus packages monitor for known malware as well as behavior that commonly occurs due to malicious software.

- Patch management and vulnerability assessment tools are used to ensure that applications and the operating system are fully patched and properly secured.

- System hardening and configuration management ensures that unneeded services are turned off, that good security practices are followed for operating system–level configuration options, and that unnecessary accounts are not present.

- Encryption, either at the file level or full-disk encryption, may be appropriate to protect the system from access if it is lost or stolen or if data at rest needs additional security.

- File integrity monitoring tools monitor files and directories for changes and can alert an administrator if changes occur.

- Logging of events, actions, and issues is an important detective control at the host layer.

The host security layer may also include physical security controls to help prevent theft or undesired access to the systems themselves.
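The file integrity monitoring control in the list above can be sketched in a few lines: record a hash for every file in a directory, then compare a later snapshot against that baseline. This is only an illustration of the idea; the function names are hypothetical, and real tools also watch metadata such as ownership and permissions and protect the baseline itself from tampering.

```python
import hashlib
from pathlib import Path


def hash_file(path):
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_baseline(directory):
    """Map each file's path (relative to directory) to its hash."""
    root = Path(directory)
    return {str(p.relative_to(root)): hash_file(p)
            for p in sorted(root.rglob("*")) if p.is_file()}


def compare(baseline, current):
    """Report files added, removed, or modified since the baseline."""
    return {
        "added": sorted(set(current) - set(baseline)),
        "removed": sorted(set(baseline) - set(current)),
        "modified": sorted(name for name in baseline.keys() & current.keys()
                           if baseline[name] != current[name]),
    }
```

Running build_baseline once on a known-good system and again later, then passing both results to compare, yields the list of changes an administrator would be alerted to.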

Permissions

Permissions are the access rights or privileges given to users, systems, applications, or devices. Permissions are often granted based on roles in organizations to make rights management easier. You are likely familiar with permissions like read, write, and execute.

Linux permissions can be seen using the ls command. In Figure 7.3 you can see a file listing. At the left is a -, which means this is a file; a d would indicate a directory. Next are three sets of three characters, which can be r for read, w for write, x for execute, or - for no permission. These three sets define what the user can do, what the group can do, and what everyone else can do.

FIGURE 7.3 Linux permissions
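If you want to read the same permission string programmatically, Python's standard stat module can render a numeric file mode in the form that ls uses, where a dash marks a permission that is not granted. The helper name describe_mode below is hypothetical; it simply splits that string into the file type and the user, group, and other sets.

```python
import stat


def describe_mode(mode):
    """Split an ls-style permission string into its parts.

    mode is a numeric file mode such as os.stat(path).st_mode.
    """
    text = stat.filemode(mode)  # for example "-rw-r--r--"
    if text[0] == "d":
        kind = "directory"
    elif text[0] == "-":
        kind = "file"
    else:
        kind = "other"
    return {
        "type": kind,
        "user": text[1:4],
        "group": text[4:7],
        "other": text[7:10],
    }
```

For a regular file with mode 644, the user set is rw- and the group and other sets are both r--.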

Permissions exist in many places such as software packages, operating systems, and cloud services. Building appropriate permissions models for users and their roles is a critical part of a strong security control model.

Whitelisting and Blacklisting

Whitelisting and blacklisting are important concepts for security professionals who are implementing controls. Whitelisting techniques rely on building a list of allowed things, whether they are IP addresses in a firewall ruleset, software packages, or something else. Blacklisting takes the opposite approach and specifically prohibits things based on entries in a list.

Whitelisting can be difficult in some scenarios because you have to have a list of all possible needed entries on your permitted list. Whitelisting software packages for an entire enterprise can be challenging for this reason. Blacklisting has its own challenges, particularly in cases where using broad definitions in entries can lead to inadvertent blocks.

Despite these challenges, whitelisting and blacklisting are both commonly used techniques.
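The difference between the two approaches comes down to what happens to anything that is not on the list. The sketch below applies both to IP addresses; the function names and the addresses (taken from ranges reserved for documentation) are assumptions for the example.

```python
from ipaddress import ip_address, ip_network


def allowed_by_whitelist(address, whitelist):
    """Permit only addresses that fall inside a listed network."""
    addr = ip_address(address)
    return any(addr in ip_network(entry) for entry in whitelist)


def allowed_by_blacklist(address, blacklist):
    """Permit everything except addresses inside a listed network."""
    addr = ip_address(address)
    return not any(addr in ip_network(entry) for entry in blacklist)


# Hypothetical lists for illustration.
WHITELIST = ["192.0.2.0/24"]
BLACKLIST = ["198.51.100.0/24"]
```

With these lists, an unlisted address such as 203.0.113.9 is rejected by the whitelist but accepted by the blacklist. Widening a blacklist entry, for example from a /24 to a /16, shows how a broad definition can inadvertently block addresses that were never meant to be covered.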

Technical Controls

Technical controls use technology to implement safeguards. Technical controls can be implemented in software, hardware, or firmware, and they typically provide automated protection to the systems where they are implemented.

For the CySA+ exam, you should be familiar with the basics of the following types of security devices and controls:

- Firewalls that block or allow traffic based on rules that use information like the source or destination IP address, port, or protocol used by traffic. They are typically used between different trust zones. There are a number of different types of firewall technologies, as well as both host and network firewall devices.

- Intrusion prevention systems (IPSs) are used to monitor traffic and apply rules based on behaviors and traffic content. Like firewalls, IPSs and IDSs (intrusion detection systems) rely on rules, but both IPSs and IDSs typically inspect traffic at a deeper level, paying attention to content and other details inside the packets themselves. Although the CySA+ exam materials reference only IPSs that can stop unwanted traffic, IDSs that can only detect traffic and alert or log information about it are also deployed as a detective control. An IPS may also be configured with rules that do not take action when such an action might cause issues.

- Data loss prevention (DLP) systems and software work to protect data from leaving the organization or systems where it should be contained. A complete DLP system targets data in motion, data at rest and in use, and endpoint systems where data may be accessed or stored. DLP relies on identifying the data that should be protected and then detecting when leaks occur, which can be challenging when encryption is frequently used between systems and across networks. This means that DLP installations combine endpoint software and various means of making network traffic visible to the DLP system.

- Endpoint detection and response (EDR) is a relatively new term for systems that provide continuous monitoring and response to advanced threats. They typically use endpoint data gathering and monitoring capabilities paired with central processing and analysis to provide high levels of visibility into what occurs on endpoints. This use of data gathering, search, and analysis tools is intended to help detect and respond to suspicious activity. The ability to handle multiple threat types, including ransomware, malware, and data exfiltration, is also a common feature for EDR systems.

- Network access control (NAC) is a technology that requires a system to authenticate or provide other information before it can connect to a network. NAC operates in either a preadmission or postadmission mode, either checking systems before they are connected or checking user actions after systems are connected to the network. NAC solutions may require an agent or software on the connecting system to gather data and handle the NAC connection process, or they may be agentless. When systems do not successfully connect to a NAC-protected network, or when an issue is detected, they may be placed into a quarantine network where they may have access to remediation tools or an information portal, or they may simply be prevented from connecting.

- Sinkholing redirects traffic from its original destination to a destination of your choice. Although the most common implementation of sinkholing is done via DNS to prevent traffic from being sent to malicious sites, you may also encounter other types of sinkholing.

- Port security is a technology that monitors the MAC (hardware) addresses of devices connecting to switch ports and allows or denies them access to the network based on their MAC address. A knowledgeable attacker may spoof a MAC address from a trusted system to bypass port security.

- Sandboxing refers to a variety of techniques that place untrusted software or systems into a protected and isolated environment. Sandboxing is often used for software testing and will isolate an application from the system, thus preventing it from causing issues if something goes wrong. Virtualization technologies can be used to create sandboxes for entire systems, and organizations that test for malware often use heavily instrumented sandbox environments to determine what a malware package is doing.
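As one illustration from the list above, DNS-based sinkholing can be sketched as a resolver wrapper that answers with an address you control whenever a requested name, or any of its parent domains, is on a block list. Everything here is hypothetical: the sinkhole address, the blocked domain, and the upstream callable stand in for whatever your DNS infrastructure actually provides.

```python
# Hypothetical values: an address from a documentation range standing in for
# an internal sinkhole server, and a made-up malicious domain.
SINKHOLE_ADDRESS = "192.0.2.53"
BLOCKED_DOMAINS = {"malicious.example"}


def is_blocked(name, blocked):
    """True if the name or any parent domain appears in the blocked set."""
    labels = name.lower().rstrip(".").split(".")
    return any(".".join(labels[i:]) in blocked for i in range(len(labels)))


def resolve(name, upstream, blocked=BLOCKED_DOMAINS):
    """Return the sinkhole address for blocked names, else ask upstream.

    upstream is any callable that maps a hostname to an address.
    """
    if is_blocked(name, blocked):
        return SINKHOLE_ADDRESS
    return upstream(name)
```

Clients that look up a blocked name are sent to the sinkhole instead of the malicious site, and the sinkhole's own logs then show which internal systems attempted the connection.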

Malware Signatures

Malware signatures used to be simply composed of recognizable file patterns or hashes that could be checked to see if a given file or files matched those from known malware. As malware has become more complex, obfuscation techniques such as mutation, expanding or shrinking code, and register renaming change the malware to defeat this kind of matching, making traditional signature-based identification less successful.

Signatures can be created by analyzing the malware and creating hashes or other comparators that can be checked to see if packages match.
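A minimal sketch of hash-based signature matching, using Python's standard `hashlib` module, might look like the following. The sample bytes and the `KNOWN_BAD` set are hypothetical placeholders rather than real malware data, and real antimalware products use far richer signature formats.

```python
import hashlib


def sha256_signature(data: bytes) -> str:
    """Return the SHA-256 digest of a file's contents as a hex string."""
    return hashlib.sha256(data).hexdigest()


# Hypothetical stand-in for a captured malware sample.
known_sample = b"MZ\x90\x00 example payload"

# Signature set built by hashing known samples.
KNOWN_BAD = {sha256_signature(known_sample)}


def matches_known_malware(data: bytes) -> bool:
    """Check whether a file's hash matches a known-malware signature."""
    return sha256_signature(data) in KNOWN_BAD


# A single appended byte, as a crude stand-in for a mutated variant.
mutated_sample = known_sample + b"\x00"
```

Here `matches_known_malware(known_sample)` is true, but the one-byte variant in `mutated_sample` no longer matches, which illustrates why hash-only signatures struggle against malware that changes itself.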

Malware identification now relies on multiple layers of detection capabilities that look at more than just file signatures. Behavior-based detection looks at the actions that an executable takes such as accessing memory or the filesystem, changing rights, or otherwise performing suspicious actions. Looking at behaviors also helps identify polymorphic viruses, which change themselves so that simply hashing their binaries will not allow them to be identified. Now antimalware software may even roll back actions that the malware took while it was being monitored. This type of monitoring is often called dynamic analysis.
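To make the idea of behavior-based detection more concrete, here is a deliberately simplified sketch that scores the actions observed while a program runs. The action names, weights, and threshold are all assumptions invented for this example; commercial tools rely on much more sophisticated models.

```python
# Hypothetical action names and weights, invented for illustration.
SUSPICIOUS_WEIGHTS = {
    "modify_startup_entry": 3,
    "escalate_privileges": 4,
    "write_to_other_process_memory": 4,
    "mass_file_rename": 5,
}

# Hypothetical score at or above which the program is flagged.
ALERT_THRESHOLD = 7


def behavior_score(observed_actions):
    """Sum the weights of observed actions; unknown actions score zero."""
    return sum(SUSPICIOUS_WEIGHTS.get(action, 0) for action in observed_actions)


def is_suspicious(observed_actions):
    """Flag a program whose combined behavior meets the alert threshold."""
    return behavior_score(observed_actions) >= ALERT_THRESHOLD
```

Because the decision depends on what the program does rather than on a hash of its binary, a variant that changes its file contents but performs the same actions would still be scored the same way in this sketch.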

In both traditional malware signature creation and behavior-based detection creation scenarios, malware samples are placed into an isolated sandbox with instrumentation that tracks what the malware does. That information is used to help create signatures based on both the files or other materials that the malware creates, installs, or modifies and the actions that it takes. In many cases, automated malware signature creation is done using these techniques so that antimalware providers can provide signatures more quickly.

If you want to try this out yourself, you can submit suspected malware to sites like VirusTotal.com or jotti.com.

Policy, Process, and Standards

Administrative controls that involve policies, processes, and standards are a necessary layer when looking at a complete layered security design. In addition to controls that support security at the technology layer, administrative controls found in a complete security design include

- Change control

- Configuration management

- Monitoring and response policies

- Personnel security controls

- Business continuity and disaster recovery controls

- Human resource controls like background checks and terminations

Personnel Security

In addition to technical and procedural concerns, the human layer of a design must be considered. Staff members need to be trained for the tasks they perform and to ensure that they react appropriately to security issues and threats. Critical personnel controls should be put in place where needed to provide separation of duties and to remove single points of failure in staffing.

A wide variety of personnel controls can be implemented as part of a complete security program, ranging from training to process and human resources–related controls. The most common personnel controls are as follows:

- Separation of Duties: When individuals in an organization are given a role to perform, they can potentially abuse the rights and privileges that the role provides. Properly implemented separation of duties requires more than one individual to perform elements of a task to ensure that fraud or abuse does not occur. A typical separation of duties can be found in financially significant systems like payroll or accounts payable software. One person should not be able to modify financial data without being detected, so the same person should not both have modification rights and be charged with monitoring for changes.

- Succession Planning: This is important to ensure continuity for roles, regardless of the reason a person leaves your organization. A departing staff member can take critical expertise and skills with them, leaving important duties unattended or tasks unperformed. When a manager or supervisor leaves, not having a succession plan can also result in a lack of oversight for functions, making it easier for other personnel issues to occur without being caught.

- Background Checks: These are commonly performed before employees are hired to ensure that the candidates are suitable for employment with the organization.

- Termination: When an employee quits or is terminated, it is important to ensure that their access to organizational resources and accounts is also terminated. This requires reviewing their access and ensuring that the organization's separation process properly handles retrieving any organizational property like laptops, mobile devices, and data. In many organizations, accounts are initially disabled (often by changing the password to one the current user does not know). This ensures that data is not lost and can be accessed by the organization if needed. Once you know that any data associated with the account is no longer needed, you can then delete the account itself.

- Cross Training: Cross training focuses on teaching employees skills that enable them to take on tasks that their coworkers and other staff members normally perform. This can help to prevent single points of failure due to skillsets and can also help to detect issues caused by an employee or a process by bringing someone who is less familiar with the task or procedure into the loop. Cross training is commonly used to help ensure that critical capabilities have backups in place since it can help prevent issues with employee separation when an indispensable employee leaves. It is also an important part of enabling other security controls. Succession planning and mandatory vacation are made easier if appropriate cross training occurs.

- Dual Control: Dual control is useful when a process is so sensitive that it is desirable to require two individuals to perform an action together. The classic example of this appears in many movies in the form of a dual-control system that requires two military officers to insert and turn their keys at the same time to fire a nuclear weapon. Of course, this isn't likely to be necessary in your organization, but dual control may be a useful security control when sensitive tasks are involved because it requires both parties to collude for a breach to occur. This is often seen in organizations that require two signatures for checks over a certain value. Dual control can be implemented as either an administrative control via procedures or via technical controls.

- Mandatory Vacation: This process requires staff members to take vacation, allowing you to identify individuals who are exploiting the rights they have. Mandatory vacation prevents employees from hiding issues or taking advantage of their privileges by ensuring that they are not continuously responsible for a task.

When mandatory vacation is combined with separation of duties, it can provide a highly effective way to detect employee collusion and malfeasance. Sending one or more employees involved in a process on vacation offers an opportunity for a third party to observe any issues or irregularities in the processes that the employees on vacation normally handle.
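Dual control can be enforced technically as well as procedurally. The following minimal sketch models the two-signature check example from the list above; the threshold value and function name are hypothetical, and a real system would also need to authenticate each signer.

```python
# Hypothetical value above which two distinct signers are required.
DUAL_CONTROL_THRESHOLD = 10_000


def check_is_authorized(amount, signers):
    """Require two different signers for checks above the threshold."""
    distinct_signers = set(signers)  # the same person signing twice counts once
    required = 2 if amount > DUAL_CONTROL_THRESHOLD else 1
    return len(distinct_signers) >= required
```

Counting distinct signers rather than signatures is the key design choice here: it prevents one person from satisfying the control by signing twice, so a breach requires collusion between two people.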

Analyzing Security Architecture

The key to analyzing a security infrastructure for an organization is to identify where defenses are weak, or where an attacker may be able to exploit flaws in architectural design, processes, or procedures, or issues in the underlying technology like vulnerabilities or misconfigurations. Control gaps, single points of failure, and improperly implemented controls are all common issues that you are likely to encounter.

Analyzing Security Requirements

Security architectures can be analyzed based on their attributes by reviewing the security model and ensuring that it meets a specific requirement. For example, if you were asked to review a workstation security design that used antimalware software to determine if it would prevent unwanted software from being installed, you might identify three scenarios:

- Success: Antimalware software can successfully prevent unwanted software installation if the software is known malware or if it behaves in a way that the antimalware software will detect as unwanted.

- Failure: It will not detect software that is not permitted by organizational policy but that is not malware.

- Failure: It may not prevent unknown malware, or malware that does not act in ways that are typical of known malware.

This type of attribute-based testing can be performed based on a risk assessment and control plan to determine whether the security architecture meets the control objectives. It can also be directly applied to each control by determining the goal of each control and then reviewing whether it meets that goal.
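The antimalware example above can be sketched as a small attribute-based test, where each scenario is checked against a simplified model of what the control can do. The scenario descriptions and the two attributes are assumptions made for this illustration; a real review would derive them from the risk assessment and control plan.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    description: str
    known_malware: bool          # matches an existing signature
    malware_like_behavior: bool  # acts in ways the tool flags as unwanted


def antimalware_blocks(scenario: Scenario) -> bool:
    """Simplified model: the control works on signatures or behavior only."""
    return scenario.known_malware or scenario.malware_like_behavior


SCENARIOS = [
    Scenario("Known malware", True, True),
    Scenario("Unapproved but benign software", False, False),
    Scenario("Unknown malware with atypical behavior", False, False),
]

# Scenarios where the control fails to meet the requirement.
control_gaps = [s.description for s in SCENARIOS if not antimalware_blocks(s)]
```

The resulting `control_gaps` list contains the two failure scenarios, which is the signal that an additional control would be needed to meet the requirement.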

Reviewing Architecture

In addition to a requirement-based analysis method, a number of formal architectural models rely on views, or viewpoints, from which the architecture and controls can be reviewed. Common views that can be taken when reviewing an architecture include the following:

- Operational views describe how a function is performed, or what it accomplishes. This view typically shows how information flows but does not capture the technical detail about how data is transmitted, stored, or captured. Operational views are useful for understanding what is occurring and often influence procedural or administrative controls.

- Technical views (sometimes called service-oriented, or systems-based, views) focus on the technologies, settings, and configurations used in an architecture. This can help identify incorrect configurations and insecure design decisions. An example of a technical view might include details like the TLS version of a connection, or the specific settings for password length and complexity required for user accounts.

- A logical view is sometimes used to describe how systems interconnect. It is typically less technically detailed than a technical view but conveys broader information about how a system or service connects or works. The network diagrams earlier in this chapter are examples of logical views.

Security architecture reviews may need any or all of these viewpoints to provide a complete understanding of where issues may exist.

Common Issues

Analyzing security architectures requires an understanding of the design concepts and controls they commonly use as well as the issues that are most frequently encountered in those designs. Four of the most commonly encountered design issues are single points of failure, data validation and trust problems, user issues, and authentication and authorization security and process problems.

Single Points of Failure

A key element to consider when analyzing a layered security architecture is the existence of single points of failure—a single point of the system where, if that component, control, or system fails, the entire system will not work or will fail to provide the desired level of security.

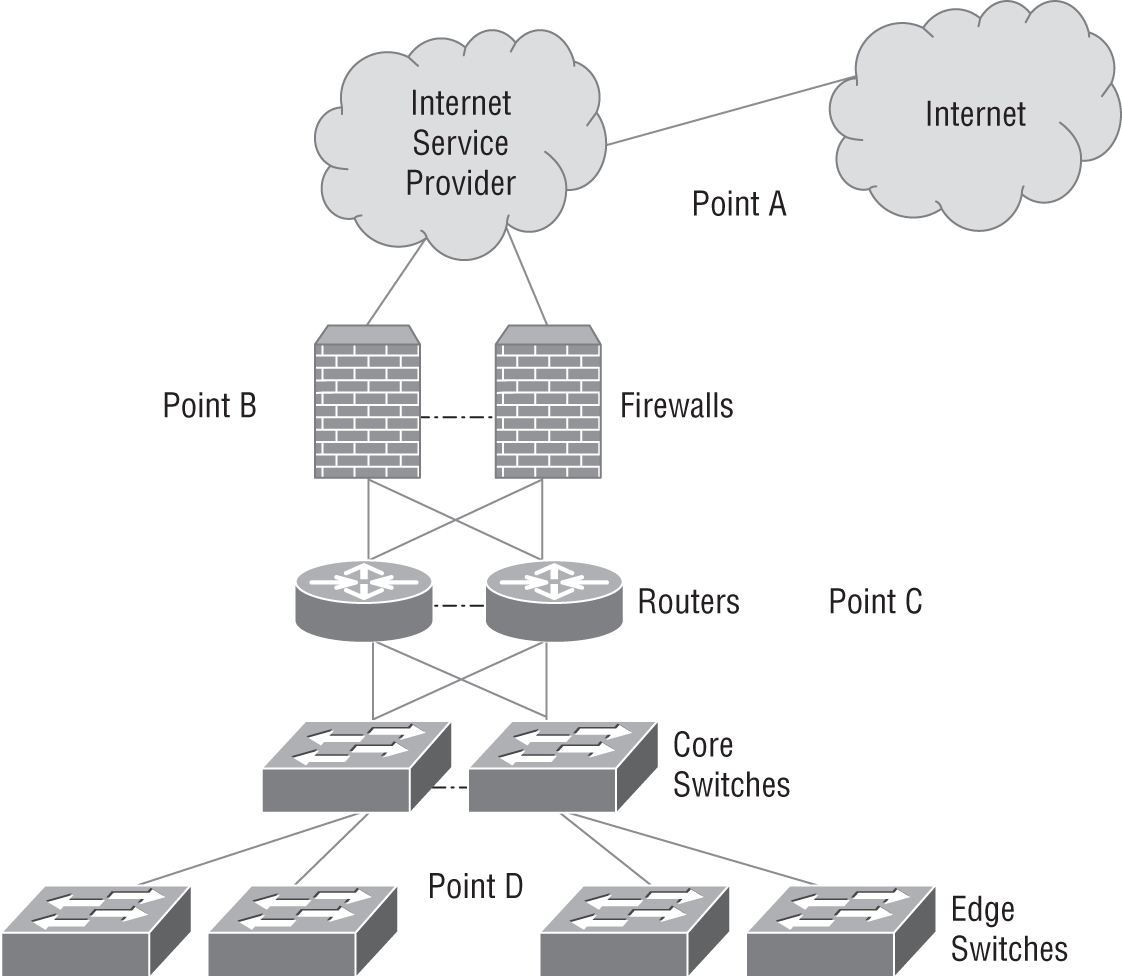

Figure 7.4 shows a fully redundant network design with fault-tolerant firewalls, routers, and core switches. Each device communicates with its partner via a heartbeat link, which provides status and synchronization information. If a device's partner fails, it will take over, providing continuity of service. Each device is also cross-linked, allowing any component to send traffic to the devices in front of and behind it via multiple paths, removing a failed wire as a potential point of failure. Similar protections would be in place for power protection, and the devices would typically be physically located in separate racks or even rooms to prevent a single physical issue from disrupting service.

FIGURE 7.4 A fully redundant network edge design

Network and infrastructure design diagrams can make spotting a potential single point of failure much easier. In Figure 7.5, the same redundant network's internal design shows a single point of failure (Point A) at the distribution router and edge switch layers. Here, a failure of a link, the router, or the switches might take down a section of the network, rather than the organization's primary Internet link. In situations like this, a single point of failure may be acceptable based on the organization's risk profile and functional requirements.

FIGURE 7.5 Single points of failure in a network design
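The same diagram-tracing approach can be automated. As a rough sketch, the code below treats a network as a graph and reports every device whose removal disconnects the Internet from the workstations. The topology is a hypothetical one loosely modeled on the redundant edge and single distribution path described above, not a transcription of the figures, and it checks device failures only, not individual links.

```python
from collections import deque

# Hypothetical topology: redundant firewalls and core switches feeding a
# single distribution router and edge switch.
LINKS = [
    ("internet", "fw1"), ("internet", "fw2"),
    ("fw1", "core1"), ("fw1", "core2"),
    ("fw2", "core1"), ("fw2", "core2"),
    ("core1", "dist_router"), ("core2", "dist_router"),
    ("dist_router", "edge_switch"),
    ("edge_switch", "workstations"),
]


def build_graph(links):
    """Build an undirected adjacency map from a list of links."""
    graph = {}
    for a, b in links:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph


def reachable(graph, start, goal, removed=None):
    """Breadth-first search from start to goal, skipping a failed node."""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            return True
        for neighbor in graph.get(node, ()):
            if neighbor != removed and neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return False


def single_points_of_failure(graph, start, goal):
    """Return nodes whose failure alone breaks the path from start to goal."""
    candidates = set(graph) - {start, goal}
    return sorted(
        node for node in candidates
        if not reachable(graph, start, goal, removed=node)
    )
```

For this topology, `single_points_of_failure(build_graph(LINKS), "internet", "workstations")` returns `['dist_router', 'edge_switch']`, while the paired firewalls and core switches are not reported because each has a working partner.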

The same analysis process can be used to identify issues with applications, processes, and control architectures. A block diagram is created that includes all the critical components or controls, and the flow that the diagram supports is traced through the diagram. Figure 7.6 shows a sample flow diagram for an account creation process. Note that at point A, an authorized requester files a request for a new account, and at point B, a manager approves the account creation. During process analysis a flaw would be noted if the manager can both request and approve an account creation. If the review at point D is also performed by the manager, this process flaw would be even more severe. This provides a great case for separation of duties as an appropriate and useful control!

FIGURE 7.6 Single points of failure in a process flow
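The account creation flaw described above can be expressed as a simple separation-of-duties check. This is only a sketch: the role names and the `process_flaws` function are hypothetical, and a real review would consider every step in the flow.

```python
def process_flaws(requester, approver, reviewer):
    """List separation-of-duties flaws in an account creation request."""
    flaws = []
    if requester == approver:
        flaws.append("requester also approves the request")
    if reviewer in (requester, approver):
        flaws.append("reviewer is not independent of the request")
    return flaws
```

An empty list means that the request, approval, and review were each handled by a different person; each entry in a nonempty list points to a step where one individual holds too many roles.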

Data Validation and Trust

The ability to trust data that is used for data processing or to make decisions is another area where security issues frequently occur. Data is often assumed to be valid, and incorrect or falsified data can result in significant issues. The ability to rely on data can be enhanced by

- Protecting data at rest and in transit using encryption

- Validating data integrity using file integrity checking tools, or otherwise performing data integrity validation

- Implementing processes to verify data in an automated or a manual fashion

- Profiling or boundary checking data based on known attributes of the data

Web application security testers are very familiar with exploiting weaknesses in data validation schemes. Insecure web applications often trust that the data they receive from a page that they generate is valid and then use that data for further tasks. For example, if an item costs $25 and the application pulls that data from a hidden field found in a web form but does not validate that the data it receives matches a value it should accept, an attacker could change the value to $0 and might get the item for free! Validating that the data you receive either matches the specific dataset you expect or is at least in a reasonable range is a critical step in layered security design.
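A minimal sketch of server-side validation for the hidden-field example follows. It assumes a hypothetical catalog keyed by item identifier and treats the server's own price as authoritative; the identifiers and function name are illustrative only.

```python
from decimal import Decimal, InvalidOperation

# Hypothetical server-side catalog; the server's price is authoritative.
CATALOG = {"sku-1001": Decimal("25.00")}


def validated_price(sku: str, submitted_price: str) -> Decimal:
    """Reject any submitted price that does not match the catalog price."""
    if sku not in CATALOG:
        raise ValueError("unknown item")
    try:
        price = Decimal(submitted_price)
    except InvalidOperation:
        raise ValueError("price is not a valid number")
    if price != CATALOG[sku]:
        raise ValueError("submitted price does not match catalog price")
    # Return the server's value rather than trusting the client's copy.
    return CATALOG[sku]
```

With this check in place, a form that submits a price of `0` for the $25 item raises an error instead of completing the sale.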

When analyzing data flows, storage, and usage, remember to look for places where data issues could cause high-impact failures. Data validation issues at some points in a design can be far more critical to the overall design than they are at other points, making effective control placement extremely important in those locations.

Users

Human error is a frequent cause of failure in security architectures. Mistakes, malfeasance, or social engineering attacks that target staff members can all break security designs. Layered security designs should be built with the assumption that the humans who carry out the procedures and processes that they rely on may make mistakes, and they should be designed to identify and alert if intentional violations occur.

Failures due to users are often limited by

- Using automated monitoring and alerting systems to detect human error

- Constraining interfaces to only allow permitted activities

- Implementing procedural checks and balances like separation of duties and the other personnel controls previously discussed in this chapter

- Training and awareness programs designed to help prepare staff for the types of threats they are likely to encounter

It is often useful to brainstorm possible issues caused by users with experts on the systems or processes. When that isn't possible, reviewing how user actions are monitored and controlled can help point to possible gaps.

A practical example of this type of review occurs in grocery stores where it is common practice to issue a cashier a cash drawer to take to their station. Sometimes, the cashier counts out their own drawer before starting a shift; in other cases they are given a cash drawer with a standard and verified amount of money. During their shift, they are the only users of that drawer, and at the end of the shift, they count out the drawer and have it verified or work with a manager to count it out. If losses continued to occur at the grocery store, you might walk through the cashier's tasks, asking them at each step what task they are performing and how it is validated. When you identify an area where an issue could occur, you can then work to identify controls to prevent that issue.

Authentication and Authorization

User credentials, passwords, and user rights are all areas that often create issues in security designs. Common problems include inappropriate or overly broad user rights, poor credential security or management, embedded and stored passwords and keys, as well as reliance on passwords to protect critical systems. Security designs that seek to avoid these issues often implement solutions like

- Multifactor authentication

- Centralized account and privilege management and monitoring

- Privileged account usage monitoring

- Training and awareness efforts

When analyzing a security design for potential authentication and authorization issues, the first step is to identify where authentication occurs, how authorization is performed, and what rights are needed and provided to users. Once you understand those, you can focus on the controls that are implemented and where those controls may leave gaps. Remember to consider technical, process, and human factors.
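One part of this analysis, comparing the rights users have been given against the rights their roles need, can be sketched in a few lines. The roles, right names, and user assignments below are hypothetical examples rather than data from any real system.

```python
# Hypothetical rights that each role needs to do its job.
ROLE_REQUIRED_RIGHTS = {
    "helpdesk": {"reset_password"},
    "dba": {"read_db", "write_db"},
}

# Hypothetical assignments: user -> (role, rights actually granted).
USER_GRANTS = {
    "alice": ("helpdesk", {"reset_password", "write_db"}),
    "bob": ("dba", {"read_db", "write_db"}),
}


def excess_rights(user_grants, role_required):
    """Return rights each user holds beyond what their role requires."""
    findings = {}
    for user, (role, granted) in user_grants.items():
        extra = granted - role_required.get(role, set())
        if extra:
            findings[user] = sorted(extra)
    return findings
```

In this example, `excess_rights(USER_GRANTS, ROLE_REQUIRED_RIGHTS)` returns `{'alice': ['write_db']}`, flagging an overly broad grant that a reviewer would then investigate.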

Reviewing a Security Architecture

Reviewing a security architecture requires step-by-step analysis of the security needs that influenced a design and the controls that were put in place. Figure 7.7 shows a high-level design for a web application with a database backend. To analyze this, we can first start with a statement of the design requirements for the service:

The web application is presented to customers via the Internet, and contains customer business sensitive data. It must be fault-tolerant, secure against web application attacks, and should provide secure authentication and authorization, as well as appropriate security monitoring and system security.

From this, we can review the design for potential flaws. Starting from the left, we will step through each section looking for possible issues. It's important to note that this example won't cover every possible flaw with this design. Instead, this is meant as an example of how a review might identify flaws based on a network logical diagram.

- The Internet connection, as shown, has a single path. This could cause an availability issue.

- There is no IDS or IPS between the Internet and the web server layer. One may be desirable to handle attacks that the WAF and the firewall layer cannot detect.

- The diagram does not show how the network security devices like firewalls and the WAF are managed. Exposure of management ports via public networks is a security risk.

- The web application firewall and the load balancers are shown as clustered devices. This may indicate an availability issue.

- There is no active detection security layer between the web server and the databases. A SQL-aware security device would provide an additional layer of security.

FIGURE 7.7 Sample security architecture

In addition to the information that the logical diagram shows, review of a dataflow diagram, configurations, processes, and procedures would each provide useful views to ensure this design provides an appropriate level of security.

Maintaining a Security Design

Security designs need to continue to address the threats and requirements that they were built to address. Over time, new threats may appear, old threats may stop being a concern, technology will change, and organizational capabilities will be different. That means that security designs need to be reviewed, improved, and eventually retired.

Scheduled Reviews

A security design should undergo periodic, scheduled reviews. The frequency of the reviews may vary depending on how quickly the threats that the organization faces change; how often its systems, networks, and processes change; whether regulatory or contractual requirements change; and whether a major security event occurs that indicates that a review may be needed.

Continual Improvement

Continual improvement processes (sometimes called CIP or CI processes) are designed to provide incremental improvements over time. A security program or design needs to be assessed on a recurring basis, making a continual improvement process important to ensure that the design does not become outdated.

Retirement of Processes

Security processes and policies may become outdated, and instead of being updated, they may need to be retired. This is often the case for one of a few reasons:

- The process or policy is no longer relevant.

- It has been superseded by a newer policy or process.

- The organization no longer wants to use the policy or process.

Retirement of processes and policies should be documented and recorded in the documents that describe them. Once a decision has been made, appropriate communication needs to occur to ensure that the process or policy is no longer used and that individuals and groups who used it are aware of its replacement if appropriate.

Summary

Security controls should be layered to ensure that the failure of a control does not result in the failure of the overall security architecture of an organization, system, or service. Layered security designs may provide uniform protection or may implement design choices that place more sensitive systems or data in protected enclaves. They may also use analysis of threats or data classifications as the foundation for design decisions. In any complete security design, multiple controls are implemented together to create a stronger security design that can prevent attacks, detect problems, and allow for appropriate response and recovery in the event that a failure does occur.

Layered security designs often use segmentation and network security devices to separate networks and systems based on functional or other distinctions. Systems also require layered security, and tools like host firewalls, data loss prevention software, antivirus and antimalware software, and system configuration and monitoring tools all provide parts of the host security puzzle. Other common parts of a layered security design include encryption, logging and monitoring, and personnel security, as well as policies, processes, and standards. Attribute-based assessments of security models rely on verifying that a design meets security requirements. Views of the architecture should be used to ensure that the design holds up from multiple perspectives, including operational, technical, and logical paths through the security design. Single points of failure, trust and data validation problems, authentication and authorization issues, and user-related concerns are all important to consider when looking for flaws. All of this means that security designs should undergo regular review and updates to stay ahead of changing needs and new security threats.

Exam Essentials

Know why security solutions are an important part of infrastructure management. Layered security, including segmentation at the physical and/or virtual level, as well as use of concepts like system isolation and air gapping, is part of a strong defensive design. The network architecture for physical, virtual, and cloud environments must leverage secure design concepts as well as security tools like honeypots, firewalls, and other technologies.

Explain what implementing defense-in-depth requires. Common designs use segmentation to separate different security levels or areas where security needs differ. Network architecture in many organizations needs to take into account physical network devices and design, cloud options, and software-defined networks. Cloud tools like virtual private clouds and tools like cloud access security brokers should be considered where appropriate. A wide range of controls can help with this, ranging from use of appropriate permissions, whitelisting, and blacklisting to technical controls like firewalls, intrusion prevention and detection systems, data loss prevention (DLP) tools, and endpoint detection and response.

Know that understanding requirements and identifying potential points of failure are part of security architecture analysis. Single points of failure are design elements where a single failure can cause the design to fail to function as intended. Other common issues include data validation issues, mismatches between the trust a design requires and whether the data or system can actually be trusted, user-related failures, and problems with authentication and authorization processes and procedures.

Know that maintaining layered security designs requires continual review and validation. Scheduled reviews help to ensure that the design has not become outdated. Continual improvement processes keep layered defense designs current while helping to engage staff and provide ongoing awareness. At the end of their life cycle, processes, procedures, and technical designs must be retired with appropriate notification and documentation.

Lab Exercises

Activity 7.1: Review an Application Using the OWASP Attack Surface Analysis Cheat Sheet

In this exercise you will use the Open Web Application Security Project Application Attack Surface Analysis Cheat Sheet. If you are not completely familiar with an application in your organization, you may find it helpful to talk to an application administrator, DevOps engineer, or developer.

- Part 1: Review the OWASP Attack Surface Analysis Cheat Sheet. The cheat sheet can be found at cheatsheetseries.owasp.org/cheatsheets/Attack_Surface_Analysis_Cheat_Sheet.html.

Review the cheat sheet, and make sure that you understand how application attack surfaces are mapped. If you're not familiar with the topic area, spend some time researching it.

- Part 2: Select an application you are familiar with and follow the cheat sheet to map the application's attack surface. Choose an application that you have worked with professionally and follow the cheat sheet. If you do not know an answer, note that you cannot identify the information and move on.

- Part 3: Measure the application's attack surface. Use your responses to analyze the application's attack surface. Answer the following questions:

  - What are the high-risk attack surface areas?

  - What entry points exist for the application?

  - Can you calculate the Relative Attack Surface Quotient for the application using the data you have? If not, why not?

  - What did you learn by doing this mapping?

- Part 4: Manage the attack surface. Using your mapping, identify how (and if!) you should modify the application's attack surface. What would you change and why? Are there controls or decisions that you would make differently in different circumstances?

Activity 7.2: Review a NIST Security Architecture

The graphic on the next page shows the NIST access authorization information flow and its control points in a logical flow diagram as found in NIST SP1800-5b. This NIST architecture uses a number of important information gathering and analytical systems:

- Fathom, a system that provides anomaly detection

- BelManage, which monitors installed software

- Bro, an IDS

- Puppet, an open source configuration management tool that is connected to the organization's change management process

- Splunk, for searching, monitoring, and analyzing machine-generated big data, via a web-style interface

- Snort, an IDS

- WSUS for Windows updates

- OpenVAS, an open source vulnerability management tool

- Asset Central, an asset tracking system

- CA ITAM, which also tracks physical assets

- iSTAR Edge, a physical access control system

Make note of potential issues with this diagram, marking where you would apply additional controls or where a single failure might lead to a systemwide failure. Additional details about the specific systems and capabilities can be found in the NIST ITAM draft at nccoe.nist.gov/sites/default/files/library/sp1800/fs-itam-nist-sp1800-5b-draft.pdf.

Activity 7.3: Security Architecture Terminology

Match each of the following terms to the correct description.

| Term | Description |
| --- | --- |
| Air gap | A logically isolated segment of a cloud that provides you with control of your own environment. |
| Containerization | An access control mechanism that allows through all things except those that are specifically blocked. |
| VPC | A physical separation between devices or networks to prevent access. |
| Cloud access security broker | A system that scans outbound traffic and prevents it from being transmitted if it contains specific content types. |
| Blacklisting | A system that validates systems and sometimes users before they connect to a network. |
| Asset tagging | Software or a service that enforces security for cloud applications. |
| NAC | A technology that bundles together an application and the files, libraries, and other dependencies it needs to run, allowing the application to be deployed to multiple platforms or systems. |
| Data loss prevention | Labeling or otherwise identifying systems, devices, or other items. |

Review Questions

- Susan needs to explain what a jump box is to a member of her team. What should she tell them?

- It is a secured system that is exposed in a DMZ.

- It is a system used to access and manage systems or devices in the same security zone.

- It is a system used to skip revisions during updates.

- It is a system used to access and manage systems or devices in another security zone.

- Ben sets up a system that acts like a vulnerable host in order to observe attacker behavior. What type of system has he set up?

- A sinkhole

- A blackhole

- A honeypot

- A beehive

- Cameron builds a malware signature using a hash of the binary that he found on an infected system. What problem is he likely to encounter with modern malware when he tries to match hashes with other infected systems?

- The malware may be polymorphic.

- The hashes may match too many malware packages.

- The attackers may have encrypted the binary.

- The hash value may be too long.

- Ric is reviewing his organization's network design and is concerned that a known flaw in the border router could let an attacker disable their Internet connectivity. Which of the following is an appropriate compensatory control?

- An identical second redundant router set up in an active/passive design

- An alternate Internet connectivity method using a different router type

- An identical second redundant router set up in an active/active design

- A firewall in front of the router to stop any potential exploits that could cause a failure of connectivity

- Fred wants to ensure that only software that has been preapproved runs on workstations he manages. What solution will best fit this need?

- Blacklisting

- Antivirus

- Whitelisting

- Virtual desktop infrastructure (VDI)

- A member of Susan's team recently fell for a phishing scam and provided his password and personal information to a scammer. What layered security approach is not an appropriate layer for Susan to implement to protect her organization from future issues?

- Multifactor authentication

- Multitiered firewalls

- An awareness program

- A SIEM monitoring where logins occur

- Chris is in charge of his organization's Windows security standard, including their Windows 7 security standard, and has recently decommissioned the organization's last Windows 7 system. What is the next step in his security standard's life cycle?

- A scheduled review of the Windows standards

- A final update to the standard, noting that Windows 7 is no longer supported

- Continual improvement of the Windows standards

- Retiring the Windows 7 standard

- Example Corporation has split their network into network zones that include sales, HR, research and development, and guest networks, each separated from the others using network security devices. What concept is Example Corporation using for their network security?

- Segmentation

- Multiple-interface firewalls

- Single-point-of-failure avoidance

- Zoned routing

- Which of the following layered security controls is commonly used at the WAN, LAN, and host layers in a security design?

- Encryption of data at rest

- Firewalls

- DMZs

- Antivirus

- After a breach that resulted in attackers successfully exfiltrating a sensitive database, Jason has been asked to deploy a technology that will prevent similar issues in the future. What technology is best suited to this requirement?

- Firewalls

- IDS

- DLP

- EDR

- Michelle has been asked to review her corporate network's design for single points of failure that would impact the core network operations. The following graphic shows a redundant network design with a critical fault: a single point of failure that could take the network offline if it failed. Where is this single point of failure?

- Point A

- Point B

- Point C

- Point D

- During a penetration test of Anna's company, the penetration testers were able to compromise the company's web servers and deleted their log files, preventing analysis of their attacks. What compensating control is best suited to prevent this issue in the future?

- Using full-disk encryption

- Using log rotation

- Sending logs to a syslog server

- Using TLS to protect traffic

- Which of the following controls is best suited to prevent vulnerabilities related to software updates?

- Operating system patching standards

- Centralized patch management software

- Vulnerability scanning

- An IPS with appropriate detections enabled

- Ben's organization uses data loss prevention software that relies on metadata tagging to ensure that sensitive files do not leave the organization. What compensating control is best suited to ensuring that data that does leave is not exposed?

- Mandatory data tagging policies

- Encryption of all files sent outside the organization

- DLP monitoring of all outbound network traffic

- Network segmentation for sensitive data handling systems

- James is concerned that network traffic from his datacenter has increased and that it may be caused by a compromise that his security tools have not identified. What SIEM analysis capability could he use to look at the traffic sent by his datacenter systems over time?

- Automated reporting

- Trend analysis

- BGP graphing

- Log aggregation

- Angela needs to implement a control to ensure that she is notified of changes to important configuration files on her server. What type of tool should she use for this control?

- Antimalware

- Configuration management

- File integrity checking

- Logging

- Megan has recently discovered that the Linux server she is responsible for maintaining is affected by a zero-day exploit for a vulnerability in the web application software that is needed by her organization. Which of the following compensating controls should she implement to best protect the server?

- A WAF

- Least privilege for accounts

- A patch from the vendor

- An IDS

- Mike installs a firewall in front of a previously open network to prevent the systems behind the firewall from being targeted by external systems. What did Mike do?

- Reduced the organization's attack surface

- Implemented defense-in-depth

- Added a corrective control

- Added an administrative control

- Port security refers to what type of security control?

- Allowing only specific MAC addresses to access a network port

- The controls used to protect a port when oceangoing vessels dock

- A technical control that requires authentication by a user before a port is used

- A layer 3 filter applied to switch ports

- Tony configures his network to provide false DNS responses for known malware domains. What technique is he using?

- Blacklisting

- Whitelisting

- Sinkholing

- Honeypotting