Chapter 3

Reconnaissance and Intelligence Gathering

Security analysts, penetration testing professionals, vulnerability and threat analysts, and others who are tasked with understanding the security environment in which an organization operates need to know how to gather information. This process is called reconnaissance or intelligence gathering.

Information gathering is often a requirement of information security standards and laws. For example, the Payment Card Industry Data Security Standard (PCI DSS) requires that organizations handling credit cards perform both internal and external network vulnerability scans at least quarterly, and after any significant change. Gathering internal and external information about your own organization is typically considered a necessary part of understanding organizational risk, and implementing industry best practices to meet due diligence requirements is likely to result in this type of work.

In this chapter, you will explore active intelligence gathering, including port scanning tools and how you can determine a network's topology from scan data. Then you will learn about passive intelligence gathering, including tools, techniques, and real-world experiences, to help you understand your organization's footprint. Finally, you will learn how to limit a potential attacker's ability to gather information about your organization using the same techniques.

Mapping and Enumeration

The first step when gathering organizational intelligence is to identify an organization's technical footprint. Host enumeration is used to create a map of an organization's networks, systems, and other infrastructure. This is typically accomplished by combining information-gathering tools with manual research to identify the networks and systems that an organization uses.

Standards for penetration testing typically include enumeration and reconnaissance processes and guidelines. There are a number of publicly available resources, including the Open Source Security Testing Methodology Manual (OSSTMM), the Penetration Testing Execution Standard, and National Institute of Standards and Technology (NIST) Special Publication 800-115, the Technical Guide to Information Security Testing and Assessment.

- OSSTMM: www.isecom.org/research.html

- Penetration Testing Execution Standard: www.pentest-standard.org/index.php/Main_Page

- SP 800-115: csrc.nist.gov/publications/nistpubs/800-115/SP800-115.pdf

Active Reconnaissance

Information gathered during enumeration exercises is typically used to provide the targets for active reconnaissance. Active reconnaissance uses host scanning tools to gather information about systems, services, and vulnerabilities. It is important to note that although reconnaissance does not involve exploitation, it can provide information about vulnerabilities that can be exploited.

Mapping Networks and Discovering Topology

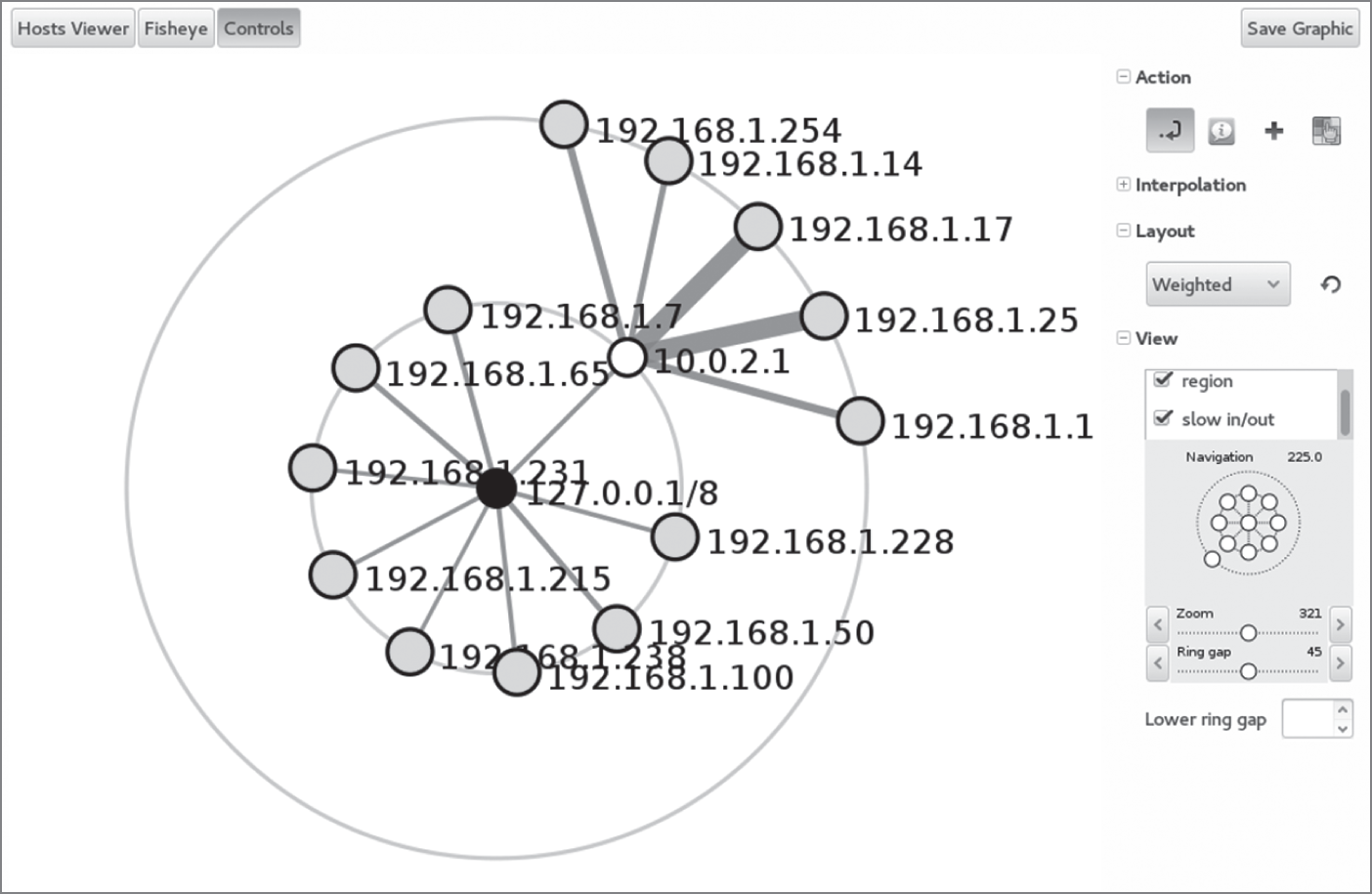

Active scans can also provide information about network design and topology. As a scanning tool traverses a network range, it can assess information contained in the responses it receives. This can help a tester take an educated guess about the topology of the network based on the time to live (TTL) of the packets it receives, traceroute information, and responses from network and security devices. Figure 3.1 shows a scan of a simple example network. Routers or gateways are centrally connected to hosts and allow you to easily see where a group of hosts connect. The system that nmap runs from becomes the center of the initial scan and shows its local loopback address, 127.0.0.1. A number of hosts appear on a second network segment behind the 10.0.2.1 router. Nmap (and Zenmap, using nmap) may not discover all systems and network devices—firewalls or other security devices can stop scan traffic, resulting in missing systems or networks.

FIGURE 3.1 Zenmap topology view

When you are performing network discovery and mapping, it is important to lay out the systems that are discovered based on their network addresses and TTL. These data points can help you assess their relative position in the network. Of course, if you can get actual network diagrams, you will have a much more accurate view of the network design than scans may provide.
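The TTL reasoning described here can be sketched in a few lines of Python. This is a rough illustration only: it assumes that hosts start from one of three commonly seen initial TTL values (64, 128, or 255), which is an assumption rather than a guarantee, and the function name is hypothetical.

```python
# Commonly seen initial TTL values. These are assumed defaults and are
# not guaranteed for any particular host or device.
COMMON_INITIAL_TTLS = (64, 128, 255)


def estimate_hops(observed_ttl: int) -> tuple:
    """Return (assumed_initial_ttl, estimated_hops) for an observed TTL."""
    if not 1 <= observed_ttl <= 255:
        raise ValueError("TTL must be between 1 and 255")
    # Assume the sender started from the smallest common value that is
    # not below what we observed; each router hop decrements TTL by one.
    initial = min(t for t in COMMON_INITIAL_TTLS if t >= observed_ttl)
    return initial, initial - observed_ttl


for ttl in (255, 118, 45):
    print(ttl, estimate_hops(ttl))
```

A response with a TTL of 255 suggests a host on the local segment, while a TTL of 45 suggests a host roughly 19 hops away if it started at 64. Devices that rewrite TTL values will skew these estimates, so treat the result as a hint for laying out a map rather than a measurement.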

The topology information gathered by a scanning tool is likely to have flaws and may not match the actual design of the target network. Security and network devices can cause differences in the TTL and traceroute information, resulting in incorrect or missing data. Firewalls can also make devices and systems effectively invisible to scans, resulting in segments of the network not showing up in the topology built from scan results.

In addition to challenges caused by security devices, you may have to account for variables, including differences between wired and wireless networks, virtual networks and virtual environments like VMware and Microsoft Hyper-V, and of course on-premises networks versus cloud-hosted services and infrastructure. If you are scanning networks that you or your organization controls, you should be able to ensure that your scanning systems or devices are placed appropriately to gather the information that you need. If you are scanning as part of a penetration test or a zero-knowledge test, you may need to review your data to ensure that these variables haven't caused you to miss important information.

Pinging Hosts

The most basic form of discovery that you can conduct is pinging a network address. The ping command is a low-level network command that sends a packet called an echo request to a remote IP address. If the remote system receives the request, it responds with an echo reply, indicating that it is up and running and that the communication path is valid. Ping communications take place using the Internet Control Message Protocol (ICMP).

Here's an example of an echo request sent to a server running on a local network:

    [~/]$ ping 172.31.48.137
    PING 172.31.48.137 (172.31.48.137) 56(84) bytes of data.
    64 bytes from 172.31.48.137: icmp_seq=1 ttl=255 time=0.016 ms
    64 bytes from 172.31.48.137: icmp_seq=2 ttl=255 time=0.037 ms
    64 bytes from 172.31.48.137: icmp_seq=3 ttl=255 time=0.026 ms
    64 bytes from 172.31.48.137: icmp_seq=4 ttl=255 time=0.028 ms
    64 bytes from 172.31.48.137: icmp_seq=5 ttl=255 time=0.026 ms
    64 bytes from 172.31.48.137: icmp_seq=6 ttl=255 time=0.027 ms
    64 bytes from 172.31.48.137: icmp_seq=7 ttl=255 time=0.027 ms
    --- 172.31.48.137 ping statistics ---
    7 packets transmitted, 7 received, 0% packet loss, time 6142ms
    rtt min/avg/max/mdev = 0.016/0.026/0.037/0.008 ms

In this case, a user used the ping command to query the status of a system located at 172.31.48.137 and received seven replies to the seven requests that were sent.

It's important to recognize that, while an echo reply from a remote host indicates that it is up and running, the lack of a response does not necessarily mean that the remote host is not active. Many firewalls block ping requests and individual systems may be configured to ignore echo request packets.
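If you collect ping output from many hosts, a small parser makes the results easier to compare. The following Python sketch extracts the counts from the summary line of Linux-style ping output; the function name is hypothetical, and the pattern assumes the summary wording shown in the example above.

```python
import re

# Matches summary lines such as
# "7 packets transmitted, 7 received, 0% packet loss".
SUMMARY_RE = re.compile(
    r"(\d+) packets transmitted, (\d+) (?:packets )?received, "
    r"(\d+(?:\.\d+)?)% packet loss"
)


def parse_ping_summary(output: str) -> dict:
    """Extract sent/received counts and loss percentage from ping output."""
    match = SUMMARY_RE.search(output)
    if match is None:
        raise ValueError("no ping summary line found")
    sent, received, loss = match.groups()
    return {"sent": int(sent), "received": int(received), "loss_pct": float(loss)}


sample = """--- 172.31.48.137 ping statistics ---
7 packets transmitted, 7 received, 0% packet loss, time 6142ms
rtt min/avg/max/mdev = 0.016/0.026/0.037/0.008 ms"""

print(parse_ping_summary(sample))
```

Remember that a result showing 100 percent loss only tells you that no echo replies came back. It does not prove that the host is down.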

The hping utility is a more advanced version of the ping command that allows you to customize the probes it sends, increasing the likelihood that a live host will be detected even when standard echo requests are blocked. hping can also be used to generate handcrafted packets as part of a penetration test. Here's an example of the hping command in action:

    [~/]$ hping -p 80 -S 172.31.48.137
    HPING 172.31.48.137 (eth0 172.31.48.137): S set, 40 headers + 0 data bytes.
    len=44 ip=172.31.48.137 ttl=45 DF id=0 sport=80 flags=SA seq=0 win=29200 rtt=20.0ms
    len=44 ip=172.31.48.137 ttl=45 DF id=0 sport=80 flags=SA seq=1 win=29200 rtt=19.7ms
    len=44 ip=172.31.48.137 ttl=45 DF id=0 sport=80 flags=SA seq=2 win=29200 rtt=19.8ms
    len=44 ip=172.31.48.137 ttl=44 DF id=0 sport=80 flags=SA seq=3 win=29200 rtt=20.1ms
    len=44 ip=172.31.48.137 ttl=46 DF id=0 sport=80 flags=SA seq=4 win=29200 rtt=20.2ms
    len=44 ip=172.31.48.137 ttl=45 DF id=0 sport=80 flags=SA seq=5 win=29200 rtt=20.5ms
    len=44 ip=172.31.48.137 ttl=46 DF id=0 sport=80 flags=SA seq=6 win=29200 rtt=20.2ms
    ^C
    --- 172.31.48.137 hping statistic ---
    26 packets transmitted, 26 packets received, 0% packet loss
    Round-trip min/avg/max = 19.2/20.0/20.8

In this command, the -p 80 flag was used to specify that the probes should take place using TCP port 80. This port is a strong choice because it is used to host web servers. The -S flag indicates that the TCP SYN flag should be set, indicating a request to open a connection. Any remote target running an HTTP web server would be likely to respond to this request because it is indistinguishable from a legitimate web connection request.
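A similar TCP-based liveness check can be approximated with Python's standard socket module. Note the difference from hping's -S option: this sketch completes a full TCP connection rather than sending a bare SYN, because crafting individual packets requires raw sockets and elevated privileges. The function name and the default timeout are assumptions chosen for illustration.

```python
import socket


def tcp_port_answers(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a full TCP connection to host:port succeeds."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        # connect_ex returns 0 on success and an error code otherwise,
        # so refused or timed-out attempts do not raise an exception.
        return sock.connect_ex((host, port)) == 0
```

Calling tcp_port_answers("172.31.48.137", 80) would report whether the web port on the example host accepts connections. As with ping, a False result may mean that the host is down, that the port is closed, or that a firewall dropped the traffic.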

Port Scanning and Service Discovery Techniques and Tools

Port scanning tools are designed to send traffic to remote systems and then gather responses that provide information about the systems and the services they provide. They are one of the most frequently used tools when gathering information about a network and the devices that are connected to it. Because of this, port scans are often the first step in an active reconnaissance of an organization.

Port scanners have a number of common features, including the following:

- Host discovery

- Port scanning and service identification

- Service version identification

- Operating system identification

An important part of port scanning is an understanding of common ports and services. Ports 0–1023 are referred to as well-known ports or system ports, but there are quite a few higher ports that are commonly of interest when conducting port scanning. Ports ranging from 1024 to 49151 are registered ports and are assigned by the Internet Assigned Numbers Authority (IANA) when requested. Many are also used arbitrarily for services. Since ports can be manually assigned, simply assuming that a service running on a given port matches the common usage isn't always a good idea. In particular, many SSH and HTTP/HTTPS servers are run on alternate ports, either to allow multiple web services to have unique ports or to avoid port scanning that only targets their normal port.
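The port ranges described above are easy to encode when you are sorting scan results. This short Python sketch uses a hypothetical function name and adds the third IANA range, 49152 to 65535, which is set aside for dynamic or private use.

```python
def port_category(port: int) -> str:
    """Classify a TCP or UDP port number by its IANA range."""
    if not 0 <= port <= 65535:
        raise ValueError("port must be between 0 and 65535")
    if port <= 1023:
        return "well-known"
    if port <= 49151:
        return "registered"
    return "dynamic/private"


for port in (22, 8080, 49152):
    print(port, port_category(port))
```

The category describes only how the number is assigned. It says nothing about which service is actually listening there, which is why service identification remains a separate step.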

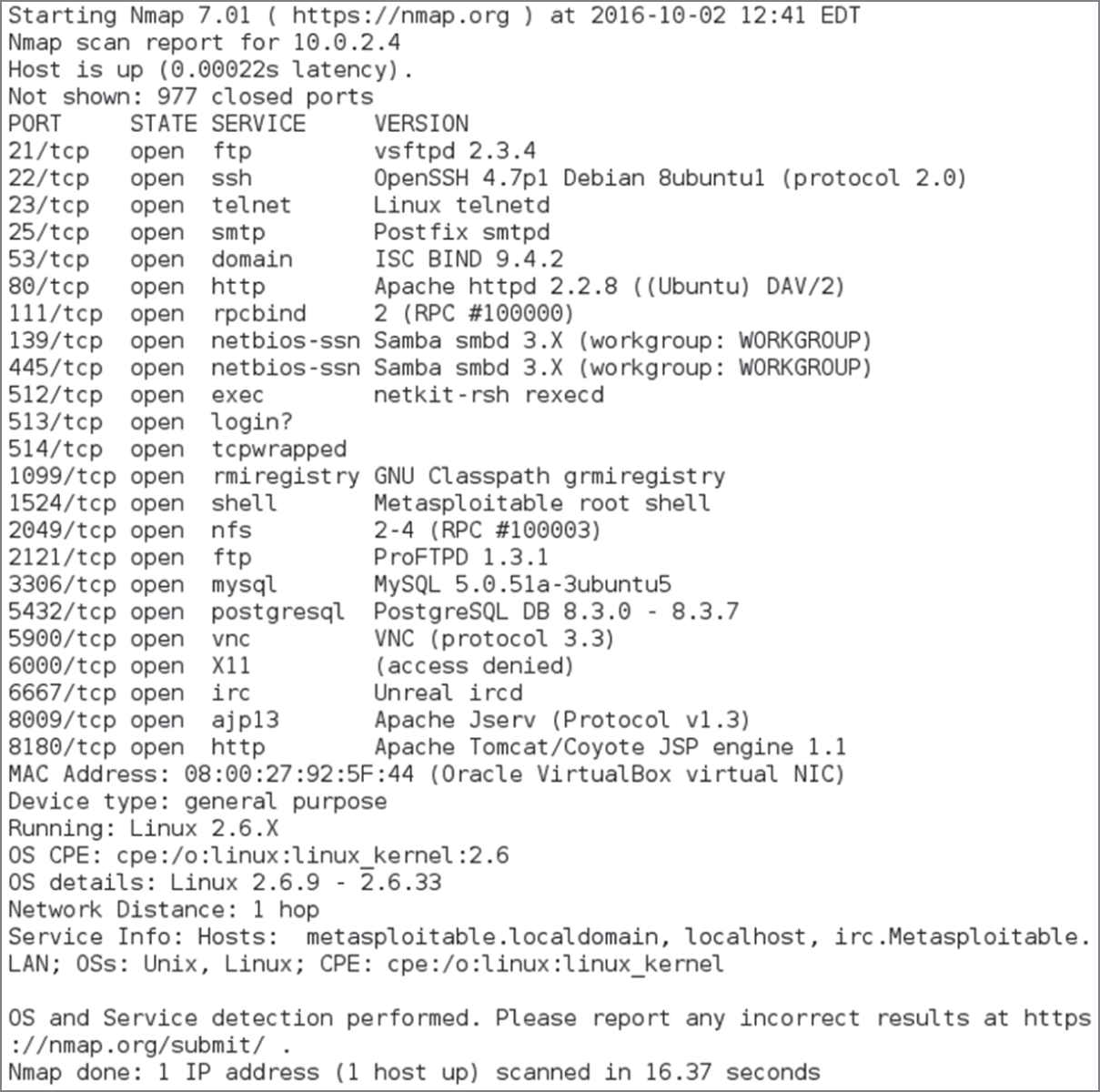

Analysis of scan data can be an art, but basic knowledge of how to read a scan is quite useful since scans can provide information about what hosts are on a network, what services they are running, and clues about whether they are vulnerable to attacks. In Figure 3.2, a vulnerable Linux system with a wide range of services available has been scanned. To read this scan, you can start at the top with the command used to run it. The nmap port scanner (which we will discuss in more depth in a few pages) was run with the -O option, resulting in an attempt at operating system identification. The -P0 flag tells nmap to skip pinging the system before scanning, and the -sS flag performed a TCP SYN scan, which sends connection attempts to each port. Finally, we see the IP address of the remote system. By default, nmap scans 1,000 common ports, and nmap discovered 23 open ports out of that list.

FIGURE 3.2 Nmap scan results

Next, the scan shows us the ports it found open, whether they are TCP or UDP, their state (which can be open if the service is accessible, closed if it is not, or filtered if there is a firewall or similar protection in place), and nmap's guess about which service is listening on each port. Nmap service identification can be wrong, and it is not as full featured as some vulnerability scanners, but the service list is a useful starting place.
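When you need to work with many scans, it helps to turn the port table into structured data. The Python sketch below parses lines in nmap's normal output layout of port/protocol, state, and service. The sample text is a hypothetical excerpt written for illustration, not the output shown in Figure 3.2, and the function name is an assumption.

```python
import re

# Matches rows such as "22/tcp   open  ssh" in nmap's normal output.
PORT_LINE = re.compile(r"^(\d+)/(tcp|udp)\s+(\S+)\s+(\S+)")


def parse_nmap_ports(output: str) -> list:
    """Return one dict per port row found in nmap's normal output."""
    rows = []
    for line in output.splitlines():
        match = PORT_LINE.match(line.strip())
        if match:
            port, proto, state, service = match.groups()
            rows.append(
                {"port": int(port), "proto": proto, "state": state, "service": service}
            )
    return rows


# Hypothetical excerpt used only to exercise the parser.
sample_scan = """PORT     STATE    SERVICE
21/tcp   open     ftp
22/tcp   open     ssh
80/tcp   filtered http"""

for row in parse_nmap_ports(sample_scan):
    print(row)
```

For anything beyond quick analysis, nmap's XML output (the -oX option) is a more reliable format to parse than the human-readable listing.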

Finally, after we see our services listed, we get the MAC address—in this case, indicating that the system is running as a VM under Oracle's VirtualBox virtualization tool and that it is running a 2.6 Linux kernel. This kernel is quite old and reached its end-of-life support date in February 2016, meaning that it's likely to be vulnerable.

The final things to note about this scan are the time it took to run and how many hops there are to the host. This scan completed in less than two seconds, which tells us that the host responded quickly and that the host was only one hop away—it was directly accessible from the scanning host. A more complex network path will show more hops, and scanning more hosts or additional security on the system or between the scanner and the remote target can slow things down.

OS Fingerprinting

The ability to identify an operating system based on the network traffic that it sends is known as operating system fingerprinting, and it can provide useful information when performing reconnaissance. This is typically done using TCP/IP stack fingerprinting techniques that focus on comparing responses to TCP and UDP packets sent to remote hosts. Differences in how operating systems and even operating system versions respond, what TCP options they support, what order they send packets in, and a host of other details can provide a good guess at what OS the remote system is running.

Service and Version Identification

The ability to identify a service can provide useful information about potential vulnerabilities, as well as verify that the service that is responding on a given port matches the service that typically uses that port. Service identification is usually done in one of two ways: either by connecting and grabbing the banner or connection information provided by the service or by comparing its responses to the signatures of known services.
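The signature-matching approach can be illustrated with a toy example in Python. The signature table below is hypothetical and far smaller than the databases that real scanners use; it simply shows how a banner, rather than a port number, can drive identification.

```python
import re

# Hypothetical, deliberately tiny signature table: (pattern, service name).
SIGNATURES = [
    (re.compile(r"^SSH-\d+\.\d+-"), "ssh"),
    (re.compile(r"^220[ -].*FTP", re.IGNORECASE), "ftp"),
    (re.compile(r"^220[ -].*SMTP", re.IGNORECASE), "smtp"),
    (re.compile(r"^HTTP/\d\.\d \d{3}"), "http"),
]


def identify_service(banner: str) -> str:
    """Return the first service whose signature matches the banner."""
    for pattern, name in SIGNATURES:
        if pattern.search(banner):
            return name
    return "unknown"


print(identify_service("SSH-2.0-OpenSSH_7.4"))
print(identify_service("220 mail.example.com ESMTP Postfix"))
```

Because the match is based on what the service says about itself, an SSH server answering on port 8080 would still be labeled as SSH. Banners can be altered by administrators, so treat the result as evidence rather than proof.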

Figure 3.3 shows the same system scanned in Figure 3.2 with the nmap -sV flag used. The -sV flag grabs banners and performs other service version validation steps to capture additional information, which it checks against a database of services.

The basic nmap output remains the same as Figure 3.2, but we have added information in the Version column, including the service name as well as the version and sometimes additional detail about the service protocol version or other details. This information can be used to check for patch levels or vulnerabilities and can also help to identify services that are running on nonstandard ports.

Common Tools

Nmap is the most commonly used command-line port scanner, and it is a free, open source tool. It provides a broad range of capabilities, including multiple scan modes intended to bypass firewalls and other network protection devices. In addition, it provides support for operating system fingerprinting, service identification, and many other capabilities.

FIGURE 3.3 Nmap service and version detection

Using nmap's basic functionality is quite simple. Port scanning a system merely requires that nmap be installed and that you provide the target system's hostname or IP address. Figure 3.4 shows an nmap scan of a Windows 10 system with its firewall turned off. The nmap scan provides quite a bit of information about the system—first, we see a series of common Microsoft ports, including 135, 139, and 445, running Microsoft Remote Procedure Call (MSRPC), NetBIOS, and Microsoft's domain services, which are useful indicators that a remote system is a Windows host. The additional ports that are shown also reinforce that assessment, since ICSLAP (the local port opened by Internet Connection Sharing) is used for Microsoft internal proxying, Web Services on Devices API (WSDAPI) is a Microsoft devices API, and each of the other ports can be similarly easily identified by using a quick search for the port and service name nmap provides. This means that you can often correctly guess details about a system even without an OS identification scan.

FIGURE 3.4 Nmap of a Windows 10 system

A more typical nmap scan is likely to include a number of nmap's command-line flags:

- A scan technique, like TCP SYN, which is the most popular scan method because it uses a TCP SYN packet to verify a service response, and is quick and unobtrusive. Other connection methods are Connect, which completes a full connection; UDP scans for non-TCP services; ACK scans, which are used to map firewall rules; and a variety of other methods for specific uses.

- A port range, either specifying ports or including the full 1–65535 range. Scanning the full range of ports can be very slow, but it can be useful to identify hidden or unexpected services. Fortunately, nmap's default ports are likely to help find and identify most systems.

- Service version detection using the -sV flag, which as shown earlier can provide additional detail but may not be necessary if you intend to use a vulnerability scanner to follow up on your scans.

- OS detection using the -O flag, which can help provide additional information about systems on your network.

Nmap also has an official graphical user interface, Zenmap, which provides additional visualization capabilities, including a topology view mode that provides information about how hosts fit into a network.

Angry IP Scanner is a multiplatform (Windows, Linux, and macOS) port scanner with a graphical user interface. In Figure 3.5, you can see a sample scan run with Angry IP Scanner with the details for a single scanned host displayed. Unlike nmap, Angry IP Scanner does not provide detailed identification for services and operating systems, but you can turn on different modules called “fetchers,” including ports, TTL, filtered ports, and others. When running Angry IP Scanner, it is important to configure the ports scanned under the Preferences menu; otherwise, no port information will be returned! Unfortunately, Angry IP Scanner requires Java, which means that it may not run on systems where Java is not installed for security reasons.

FIGURE 3.5 Angry IP Scanner

Angry IP Scanner is not as feature rich as nmap, but the same basic techniques can be used to gather information about hosts based on the port scan results. Figure 3.5 shows the information from a scan of a home router. Note that unlike nmap, Angry IP Scanner does not provide service names or service identification information.

In addition to these two popular scanners, security tools often build in a port scanning capability to support their primary functionality. Metasploit, the Qualys vulnerability management platform, OpenVAS, and Tenable's Nessus vulnerability scanner are all examples of security tools that have built-in port scanning capabilities as part of their suite of tools.

Passive Footprinting

Passive footprinting is far more challenging than active information gathering. Passive analysis relies on information that is available about the organization, systems, or network without performing your own probes. Passive footprinting typically relies on logs and other existing data, which may not provide all the information needed to fully identify targets. Its reliance on stored data means that it may also be out of date!

Despite this, you can use a number of common techniques if you need to perform passive footprinting. Each relies on access to existing data, or to a place where data can be gathered in the course of normal business operations.

Log and Configuration Analysis

Log files can provide a treasure trove of information about systems and networks. If you have access to local system configuration data and logs, you can use the information they contain to build a thorough map of how systems work together, which users and systems exist, and how they are configured. Over the next few pages, we will look at how each of these types of log files can be used and some of the common locations where they can be found.

Network Devices

Network devices log their own activities, status, and events including traffic patterns and usage. Network device information includes network device logs, network device configuration files, and network flows.

Network Device Logs

By default, many network devices log messages to their console ports, which means that only a user logged in at the console will see them. Fortunately, most managed networks also send network logs to a central log server using the syslog utility. Many networks also leverage the Simple Network Management Protocol (SNMP) to send device information to a central control system.

Network device log files often have a log level associated with them. Although log level definitions vary, many are similar to Cisco's log levels, which are shown in Table 3.1.

TABLE 3.1 Cisco log levels

| Level | Level name | Example |
| --- | --- | --- |
| 0 | Emergencies | Device shutdown due to failure |
| 1 | Alerts | Temperature limit exceeded |
| 2 | Critical | Software failure |
| 3 | Errors | Interface down message |
| 4 | Warning | Configuration change |
| 5 | Notifications | Line protocol up/down |
| 6 | Information | ACL violation |
| 7 | Debugging | Debugging messages |

Network device logs are often not as useful as the device configuration data when you are focused on intelligence gathering, although they can provide some assistance with topology discovery based on the devices they communicate with. During penetration tests or when you are conducting security operations, network device logs can provide useful warning of attacks or reveal configuration or system issues.

The Cisco router log shown in Figure 3.6 is accessed using the command show logging and can be filtered using an IP address, a list number, or a number of other variables. Here, we see a series of entries with a single packet denied from a remote host 10.0.2.50. The remote host is attempting to connect to its target system on a steadily increasing TCP port, likely indicating a port scan is in progress and being blocked by a rule in access list 210.
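The pattern described here, one source being denied on many different ports, can be flagged with a simple heuristic. The Python sketch below assumes that the denied entries have already been reduced to (source IP, destination port) pairs, because the exact log format depends on the device. The function name and the threshold of five distinct ports are arbitrary assumptions.

```python
from collections import defaultdict


def suspected_scanners(denied, min_ports=5):
    """Return source IPs that were denied on at least min_ports distinct ports."""
    ports_by_source = defaultdict(set)
    for source_ip, dest_port in denied:
        ports_by_source[source_ip].add(dest_port)
    return sorted(
        ip for ip, ports in ports_by_source.items() if len(ports) >= min_ports
    )


# Hypothetical entries: one host walking through ports 20-29, and one
# host that is repeatedly denied on a single port.
denied_entries = [("10.0.2.50", port) for port in range(20, 30)]
denied_entries += [("10.0.2.77", 443)] * 3

print(suspected_scanners(denied_entries))
```

Counting distinct ports is a simplification of the "steadily increasing port" pattern. It also catches scans that visit ports in random order, but it will miss slow scans that are spread across a long period unless you review a wide enough window of log data.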

FIGURE 3.6 Cisco router log

Network Device Configuration

Configuration files from network devices can be invaluable when mapping network topology. Configuration files often include details of the network, routes, systems that the devices interact with, and other network details. In addition, they can provide details about syslog and SNMP servers, administrative and user account information, and other configuration items useful as part of information gathering.

Figure 3.7 shows a portion of the SNMP configuration from a typical Cisco router. Reading the entire file shows routing information, interface information, and details that will help you place the router in a network topology. The section shown provides in-depth detail of the SNMP community strings, the contact for the device, as well as what traps are enabled and where they are sent. In addition, you can see that the organization uses Terminal Access Controller Access Control System (TACACS) servers for access control and what the IP addresses of those servers are. As a security analyst, this is useful information; for an attacker, this could be the start of an effective social engineering attack!

FIGURE 3.7 SNMP configuration from a typical Cisco router

Netflows

Netflow is a Cisco network protocol that collects IP traffic information, allowing network traffic monitoring. Flow data is used to provide a view of traffic flow and volume. A typical flow capture includes the IP and port source and destination for the traffic and the class of service. Netflows and a netflow analyzer can help identify service problems and baseline typical network behavior and can also be useful in identifying unexpected behaviors.
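To show how flow data supports baselining, the following Python sketch totals traffic by source address. The Flow record is a hypothetical simplification that carries only the fields mentioned above plus a byte count; real flow exports contain many more fields.

```python
from collections import Counter
from typing import NamedTuple


class Flow(NamedTuple):
    """Hypothetical, simplified flow record."""
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    byte_count: int


def top_talkers(flows, n=3):
    """Return the n source IPs that sent the most bytes, largest first."""
    totals = Counter()
    for flow in flows:
        totals[flow.src_ip] += flow.byte_count
    return totals.most_common(n)


sample_flows = [
    Flow("10.0.2.15", 51000, "10.0.2.1", 443, 4000),
    Flow("10.0.2.15", 51001, "10.0.2.1", 443, 3000),
    Flow("10.0.2.40", 52000, "10.0.2.1", 53, 200),
]

print(top_talkers(sample_flows))
```

Comparing a summary like this against what is normal for your network is one way to spot a host that suddenly begins sending far more traffic than usual.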

Netstat

In addition to network log files, local host network information can be gathered using netstat in Windows, Linux, and macOS, as well as most Unix and Unix-like operating systems. Netstat provides a wealth of information, with its capabilities varying slightly between operating systems. It can provide such information as the following:

- Active TCP and UDP connections, filtered by each of the major protocols: TCP, UDP, ICMP, IP, IPv6, and others. Figure 3.8 shows Linux netstat output for netstat -ta, showing active TCP connections. Here, an SSH session is open to a remote host. The -u flag would work the same way for UDP; -w shows RAW, and -x shows Unix socket connections.

FIGURE 3.8 Linux netstat -ta output

- Which executable file created the connection, or its process ID (PID). Figure 3.9 shows a Windows netstat call using the -o flag to identify process numbers, which can then be referenced using the Windows Task Manager.

FIGURE 3.9 Windows netstat -o output

- Ethernet statistics on how many bytes and packets have been sent and received. In Figure 3.10, netstat is run on a Windows system with the -e flag, providing interface statistics. This tracks the number of bytes sent and received, as well as errors, discards, and traffic sent via unknown protocols.

FIGURE 3.10 Windows netstat -e output

- Route table information, including IPv4 and IPv6 information, as shown in Figure 3.11. This is retrieved using the -nr flag and includes various information depending on the OS, with the Windows version showing the destination network, netmask, gateway, the interface the route is associated with, and a metric for the route that captures link speed and other details to establish preference for the route.

FIGURE 3.11 Windows netstat -nr output

This means that running netstat from a system can provide information about both the machine's network behavior and what the local network looks like. Knowing which machines a system has communicated with, or is currently communicating with, can help you understand local topology and services. Best of all, because netstat is available by default on so many operating systems, it makes sense to presume it will exist and that you can use it to gather information.
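As a sketch of how netstat output can feed a topology map, the Python function below pulls the remote addresses of established TCP connections from Linux-style output. The sample text is a hypothetical excerpt, the function name is an assumption, and the parser expects numeric addresses such as those produced when the -n flag is added.

```python
def established_peers(netstat_output: str) -> set:
    """Return remote IP addresses of ESTABLISHED TCP connections."""
    peers = set()
    for line in netstat_output.splitlines():
        fields = line.split()
        # Expected columns: Proto Recv-Q Send-Q Local Foreign State
        if (
            len(fields) >= 6
            and fields[0].startswith("tcp")
            and fields[5] == "ESTABLISHED"
        ):
            # Split on the last colon so only the port is removed.
            peers.add(fields[4].rsplit(":", 1)[0])
    return peers


# Hypothetical excerpt of "netstat -tan" output on a Linux host.
sample_netstat = """Proto Recv-Q Send-Q Local Address    Foreign Address   State
tcp        0      0 0.0.0.0:22       0.0.0.0:*         LISTEN
tcp        0      0 10.0.2.15:22     10.0.2.2:51234    ESTABLISHED"""

print(established_peers(sample_netstat))
```

Column layouts differ between operating systems, so a parser like this needs to be adjusted for Windows or macOS output.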

DHCP Logs and DHCP Server Configuration Files

The Dynamic Host Configuration Protocol (DHCP) is a client/server protocol that provides an IP address as well as information such as the default gateway and subnet mask for the network segment that the host will reside on. When you are conducting passive reconnaissance, DHCP logs from the DHCP server for a network can provide a quick way to identify many of the hosts on the network. If you combine DHCP logs with other logs, such as firewall logs, you can determine which hosts are provided with dynamic IP addresses and which hosts are using static IP addresses. As you can see in Figure 3.12, a Linux dhcpd.conf file provides information about hosts and the network they are accessing.

FIGURE 3.12 Linux dhcpd.conf file

In this example, the DHCP server provides IP addresses between 192.168.1.20 and 192.168.1.240; the router for the network is 192.168.1.1, and the DNS servers are 192.168.1.1 and 192.168.1.2. We also see a single system named “Demo” with a fixed DHCP address. Systems with fixed DHCP addresses are often servers or systems that need to have a known IP address for a specific function and are thus more interesting when gathering information.
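The same details can be pulled from a configuration file programmatically. In the Python sketch below, the sample configuration is a hypothetical reconstruction based on the values described above, not a copy of Figure 3.12; the subnet declaration, MAC address, and fixed address are made-up placeholders. The regular expressions handle only this simple layout.

```python
import re

# Hypothetical dhcpd.conf built from the values described in the text.
SAMPLE_CONF = """
subnet 192.168.1.0 netmask 255.255.255.0 {
  range 192.168.1.20 192.168.1.240;
  option routers 192.168.1.1;
  option domain-name-servers 192.168.1.1, 192.168.1.2;
}
# The MAC and fixed address below are made-up placeholders.
host Demo {
  hardware ethernet 00:11:22:33:44:55;
  fixed-address 192.168.1.10;
}
"""


def summarize_dhcpd_conf(text: str) -> dict:
    """Extract address ranges, routers, DNS servers, and fixed hosts."""
    ranges = re.findall(r"range\s+(\S+)\s+(\S+);", text)
    routers = re.findall(r"option routers\s+([^;]+);", text)
    dns = re.findall(r"option domain-name-servers\s+([^;]+);", text)
    fixed = re.findall(r"host\s+(\S+)\s*\{[^}]*?fixed-address\s+([^;]+);", text)
    return {
        "ranges": ranges,
        "routers": [r.strip() for r in routers],
        "dns": [s.strip() for entry in dns for s in entry.split(",")],
        "fixed_hosts": dict(fixed),
    }


print(summarize_dhcpd_conf(SAMPLE_CONF))
```

The fixed_hosts entries are the ones to look at first, since statically assigned systems are often servers.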

DHCP logs for Linux are typically found in /var/log/dhcpd.log or by using the journalctl command to view logs, depending on the distribution you are using. DHCP logs can provide information about systems, their MAC addresses, and their IP addresses, as seen in this sample log entry:

    Oct 5 02:28:11 demo dhcpd[3957]: reuse_lease: lease age 80 (secs) under 25% threshold, reply with unaltered, existing lease
    Oct 5 02:28:11 demo dhcpd[3957]: DHCPREQUEST for 10.0.2.40 (10.0.2.32) from 08:00:27:fa:25:8e via enp0s3
    Oct 5 02:28:11 demo dhcpd[3957]: DHCPACK on 10.0.2.40 to 08:00:27:fa:25:8e via enp0s3
    Oct 5 02:29:17 demo dhcpd[3957]: reuse_lease: lease age 146 (secs) under 25% threshold, reply with unaltered, existing lease
    Oct 5 02:29:17 demo dhcpd[3957]: DHCPREQUEST for 10.0.2.40 from 08:00:27:fa:25:8e via enp0s3
    Oct 5 02:29:17 demo dhcpd[3957]: DHCPACK on 10.0.2.40 to 08:00:27:fa:25:8e via enp0s3
    Oct 5 02:29:38 demo dhcpd[3957]: DHCPREQUEST for 10.0.2.40 from 08:00:27:fa:25:8e via enp0s3
    Oct 5 02:29:38 demo dhcpd[3957]: DHCPACK on 10.0.2.40 to 08:00:27:fa:25:8e (demo) via enp0s3

This log shows a system with IP address 10.0.2.40 renewing its existing lease. The system has a hardware address of 08:00:27:fa:25:8e, and the server runs its DHCP server on the local interface enp0s3.
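Building an IP-to-MAC inventory from a log like this takes only a few lines of Python. The sketch below reads the DHCPACK entries, which record the leases that the server actually confirmed. The function name is hypothetical, and the pattern assumes the dhcpd message format shown in the sample.

```python
import re

# Matches "DHCPACK on <ip> to <mac>" as written by dhcpd in the sample.
ACK_RE = re.compile(r"DHCPACK on (\S+) to ([0-9a-f:]{17})", re.IGNORECASE)


def leases_from_log(log_text: str) -> dict:
    """Map each acknowledged IP address to the most recent MAC address."""
    leases = {}
    for ip, mac in ACK_RE.findall(log_text):
        leases[ip] = mac.lower()
    return leases


sample_log = """Oct 5 02:28:11 demo dhcpd[3957]: DHCPREQUEST for 10.0.2.40 (10.0.2.32) from 08:00:27:fa:25:8e via enp0s3
Oct 5 02:28:11 demo dhcpd[3957]: DHCPACK on 10.0.2.40 to 08:00:27:fa:25:8e via enp0s3
Oct 5 02:29:38 demo dhcpd[3957]: DHCPACK on 10.0.2.40 to 08:00:27:fa:25:8e (demo) via enp0s3"""

print(leases_from_log(sample_log))
```

The first three bytes of each MAC address identify the hardware vendor, which can add another clue about what type of device holds the lease.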

Firewall Logs and Configuration Files

Router and firewall configuration files and logs often contain information about both successful and blocked connections. This means that analyzing router and firewall access control lists (ACLs) and logs can provide useful information about what traffic is allowed and can help with topological mapping by identifying where systems are based on traffic allowed through or blocked. Configuration files make this even easier, since they can be directly read to understand how systems interact with the firewall.

Firewall logs can also allow penetration testers to reverse-engineer firewall rules based on the contents of the logs. Even without the actual configuration files, log files can provide a good view of how traffic flows. Like many other network devices, firewalls often use log levels to separate informational and debugging messages from more important messages. In addition, they typically have a vendor-specific firewall event log format that provides information based on the vendor's logging standards.

Organizations use a wide variety of firewalls, including those from Cisco, Palo Alto, and Check Point, which means that you may encounter logs in multiple formats. Fortunately, all three have common features. Each provides a date/timestamp and details of the event in a format intended to be understandable. For example, Cisco ASA firewall logs can be accessed from the console using the show logging command (often typed as show log). Entries are reasonably readable, listing the date and time, the system, and the action taken. For example, a log might read

    Sep 13 10:05:11 10.0.0.1 %ASA-5-111008: User 'ASAadmin' executed the 'enable' command

This entry indicates that the user ASAadmin ran the Cisco enable command, which is typically used to enter privileged mode on the device. If ASAadmin was not supposed to use administrative privileges, this would be an immediate red flag in your investigation.
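The %ASA-5-111008 tag in this entry packs in three pieces of information: the facility (ASA), the severity level (5), and a message identifier (111008). The Python sketch below splits out those parts and looks up the level name from Table 3.1. The function name is hypothetical, and the pattern is an assumption based on this single sample line.

```python
import re

# Level names as listed in Table 3.1.
LEVEL_NAMES = {
    0: "Emergencies", 1: "Alerts", 2: "Critical", 3: "Errors",
    4: "Warning", 5: "Notifications", 6: "Information", 7: "Debugging",
}

# Matches tags such as "%ASA-5-111008: message text".
TAG_RE = re.compile(r"%([A-Z0-9_]+)-([0-7])-(\w+):\s*(.*)")


def parse_cisco_tag(line: str) -> dict:
    """Split a Cisco-style log line into facility, level, ID, and message."""
    match = TAG_RE.search(line)
    if match is None:
        raise ValueError("no %FACILITY-LEVEL-ID tag found")
    facility, level, message_id, message = match.groups()
    return {
        "facility": facility,
        "level": int(level),
        "level_name": LEVEL_NAMES[int(level)],
        "message_id": message_id,
        "message": message,
    }


entry = "Sep 13 10:05:11 10.0.0.1 %ASA-5-111008: User 'ASAadmin' executed the 'enable' command"
print(parse_cisco_tag(entry))
```

Sorting entries by level lets you review the most serious events first and set aside informational and debugging noise.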

A review of router/firewall ACLs can also be conducted manually. A portion of a sample Cisco router ACL is shown here:

    ip access-list extended inb-lan
     permit tcp 10.0.0.0 0.255.255.255 any eq 22
     permit tcp 172.16.0.0 0.15.255.255 any eq 22
     permit tcp host 192.168.2.1 any eq 22
     deny tcp 8.16.0.0 0.15.255.255 any eq 22

This ACL segment names the access list and then sets a series of permitted actions along with the networks that are allowed to perform the actions. This set of rules specifically allows all addresses in the 10.0.0.0 network to use TCP port 22 to send traffic, thus allowing SSH. The 172.16.0.0 network is allowed the same access, as is a host with IP address 192.168.2.1. The final deny rule will prevent the named network range from sending SSH traffic.

If you encounter firewall or router configuration files, log files, or rules on the exam, it may help to rewrite them into language you can read more easily. To do that, start with the action or command; then find the targets, users, or other things that are affected. Finally, find any modifiers that specify what will occur or what did occur. In the previous router configuration, you could write permit tcp 10.0.0.0 0.255.255.255 any eq 22 as “Allow TCP traffic from the 10.0.0.0 network on any source port to destination port 22.” Even if you're not familiar with the specific configuration or commands, this can help you understand many of the entries you will encounter.
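This rewriting process can also be automated for simple rules. The Python sketch below converts a Cisco wildcard mask, which is the inverse of a subnet mask, into CIDR notation and then produces a plain-language sentence. It handles only the rule shape used in the sample ACL (an action, tcp, a source, any destination, and eq with a port), and the function names are hypothetical.

```python
import ipaddress


def wildcard_to_network(address: str, wildcard: str) -> ipaddress.IPv4Network:
    """Convert an address and Cisco wildcard mask to a CIDR network."""
    # A wildcard mask is the bitwise inverse of a subnet mask.
    inverted = int(ipaddress.IPv4Address(wildcard)) ^ 0xFFFFFFFF
    netmask = ipaddress.IPv4Address(inverted)
    return ipaddress.ip_network(f"{address}/{netmask}")


def describe_rule(rule: str) -> str:
    """Describe '<permit|deny> tcp <source> any eq <port>' in plain language."""
    tokens = rule.split()
    action = "Allow" if tokens[0] == "permit" else "Block"
    protocol = tokens[1].upper()
    if tokens[2] == "host":
        source, rest = tokens[3], tokens[4:]
    else:
        source, rest = str(wildcard_to_network(tokens[2], tokens[3])), tokens[4:]
    if len(rest) != 3 or rest[:2] != ["any", "eq"]:
        raise ValueError("unsupported rule shape")
    return f"{action} {protocol} traffic from {source} to any destination on port {rest[2]}"


for line in (
    "permit tcp 10.0.0.0 0.255.255.255 any eq 22",
    "permit tcp host 192.168.2.1 any eq 22",
    "deny tcp 8.16.0.0 0.15.255.255 any eq 22",
):
    print(describe_rule(line))
```

Seeing 0.255.255.255 rendered as /8 and 0.15.255.255 rendered as /12 makes it easier to judge at a glance how large each permitted or blocked range is.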

System Log Files

System logs are collected by most systems to provide troubleshooting and other system information. Log information can vary greatly depending on the operating system, how it is configured, and what service and applications the system is running.

Log files can provide information about how systems are configured, what applications are running on them, which user accounts exist on the system, and other details, but they are not typically at the top of the list for reconnaissance. They are gathered if they are accessible, but most log files are kept in a secure location and are not accessible without administrative system access.

Harvesting Data from DNS and Whois

The Domain Name System (DNS) is often one of the first stops when gathering information about an organization. Not only is DNS information publicly available, it is often easily connected to the organization by simply checking for Whois information about their website. With that information available, you can find other websites and hosts to add to your organizational footprint.

DNS and Traceroute Information

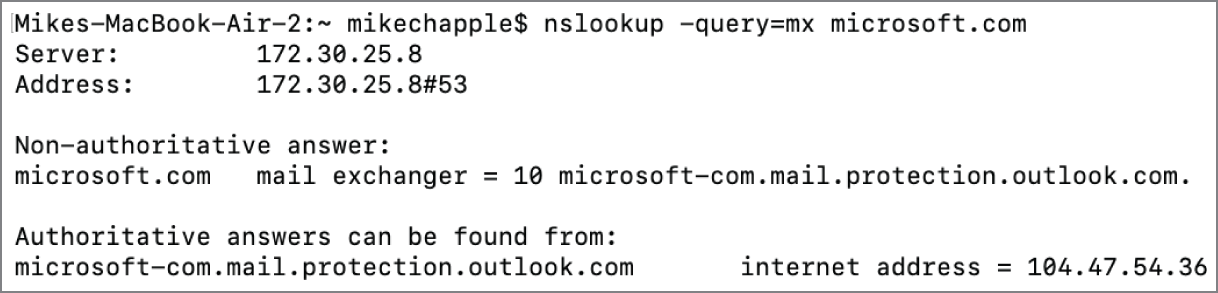

DNS converts domain names like google.com to IP addresses (as shown in Figure 3.13) or from IP addresses to human-understandable domain names. The command for this on Windows, Linux, and macOS systems is nslookup.

FIGURE 3.13 Nslookup for google.com

Once you know the IP address that a system is using, you can look up information about the IP range it resides in. That can provide information about the company or about the hosting services that they use. Nslookup provides a number of additional flags and capabilities, including choosing the DNS server that you use by specifying it as the second parameter, as shown here with a sample query looking up Microsoft.com via Google's public DNS server 8.8.8.8:

nslookup microsoft.com 8.8.8.8

Other types of DNS records can be looked up using the -query flag, including MX, NS, SOA, and ANY as possible entries:

nslookup -query=mx microsoft.com

This results in a response like that shown in Figure 3.14.

FIGURE 3.14 Nslookup using Google's DNS with MX query flag
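To make the record types behind the -query flag more concrete, the following sketch builds the raw DNS query message that a lookup tool would send. It is an illustration under stated assumptions rather than a description of how nslookup is implemented: the function names and the fixed query ID are made up for the example, and only the numeric type codes and message layout come from the DNS protocol itself.

```python
import struct

# Numeric record type codes defined by the DNS protocol.
QTYPES = {"A": 1, "NS": 2, "SOA": 6, "MX": 15, "ANY": 255}


def encode_name(domain):
    """Encode a domain name as length-prefixed labels ending in a zero byte."""
    encoded = b""
    for label in domain.rstrip(".").split("."):
        encoded += bytes([len(label)]) + label.encode("ascii")
    return encoded + b"\x00"


def build_query(domain, record_type="A", query_id=0x1234):
    """Build a standard recursive DNS query for one name and record type."""
    # Header: ID, flags (recursion desired), 1 question, no other sections.
    header = struct.pack("!HHHHHH", query_id, 0x0100, 1, 0, 0, 0)
    # Question: encoded name, record type, class IN (1).
    qtype = QTYPES[record_type.upper()]
    question = encode_name(domain) + struct.pack("!HH", qtype, 1)
    return header + question


packet = build_query("microsoft.com", "mx")
print(packet.hex())
```

Sending these bytes in a UDP datagram to port 53 of a resolver such as 8.8.8.8 would perform the same kind of MX lookup as the command above; parsing the response is left out to keep the sketch short.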

The IP address or hostname also can be used to gather information about the network topology for the system or device that has a given IP address. Using traceroute in Linux or macOS (or tracert on Windows systems), you can see the path packets take to the host. Since the Internet is designed to allow traffic to take the best path, you may see several different paths on the way to the system, but you will typically find that the last few responses stay the same. These are often the local routers and other network devices in an organization's network, and knowing how traffic gets to a system can give you insight into the company's internal network topology. Traceroute can be helpful, but it often provides only part of the story. Figure 3.15 shows a traceroute to the BBC's website: some hops do not respond at all, as shown by the asterisks and “request timed out” entries, and some systems don't respond with hostname data, so the last two systems return only IP addresses.

This traceroute starts by passing through the author's home router, then follows a path through Comcast's network with stops in the South Bend area, and then Chicago. The 4.68.63.125 address without a hostname resolution can be matched to Level 3 Communications using a Whois website. The requests that timed out may be due to blocked ICMP responses or other network issues, but the rest of the path remains clear: another Level 3 Communications host, then a BBC IP address, and two addresses that are registered through RIPE NCC, the European regional Internet registry. Here we can see details of upstream network providers and backbone networks and even start to get an idea of what might be some of the BBC's production network IP ranges.

FIGURE 3.15 Traceroute for bbc.co.uk
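When you collect many traces, it can help to pull out the hops that timed out or returned only an address so that you can follow up on them with Whois. The sketch below is one way to do that for Windows tracert style output. The sample text is invented for illustration (it uses documentation addresses and is not the trace from Figure 3.15), and the function name parse_tracert is hypothetical.

```python
SAMPLE = """
Tracing route to www.example.com [203.0.113.10]
over a maximum of 30 hops:

  1     1 ms    <1 ms     1 ms  gateway.example.net [192.0.2.1]
  2     *        *        *     Request timed out.
  3    24 ms    23 ms    25 ms  198.51.100.7
"""


def parse_tracert(output):
    """Summarize tracert hop lines as (hop, hostname, ip) tuples.

    hostname is None when only an address was returned; hostname and ip
    are both None when the hop timed out.
    """
    hops = []
    for line in output.splitlines():
        fields = line.split()
        if not fields or not fields[0].isdigit():
            continue  # skip the banner and blank lines
        hop = int(fields[0])
        if "timed out" in line:
            hops.append((hop, None, None))
        elif fields[-1].startswith("["):
            # "hostname [address]" form
            hops.append((hop, fields[-2], fields[-1].strip("[]")))
        else:
            hops.append((hop, None, fields[-1]))
    return hops


hops = parse_tracert(SAMPLE)
# Addresses without a hostname are candidates for a Whois lookup.
unresolved = [ip for _, name, ip in hops if ip and name is None]
print(hops)
print(unresolved)
```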

Domains and IP Ranges

Domain names are managed by domain name registrars. Domain registrars are accredited by generic top-level domain (gTLD) registries and/or country code top-level domain (ccTLD) registries. This means that registrars work with the domain name registries to provide registration services: the ability to acquire and use domain names. Registrars provide the interface between customers and the domain registries and handle purchase, billing, and day-to-day domain maintenance, including renewals for domain registrations.

Registrars also handle transfers of domains, either due to a sale or when a domain is transferred to another registrar. This requires authorization by the current domain owner, as well as a release of the domain to the new registrar.

The global IP address space is managed by IANA. In addition, IANA manages the DNS Root Zone, which handles the assignments of both gTLDs and ccTLDs. Regional authority over these resources is handled by five regional Internet registries (RIRs):

- African Network Information Center (AFRINIC) for Africa

- American Registry for Internet Numbers (ARIN) for the United States, Canada, parts of the Caribbean region, and Antarctica

- Asia-Pacific Network Information Centre (APNIC) for Asia, Australia, New Zealand, and other countries in the region

- Latin America and Caribbean Network Information Centre (LACNIC) for Latin America and parts of the Caribbean not covered by ARIN

- Réseaux IP Européens Network Coordination Centre (RIPE NCC) for Central Asia, Europe, the Middle East, and Russia

Each of the RIRs provides Whois services to identify the assigned users of the IP space they are responsible for, as well as other services that help to ensure that the underlying IP and DNS foundations of the Internet function for their region.

DNS Entries

In addition to the information provided using nslookup, DNS entries can provide useful information about systems simply through the hostname. A system named “AD4” is a more likely target for Active Directory–based exploits and Windows Server–specific scans, whereas hostnames that reflect a specific application or service can provide both target information and a clue for social engineering and human intelligence activities.

DNS Discovery

External DNS information for an organization is provided as part of its Whois information, providing a good starting place for DNS-based information gathering. Additional DNS servers may be identified either as part of active scanning or passive information gathering based on network traffic or logs, or even by reviewing an organization's documentation. Active discovery can be done using a port scan that searches for systems providing DNS services on UDP or TCP port 53. Once you have found a DNS server, you can query it using dig or other DNS lookup commands, or you can test it to see if it supports zone transfers, which can make acquiring organizational DNS data easy.

Zone Transfers

One way to gather information about an organization is to perform a zone transfer. Zone transfers are intended to be used to replicate DNS databases between DNS servers, which makes them a powerful information-gathering tool if a target's DNS servers allow a zone transfer. For that reason, most DNS servers are set to prohibit zone transfers to servers that aren't their trusted DNS peers, but security analysts, penetration testers, and attackers are still likely to check to see if a zone transfer is possible.

To check if your DNS server allows zone transfers from the command line, you can use either host or dig:

host -t axfr domain.name dns-server

dig axfr @dns-server domain.name

Running this against a DNS server that allows zone transfers will result in a large file with data like the following dump from digi.ninja, a site that allows practice zone transfers for security practitioners:

; <<>> DiG 9.9.5-12.1-Debian <<>> axfr @nsztm1.digi.ninja zonetransfer.me
; (1 server found)
;; global options: +cmd
zonetransfer.me. 7200 IN SOA nsztm1.digi.ninja. robin.digi.ninja. 2014101603 172800 900 1209600 3600
zonetransfer.me. 7200 IN RRSIG SOA 8 2 7200 20160330133700 20160229123700 44244 zonetransfer.me. GzQojkYAP8zuTOB9UAx66mTDiEGJ26hVIIP2ifk2DpbQLrEAPg4M77i4 M0yFWHpNfMJIuuJ8nMxQgFVCU3yTOeT/EMbN98FYC8lVYwEZeWHtbMmS 88jVlF+cOz2WarjCdyV0+UJCTdGtBJriIczC52EXKkw2RCkv3gtdKKVa fBE=
zonetransfer.me. 7200 IN NS nsztm1.digi.ninja.
zonetransfer.me. 7200 IN NS nsztm2.digi.ninja.
zonetransfer.me. 7200 IN RRSIG NS 8 2 7200 20160330133700 20160229123700 44244 zonetransfer.me. TyFngBk2PMWxgJc6RtgCE/RhE0kqeWfwhYSBxFxezupFLeiDjHeVXo+S WZxP54Xvwfk7jlFClNZ9lRNkL5qHyxRElhlH1JJI1hjvod0fycqLqCnx XIqkOzUCkm2Mxr8OcGf2jVNDUcLPDO5XjHgOXCK9tRbVVKIpB92f4Qal ulw=
zonetransfer.me. 7200 IN A 217.147.177.157

This transfer starts with a start of authority (SOA) record, which lists the primary name server; the contact for it, robin.digi.ninja (which should be read as robin@digi.ninja); and the current serial number for the domain, 2014101603. It also provides four timers: the time secondary name servers should wait between checks for changes, 172,800 seconds; the time a secondary name server should wait before retrying if it fails to refresh, 900 seconds; the time that a secondary name server can claim to have authoritative information without a successful refresh, 1,209,600 seconds (the expiration of the record, two weeks); and the minimum TTL for the domain, 3,600 seconds. Both of the name servers for the domain are also listed (nsztm1 and nsztm2), and MX records and other details are contained in the file. These details, plus the full list of DNS entries for the domain, can be very useful when gathering information about an organization, and they are a major reason that zone transfers are turned off for most DNS servers.
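The SOA fields are easier to keep straight once they are labeled. The following sketch splits the SOA line from the transfer above into named fields and converts the contact name into an email address. The class and function names are made up for the example, and the parser assumes the single-line layout that dig prints; it does not handle contact names whose user part contains an escaped dot.

```python
from typing import NamedTuple


class SOA(NamedTuple):
    primary_ns: str
    contact: str
    serial: int
    refresh: int      # seconds between checks for changes
    retry: int        # seconds to wait after a failed refresh
    expire: int       # seconds a secondary may keep answering
    minimum_ttl: int


def parse_soa(record):
    """Parse a single-line SOA record as printed by dig."""
    fields = record.split()
    # Layout: name TTL class SOA mname rname serial refresh retry expire minimum
    mname, rname = fields[4], fields[5]
    serial, refresh, retry, expire, minimum = map(int, fields[6:11])
    # The first dot in rname stands in for the "@" of the contact address.
    user, _, domain = rname.rstrip(".").partition(".")
    return SOA(mname.rstrip("."), f"{user}@{domain}",
               serial, refresh, retry, expire, minimum)


soa = parse_soa(
    "zonetransfer.me. 7200 IN SOA nsztm1.digi.ninja. robin.digi.ninja. "
    "2014101603 172800 900 1209600 3600"
)
print(soa.contact)           # robin@digi.ninja
print(soa.expire // 86400)   # 14 (days)
```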

DNS Brute Forcing

If a zone transfer isn't possible, DNS information can still be gathered from public DNS by brute force. Simply sending a manual or scripted DNS query for each IP address that the organization uses can provide a useful list of systems. This can be partially prevented by using an IDS or IPS with a rule that will prevent DNS brute-force attacks. Sending queries at a slow rate or from a number of systems can bypass most prevention methods.
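A scripted version of this approach might look like the following sketch, which walks an address range and performs a reverse lookup for each host. It is a minimal illustration: the function name reverse_sweep and its parameters are hypothetical, the delay parameter stands in for the slow query rate mentioned above, and the lookup function is passed in so the logic can be exercised without sending any DNS traffic.

```python
import ipaddress
import socket
import time


def reverse_sweep(cidr, lookup=socket.gethostbyaddr, delay=0.0):
    """Yield (ip, hostname) for each address in cidr that resolves to a name."""
    for ip in ipaddress.ip_network(cidr).hosts():
        try:
            # gethostbyaddr returns (hostname, aliases, addresses).
            hostname = lookup(str(ip))[0]
        except OSError:
            hostname = None  # no reverse record, or the lookup failed
        if hostname:
            yield str(ip), hostname
        time.sleep(delay)  # pause between queries to keep the rate low
```

Calling list(reverse_sweep("192.0.2.0/28", delay=1.0)) would query each usable address in that documentation range one second apart; against a real range, the resulting hostnames become the list of systems described above.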

Whois

Whois, as mentioned earlier, allows you to search databases of registered users of domains and IP address blocks, and it can provide useful information about an organization or individual based on their registration information. In the sample Whois query for Google shown in Figure 3.16, you can see that information about Google, such as the company's headquarters location, contact information, and its primary name servers, is returned by the Whois query. This information can provide you with additional hints about the organization by looking for other domains registered with similar information, email addresses to contact, and details you can use during the information-gathering process.

Other information can be gathered by using the host command in Linux. This command will provide information about a system's IPv4 and IPv6 addresses as well as its email servers, as shown in Figure 3.17.

FIGURE 3.16 Whois query data for google.com

FIGURE 3.17 host command response for google.com

Responder

Responder is a Python script that is an interesting hybrid between active and passive information gathering. It begins by passively monitoring the network, waiting for other systems to send out broadcast requests intended for devices running networked services. Once Responder passively identifies one of these requests, it switches into active mode and responds, attempting to hijack the connection and gather information from the broadcasting system and its users.

Figure 3.18 provides a look at the Responder start-up screen, showing the variety of services that Responder monitors.

FIGURE 3.18 Responder start-up screen

Responder is available for download from the GitHub repository at github.com/SpiderLabs/Responder.

Information Aggregation and Analysis Tools

A variety of tools can help with aggregating and analyzing gathered information. Examples include theHarvester, a tool designed to gather emails, domain information, hostnames, employee names, and open ports and banners using search engines; Maltego, which builds relationship maps between people and their ties to other resources; and the Shodan search engine for Internet-connected devices and their vulnerabilities. Using a tool like theHarvester can help simplify searches of large datasets, but it's not a complete substitute for a human's creativity.

Information Gathering Using Packet Capture

A final method of passive information gathering requires access to the target network. This means that internal security teams can more easily rely on packet capture as a tool, whereas penetration testers (or attackers!) typically have to breach an organization's security to capture network traffic.

Once you have access, however, packet capture can provide huge amounts of useful information. A capture from a single host can tell you what systems are on a given network by capturing broadcast packets, and OS fingerprinting can give you a good guess about a remote host's operating system. If you are able to capture data from a strategic location in a network using a network tap or span port, you'll have access to far more network traffic, and thus even more information about the network.

In Figure 3.19, you can see filtered packet capture data during an nmap scan. Using packet capture can allow you to dig into specific responses or to verify that you did test a specific host at a specific time. Thus, packet capture can be used both as an analysis tool and as proof that a task was accomplished.

Gathering Organizational Intelligence

The CySA+ exam objectives focus on technical capabilities, but an understanding of nontechnical information gathering can give you a real edge when conducting penetration testing or protecting your organization. Organizational data can provide clues to how systems and networks may be structured, useful information for social engineering, or details of specific platforms or applications that could be vulnerable.

FIGURE 3.19 Packet capture data from an nmap scan

Organizational Data

Gathering organizational data takes on many forms, from reviewing websites to searching through databases like the EDGAR financial database, gathering data from social networks, and even social-engineering staff members to gather data.

Organizational data covers a broad range of information. Penetration testers often look for such information as

- Locations, including where buildings are, how they are secured, and even the business hours and workflow of the organization

- Relationships between departments, individuals, and even other organizations

- Organizational charts

- Document analysis—metadata and marketing

- Financial data

- Individuals

The type of organizational data gathered and the methods used depend on the type of assessment or evaluation being conducted. A no-holds-barred external penetration test may use almost all the techniques we will discuss, whereas an internal assessment may only verify that critical information is not publicly available.

Electronic Document Harvesting

Documents can provide a treasure trove of information about an organization. Document metadata often includes information like the author's name and information about the software used to create the document, and at times it can even include revisions, edits, and other data that you may not want to expose to others who access the files. Cell phone photos may have location data, allowing you to know when and where the photo was taken.
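As an illustration of how exposed this metadata is, a .docx file is a ZIP archive, and its author-related properties are stored in an XML part named docProps/core.xml. The sketch below reads a few of those properties using only the Python standard library. The function name docx_core_metadata and the choice of fields are assumptions made for this example; other document formats store their metadata differently.

```python
import zipfile
import xml.etree.ElementTree as ET

# XML namespaces used by the core properties part of Office Open XML files.
NS = {
    "cp": "http://schemas.openxmlformats.org/package/2006/metadata/core-properties",
    "dc": "http://purl.org/dc/elements/1.1/",
}


def docx_core_metadata(path_or_file):
    """Return author-related core properties stored inside a .docx file."""
    with zipfile.ZipFile(path_or_file) as archive:
        root = ET.fromstring(archive.read("docProps/core.xml"))
    fields = {
        "creator": "dc:creator",
        "last_modified_by": "cp:lastModifiedBy",
        "revision": "cp:revision",
    }
    # findtext returns None for any property the document does not contain.
    return {key: root.findtext(tag, namespaces=NS) for key, tag in fields.items()}
```

Running this over every document downloaded from a public website can quickly produce a list of staff names and usernames, which is the kind of exposure the scrubbing tools discussed in this section are meant to remove.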

Analytical data based on documents and email can also provide useful information about an organization. In Figure 3.20, an MIT Media Lab tool called Immersion provides information about the people whom the demo email account emails regularly. This type of analysis can help identify key contacts and topics quickly, providing leads for further investigation.

Fortunately, metadata scrubbing is easily handled by using a metadata scrubber utility or built-in tools like the Document Inspector in Microsoft Word or the Examine Document tool in Adobe Acrobat. Many websites automatically strip sensitive metadata like location information.

FIGURE 3.20 Demonstration account from immersion.media.mit.edu

Websites

It might seem obvious to include an organization's website when gathering electronic documents, but simply gathering the current website's information doesn't provide a full view of the data that might be available. The Internet Archive (archive.org) and the Time Travel Service (timetravel.mementoweb.org) both provide a way to search historic versions of websites. You can also directly search Google and other caches using a site like cachedview.com.
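Checks for historic copies can also be scripted. The sketch below assumes the Internet Archive's public Wayback availability endpoint, which returns JSON describing the snapshot closest to a requested date. Treat the endpoint and response layout as assumptions to verify against the archive's current documentation; the function names are made up for this example, and no network request is sent by the code as written.

```python
import json
from urllib.parse import urlencode


def wayback_query_url(site, timestamp=None):
    """Build an availability query for a site, optionally near a YYYYMMDD date."""
    params = {"url": site}
    if timestamp:
        params["timestamp"] = timestamp
    return "https://archive.org/wayback/available?" + urlencode(params)


def closest_snapshot(response_text):
    """Return the URL of the closest archived snapshot, or None if there is none."""
    snapshots = json.loads(response_text).get("archived_snapshots", {})
    closest = snapshots.get("closest")
    return closest["url"] if closest else None


print(wayback_query_url("example.com", "20150101"))
```

Fetching that URL with urllib.request.urlopen and passing the response body to closest_snapshot would give a link to the archived page, which can then be compared with the current site.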

Historical and cached information can provide valuable data, including details that the organization believes are no longer accessible. Finding every instance of a cached copy and ensuring that they are removed can be quite challenging!

Social Media Analysis

Gathering information about an organization often includes gathering information about the organization's employees. Social media can provide a lot of information, from professional details about what employees do and what technologies and projects they work on to personal data that can be used for social engineering or password guessing. A social media and Internet presence profiling exercise may look at what social networks and profiles an individual has, who they are connected to, how much metadata their profiles contain, and what their tone and posting behaviors are.

In addition to their use as part of organizational information gathering, social media sites are often used as part of a social engineering attack. Knowing an individual's interests or details of their life can provide a much more effective way to ensure that they are caught by a social engineering attack.

Social Engineering

Social engineering, or exploiting the human element of security, targets individuals to gather information. This may be via phone, email, social media, or in person. Typically, social engineering targets specific access or accounts, but it may be more general in nature.

A number of toolkits are available to help with social engineering activities:

- The Social-Engineer Toolkit (SET), which provides technical tools to enable social engineering attacks

- Creepy, a geolocation tool that uses social media and file metadata about individuals to provide better information about them

- Metasploit, which includes phishing and other tools to help with social engineering

Phishing, which targets account information or other sensitive information by pretending to be a reputable entity or organization via email or other channels, is commonly used as part of a social engineering process. Targeting an organization with a well-designed phishing attack is one of the most common ways to get credentials for an organization.

Detecting, Preventing, and Responding to Reconnaissance

Although reconnaissance doesn't always result in attacks, limiting the ability of potential attackers to gather information about your organization is a good idea. Unfortunately, organizations that are connected to the Internet are almost constantly being scanned, and that background noise can make it difficult to detect directed attacks: reconnaissance aimed specifically at you is easily lost among the routine probes that reach your Internet border. Fortunately, the same techniques apply to limiting both casual and directed reconnaissance activities.

Capturing and Analyzing Data to Detect Reconnaissance

The first step in detecting reconnaissance is to capture data. In order to prioritize where data collection should occur, you first need to understand your own network topology. Monitoring at the connection points between network zones and where data sensitivity or privilege zones meet will provide the most benefit. Since most internal networks should be well protected, monitoring for internal scans is usually a lot easier than monitoring for external data gathering.

Data Sources

Typical data sources for analysis include the following:

- Network traffic analysis using intrusion detection systems (IDSs), intrusion prevention systems (IPSs), host intrusion detection systems (HIDSs), network intrusion detection systems (NIDSs), firewalls, or other network security devices. These devices often provide one or more of the following types of analysis capabilities:

  - Packet analysis, with inspection occurring at the individual packet level to detect issues with the content of the packets, behaviors related to the content of the packet, or signatures of attacks contained in them.

  - Protocol analysis, which examines traffic to ensure that protocol-level attacks and exploits are not being conducted.

  - Traffic and flow analysis intended to monitor behavior based on historic traffic patterns and behavior-based models.

- Device and system logs that can help identify reconnaissance and attacks when analyzed manually or using a tool.

- Port and vulnerability scans conducted internally to identify potential issues. These can help match known vulnerabilities to attempted exploits, alerting administrators to attacks that are likely to have succeeded.

- Security device logs that are designed to identify problems and that often have specific detection and/or response capabilities that can help limit the impact of reconnaissance.

- Security information and event management (SIEM) systems that centralize and analyze data, allowing reporting, notification, and response to security events based on correlation and analysis capabilities.

Data Analysis Methods

Collecting data isn't useful unless you can correlate and analyze it. Understanding the techniques available to analyze data can help you decide how to handle data and what tools you want to apply.

- Anomaly analysis looks for differences from established patterns or expected behaviors. Anomaly detection requires knowledge of what “normal” is, typically captured in a baseline model, in order to identify differences. IDSs and IPSs often use anomaly detection as part of their detection methods.

- Trend analysis focuses on predicting behaviors based on existing data. Trend analysis can help to identify future outcomes such as network congestion based on usage patterns and observed growth. It is not used as frequently as a security analysis method but can be useful to help guarantee availability of services by ensuring that they are capable of handling an organization's growth or increasing needs.

- Signature analysis uses a fingerprint or signature to detect threats or other events. This means that a signature has to exist before it can be detected, but if the signature is well designed, it can reliably detect the specific threat or event.

- Heuristic, or behavioral, analysis is used to detect threats based on their behavior. Unlike signature detection, heuristic detection can detect unknown threats since it focuses on what the threat does rather than attempting to match it to a known fingerprint.

- Manual analysis is also frequently performed. Human expertise and instinct can be useful when analyzing data and may detect something that would otherwise not be seen.

Your choice of analysis methods will be shaped by the tools you have available and the threats your organization faces. In many cases, you may deploy multiple detection and analysis methods in differing locations throughout your network and systems. Defense-in-depth remains a key concept when building a comprehensive security infrastructure.
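As a small example of how these methods apply to reconnaissance, the sketch below flags likely port scans in connection records by counting how many distinct ports each source touches on a single host. It is a deliberately simple, threshold-based form of anomaly analysis: the function name, the record format of (source, destination, destination port) tuples, and the default threshold of 20 ports are all assumptions, and a real threshold would come from a baseline of normal traffic on your network.

```python
from collections import defaultdict


def flag_port_scanners(connections, max_ports=20):
    """Return sources that contacted more than max_ports distinct ports on one host."""
    ports_seen = defaultdict(set)
    for src, dst, dst_port in connections:
        ports_seen[(src, dst)].add(dst_port)
    scanners = {src for (src, _dst), ports in ports_seen.items()
                if len(ports) > max_ports}
    return sorted(scanners)


# Hypothetical records: one host sweeping 100 ports, one busy but normal client.
events = [("10.0.0.5", "10.0.0.9", port) for port in range(1, 101)]
events += [("10.0.0.7", "10.0.0.9", 443)] * 50
print(flag_port_scanners(events))
```

The client that opens 50 connections to a single port is not flagged, because volume alone is not what distinguishes a scan; the breadth of ports probed is.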

Preventing Reconnaissance

Denying attackers information about your organization is a useful defensive strategy. Now that you have explored how to perform reconnaissance, you may want to review how to limit the effectiveness of the same strategies.

Preventing Active Reconnaissance

Active reconnaissance can be limited by employing network defenses, but it cannot be completely stopped if you provide any services to the outside world. Active reconnaissance prevention typically relies on a few common defenses:

- Limiting external exposure of services and ensuring that you know your external footprint

- Using an IPS or similar defensive technology that can limit or stop probes to prevent scanning

- Using monitoring and alerting systems to notify you about events that continue despite these preventive measures

Detecting active reconnaissance on your internal network should be a priority, as should policy governing the use of scanning tools, so that attackers cannot probe your internal systems without being detected.

Preventing Passive Information Gathering

Preventing passive information gathering relies on controlling the information that you release. Reviewing passive information gathering techniques, then making sure that your organization has intentionally decided what information should be available, is critical to ensuring that passive information gathering is not a significant risk.

Each passive information gathering technique has its own set of controls that can be applied. For example, DNS antiharvesting techniques used by domain registrars can help prevent misuse. These include the following:

- Blacklisting systems or networks that abuse the service

- Using CAPTCHAs to prevent bots

- Providing privacy services that use third-party registration information instead of the actual person or organization registering the domain

- Implementing rate limiting to ensure that lookups are not done at high speeds

- Not publishing zone files where possible; because gTLDs are required to publish their zone files, this works for only some ccTLDs
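Of the controls listed above, rate limiting is the most direct to sketch in code. The following example allows each client a fixed number of lookups within a sliding time window. It is a minimal illustration rather than a description of how any registrar implements the control: the class name, the default of 10 lookups per 60 seconds, and the use of a client identifier such as an IP address are all assumptions.

```python
import time
from collections import defaultdict, deque


class LookupRateLimiter:
    """Allow at most `limit` lookups per client in any `window`-second span."""

    def __init__(self, limit=10, window=60.0):
        self.limit = limit
        self.window = window
        self._history = defaultdict(deque)  # client -> recent request times

    def allow(self, client, now=None):
        """Record a lookup attempt and report whether it should be served."""
        now = time.monotonic() if now is None else now
        recent = self._history[client]
        # Drop timestamps that have aged out of the window.
        while recent and now - recent[0] >= self.window:
            recent.popleft()
        if len(recent) >= self.limit:
            return False
        recent.append(now)
        return True
```

A Whois front end would call allow() with the requester's address before answering each query. Because the limit is applied per client, this slows bulk harvesting from a single source but, as with DNS brute forcing, does little against queries spread across many systems.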

Other types of passive information gathering each require a thorough review of exposed data and organizational decisions about what should (or must) be exposed and what can be limited either by technical or administrative means.

Summary

Reconnaissance is performed by both attackers and defenders. Both sides seek to gather information about potential targets using port scans, vulnerability scans, and information gathering, thus creating a view of an organization's networks and systems. Security professionals may use the information they gather to improve their defensive posture or to identify potential issues. Attackers may find the information they need to attack vulnerable infrastructure.

Organizational intelligence gathering is often performed in addition to the technical information that is frequently gathered during footprinting and active reconnaissance activities. Organizational intelligence focuses on information about an organization, such as its physical location and facilities security, internal hierarchy and structure, social media and web presence, and how policies and procedures work. This information can help attackers perform social engineering attacks or better leverage the information they gained during their technical reconnaissance activities.

Detecting reconnaissance typically involves instrumenting networks and systems using tools like IDSs, IPSs, and network traffic flow monitors. Scans and probes are common on public networks, but internal networks should experience scanning only from expected locations and times set by internal policies and procedures. Unexpected scans are often an indication of compromise or a flaw in the organization's perimeter security.

As a security practitioner, you need to understand how to gather information by port and vulnerability scanning, log review, passive information gathering, and organizational intelligence gathering. You should also be familiar with common tools like nmap; vulnerability scanners; and local host utilities like dig, netstat, and traceroute. Together these skills will provide you with the abilities you need to understand the networks, systems, and other organizational assets that you must defend.

Exam Essentials

Explain how active reconnaissance is critical to understanding system and network exposure. Active reconnaissance involves probing systems and networks for information. Port scanning is a frequent first step during reconnaissance, and nmap is a commonly used port scanning tool for system, port, OS, and service discovery. Active reconnaissance can also help determine network topology by capturing information and analyzing responses from network devices and systems. It is important to know common port and service pairings to help with analyzing and understanding discovered services.

Know how passive footprinting provides information without active probes. Passive footprinting relies on data gathered without probing systems and networks. Log files, configuration files, and published data from DNS and Whois queries can all provide valuable data without sending any traffic to a system or network. Packet capture is useful when working to understand a network and can help document active reconnaissance activities as well as providing diagnostic and network data.

Know how gathering organizational intelligence is important to perform or prevent social engineering attacks. Organizational intelligence includes information about the organization like its location, org charts, financial data, business relationships, and details about its staff. This data can be gathered through electronic data harvesting, social media analysis, and social engineering, or by gathering data in person.

Describe how detecting reconnaissance can help identify security flaws and warn of potential attacks. Detecting reconnaissance relies on capturing evidence of the intelligence gathering activities. This is typically done using tools like IDSs, IPSs, and log analysis, and by correlating information using a SIEM system. Automated data analysis methods used to detect reconnaissance look for anomalies, trends, signatures, and behaviors, but having a human expert in the process can help identify events that a program may miss.

Describe what preventing, and responding to, reconnaissance relies on. Preventing information gathering typically requires limiting your organizational footprint, as well as detecting and preventing information gathering using automated means like an IPS or firewall. Proactive measures such as penetration testing and self-testing can help ensure that you know and understand your footprint and potential areas of exposure. Each technology and service that is in place requires a distinct plan to prevent or limit information gathering.

Lab Exercises

Activity 3.1: Port Scanning

In this exercise, you will use a Kali Linux virtual machine to

- Perform a port scan of a vulnerable system using nmap

- Identify the remote system's operating system and version

- Capture packets during the port scan

Part 1: Set up virtual machines

Information on downloading and setting up the Kali Linux and Metasploitable virtual machines can be found in the introduction of this book. You can also substitute your own system if you have one already set up to run nmap.

- Boot the Kali Linux and Metasploitable virtual machines and log into both. The username/password pair for Kali Linux is root/toor, and Metasploitable uses msfadmin/msfadmin.

- Run ifconfig from the console of the Metasploitable virtual machine. Take note of the IP address assigned to the system.

Part 2: Perform a port scan

Now we will perform a port scan of the Metasploitable virtual machine. Metasploitable is designed to be vulnerable, so we should anticipate seeing many services that might not otherwise be available on a properly secured Linux system.

- Open a Terminal window using the menu bar at the top of the screen.

- To run nmap, simply type nmap and the IP address of the target system. Use the IP address of the Metasploitable system: nmap [target IP]. What ports are open, and what services are identified? Do you believe that you have identified all the open ports on the system?

- Now we will identify the operating system of the Metasploitable virtual machine. This is enabled using the -O flag in nmap. Rerun your nmap, but this time type nmap -O [target IP] and add -p 1-65535 to capture all possible ports. Which operating system and version is the Metasploitable virtual machine running? Which additional ports showed up?
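If you want to see what a basic port scan does under the hood while working through this exercise, the sketch below performs a TCP connect scan in Python. It is a simplified illustration and not a replacement for nmap: it corresponds to nmap's connect scan (-sT) rather than the SYN scan nmap uses by default when run as root, it does no service or OS detection, and the function name connect_scan is made up for the example.

```python
import socket


def connect_scan(host, ports, timeout=0.5):
    """Return the ports on host that accept a full TCP connection."""
    open_ports = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
            sock.settimeout(timeout)
            # connect_ex returns 0 on success instead of raising an exception.
            if sock.connect_ex((host, port)) == 0:
                open_ports.append(port)
    return open_ports
```

Calling connect_scan with the Metasploitable IP address and range(1, 1025) checks the first 1,024 ports. Comparing the results with nmap's output is a useful reminder that a scanner reports only on the ports it was asked to test.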

Activity 3.2: Write an Intelligence Gathering Plan

For this activity, design a passive intelligence gathering plan for an organization of your choice. You may want to reference a resource like OSSTMM, NIST SP 800-115, or pentest-standard.org before you write the plan.

Your intelligence gathering plan should identify the following:

- The target

- How you would gather passive data, including what data you would look for

- What tools you would use

Once you are done, use one or more of the references listed earlier to review your plan. Identify what you missed and what additional data you could gather.

Repeat the activity, documenting how you would perform active intelligence gathering, including how you would determine network topology, what operating systems are in use, and what services are accessible. Remember to account for variables like wired and wireless networks, on-site and cloud hosting, and virtual versus physical hosts.

Activity 3.3: Intelligence Gathering Techniques

Match each of the information types in the following chart to the tool that can help gather it.

| Information type | Tool |
| --- | --- |
| Route to a system | netstat |
| Open services via a network | Whois |
| IP traffic flow and volume | traceroute |
| Organizational contact information associated with domain registration | Wireshark |
| Connections listed by protocol | Nmap |
| Packet capture | Creepy |
| Zone transfer | dig |
| Social media geotagging | netflow |

Review Questions

- What method is used to replicate DNS information for DNS servers but is also a tempting exploit target for attackers?

- DNSSEC

- AXR

- DNS registration

- Zone transfers

- What flag does nmap use to enable operating system identification?

- -os

- -id

- -O

- -osscan

- What command-line tool can be used to determine the path that traffic takes to a remote system?

- Whois

- traceroute

- nslookup

- routeview

- What type of data can frequently be gathered from images taken on smartphones?

- Extended Graphics Format

- Exif

- JPIF

- PNGrams

- Which Cisco log level is the most critical?

- 0

- 1

- 7

- 10

- During passive intelligence gathering, you are able to run netstat on a workstation located at your target's headquarters. What information would you not be able to find using netstat on a Windows system?

- Active TCP connections

- A list of executables by connection

- Active UDP connections

- Route table information

- Which of the following is the most likely use for the host listed in this dhcpd.conf entry?

    host db1 {
      option host-name "sqldb1.example.com";
      hardware ethernet 8a:00:83:aa:21:9f;
      fixed-address 10.1.240.10;
    }

- Active Directory server

- Apache web server

- Oracle database server

- Microsoft SQL Server

- Which type of Windows log is most likely to contain information about a file being deleted?

- httpd logs

- Security logs

- System logs

- Configuration logs

- What organization manages the global IP address space?

- NASA

- ARIN

- WorldNIC

- IANA

- Before Ben sends a Word document, he uses the built-in Document Inspector to verify that the file does not contain hidden content. What is this process called?

- Data purging

- Data remanence insurance

- Metadata scrubbing

- File cleansing

- What type of analysis is best suited to identify a previously unknown malware package operating on a compromised system?

- Trend analysis

- Signature analysis

- Heuristic analysis

- Regression analysis

- Which of the following is not a common DNS antiharvesting technique?

- Blacklisting systems or networks

- Registering manually

- Rate limiting

- CAPTCHAs

- What technique is being used in this command?

dig axfr @dns-server example.com

- DNS query

- nslookup

- dig scan

- Zone transfer

- Which of the following is not a reason that penetration testers often perform packet capture while conducting port and vulnerability scanning?

- Work process documentation

- To capture additional data for analysis

- Plausible deniability

- To provide a timeline

- What process uses information such as the way that a system's TCP stack responds to queries, what TCP options it supports, and the initial window size it uses?

- Service identification

- Fuzzing

- Application scanning

- OS detection

- What tool would you use to capture IP traffic information to provide flow and volume information about a network?

- libpcap

- netflow

- netstat

- pflow

- What method used to replicate DNS information between DNS servers can also be used to gather large amounts of information about an organization's systems?

- traceroute

- Zone transfer

- DNS sync

- dig

- Selah believes that an organization she is penetration testing may have exposed information about their systems on their website in the past. What site might help her find an older copy of their website?

- The Internet Archive

- WikiLeaks

- The Internet Rewinder

- TimeTurner

- During an information gathering exercise, Chris is asked to find out detailed personal information about his target's employees. What is frequently the best place to find this information?

- Forums

- Social media

- The company's website

- Creepy

- Which lookup tool provides information about a domain's registrar and physical location?

- nslookup

- host

- Whois

- traceroute