300 Core Software Security

which to architect. These may also be used as a basis for training and mentorship. McGraw’s principles are:

1. Secure the weakest link.

2. Defend in depth.

3. Fail securely.

4. Grant least privilege.

5. Separate privileges.

6. Economize mechanisms.

7. Do not share mechanisms.

8. Be reluctant to trust.

9. Assume your secrets are not safe.

10. Mediate completely.

11. Make security usable.

12. Promote privacy.

13. Use your resources.¹⁶

An in-depth discussion of these principles is beyond the scope of this work. Security practitioners will likely already be familiar with most if not all of them. We cite them as an example of how to seed an architecture practice. From whatever principles you choose to adopt, architecture patterns will emerge. For instance, hold “Be reluctant to trust” and “Assume your secrets are not safe” in mind while we consider a classic problem. When assessors first encounter configuration files on permanent storage, they may be surprised to consider these files an attack vector, and the routines that read and parse them an attack surface. One is tempted to ask, “Aren’t these private to the program?” Not necessarily. One must consider what protections keep attackers from using the files as a vector to deliver an exploit and payload. At a minimum, there are two security controls:

1. Carefully set permissions on configuration files so that only the intended application may read and write them.

2. Rigorously validate all data read from a configuration file before using it for any purpose in a program. This, of course, suggests fuzz testing these inputs to verify the input validation.
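The two controls can be sketched in Python. This is a minimal illustration under stated assumptions, not a prescribed implementation: it assumes a POSIX system, a simple `key = value` file format, and a hypothetical allow-list (`ALLOWED_KEYS`) of the only settings the application accepts. The loader refuses a file that the running account does not own or that group or other users can access, and it rejects any entry that is not explicitly expected instead of trying to clean it up.

```python
import os
import stat

# Hypothetical allow-list: the only settings this application accepts,
# each paired with a validator for its value.
ALLOWED_KEYS = {
    "port": lambda v: v.isascii() and v.isdigit() and 1 <= int(v) <= 65535,
    "log_level": lambda v: v in {"DEBUG", "INFO", "WARNING", "ERROR"},
}


def check_permissions(info: os.stat_result, path: str) -> None:
    """Control 1: refuse a file this account does not own or that others can access (POSIX)."""
    if info.st_uid != os.getuid():
        raise PermissionError(f"{path} is not owned by the application account")
    mode = stat.S_IMODE(info.st_mode)
    if mode & 0o077:  # any permission bit set for group or others
        raise PermissionError(f"{path} is accessible to group/others: {oct(mode)}")


def load_config(path: str) -> dict:
    """Control 2: accept only known keys whose values pass validation."""
    config = {}
    with open(path, encoding="utf-8") as f:
        # Check the already-open file, so it cannot be swapped between check and read.
        check_permissions(os.fstat(f.fileno()), path)
        for lineno, raw in enumerate(f, start=1):
            line = raw.strip()
            if not line or line.startswith("#"):
                continue  # skip blank lines and comments
            key, sep, value = line.partition("=")
            key, value = key.strip(), value.strip()
            if not sep or key not in ALLOWED_KEYS or not ALLOWED_KEYS[key](value):
                # Fail securely: reject the whole file rather than guess.
                raise ValueError(f"line {lineno}: rejected entry {line!r}")
            config[key] = value
    return config
```

Rejecting the whole file on the first unexpected entry reflects the “fail securely” principle in the list above. Because every parsing failure surfaces as a `ValueError`, the loader is also a natural target for the fuzz testing the second control calls for.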

Applying the SDL Framework to the Real World 301

Once encountered, or perhaps after a few encounters, these two patterns become a standard that assessors will catch every time they threat model. These patterns start to seem “cut and dried.”* If configuration files are used consistently across a portfolio, a standard can be written from the pattern. Principles lead to patterns, which can then be standardized.

Each of these dicta engenders certain patterns and suggests certain types of controls that will apply to those patterns. These patterns can then be applied across relevant systems. As architects gain experience, they will likely write standards whose patterns apply to all systems of a particular class.

To catch subtle variations, the best tool we have used is peer review. If the assessor has any doubt or uncertainty, institute a system of peer review for the assessment or threat model.

Using basic security principles as a starting point, coupled with strong mentorship, security architecture and design expertise can be built over time. The other ingredient you will need is a methodology for calculating risk.

Generally, in our experience, information security risk† is not well understood. Threats are treated as risks, and vulnerabilities are equated with risk in isolation. Often, the very worst impact on any system, under any possible set of circumstances, is assumed, rather than carefully investigating how a particular vulnerability might be exposed to which type of threat and, if it were exercised, what the likely impact of the exploit would be. We have seen very durable server farm installations that took great care to limit the impact of many common Web vulnerabilities, so that the risk of allowing these vulnerabilities to be deployed was quite limited. Each part (term) of a risk calculation must be taken into account; unfortunately, in practice we find that a holistic approach is not taken when calculating risk.
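To make the point about terms concrete, here is a deliberately simplified likelihood-times-impact model in Python. It is not FAIR and not the authors’ methodology; the four factors and all numbers are hypothetical. It contrasts the worst-case habit described above (every vulnerability fully exposed, always exploitable, maximum impact) with an estimate that accounts for exposure and for surrounding controls, like the server farm hardening just mentioned, that limit both success and impact.

```python
from dataclasses import dataclass


@dataclass
class RiskTerms:
    """Hypothetical, simplified risk terms; not FAIR or any published method."""
    threat_events_per_year: float  # how often a relevant threat actually makes an attempt
    exposure: float                # 0..1: can that threat reach the vulnerable code at all?
    success_given_attempt: float   # 0..1: chance an attempt succeeds against deployed controls
    impact: float                  # expected loss per successful exploit, in any consistent unit

    def annualized_risk(self) -> float:
        for name in ("exposure", "success_given_attempt"):
            p = getattr(self, name)
            if not 0.0 <= p <= 1.0:
                raise ValueError(f"{name} must be between 0 and 1, got {p}")
        # Every term contributes; dropping any one of them distorts the result.
        return (self.threat_events_per_year * self.exposure
                * self.success_given_attempt * self.impact)


# Worst-case habit: fully exposed, always exploitable, maximum impact.
worst_case = RiskTerms(threat_events_per_year=12, exposure=1.0,
                       success_given_attempt=1.0, impact=500_000)

# Assessed: the same threat frequency, but only partly exposed and mitigated by
# surrounding controls that cut both the chance of success and the impact.
assessed = RiskTerms(threat_events_per_year=12, exposure=0.25,
                     success_given_attempt=0.1, impact=50_000)

print(f"worst case: {worst_case.annualized_risk():,.0f}")
print(f"assessed:   {assessed.annualized_risk():,.0f}")
```

With these illustrative inputs, the worst-case line reports 6,000,000 loss units per year and the assessed line 15,000. The gap comes entirely from the exposure, success, and impact terms that a worst-case assumption ignores.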

A successful software security practice will spend time training staff in risk assessment techniques and then building or adopting a methodology that is lightweight enough to be performed quickly and often, yet thorough enough that decision makers have adequate risk information.

* Overly standardizing has its own danger: Assessors can begin to miss subtleties that lie outside the standards. For the foreseeable future, assessment and threat modeling will continue to be an art that requires human intelligence to do thoroughly. Beware the temptation to attempt to standardize everything and thus to take the expert out of the process. While this may be a seductive vision, it will likely lead to misses, which lead to vulnerabilities.

† Information security risk calculation is beyond the scope of this chapter. Please see the Open Group’s adoption of the FAIR methodology.

9.4 Testing

Designing and writing software is a creative, innovative art that also involves a fair amount of personal discipline. The tension between creativity and discipline is especially acute when trying to produce vulnerability-free code whose security implementations are correct. Mistakes are inevitable.

It is key that the SDL security testing approach be thorough; testing is

the linchpin of the defense in depth of your SDL. Test approaches must

overlap. Since no one test approach can deliver all the required assurance,

using approaches that overlap each other helps to ensure completeness,

good coverage both of the code as well as all the types of vulnerabilities

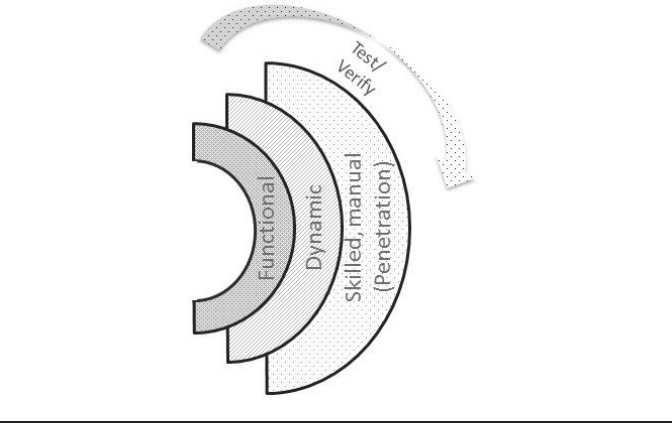

that can creep in. Figure 9.11 describes the high-level types of testing

approaches contained in the SDL. In our experience, test methodologies

are also flawed and available tools are far from perfect. It’s important not

to put all one’s security assurance “eggs” into one basket.

Based on a broad level of use cases across many different types of pro-

jects utilizing many of the commercial and free tools available, most of

Figure 9.11 Complete test suite.

Applying the SDL Framework to the Real World 303

the comprehensive commercial tools are nontrivial to learn, configure,

and use. Because testing personnel become proficient in a subset of avail-

able tools, there can be a tendency to rely on each tester’s tool expertise

as opposed to building a holistic program. We have seen one team using

a hand-tailored approach (attack and penetration), while the next team

runs a couple of language- and platform-specific freeware tools, next to a

team who are only running static analysis, or only a dynamic tool. Each

of these approaches is incomplete; each is likely to miss important issues.

Following are our suggestions for applying the right tool to the right set

of problems, at a minimum.

As noted previously, we believe that static analysis belongs at the heart of your SDL. We use it as part of the developers’ code-writing process rather than as part of the formal test plan. Please see the first section for more information about the use of static analysis in the SDL.

9.4.1 Functional Testing

Each security feature and control must be tested to ensure that it works correctly, as designed. This may be obvious, but it is important to include this aspect of the security test plan in any SDL.

We have seen project teams and quality personnel claim that because a security feature was not specifically included in the test plan, testing was not required and hence was not performed. As irresponsible as this response may seem to security professionals, some people execute only what is in the plan, while others creatively try to cover what is needed. When building an SDL task list, clarity helps everyone; every step must be specified.

The functional test suite is a direct descendant of the architecture and design. It must be thoroughly proved that each security requirement has been designed and then built. Each feature, security or otherwise, must be thoroughly tested to prove that it works as designed. Describing a set of functional tests is beyond the scope of this chapter. However, we suggest that using several approaches builds a reliable proof:

• Does it work as expected?

• Test the corner and edge cases to prove that these are handled appropriately and do not disturb the expected functionality.

• Test abuse cases; for inputs, these will be fuzzing tests.
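A fuzz test for an input handler can be sketched in a few lines. The sketch below is a naive mutation fuzzer written for illustration only: `parse_entry` is a hypothetical handler that accepts `name=<port>` entries. The fuzzer starts from valid seed inputs, applies random character-level edits, and checks one property: every input must either be accepted or rejected with `ValueError`, and any other exception signals a handler bug. Coverage-guided fuzzers such as AFL or libFuzzer explore far more of the input space and are the better choice in practice.

```python
import random


def parse_entry(line: str) -> tuple[str, int]:
    """Hypothetical input handler under test: parses 'name=<port>' entries."""
    key, sep, value = line.partition("=")
    if not sep or not key.isidentifier():
        raise ValueError("malformed entry")
    if not (value.isascii() and value.isdigit()) or not 1 <= int(value) <= 65535:
        raise ValueError("port missing or out of range")
    return key, int(value)


def mutate(seed: str, rng: random.Random) -> str:
    """Apply a few random character-level edits (insert, delete, replace) to a valid seed."""
    chars = list(seed)
    for _ in range(rng.randint(1, 4)):
        op = rng.choice(("insert", "delete", "replace"))
        if op == "insert" or not chars:
            chars.insert(rng.randrange(len(chars) + 1), chr(rng.randrange(0x110000)))
        elif op == "delete":
            del chars[rng.randrange(len(chars))]
        else:
            chars[rng.randrange(len(chars))] = chr(rng.randrange(0x110000))
    return "".join(chars)


def fuzz(iterations: int = 10_000, seed: int = 1) -> dict:
    """Return counts of accepted and rejected inputs; any other exception is a bug."""
    rng = random.Random(seed)  # fixed seed so any failure can be reproduced
    seeds = ["http=8080", "admin=443", "db=5432"]
    counts = {"accepted": 0, "rejected": 0}
    for _ in range(iterations):
        candidate = mutate(rng.choice(seeds), rng)
        try:
            parse_entry(candidate)
            counts["accepted"] += 1
        except ValueError:
            counts["rejected"] += 1
        # Any other exception type propagates and stops the run at the offending input.
    return counts
```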


Basically, the tests must check the precise behavior as specified. That is, turn the specification into a test case: When a user attempts to load a protected page, is there an authentication challenge? When correct login information is entered, is the challenge satisfied? When invalid credentials are offered, is authentication denied?
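Turning those three questions into executable tests might look like the following `unittest` sketch. `handle_request`, the user table, and the 401/200 status conventions are hypothetical stand-ins for the real system under test; the point is that each sentence of the specification becomes one test that can pass or fail.

```python
import hmac
import unittest

# Hypothetical stand-ins for the system under test (illustration only:
# a real system stores salted password hashes, never plaintext).
USERS = {"alice": "correct horse battery staple"}
PROTECTED_PAGES = {"/account"}


def handle_request(path, credentials=None):
    """Return an HTTP-style status: 401 means challenge or deny, 200 means served."""
    if path not in PROTECTED_PAGES:
        return 200
    if credentials is None:
        return 401  # authentication challenge
    user, password = credentials
    expected = USERS.get(user)
    if expected is not None and hmac.compare_digest(expected.encode(), password.encode()):
        return 200
    return 401


class AuthenticationSpecTests(unittest.TestCase):
    """One test per sentence of the specification."""

    def test_protected_page_issues_challenge(self):
        self.assertEqual(handle_request("/account"), 401)

    def test_correct_credentials_satisfy_challenge(self):
        creds = ("alice", "correct horse battery staple")
        self.assertEqual(handle_request("/account", creds), 200)

    def test_invalid_credentials_are_denied(self):
        self.assertEqual(handle_request("/account", ("alice", "wrong")), 401)
        self.assertEqual(handle_request("/account", ("mallory", "anything")), 401)
```

Saved in a module, these tests run with `python -m unittest`.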

Corner cases are the most difficult. These might be tests of default behavior or of behavior that is not explicitly specified. In our authentication case, if a page does not specify protection, is the Web server’s default followed? If the default is configurable, try both binary defaults: no page is protected versus all pages are protected.
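The default-behavior corner case lends itself to a table-driven test that exercises both binary defaults. In this sketch, `requires_auth` is a hypothetical rule in which a page’s explicit setting wins and a page that specifies nothing inherits the server default. Whether a page may opt out under an all-protected default is an assumption that the real specification must settle. The expected results are written out by hand rather than computed, so the test cannot simply mirror the implementation.

```python
import unittest
from typing import Optional


def requires_auth(page_setting: Optional[bool], default_protected: bool) -> bool:
    """Hypothetical rule: a page's explicit setting wins; a page that
    specifies nothing inherits the server-wide default."""
    if page_setting is None:
        return default_protected
    return page_setting


# (server default, page's own setting, expected result), written out by hand.
DEFAULT_CASES = [
    (False, None, False),  # "no page is protected" default: silent page stays open
    (True, None, True),    # "all pages are protected" default: silent page is challenged
    (False, True, True),   # page opts in under an open default
    (True, False, False),  # page opts out under a protected default (assumed allowed)
]


class DefaultProtectionTests(unittest.TestCase):
    def test_both_binary_defaults(self):
        for default_protected, page_setting, expected in DEFAULT_CASES:
            with self.subTest(default=default_protected, page=page_setting):
                self.assertEqual(requires_auth(page_setting, default_protected), expected)
```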

Other corner cases for this example might be to test invalid user IDs and garbage IDs against the authentication, or to try to replay session tokens. Session tokens typically have a time-out. What happens if the clock on the browser differs from the clock on the server? Is the token still valid, or does it expire en route? Each of these behaviors might happen to a typical user, but none will be common.
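One way to make the clock question testable is to decide, as a design assumption, that token expiry is judged only by the server’s clock, so a browser with a wrong clock cannot affect validity. The sketch below uses HMAC-signed tokens that carry the server-side issue time; `SECRET`, `TTL_SECONDS`, and `LEEWAY_SECONDS` are hypothetical values, with the small leeway covering skew between servers in a farm. Time is passed in explicitly so tests can probe the boundaries. The sketch covers time-out, garbage, and tampering; replay of a still-valid token needs a separate server-side control that it does not attempt.

```python
import hashlib
import hmac

SECRET = b"hypothetical-server-secret"  # illustration only; load real keys from a secret store
TTL_SECONDS = 900                       # hypothetical 15-minute session time-out
LEEWAY_SECONDS = 30                     # hypothetical tolerance for skew between servers


def _sign(payload: str) -> str:
    return hmac.new(SECRET, payload.encode(), hashlib.sha256).hexdigest()


def issue_token(user: str, server_now: int) -> str:
    """Embed the server's issue time and sign it; the browser's clock is never consulted."""
    payload = f"{user}:{server_now}"
    return f"{payload}:{_sign(payload)}"


def token_is_valid(token: str, server_now: int) -> bool:
    """Validate signature and age using only the server's clock."""
    try:
        user, issued_str, sig = token.rsplit(":", 2)
        issued = int(issued_str)
        expected = _sign(f"{user}:{issued_str}")
    except ValueError:  # garbage, truncated, or unencodable token
        return False
    if not sig.isascii() or not hmac.compare_digest(expected, sig):
        return False  # forged or tampered token
    age = server_now - issued
    return -LEEWAY_SECONDS <= age <= TTL_SECONDS
```

A test plan would then probe both sides of each boundary: a token checked 600 seconds after issue is accepted, one checked 901 seconds after issue is rejected, and editing the embedded issue time invalidates the signature.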

Finally, many features, and most especially security features, will be attacked. In other words, whatever can be abused is likely to be abused. An attacker will pound on a login page, attempting brute-force discovery of valid passwords. Not only should a test plan include testing of any lockout feature; it should also be able to uncover any weaknesses in the ability to handle multiple, rapid logins without failing or crashing the application or the authentication service.

In our experience, most experienced quality personnel will understand “as designed” testing, as well as corner cases. Abuse cases, however, may be a new concept that will need support, training, and perhaps mentorship.

9.4.2 Dynamic Testing

Dynamic testing refers to executing the source code and seeing how it performs with specific inputs. All validation activities come in this category where execution of the program is essential.¹⁷

Dynamic tests are tests run against the executing program. In the security view, dynamic testing is generally performed from the perspective of the attacker. In the purest sense of the term, any test which is run against the executing program is “dynamic.” This includes vulnerability scans,
