Design and Development (A4): SDL Activities and Best Practices 191

that the data is traced back to its source, and trust is assigned based on the weakest link.

There are other lists of questions that should be considered. Some of these are organized into sets of key areas, also called hotspots, based on the implementation mistakes that result in the most common software vulnerabilities relevant to the software product or solution being developed. These questions are typically developed by the software security architect and revolve around the most recent top 10–20 CVE or OWASP “Top 10” lists described earlier in the book.

A review for security issues unique to the architecture should also be conducted as part of the manual security review process. This step is particularly important if the software product uses a custom security mechanism or has features to mitigate known security threats. During this step, the list of code review objectives is also examined for anything that has not yet been reviewed. Here, too, a question-driven approach such as the following list is useful as the final code review step, to verify that the security features and requirements unique to your software architecture have been met.

• Does your architecture include a custom security implementation? A custom security implementation is a great place to look for security issues for these reasons:
  o It has already been recognized that a security problem exists, which is why the custom security code was written in the first place.
  o Unlike other areas of the product, a functional issue is very likely to result in a security vulnerability.

• Are there known threats that have been specifically mitigated? Code that mitigates known threats needs to be carefully reviewed for problems that could be used to circumvent the mitigation.

• Are there unique roles in the application? The use of roles assumes that there are some users with lower privileges than others. Make sure that there are no problems in the code that could allow one role to assume the privileges of another.

44
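The role question above can be turned into an automated check. The following is a minimal sketch, not a prescribed method: the role names, the actions, and the helpers `is_allowed` and `escalation_paths` are all hypothetical and stand in for whatever authorization code the product actually uses.

```python
# Hypothetical role model: role names and actions are illustrative only.
ROLE_PERMISSIONS = {
    "viewer": {"read_report"},
    "editor": {"read_report", "edit_report"},
    "admin": {"read_report", "edit_report", "manage_users"},
}


def is_allowed(role: str, action: str) -> bool:
    """Deny by default: unknown roles and unlisted actions are rejected."""
    return action in ROLE_PERMISSIONS.get(role, set())


def escalation_paths(checker) -> list[tuple[str, str]]:
    """Return (role, action) pairs that the checker allows but the role model does not grant."""
    all_actions = set().union(*ROLE_PERMISSIONS.values())
    return [
        (role, action)
        for role, granted in sorted(ROLE_PERMISSIONS.items())
        for action in sorted(all_actions - granted)
        if checker(role, action)
    ]
```

Passing the product's real authorization function as `checker` and expecting an empty list gives the reviewer a quick signal that no role can perform an action reserved for another. It complements manual review and does not replace it.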

We would like to reiterate that it is not an either/or proposition between different types of security testing. For a product to be secure, it should go through all types of security testing: static analysis, dynamic analysis, manual code review, penetration testing, and fuzzing. Often,

192 Core Software Security

trade-offs are made during the development cycle due to time constraints or deadlines, and testing is skipped as a product is rushed to market. This might save some time, and the product may be released a few weeks or months sooner. However, it is an expensive proposition from an ROI point of view. Further, security problems found after a product is released can cause a lot of damage to customers and to the brand name of the company.

6.5 Key Success Factors

Success of this fourth phase of the SDL depends on review of policy compliance, security test case execution, completion of different types of security testing, and validation of privacy requirements. Table 6.1 lists key success factors for this phase.

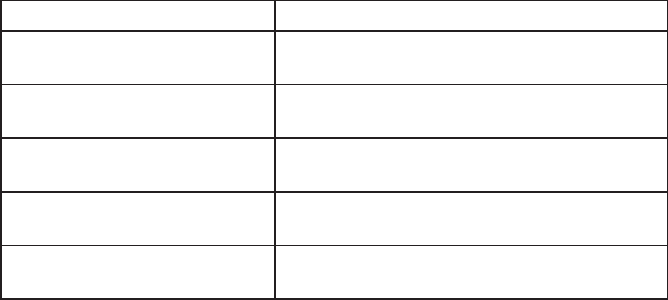

Table 6.1 Key Success Factors

| Key Success Factor | Description |
| --- | --- |
| 1. Security test case execution | Coverage of all relevant test cases |
| 2. Security testing | Completion of all types of security testing and remediation of problems found |
| 3. Privacy validation and remediation | Effectiveness of privacy-related controls and remediation of any issues found |
| 4. Policy compliance review | Updates for policy compliance as related to Phase 4 |

Success Factor 1: Security Test Case Execution

Refer to Section 6.2 for details on success criteria for the security test execution plan.

Success Factor 2: Security Testing

It is critical to complete all types of security testing: manual code review, static analysis, dynamic analysis, penetration testing, and fuzzing. Issues found during each type of testing should be evaluated for risk and prioritized. Any security defect with medium or higher severity should be remediated before a product is released or deployed. Defects with low severity should not be ignored but should be put on a roadmap for remediation as soon as possible.
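The severity rule above can be expressed as a simple release gate. The sketch below is illustrative only: the `Defect` record, the four-level severity scale (the text names only "low" and "medium or higher"), and the function names are assumptions, not part of any particular defect-tracking tool.

```python
from dataclasses import dataclass

# Assumed severity scale; the source only distinguishes "low" from "medium or higher".
SEVERITY_ORDER = {"low": 0, "medium": 1, "high": 2, "critical": 3}


@dataclass
class Defect:
    identifier: str          # hypothetical tracker ID
    severity: str            # one of the SEVERITY_ORDER keys
    remediated: bool = False


def triage(defects):
    """Split open defects into release blockers and roadmap items."""
    open_defects = [d for d in defects if not d.remediated]
    blockers = [
        d for d in open_defects
        if SEVERITY_ORDER[d.severity] >= SEVERITY_ORDER["medium"]
    ]
    roadmap = [d for d in open_defects if d.severity == "low"]
    return blockers, roadmap


def can_release(defects) -> bool:
    """A release is allowed only when no open defect is medium severity or higher."""
    blockers, _ = triage(defects)
    return not blockers
```

Low-severity defects never block the release in this sketch, but they are returned separately so that they can be scheduled rather than forgotten.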

Success Factor 3: Privacy Validation and Remediation

Validation of privacy issues should be part of security test plans and security testing. However, it is a good idea to have a separate workstream to assess effectiveness of controls in the product as related to privacy. Any issues identified should be prioritized and remediated before the product is released or deployed.

Success Factor 4: Policy Compliance Review (Updates)

If any additional policies are identified, or previously identified policies have been updated since the analysis was performed in Phase 3, the updates should be reviewed and changes to the product should be planned accordingly.

6.6 Deliverables

Table 6.2 lists deliverables for this phase of the SDL.

Security Test Execution Report

The execution report should provide status on security tests executed and frequency of tests. The report should also provide information on the number of re-tests performed to validate remediation of issues.

Table 6.2 Deliverables for Phase A4

| Deliverable | Goal |
| --- | --- |
| Security test execution report | Review progress against identified security test cases |
| Updated policy compliance analysis | Analysis of adherence to company policies |
| Privacy compliance report | Validation that recommendations from privacy assessment have been implemented |
| Security testing reports | Findings from different types of security testing |
| Remediation report | Provide status on security posture of product |


Updated Policy Compliance Analysis

Policy compliance analysis artifacts (see Chapters 4 and 5) should be updated based on any new requirements or policies that might have come up during this phase of the SDL.

Privacy Compliance Report

The privacy compliance report should provide progress against privacy requirements provided in earlier phases. Any outstanding requirement should be implemented as soon as possible. It is also prudent to assess any changes in laws/regulations to identify (and put on a roadmap) any new requirements.

Security Testing Reports

A findings summary should be prepared for each type of security testing: manual code review, static analysis, dynamic analysis, penetration testing, and fuzzing. The reports should provide the type and number of issues identified as well as any consistent theme that can be derived from the findings. For example, if there are far fewer XSS issues in one component of the application compared to another, it could be because developers in the former were better trained or implemented the framework more effectively. Such feedback should be looped back into earlier stages of the SDL during the next release cycle.
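Counting findings by type and by component is one way to surface such themes. The sketch below assumes findings are exported as simple records; the field names, component names, and sample data are hypothetical and made up for illustration.

```python
from collections import Counter

# Hypothetical findings; components, issue types, and sources are made up.
FINDINGS = [
    {"component": "checkout", "type": "XSS", "source": "dynamic analysis"},
    {"component": "checkout", "type": "XSS", "source": "penetration testing"},
    {"component": "checkout", "type": "SQL injection", "source": "static analysis"},
    {"component": "profile", "type": "XSS", "source": "manual code review"},
]


def count_by(findings, *keys):
    """Count findings grouped by the given record keys."""
    return Counter(tuple(f[k] for k in keys) for f in findings)


# Type and number of issues identified across all testing.
by_type = count_by(FINDINGS, "type")

# Per-component breakdown, useful for spotting components that stand out.
by_component_and_type = count_by(FINDINGS, "component", "type")
```

A large gap between components for the same issue type is only a prompt for investigation. As the text notes, the cause may be training, framework usage, or something else entirely.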

Remediation Report

A remediation report/dashboard should be prepared and updated regularly from this stage. The purpose of this report is to showcase the security posture and risk of the product at a technical level.

6.7 Metrics

The following metrics should be collected during this phase of the SDL (some of these may overlap metrics we discussed earlier).

• Percent compliance with company policies (updated)
  o Percent of compliance in Phase 3 versus Phase 4


• Number of lines of code tested effectively with static analysis tools

• Number of security defects found through static analysis tools

• Number of high-risk defects found through static analysis tools

• Defect density (security issues per 1000 lines of code)

• Number and types of security issues found through static analysis, dynamic analysis, manual code review, penetration testing, and fuzzing
  o Overlap of security issues found through different types of testing
  o Comparison of severity of findings from different types of testing
  o Mapping of findings to threats/risks identified earlier

• Number of security findings remediated
  o Severity of findings
  o Time spent (approximate) in hours to remediate findings

• Number, types, and severity of findings outstanding

• Percentage compliance with security test plan

• Number of security test cases executed
  o Number of findings from security test case execution
  o Number of re-tests executed
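Two of the metrics above lend themselves to a short worked example: defect density and the overlap of issues found by different types of testing. The sketch below is a minimal illustration; the function names are hypothetical, and it assumes that findings from different tools have already been deduplicated under shared identifiers.

```python
from collections import Counter


def defect_density(security_issues: int, lines_of_code: int) -> float:
    """Security issues per 1000 lines of code."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return security_issues * 1000 / lines_of_code


def found_by_multiple(findings_by_test_type: dict[str, set[str]]) -> set[str]:
    """Finding IDs reported by at least two types of testing."""
    counts = Counter(
        finding_id
        for finding_ids in findings_by_test_type.values()
        for finding_id in finding_ids
    )
    return {finding_id for finding_id, n in counts.items() if n >= 2}
```

For instance, 12 security issues in 48,000 lines of code give a density of 12 × 1000 / 48,000 = 0.25 issues per 1000 lines.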

6.8 Chapter Summary

During our discussion of the design and development (A4) phase, we described the process for successful test case execution, the process of proper code review through the use of both automated tools and manual review, and the process for privacy validation and remediation to be conducted during this phase of the SDL. Perhaps the most important processes and procedures described in this chapter are those that make it possible to test, tune, and remediate all known and discovered vulnerabilities effectively and efficiently, and to ensure that the secure coding policies that provide the necessary security and privacy vulnerability protections have been followed before moving on to the product ship (A5) phase of the SDL.

References

1. Kaner, C. (2008, April). A Tutorial in Exploratory Testing, p. 36. Available at http://www.kaner.com/pdfs/QAIExploring.pdf.
