5. Design Heuristics

Most art forms characteristically involve representations of real-world phenomena. As Aristotle observed, art represents not what is, but a kind of thing that might be; environments, objects, situations, characters, and actions are represented within a wide range of deviation from real life. The degree and kinds of deviation result from the form, style, and purpose of the representation. In drama, only a few styles (predominantly of the last two centuries) venture far afield from representing characters, situations, and actions that are recognizably human or human-like. Likewise, non-representational styles in painting and sculpture are largely modern developments in Western culture. One reason for the preference for real-world objects in artistic representations, at least in popular culture, may be that they impose relatively less cognitive overhead on their audiences. The principle at work is that real-world objects make representations more accessible, and hence more enjoyable, to a larger number of people. Non-representational styles require exposure to and practice with subtle inferential constructions for which many people's education has not prepared them.

Computer as Medium

Computers are an interactive representational medium. Wardrip-Fruin (2009) says that modern computers are designed to make possible “the continual creation of new machines, opening new possibilities, through the definition of new sets of computational processes.” Indeed, much of the innovation going on today is happening at the level of processing. Understanding what computers are really doing is an ongoing definitional process that heavily influences the kinds of representations that we make with them. Uses in such areas as statistical analysis and database management have led to the notion of computers as representers of information. Scientists use computers to represent real-world phenomena in a variety of ways, from purely textual mathematical modeling to simulations that are symbolic, schematic, or realistically multisensory.

The “outward and visible signs” of computer-based representations—that is, the ways in which they are available to humans—have come to be known as the human-computer interface, or the Surface in Wardrip-Fruin’s (2009) analysis. The characteristics of the interface for any given representation are influenced by the pragmatics of usage and principles of human factors and ergonomics, as well as by an overarching definition of what computers are. Interface styles that are indirect—that is, those in which a person’s actions are defined as operating the computer, rather than operating directly on the objects they represent—spring from the notion that computers themselves are tools. The logic behind the “tool metaphor” goes like this: regardless of what people think they are doing (e.g., searching for information, playing a game, or designing a cathedral), they are actually using their computers as tools to carry out their commands, as are computer programmers. It follows, then, that what people are seen to be interacting with is the computer itself, with outcomes like information retrieval, document design, learning, or game playing as secondary consequences of that primary interaction.

As McLuhan (1964) observed, a new medium begins by consuming old media as its content. For example, in the 17th century the newspaper gobbled up the broadside as well as the story or report and aspects of the letter (Stephens 1988). Early film, with its fixed cameras and proscenium-like cinemas, took theatre without speech as its starting point and added titles and motion photography; now it is a medium in its own right, with conventions and techniques that the theatre could not imitate. Both forms survive, but film has found its own language; it has become its own medium. One may say that computers imitate other media: film, newspapers, journals, and the like. But computers, like film, have developed their own unique methods of representation and experience. True, they have embraced and enfolded media like film and newspapers, but they have given them a new twist in terms of authorship, distribution, production methods, and interactivity.

The notion of the computer as a tool obviously leads to the construction and inclusion of concepts in all application domains that are inconsistent with the context of the specific representation: file operations, buffers, data structures, lists, and programming-like syntax, for example. For purposes of comparison, think about how people use “real” tools. When one hammers a nail into a board, one does not think about operating the hammer; one thinks about pounding the nail. But in the computer medium, the “tool problem” is compounded by existential recursion; the medium can be used to represent tools. Some, like virtual paintbrushes, are more or less modeled on real-life objects. Others, like the omnipresent cursor in most of its instantiations, have no clear referents in the real world. It is especially in these cases that interface designers are tempted to represent the tool in terms of computer-based operations that are cognitively and operationally unnecessary for their use. Why? Because the computer-oriented representation is seen as an “honest” explanation of what the tool is and how it works, and because that’s how the designer understands it. People quickly become entangled in a mass of internal mythology that they must construct in a largely ad hoc fashion, in contrast to Rubinstein and Hersh’s notion of a clear and consistent “external myth” (1984). As an interactor, one may quickly fall through the trap door into the inner workings of the computer or the software.

Design Heuristic

Interaction should be couched in the context of the representation—its objects, environment, potential, and tools.

Interface Metaphors: Powers and Limitations

The notion of employing metaphors as a basis for interface design has partially replaced the notion of computer as tool with the idea of computer as representer of a virtual world or system, in which a person may interact more or less directly with the representation. Action occurs in the mimetic context and only secondarily in the context of computer operation. Metaphors can exist at every level, from the application (your remote is a gun) to the whole system (your screen is a desktop). The theory is that if the interface presents representations of real-world objects, people will naturally know what to do with them.

I can’t resist including this old quote. In 1990, Ted Nelson delivered a deliciously acerbic analysis:

Let us consider the ‘desktop metaphor,’ that opening screen jumble which is widely thought at the present time to be useful. . . . Why is this curious clutter called a desktop? It doesn’t look like a desktop; we have to tell the beginner how it looks like a desktop, since it doesn’t (it might as easily properly be called the Tablecloth or the Graffiti Wall).

The user is shown a gray or colored area with little pictures on it. The pictures represent files, programs and disk directories which are almost exactly like those for the IBM PC, but now represented as in a rebus. These pictures may be moved around in this area, although if a file or program picture is put on top of a directory picture it may disappear, being thus moved to the directory. Partially covered pictures, when clicked once, become themselves covering, and partially cover what was over them before.

We are told to believe that this is a ‘metaphor’ for a ‘desktop.’ But I have never personally seen a desktop where pointing at a lower piece of paper makes it jump to the top, or where placing a sheet of paper on top of a file folder caused the folder to gobble it up; I do not believe such desks exist; and I do not think I would want one if it did.

The reaction to Nelson from the Xerox PARC inventors would likely be something on the order of “Geez, Ted, lighten up. It’s a magical metaphor, and it’s fun!”

The problem with interface metaphors is that they are like reality, only different. Why should this matter? Because we usually don’t know precisely how they are different. Some of the applications built with Wii affordances—playing tennis or directing an orchestra—actually do work metaphorically. The primary reason is that the affordances of the controller in the context of the application are designed to closely match the affordances of the object and activity being represented. The interactor can forget about the controller and feel secure in suspending disbelief.

Historically, however, interface “metaphors” have usually functioned as similes; whereas a metaphor posits that one thing is another, a simile asserts that one thing is like another. But what is being compared to what? Now there is a third part to the representation: the simile (say, a representational phone and address book), the real-world object (a real phone and address book), and the thing that the representation really is—a bundle of functionalities that do not necessarily correspond to the operations of the real-world referent, augmenting it with “magical” powers like the ability to “search” for a name or number, click on a number and make a call or send a text message, or display a current photo of the contact that is automagically updated. This phenomenon is well illustrated in Nelson’s comment, where he never uses the word “folder” at all, but refers to it as a “disk directory.” The simile becomes a kind of cognitive mediator between a real-world object and something going on inside the computer. What Ted misses is that the “disk directory” as presented in a command-line interface is as much a metaphor within that interface as it is when presented as a “folder” within the desktop interface; it is a presentation of information to and a mediator of actions with the user in a medium of otherwise invisible entities.

What happens to people who are trying to use interface similes? Alas, they must form mental models of what is going on inside the computer that answer all three of these questions (What is the object being represented? What are the representational object’s qualities? How is the representational object different from the object of the representation?). In this way, interface “metaphors” can fail to simplify what is going on; rather, they tend to complicate it. People must explain to themselves the ways in which the behavior of mimetic objects differs from the behavior of their real-world counterparts.

To put it another way, the problem with interface metaphors (or similes) is that they often act as indices (or pointers) to the wrong thing: the internal operations of the computer. John Seely Brown (1986), former head of Xerox PARC, puts it this way:

. . . it is not enough to simply try to show the user how the system is functioning beneath its opaque surfaces; a useful representation must be cognitively transparent in the sense of facilitating the user’s ability to ‘grow’ a productive mental model of relevant aspects of the system. We must be careful to separate physical fidelity from cognitive fidelity, recognizing that an ‘accurate’ rendition of the system’s inner workings does not necessarily provide the best resource for constructing a clear mental picture of its central abstractions.

Brown’s observations are as relevant today as when he initially made them. The term “central abstractions” seems to be roughly equivalent to what I call the representation. The point, then, is that the object of the mental model should not be what the computer is doing, but what is going on in the representation: the context, objects, agents, and activities of the virtual world. Users do not need to understand what a POSIX file link “is,” nor how a journaled file system protects against disk-drive write errors.

Another strength of good interface metaphors is coherence: all of the elements “go together” in natural ways. Folders go with documents, which go with desktops. To the extent that this works, the mimetic context is supported, and people can go about their business in a relatively uninterrupted way. But there are two ways to fall off the desktop. One is when you start looking for the other things that “go with” it, and you can’t find them. In the original version of this book, I mentioned filing cabinets, telephones, blotters for doodling and making notes, or even an administrative assistant; today, that would be a terabyte hard drive, Skype, Stickies, and agents like Siri (whom I would fire, by the way). I still can’t doodle very well on my Mac, but that’s probably about me (and the fact that I don’t have a tablet peripheral). The metaphor may in fact have played some positive role in the development of these deskly affordances. The more common way to fall off the desktop is to find something on it that doesn’t “go with” everything else, thereby undermining or exploding the mimetic context; for example, a trashcan that works both (1) to “throw away” files and (2) to eject disks or drives: fundamentally different operations overloaded onto a single “object.”1

1. The fix for this in OS X is still clumsy. Dragging the icon for a disk drive toward the trash icon on the application bar causes the trash icon to change from a trashcan to an “eject” button; an improvement, but still confusing.

A third, highly rated strength of interface metaphors is their value in helping people learn how to use a system. The difficulty comes in helping a person make a graceful transition from the entry-level, metaphorical stage of understanding into the realm of expert use, where power seems to be concentrated specifically in those aspects of a system’s operation for which the metaphor breaks down. In this context, the usefulness of a metaphorical approach can be understood as a trade-off between the reduced learning load and the potential cognitive train-wrecks that await down the track.

Design Heuristic

Interface metaphors have limited usefulness. What you gain now you may have to pay for later.

Alternatives to Metaphor in Design

A dramatic notion of representation provides a good alternative to metaphor in at least three ways. First, we can effectively represent actions that are quite novel by establishing causality and probability (the notion of probable impossibility). True, representations may not have any real-world counterparts, but they may exhibit clear causal relations. Second, effective representation of such objects or actions probably requires a sensory (visual or multisensory) component. The sensory component may even be expressed in text, as interactive storytelling applications have demonstrated. Third, a represented action or object must be self-disclosing in context, even if its attributes or causes can only be determined through successive discovery in the course of a whole action.

The Primacy of Action

One shortcoming of many metaphorical interfaces is that their design tends to be guided by the goal of representing objects and relations among them as opposed to representing actions. Often, the former seems easier to do.

Strategy and Tactics

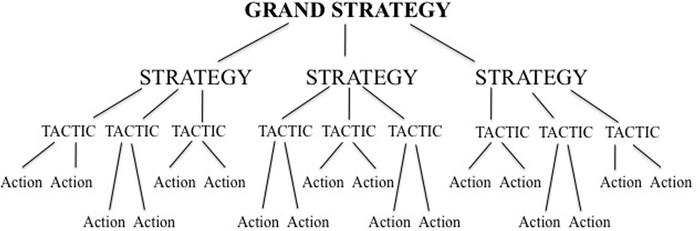

I have found a strategic approach helpful in keeping focus on the action. The foundations of strategy as I use the term are essentially military, as expressed by Sun Tzu in The Art of War (written around 500 BCE) and more explicitly by Liddell Hart in his book Strategy (1954, revised edition 1991). But strategic thinking applies to the work of design as well. Figure 5.1 shows a basic diagram.

The grand strategic goal is the main event. Tow (2004) calls this “a three-level top-down structure.” Strategies are “distinct patterns of action” in support of the grand strategic goal. The third level down gets us to tactics, more detailed actions in support of strategies.

I’ve added a level of “actions” to my graph. In a military sense, logistics operate on the same level as strategy because the logistics plan supports the grand strategic goal in a more general way. How to get supplies, food, and fuel to many areas in the “theatre of war” is not specifically tied to any given strategy or tactic, but works in parallel to serve many operations in different locations. Here I am using the term “action” to refer to what is necessary to meet a particular tactical goal.

Figure 5.2 is another version of the same graph with some new information. First, a given strategy can support more than one grand strategic goal. Likewise, a particular tactic may serve more than one strategy.

Figure 5.2. A strategy may work in support of more than one grand strategic goal, just as a tactic may serve more than one strategy.

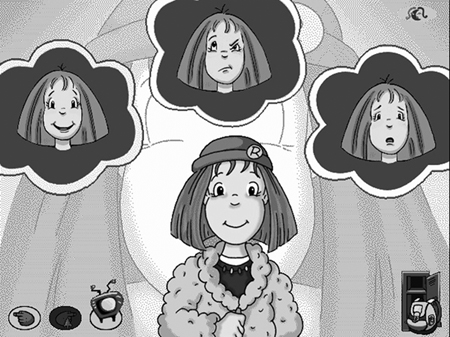

My old company Purple Moon provides a good example. Our original goal was to create an engaging computer-based activity that would be enticing enough to get “tween” girls (ages 7 to 12) to use a computer and become comfortable with it. You may be old enough to recall that the landscape of computer games and videogames in 1998 was dominated by games specifically addressed to the interests and play patterns of boys. Our gender studies revealed that girls and boys exhibited some strong differences in how they thought of “play,” hence our desire to create content with play patterns that would appeal to girls’ play preferences. But as we were doing the research and designing the games, we were hearing from girls that they often felt “stuck” in their social and emotional lives. Many experienced a sense of inevitability about things that happened with friends. So our second grand strategic goal emerged: to provide an emotional rehearsal space for girls that would allow them to try out different social choices. Most of our strategies served both grand strategic goals.

Figure 5.3 is yet a third diagram that replaces the generic terms with some specific ones, in this case related to game design; we could make such a diagram for non-game interactions as well, but I think you get the point.

Figure 5.3. A strategic diagram with elements at strategic, tactical, and supporting levels drawn from game design. Many such diagrams are possible.

One of the ways in which strategic analysis of this sort is useful is to give us criteria for the inclusion or exclusion of materials at any level of formulation. For example, if a particular tactic is interesting and fun to the designer, but does not serve any strategy, then it is extraneous and should be eliminated or rethought, “for that which makes no perceptible difference by its presence or absence is no real part of the whole” (Poetics 1451a, 34–35).
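The inclusion criterion above can be made mechanical once the diagram is written down as data. The sketch below is a hypothetical illustration, not a tool from the text: each level is a Python dict mapping an item to the set of items one level up that it supports, and `audit` flags anything that serves nothing valid above it. Because an item that serves only extraneous items is itself extraneous, the check cascades downward. The two grand goals echo the Purple Moon example; every strategy, tactic, and action name is invented.

```python
from typing import Dict, List, Set


def audit(levels: List[Dict[str, Set[str]]], grand_goals: Set[str]) -> List[Set[str]]:
    """For each level below the grand goals, return the items that serve nothing
    valid on the level above. An item that serves only extraneous items is itself
    extraneous, so the check cascades down the diagram."""
    valid_above = set(grand_goals)
    extraneous: List[Set[str]] = []
    for level in levels:
        # Keep an item if it supports at least one valid item one level up.
        valid_here = {item for item, serves in level.items() if serves & valid_above}
        extraneous.append(set(level) - valid_here)
        valid_above = valid_here
    return extraneous


# Hypothetical example: all names below are invented for illustration.
grand_goals = {"comfort with computers", "emotional rehearsal"}
strategies = {
    "branching friendship stories": {"comfort with computers", "emotional rehearsal"},
    "collectible keepsakes": {"comfort with computers"},
}
tactics = {
    "choose what to say to a friend": {"branching friendship stories"},
    "secret diary pages": {"branching friendship stories", "collectible keepsakes"},
    "timed arcade minigame": set(),  # fun to build, but serves no strategy
}
actions = {
    "write a diary entry": {"secret diary pages"},
    "dodge falling objects": {"timed arcade minigame"},
}

print(audit([strategies, tactics, actions], grand_goals))
# → [set(), {'timed arcade minigame'}, {'dodge falling objects'}]
```

Representing support as sets keeps the many-to-many links of Figure 5.2 intact: a tactic stays in the design as long as at least one of the strategies it serves survives.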

Design Heuristic

Focus on designing the action. The design of objects, environments, and characters must all serve this grand strategic goal.

Action with a Dramatic Shape

In the section on constraints, we spoke about designing what people think of doing as a way to help create dramatic action. Interventions by the designer in the form of discovery, surprise, and reversal are also effective. Responsive non-player characters (NPCs) that can adapt to players’ choices in games can also be designed to push the slope and speed of dramatic action under specific conditions.

Game designers still puzzle over the question of dramatic shape. The greatest successes that I know of in this regard lay out a story arc “in the large” through the selection and arrangement of challenges, venues, NPC behaviors, and elements of action like quests and levels. In Aristotle’s view, the authoring of plot consisted of the selection and arrangement of incidents. A designer of interactive media has the same power; how, and how much, that power is used shapes an interactor’s experience of agency.

Game designer and scriptwriter Clint Hocking (Splinter Cell, Splinter Cell: Chaos Theory, Far Cry 2) proposes a generative view of the shape of an interactive plot (2013). He describes the “region of story” as “low frequency, high amplitude” shifts or curves. The “region of choice” he sees as “high frequency, low amplitude” curves. Combining these curves can give us the shape of a game, but that is only possible if the two regions of the game are aligned with one another. Part of Hocking’s proposed solution is to “align verbs”—that is, to express interactive choices with verbs that correspond to those in the overarching narrative.
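Read literally, Hocking’s description suggests a superposition of two waves. The following is only an illustrative reading, not a formula Hocking gives: let $t$ be play time, with a slow, large story curve and a fast, small choice curve.

```latex
s(t) = \underbrace{A_{\text{story}} \sin\left(2\pi f_{\text{story}}\, t\right)}_{\text{region of story}}
     + \underbrace{A_{\text{choice}} \sin\left(2\pi f_{\text{choice}}\, t + \varphi\right)}_{\text{region of choice}},
\qquad A_{\text{story}} \gg A_{\text{choice}}, \quad f_{\text{story}} \ll f_{\text{choice}}
```

On this reading, the sum traces a recognizable shape only when the many small choices push in the same direction as the story curve, which is one way to picture what aligning the verbs is meant to achieve.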

As I listened to Hocking speak, I wondered if one might simply nudge the shape of the region of story into one that looks more like the shape of a dramatic plot. When I contacted him, he explained that this was exactly what they were trying to do in Far Cry 2 with the development of infamy, a condition in which a player who needs cooperation from NPCs becomes both very powerful and feared by them; hence the fall. Hocking’s description reads like classic tragedy.2

2. For an in-depth analysis of the tragic component of Far Cry 2, see an excellent essay by Cesar Bautista (Penn State, CS) at http://ceasarbautista.com/essays/far_cry.html.

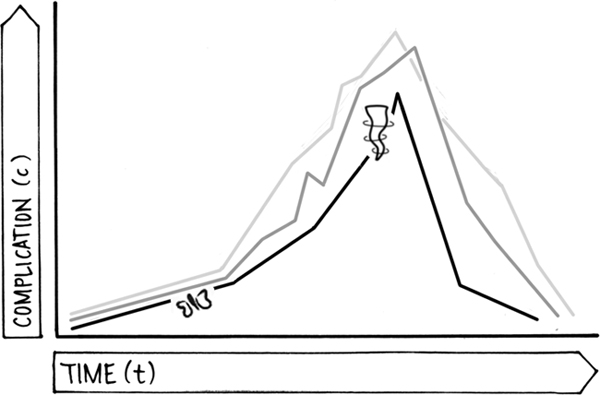

Hocking thinks that “consequentiality” may be a better axis to track than complication and resolution over time. Consequentiality is what the low-frequency, high-amplitude curve is mapping. In that sense, he is talking about causality and probability. I realized that causality isn’t mapped in the Freytag diagram, and the shape of the plot over time isn’t mapped well in the “Flying Wedge” diagram. We need a new way to visualize it.

A “consequential” incident may be a “small” event in terms of dramatic intensity that impacts the probability of something “large” happening later on. Playwrights can plant such incidents (either as causal over time or as foreshadowing) and lead us to the conclusion that, in retrospect, a seemingly inconsequential action causes a great change later on. In a land where thieving and smiting are fairly common, a man beset at a crossroads kills all but one of the folks who seem to be holding him up. Later he learns that he has killed his own father. After the consequences occur, we can trace “causality” back to the consequential incident, but we can’t predict it beforehand.

In games, by creating environments and NPCs with specific behaviors and rules, the designer can increase the possibility for consequential incidents to be generated through the player’s interactions with the environments and the NPCs. When a player makes a particular choice that may seem inconsequential, the possibility for certain actions to occur is transformed into probability. Hocking (2013) provides this example:

In Thief (which is a highly consequential game) the low level action of leaving a body unhidden might cause a group of guards much later in the level to become agitated—changing the way I navigate a subsequent series of rooms.3

3. Hocking, personal communication, May 18, 2013.

Now, this is not as hairy as what happened to Oedipus, but it might lead to other challenges or transform another possibility into probability for an action or event to occur later on.
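The mechanism Hocking describes, a remembered low-level choice that changes the odds of a later event, can be sketched in a few lines. Everything here is hypothetical: the `LevelState` record, the `guard_alert_probability` rule, and both tuning constants are invented for illustration and are not taken from Thief. The point is only that the player’s choice does not script the later scene; it shifts a probability the designer has attached to it.

```python
import random
from dataclasses import dataclass

# Hypothetical tuning values; a real game would set these per level and per NPC.
BASE_ALERT = 0.125      # chance the distant guards are agitated regardless of the player
ALERT_PER_BODY = 0.375  # added chance for each body the player leaves in plain sight


@dataclass
class LevelState:
    unhidden_bodies: int = 0  # a low-level choice the world remembers


def guard_alert_probability(state: LevelState) -> float:
    """Probability that guards much later in the level are agitated."""
    return min(1.0, BASE_ALERT + ALERT_PER_BODY * state.unhidden_bodies)


def guards_agitated(state: LevelState, rng: random.Random) -> bool:
    """Roll once when the player reaches the guards' rooms."""
    return rng.random() < guard_alert_probability(state)


careful, careless = LevelState(0), LevelState(1)
print(guard_alert_probability(careful), guard_alert_probability(careless))
# → 0.125 0.5
```

Because the outcome is still rolled rather than scripted, a careful player may meet agitated guards anyway and a careless one may slip through; in retrospect, though, the unhidden body reads as the cause.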

Hocking’s notion of “consequentiality” is a sort of simulated butterfly effect,4 a term coined to describe a central idea in chaos theory developed by Edward Lorenz (1963). Lorenz’s phrase “sensitive dependence on initial conditions” means that a small change in one place (such as a butterfly flapping its wings in Brazil) can cause much larger changes later on, and that these changes are unpredictable (such as a tornado in Texas).5 The butterfly seems innocuous, but in retrospect it is highly consequential. In dramatic terms, such incidents may be discoveries, surprises, or even reversals, all potent elements in the shape of dramatic action (see Figure 5.4).

4. When I mentioned this to Clint, he laughed and said that his original title for Splinter Cell: Chaos Theory was Splinter Cell: Butterfly Effect. And here I thought I was being so smart.

5. At a meeting of the American Association for the Advancement of Science in 1972, Philip Merilees suggested a title for Lorenz’s paper: “Does the flap of a butterfly’s wings in Brazil set off a tornado in Texas?”

Figure 5.4. An early incident (the butterfly flapping), although it may seem inconsequential, can increase the probability of a highly dramatic event later on (the tornado). If the butterfly doesn’t flap (or the player doesn’t make that particular choice), the plot may take other directions and shapes.

Depending upon how the environments and NPCs are designed, the designer can tip the scales toward consequentiality and shape dramatic action.
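Sensitive dependence on initial conditions is easy to see numerically. Lorenz’s own example was a three-variable model of atmospheric convection; the sketch below swaps in a simpler, standard chaotic system, the logistic map $x_{n+1} = r\,x_n(1 - x_n)$ at $r = 4$, and runs two orbits whose starting points differ by one part in ten billion. The function names are our own.

```python
from typing import List, Optional


def logistic_orbit(x0: float, steps: int, r: float = 4.0) -> List[float]:
    """Iterate x[n+1] = r * x[n] * (1 - x[n]), returning x[0] .. x[steps]."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


def first_divergence(x0: float, nudge: float, steps: int,
                     threshold: float = 0.1, r: float = 4.0) -> Optional[int]:
    """Step at which two orbits whose starts differ by `nudge` first differ by
    more than `threshold`, or None if they stay together for `steps` steps."""
    a = logistic_orbit(x0, steps, r)
    b = logistic_orbit(x0 + nudge, steps, r)
    for n, (xa, xb) in enumerate(zip(a, b)):
        if abs(xa - xb) > threshold:
            return n
    return None


# A "butterfly" of one part in ten billion.
print(first_divergence(0.2, 1e-10, 100))
```

The two orbits are indistinguishable for the first several steps and then separate, typically within a few dozen iterations, after which knowing one tells you almost nothing about the other. As with the butterfly, the nudge is only recognizable as the cause after the fact.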

In the world of text-based interactive storytelling, Emily Short (2013b) takes a unique approach with Versu (discussed in Chapter 4). At the bottom level, Versu contains genre definitions: collections of information that include the ethics and “rules of conduct” for different story types. The next level up is “story files”:

Story files contain premises, situations, and provocations. They lay out locations and objects that characters might encounter, and provide narrative turning points that might depend on how characters currently relate to one another. Story files create opportunities for characters to change their views of one another, come into conflict, and have to make difficult choices, or perhaps to discover what is going on in the narrative scenario.

Character files “contain character descriptions, preferences, traits, habits, [and] props unique to that particular character” as well as the character’s hopes and goals. Characters’ goals may change during the course of the action. Emily introduces the notion of “social physics” to describe the network of relations among characters and how it may change.
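The layering Short describes (genre, story files, character files, and a changing web of relations) maps naturally onto a small data model. The sketch below is a hypothetical illustration only and does not reflect Versu’s actual file formats or engine: `Genre`, `Character`, `Story`, the numeric `offenses` scale, and the `in_conflict` turning point are all invented names. It shows one crude version of “social physics”: witnesses revise their regard for a character according to the genre’s rules of conduct, and a turning point fires when that regard drops far enough.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class Genre:
    """Bottom layer: the ethics and rules of conduct for a story type."""
    name: str
    offenses: Dict[str, int]  # action -> how badly witnesses judge it (hypothetical scale)


@dataclass
class Character:
    """Character file: traits and goals (goals may change during play)."""
    name: str
    traits: List[str]
    goals: List[str]


@dataclass
class Story:
    """Story file: a premise plus the cast; `regard` is a crude social physics."""
    premise: str
    genre: Genre
    cast: Dict[str, Character]
    regard: Dict[Tuple[str, str], int] = field(default_factory=dict)  # (viewer, viewed) -> opinion

    def act(self, actor: str, action: str, witnesses: List[str]) -> None:
        """Witnesses revise their opinion of the actor by the genre's rules."""
        penalty = self.genre.offenses.get(action, 0)
        for w in witnesses:
            self.regard[(w, actor)] = self.regard.get((w, actor), 0) - penalty

    def in_conflict(self, viewer: str, viewed: str, threshold: int = -2) -> bool:
        """A turning point that depends on how two characters currently relate."""
        return self.regard.get((viewer, viewed), 0) <= threshold


manners = Genre("comedy of manners", {"interrupt a toast": 2, "compliment the host": -1})
story = Story(
    premise="a dinner party where an engagement will be announced",
    genre=manners,
    cast={n: Character(n, [], []) for n in ("Ann", "Bea", "Cal")},
)
story.act("Ann", "interrupt a toast", witnesses=["Bea", "Cal"])
print(story.in_conflict("Bea", "Ann"))  # → True
```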

I am struck by the interesting differences between Hocking’s and Short’s thinking in terms of the granularity of player (or “character”) actions and the workings of the overarching narrative scenario. Both approaches seek to retain the sense of agency through interaction while bounding the dramatic shape of the whole. Short (2013a) mused that she would like to design a “drama manager agent” that could create new situations.

Design Heuristic

Choices for (and by) interactors can transform possibility into probability for dramatic action later on.

Designing Character and Thought

Let’s begin at the beginning. Who or what is the source of these messages?

Are you sure?

Loading.

Your application has unexpectedly quit.

I DON’T KNOW THAT WORD.

Who or what is the receiver of these messages?

Insert text box.

Check spelling.

Quit.

Who is the agent of these actions?

Logon.

Save.

Apply style.

Delete *.*.

And now, for the million-dollar question: Who said the following?

Pay no attention to the man behind the curtain.

Free-Floating Agency

Without clear agency, the source and receiver of messages in a system are vague and may cause both frustration and serious errors. Without clear agency, the meaning of information may be seriously misconstrued. Without clear agency, things that happen are often as “magical” and fraudulent as the light show created by The Wizard of Oz—and the result of accidental unmasking can be unsettling, changing (as it did for Dorothy and her friends) the whole structure of probability and causality.

There are two primary problems involved in the vague way that agency is often handled in human-computer interaction. The first is that unclear or “free-floating” agency leaves uncomfortable holes in the mimetic context, holes that people can fall through into the twilight zone of system operations. The second is that these vague forces destroy the experience of agency for humans. Typically, these sorts of transactions require that people set parameters or specify the details of a desired action in some way, but the form of the transaction is one of supplication rather than cooperation; one might as well apply to Central Services for permission to sit down (age check). As the Cowardly Lion says, “let me at ’im”; let me confront the source of all this bossing around, face to face. Unclear agency places the locus of control in a place where we can’t “get at it.” Even though we are in fact agents by virtue of making choices and specifying action characteristics, these shadowy forces manage to make us feel that we are patients: those who are done unto, rather than those who do.

Design Heuristic

Represent sources of agency.
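One way to honor this heuristic in software is to make it impossible to emit a message without naming who is speaking and to whom. The `Message` type below is a hypothetical sketch, not an existing API: it rejects anonymous sources or receivers, so a bare “Are you sure?” cannot be shown until some represented agent owns it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Message:
    """A system message that must name who is speaking and to whom."""
    speaker: str    # the agent responsible, e.g. "The spelling checker"
    addressee: str  # the agent being addressed, usually "you"
    text: str

    def __post_init__(self) -> None:
        # Refuse free-floating agency: no anonymous sources or receivers.
        if not self.speaker.strip() or not self.addressee.strip():
            raise ValueError("a message needs a visible source and receiver")

    def render(self) -> str:
        return f"{self.speaker} to {self.addressee}: {self.text}"


print(Message("The spelling checker", "you", "I don't know that word.").render())
# → The spelling checker to you: I don't know that word.
```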

Collective Characters

As we discussed in Chapter 4, interactions among interactors make distinct contributions to the unfolding of the whole action or plot. In the context of computer-supported cooperative work (CSCW), for example, Löwgren and Reimer (2013) remark on the relative lack of literature treating computers as a medium for interactions among people. In CSCW as well as in multiplayer games, groups of people with common goals form and re-form to act in concert, for a time. These groups may be considered “characters” in their own right. Once a group has formed, to carry out a quest for example, there are still very active dynamics among its members (perhaps including treachery and secession), but the quest group as a whole tends to act as a unit toward a particular in-game goal.

Technically, we can understand such “collective characters” in terms of the aggregate of thought that is the material cause of their actions. We can understand the quest as the action, and its success or failure has implications for the plot. By providing potential goals for collective characters, designers create formal constraints that encourage their formation, adding a new level of richness to the action.

Design Heuristic

Groups of interactors with common goals may function as collective characters where group dynamics serve as traits.

Affordances for Emotional Interaction

Aristotle identified the end cause of drama as catharsis, the arousal and release of emotion. Emotional expression and communication are essential in dramatic art. It follows, then, that designers of dramatic interaction should pay close attention to the emotional dimension of their work. Certainly, the scripting of game scenarios, situations, and characters shows strong evidence of game designers’ ability to incorporate emotion through gameplay. I want to look at a few other aspects of emotional interaction, beginning with facial expression.

In his book The Expression of the Emotions in Man and Animals (1872), Charles Darwin meticulously explored the commonalities and differences among emotional expressions as well as the “why” of the ones he identified as basic. Darwin posited that emotional expressions (primarily facial) were universal among humans and not dependent upon culture or learning. Long opposed by cultural relativists, Darwin’s work has reemerged as good science, stimulating new interest and research in recent years. In the preface to the third edition, scholar Paul Ekman notes that since the mid-1970s, “systematic research using quantitative methods has tested Darwin’s ideas about universality.”6 Ekman’s research confirmed Darwin’s conclusions. While gesture is much more culturally relative, Darwin said, facial expressions related to basic emotions are legible to all humans.

6. Ekman was, by the way, science advisor to the remarkable and too-short television series Lie to Me (2009–2011), centered on the work of an investigator (played by Tim Roth) who is uncannily skilled at reading facial and body cues.

Of the many emotions treated in Darwin’s book, at least six are seen to be fundamentally universal: happiness, sadness, fear, disgust, surprise, and anger. Images of masks worn in the Greek theatre exist for each of these emotions. Both Darwin and Ekman discuss many more emotions as candidates for universal expression, including amusement, contempt, contentment, embarrassment, guilt, shame, pride, and relief. Facial expressions of many of these emotions can be seen in the leather masks used for “stock” characters of the Commedia dell’Arte, including the pompous doctor Il Dottore, the miserly merchant Pantalone, or the amorous wit Arlecchino. The Commedia was a semi-improvisational street theatre form that reached its zenith in 16th-century Italy. The stock characters may be traced back at least to Roman Comedy and continued through the Middle Ages via wandering entertainers (Duchartre 1966). The Greek and Italian masks, along with masks from Africa, Asia, and around the world, attest dramatically to the “universal legibility” of emotional facial expressions.

Since the original version of this book, we have developed sophisticated technological means for recognizing faces and facial expressions. Likewise, animation techniques have given us the ability to represent them with great acuity. One way to use these affordances, then, is to “read” the emotional facial expressions of interactors and to respond to them in kind, through facial expression. Mirroring another person’s facial expression, for example, is a way of establishing empathy or connection, and in acting exercises, actors carry on entire “conversations” using facial expressions alone.

Of course, our voices, words, and gestures communicate emotion as well, often refining what our faces are saying about us. Klaus Scherer has done canonical work in “The Expression of Emotion in Voice and Music” (1995):

Vocal communication of emotion is biologically adaptive for socially living species and has therefore evolved in a phylogenetically continuous manner. Human affect bursts or interjections can be considered close parallels to animal affect vocalizations. The development of speech, unique to the human species, has relied on the voice as a carrier signal, and thus emotion effects on the voice become audible during speech.

Scherer presents compelling evidence of “listeners’ ability to accurately identify a speaker’s emotion from voice cues alone.” He has identified some of the acoustical components of various emotional expressions in the voice. Because these components can be both recognized and reproduced, we can infer emotion in a general way from acoustics alone. So if speech is an affordance, we can learn a lot about the emotions of both players and NPCs, even when the words themselves are not entirely intelligible.

Design Heuristic

Explore new methods for enabling emotional expression and communication among agents.

Thinking about Thought

While the element of Thought cannot be provided entirely by the author, the design of the world and its denizens (or of the application and its affordances) materially constrains Thought in a variety of ways. Up the ladder of material causality, Thought is the result of the successive shaping of Enactment, Pattern, and Language. In this regard, exposition plays an important role. It may be provided implicitly during the early action, giving interactors a way to discover the “physical” and behavioral aspects of a mimetic world, its characters, and past events. As the action unfolds, patterns emerge, and communication affordances and conventions become clear. These are the obvious sources.

Perhaps not so obvious (unless the design is specifically political) are the assumptions made by the designer, consciously or not, that influence each of these elements and also play directly into the thought processes of the interactor. Mary Flanagan addresses many of these in her book Critical Play (2009):

As a cultural medium, games carry embedded beliefs within their systems of representation and their structures, whether game designers intend these ideologies or not. In media effects research, this is referred to as “incidental learning” from media messages. For example, The Sims computer game is said to teach consumer consumption, a fundamental value of capitalism. Sims players are encouraged, even required, to earn money so they can spend and acquire goods. Grand Theft Auto was not created as an educational game, but nonetheless does impart a world view, and while the game portrays its world as physically similar to our own . . . the game world’s value system is put forward as one of success achieved through violence, rewarding criminal behavior and reinforcing racial and gender stereotypes.

This is probably the sternest language Flanagan uses in her book; for the most part, her writing is scholarly, thoughtful, and insightful. Critical Play is a must-read for everyone who is serious about interaction design, especially games and interactive art. Stern as they are, the comments quoted here are hard to contest.

Design Heuristic

Examine your assumptions and biases. Everybody has some.

Understanding Audiences

One of the most common difficulties young designers have is a compulsion to design for themselves. I know that might sound crazy. Of course, you want to use your own aesthetic and skill, to exercise your own notion of play patterns, and to build something that will be pleasurable. But unless you are designing for people exactly like you, you are not designing for yourself. In fact, designing for people exactly like themselves is what designers did in the early days of the videogame industry. The industry was vertically integrated all the way up to the audience. Young men designed games for young men under the direction of slightly older men. The games were sold to men in male-dominated retail environments. In those days, it was a truism that women and girls did not play videogames. That may be because vanishingly few games were designed with women and girls in mind, at least until the brief surge of “girl games” in the late 1990s.

Today, women and girls make up the bulk of the market for casual games, and many more are playing games like World of Warcraft (WoW), Minecraft, and even massively multiplayer first-person shooters (MMFPS games). But that doesn’t mean our work is done. People are different. Obvious differences are gender and age; others include socio-economic status, ethnicity, personal interests, and politics. Who are you trying to reach?

Human-centered design research can help you understand your intended audience in both general and subtle ways. If you are working for a big corporation that does not have a design research department, you will be fighting an uphill battle to convince your publisher that design research has value, especially if they have been successful doing exactly what they do. You will likely find that play testing or beta testing is the only kind of research such companies do. But that happens after the egg has hatched, so to speak, and such research likely does not shape the game itself beyond some tweaking.

Human-centered design research shares many of the methods common to market research, but deployed for a different purpose. To oversimplify only a bit, market research looks at how to sell something, while design research looks at what should be designed and how. It comes much earlier in the process, so as to inform design as it proceeds.

To begin, you really need to block-erase your preconceived notions about your audience. Be ready to be surprised. You may find out things that disturb you. Don’t worry; your main objective is to meet your audience where they are, and as all designers know, there are ways to finesse what you might think of as bad habits or bad ideas in your audience.

Design Heuristic

Check your preconceptions and values at the door. You will pick them up later, after you have findings and are ready to turn them into design principles.

Most of the methods of design research are fairly straightforward. Secondary research looks at other people’s insights (and products), history, and culture. Primary research can take the form of interviews, focus groups, or intercepts (brief, on-the-spot conversations with people approached in public places), and it can include interviews with experts you’ve identified through your secondary research. Primary research is generally trickier than secondary research, but it is the most valuable way to gain insight into your potential audience.

Finding the right questions to ask is crucial. Asking people directly what kind of application or game they would like will not get you far. As I am fond of telling my students, if you had asked a group of kids and teens in 1957 what kind of toy they would like, no one would have said they wanted a plastic hoop they could rotate around their hips. Yet that is when Richard Knerr and Arthur Melin developed the contemporary hula hoop for the toy company Wham-O. In a way, the hula hoop was an extension of rhythmic play such as jump rope and clapping games. It arose in the context of popular dance styles, as Bop was replacing Swing as the dance of choice for teens (the Twist would come later, and it may have had something to do with the hula hoop).

The first step is to develop a screener—a document that details the kind of people you want to reach out to. For a good example, see Portigal (2013), p. 38. Portigal’s book Interviewing Users: How to Uncover Compelling Insights is a great guide to interviewing, especially if you are not experienced with it.

Basically, you want to find out what people in your proposed audience are like. What do they do for fun, work, or relaxation? What colors and visual styles appeal to them? Who are their heroes? What are their strongest values? What’s their favorite music? What brands are in their refrigerator? This last question is best answered through home visits. If you can manage to visit people in their homes, you will find out much more about them just by looking around, and you’re likelier to gain insights by finding things you didn’t even know you were looking for.

If you don’t have the luxury of home visits, interviews are the next best thing, in my experience. And not just one-on-one: I’ve had the best success with dyad interviews. That means inviting one interview subject (who meets the criteria on your screener) and asking them to bring their best friend. The dyad interview keeps people honest: when a person gives a false or incomplete answer to a question, their friend will likely call them on it. The two may also strike up conversations of their own during the interview that reveal more about them.

Although consumer companies love them, I have found focus groups to be the weakest form of interviewing, especially with young people. Regardless of age, most people in a focus group consciously or unconsciously identify the most eloquent or aspirational person in the group and align themselves in some way with that person. What you get may be more a portrait of a social dynamic than good answers to your questions. If you want to delve more deeply into various forms of design research, I recommend my book Design Research: Methods and Perspectives (2004).

Finally, it must be said that many artists and designers are wary of design research, primarily because they fear that their findings will take over their creative decisions. This is not the case. Design research informs the design but does not dictate it. When you have your findings in front of you, translate them into design heuristics for yourself. As you do that, add your own values and voice back into the equation. You’ll be a smarter designer for it.

This chapter has presented several rules of thumb pertaining to the design of the various elements in a dramatic representation. Some of those principles have appeared in different forms in the literature of human factors and interface design, and some are simply intuitive. By contextualizing them within the overarching notion of interactive representation, we have attempted to deepen our understanding of the derivation of such rules and the relationships among them.