Chapter 3. Beginning of Microservices

Although a lift-and-shift approach to migrating a monolithic application allows for quick migration and can save on short-term costs, the operational costs of running software that has not been optimized for the cloud will eventually overtake the cost of development. The next stage toward cloud-native is adopting a microservice architecture to take advantage of improved agility and scalability.

Embrace a Microservices Architecture

In a microservices architecture, applications are composed of small, independent services. The services are loosely coupled, communicating via an API rather than via direct method calls, as in a tightly coupled monolithic application. A microservices architecture is more flexible than a monolithic application, because it allows you to independently scale, update, or even completely replace each part of the application.
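To make the difference between an API call and a direct method call concrete, here is a minimal sketch that uses only the Python standard library. The pricing service, its `/prices/<sku>` route, and the price data are hypothetical and chosen purely for illustration; a real service would typically sit behind a framework and a service registry.

```python
import json
import threading
from http.client import HTTPConnection
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Hypothetical data owned exclusively by the pricing service (prices in cents).
PRICES = {"shirt": 1999, "hoodie": 3999}


class PriceHandler(BaseHTTPRequestHandler):
    """Serves GET /prices/<sku> as JSON; other services only see this API."""

    def do_GET(self):
        sku = self.path.rsplit("/", 1)[-1]
        if self.path.startswith("/prices/") and sku in PRICES:
            status, payload = 200, {"sku": sku, "cents": PRICES[sku]}
        else:
            status, payload = 404, {"error": "not found"}
        body = json.dumps(payload).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the example quiet


def start_price_service():
    """Start the service on a free local port in a background thread."""
    server = ThreadingHTTPServer(("127.0.0.1", 0), PriceHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def fetch_price(host, port, sku):
    """Consumer side: a remote HTTP call replaces a direct method call."""
    connection = HTTPConnection(host, port, timeout=5)
    try:
        connection.request("GET", f"/prices/{sku}")
        response = connection.getresponse()
        if response.status != 200:
            raise LookupError(sku)
        return json.loads(response.read())["cents"]
    finally:
        connection.close()


if __name__ == "__main__":
    server = start_price_service()
    host, port = server.server_address
    print(fetch_price(host, port, "shirt"))
    server.shutdown()
    server.server_close()
```

Because the consumer depends only on the HTTP contract, the pricing service could be scaled, rewritten, or replaced without any change to `fetch_price`.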

It can be challenging to determine where to begin when migrating from a large legacy application toward microservices. Migrate gradually, by splitting off small parts of the monolith into separate services, rather than trying to reimplement everything all at once. The first candidates for splitting off into microservices are likely to be those parts of the application with issues that need to be addressed, such as performance or reliability issues, because it makes sense to begin by redeveloping the parts of the application that will benefit most from being migrated to the cloud.

Another challenge with splitting up monolithic applications is deciding on the granularity for the new services—just how small should each service be? Too large and you’ll be dealing with several monoliths instead of just the one. Too small and managing them will become a nightmare. Services should be split up so that they each implement a single business capability. You can apply the Domain-Driven Design (DDD) technique of context mapping to identify bounded contexts (conceptual boundaries) within a business domain and the relationship between them. From this, you can derive the microservices and the connections between them.
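As a rough illustration of deriving services from a context map, the sketch below records each bounded context together with the contexts it depends on, and then lists one candidate service per context plus the connections between them. The context names and the one-service-per-context rule are assumptions made for this example, not the outcome of a real domain analysis.

```python
from dataclasses import dataclass, field


@dataclass
class BoundedContext:
    """One conceptual boundary in the business domain (hypothetical example)."""
    name: str
    capability: str
    upstream: list = field(default_factory=list)  # contexts this one depends on


# A made-up context map for a small ecommerce domain.
CONTEXT_MAP = [
    BoundedContext("catalog", "browse products"),
    BoundedContext("pricing", "quote prices", upstream=["catalog"]),
    BoundedContext("ordering", "place orders", upstream=["catalog", "pricing"]),
]


def derive_services(context_map):
    """Return one candidate service per context and the API connections needed."""
    services = [f"{context.name}-service" for context in context_map]
    connections = [
        (f"{context.name}-service", f"{dependency}-service")
        for context in context_map
        for dependency in context.upstream
    ]
    return services, connections
```

Calling `derive_services(CONTEXT_MAP)` yields three candidate services and three caller-to-callee connections, which gives a starting point for discussing granularity rather than a final design.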

Microservices can be stateful or stateless. Stateless services do not need to persist any state, whereas stateful services persist state; for example, to a database, file system, or key-value store. Stateless services are often preferred in a microservices architecture, because it is easy to scale stateless services by adding more instances. However, some parts of an application necessarily need to persist state, so these parts should be separated from the stateless parts into stateful services. Scaling stateful services requires a little more coordination; however, stateful services can make use of cloud data stores or make use of persistent volumes within a container environment.
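The distinction can be sketched as follows. `quote_total` is stateless because its result depends only on the request, whereas `CartService` is stateful and persists carts in a store shared by all of its instances. The `KeyValueStore` class is an in-memory stand-in for an external data store and, like the other names here, is hypothetical.

```python
class KeyValueStore:
    """In-memory stand-in for an external key-value store (assumption)."""

    def __init__(self):
        self._data = {}

    def get(self, key, default=None):
        return self._data.get(key, default)

    def put(self, key, value):
        self._data[key] = value


def quote_total(items):
    """Stateless: the answer depends only on the (price, quantity) pairs passed in."""
    return sum(price * quantity for price, quantity in items)


class CartService:
    """Stateful: every instance reads and writes carts in the shared store."""

    def __init__(self, store):
        self._store = store

    def add_item(self, cart_id, sku):
        cart = self._store.get(cart_id, [])
        self._store.put(cart_id, cart + [sku])

    def items(self, cart_id):
        return self._store.get(cart_id, [])
```

Any number of `quote_total` workers can be added without coordination. Additional `CartService` instances only behave consistently because they share the same store, which is where the extra coordination for stateful services comes in.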

Containers

As mentioned earlier, container environments are particularly well suited to microservices.

Containers are a form of operating system virtualization. Unlike hypervisor-based virtualization (i.e., traditional VMs), in which each VM contains a separate copy of the operating system, system binaries, and libraries as well as the application code, containers running on the same host share the host's operating system kernel. Containers are a lightweight environment for running the processes associated with each service, with process isolation so that each service runs independently from the others.

Each container encapsulates everything that the service needs to run—the application runtime, configuration, libraries, and the application code for the microservice itself. The idea behind containers is that you can build them once and then run them anywhere.

Almost 60 percent of the respondents to the Cloud Platform Survey reported that they are not running containers in production (Figure 3-1). However, more than half (61 percent) of those not currently running container-based environments in production are planning to adopt containers within the next five years (Figure 3-2).

Figure 3-1. Are you running container-based environments in production?

Figure 3-2. Are you planning to run a container-based environment in production?

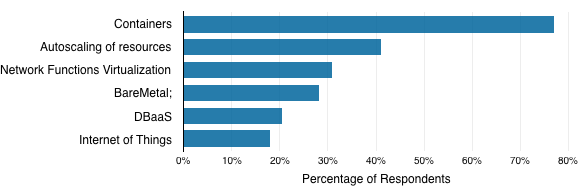

Of the survey respondents currently using OpenStack, 77 percent indicated that they plan to adopt containers in their OpenStack cluster, and 41 percent anticipate introducing autoscaling of resources (Figure 3-3).

Figure 3-4 shows the top motivations for running containers reported by the 178 respondents planning to adopt them. Common motivations for the majority of these respondents included flexible scaling of services (65 percent of respondents), resource efficiency (53 percent), and migrating to microservices (51 percent).

Figure 3-3. Which new/emerging technologies are you planning to use in your OpenStack cluster?

Figure 3-4. Motivations for planning to run container-based environments in production

Forty-five percent of the 178 respondents planning to adopt containers indicated that they will adopt Docker. Other popular container technologies include EC2 Container Service, runc, rkt, and Mesos containers.

The traditional Platform as a Service (PaaS) approach for configuring application environments is via buildpacks. Originally designed for Heroku, buildpacks provide runtime support for applications running on the PaaS, for example by including scripts that download dependencies and configure the application to communicate with services. However, you can also launch PaaS containers from images; for example, Docker containers are launched from images created from Dockerfiles.

PaaS

Container technologies enable the journey toward a microservices architecture. Containers are lightweight environments for running microservices. Moving applications into containers, with continuous service delivery, standardizes the way that applications are delivered. Rather than IaaS running a monolithic application with the technology stack for the application largely unchanged, an entirely new technology stack is required for the microservices, and so PaaS is adopted. PaaS provides platform abstraction to make it easier for development teams to deploy applications, allowing them to focus on development and deployment of their applications rather than DevOps.

Only 21 percent of survey respondents are currently running PaaS in production, compared to 74 percent running IaaS (Figure 3-5).

Figure 3-5. Are you running PaaS environments in production?

However, 34 percent of those not currently using PaaS in production indicated that they do intend to adopt PaaS. The primary motivation reported by those 132 respondents planning to adopt PaaS was streamlining development and deployment, reported by 66 percent of respondents. Other top motivations included resource efficiency, ease of use, establishing company standards, and security (Figure 3-6).

Figure 3-6. Motivations for adopting PaaS

Many PaaS providers are also Containers as a Service (CaaS) providers. Containers have become the industry standard for implementing microservices on both public and private cloud platforms.

Of the 104 respondents who are running PaaS in production, 59 percent run their PaaS on a public cloud (Figure 3-7).

Figure 3-7. Where do you run your PaaS environment?

Adopting containers and a PaaS helps to streamline the release process, resulting in faster development iterations to push code to production. However, as more services are split off into separate containers, those containers need to be orchestrated so that applications spanning multiple containers are coordinated. To make efficient use of cloud resources and to improve resilience, load balancing should be in place to distribute workloads across multiple service instances, and health management should be a priority to enable automated failover and self-healing.
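The combination of load balancing and health management can be sketched as a round-robin balancer that skips unhealthy instances. This is a simplified illustration: the `LoadBalancer` class and the `is_healthy` callback are hypothetical, and real platforms typically provide this behavior as part of their orchestration layer.

```python
class LoadBalancer:
    """Round-robin over service instances, skipping any that fail a health check."""

    def __init__(self, instances, is_healthy):
        self._instances = list(instances)
        self._is_healthy = is_healthy  # callback: instance -> bool (assumption)
        self._next = 0

    def pick(self):
        """Return the next healthy instance, or raise if none is available."""
        total = len(self._instances)
        for offset in range(total):
            index = (self._next + offset) % total
            candidate = self._instances[index]
            if self._is_healthy(candidate):
                # Resume after the chosen instance on the following call.
                self._next = (index + 1) % total
                return candidate
        raise RuntimeError("no healthy instances available")
```

With three instances and one marked as down, successive calls to `pick()` alternate between the two healthy instances, and the failed instance rejoins the rotation as soon as its health check passes again.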

Polyglot Architectures

With microservices, services communicate via high-level APIs, and so unlike a monolithic application, services implementing each part of the application do not need to use the same technology stack. Development teams have the autonomy to make architecture decisions that affect only their own services and APIs. They can employ different technologies for different purposes in the architecture instead of having to conform to a technology that doesn’t fit. For example, a team might implement a messaging queue service using Node.js and a NoSQL cloud data store, whereas a team responsible for a geolocation service might implement it using Python.

Independent Release Cycles

Splitting a monolith into smaller decoupled services also means that development teams are no longer as dependent on one another. Teams might choose to release their services on a schedule that suits them. However, this flexibility comes at the price of additional complexity: when each team is operating with independent release cycles, it becomes more difficult to keep track of exactly what services and which version(s) of each service are live at any given time. Communication processes need to be established to ensure that DevOps and other development teams are aware of the current state of the environment and are notified of any changes. Automated testing should also be adopted within a Continuous Integration/Continuous Delivery (CI/CD) pipeline, to ensure that teams are notified as soon as possible if any changes to services on which they depend have resulted in issues.
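One way to keep track of what is live is a shared release registry that records deployed versions and notifies interested teams of every change. The sketch below is a minimal in-memory illustration; the `ReleaseRegistry` class and its methods are hypothetical, and in practice this information usually comes from the deployment platform or CI/CD tooling.

```python
from collections import defaultdict


class ReleaseRegistry:
    """Records which versions of each service are live and notifies subscribers."""

    def __init__(self):
        self._live = defaultdict(set)
        self._subscribers = []

    def subscribe(self, callback):
        """Register a callable that receives a message for every change."""
        self._subscribers.append(callback)

    def deploy(self, service, version):
        self._live[service].add(version)
        self._notify(f"{service} {version} deployed")

    def retire(self, service, version):
        self._live[service].discard(version)
        self._notify(f"{service} {version} retired")

    def live_versions(self, service):
        return sorted(self._live.get(service, set()))

    def _notify(self, message):
        for callback in self._subscribers:
            callback(message)
```

A team that depends on the cart service could subscribe with a callback that triggers its own automated tests whenever a new cart version is deployed.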

Monitoring

Microservices architectures by nature introduce new challenges to cloud computing and monitoring:

- Granularity and locality

It is an architectural challenge to define the scope and boundaries of individual microservices within an application. Monitoring can help to identify tightly coupled or chatty services by looking at the frequency of service calls based on user or system behavior. Architects might then decide to combine two services into one or use platform mechanisms to guarantee colocation (for example, Kubernetes pods).

- Impacts of remote function calls

In-memory function calls in monoliths turn into remote service calls in the cloud, where the payload needs to include the actual data rather than only in-memory object references. This raises a number of issues that depend heavily on the locality and chattiness of a service: How large is the payload compared to the in-memory reference? How many resources are used for marshalling/unmarshalling and for encoding? How should large responses be handled? Should there be caching or pagination? Is this done locally or remotely? Monitoring provides valuable empirical baselines and trend data on all these questions.

- Network monitoring

Network monitoring moves into the limelight as calls between microservices usually traverse the network. Software-Defined Networks (SDNs) and overlay networks become more important with PaaS and dynamic deployments. Although maintenance and administration requirements for the underlying physical network components (cables, routers, and so on) decline, virtual networks need more attention because they come with network and computing overhead.

- Polyglot technologies

Monitoring solutions need to be able to cover polyglot technologies as microservice developers move away from a one-language-fits-all approach. They need to trace transactions across different technologies such as a mobile frontend, a Node.js API gateway, a Java or .NET backend, and a MongoDB database.

- Container monitoring

With the move toward container technologies, monitoring needs to become “container-aware”; that is, it needs to cover and monitor containers and the services inside them automatically. Because containers are started dynamically (for example, by orchestration tools like Kubernetes), static configuration of monitoring agents is no longer feasible.

- Platform-aware monitoring

Furthermore, monitoring solutions need the capabilities to distinguish between the performance of the application itself and the performance of the dynamic infrastructure. For example, microservice calls over the network have latency. However, the control plane (e.g., Kubernetes Master) also uses the network. This could be discarded as background noise, but it is there and can have an impact. In general, cloud platform technologies are still in their infancy and emerging technologies need to be monitored very closely because they have the potential for catastrophic failures.

- Monitoring as communications tool

Microservices are typically developed and operated by many independent teams with different agendas. Using an integrated monitoring solution that covers the engineering process from development through operation not only allows for better problem analysis but also has significant benefits for establishing and nurturing an agile DevOps culture and for providing business executives with the high-level views they need.
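The idea of spotting chatty services from call frequency, mentioned under granularity and locality, can be sketched with a simple count over a call log. The log format of `(caller, callee)` pairs, the `chatty_pairs` function, and the threshold are assumptions for illustration; a monitoring product would derive this from traced transactions.

```python
from collections import Counter


def chatty_pairs(call_log, requests_handled, threshold):
    """Return (caller, callee, calls_per_request) for pairs above the threshold.

    call_log is an iterable of (caller, callee) tuples observed while the
    system handled `requests_handled` user requests.
    """
    counts = Counter(call_log)
    return sorted(
        (caller, callee, count / requests_handled)
        for (caller, callee), count in counts.items()
        if count / requests_handled > threshold
    )
```

A pair that averages several remote calls per user request is a candidate for merging the two services, batching the calls, or colocating the services.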

Cultural Impact on the Organization

Just as adopting microservices involves breaking applications into smaller parts, migrating to microservices architecture also involves breaking down silos within the organization—opening up communication, sharing responsibility, and fostering collaboration. According to Conway’s Law, organizations produce application designs that mimic the organization’s communication structure. When moving toward microservices, some businesses are applying an “inverse Conway maneuver”—reorganizing their organizational structure into small teams with lightweight communication overheads.

Any organization that designs a system will inevitably produce a design whose structure is a copy of the organization’s communication structure.

Melvin Conway

Decentralized governance is desirable for microservices architectures: autonomous teams have the flexibility to release according to their own schedule and to select technologies that fit their services best. The extension of this strategy is that teams take on responsibility for all aspects of their code, not just for shipping it; this is the “you build it, you run it” approach. Rather than having a DevOps team (another silo to be broken down), teams develop a DevOps mindset and shift toward cross-functional teams, in which team members have a mix of expertise. However, although DevOps might be absorbed into the development teams, there is still a need for Ops to support development teams and to manage and provide the platform and tools that enable them to automate their CI/CD and self-service their deployments.

Although communication between teams is reduced, teams still need to know their microservice consumers (e.g., other development teams), and ensure that their service APIs continue to meet their needs while having the flexibility to update their service and APIs. Consumer-driven contracts can be used to document the expectations of each consumer by autogenerating the documentation from their code where possible so that it does not grow stale, and real examples of how other teams are using the APIs can also be added to test suites.
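A consumer-driven contract can be as simple as a record of the fields each consumer relies on, checked against the provider's response in the provider's test suite. The sketch below assumes hypothetical consumer teams and field names, and checks only field presence and type; dedicated contract-testing tools cover far more.

```python
# Hypothetical expectations, one entry per consuming team.
CONTRACTS = {
    "checkout-team": {"sku": str, "cents": int},
    "reporting-team": {"sku": str, "currency": str},
}


def contract_violations(response, contracts):
    """List every consumer expectation that the provider response fails to meet."""
    violations = []
    for consumer, expected in sorted(contracts.items()):
        for field_name, field_type in sorted(expected.items()):
            if field_name not in response:
                violations.append(f"{consumer}: missing '{field_name}'")
            elif not isinstance(response[field_name], field_type):
                violations.append(
                    f"{consumer}: '{field_name}' is not {field_type.__name__}"
                )
    return violations
```

Running this check in the provider's CI pipeline means that a change that would break a consumer is flagged before it is released, while the provider remains free to change anything that no contract mentions.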

There might also be the need for some minimal centralized governance: as the number of teams increases and the technology stack becomes more diverse, the microservices environment can become more unpredictable and more complex, and the skills required to manage microservices-based applications become more demanding. Just because a team wants to use a particular technology does not mean that the Ops team or platform will be willing to support it.

Adopting containers is one strategy for dealing with this complexity. Containers are designed to be built once and run anywhere, so they allow the development team to focus on taking care of its apps, and Ops doesn’t need to know what is inside of the containers to keep them running.

Challenges Companies Face Adopting Microservices

The biggest challenges identified by survey respondents with PaaS in production include integrating with (legacy) systems, mastering complexity of application dependencies, technology maturity, and scaling application instances (Figure 3-8).

Figure 3-8. What are the biggest challenges with your PaaS environment?

Specifically looking at those respondents using container-based environments, the biggest challenge reported by the 198 respondents using containers was technology maturity (Figure 3-9). Container technologies themselves continue to evolve, with container management and orchestration toolchains in particular changing rapidly and sometimes in ways that are not compatible with other container systems. Efforts by groups like the Open Container Initiative to describe and standardize behavior of container technologies might help to ease this concern as container technologies mature.

Once again, mastering complexity of dependencies was a concern, identified by 44 percent of the respondents using containers. With organizational changes associated with adopting microservices leading to teams adopting a DevOps mindset, teams can independently deploy, which also increases complexity of the microservices environment. This change in mindset was a concern for 41 percent of respondents.

Figure 3-9. What are the biggest challenges with your container-based environment?

The other top concern was application monitoring (41 percent of respondents). With multiple development teams working faster and application complexity and dependencies increasing as applications scale, application monitoring, and service-level monitoring in particular, is essential to ensure that code and service quality remain high. Other common concerns included container scaling, volume management, and blue/green deployments.

Blue/green deployment is a strategy for minimizing downtime and reducing risk when updating applications. Blue/green deployments are possible when the platform runs two parallel production environments (named blue and green for ease of reference). Only one environment is active at any given time (say, blue), while the other (green) remains idle. A router sits in front of the two environments, directing traffic to the active production environment (blue).

Having two almost identical environments helps to minimize downtime and reduce risk when performing rolling updates. You can release new features into the inactive green environment first. With this release strategy, the inactive (green) environment acts as a staging environment, allowing final testing of features to be performed in an environment that is identical to the blue production environment, thus eliminating the need for a separate preproduction environment. When ready, the route to the production environment is flipped at the router so that production traffic will begin to be directed to the green environment.

The now inactive blue environment can be kept in its existing state for a short period of time, to make it possible to rapidly switch back if anything goes wrong during or shortly after the update.
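The router flip at the heart of a blue/green deployment can be sketched in a few lines. In this simplified model each environment is just a callable that handles a request; the `BlueGreenRouter` class and its method names are hypothetical.

```python
class BlueGreenRouter:
    """Routes all traffic to the active environment; the other one stays idle."""

    def __init__(self, blue, green):
        self._environments = {"blue": blue, "green": green}
        self.active = "blue"

    @property
    def idle(self):
        return "green" if self.active == "blue" else "blue"

    def deploy_to_idle(self, handler):
        """Release a new version into the idle environment for final testing."""
        self._environments[self.idle] = handler

    def flip(self):
        """Switch production traffic to the idle environment (or back again)."""
        self.active = self.idle

    def handle(self, request):
        return self._environments[self.active](request)
```

Because the previous environment is left untouched after `flip()`, calling `flip()` a second time acts as the rapid rollback described above.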

PaaS offers different mechanisms to create two logical environments on the fly. In fact, the blue/green environments coexist in the production environment and upgrades are rolled out by replacing instance by instance with newer versions. Although the same basics are applied, the entire procedure is controlled by code and can therefore be executed automatically, without human intervention. Furthermore, the rolling upgrade mechanism is not limited to services and containers (e.g., Kubernetes), but is also applicable to VMs (e.g., AWS CloudFormation).
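The instance-by-instance replacement can be sketched as a loop that upgrades one instance at a time and stops at the first failed health check. This is an illustrative model only: instances are represented by version strings, and the `rolling_upgrade` function and its `is_healthy` callback are assumptions rather than any platform's actual API.

```python
def rolling_upgrade(instances, new_version, is_healthy):
    """Replace instances one at a time, halting if a new instance is unhealthy.

    Returns (history, succeeded), where history holds a snapshot of the
    fleet after every step. is_healthy(version, index) is a hypothetical
    health check for the instance just replaced.
    """
    current = list(instances)
    history = [tuple(current)]
    for index in range(len(current)):
        previous = current[index]
        current[index] = new_version
        if not is_healthy(new_version, index):
            current[index] = previous  # restore this instance and stop
            history.append(tuple(current))
            return history, False
        history.append(tuple(current))
    return history, True
```

During the upgrade, old and new versions serve traffic side by side, which is why the two logical environments are said to coexist in production.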

Conclusion

Adopting a microservices architecture allows businesses to streamline delivery of stable, scalable applications that make more efficient use of cloud resources. Shifting from a monolithic application toward microservices is an incremental process that requires and results in cultural change as well as architectural change as teams become smaller and more autonomous, with polyglot architectures and independent release cycles becoming more common.

- Adopting containers helps deal with increased complexity, and when combined with CI/CD introduced in the first stage of cloud-native evolution, results in faster development iterations to push high-quality services to production.

- Identifying and mastering complexity of application dependencies remain key challenges during this phase of cloud-native evolution.

- Monitoring can help to better understand dependencies while applications are being migrated to a microservices architecture, and to ensure that applications continue to operate effectively throughout the process.

Case Study: Prep Sportswear

Prep Sportswear is a Seattle-based textile printing and ecommerce company. It produces apparel with custom logos and mascots for thousands of US schools, colleges, sports clubs, the military, and the government. Its online marketplace allows customers to design and purchase products related to their favorite schools or organizations.

In 2014, Prep Sportswear had a serious scaling and quality problem. On peak volume days like Black Friday or Cyber Monday, it took up to 22 hours just to process the incoming orders before the production and fulfillment process even started. On top of that, the company faced regular breakdowns which required the lead software engineer in Seattle to be on call around the clock. During one season in particular, the software engineer’s phone rang 17 days in a row at 3 o’clock in the morning because something was wrong with the platform and the production facility came to a halt!

This was detrimental to the goal of shipping an online order within four days. Prep Sportswear did not have preprinted products on the shelf. Clearly something had to be done to make the software platform faster, more scalable, and less error prone. In 2015, the engineering team decided to move to a cloud-based technology stack with microservices. This process involved dealing with technical debt in their existing monolithic application, restructuring the development team to implement an Agile approach, and adopting new bug-tracking and CD tools to integrate with the existing technology stack:

- Monoliths as technical debt

The developers began with a custom-built monolith with 4.5 million lines of code, all written from the ground up by an external vendor. It included the ecommerce platform, the Enterprise Resource Planning (ERP) system, manufacturing device integration, and design pattern manipulation. And it represented a huge technical debt because all of the original developers had left, documentation was poor, and some of the inline comments were in Russian.

- Agile teams

To put the new vision into action, Prep Sportswear needed to lay the groundwork by restructuring the development team and implementing an Agile DevOps approach to software engineering. The new team is very slim, with only 10 engineers in software development, 1 in DevOps, and 2 in operations.

- Technology stack

The old bug-tracking tool was replaced by JIRA, and TeamCity became the team’s CD technology; it integrated well with the existing technology stack. The application is written in C# and .NET. For the cloud infrastructure, the team chose OpenStack.

Converting the large monolithic application into a modern, scalable microservice architecture turned out to be tricky and it had to be done one step at a time. Here are two examples of typical challenges:

- The first standalone service was a new feature: an inventory system. Traditionally, Prep Sportswear had produced just-in-time, but it turned out that the fulfillment process would be faster with a small stock and a smart inventory system. The main challenge was the touchpoint with the rest of the monolith. At first, tracking inventory against orders worked just as intended. But there was another part of the system outside of the new service that allowed customers to cancel an order and replace it with a new request. This didn’t happen often, but it introduced serious errors into the new service.

- A second example was pricing as part of a new purchasing cart service. The price of a shirt, for example, depended on whether logos needed to be printed with ink, embroidered, or appliquéd. For embroidery works, the legacy system called the design module to retrieve the exact count of stitches needed, which in turn determined the price of the merchandise. This needed to be done for every item in the cart and it slowed down the service tremendously.

In the monolith, the modules were so entangled that it was difficult to break out individual functionalities. The standard solution to segregate the existing data was technically not feasible. Rather, a set of practices helped to deal with the technical debt of the legacy system:

- Any design of a new microservice and its boundaries started with a comprehensive end-to-end analysis of business processes and user practices.

- A solid and fast CD system was crucial for deploying fixes for unforeseen problems quickly and reliably.

- An outdated application monitoring tool was replaced with a modern platform that allowed the team to oversee the entire software production pipeline, perform deep root-cause analysis, and even scale services proactively by using trend data.

Key Takeaways

The transition from a monolith to microservices is challenging because new services can break when connected with the legacy system. However, at Prep Sportswear the overall system became significantly more stable and scalable. During the last peak shopping period the system broke only once. The order fulfillment time went down from six to three days and the team is already working on the next goal: same-day shipping.