Chapter 3. Dynamic Multipoint VPN

This chapter covers the following topics:

Generic routing encapsulation (GRE) tunnels

Next Hop Resolution Protocol (NHRP)

Dynamic Multipoint VPN (DMVPN) tunnels

DMVPN failure and detection and high availability

DMVPN dual-hub and dual-cloud designs

Sample IWAN DMVPN transport models

Dynamic Multipoint VPN (DMVPN) is a Cisco solution that provides a scalable VPN architecture. DMVPN uses generic routing encapsulation (GRE) for tunneling, Next Hop Resolution Protocol (NHRP) for on-demand forwarding and mapping information, and IPsec for security. Together they form a secure overlay network that addresses the deficiencies of site-to-site VPN tunnels while providing full-mesh connectivity. This chapter explains the underlying technologies and components of deploying DMVPN for IWAN.

DMVPN provides the following benefits to network administrators:

Zero-touch provisioning: DMVPN hubs do not require additional configuration when additional spokes are added. DMVPN spokes can use a templated tunnel configuration.

Scalable deployment: Minimal peering and minimal permanent state on spoke routers allow for massive scale. Network scale is not limited by device (physical, virtual, or logical).

Spoke-to-spoke tunnels: DMVPN provides full-mesh connectivity while configuring only the initial spoke-to-hub tunnel. Dynamic spoke-to-spoke tunnels are created as needed and torn down when no longer needed. There is no packet loss while building dynamic on-demand spoke-to-spoke tunnels after the initial spoke-to-hub tunnels are established. A spoke maintains forwarding states only for spokes with which it is communicating.

Flexible network topologies: DMVPN operation does not make any rigid assumptions about either the control plane or data plane overlay topologies. The DMVPN control plane can be used in a highly distributed and resilient model that allows massive scale and avoids a single point of failure or congestion. At the other extreme, it can also be used in a centralized model for a single point of control.

Multiprotocol support: DMVPN supports IPv4, IPv6, and MPLS as the overlay or transport network protocol.

Multicast support: DMVPN allows multicast traffic to flow on the tunnel interfaces.

Adaptable connectivity: DMVPN routers can establish connectivity behind Network Address Translation (NAT). Spoke routers can use dynamic IP addressing such as Dynamic Host Configuration Protocol (DHCP).

Standardized building blocks: DMVPN uses industry-standardized technologies (NHRP, GRE, and IPsec) to build an overlay network. Because engineers are often already familiar with these technologies, the learning curve is minimized and troubleshooting is easier.

Generic Routing Encapsulation (GRE) Tunnels

A GRE tunnel provides connectivity to a wide variety of network-layer protocols by encapsulating and forwarding those packets over an IP-based network. The original use of GRE tunnels was to provide a transport mechanism for nonroutable legacy protocols such as DECnet, Systems Network Architecture (SNA), or IPX. GRE tunnels have been used as a quick workaround for bad routing designs, or as a method to pass traffic through a firewall or ACL. DMVPN uses multipoint GRE (mGRE) encapsulation and supports dynamic routing protocols, which eliminates many of the support issues associated with other VPN technologies. GRE tunnels are classified as an overlay network because the GRE tunnel is built on top of an existing transport network, also known as an underlay network.

Additional header information is added to the packet when the router encapsulates the packet for the GRE tunnel. The new header information contains the remote endpoint IP address as the destination. The new IP headers allow the packet to be routed between the two tunnel endpoints without inspection of the packet’s payload. After the packet reaches the remote endpoint, the GRE headers are removed, and the original packet is forwarded out of the remote router.
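To make the encapsulation step concrete, the following Python sketch builds the headers described above. It is an illustration under stated assumptions, not how a router implements GRE: it assumes a basic GRE header with no checksum, key, or sequence number (4 bytes, protocol type 0x0800 for an IPv4 payload) behind a new 20-byte outer IPv4 header that carries IP protocol 47, and it reuses the R11 and R31 transport addresses from the topology used later in this chapter. The function names `gre_encapsulate` and `gre_decapsulate` are hypothetical.

```python
import socket
import struct

GRE_PROTO = 47           # IP protocol number assigned to GRE
ETHERTYPE_IPV4 = 0x0800  # GRE protocol type for an IPv4 payload


def ipv4_checksum(header: bytes) -> int:
    """Internet checksum (one's complement sum) over an even-length header."""
    total = 0
    for i in range(0, len(header), 2):
        total += (header[i] << 8) | header[i + 1]
    while total > 0xFFFF:
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def gre_encapsulate(inner: bytes, src: str, dst: str, ttl: int = 255) -> bytes:
    """Wrap the original packet in a basic GRE header and a new outer IPv4 header."""
    # Basic GRE header: flags/version word of 0, then the payload protocol type.
    gre = struct.pack("!HH", 0, ETHERTYPE_IPV4)
    total_len = 20 + len(gre) + len(inner)
    # Outer IPv4 header; the destination is the remote tunnel endpoint.
    outer = struct.pack(
        "!BBHHHBBH4s4s",
        0x45, 0, total_len,    # version 4 / IHL 5, TOS, total length
        0, 0,                  # identification, flags/fragment offset
        ttl, GRE_PROTO, 0,     # TTL, protocol 47, checksum placeholder
        socket.inet_aton(src), socket.inet_aton(dst),
    )
    csum = ipv4_checksum(outer)
    outer = outer[:10] + struct.pack("!H", csum) + outer[12:]
    return outer + gre + inner


def gre_decapsulate(packet: bytes) -> bytes:
    """Strip the outer IPv4 header and the basic GRE header, returning the original packet."""
    ihl = (packet[0] & 0x0F) * 4
    return packet[ihl + 4:]


inner_packet = bytes(60)  # placeholder for a 60-byte original packet
wrapped = gre_encapsulate(inner_packet, "172.16.11.1", "172.16.31.1")
print(len(wrapped) - len(inner_packet))  # 24
```

The transit routers only ever read the outer header, which is why the payload is not inspected along the way; the 24 bytes printed here are the minimum GRE overhead that the MTU guidance in this section accounts for.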

The following sections explain the fundamentals and configuration of a point-to-point GRE tunnel before explaining the multipoint GRE tunnels that are a component of DMVPN.

GRE Tunnel Configuration

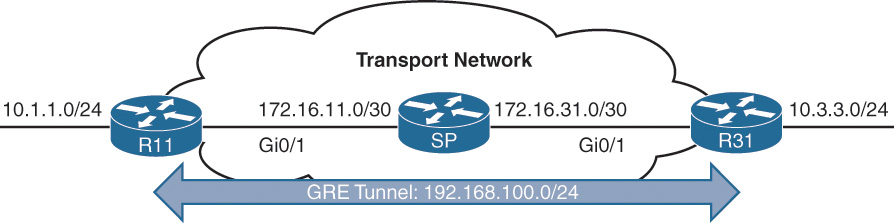

Figure 3-1 illustrates the configuration of a GRE tunnel. The 172.16.0.0/16 network range is the transport (underlay) network, and 192.168.100.0/24 is used for the GRE tunnel (overlay network).

In this topology, R11, R31, and the SP router have enabled Routing Information Protocol (RIP) on all the 10.0.0.0/8 and 172.16.0.0/16 network interfaces. This allows R11 and R31 to locate the remote router’s encapsulating interface. R11 uses the SP router as a next hop to reach the 172.16.31.0/30 network, and R31 uses the SP router as a next hop toward the 172.16.11.0/30 network.

Note

The RIP configuration does not include the 192.168.0.0/16 network range.

Example 3-1 shows the routing table of R11 before the GRE tunnel is created. Notice that the 10.3.3.0/24 network is reachable by RIP and is two hops away.

Example 3-1 R11 Routing Table Without the GRE Tunnel

R11# show ip route
! Output omitted for brevity
Codes: L - local, C - connected, S - static, R - RIP, M - mobile, B - BGP
       D - EIGRP, EX - EIGRP external, O - OSPF, IA - OSPF inter area

Gateway of last resort is not set

      10.0.0.0/8 is variably subnetted, 3 subnets, 2 masks
C        10.1.1.0/24 is directly connected, GigabitEthernet0/2
R        10.3.3.0/24 [120/2] via 172.16.11.2, 00:00:01, GigabitEthernet0/1
      172.16.0.0/16 is variably subnetted, 3 subnets, 2 masks
C        172.16.11.0/30 is directly connected, GigabitEthernet0/1
R        172.16.31.0/30 [120/1] via 172.16.11.2, 00:00:10, GigabitEthernet0/1

R11# trace 10.3.3.3 source 10.1.1.1
Tracing the route to 10.3.3.3
  1 172.16.11.2 0 msec 0 msec 1 msec
  2 172.16.31.3 0 msec

The steps for configuring GRE tunnels are as follows:

Step 1. Create the tunnel interface.

Create the tunnel interface with the global configuration command interface tunnel tunnel-number.

Step 2. Identify the tunnel source.

Identify the local source of the tunnel with the interface parameter command tunnel source {ip-address | interface-id}. The tunnel source interface indicates the interface that will be used for encapsulation and decapsulation of the GRE tunnel. The tunnel source can be a physical interface or a loopback interface. A loopback interface can provide reachability if one of the transport interfaces were to fail.

Step 3. Identify the remote destination IP address.

Identify the tunnel destination with the interface parameter command tunnel destination ip-address. The tunnel destination is the remote router’s underlay IP address toward which the local router sends GRE packets.

Step 4. Allocate an IP address to the tunnel interface.

An IP address is allocated to the interface with the command ip address ip-address subnet-mask.

Step 5. Define the tunnel bandwidth (optional).

Virtual interfaces have no physical link speed, so a reference bandwidth should be configured for routing protocols that use bandwidth in best-path calculation to make an intelligent decision. Bandwidth is also used for QoS configuration on the interface. Bandwidth is defined with the interface parameter command bandwidth 1-10000000, which is measured in kilobits per second.

Step 6. Specify a GRE tunnel keepalive (optional).

Tunnel interfaces are GRE point-to-point (P2P) by default, and the line protocol enters an up state when the router detects that a route to the tunnel destination exists in the routing table. If the tunnel destination is not in the routing table, the tunnel interface (line protocol) enters a down state.

Tunnel keepalives ensure that bidirectional communication exists between tunnel endpoints to keep the line protocol up. Otherwise the router must rely upon routing protocol timers to detect a dead remote endpoint.

Keepalives are configured with the interface parameter command keepalive [seconds [retries]]. The default timer is 10 seconds and three retries.

Step 7. Define the IP maximum transmission unit (MTU) for the tunnel interface (optional).

The GRE tunnel adds a minimum of 24 bytes to the packet size to accommodate the headers that are added to the packet. Specifying the IP MTU on the tunnel interface makes the router perform fragmentation in advance, rather than requiring the host to detect and specify the packet MTU. IP MTU is configured with the interface parameter command ip mtu mtu.

Table 3-1 displays the amount of encapsulation overhead for various tunnel techniques. The header size may change based upon the configuration options used. For all of our examples, the IP MTU is set to 1400.
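The arithmetic behind these MTU values can be checked with a short Python sketch. It assumes a 1500-byte Ethernet MTU on the transport, a 20-byte outer IPv4 header, a 4-byte basic GRE header, and the optional 4-byte tunnel key described later in this chapter. IPsec overhead is deliberately left as whatever headroom remains, because it depends on the configuration options used and is not modeled here. All names are illustrative.

```python
TRANSPORT_MTU = 1500   # assumed Ethernet MTU on the underlay
OUTER_IPV4 = 20        # new outer IPv4 header (no options)
GRE_BASE = 4           # basic GRE header: flags/version + protocol type
GRE_KEY = 4            # optional tunnel key field
TUNNEL_IP_MTU = 1400   # IP MTU used for all examples in this chapter


def gre_overhead(with_key: bool = False) -> int:
    """Bytes added by GRE-over-IPv4 encapsulation."""
    return OUTER_IPV4 + GRE_BASE + (GRE_KEY if with_key else 0)


def tunnel_transport_mtu(with_key: bool = False, underlay_mtu: int = TRANSPORT_MTU) -> int:
    """Largest original packet that fits in one underlay frame without fragmentation."""
    return underlay_mtu - gre_overhead(with_key)


# Bytes left under a 1500-byte underlay once a 1400-byte packet gets GRE plus a key.
headroom = TRANSPORT_MTU - TUNNEL_IP_MTU - gre_overhead(with_key=True)

print(gre_overhead())          # minimum GRE overhead
print(tunnel_transport_mtu())  # compare with "Tunnel transport MTU" in Example 3-3
print(headroom)                # space left for IPsec or other headers
```

With no key configured, 1500 minus 24 gives 1476 bytes, the same tunnel transport MTU that Example 3-3 reports. Setting the IP MTU to 1400 leaves roughly 72 bytes of headroom once a tunnel key is added, which is the reason a conservative value is used when IPsec is layered on top.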

GRE Example Configuration

Example 3-2 provides the GRE tunnel configuration for R11 and R31. EIGRP is enabled on the LAN (10.0.0.0/8) and GRE tunnel (192.168.100.0/24) networks. RIP is enabled on the LAN (10.0.0.0/8) and transport (172.16.0.0/16) networks but is not enabled on the GRE tunnel. R11 and R31 become direct EIGRP peers on the GRE tunnel because all the network traffic is encapsulated between them.

Because EIGRP has a lower administrative distance (AD) of 90 than RIP's 120, the routers use the route learned via the EIGRP connection over the GRE tunnel rather than the route learned via RIP from the transport network. Notice that the EIGRP configuration uses named mode. EIGRP named mode provides clarity and keeps the entire EIGRP configuration in one centralized location. EIGRP named mode is the only method of EIGRP configuration that supports some of the newer features such as stub site.

R11
interface Tunnel100
 bandwidth 4000
 ip address 192.168.100.11 255.255.255.0
 ip mtu 1400
 keepalive 5 3
 tunnel source GigabitEthernet0/1
 tunnel destination 172.16.31.1
!
router eigrp GRE-OVERLAY
 address-family ipv4 unicast autonomous-system 100
  topology base
  exit-af-topology
  network 10.0.0.0
  network 192.168.100.0
 exit-address-family
!
router rip
 version 2
 network 10.0.0.0
 network 172.16.0.0
 no auto-summary

R31
interface Tunnel100
 bandwidth 4000
 ip address 192.168.100.31 255.255.255.0
 ip mtu 1400
 keepalive 5 3
 tunnel source GigabitEthernet0/1
 tunnel destination 172.16.11.1
!
router eigrp GRE-OVERLAY
 address-family ipv4 unicast autonomous-system 100
  topology base
  exit-af-topology
  network 10.0.0.0
  network 192.168.100.0
 exit-address-family
!
router rip
 version 2
 network 10.0.0.0
 network 172.16.0.0
 no auto-summary

Now that the GRE tunnel is configured, the state of the tunnel can be verified with the command show interface tunnel number. Example 3-3 displays output from the command. Notice that the output includes the tunnel source and destination addresses, keepalive values (if any), and the tunnel line protocol state, and that the tunnel is a GRE/IP tunnel.

Example 3-3 Display of GRE Tunnel Parameters

R11# show interface tunnel 100
! Output omitted for brevity
Tunnel100 is up, line protocol is up
  Hardware is Tunnel
  Internet address is 192.168.100.1/24
  MTU 17916 bytes, BW 400 Kbit/sec, DLY 50000 usec,
     reliability 255/255, txload 1/255, rxload 1/255
  Encapsulation TUNNEL, loopback not set
  Keepalive set (5 sec), retries 3
  Tunnel source 172.16.11.1 (GigabitEthernet0/1), destination 172.16.31.1
   Tunnel Subblocks:
      src-track:
         Tunnel100 source tracking subblock associated with GigabitEthernet0/1
          Set of tunnels with source GigabitEthernet0/1, 1 member (includes
          iterators), on interface <OK>
  Tunnel protocol/transport GRE/IP
    Key disabled, sequencing disabled
    Checksumming of packets disabled
  Tunnel TTL 255, Fast tunneling enabled
  Tunnel transport MTU 1476 bytes
  Tunnel transmit bandwidth 8000 (kbps)
  Tunnel receive bandwidth 8000 (kbps)
  Last input 00:00:02, output 00:00:02, output hang never
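The keepalive line in this output (5 seconds, 3 retries) determines roughly how long a silent peer can go unnoticed. The Python sketch below approximates that window as the keepalive period multiplied by the retry count. This is a simplifying assumption for illustration; actual timing on a router can differ by up to a period depending on when the failure occurs relative to the timer.

```python
def keepalive_detection_time(period_s: int = 10, retries: int = 3) -> int:
    """Approximate seconds before a silent peer brings the tunnel line protocol down.

    Assumes the line protocol drops after `retries` consecutive keepalive
    periods pass without a reply from the remote endpoint.
    """
    if period_s <= 0 or retries <= 0:
        raise ValueError("period and retries must be positive")
    return period_s * retries


print(keepalive_detection_time())      # default timer: 10 seconds, three retries
print(keepalive_detection_time(5, 3))  # keepalive 5 3, as configured in Example 3-2
```

Halving the period from the default shortens detection from about 30 seconds to about 15 seconds, at the cost of twice as many keepalive packets on the tunnel.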

Example 3-4 displays the routing table of R11 after it has become an EIGRP neighbor with R31. Notice that R11 learns the 10.3.3.0/24 network directly from R31 via tunnel 100.

Example 3-4 R11 Routing Table with GRE Tunnel

R11# show ip route
! Output omitted for brevity
Codes: L - local, C - connected, S - static, R - RIP, M - mobile, B - BGP
       D - EIGRP, EX - EIGRP external, O - OSPF, IA - OSPF inter area

Gateway of last resort is not set

      10.0.0.0/8 is variably subnetted, 3 subnets, 2 masks
C        10.1.1.0/24 is directly connected, GigabitEthernet0/2
D        10.3.3.0/24 [90/38912000] via 192.168.100.31, 00:03:35, Tunnel100
      172.16.0.0/16 is variably subnetted, 3 subnets, 2 masks
C        172.16.11.0/30 is directly connected, GigabitEthernet0/1
R        172.16.31.0/30 [120/1] via 172.16.11.2, 00:00:03, GigabitEthernet0/1
      192.168.100.0/24 is variably subnetted, 2 subnets, 2 masks
C        192.168.100.0/24 is directly connected, Tunnel100
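The D entry for 10.3.3.0/24 is the result of administrative distance selection. The Python sketch below models that decision for a single prefix offered by both EIGRP and RIP. It assumes both offers have the same prefix length (when lengths differ, the longest match is chosen before AD is considered) and uses only the default AD values named in this section. The `Candidate` class and `install_best` function are hypothetical.

```python
from dataclasses import dataclass
from typing import List

# Default administrative distances for the route sources in this example.
ADMIN_DISTANCE = {"connected": 0, "eigrp": 90, "rip": 120}


@dataclass
class Candidate:
    prefix: str
    protocol: str
    next_hop: str
    interface: str


def install_best(candidates: List[Candidate]) -> Candidate:
    """Pick the route source with the lowest administrative distance for one prefix."""
    return min(candidates, key=lambda c: ADMIN_DISTANCE[c.protocol])


offers = [
    Candidate("10.3.3.0/24", "rip", "172.16.11.2", "GigabitEthernet0/1"),
    Candidate("10.3.3.0/24", "eigrp", "192.168.100.31", "Tunnel100"),
]
best = install_best(offers)
print(best.protocol, best.next_hop, best.interface)
```

The selected entry matches Example 3-4: the EIGRP route via 192.168.100.31 on Tunnel100 replaces the RIP route that pointed across the transport network.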

Example 3-5 verifies that traffic sourced from 10.1.1.1 takes tunnel 100 (192.168.100.0/24) to reach 10.3.3.3.

Example 3-5 Verification of the Path from R11 to R31

R11# traceroute 10.3.3.3 source 10.1.1.1
Tracing the route to 10.3.3.3
  1 192.168.100.31 1 msec * 0 msec

Note

Notice that from R11’s perspective, the network is only one hop away. The traceroute does not display all the hops in the underlay. In the same fashion, the packet’s time to live (TTL) is encapsulated as part of the payload. The original TTL decreases by only one for the GRE tunnel regardless of the number of hops in the transport network.
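The two TTL values involved can be modeled with a small Python function. It is a simplified sketch for a transit packet: it assumes the whole tunnel counts as one routed hop for the original packet, that each underlay router decrements only the outer header, and that the outer header starts at the Tunnel TTL of 255 shown in Example 3-3. The function name is hypothetical.

```python
from typing import Tuple


def cross_gre_tunnel(inner_ttl: int, underlay_hops: int, tunnel_ttl: int = 255) -> Tuple[int, int]:
    """Return (inner TTL after the tunnel, outer TTL on arrival at the remote endpoint)."""
    # The tunnel counts as a single routed hop for the original packet.
    inner_after = inner_ttl - 1
    # Underlay routers decrement only the outer header; the original header is payload to them.
    outer_on_arrival = tunnel_ttl - underlay_hops
    if inner_after <= 0 or outer_on_arrival <= 0:
        raise ValueError("packet would expire in transit")
    return inner_after, outer_on_arrival


for hops in (1, 5, 20):
    inner, outer = cross_gre_tunnel(64, hops)
    print(f"{hops:>2} underlay hops: inner TTL {inner}, outer TTL {outer}")
```

No matter how many underlay hops are added, the inner TTL drops by exactly one, which is why the traceroute in Example 3-5 shows a single hop.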

Next Hop Resolution Protocol (NHRP)

Next Hop Resolution Protocol (NHRP) is defined in RFC 2332 as a method to provide address resolution for hosts or networks (ARP-like capability) for non-broadcast multi-access (NBMA) networks such as Frame Relay and ATM. NHRP provides a method for devices to learn the protocol and NBMA addresses of other devices, thereby allowing them to communicate directly with each other.

NHRP is a client-server protocol that allows devices to register themselves over directly connected or disparate networks. NHRP next-hop servers (NHSs) are responsible for registering addresses or networks, maintaining an NHRP repository, and replying to any queries received from next-hop clients (NHCs). Communication between an NHC and an NHS is transactional in nature, consisting of requests and replies.

DMVPN uses multipoint GRE tunnels, which require a method of mapping tunnel IP addresses to transport (underlay) IP addresses. NHRP provides the technology for mapping those IP addresses. DMVPN spokes (NHCs) are statically configured with the IP addresses of the hubs (NHSs) so that they can register their tunnel and NBMA (transport) IP addresses with the hubs (NHSs). When a spoke-to-spoke tunnel is established, NHRP messages provide the necessary information for the spokes to locate each other so that they can build a spoke-to-spoke DMVPN tunnel. The NHRP messages also allow a spoke to locate a remote network. Cisco has added additional NHRP message types to those defined in RFC 2332 to provide some of the recent enhancements in DMVPN.
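The NHS role described here can be pictured as a lookup table keyed by tunnel (protocol) address. The Python sketch below is a toy model only: real NHRP exchanges Registration and Resolution Request/Reply messages and tracks holding times, none of which is modeled. The class and method names are hypothetical, and R41's transport address 172.16.41.1 is an assumed value for illustration.

```python
from typing import Dict, Optional


class NextHopServer:
    """Toy NHRP NHS: maps tunnel (protocol) addresses to transport (NBMA) addresses."""

    def __init__(self) -> None:
        self._cache: Dict[str, str] = {}

    def register(self, protocol_addr: str, nbma_addr: str) -> None:
        # A spoke (NHC) registers its tunnel IP together with its current transport IP.
        # Re-registering overwrites the entry, for example after a DHCP address change.
        self._cache[protocol_addr] = nbma_addr

    def resolve(self, protocol_addr: str) -> Optional[str]:
        # Answer a resolution query; None stands in for a negative reply.
        return self._cache.get(protocol_addr)


hub = NextHopServer()
hub.register("192.168.100.31", "172.16.31.1")  # R31
hub.register("192.168.100.41", "172.16.41.1")  # R41 (assumed transport address)
print(hub.resolve("192.168.100.41"))
```

A spoke that wants to reach 192.168.100.41 directly asks the hub for the matching NBMA address and then builds its GRE tunnel toward that transport address.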

All NHRP packets must include the source NBMA address, source protocol address, destination protocol address, and NHRP message type. The NHRP message types are explained in Table 3-2.

Note

The NBMA address refers to the transport network, and the protocol address refers to the IP address assigned to the overlay network (tunnel IP address or a network/host address).

NHRP messages can contain additional information that is included in the extension part of a message. Table 3-3 lists the common NHRP message extensions.

Dynamic Multipoint VPN (DMVPN)

DMVPN provides complete connectivity while simplifying configuration as new sites are deployed. It is considered a zero-touch technology because no configuration is needed on the DMVPN hub routers as new spokes are added to the DMVPN network. This facilitates a consistent configuration where all spokes can use identical tunnel configuration (that is, can be templatized) to simplify support and deployment with network provisioning systems like Cisco Prime Infrastructure.

Spoke sites initiate a persistent VPN connection to the hub router. Network traffic between spoke sites does not have to travel through the hubs. DMVPN dynamically builds a VPN tunnel between spoke sites on an as-needed basis. This allows network traffic, such as for VoIP, to take a direct path, which reduces delay and jitter without consuming bandwidth at the hub site.

DMVPN was released in three phases, and each phase built on the previous one with additional functions. All three phases of DMVPN need only one tunnel interface on a router, and the DMVPN network size should accommodate all the endpoints associated with that tunnel network. DMVPN spokes can use DHCP or static addressing for the transport and overlay networks. They locate the other spokes' IP addresses (protocol and NBMA) through NHRP.

Phase 1: Spoke-to-Hub

DMVPN Phase 1 was the first DMVPN implementation and provides a zero-touch deployment for VPN sites. VPN tunnels are created only between spoke and hub sites. Traffic between spokes must traverse the hub to reach the other spoke.

Phase 2: Spoke-to-Spoke

DMVPN Phase 2 provides additional capability beyond DMVPN Phase 1 and allows spoke-to-spoke communication on a dynamic basis by creating an on-demand VPN tunnel between the spoke devices. Because DMVPN Phase 2 relies on next-hop preservation, it does not allow summarization. As a result, it also does not support spoke-to-spoke communication between different DMVPN networks (multilevel hierarchical DMVPN).

Phase 3: Hierarchical Tree Spoke-to-Spoke

DMVPN Phase 3 refines spoke-to-spoke connectivity by enhancing the NHRP messaging and interacting with the routing table. With DMVPN Phase 3 the hub sends an NHRP redirect message to the spoke that originated the packet flow. The NHRP redirect message provides the necessary information so that the originator spoke can initiate a resolution of the destination host/network. Cisco PfRv3 adds API support for DMVPN Phase 3 as well.

In DMVPN Phase 3, NHRP installs paths in the routing table for the shortcuts it creates. NHRP shortcuts modify the next-hop entry for existing routes or add a more explicit route entry to the routing table. Because NHRP shortcuts install more explicit routes in the routing table, DMVPN Phase 3 supports summarization of networks at the hub while providing optimal routing between spoke routers. NHRP shortcuts allow a hierarchical tree topology so that a regional hub is responsible for managing NHRP traffic and subnets within that region, but spoke-to-spoke tunnels can be established outside of that region.
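The reason summarization and shortcuts coexist is longest-prefix matching. The Python sketch below uses the standard `ipaddress` module to show a summary route pointing at the hub being overridden by a more explicit NHRP shortcut route. The 10.0.0.0/8 summary, the 10.4.4.0/24 spoke LAN, and the spoke tunnel address are hypothetical values chosen for illustration, and the table is a toy list rather than a real RIB.

```python
import ipaddress
from typing import List, Optional, Tuple

# A route is (prefix, next-hop tunnel address).
Route = Tuple[ipaddress.IPv4Network, str]


def lookup(table: List[Route], destination: str) -> Optional[str]:
    """Return the next hop of the longest matching prefix, or None if nothing matches."""
    addr = ipaddress.ip_address(destination)
    matches = [(net, nh) for net, nh in table if addr in net]
    if not matches:
        return None
    # Longest prefix wins, so a more explicit NHRP route overrides the summary.
    return max(matches, key=lambda route: route[0].prefixlen)[1]


HUB = "192.168.100.11"    # hub tunnel address
SPOKE = "192.168.100.41"  # remote spoke tunnel address (hypothetical)

table: List[Route] = [(ipaddress.ip_network("10.0.0.0/8"), HUB)]  # summary from the hub
print(lookup(table, "10.4.4.4"))  # first packets follow the summary to the hub

# After resolution, the NHRP shortcut adds a more explicit route via the spoke.
table.append((ipaddress.ip_network("10.4.4.0/24"), SPOKE))
print(lookup(table, "10.4.4.4"))  # now sent directly to the spoke
print(lookup(table, "10.5.5.5"))  # other destinations still use the summary
```

The hub only needs to advertise the summary; the spoke-to-spoke path appears as a separate, more specific entry rather than a rewrite of the summary itself.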

Figure 3-2 illustrates the differences in traffic patterns for all three DMVPN phases. All three models support direct spoke-to-hub communication as shown by R1 and R2. Spoke-to-spoke packet flow in DMVPN Phase 1 is different from the packet flow in DMVPN Phases 2 and 3. Traffic between R3 and R4 must traverse the hub for Phase 1 DMVPN, whereas a dynamic spoke-to-spoke tunnel is created for DMVPN Phase 2 and Phase 3 that allows direct communication.

Figure 3-3 illustrates the difference in traffic patterns between Phase 2 and Phase 3 DMVPN with hierarchical topologies (multilevel). In this two-tier hierarchical design, R2 is the hub for DMVPN tunnel 20, and R3 is the hub for DMVPN tunnel 30. Connectivity between DMVPN tunnels 20 and 30 is established by DMVPN tunnel 10. All three DMVPN tunnels use the same DMVPN tunnel ID even though they use different tunnel interfaces. For Phase 2 DMVPN tunnels, traffic from R5 must flow to the hub R2, where it is sent to R3 and then back down to R6. For Phase 3 DMVPN tunnels, a spoke-to-spoke tunnel is established between R5 and R6, and the two routers can communicate directly.

Note

Each DMVPN phase has its own specific configuration. Intermixing DMVPN phases on the same tunnel network is not recommended. If you need to support multiple DMVPN phases for a migration, a second DMVPN network (subnet and tunnel interface) should be used.

This book explains the DMVPN fundamentals with DMVPN Phase 1 and then explains DMVPN Phase 3. It does not cover DMVPN Phase 2. DMVPN Phase 3 is part of the prescriptive IWAN validated design and is explained thoroughly. At the time of writing this book, two-level hierarchical DMVPN topologies are not supported as part of the prescriptive IWAN validated design.

DMVPN Configuration

There are two types of DMVPN configurations (hub or spoke), which vary depending on a router’s role. The DMVPN hub is the NHRP NHS, and the DMVPN spoke is the NHRP NHC. The spokes should be preconfigured with the hub’s static IP address, but a spoke’s NBMA IP address can be static or assigned from DHCP.

Note

In this book, the terms “spoke router” and “branch router” are interchangeable, as are the terms “hub router” and “headquarters/data center router.”

Figure 3-4 shows the first topology used to explain DMVPN configuration and functions. R11 acts as the DMVPN hub, and R31 and R41 are the DMVPN spokes. All three routers use a static default route to the SP router that provides connectivity for the NBMA (transport) networks in the 172.16.0.0/16 network range. EIGRP has been configured to operate on the DMVPN tunnel and to advertise the local LAN networks. Specific considerations for configuring EIGRP are addressed in Chapter 4, “Intelligent WAN (IWAN) Routing.”

DMVPN Hub Configuration

The steps for configuring DMVPN on a hub router are as follows:

Step 1. Create the tunnel interface.

Create the tunnel interface with the global configuration command interface tunnel tunnel-number.

Step 2. Identify the tunnel source.

Identify the local source of the tunnel with the interface parameter command tunnel source {ip-address | interface-id}. The tunnel source depends on the transport type. The encapsulating interface can be a logical interface such as a loopback or a subinterface.

Note

QoS problems can occur with the use of loopback interfaces when there are multiple paths in the forwarding table to the decapsulating router. The same problems also occur with port channels, which are not recommended at the time of this writing.

Step 3. Convert the tunnel to a GRE multipoint interface.

Configure the DMVPN tunnel as a GRE multipoint tunnel with the interface parameter command tunnel mode gre multipoint.

Step 4. Allocate an IP address for the DMVPN network (tunnel).

An IP address is assigned to the interface with the command ip address ip-address subnet-mask.

Note

The subnet mask or size of the network should accommodate the total number of routers that are participating in the DMVPN tunnel. All the DMVPN tunnels in this book use /24, which accommodates 254 routers. Depending on the hardware used, the DMVPN network can scale much larger to 2000 or more devices.

Step 5. Enable NHRP on the tunnel interface.

Enable NHRP and uniquely identify the DMVPN tunnel for the virtual interface with the interface parameter command ip nhrp network-id 1-4294967295.

The NHRP network ID is locally significant and is used to identify a DMVPN cloud on a router because multiple tunnel interfaces can belong to the same DMVPN cloud. It is recommended that the NHRP network ID match on all routers participating in the same DMVPN network.

Step 6. Define the tunnel key (optional).

The tunnel key helps identify the DMVPN virtual tunnel interface if multiple tunnel interfaces use the same tunnel source interface as defined in Step 2. Tunnel keys, if configured, must match for a DMVPN tunnel to establish between two routers. The tunnel key adds 4 bytes to the DMVPN header.

The tunnel key is configured with the command tunnel key 0-4294967295.

There is no technical correlation between the NHRP network ID and the tunnel interface number; however, keeping them the same helps from an operational support aspect.

Step 7. Enable multicast support for NHRP (optional).

NHRP provides a mapping service of the protocol (tunnel IP) address to the NBMA (transport) address for multicast packets too. In order to support multicast or routing protocols that use multicast, this must be enabled on DMVPN hub routers with the tunnel command ip nhrp map multicast dynamic. This feature is explained further in Chapter 4.

Step 8. Enable NHRP redirect (used only for Phase 3).

Enable NHRP redirect functions with the command ip nhrp redirect.

Step 9. Define the tunnel bandwidth (optional).

Virtual interfaces have no physical link speed, so a reference bandwidth should be configured for routing protocols that use bandwidth in best-path calculation to make an intelligent decision. Bandwidth is also used for QoS configuration on the interface. Bandwidth is defined with the interface parameter command bandwidth 1-10000000, which is measured in kilobits per second.

Step 10. Define the IP MTU for the tunnel interface (optional).

The IP MTU is configured with the interface parameter command ip mtu mtu. Typically an MTU of 1400 is used for DMVPN tunnels to account for the additional encapsulation overhead.

Step 11. Define the TCP maximum segment size (MSS) (optional).

The TCP Adjust MSS feature makes the router rewrite the MSS value carried in the TCP three-way handshake (SYN) packets whenever the advertised MSS exceeds the configured value. The command is ip tcp adjust-mss mss-size. Typically DMVPN interfaces use a value of 1360 to accommodate IP, GRE, and IPsec headers.

Note

Multipoint GRE tunnels do not support the option for using a keepalive.
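The subnet sizing guidance from Step 4 can be checked with Python's standard `ipaddress` module. This sketch assumes every router in the DMVPN cloud needs one tunnel address and that the network and broadcast addresses are unusable; the helper names are hypothetical.

```python
import ipaddress


def usable_hosts(prefix: str) -> int:
    """Usable addresses in an IPv4 subnet (network and broadcast excluded)."""
    return ipaddress.ip_network(prefix).num_addresses - 2


def longest_prefix_for(routers: int) -> int:
    """Longest prefix length whose subnet still holds `routers` tunnel addresses."""
    for length in range(30, 0, -1):
        if 2 ** (32 - length) - 2 >= routers:
            return length
    raise ValueError("too many routers for a single IPv4 subnet")


print(usable_hosts("192.168.100.0/24"))  # the /24 used throughout this book
print(longest_prefix_for(2000))          # a cloud of about 2000 devices
```

A /24 yields the 254 addresses mentioned in Step 4, while a cloud of 2000 routers needs at least a /21 (2046 usable addresses).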

DMVPN Spoke Configuration for DMVPN Phase 1 (Point-to-Point)

Configuration of DMVPN Phase 1 spokes is similar to the configuration for a hub router except:

It does not use a multipoint GRE tunnel. Instead, the tunnel destination is specified.

The NHRP mapping points to at least one active NHS.

The process for configuring a DMVPN Phase 1 spoke router is as follows:

Step 1. Create the tunnel interface.

Create the tunnel interface with the global configuration command interface tunnel tunnel-number.

Step 2. Identify the remote destination IP address.

Identify the tunnel destination with the interface parameter command tunnel destination ip-address.

Step 3. Identify the tunnel source.

Identify the local source of the tunnel with the interface parameter command tunnel source {ip-address | interface-id}.

Step 4. Define the tunnel destination (hub).

Identify the tunnel destination with the interface parameter command tunnel destination ip-address. The tunnel destination is the DMVPN hub IP (NBMA) address that the local router uses to establish the DMVPN tunnel.

Step 5. Allocate an IP address for the DMVPN network (tunnel).

An IP address is assigned to the interface with the command ip address {ip-address subnet-mask | dhcp} or with the command ipv6 address ipv6-address/prefix-length. At the time of writing this book, DHCP is not supported for tunnel IPv6 address allocation.

Step 6. Enable NHRP on the tunnel interface.

Enable NHRP and uniquely identify the DMVPN tunnel for the virtual interface with the interface parameter command ip nhrp network-id 1-4294967295.

Step 7. Define the tunnel key (optional).

The tunnel key helps identify the DMVPN virtual tunnel interface if multiple tunnels use the same tunnel source interface as defined in Step 3. Tunnel keys must match for a DMVPN tunnel to establish between two routers. The tunnel key adds 4 bytes to the DMVPN header.

The tunnel key is configured with the command tunnel key 0-4294967295.

Note

If the tunnel key is defined on the hub router, it must be defined on all the spoke routers.

Step 8. Specify the NHRP NHS, NBMA address, and multicast mapping.

Specify the address of one or more NHRP NHSs with the command ip nhrp nhs nhs-address nbma nbma-address [multicast]. The multicast keyword provides multicast mapping functions in NHRP and is required to support routing protocols that use multicast, such as RIP, EIGRP, and OSPF.

This command is the simplest method of defining the NHRP configuration. Table 3-4 lists the alternative NHRP mapping commands, which are needed only in cases where a static unicast or multicast map is needed for a node that is not an NHS.

Note

Remember that the NBMA address is the transport IP address, and the NHS address is the protocol address for the DMVPN hub. This is the hardest concept for most network engineers to remember.

Step 9. Define the tunnel bandwidth (optional).

Virtual interfaces have no physical link speed, so a reference bandwidth should be configured for routing protocols that use bandwidth in best-path calculation to make an intelligent decision. Bandwidth is also used for QoS configuration on the interface. Bandwidth is defined with the interface parameter command bandwidth 1-10000000, which is measured in kilobits per second.

Step 10. Define the IP MTU for the tunnel interface (optional).

The IP MTU is configured with the interface parameter command ip mtu mtu. Typically an MTU of 1400 is used for DMVPN tunnels to account for the additional encapsulation overhead.

Step 11. Define the TCP MSS (optional).

The TCP Adjust MSS feature makes the router rewrite the MSS value carried in the TCP three-way handshake (SYN) packets whenever the advertised MSS exceeds the configured value. The command is ip tcp adjust-mss mss-size. Typically DMVPN interfaces use a value of 1360 to accommodate IP, GRE, and IPsec headers.
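The 1360 value follows from the 1400-byte tunnel IP MTU minus the IPv4 and TCP headers. The Python sketch below shows that subtraction and the clamping behavior in simplified form. It assumes 20-byte IPv4 and TCP headers without options, and the function names are hypothetical; a router performs the rewrite on the SYN packets themselves.

```python
IPV4_HEADER = 20  # IPv4 header without options
TCP_HEADER = 20   # TCP header without options


def adjusted_mss(tunnel_ip_mtu: int) -> int:
    """Largest TCP payload that keeps a full segment within the tunnel IP MTU."""
    return tunnel_ip_mtu - IPV4_HEADER - TCP_HEADER


def rewrite_syn_mss(advertised_mss: int, limit: int) -> int:
    """Lower an advertised MSS to the limit; smaller values pass through unchanged."""
    return min(advertised_mss, limit)


limit = adjusted_mss(1400)
print(limit)                         # value configured with ip tcp adjust-mss
print(rewrite_syn_mss(1460, limit))  # host on a 1500-byte LAN is clamped
print(rewrite_syn_mss(1300, limit))  # already smaller, so left alone
```

Clamping at the handshake means hosts never send segments that would need fragmentation inside the tunnel, instead of relying on path MTU discovery after the fact.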

Example 3-6 provides a sample configuration for R11 (hub), R31 (spoke), and R41 (spoke). Notice that R11 uses the tunnel mode gre multipoint configuration, whereas R31 and R41 use tunnel destination 172.16.11.1 (R11’s transport endpoint IP address). All three routers have set the appropriate MTU, bandwidth, and TCP MSS values.

R31's NHRP settings are configured with the single multivalue NHRP command, whereas R41's configuration uses three NHRP commands to provide identical functions. R41's configuration demonstrates the additional complexity that the multi-command form adds for typical uses.
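The equivalence between the two forms can be expressed as a small text transformation. The Python sketch below expands the single ip nhrp nhs ... nbma ... multicast command into the three-command form, using the hub addresses from this example (tunnel 192.168.100.11, NBMA 172.16.11.1). It only generates configuration strings for illustration; the function name is hypothetical and it does not validate addresses or talk to a device.

```python
from typing import List


def expand_nhs_command(nhs: str, nbma: str, multicast: bool = True) -> List[str]:
    """Expand 'ip nhrp nhs <nhs> nbma <nbma> [multicast]' into the multi-command form."""
    lines = [f"ip nhrp map {nhs} {nbma}"]              # static protocol-to-NBMA mapping for the hub
    if multicast:
        lines.append(f"ip nhrp map multicast {nbma}")  # replicate multicast (routing protocol) traffic to the hub
    lines.append(f"ip nhrp nhs {nhs}")                 # register with the hub as the NHS
    return lines


for line in expand_nhs_command("192.168.100.11", "172.16.11.1"):
    print(line)
```

Note that the NHS address in the static map and in the ip nhrp nhs line must be the same hub tunnel address. The single-command form removes the chance of those two values drifting apart.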

Example 3-6 Phase 1 DMVPN Configuration

R11-Hub

interface Tunnel100

bandwidth 4000

ip address 192.168.100.11 255.255.255.0

ip mtu 1400

ip nhrp map multicast dynamic

ip nhrp network-id 100

ip tcp adjust-mss 1360

tunnel source GigabitEthernet0/1

tunnel mode gre multipoint

tunnel key 100

R31-Spoke (Single Command NHRP Configuration)

interface Tunnel100

bandwidth 4000

ip address 192.168.100.31 255.255.255.0

ip mtu 1400

ip nhrp network-id 100

ip nhrp nhs 192.168.100.11 nbma 172.16.11.1 multicast

ip tcp adjust-mss 1360

tunnel source GigabitEthernet0/1

tunnel destination 172.16.11.1

tunnel key 100

R41-Spoke (Multi-Command NHRP Configuration)

interface Tunnel100

bandwidth 4000

ip address 192.168.100.41 255.255.255.0

ip mtu 1400

ip nhrp map 192.168.100.11 172.16.11.1

ip nhrp map multicast 172.16.11.1

ip nhrp network-id 100

ip nhrp nhs 192.168.100.11

ip tcp adjust-mss 1360

tunnel source GigabitEthernet0/1

tunnel destination 172.16.11.1

tunnel key 100
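Because only the spoke-specific values change, the Phase 1 spoke configuration lends itself to templating. The following Python sketch is a hypothetical helper (render_phase1_spoke is not a Cisco tool) that rebuilds R31’s Tunnel100 configuration from Example 3-6 for any site number, assuming the sample topology’s addressing plan in which a spoke’s tunnel IP is 192.168.100.<site>:

```python
from __future__ import annotations

HUB_TUNNEL_IP = "192.168.100.11"  # R11's protocol (tunnel) address
HUB_NBMA_IP = "172.16.11.1"       # R11's NBMA (transport) address


def render_phase1_spoke(site_id: int, bandwidth_kbps: int = 4000) -> list[str]:
    """Render a Phase 1 spoke Tunnel100 config modeled on R31 in Example 3-6.

    Hypothetical helper: assumes the spoke's tunnel IP is 192.168.100.<site_id>.
    """
    if not 1 <= site_id <= 254:
        raise ValueError("site_id must be a valid host octet (1-254)")
    return [
        "interface Tunnel100",
        f" bandwidth {bandwidth_kbps}",
        f" ip address 192.168.100.{site_id} 255.255.255.0",
        " ip mtu 1400",
        " ip nhrp network-id 100",
        f" ip nhrp nhs {HUB_TUNNEL_IP} nbma {HUB_NBMA_IP} multicast",
        " ip tcp adjust-mss 1360",
        " tunnel source GigabitEthernet0/1",
        f" tunnel destination {HUB_NBMA_IP}",  # Phase 1: point-to-point GRE to the hub
        " tunnel key 100",
    ]


print("\n".join(render_phase1_spoke(41)))
```

In this sketch only the ip address line varies between spokes; R11’s configuration does not change when another spoke is added.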

Viewing DMVPN Tunnel Status

Upon configuring a DMVPN network, it is a good practice to verify that the tunnels have been established and that NHRP is functioning properly.

The command show dmvpn [detail] provides the tunnel interface, tunnel role, tunnel state, and tunnel peers with uptime. When the DMVPN tunnel interface is administratively shut down, there are no entries associated with that tunnel interface. The tunnel states are, in order of establishment:

INTF: The line protocol of the DMVPN tunnel is down.

IKE: DMVPN tunnels configured with IPsec have not yet successfully established an IKE session.

IPsec: An IKE session is established but an IPsec security association (SA) has not yet been established.

NHRP: The DMVPN spoke router has not yet successfully registered.

Up: The DMVPN spoke router has registered with the DMVPN hub and received an ACK (positive registration reply) from the hub.
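The ordering of these states can be modeled as a simple progression in which a tunnel reports the first stage it has not yet completed. The following Python sketch is a simplified model for illustration, not IOS internals; the DmvpnState and tunnel_state names are hypothetical, and the IKE and IPsec stages apply only when IPsec protection is configured:

```python
from enum import IntEnum


class DmvpnState(IntEnum):
    """show dmvpn tunnel states, numbered in order of establishment."""
    INTF = 1   # line protocol of the tunnel is down
    IKE = 2    # IPsec configured, IKE session not yet established
    IPSEC = 3  # IKE session up, IPsec SA not yet established
    NHRP = 4   # spoke has not yet registered successfully
    UP = 5     # registration acknowledged by the hub


def tunnel_state(line_up: bool, ipsec_configured: bool, ike_up: bool,
                 ipsec_sa_up: bool, registered: bool) -> DmvpnState:
    """Return the first state in the sequence whose condition is not yet met."""
    if not line_up:
        return DmvpnState.INTF
    if ipsec_configured:
        if not ike_up:
            return DmvpnState.IKE
        if not ipsec_sa_up:
            return DmvpnState.IPSEC
    if not registered:
        return DmvpnState.NHRP
    return DmvpnState.UP


print(tunnel_state(True, False, False, False, True).name)  # UP
```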

Example 3-7 provides sample output of the command show dmvpn. The output shows that R31 and R41 have each defined one tunnel with one NHS (R11). This entry is in a static state because of the static NHRP mappings on the tunnel interface. R11 has two tunnels that were learned dynamically when R31 and R41 registered and established a tunnel to R11.

Example 3-7 Viewing the DMVPN Tunnel Status for DMVPN Phase 1

R11-Hub# show dmvpn

Legend: Attrb --> S - Static, D - Dynamic, I - Incomplete

N - NATed, L - Local, X - No Socket

T1 - Route Installed, T2 - Nexthop-override

C - CTS Capable

# Ent --> Number of NHRP entries with same NBMA peer

NHS Status: E --> Expecting Replies, R --> Responding, W --> Waiting

UpDn Time --> Up or Down Time for a Tunnel

==========================================================================

Interface: Tunnel100, IPv4 NHRP Details

Type:Hub, NHRP Peers:2,

# Ent Peer NBMA Addr Peer Tunnel Add State UpDn Tm Attrb

----- --------------- --------------- ----- -------- -----

1 172.16.31.1 192.168.100.31 UP 00:05:26 D

1 172.16.41.1 192.168.100.41 UP 00:05:26 D

R31-Spoke# show dmvpn

! Output omitted for brevity

Interface: Tunnel100, IPv4 NHRP Details

Type:Spoke, NHRP Peers:1,

# Ent Peer NBMA Addr Peer Tunnel Add State UpDn Tm Attrb

----- --------------- --------------- ----- -------- -----

1 172.16.11.1 192.168.100.11 UP 00:05:26 S

R41-Spoke# show dmvpn

! Output omitted for brevity

Interface: Tunnel100, IPv4 NHRP Details

Type:Spoke, NHRP Peers:1,

# Ent Peer NBMA Addr Peer Tunnel Add State UpDn Tm Attrb

----- --------------- --------------- ----- -------- -----

1 172.16.11.1 192.168.100.11 UP 00:05:26 S

Note

Both routers must maintain an up NHRP state with each other for data traffic to flow successfully between them.
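When many spokes are involved, the peer table from show dmvpn can be checked programmatically. The following Python sketch parses peer rows in the format shown in Example 3-7 and reports any peer that is not UP. The function names are hypothetical, and the sketch assumes every peer row begins with the # Ent count, so continuation rows that omit the count are skipped:

```python
from __future__ import annotations

import re
from typing import NamedTuple


class DmvpnPeer(NamedTuple):
    entries: int      # "# Ent" column
    nbma_addr: str    # "Peer NBMA Addr" (transport address)
    tunnel_addr: str  # "Peer Tunnel Add" (protocol address)
    state: str        # "State", e.g. UP, NHRP, IKE
    updn_time: str    # "UpDn Tm"
    attrb: str        # "Attrb", e.g. S, D, DLX, DT1


IPV4 = r"\d{1,3}(?:\.\d{1,3}){3}"
PEER_ROW = re.compile(rf"^\s*(\d+)\s+({IPV4})\s+({IPV4})\s+(\S+)\s+(\S+)\s+(\S+)")


def parse_dmvpn_peers(output: str) -> list[DmvpnPeer]:
    """Extract peer rows from show dmvpn output; legend and header lines are skipped."""
    peers = []
    for line in output.splitlines():
        match = PEER_ROW.match(line)
        if match:
            ent, nbma, tunnel, state, updn, attrb = match.groups()
            peers.append(DmvpnPeer(int(ent), nbma, tunnel, state, updn, attrb))
    return peers


def peers_not_up(peers: list[DmvpnPeer]) -> list[str]:
    """Tunnel addresses of peers whose state is anything other than UP."""
    return [p.tunnel_addr for p in peers if p.state.upper() != "UP"]


R11_OUTPUT = """# Ent Peer NBMA Addr Peer Tunnel Add State UpDn Tm Attrb
----- --------------- --------------- ----- -------- -----
1 172.16.31.1 192.168.100.31 UP 00:05:26 D
1 172.16.41.1 192.168.100.41 UP 00:05:26 D"""

print(peers_not_up(parse_dmvpn_peers(R11_OUTPUT)))  # []
```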

Example 3-8 provides output of the command show dmvpn detail. Notice that the detail keyword provides the local tunnel and NBMA IP addresses, tunnel health monitoring, and VRF contexts. In addition, IPsec crypto information (if configured) is displayed.

Example 3-8 Viewing the DMVPN Tunnel Status for Phase 1 DMVPN

R11-Hub# show dmvpn detail

Legend: Attrb --> S - Static, D - Dynamic, I - Incomplete

N - NATed, L - Local, X - No Socket

T1 - Route Installed, T2 - Nexthop-override

C - CTS Capable

# Ent --> Number of NHRP entries with same NBMA peer

NHS Status: E --> Expecting Replies, R --> Responding, W --> Waiting

UpDn Time --> Up or Down Time for a Tunnel

==========================================================================

Interface Tunnel100 is up/up, Addr. is 192.168.100.11, VRF ""

Tunnel Src./Dest. addr: 172.16.11.1/MGRE, Tunnel VRF ""

Protocol/Transport: "multi-GRE/IP", Protect ""

Interface State Control: Disabled

nhrp event-publisher : Disabled

Type:Hub, Total NBMA Peers (v4/v6): 2

# Ent Peer NBMA Addr Peer Tunnel Add State UpDn Tm Attrb Target Network

----- --------------- --------------- ----- -------- ----- -----------------

1 172.16.31.1 192.168.100.31 UP 00:01:05 D 192.168.100.31/32

1 172.16.41.1 192.168.100.41 UP 00:01:06 D 192.168.100.41/32

R31-Spoke# show dmvpn detail

! Output omitted for brevity

Interface Tunnel100 is up/up, Addr. is 192.168.100.31, VRF ""

Tunnel Src./Dest. addr: 172.16.31.1/172.16.11.1, Tunnel VRF ""

Protocol/Transport: "GRE/IP", Protect ""

Interface State Control: Disabled

nhrp event-publisher : Disabled

IPv4 NHS:

192.168.100.11 RE NBMA Address: 172.16.11.1 priority = 0 cluster = 0

Type:Spoke, Total NBMA Peers (v4/v6): 1

# Ent Peer NBMA Addr Peer Tunnel Add State UpDn Tm Attrb Target Ne

----- --------------- --------------- ----- -------- ----- ------------

1 172.16.11.1 192.168.100.11 UP 00:00:28 S 192.168.100

R41-Spoke# show dmvpn detail

! Output omitted for brevity

Interface Tunnel100 is up/up, Addr. is 192.168.100.41, VRF ""

Tunnel Src./Dest. addr: 172.16.41.1/172.16.11.1, Tunnel VRF ""

Protocol/Transport: "GRE/IP", Protect ""

Interface State Control: Disabled

nhrp event-publisher : Disabled

IPv4 NHS:

192.168.100.11 RE NBMA Address: 172.16.11.1 priority = 0 cluster = 0

Type:Spoke, Total NBMA Peers (v4/v6): 1

# Ent Peer NBMA Addr Peer Tunnel Add State UpDn Tm Attrb Target Network

----- --------------- --------------- ----- -------- ----- -----------------

1 172.16.11.1 192.168.100.11 UP 00:02:00 S 192.168.100.11/32

Viewing the NHRP Cache

The information that NHRP provides is a vital component of the operation of DMVPN. Every router maintains a cache of requests that it receives or is processing. The command show ip nhrp [brief] displays the local NHRP cache on a router. The NHRP cache contains the following fields:

The network entry for a host (IPv4: /32 or IPv6: /128) or for a network (/x), and the mapping of the tunnel IP address to the NBMA (transport) IP address.

The interface number, how long the entry has existed, and when it will expire (hours:minutes:seconds). Only dynamic entries expire.

The NHRP mapping entry type. Table 3-5 provides a list of NHRP mapping entries in the local cache.

NHRP message flags specify attributes of an NHRP cache entry or of the peer for which the entry was created. Table 3-6 provides a listing of the NHRP message flags and their meanings.

The full syntax of the command is show ip nhrp [brief | detail]. Example 3-9 displays the local NHRP cache for the various routers in the sample topology. R11 contains only dynamic registrations for R31 and R41. If R31 or R41 cannot maintain connectivity to R11’s transport IP address, its tunnel mapping is eventually removed from R11. The NHRP message flags on R11 indicate that R31 and R41 successfully registered with R11 using unique registrations and that traffic has recently been forwarded to both routers.

Example 3-9 Local NHRP Cache for DMVPN Phase 1

R11-Hub# show ip nhrp

192.168.100.31/32 via 192.168.100.31

Tunnel100 created 23:04:04, expire 01:37:26

Type: dynamic, Flags: unique registered used nhop

NBMA address: 172.16.31.1

192.168.100.41/32 via 192.168.100.41

Tunnel100 created 23:04:00, expire 01:37:42

Type: dynamic, Flags: unique registered used nhop

NBMA address: 172.16.41.1

R31-Spoke# show ip nhrp

192.168.100.11/32 via 192.168.100.11

Tunnel100 created 23:02:53, never expire

Type: static, Flags:

NBMA address: 172.16.11.1

R41-Spoke# show ip nhrp

192.168.100.11/32 via 192.168.100.11

Tunnel100 created 23:02:53, never expire

Type: static, Flags:

NBMA address: 172.16.11.1

Note

The optional detail keyword also lists the routers that submitted an NHRP resolution request, along with each request ID.
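The multiline entries printed by show ip nhrp can be grouped into one record per cache entry. The following Python sketch assumes the field layout shown in Example 3-9; parse_show_ip_nhrp is a hypothetical helper, and lines it does not recognize (for example, Preference or Requester lines from the detail keyword) are ignored:

```python
from __future__ import annotations

import re

ENTRY = re.compile(r"^(\S+/\d+) via (\S+)$")
CREATED = re.compile(r"^(\S+) created ([^,]+), (?:never expire|expire (\S+))$")
TYPE_FLAGS = re.compile(r"^Type: (\w+), Flags:(.*)$")
NBMA = re.compile(r"^NBMA address: (\S+)")


def parse_show_ip_nhrp(output: str) -> list[dict]:
    """Group show ip nhrp lines into one dict per cache entry (hypothetical helper)."""
    entries: list[dict] = []
    current: dict | None = None
    for raw in output.splitlines():
        line = raw.strip()
        if m := ENTRY.match(line):
            current = {"network": m.group(1), "next_hop": m.group(2)}
            entries.append(current)
        elif current is None:
            continue  # ignore anything before the first entry
        elif m := CREATED.match(line):
            current["interface"] = m.group(1)
            current["created"] = m.group(2)
            current["expires"] = m.group(3)  # None when the entry never expires
        elif m := TYPE_FLAGS.match(line):
            current["type"] = m.group(1)
            current["flags"] = m.group(2).split()
        elif m := NBMA.match(line):
            current["nbma"] = m.group(1)
    return entries


# One entry from R11 and one from R31 in Example 3-9.
SAMPLE = """192.168.100.31/32 via 192.168.100.31
   Tunnel100 created 23:04:04, expire 01:37:26
   Type: dynamic, Flags: unique registered used nhop
   NBMA address: 172.16.31.1
192.168.100.11/32 via 192.168.100.11
   Tunnel100 created 23:02:53, never expire
   Type: static, Flags:
   NBMA address: 172.16.11.1"""

for entry in parse_show_ip_nhrp(SAMPLE):
    print(entry["network"], entry["type"], entry["expires"])
# 192.168.100.31/32 dynamic 01:37:26
# 192.168.100.11/32 static None
```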

Example 3-10 provides the output for the show ip nhrp brief command. Some information, such as the used and nhop NHRP message flags, is not shown with the brief keyword.

Example 3-10 Sample Output from the show ip nhrp brief Command

R11-Hub# show ip nhrp brief

****************************************************************************

NOTE: Link-Local, No-socket and Incomplete entries are not displayed

****************************************************************************

Legend: Type --> S - Static, D - Dynamic

Flags --> u - unique, r - registered, e - temporary, c - claimed

a - authoritative, t - route

============================================================================

Intf NextHop Address NBMA Address

Target Network T/Flag

-------- ------------------------------------------- ------ ----------------

Tu100 192.168.100.31 172.16.31.1

192.168.100.31/32 D/ur

Tu100 192.168.100.41 172.16.41.1

192.168.100.41/32 D/ur

R31-Spoke# show ip nhrp brief

! Output omitted for brevity

Intf NextHop Address NBMA Address

Target Network T/Flag

-------- ------------------------------------------- ------ ----------------

Tu100 192.168.100.11 172.16.11.1

192.168.100.11/32 S/

R41-Spoke# show ip nhrp brief

! Output omitted for brevity

Intf NextHop Address NBMA Address

Target Network T/Flag

-------- ------------------------------------------- ------ ----------------

Tu100 192.168.100.11 172.16.11.1

192.168.100.11/32 S/

Example 3-11 displays the routing tables for R11, R31, and R41. All three routers maintain connectivity to the 10.1.1.0/24, 10.3.3.0/24, and 10.4.4.0/24 networks. Notice that on each spoke, the next-hop address for the other spoke’s network is 192.168.100.11 (R11).

Example 3-11 DMVPN Phase 1 Routing Table

R11-Hub# show ip route

! Output omitted for brevity

Codes: L - local, C - connected, S - static, R - RIP, M - mobile, B - BGP

D - EIGRP, EX - EIGRP external, O - OSPF, IA - OSPF inter area

Gateway of last resort is 172.16.11.2 to network 0.0.0.0

S* 0.0.0.0/0 [1/0] via 172.16.11.2

10.0.0.0/8 is variably subnetted, 4 subnets, 2 masks

C 10.1.1.0/24 is directly connected, GigabitEthernet0/2

D 10.3.3.0/24 [90/27392000] via 192.168.100.31, 23:03:53, Tunnel100

D 10.4.4.0/24 [90/27392000] via 192.168.100.41, 23:03:28, Tunnel100

172.16.0.0/16 is variably subnetted, 2 subnets, 2 masks

C 172.16.11.0/30 is directly connected, GigabitEthernet0/1

192.168.100.0/24 is variably subnetted, 2 subnets, 2 masks

C 192.168.100.0/24 is directly connected, Tunnel100

R31-Spoke# show ip route

! Output omitted for brevity

Gateway of last resort is 172.16.31.2 to network 0.0.0.0

S* 0.0.0.0/0 [1/0] via 172.16.31.2

10.0.0.0/8 is variably subnetted, 4 subnets, 2 masks

D 10.1.1.0/24 [90/26885120] via 192.168.100.11, 23:04:48, Tunnel100

C 10.3.3.0/24 is directly connected, GigabitEthernet0/2

D 10.4.4.0/24 [90/52992000] via 192.168.100.11, 23:04:23, Tunnel100

172.16.0.0/16 is variably subnetted, 2 subnets, 2 masks

C 172.16.31.0/30 is directly connected, GigabitEthernet0/1

192.168.100.0/24 is variably subnetted, 2 subnets, 2 masks

C 192.168.100.0/24 is directly connected, Tunnel100

R41-Spoke# show ip route

! Output omitted for brevity

Gateway of last resort is 172.16.41.2 to network 0.0.0.0

S* 0.0.0.0/0 [1/0] via 172.16.41.2

10.0.0.0/8 is variably subnetted, 4 subnets, 2 masks

D 10.1.1.0/24 [90/26885120] via 192.168.100.11, 23:05:01, Tunnel100

D 10.3.3.0/24 [90/52992000] via 192.168.100.11, 23:05:01, Tunnel100

C 10.4.4.0/24 is directly connected, GigabitEthernet0/2

172.16.0.0/16 is variably subnetted, 2 subnets, 2 masks

C 172.16.41.0/24 is directly connected, GigabitEthernet0/1

192.168.100.0/24 is variably subnetted, 2 subnets, 2 masks

C 192.168.100.0/24 is directly connected, Tunnel100

Example 3-12 verifies that R31 can connect to R41, but network traffic must still pass through R11.

Example 3-12 Phase 1 DMVPN Traceroute from R31 to R41

R31-Spoke# traceroute 10.4.4.1 source 10.3.3.1

Tracing the route to 10.4.4.1

1 192.168.100.11 0 msec 0 msec 1 msec

2 192.168.100.41 1 msec * 1 msec
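The Phase 1 forwarding behavior follows from an ordinary longest-prefix-match lookup: on R31, the most specific route covering 10.4.4.1 is 10.4.4.0/24 with next hop 192.168.100.11, so the packet goes to the hub. The following Python sketch replays that lookup against R31’s routes from Example 3-11 using the standard ipaddress module; the lookup helper is hypothetical and ignores administrative distance and metrics:

```python
from __future__ import annotations

import ipaddress

# R31's routes from Example 3-11: prefix -> next hop ("connected" for local networks).
R31_ROUTES: dict[str, str] = {
    "0.0.0.0/0": "172.16.31.2",
    "10.1.1.0/24": "192.168.100.11",
    "10.3.3.0/24": "connected",
    "10.4.4.0/24": "192.168.100.11",
    "172.16.31.0/30": "connected",
    "192.168.100.0/24": "connected",
}


def lookup(routes: dict[str, str], destination: str) -> tuple[str, str]:
    """Return (prefix, next hop) for the longest prefix that contains destination."""
    addr = ipaddress.ip_address(destination)
    matches = [ipaddress.ip_network(p) for p in routes if addr in ipaddress.ip_network(p)]
    if not matches:
        raise LookupError(f"no route to {destination}")
    best = max(matches, key=lambda net: net.prefixlen)
    return str(best), routes[str(best)]


print(lookup(R31_ROUTES, "10.4.4.1"))  # ('10.4.4.0/24', '192.168.100.11')
```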

DMVPN Configuration for Phase 3 DMVPN (Multipoint)

The Phase 3 DMVPN configuration for the hub router adds the interface parameter command ip nhrp redirect. This command checks the flow of packets on the tunnel interface and sends a redirect message to the source spoke router when it detects packets hairpinning out of the DMVPN cloud. Hairpinning occurs when traffic is received on and sent out of an interface in the same cloud (identified by the NHRP network ID). For instance, packets that enter and leave the same tunnel interface are hairpinning.
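The hub’s hairpin check reduces to comparing the NHRP network ID of the interface on which a packet arrives with that of the interface through which it will leave. The following Python sketch is a simplified model (the Interface class and function names are hypothetical), and it assumes that ip nhrp redirect on the ingress tunnel interface is what enables the redirect:

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Interface:
    name: str
    nhrp_network_id: int | None = None  # None: NHRP not enabled on this interface
    nhrp_redirect: bool = False         # ip nhrp redirect configured


def is_hairpin(ingress: Interface, egress: Interface) -> bool:
    """Packet enters and leaves the same DMVPN cloud (same NHRP network ID)."""
    return (ingress.nhrp_network_id is not None
            and ingress.nhrp_network_id == egress.nhrp_network_id)


def should_send_redirect(ingress: Interface, egress: Interface) -> bool:
    """Simplified model: redirect only when enabled on ingress and the packet hairpins."""
    return ingress.nhrp_redirect and is_hairpin(ingress, egress)


tunnel100 = Interface("Tunnel100", nhrp_network_id=100, nhrp_redirect=True)
lan = Interface("GigabitEthernet0/2")
print(should_send_redirect(tunnel100, tunnel100))  # True: R31 -> R11 -> R41
print(should_send_redirect(tunnel100, lan))        # False: R31 -> R11's LAN
```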

The Phase 3 DMVPN configuration for spoke routers uses the multipoint GRE tunnel interface and uses the command ip nhrp shortcut on the tunnel interface.

Note

There are no negative effects of placing ip nhrp shortcut and ip nhrp redirect on the same DMVPN tunnel interface.

The process for configuring a DMVPN Phase 3 spoke router is as follows:

Step 1. Create the tunnel interface.

Create the tunnel interface with the global configuration command interface tunnel tunnel-number.

Step 2. Identify the tunnel source.

Identify the local source of the tunnel with the interface parameter command tunnel source {ip-address | interface-id}.

Step 3. Convert the tunnel to a GRE multipoint interface.

Configure the DMVPN tunnel as a GRE multipoint tunnel with the interface parameter command tunnel mode gre multipoint.

Step 4. Allocate an IP address for the DMVPN network (tunnel).

An IP address is configured to the interface with the command ip address ip-address subnet-mask.

Step 5. Enable NHRP on the tunnel interface.

Enable NHRP and uniquely identify the DMVPN tunnel for the virtual interface with the interface parameter command ip nhrp network-id 1-4294967295.

Step 6. Define the tunnel key (optional).

The tunnel key is configured with the command tunnel key 0-4294967295. Tunnel keys must match for a DMVPN tunnel to establish between two routers.

Step 7. Enable NHRP shortcut.

Enable the NHRP shortcut function with the command ip nhrp shortcut.

Step 8. Specify the NHRP NHS, NBMA address, and multicast mapping.

Specify the address of one or more NHRP NHSs with the command ip nhrp nhs nhs-address nbma nbma-address [multicast].

Step 9. Define the IP MTU for the tunnel interface (optional).

MTU is configured with the interface parameter command ip mtu mtu. Typically an MTU of 1400 is used for DMVPN tunnels.

Step 10. Define the TCP MSS (optional).

The TCP Adjust MSS feature ensures that the router rewrites the maximum segment size (MSS) option in the TCP SYN packets of the three-way handshake if the advertised MSS exceeds the configured value. The command is ip tcp adjust-mss mss-size. Typically DMVPN interfaces use a value of 1360 to accommodate IP, GRE, and IPsec headers.

Example 3-13 provides a sample configuration for R11 (hub), R31 (spoke), and R41 (spoke) configured with Phase 3 DMVPN. Notice that all three routers use tunnel mode gre multipoint and have set the appropriate MTU, bandwidth, and TCP MSS values. R11 uses the command ip nhrp redirect, and R31 and R41 use the command ip nhrp shortcut.

Example 3-13 DMVPN Phase 3 Configuration for Spokes

R11-Hub

interface Tunnel100

bandwidth 4000

ip address 192.168.100.11 255.255.255.0

ip mtu 1400

ip nhrp map multicast dynamic

ip nhrp network-id 100

ip nhrp redirect

ip tcp adjust-mss 1360

tunnel source GigabitEthernet0/1

tunnel mode gre multipoint

tunnel key 100

R31-Spoke

interface Tunnel100

bandwidth 4000

ip address 192.168.100.31 255.255.255.0

ip mtu 1400

ip nhrp network-id 100

ip nhrp nhs 192.168.100.11 nbma 172.16.11.1 multicast

ip nhrp shortcut

ip tcp adjust-mss 1360

tunnel source GigabitEthernet0/1

tunnel mode gre multipoint

tunnel key 100

R41-Spoke

interface Tunnel100

bandwidth 4000

ip address 192.168.100.41 255.255.255.0

ip mtu 1400

ip nhrp network-id 100

ip nhrp nhs 192.168.100.11 nbma 172.16.11.1 multicast

ip nhrp shortcut

ip tcp adjust-mss 1360

tunnel source GigabitEthernet0/1

tunnel mode gre multipoint

tunnel key 100
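Comparing R31’s configuration in Example 3-6 with Example 3-13 shows exactly what changes on a spoke when it moves from Phase 1 to Phase 3. The following Python sketch computes that difference with set operations; config_delta is a hypothetical helper, and because it compares sets of lines it ignores ordering and the CLI sequence needed to apply the change on a live router:

```python
from __future__ import annotations

# R31 Tunnel100 from Example 3-6 (Phase 1).
PHASE1_R31 = """interface Tunnel100
 bandwidth 4000
 ip address 192.168.100.31 255.255.255.0
 ip mtu 1400
 ip nhrp network-id 100
 ip nhrp nhs 192.168.100.11 nbma 172.16.11.1 multicast
 ip tcp adjust-mss 1360
 tunnel source GigabitEthernet0/1
 tunnel destination 172.16.11.1
 tunnel key 100"""

# R31 Tunnel100 from Example 3-13 (Phase 3).
PHASE3_R31 = """interface Tunnel100
 bandwidth 4000
 ip address 192.168.100.31 255.255.255.0
 ip mtu 1400
 ip nhrp network-id 100
 ip nhrp nhs 192.168.100.11 nbma 172.16.11.1 multicast
 ip nhrp shortcut
 ip tcp adjust-mss 1360
 tunnel source GigabitEthernet0/1
 tunnel mode gre multipoint
 tunnel key 100"""


def config_delta(old: str, new: str) -> tuple[list[str], list[str]]:
    """Return (lines only in old, lines only in new), ignoring indentation and order."""
    old_lines = {line.strip() for line in old.splitlines() if line.strip()}
    new_lines = {line.strip() for line in new.splitlines() if line.strip()}
    return sorted(old_lines - new_lines), sorted(new_lines - old_lines)


removed, added = config_delta(PHASE1_R31, PHASE3_R31)
print("removed:", removed)  # removed: ['tunnel destination 172.16.11.1']
print("added:", added)      # added: ['ip nhrp shortcut', 'tunnel mode gre multipoint']
```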

Spoke-to-Spoke Communication

After the configuration on R11, R31, and R41 has been modified to support DMVPN Phase 3, the tunnels are established. The DMVPN, NHRP, and routing tables look the same as they did in Examples 3-7 through 3-11. Note that no traffic has been exchanged between R31 and R41 at this point.

This section focuses on the underlying mechanisms used to establish spoke-to-spoke communication. In DMVPN Phase 1, the spoke devices rely upon the configured tunnel destination to identify where to send the encapsulated packets. Phase 3 DMVPN uses multipoint GRE tunnels and thereby relies upon NHRP redirect and resolution request messages to identify the NBMA address for any destination networks.

Packets flow through the hub in a traditional hub-and-spoke manner until the spoke-to-spoke tunnel has been established in both directions. As packets flow across the hub, the hub engages NHRP redirection to start the process of finding a more optimal path with spoke-to-spoke tunnels.

In Example 3-14, R31 initiates a traceroute to R41. Notice that the first packet travels across R11 (hub), but by the time a second stream of packets is sent, the spoke-to-spoke tunnel has been initialized so that traffic flows directly between R31 and R41 on the transport and overlay networks.

Example 3-14 Initiation of Traffic Between Spoke Routers

! Initial Packet Flow

R31-Spoke# traceroute 10.4.4.1 source 10.3.3.1

Tracing the route to 10.4.4.1

1 192.168.100.11 5 msec 1 msec 0 msec <- This is the Hub Router (R11-Hub)

2 192.168.100.41 5 msec * 1 msec

! Packetflow after Spoke-to-Spoke Tunnel is Established

R31-Spoke# traceroute 10.4.4.1 source 10.3.3.1

Tracing the route to 10.4.4.1

1 192.168.100.41 1 msec * 0 msec

Forming Spoke-to-Spoke Tunnels

This section explains in detail how a spoke-to-spoke DMVPN tunnel is formed. Figure 3-5 illustrates the packet flow among all three devices to establish a bidirectional spoke-to-spoke DMVPN tunnel; the numbers in the figure correspond to the steps in the following list:

Step 1 (on R31).

R31 performs a route lookup for 10.4.4.1 and finds the entry 10.4.4.0/24 with a next-hop IP address of 192.168.100.11. R31 encapsulates the packet destined for 10.4.4.1 and forwards it to R11 out of the tunnel 100 interface.

Step 2 (on R11).

R11 receives the packet from R31 and performs a route lookup for the packet destined for 10.4.4.1. R11 locates the 10.4.4.0/24 network with a next-hop IP address of 192.168.100.41. R11 checks the NHRP cache and locates the entry for the 192.168.100.41/32 address. R11 forwards the packet to R41 using the NBMA IP address 172.16.41.1 found in the NHRP cache. The packet is then forwarded out of the same tunnel interface.

R11 has ip nhrp redirect configured on the tunnel interface and recognizes that the packet received from R31 hairpinned out of the tunnel interface. R11 sends an NHRP redirect to R31 indicating the packet source of 10.3.3.1 and destination of 10.4.4.1. The NHRP redirect indicates to R31 that the traffic is using a suboptimal path.

Step 3 (on R31 and R41).

On R31: R31 receives the NHRP redirect and sends an NHRP resolution request to R11 for the 10.4.4.1 address. Inside the NHRP resolution request, R31 provides its protocol (tunnel IP) address, 192.168.100.31, and source NBMA address, 172.16.31.1.

On R41: R41 performs a route lookup for 10.3.3.1 and finds the entry 10.3.3.0/24 with a next-hop IP address of 192.168.100.11. R41 encapsulates the packet destined for 10.3.3.1 and forwards it to R11 out of the tunnel 100 interface.

Step 4 (on R11).

R11 receives the packet from R41 and performs a route lookup for the packet destined for 10.3.3.1. R11 locates the 10.3.3.0/24 network with a next-hop IP address of 192.168.100.31. R11 checks the NHRP cache and locates an entry for 192.168.100.31/32. R11 forwards the packet to R31 using the NBMA IP address 172.16.31.1 found in the NHRP cache. The packet is then forwarded out of the same tunnel interface.

R11 has ip nhrp redirect configured on the tunnel interface and recognizes that the packet received from R41 hairpinned out of the tunnel interface. R11 sends an NHRP redirect to R41 indicating the packet source of 10.4.4.1 and a destination of 10.3.3.1. The NHRP redirect indicates to R41 that the traffic is using a suboptimal path.

R11 forwards R31’s NHRP resolution request for the 10.4.4.1 address to R41.

Step 5 (on R41).

R41 sends an NHRP resolution request to R11 for the 10.3.3.1 address and provides its protocol (tunnel IP) address, 192.168.100.41, and source NBMA address, 172.16.41.1.

R41 sends an NHRP resolution reply directly to R31 using the source information from R31’s NHRP resolution request. The NHRP resolution reply contains the original source information in R31’s NHRP resolution request as a method of verification and contains the client protocol address of 192.168.100.41 and the client NBMA address of 172.16.41.1. (If IPsec protection is configured, the IPsec tunnel is set up before the NHRP reply is sent.)

Note

The NHRP reply is for the entire subnet rather than the specified host address.

Step 6 (on R11).

R11 forwards R41’s NHRP resolution requests for the 192.168.100.31 and 10.3.3.1 entries.

Step 7 (on R31).

R31 sends an NHRP resolution reply directly to R41 using the source information from R41’s NHRP resolution request. The NHRP resolution reply contains the original source information in R41’s NHRP resolution request as a method of verification and contains the client protocol address of 192.168.100.31 and the client NBMA address of 172.16.31.1. (Again, if IPsec protection is configured, the tunnel is set up before the NHRP reply is sent back in the other direction.)

A spoke-to-spoke DMVPN tunnel is established in both directions after Step 7 has completed. This allows traffic to flow across the spoke-to-spoke tunnel instead of traversing the hub router.
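The request and reply flow in Steps 1 through 7 can be condensed into a small model: the hub resolves the target through its routing table and NHRP cache, and the spoke that owns the target answers the requester directly with its whole subnet. The following Python sketch is a simplified illustration, not an implementation of the NHRP message format; the Spoke and Hub classes and the resolve function are hypothetical, and the hub’s EIGRP-learned routes are stood in for by entries added at registration:

```python
from __future__ import annotations

import ipaddress
from dataclasses import dataclass, field


@dataclass
class Spoke:
    name: str
    tunnel_ip: str  # protocol address
    nbma_ip: str    # transport address
    lan: str        # subnet behind the spoke


@dataclass
class Hub:
    nhrp_cache: dict[str, str] = field(default_factory=dict)  # tunnel IP -> NBMA IP
    routes: dict[str, str] = field(default_factory=dict)      # prefix -> tunnel next hop

    def register(self, spoke: Spoke) -> None:
        # Registration fills the NHRP cache; the route entry is a stand-in for
        # the EIGRP route the hub would learn from the spoke (simplification).
        self.nhrp_cache[spoke.tunnel_ip] = spoke.nbma_ip
        self.routes[spoke.lan] = spoke.tunnel_ip

    def next_hop_for(self, target: str) -> tuple[str, str]:
        """Route lookup plus NHRP cache lookup: (tunnel next hop, NBMA address)."""
        addr = ipaddress.ip_address(target)
        for prefix, tunnel_ip in self.routes.items():  # non-overlapping prefixes assumed
            if addr in ipaddress.ip_network(prefix):
                return tunnel_ip, self.nhrp_cache[tunnel_ip]
        raise LookupError(f"hub has no route to {target}")


def resolve(hub: Hub, spokes: dict[str, Spoke], requester: Spoke, target: str) -> dict[str, str]:
    """The requester's resolution request goes via the hub; the owner replies directly."""
    owner_tunnel_ip, _owner_nbma = hub.next_hop_for(target)
    owner = spokes[owner_tunnel_ip]
    return {
        "reply_from": owner.name,
        "sent_to_nbma": requester.nbma_ip,  # taken from the request's source NBMA
        "network": owner.lan,               # whole subnet, not just the host
        "client_tunnel_ip": owner.tunnel_ip,
        "client_nbma_ip": owner.nbma_ip,
    }


r31 = Spoke("R31", "192.168.100.31", "172.16.31.1", "10.3.3.0/24")
r41 = Spoke("R41", "192.168.100.41", "172.16.41.1", "10.4.4.0/24")
hub = Hub()
for spoke in (r31, r41):
    hub.register(spoke)
spokes = {s.tunnel_ip: s for s in (r31, r41)}
print(resolve(hub, spokes, r31, "10.4.4.1"))
```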

Example 3-15 displays the status of DMVPN tunnels on R31 and R41 where there are two new spoke-to-spoke tunnels (highlighted). The DLX entries represent the local (no-socket) routes. The original tunnel to R11 remains as a static tunnel.

Example 3-15 Detailed NHRP Mapping with Spoke-to-Spoke Traffic

R31-Spoke# show dmvpn detail

Legend: Attrb --> S - Static, D - Dynamic, I - Incomplete

N - NATed, L - Local, X - No Socket

T1 - Route Installed, T2 - Nexthop-override

C - CTS Capable

# Ent --> Number of NHRP entries with same NBMA peer

NHS Status: E --> Expecting Replies, R --> Responding, W --> Waiting

UpDn Time --> Up or Down Time for a Tunnel

============================================================================

Interface Tunnel100 is up/up, Addr. is 192.168.100.31, VRF ""

Tunnel Src./Dest. addr: 172.16.31.1/MGRE, Tunnel VRF ""

Protocol/Transport: "multi-GRE/IP", Protect ""

Interface State Control: Disabled

nhrp event-publisher : Disabled

IPv4 NHS:

192.168.100.11 RE NBMA Address: 172.16.11.1 priority = 0 cluster = 0

Type:Spoke, Total NBMA Peers (v4/v6): 3

# Ent Peer NBMA Addr Peer Tunnel Add State UpDn Tm Attrb Target Network

----- --------------- --------------- ----- -------- ----- -----------------

1 172.16.31.1 192.168.100.31 UP 00:00:10 DLX 10.3.3.0/24

2 172.16.41.1 192.168.100.41 UP 00:00:10 DT2 10.4.4.0/24

172.16.41.1 192.168.100.41 UP 00:00:10 DT1 192.168.100.41/32

1 172.16.11.1 192.168.100.11 UP 00:00:51 S 192.168.100.11/32

R41-Spoke# show dmvpn detail

! Output omitted for brevity

IPv4 NHS:

192.168.100.11 RE NBMA Address: 172.16.11.1 priority = 0 cluster = 0

Type:Spoke, Total NBMA Peers (v4/v6): 3

# Ent Peer NBMA Addr Peer Tunnel Add State UpDn Tm Attrb Target Network

----- --------------- --------------- ----- -------- ----- -----------------

2 172.16.31.1 192.168.100.31 UP 00:00:34 DT2 10.3.3.0/24

172.16.31.1 192.168.100.31 UP 00:00:34 DT1 192.168.100.31/32

1 172.16.41.1 192.168.100.41 UP 00:00:34 DLX 10.4.4.0/24

1 172.16.11.1 192.168.100.11 UP 00:01:15 S 192.168.100.11/32

Example 3-16 displays the NHRP cache for R31 and R41. Notice the NHRP flags router, rib, nho, and nhop. The rib nho flags indicate that the router has found an identical route in the routing table that belongs to a different protocol. NHRP has overridden the other protocol’s next-hop entry for the network by installing a next-hop shortcut in the routing table. The rib nhop flags indicate that the router has an explicit method to reach the tunnel IP address via an NBMA address and has an associated route installed in the routing table.

Example 3-16 NHRP Mapping with Spoke-to-Spoke Traffic

R31-Spoke# show ip nhrp detail

10.3.3.0/24 via 192.168.100.31

Tunnel100 created 00:01:44, expire 01:58:15

Type: dynamic, Flags: router unique local

NBMA address: 172.16.31.1

Preference: 255

(no-socket)

Requester: 192.168.100.41 Request ID: 3

10.4.4.0/24 via 192.168.100.41

Tunnel100 created 00:01:44, expire 01:58:15

Type: dynamic, Flags: router rib nho

NBMA address: 172.16.41.1

Preference: 255

192.168.100.11/32 via 192.168.100.11

Tunnel100 created 10:43:18, never expire

Type: static, Flags: used

NBMA address: 172.16.11.1

Preference: 255

192.168.100.41/32 via 192.168.100.41

Tunnel100 created 00:01:45, expire 01:58:15

Type: dynamic, Flags: router used nhop rib

NBMA address: 172.16.41.1

Preference: 255

R41-Spoke# show ip nhrp detail

10.3.3.0/24 via 192.168.100.31

Tunnel100 created 00:02:04, expire 01:57:55

Type: dynamic, Flags: router rib nho

NBMA address: 172.16.31.1

Preference: 255

10.4.4.0/24 via 192.168.100.41

Tunnel100 created 00:02:04, expire 01:57:55

Type: dynamic, Flags: router unique local

NBMA address: 172.16.41.1

Preference: 255

(no-socket)

Requester: 192.168.100.31 Request ID: 3

192.168.100.11/32 via 192.168.100.11

Tunnel100 created 10:43:42, never expire

Type: static, Flags: used

NBMA address: 172.16.11.1

Preference: 255

192.168.100.31/32 via 192.168.100.31

Tunnel100 created 00:02:04, expire 01:57:55

Type: dynamic, Flags: router used nhop rib

NBMA address: 172.16.31.1

Preference: 255

Example 3-16 uses the optional detail keyword for viewing the NHRP cache information. The 10.3.3.0/24 entry on R31 and the 10.4.4.0/24 entry on R41 list the device whose resolution request the router answered, along with the request ID that it received.

NHRP Route Table Manipulation

NHRP tightly interacts with the routing/forwarding tables and installs or modifies routes in the routing information base (RIB), also known as the routing table, as necessary. In the event that an entry exists with an exact match for the network and prefix length, NHRP overrides the existing next hop with a shortcut. The original protocol is still responsible for the prefix, but overwritten next-hop addresses are indicated in the routing table by the percent sign (%).
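The override rule can be expressed as a small decision: on an exact prefix match, keep the owning protocol’s route and attach an NHRP next hop; otherwise, add a new NHRP (H) route. The following Python sketch models that rule with a hypothetical Route class and install_nhrp_shortcut function; it illustrates the behavior described above and is not IOS code:

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Route:
    code: str                    # "D" = EIGRP, "H" = NHRP
    next_hop: str                # next hop supplied by the owning protocol
    override: str | None = None  # NHRP shortcut next hop ("%" in show ip route)


def install_nhrp_shortcut(rib: dict[str, Route], prefix: str, next_hop: str) -> None:
    """Apply an NHRP shortcut to a RIB keyed by prefix (hypothetical model)."""
    existing = rib.get(prefix)
    if existing is not None:
        # Exact network and prefix-length match: the original protocol keeps
        # the route, and only its next hop is overridden.
        existing.override = next_hop
    else:
        # No exact match: NHRP installs its own route.
        rib[prefix] = Route(code="H", next_hop=next_hop)


def forwarding_next_hop(route: Route) -> str:
    """Next hop actually used for forwarding: the override wins when present."""
    return route.override if route.override is not None else route.next_hop


# R31 after the spoke-to-spoke tunnel to R41 forms (compare Example 3-17).
rib = {"10.4.4.0/24": Route("D", "192.168.100.11")}
install_nhrp_shortcut(rib, "10.4.4.0/24", "192.168.100.41")
install_nhrp_shortcut(rib, "192.168.100.41/32", "192.168.100.41")
print(rib["10.4.4.0/24"].code, forwarding_next_hop(rib["10.4.4.0/24"]))  # D 192.168.100.41
print(rib["192.168.100.41/32"].code)  # H
```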

Example 3-17 provides the routing tables for R31 and R41. The EIGRP route to the remote spoke network (highlighted) still shows 192.168.100.11 as the next-hop address but includes a percent sign (%) to indicate a next-hop override. Notice that R31 installs the NHRP route to 192.168.100.41/32 and that R41 installs the NHRP route to 192.168.100.31/32 into the routing table as well.

Example 3-17 NHRP Routing Table Manipulation

R31-Spoke# show ip route

! Output omitted for brevity

Codes: L - local, C - connected, S - static, R - RIP, M - mobile, B - BGP

D - EIGRP, EX - EIGRP external, O - OSPF, IA - OSPF inter area

o - ODR, P - periodic downloaded static route, H - NHRP, l - LISP

+ - replicated route, % - next hop override, p - overrides from PfR

Gateway of last resort is 172.16.31.2 to network 0.0.0.0

S* 0.0.0.0/0 [1/0] via 172.16.31.2

10.0.0.0/8 is variably subnetted, 4 subnets, 2 masks

D 10.1.1.0/24 [90/26885120] via 192.168.100.11, 10:44:45, Tunnel100

C 10.3.3.0/24 is directly connected, GigabitEthernet0/2

D % 10.4.4.0/24 [90/52992000] via 192.168.100.11, 10:44:45, Tunnel100

172.16.0.0/16 is variably subnetted, 2 subnets, 2 masks

C 172.16.31.0/30 is directly connected, GigabitEthernet0/1

192.168.100.0/24 is variably subnetted, 3 subnets, 2 masks

C 192.168.100.0/24 is directly connected, Tunnel100

H 192.168.100.41/32 is directly connected, 00:03:21, Tunnel100

R41-Spoke# show ip route

! Output omitted for brevity

Gateway of last resort is 172.16.41.2 to network 0.0.0.0

S* 0.0.0.0/0 [1/0] via 172.16.41.2

10.0.0.0/8 is variably subnetted, 4 subnets, 2 masks

D 10.1.1.0/24 [90/26885120] via 192.168.100.11, 10:44:34, Tunnel100

D % 10.3.3.0/24 [90/52992000] via 192.168.100.11, 10:44:34, Tunnel100

C 10.4.4.0/24 is directly connected, GigabitEthernet0/2

172.16.0.0/16 is variably subnetted, 2 subnets, 2 masks

C 172.16.41.0/24 is directly connected, GigabitEthernet0/1

192.168.100.0/24 is variably subnetted, 3 subnets, 2 masks

C 192.168.100.0/24 is directly connected, Tunnel100

H 192.168.100.31/32 is directly connected, 00:03:10, Tunnel100

The command show ip route next-hop-override displays the routing table with the explicit NHRP shortcuts that were added. Example 3-18 displays the command’s output for our topology. Notice that the NHRP shortcut is indicated by the NHO marking and shown underneath the original entry with the correct next-hop IP address.

Example 3-18 Next-Hop Override Routing Table

R31-Spoke# show ip route next-hop-override

! Output omitted for brevity

Codes: L - local, C - connected, S - static, R - RIP, M - mobile, B - BGP

D - EIGRP, EX - EIGRP external, O - OSPF, IA - OSPF inter area

+ - replicated route, % - next hop override

Gateway of last resort is 172.16.31.2 to network 0.0.0.0

S* 0.0.0.0/0 [1/0] via 172.16.31.2

10.0.0.0/8 is variably subnetted, 4 subnets, 2 masks

D 10.1.1.0/24 [90/26885120] via 192.168.100.11, 10:46:38, Tunnel100

C 10.3.3.0/24 is directly connected, GigabitEthernet0/2

D % 10.4.4.0/24 [90/52992000] via 192.168.100.11, 10:46:38, Tunnel100

[NHO][90/255] via 192.168.100.41, 00:05:14, Tunnel100

172.16.0.0/16 is variably subnetted, 2 subnets, 2 masks

C 172.16.31.0/30 is directly connected, GigabitEthernet0/1

192.168.100.0/24 is variably subnetted, 3 subnets, 2 masks

C 192.168.100.0/24 is directly connected, Tunnel100

H 192.168.100.41/32 is directly connected, 00:05:14, Tunnel100

R41-Spoke# show ip route next-hop-override

! Output omitted for brevity

Gateway of last resort is 172.16.41.2 to network 0.0.0.0

S* 0.0.0.0/0 [1/0] via 172.16.41.2

10.0.0.0/8 is variably subnetted, 4 subnets, 2 masks

D 10.1.1.0/24 [90/26885120] via 192.168.100.11, 10:45:44, Tunnel100

D % 10.3.3.0/24 [90/52992000] via 192.168.100.11, 10:45:44, Tunnel100

[NHO][90/255] via 192.168.100.31, 00:04:20, Tunnel100

C 10.4.4.0/24 is directly connected, GigabitEthernet0/2

172.16.0.0/16 is variably subnetted, 2 subnets, 2 masks

C 172.16.41.0/24 is directly connected, GigabitEthernet0/1

192.168.100.0/24 is variably subnetted, 3 subnets, 2 masks

C 192.168.100.0/24 is directly connected, Tunnel100

H 192.168.100.31/32 is directly connected, 00:04:20, Tunnel100
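The relationship between an original entry and its override can be sketched in Python. This is a toy model of what the output above shows, not how IOS stores routes internally. The Route class and its field names are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Route:
    """Toy model of one entry shown by show ip route next-hop-override."""
    prefix: str
    protocol_next_hop: str          # next hop learned from EIGRP (the hub)
    nho: Optional[str] = None       # NHRP next-hop override (a spoke), if any

    def flag(self) -> str:
        # The "%" code marks an entry whose next hop has been overridden
        return "%" if self.nho is not None else ""

    def forwarding_next_hop(self) -> str:
        # The EIGRP entry stays in the table; an override, when present, is used instead
        return self.nho if self.nho is not None else self.protocol_next_hop


route = Route("10.4.4.0/24", "192.168.100.11")
print(route.flag(), route.forwarding_next_hop())   # before the shortcut: via R11
route.nho = "192.168.100.41"                       # NHRP shortcut to R41 added
print(route.flag(), route.forwarding_next_hop())   # after the shortcut: via R41
```

Keeping the protocol next hop alongside the override means that clearing route.nho in this model sends traffic back through the hub, which corresponds to falling back to the hub path once the shortcut is removed.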

Note

Review the output from Example 3-15 again. Notice that the DT2 entries represent the networks whose next-hop IP address has been overridden.

NHRP Route Table Manipulation with Summarization

Summarizing routes on WAN links improves stability by hiding network convergence events, which also improves scalability. This section demonstrates how NHRP interacts with the routing table when the exact route does not exist in it. R11’s EIGRP configuration now advertises the 10.0.0.0/8 summary prefix out of tunnel 100. The spoke routers use the summary route to forward traffic until NHRP establishes the spoke-to-spoke tunnel. After the spoke-to-spoke tunnels have initialized, NHRP installs the more specific entries into the routing table.
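The handover from the summary to the NHRP route works because forwarding uses the longest-prefix match. The following is a minimal Python sketch using the standard ipaddress module; the (prefix, next hop, code) tuple layout is an assumption for illustration, and the entries are taken from R31’s routing tables in this section.

```python
import ipaddress


def lookup(rib, destination):
    """Return the (prefix, next_hop, code) entry with the longest matching prefix."""
    dest = ipaddress.ip_address(destination)
    matches = [entry for entry in rib if dest in ipaddress.ip_network(entry[0])]
    if not matches:
        return None
    # The longest prefix wins, regardless of the order of entries in the table
    return max(matches, key=lambda entry: ipaddress.ip_network(entry[0]).prefixlen)


# R31's relevant routes before the spoke-to-spoke tunnel exists
rib = [
    ("0.0.0.0/0", "172.16.31.2", "S"),
    ("10.0.0.0/8", "192.168.100.11", "D"),          # summary advertised by R11
    ("10.3.3.0/24", "GigabitEthernet0/2", "C"),
]
print(lookup(rib, "10.4.4.1"))                      # matches the summary via R11

# NHRP installs a more specific route once the shortcut is resolved
rib.append(("10.4.4.0/24", "192.168.100.41", "H"))
print(lookup(rib, "10.4.4.1"))                      # now matches the /24 via R41
```

No routing protocol change is needed for the shortcut to take effect: the /24 simply outranks the /8 for destinations inside 10.4.4.0/24, while every other 10.x destination still follows the summary through the hub.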

Example 3-19 displays the change to R11’s EIGRP configuration for summarizing the 10.0.0.0/8 networks out of the tunnel 100 interface.

Example 3-19 R11’s Summarization Configuration

R11-Hub

router eigrp IWAN

address-family ipv4 unicast autonomous-system 100

af-interface Tunnel100

summary-address 10.0.0.0 255.0.0.0

hello-interval 20

hold-time 60

no split-horizon

exit-af-interface

!

topology base

exit-af-topology

network 10.0.0.0

network 192.168.100.0

exit-address-family

The NHRP cache is cleared on all routers with the command clear ip nhrp, which removes all NHRP entries. Example 3-20 provides the routing tables for R11, R31, and R41. Notice that R31 and R41 now rely only on the 10.0.0.0/8 summary route via R11 for initial connectivity among all three routers.

Example 3-20 Routing Table with Summarization

R11-Hub# show ip route

! Output omitted for brevity

Gateway of last resort is 172.16.11.2 to network 0.0.0.0

S* 0.0.0.0/0 [1/0] via 172.16.11.2

10.0.0.0/8 is variably subnetted, 5 subnets, 3 masks

D 10.0.0.0/8 is a summary, 00:28:44, Null0

C 10.1.1.0/24 is directly connected, GigabitEthernet0/2

D 10.3.3.0/24 [90/27392000] via 192.168.100.31, 11:18:13, Tunnel100

D 10.4.4.0/24 [90/27392000] via 192.168.100.41, 11:18:13, Tunnel100

172.16.0.0/16 is variably subnetted, 2 subnets, 2 masks

C 172.16.11.0/30 is directly connected, GigabitEthernet0/1

192.168.100.0/24 is variably subnetted, 2 subnets, 2 masks

C 192.168.100.0/24 is directly connected, Tunnel100

R31-Spoke# show ip route

! Output omitted for brevity

Gateway of last resort is 172.16.31.2 to network 0.0.0.0

S* 0.0.0.0/0 [1/0] via 172.16.31.2

10.0.0.0/8 is variably subnetted, 3 subnets, 3 masks

D 10.0.0.0/8 [90/26885120] via 192.168.100.11, 00:29:28, Tunnel100

C 10.3.3.0/24 is directly connected, GigabitEthernet0/2

172.16.0.0/16 is variably subnetted, 2 subnets, 2 masks

C 172.16.31.0/30 is directly connected, GigabitEthernet0/1

192.168.100.0/24 is variably subnetted, 2 subnets, 2 masks

C 192.168.100.0/24 is directly connected, Tunnel100

R41-Spoke# show ip route

! Output omitted for brevity

Gateway of last resort is 172.16.41.2 to network 0.0.0.0

S* 0.0.0.0/0 [1/0] via 172.16.41.2

10.0.0.0/8 is variably subnetted, 3 subnets, 3 masks

D 10.0.0.0/8 [90/26885120] via 192.168.100.11, 00:29:54, Tunnel100

C 10.4.4.0/24 is directly connected, GigabitEthernet0/2

172.16.0.0/16 is variably subnetted, 2 subnets, 2 masks

C 172.16.41.0/24 is directly connected, GigabitEthernet0/1

192.168.100.0/24 is variably subnetted, 2 subnets, 2 masks

C 192.168.100.0/24 is directly connected, Tunnel100

Traffic was re-initiated from 10.3.3.1 to 10.4.4.1 to initialize the spoke-to-spoke tunnels. R11 still sends the NHRP redirect for hairpinned traffic, and the process completes as shown earlier, except that NHRP installs a more specific route into each spoke’s routing table (10.4.4.0/24 on R31 and 10.3.3.0/24 on R41). The NHRP-injected routes are indicated by the ‘H’ code, as shown in Example 3-21.

Example 3-21 Routing Table with Summarization and Spoke-to-Spoke Traffic

R31-Spoke# show ip route

! Output omitted for brevity

Codes: L - local, C - connected, S - static, R - RIP, M - mobile, B - BGP

D - EIGRP, EX - EIGRP external, O - OSPF, IA - OSPF inter area

o - ODR, P - periodic downloaded static route, H - NHRP, l - LISP

Gateway of last resort is 172.16.31.2 to network 0.0.0.0

S* 0.0.0.0/0 [1/0] via 172.16.31.2

10.0.0.0/8 is variably subnetted, 4 subnets, 3 masks

D 10.0.0.0/8 [90/26885120] via 192.168.100.11, 00:31:06, Tunnel100

C 10.3.3.0/24 is directly connected, GigabitEthernet0/2

H 10.4.4.0/24 [250/255] via 192.168.100.41, 00:00:22, Tunnel100

172.16.0.0/16 is variably subnetted, 2 subnets, 2 masks

C 172.16.31.0/30 is directly connected, GigabitEthernet0/1

192.168.100.0/24 is variably subnetted, 3 subnets, 2 masks

C 192.168.100.0/24 is directly connected, Tunnel100

H 192.168.100.41/32 is directly connected, 00:00:22, Tunnel100

R41-Spoke# show ip route

! Output omitted for brevity

Gateway of last resort is 172.16.41.2 to network 0.0.0.0

S* 0.0.0.0/0 [1/0] via 172.16.41.2

10.0.0.0/8 is variably subnetted, 4 subnets, 3 masks

D 10.0.0.0/8 [90/26885120] via 192.168.100.11, 00:31:24, Tunnel100

H 10.3.3.0/24 [250/255] via 192.168.100.31, 00:00:40, Tunnel100

C 10.4.4.0/24 is directly connected, GigabitEthernet0/2

172.16.0.0/16 is variably subnetted, 2 subnets, 2 masks

C 172.16.41.0/24 is directly connected, GigabitEthernet0/1

192.168.100.0/24 is variably subnetted, 3 subnets, 2 masks

C 192.168.100.0/24 is directly connected, Tunnel100

H 192.168.100.31/32 is directly connected, 00:00:40, Tunnel100
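The hub’s trigger for the redirect is the hairpin itself: a packet leaves R11 on the same tunnel interface it arrived on. The sketch below is a simplification; the function name, its arguments, and the shape of the returned dict are hypothetical, redirect_interfaces stands for the tunnel interfaces on which NHRP redirects are enabled, and in this model the redirect is simply addressed to the spoke the packet came from.

```python
def hub_forward(dst, from_peer, in_interface, out_interface, redirect_interfaces):
    """Toy model of R11's check for one data packet it is forwarding.

    Returns a redirect (as a dict) if the packet hairpins on a tunnel with
    redirects enabled, otherwise None.
    """
    hairpin = in_interface == out_interface
    if hairpin and in_interface in redirect_interfaces:
        # Hint to the sending spoke that a direct path toward dst may exist
        return {"to": from_peer, "about": dst}
    return None


# 10.3.3.1 -> 10.4.4.1 enters R11 on Tunnel100 from R31 and leaves on Tunnel100 toward R41
print(hub_forward("10.4.4.1", "192.168.100.31", "Tunnel100", "Tunnel100", {"Tunnel100"}))

# Traffic from a spoke to R11's own LAN (10.1.1.0/24) does not hairpin, so no redirect
print(hub_forward("10.1.1.1", "192.168.100.31", "Tunnel100", "GigabitEthernet0/2", {"Tunnel100"}))
```

Summarization does not change this trigger: the packet still hairpins through R11 while only the summary exists, so the redirect and the subsequent resolution happen exactly as before.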

Example 3-22 displays the DMVPN tunnels after R31 and R41 have initialized the spoke-to-spoke tunnel with summarization on R11. Notice that both of the new spoke-to-spoke tunnel entries are DT1 because NHRP installed them as new routes in the RIB. If the exact routes had already existed in the routing table (as shown in Example 3-17), NHRP would have overridden the next-hop address and used a DT2 entry instead.

Example 3-22 Detailed DMVPN Tunnel Output

R31-Spoke# show dmvpn detail

! Output omitted for brevity

Legend: Attrb --> S - Static, D - Dynamic, I - Incomplete

N - NATed, L - Local, X - No Socket

T1 - Route Installed, T2 - Nexthop-override

C - CTS Capable

# Ent --> Number of NHRP entries with same NBMA peer

NHS Status: E --> Expecting Replies, R --> Responding, W --> Waiting

UpDn Time --> Up or Down Time for a Tunnel

==========================================================================

IPv4 NHS:

192.168.100.11 RE NBMA Address: 172.16.11.1 priority = 0 cluster = 0

Type:Spoke, Total NBMA Peers (v4/v6): 3

# Ent Peer NBMA Addr Peer Tunnel Add State UpDn Tm Attrb Target Network

----- --------------- --------------- ----- -------- ----- -----------------

1 172.16.31.1 192.168.100.31 UP 00:01:17 DLX 10.3.3.0/24

2 172.16.41.1 192.168.100.41 UP 00:01:17 DT1 10.4.4.0/24

172.16.41.1 192.168.100.41 UP 00:01:17 DT1 192.168.100.41/32

1 172.16.11.1 192.168.100.11 UP 11:21:33 S 192.168.100.11/32

R41-Spoke# show dmvpn detail

! Output omitted for brevity

IPv4 NHS:

192.168.100.11 RE NBMA Address: 172.16.11.1 priority = 0 cluster = 0

Type:Spoke, Total NBMA Peers (v4/v6): 3

# Ent Peer NBMA Addr Peer Tunnel Add State UpDn Tm Attrb Target Network

----- --------------- --------------- ----- -------- ----- -----------------

2 172.16.31.1 192.168.100.31 UP 00:01:56 DT1 10.3.3.0/24

172.16.31.1 192.168.100.31 UP 00:01:56 DT1 192.168.100.31/32

1 172.16.41.1 192.168.100.41 UP 00:01:56 DLX 10.4.4.0/24

1 172.16.11.1 192.168.100.11 UP 11:22:09 S 192.168.100.11/32
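The DT1 versus DT2 outcome can be reduced to one question: does the exact prefix already exist in the routing table? A minimal Python sketch of that decision, assuming a dict-based table; the function name and keys are hypothetical, and the model ignores details such as administrative distance and entry timers.

```python
def install_shortcut(rib, prefix, spoke_next_hop):
    """Toy model: add an NHRP shortcut for prefix and return the DMVPN attribute.

    rib maps prefix strings to dicts with "code", "next_hop", and "nho" keys.
    """
    entry = rib.get(prefix)
    if entry is not None and entry["code"] != "H":
        # The exact prefix is already routed (e.g., by EIGRP): override its next hop
        entry["nho"] = spoke_next_hop
        return "DT2"
    # No exact route exists (only a covering summary, for example): add an H route.
    # An existing H route for the same prefix is simply refreshed.
    rib[prefix] = {"code": "H", "next_hop": spoke_next_hop, "nho": None}
    return "DT1"


# Without summarization (Example 3-17): R31 already has 10.4.4.0/24 from EIGRP
rib_specific = {"10.4.4.0/24": {"code": "D", "next_hop": "192.168.100.11", "nho": None}}
print(install_shortcut(rib_specific, "10.4.4.0/24", "192.168.100.41"))

# With summarization (Example 3-21): R31 has only the 10.0.0.0/8 summary
rib_summary = {"10.0.0.0/8": {"code": "D", "next_hop": "192.168.100.11", "nho": None}}
print(install_shortcut(rib_summary, "10.4.4.0/24", "192.168.100.41"))
```

In both cases the summary or EIGRP entry is left untouched as a fallback; only the way the shortcut is attached differs.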

This section demonstrated the process for establishing spoke-to-spoke DMVPN tunnels and the methods by which NHRP interacts with the routing table. Phase 3 DMVPN fully supports summarization, which should be used to minimize the number of prefixes advertised across the WAN.

Problems with Overlay Networks

Two problems are frequently found with tunnel or overlay networks: recursive routing and outbound interface selection. The following sections explain these problems and provide solutions to them.

Recursive Routing Problems

Explicit care must be taken when running a routing protocol over a network tunnel. If a router tries to reach the remote router’s encapsulating interface (its transport IP address) through the tunnel (the overlay network), the encapsulated packets have no usable path to the tunnel endpoint and the tunnel fails. This is a common issue when the transport network is advertised into the same routing protocol that runs on the overlay network.
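The failure condition can be stated as a single test: after the routing lookup, does the route to the tunnel’s own destination point back into the tunnel? A minimal Python sketch of that test using the standard ipaddress module. The table is reduced to (prefix, outgoing interface) pairs, the addresses follow the topology in Figure 3-6, and R31’s transport address 100.64.31.1 is a hypothetical value chosen for illustration.

```python
import ipaddress


def egress_interface(rib, destination):
    """Return the outgoing interface of the longest-prefix match, or None."""
    dest = ipaddress.ip_address(destination)
    matches = [(ipaddress.ip_network(prefix), intf) for prefix, intf in rib
               if dest in ipaddress.ip_network(prefix)]
    if not matches:
        return None
    return max(matches, key=lambda m: m[0].prefixlen)[1]


def is_recursive(rib, tunnel, tunnel_destination):
    # Recursive if the route to the tunnel's own destination points back into the tunnel
    return egress_interface(rib, tunnel_destination) == tunnel


healthy = [
    ("100.64.11.0/24", "GigabitEthernet0/1"),
    ("100.64.31.0/24", "GigabitEthernet0/1"),   # transport route learned via OSPF
    ("10.3.3.0/24", "Tunnel100"),                # LAN route learned via EIGRP over the tunnel
]
print(is_recursive(healthy, "Tunnel100", "100.64.31.1"))   # False

broken = [
    ("100.64.11.0/24", "GigabitEthernet0/1"),
    ("100.64.31.0/24", "Tunnel100"),             # transport route now learned over the tunnel
    ("10.3.3.0/24", "Tunnel100"),
]
print(is_recursive(broken, "Tunnel100", "100.64.31.1"))    # True
```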

Figure 3-6 demonstrates a simple GRE tunnel between R11 and R31. R11, R31, and the SP routers are running OSPF on the 100.64.0.0/16 transport networks. R11 and R31 are running EIGRP on the 10.0.0.0/8 LAN and 192.168.100.0/24 tunnel network.

Example 3-23 provides R11’s routing table with everything working properly.

Example 3-23 R11 Routing Table with GRE Tunnel

R11# show ip route

! Output omitted for brevity

10.0.0.0/8 is variably subnetted, 3 subnets, 2 masks

C 10.1.1.0/24 is directly connected, GigabitEthernet0/2

D 10.3.3.0/24 [90/25610240] via 192.168.100.31, 00:02:35, Tunnel100

100.0.0.0/8 is variably subnetted, 3 subnets, 2 masks

C 100.64.11.0/24 is directly connected, GigabitEthernet0/1

O 100.64.31.0/24 [110/2] via 100.64.11.2, 00:03:11, GigabitEthernet0/1

192.168.100.0/24 is variably subnetted, 2 subnets, 2 masks

C 192.168.100.0/24 is directly connected, Tunnel100

A junior network administrator has accidentally added the 100.64.0.0/16 network interfaces to EIGRP on R11 and R31. The SP router is not running EIGRP, so no adjacency forms with it, but R11 and R31 now advertise their transport networks to each other across the tunnel. Each router learns the remote transport network through EIGRP (administrative distance 90), which is preferred over the OSPF route (administrative distance 110). The routers then try to use the tunnel to reach the tunnel endpoint address, which is not possible. This scenario is known as “recursive routing.”

The router detects the recursive routing and logs a syslog message, as shown in Example 3-24. The tunnel is brought down, which tears down the EIGRP neighbor adjacency, and R11 and R31 then find each other using OSPF again. The tunnel is reestablished, EIGRP re-forms the adjacency, and the problem repeats over and over again.

Example 3-24 Recursive Routing Syslog Messages on R11 for GRE Tunnels

00:49:52: %DUAL-5-NBRCHANGE: EIGRP-IPv4 100: Neighbor 192.168.100.31 (Tunnel100) is up: new adjacency

00:49:52: %ADJ-5-PARENT: Midchain parent maintenance for IP midchain out of Tunnel100 - looped chain attempting to stack

00:49:57: %TUN-5-RECURDOWN: Tunnel100 temporarily disabled due to recursive routing

00:49:57: %LINEPROTO-5-UPDOWN: Line protocol on Interface Tunnel100, changed state to down

00:49:57: %DUAL-5-NBRCHANGE: EIGRP-IPv4 100: Neighbor 192.168.100.31 (Tunnel100) is down: interface down

00:50:12: %LINEPROTO-5-UPDOWN: Line protocol on Interface Tunnel100, changed state to up

00:50:15: %DUAL-5-NBRCHANGE: EIGRP-IPv4 100: Neighbor 192.168.100.31 (Tunnel100) is up: new adjacency

Note

Only point-to-point GRE tunnels generate the syslog message “temporarily disabled due to recursive routing.” Both DMVPN and point-to-point GRE tunnels generate “looped chain attempting to stack.”
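The repeating pattern in Example 3-24 is a feedback loop: the tunnel being up is exactly what lets EIGRP learn the route that breaks it. A toy Python model of that loop follows. It collapses timers and detection into discrete steps, uses the default administrative distances for internal EIGRP (90) and OSPF (110), and its function names and the transport_in_eigrp flag are hypothetical; it is not how IOS detects recursion.

```python
# Default IOS administrative distances for the two protocols involved
ADMIN_DISTANCE = {"eigrp": 90, "ospf": 110}


def tunnel_stays_up(tunnel_up, transport_in_eigrp):
    """One step of the loop on R11 for R31's transport prefix (100.64.31.0/24)."""
    candidates = [("ospf", "GigabitEthernet0/1")]
    if tunnel_up and transport_in_eigrp:
        # With the tunnel up, EIGRP also learns the remote transport prefix across it
        candidates.append(("eigrp", "Tunnel100"))
    _, interface = min(candidates, key=lambda c: ADMIN_DISTANCE[c[0]])
    # The tunnel survives only if its destination is not reached through itself
    return interface != "Tunnel100"


def simulate(steps, transport_in_eigrp):
    state = True
    history = [state]
    for _ in range(steps):
        state = tunnel_stays_up(state, transport_in_eigrp)
        history.append(state)
    return history


print(simulate(4, transport_in_eigrp=True))    # [True, False, True, False, True]
print(simulate(4, transport_in_eigrp=False))   # [True, True, True, True, True]
```

The first run reproduces the up/down/up cycle from the syslog; the second shows that keeping the transport networks out of EIGRP breaks the loop.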

Recursive routing problems are remediated by preventing the tunnel endpoint address from being advertised across the tunnel network. In this topology, removing the transport network interfaces from EIGRP stabilizes the tunnel.
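The remediation rule lends itself to a simple pre-deployment check: no prefix advertised into the overlay routing protocol should cover a tunnel destination. A minimal Python sketch using the standard ipaddress module; the function name is hypothetical, the inputs are assumed to be already expressed as prefixes (the function does not parse IOS configuration), and 100.64.31.1 is a hypothetical transport address for R31.

```python
import ipaddress


def exposed_tunnel_destinations(advertised_prefixes, tunnel_destinations):
    """Return tunnel destinations covered by any prefix advertised into the overlay protocol."""
    networks = [ipaddress.ip_network(p) for p in advertised_prefixes]
    return sorted(dest for dest in tunnel_destinations
                  if any(ipaddress.ip_address(dest) in net for net in networks))


tunnel_destinations = ["100.64.31.1"]            # hypothetical R31 transport address

misconfigured = ["10.0.0.0/8", "192.168.100.0/24", "100.64.0.0/16"]
print(exposed_tunnel_destinations(misconfigured, tunnel_destinations))   # ['100.64.31.1']

corrected = ["10.0.0.0/8", "192.168.100.0/24"]
print(exposed_tunnel_destinations(corrected, tunnel_destinations))       # []
```

An empty result means none of the overlay advertisements can pull a tunnel endpoint into the tunnel; any address returned points at the prefix that needs to be removed or filtered.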

Outbound Interface Selection