Chapter 8. Network and Host Profiling

This chapter covers the following topics:

Identifying elements used for network profiling

Understanding network throughput

How session duration is used for profiling

Monitoring port usage

Understanding and controlling critical asset address space

Identifying elements used for server profiling

Learning about listening ports

Identifying logged-in users and used service accounts

Monitoring running processes, tasks, and applications on servers

Profiling is the process of collecting data about a target to better understand the type of system, who is using the system, and its intentions while on the network. Collecting more profiling data typically allows a better explanation of the target being evaluated. For example, basic information about a system found on your network might tell you only that a computer is using an Ethernet connection. This limited data would make enforcing access control policies difficult. More data about the target system could reveal that it is a corporate-issued Windows laptop used by a trusted employee named Joey. From an access control perspective, Joey and his system should be treated differently from a Linux laptop run by an unknown guest (assuming that this sample organization’s standard-issue laptop is Windows based). Knowing the types of devices on your network also helps inform future business decisions, network and throughput sizing, and what your security policy should look like.

The focus of this chapter is on understanding how network and host profiling are accomplished. The results may be used to determine the access rights that will be granted to the system, identify potentially malicious behavior, troubleshoot, audit for compliance, and so on. The SECOPS exam focuses on assessing devices based on the network traffic they produce, also known as their network footprint. However, we will also touch on profiling devices from the host level (meaning what is installed on the device) to round out the profiling concepts covered.

Let’s start off by testing your understanding of profiling concepts.

“Do I Know This Already?” Quiz

The “Do I Know This Already?” quiz allows you to assess whether you should read this entire chapter thoroughly or jump to the “Exam Preparation Tasks” section. If you are in doubt about your answers to these questions or your own assessment of your knowledge of the topics, read the entire chapter. Table 8-1 lists the major headings in this chapter and their corresponding “Do I Know This Already?” quiz questions. You can find the answers in Appendix A, “Answers to the ‘Do I Know This Already?’ Quizzes and Q&A.”

1. Which of the following is true about NetFlow?

a. NetFlow typically provides more details than sFlow.

b. NetFlow typically contains more details than packet capturing.

c. NetFlow is not available in virtual networking environments.

d. NetFlow is only used as a network performance measurement.

2. Which of the following is not used to establish a network baseline?

a. Determining the time to collect data

b. Selecting the type of data to collect

c. Developing a list of users on the network

d. Identifying the devices that can provide data

3. Which of the following is an advantage of port security over automated NAC?

a. Device profiling

b. Ease of deployment

c. Management requirements

d. Technology cost

4. What is the best definition of session duration in terms of network profiling?

a. The total time the user or device requests services from the network

b. The total time the user connects to the network

c. The total time a user or device connects to a network and later disconnects from it

d. The total time the user logs in to a system and logs out of the system

5. Which of the following is not a tool or option for monitoring a host session on the network?

a. Use firewall logs to monitor user connections to the network

b. Use NetFlow to monitor user connections to the network

c. Capture network packets and monitor user connections to the network

d. Use SNMP tools to monitor user connections to the network

6. Which of the following is not true about listening ports?

a. A listening port is a port held open by a running application in order to accept inbound connections.

b. Seeing traffic from a known port will identify the associated service.

c. Listening ports use values that can range between 1 and 65535.

d. TCP port 80 is commonly known for Internet traffic.

7. A traffic substitution and insertion attack does which of the following?

a. Substitutes the traffic with data in a different format but with the same meaning

b. Substitutes the payload with data in the same format but with a different meaning

c. Substitutes the payload with data in a different format but with the same meaning

d. Substitutes the traffic with data in the same format but with a different meaning

8. Which of the following is not a method for identifying running processes?

a. Reading network traffic from a SPAN port with the proper technology

b. Reading port security logs

c. Reading traffic from inline with the proper technology

d. Using port scanner technology

9. Which of the following is not a tool that can identify applications on hosts?

a. Web proxy

b. Application layer firewall

c. Using NBAR

d. Using NetFlow

10. Which of the following statements is incorrect?

a. Latency is a delay in throughput detected at the gateway of the network.

b. Throughput is typically measured in bandwidth.

c. A valley is when there is an unusually low amount of throughput compared to the normal baseline.

d. A peak is when there is a spike in throughput compared to the normal baseline.

Foundation Topics

Network Profiling

Profiling involves identifying something based on various characteristics specified in a detector. Typically, this is a weighted system, meaning that with more data and higher-quality data, the target system can be profiled more accurately. For example, generic system data might only identify that a system is possibly an Apple product, whereas more detailed information (such as application or network protocol data) could distinguish an iPad from an iPhone. The results come down to the detectors, the data quality, and how the detectors are used.

The first section of this chapter focuses on network profiling concepts. These are methods used to capture network-based data that can reveal how systems are functioning on the network. The areas of focus for this section are throughput, used ports, session duration, and address space. Throughput directly impacts network performance. For most networks, it is mission critical not to exceed the available throughput; otherwise, users will be unhappy with overall network performance. Network access can come from a LAN, VPN, or wireless connection; however, that traffic eventually reaches a network port. This means that by monitoring ports, you can tell what is accessing your network regardless of the connection type. Session duration refers to how long systems are on the network or how long users are accessing a system. This is important for monitoring potential security issues as well as for baselining user and server access trends. Lastly, address space is important to ensure critical systems are given priority over other systems when you are distributing network resources.

Let’s start off by looking into profiling network throughput.

Throughput

Throughput is the amount of traffic that can cross a specific point in the network, and at what speed. If throughput degrades, network performance suffers, and users will most likely complain. Administrators typically configure alarms that trigger when throughput utilization reaches a level of concern. Normal throughput levels are typically recorded as a network baseline, and a deviation from that baseline could indicate a throughput problem. Baselines are also useful for comparing real throughput to what your service provider promises. Having a network baseline may sound like a great idea; however, the challenge is establishing what your organization’s real network baseline should be.

The first step in establishing a network baseline is identifying the devices on your network that can detect network traffic, such as routers and switches. Once you have an inventory, the next step is identifying what type of data can be pulled from those devices. The most commonly desired data is network utilization; however, it should not be your only source for building a network baseline. Network utilization has limitations, such as not revealing what devices are actually doing on the network.

There are two common tactics for collecting network data for traffic analytics. The first approach is capturing packets and then analyzing the data. The second approach is capturing network flow records, such as NetFlow, as explained in Chapter 4, “NetFlow for Cybersecurity.” Both approaches have benefits and disadvantages. Packet capturing can provide more details than NetFlow; however, this approach requires storing packets as well as a method to properly analyze what is captured. Capturing packets can quickly increase storage requirements, making this option financially challenging for some organizations. In other cases, the details provided by capturing packets are necessary for establishing baselines or meeting security requirements, making packet capture the better approach compared with the limited data NetFlow can provide. For digital forensics requirements, capturing packets will most likely be the way to go, because an investigation needs that level of detail to validate findings during the legal process.

NetFlow involves looking at network records rather than storing the data packets themselves. This approach dramatically reduces storage needs and can be quicker to analyze. NetFlow can provide a lot of useful data; however, that data will not be as detailed as capturing the actual packets. A useful analogy for comparing packet capture to NetFlow is monitoring a person’s phone. Capturing packets would be similar to recording every call on the phone and spending time listening to each call to determine whether there is a performance or security incident. This obviously would be time consuming and require storage for all the calls. Capturing NetFlow would be similar to monitoring the call records to and from the phone, meaning less research and smaller storage requirements. Having the phone call (packet capture) would mean having details about the incident, whereas the call record (NetFlow) would show possible issues, such as multiple calls happening at 3 a.m. between the person and another party. In this case, you would have details such as the phone numbers, time of call, and length of call. If these call records are between a married person and somebody who is not that person’s significant other, it could indicate a problem, or it could simply be planning for a surprise party. The point is that NetFlow provides a way to identify areas of concern quickly, whereas packet capture identifies concerns and also provides details about the event, because the actual data is analyzed rather than records of the data. Also note that some vendors offer hybrid solutions that use NetFlow but start capturing packets upon receiving an alarm. One example of a hybrid technology is Cisco’s StealthWatch technology.
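To make the storage difference concrete, the following Python sketch collapses individual packets into flow records keyed by the classic 5-tuple (source and destination address, source and destination port, protocol). It illustrates the flow concept only and is not how any NetFlow exporter is implemented; traditional NetFlow keys also include fields such as ToS and input interface, and the Packet type here is hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    src_ip: str
    dst_ip: str
    src_port: int
    dst_port: int
    protocol: str
    length: int        # bytes
    timestamp: float   # seconds


def packets_to_flows(packets):
    """Collapse packets into flow records keyed by the 5-tuple.

    Only metadata survives (packet count, byte count, first/last seen);
    payloads are discarded, which is why flow data is so much smaller.
    """
    flows = {}
    for p in packets:
        key = (p.src_ip, p.dst_ip, p.src_port, p.dst_port, p.protocol)
        record = flows.setdefault(
            key, {"packets": 0, "bytes": 0,
                  "first": p.timestamp, "last": p.timestamp})
        record["packets"] += 1
        record["bytes"] += p.length
        record["first"] = min(record["first"], p.timestamp)
        record["last"] = max(record["last"], p.timestamp)
    return flows


capture = [
    Packet("10.0.0.5", "192.0.2.10", 51000, 443, "TCP", 1500, 0.0),
    Packet("10.0.0.5", "192.0.2.10", 51000, 443, "TCP", 1500, 0.5),
    Packet("10.0.0.5", "192.0.2.10", 51000, 443, "TCP", 400, 1.2),
    Packet("10.0.0.7", "198.51.100.3", 53000, 53, "UDP", 80, 2.0),
]
flows = packets_to_flows(capture)
print(len(capture), "packets ->", len(flows), "flow records")  # 4 packets -> 2 flow records
```

Like the call record in the analogy, each flow keeps who talked to whom, when, and how much, but nothing about what was said.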

Once you have your source and data type selected, the final task for establishing a baseline is determining the proper length of time to capture data. This is not an exact science; however, many experts will suggest at least a week to allow for enough data to accommodate trends found within most networks. This requirement can change depending on many factors, such as how the business model of an organization could have different levels of traffic at different times of the year. A simple example of this concept would be how retailers typically see higher amounts of traffic during holiday seasons, meaning a baseline sample during peak and nonpeak business months would most likely be different. Network spikes must be accounted for if they are perceived to be part of the normal traffic, which is important if the results of the baseline are to be considered a true baseline of the environment. Time also impacts results in that any baseline taken today may be different in the future as the network changes, making it important to retest the baseline after a certain period of time.

Most network administrators’ goal for understanding throughput is to establish a network baseline so throughput can later be monitored with alarms that trigger at the sign of a throughput valley or peak. Peaks are spikes of throughput that exceed the normal baseline, whereas valleys are periods of time that are below the normal baseline. Peaks can lead to problems, such as causing users to experience long delays when accessing resources, triggering redundant systems to switch to backups, breaking applications that require a certain data source, and so on. A large number of valleys could indicate that a part of the network is underutilized, representing a waste of resources or possible failure of a system that normally utilizes certain resources.
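A simple way to turn a baseline into peak and valley alarms is to treat anything more than k standard deviations from the baseline mean as abnormal. The Python sketch below assumes throughput samples in Mbps and a threshold of k = 2; both the statistic and the threshold are illustrative choices rather than a standard, and commercial tools often use time-of-day-aware baselines instead.

```python
from statistics import mean, pstdev


def build_baseline(samples):
    """Summarize historical throughput samples as (mean, population std dev)."""
    return mean(samples), pstdev(samples)


def classify(sample, baseline, k=2.0):
    """Label a new sample 'peak', 'valley', or 'normal' relative to the baseline.

    k (the width of the normal band in standard deviations) is an assumed tuning knob.
    """
    avg, sd = baseline
    if sample > avg + k * sd:
        return "peak"
    if sample < avg - k * sd:
        return "valley"
    return "normal"


# Hypothetical hourly throughput history, in Mbps
history_mbps = [100, 110, 95, 105, 90, 100]
baseline = build_baseline(history_mbps)
print(classify(150, baseline), classify(60, baseline), classify(104, baseline))
# peak valley normal
```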

Many tools are available for viewing the total throughput on a network. These tools can typically help develop a network baseline as well as account for predicted peaks and valleys. One common metric used by throughput-measuring tools is bandwidth, meaning the data rate supported by a network connection or interface. Bandwidth, expressed in bits per second (bps), is affected by the capacity of the link as well as by latency factors, meaning things that slow down traffic.
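Bandwidth figures usually come from interface byte counters polled at two points in time. The arithmetic is shown in this Python sketch: convert the byte delta to bits, divide by the polling interval, and compare the result with the link capacity. The function names are hypothetical, and the wraparound handling assumes the counter wrapped at most once between polls.

```python
def bits_per_second(bytes_start, bytes_end, seconds, counter_bits=64):
    """Average rate between two byte-counter readings.

    The modulo handles a single counter wrap (e.g., a 32-bit counter rolling over).
    """
    if seconds <= 0:
        raise ValueError("polling interval must be positive")
    delta_bytes = (bytes_end - bytes_start) % (2 ** counter_bits)
    return delta_bytes * 8 / seconds


def utilization_pct(bps, link_capacity_bps):
    """Percentage of the link's capacity consumed at this rate."""
    return 100.0 * bps / link_capacity_bps


# 75,000,000 bytes in 60 seconds on a 100-Mbps link
rate = bits_per_second(0, 75_000_000, 60)
print(rate, utilization_pct(rate, 100_000_000))  # 10000000.0 10.0
```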

Best practice for building a baseline is capturing bandwidth from various parts of the network to account for the many factors that impact bandwidth. The most common place to look at throughput is the gateway router, meaning the place where traffic enters and leaves the network. However, throughput issues can occur anywhere along the path of traffic. A sample taken only at the gateway is useful for understanding total throughput for data entering and leaving the network, but it is not effective for troubleshooting issues found within the network. For example, network congestion could occur between a host and a network relay point before the data reaches the gateway. The gateway would then show lower throughput than expected, and an administrator who measures only there, without validating the entire path between the host and the gateway, could misdiagnose where the problem lies.

Measuring throughput across the network can lead to the following improvements:

Understanding the impact of applications on the network

Reducing peak traffic by utilizing network optimization tools to accommodate for latency-generating elements such as bandwidth hogs

Troubleshooting and understanding network pain points, meaning areas that cause latency

Detecting unauthorized traffic

Using security and anomaly detection

Understanding network trends for segmentation and security planning

Validating whether quality of service settings are being utilized properly

Let’s look at some methods for measuring throughput.

Measuring Throughput

To capture packets and measure throughput, you need a tap on the network before you can start monitoring. Most tools that collect throughput leverage a single point configured to provide raw data, such as traffic pulled from a switch or router. If the access point for the traffic is a switch, a network port is typically configured as a Switched Port Analyzer (SPAN) port, sometimes also called port mirroring or port monitoring. The probe capturing data from a SPAN port can be local, or the SPAN data can be routed to a remote monitoring tool.

The following is an example of configuring a Cisco switch port as a SPAN port so that a collection tool can be set up to monitor throughput. The SPAN session for this example is ID 1 and is set to listen on the fastEthernet0/1 interface of the switch while exporting results to the fastEthernet0/10 interface of the switch. The first command clears any existing configuration for session 1, and the encapsulation dot1q keyword sends the mirrored frames out of the destination port with IEEE 802.1Q encapsulation. SPAN sessions can also be configured to capture more than one VLAN.

Switch(config)# no monitor session 1

Switch(config)# monitor session 1 source interface fastEthernet0/1

Switch(config)# monitor session 1 destination interface fastEthernet0/10 encapsulation dot1q

Switch(config)# end

Another method for capturing packets is to place a capturing device in the line of traffic. This is a common tactic for monitoring throughput from a routing point or security checkpoint, such as a firewall configured to have all traffic cross it for security purposes. Many current firewall solutions offer a range of throughput-monitoring capabilities that cover the entire network protocol stack. Figure 8-1 provides an example of Cisco Firepower application layer monitoring showing various widgets focused on throughput.

Routers can be leveraged to view current throughput levels. To see the current state of an interface on a standard Cisco IOS router, simply use the show interface command to display lots of data, including bps, packets sent, and so on. Some sample numbers from running this command might include the following information:

5 minute input rate 131000 bits/sec, 124 packets/sec

5 minute output rate 1660100 bits/sec, 214 packets/sec

This output provides details on throughput at that moment in time. Taking the average of samples across a span of time would be one method of calculating a possible network baseline.
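Polling that output on a schedule and averaging it is easy to automate. The Python sketch below assumes you already have the text of show interface from each poll (how you collect it, for example over SSH, is out of scope), extracts the 5-minute input and output rates with a regular expression, and averages them. The function names are hypothetical, and the pattern assumes the default 5-minute load interval.

```python
import re

# Assumes the default 5-minute load interval; "bits?" also tolerates "bit/sec".
RATE_RE = re.compile(r"5 minute (input|output) rate (\d+) bits?/sec, (\d+) packets/sec")


def parse_rates(show_interface_text):
    """Return {'input': bps, 'output': bps} found in one show interface sample."""
    return {direction: int(bps)
            for direction, bps, _pps in RATE_RE.findall(show_interface_text)}


def average_rate(samples, direction):
    """Average one direction (input or output) across several polled samples."""
    values = [s[direction] for s in samples if direction in s]
    return sum(values) / len(values) if values else 0.0


sample = """5 minute input rate 131000 bits/sec, 124 packets/sec
5 minute output rate 1660100 bits/sec, 214 packets/sec"""
print(parse_rates(sample))  # {'input': 131000, 'output': 1660100}
```

Feeding a week of such samples to average_rate gives one simple candidate baseline per direction.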

Packet capture tools, sometimes referred to as packet analyzers or packet sniffers, are hardware or programs that intercept and log traffic as it passes over a digital network. This happens as a data stream crosses the network while the packet capture tool captures each packet and decodes the packet’s raw data elements representing the various fields that can be interpreted to understand its content.

The requirements for capturing packets are having access to the data, the ability to capture and store the data, tools to interpret the data, and capabilities to use the data. This means the tool must be able to access the data from a tap, have enough storage to collect the data, read the results, and have a method to use those results for some goal. Failing at any of these will most likely result in an unsuccessful packet capture session. One of the most popular packet capture applications used by industry experts is Wireshark, as shown in Figure 8-2 and covered in Chapter 3, “Fundamentals of Intrusion Analysis.” Wireshark can break down packets using various filters, thus aiding an analyst’s investigation of a captured session.
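To show what reading raw capture data looks like at the lowest level, the following Python sketch parses a classic libpcap-format file (the .pcap format Wireshark can save to, not the newer .pcapng) using only the standard library and computes packet count, bytes, and average throughput. It assumes records appear in timestamp order and reads only the per-record headers; an analyzer such as Wireshark goes on to decode every protocol layer inside each packet.

```python
import struct

PCAP_MAGIC_US = 0xA1B2C3D4  # microsecond-resolution timestamps
PCAP_MAGIC_NS = 0xA1B23C4D  # nanosecond-resolution timestamps


def pcap_throughput(data: bytes):
    """Return (packets, bytes, duration_s, avg_bps) for a classic pcap file image."""
    if len(data) < 24:
        raise ValueError("too short for a pcap global header")
    # The magic number reveals the byte order the file was written in.
    for endian in ("<", ">"):
        magic = struct.unpack(endian + "I", data[:4])[0]
        if magic in (PCAP_MAGIC_US, PCAP_MAGIC_NS):
            break
    else:
        raise ValueError("not a classic pcap file")
    ts_divisor = 1e9 if magic == PCAP_MAGIC_NS else 1e6

    offset, packets, total_bytes = 24, 0, 0  # skip the 24-byte global header
    first_ts = last_ts = None
    while offset + 16 <= len(data):
        ts_sec, ts_frac, incl_len, orig_len = struct.unpack(
            endian + "IIII", data[offset:offset + 16])
        ts = ts_sec + ts_frac / ts_divisor
        if first_ts is None:
            first_ts = ts
        last_ts = ts
        packets += 1
        total_bytes += orig_len  # length on the wire, even if the capture was truncated
        offset += 16 + incl_len  # skip record header plus captured bytes
    duration = last_ts - first_ts if packets > 1 else 0.0
    avg_bps = total_bytes * 8 / duration if duration > 0 else 0.0
    return packets, total_bytes, duration, avg_bps


def fake_record(sec):
    """Hypothetical test record: 1000 bytes on the wire, only 4 bytes captured."""
    return struct.pack("<IIII", sec, 0, 4, 1000) + b"\x00" * 4


header = struct.pack("<IHHiIII", PCAP_MAGIC_US, 2, 4, 0, 0, 65535, 1)
print(pcap_throughput(header + fake_record(100) + fake_record(101)))
# (2, 2000, 1.0, 16000.0)
```

In practice you would pass the contents of a saved capture, for example open("capture.pcap", "rb").read(), where the filename is a placeholder.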

Capturing NetFlow is a different process than collecting network packets. NetFlow must be supported on the system to generate NetFlow data, and that data must be exported to a NetFlow collector that can translate NetFlow into usable data. Exceptions to this requirement are tools such as the Cisco StealthWatch Sensor. These tools offer the ability to convert raw data into NetFlow in a network-tap-like deployment. The typical sources of NetFlow are routers, switches, wireless access points, and virtual networking solutions that offer the ability to produce NetFlow upon proper configuration, meaning turning those devices into NetFlow producers rather than purchasing more equipment to create NetFlow. Note that some devices may require software upgrades or flat out do not support the ability to create NetFlow.

Enabling NetFlow typically requires two steps. The first step is to turn on NetFlow within the network device. An example of enabling NetFlow on a standard Cisco IOS router can be seen in the following example, which shows enabling NetFlow on the Ethernet 1/0 interface:

Router(config)# ip cef

Router(config)# interface Ethernet 1/0

Router(config-if)# ip flow ingress

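The second step to enabling NetFlow is determining where the collected NetFlow should be sent. The next set of configurations is an example of exporting NetFlow version 9 from a Cisco IOS router to a NetFlow collector located at the IP address 10.1.1.40. The ip flow-export destination command also expects the collector’s UDP port; 2055 is used here only as an illustrative value and must match the port your collector listens on:

Router(config)# ip flow-export version 9

Router(config)# ip flow-export destination 10.1.1.40 2055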

One last important NetFlow concept is that not all flow technologies provide the same level of detail. An example is comparing sFlow to NetFlow: sFlow (“sampled flow”) works from a sample of the traffic, whereas NetFlow typically records the flows directly. The details available from sFlow and NetFlow can be dramatically different. Take location as an example: sFlow could inform you that a system is somewhere on the network, whereas NetFlow could provide the exact location of the system. The recommended form of NetFlow at the time of this writing is NetFlow version 9, as covered in Chapter 4.
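The loss of detail with sampling is easy to demonstrate. This Python sketch applies 1-in-N sampling to a hypothetical traffic mix and scales the sampled bytes back up by N, which is how totals are estimated from sampled data. For reproducibility it keeps every Nth packet; sFlow agents actually sample randomly, so treat this as a simplified model rather than sFlow’s algorithm.

```python
from collections import Counter


def sample_every_nth(packets, n):
    """Deterministic 1-in-n sampling: keep packet n, 2n, 3n, ..."""
    return [pkt for i, pkt in enumerate(packets, start=1) if i % n == 0]


def estimate_bytes_per_flow(sampled, n):
    """Scale each sampled packet's size by n to estimate per-flow byte totals."""
    totals = Counter()
    for flow_id, size in sampled:
        totals[flow_id] += size * n
    return dict(totals)


# Hypothetical mix: a large transfer plus a tiny three-packet DNS exchange.
packets = [("bulk-transfer", 1500)] * 10_000 + [("dns-lookup", 80)] * 3
sampled = sample_every_nth(packets, 1000)
print(len(sampled))                            # 10
print(estimate_bytes_per_flow(sampled, 1000))  # {'bulk-transfer': 15000000}
```

The large flow is estimated exactly, but the short DNS exchange never appears in the sampled data at all, which is the kind of detail an unsampled flow record would retain.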

The last throughput topic to consider is how to make improvements to your network baseline as well as accommodate peaks and valleys. One tactic is implementing quality of service (QoS) tools designed to define different priority levels for applications, users, and data flows, with the goal of guaranteeing a certain level of performance to that data. For throughput, this could mean guaranteeing a specific bit rate, latency, jitter, and packet loss to protect higher-priority traffic types from elements that could impact them. An example would be giving high priority to video traffic, since delays in video would be noticed quickly, whereas delays in delivering email would not. QoS is also important for defending services against attacks such as denial-of-service and distributed denial-of-service attacks. Best practice is defining the data types on your network and configuring QoS properties for those deemed highly important, which are commonly voice traffic, video traffic, and data from critical systems.
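The core idea of prioritization can be sketched as a strict-priority scheduler: voice is always sent before video, video before critical data, and everything else last. The class names and priority map in this Python sketch are assumptions for illustration. Strict priority alone can starve lower classes, which is why production QoS designs, such as Cisco’s low latency queuing, pair a policed priority queue with bandwidth guarantees for the other classes.

```python
import heapq
from itertools import count

# Assumed priority map: lower number is transmitted first.
PRIORITY = {"voice": 0, "video": 1, "critical": 2, "default": 3}


class StrictPriorityScheduler:
    """Always transmits the highest-priority queued packet next."""

    def __init__(self):
        self._heap = []
        self._arrival = count()  # tie-breaker preserves FIFO order within a class

    def enqueue(self, traffic_class, packet):
        priority = PRIORITY.get(traffic_class, PRIORITY["default"])
        heapq.heappush(self._heap, (priority, next(self._arrival), packet))

    def dequeue(self):
        """Return the next packet to transmit, or None if all queues are empty."""
        if not self._heap:
            return None
        return heapq.heappop(self._heap)[2]


scheduler = StrictPriorityScheduler()
scheduler.enqueue("default", "email-1")
scheduler.enqueue("video", "video-frame-1")
scheduler.enqueue("voice", "voice-sample-1")
scheduler.enqueue("default", "email-2")
print([scheduler.dequeue() for _ in range(4)])
# ['voice-sample-1', 'video-frame-1', 'email-1', 'email-2']
```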

The list that follows highlights the key throughput concepts:

A network baseline represents normal network patterns.

Establishing a network baseline involves identifying devices that will collect throughput, picking the type of data available to be collected, and determining the time to collect data.

The two common tactics for collecting data are capturing packets and harvesting NetFlow.

Peaks and valleys are indications of a spike and a reduced amount of throughput from the network baseline, respectively. Both could represent a network problem.

Network throughput is typically measured in bandwidth, and delays are identified as latency.

Packet capture technologies are either inline to the network traffic being captured or positioned on a SPAN port.

Enabling NetFlow on supported devices involves enabling NetFlow and pointing the collected NetFlow to a NetFlow analyzer tool.

QoS can be used to prioritize traffic to guarantee performance of specific traffic types such as voice and video.

Used Ports

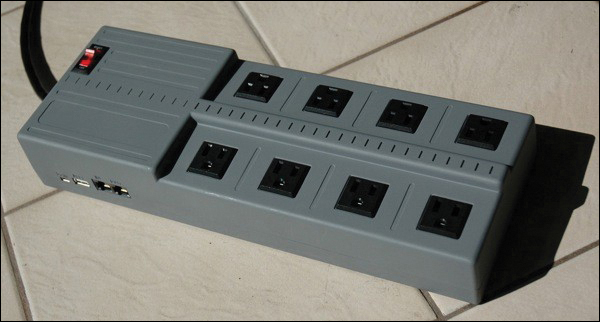

Controlling access to the network continues to be one of the most challenging yet important tasks for a network administrator. Networks will gradually increase in size, and the types of devices accessing the network will grow in diversity. Cyber attacks are also increasing in sophistication and speed, thus decreasing the time required to achieve a malicious goal such as stealing data or disrupting services. For these and many other reasons, access control must be a top priority for protecting your network. An example of a scary device you would not want to find on your network is the Pwnie Express attack arsenal disguised as a generic power plug (see Figure 8-3).

Devices on the network tend to bypass many forms of traditional security solutions, such as the network firewalls and intrusion prevention systems (IPSs) typically found at the gateway of the network. This gives physically connected devices some degree of trust, depending on the access control and resource-provisioning policies. Access control strategies should include not only identifying and controlling what can access the network but also some form of posture evaluation to properly provision access rights. Posture evaluation can include the topics in this chapter as well as applications running on the device, such as antivirus vendor and version. One example of why this is important would be preventing a host from accessing sensitive resources because it presents unacceptable risks, such as lacking antivirus. Another example would be an unapproved device, such as a mobile phone, placed on the same network as the data center that hosts sensitive data. Posture concepts, meaning remediating devices that are out of policy, will not be covered in this chapter; however, profiling device types, which is typically part of a posture strategy, will be covered later.

Controlling network access starts with securing the network port. Similar concepts can apply to wireless and virtual private networks (VPNs); however, even those technologies eventually end up on a network port once the traffic is authenticated and permitted through a connection medium. The most basic form of controlling network access is the use of port security. Port security features provide a method to limit what devices will be permitted to access and send traffic on individual switch ports within a switched network.

Port security can be very useful, yet it is also one of the most challenging practices to enforce because of misconfiguration and the manual effort required to maintain it. Many administrators who manually manage port security across large and dynamic networks find they spend too much time addressing ports that have become disabled for various reasons and must be manually reset by an authorized administrator. This makes the deployment methodology critical to the success of the access control practice. Enabling basic port security on a Cisco switch interface can be accomplished using the command switchport port-security. However, without other parameters being configured, this will only permit one MAC address, which is dynamically learned. Any other MAC address found accessing that network port would cause an “err-disabled” state, meaning an administrator must reset the port before it can be used again. Other configurations and port security strategies can be enforced, such as providing a static list of devices that can access a given port or dynamically learning about devices as they connect to the network using a sticky MAC configuration. However, maintaining a healthy state for multiple ports tends to be a challenge for most administrators using any of these strategies.
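The default behavior described above can be modeled in a few lines of Python, which also makes the operational pain visible: one unexpected MAC address disables the port until someone intervenes. This is a toy model of the behavior the text describes (one dynamically learned address, shutdown on violation, optional sticky learning), not switch code; the class and method names are hypothetical.

```python
class PortSecurityModel:
    """Toy model of one switch port with port security enabled."""

    def __init__(self, max_macs=1, sticky=False, static_macs=()):
        self.max_macs = max_macs          # default: a single secure address
        self.sticky = sticky              # sticky addresses survive a port reset
        self.static = {m.lower() for m in static_macs}
        self.learned = set()
        self.err_disabled = False

    def frame_from(self, mac):
        """Process a frame's source MAC; return True if the frame is forwarded."""
        if self.err_disabled:
            return False
        mac = mac.lower()
        known = self.static | self.learned
        if mac in known:
            return True
        if len(known) < self.max_macs:
            self.learned.add(mac)         # dynamically learned secure address
            return True
        self.err_disabled = True          # violation: shut the port down
        return False

    def admin_reset(self):
        """Model an administrator bouncing the port (shutdown / no shutdown)."""
        self.err_disabled = False
        if not self.sticky:
            self.learned.clear()          # dynamic addresses are forgotten on reset


port = PortSecurityModel()
print(port.frame_from("00:11:22:33:44:55"))  # True: first address is learned
print(port.frame_from("66:77:88:99:AA:BB"))  # False: violation
print(port.err_disabled)                     # True until an admin resets it
```

Note that the model, like port security itself, trusts whatever source MAC a frame carries, so a device spoofing the learned address would pass.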

NOTE

An unauthorized device could spoof an authorized MAC address and bypass a port security configuration. Also, it is important to understand specific access levels granted when port security is used. These access levels are preconfigured or manually changed by the network administrator, meaning a device accessing VLAN10 must be manually predefined for a specific port by an administrator, making deploying a dynamic network policy across multiple ports using port security extremely difficult to maintain.

The challenges involved with port security led to a more advanced method that achieves a similar goal by using automation to enforce port security. In industry terms, this is known as network access control (NAC). NAC provides a way for a network port to automatically determine who and what is attempting to access it and to automatically provision specific network access based on a set of predefined policies. NAC can be enforced using various technologies, such as SNMP; however, 802.1X is one of the leading industry approaches at the time of this writing.

Regardless of the NAC technology being used, the goal of NAC is to determine the type of device accessing the network using certain profiling approaches as well as to determine who is using the device. With 802.1X, users are identified using credentials, such as certificates, that are validated by a back-end authentication system. Policies can be defined for a user and device type, meaning a specific employee using a specific device could be granted specific access regardless of where he or she plugs in to the network. An example would be granting employee Raylin Muniz access to VLAN10 if she is using her corporate-issued laptop, regardless of the port she plugs in to. The process would work by having Raylin plug in to a port that is NAC enabled but does not yet grant any network access. The NAC solution would authenticate Raylin and authorize her specific access if she passes whatever checks are in place to ensure she meets the NAC access policies. If Raylin unplugs her system and another system is plugged in to the same port, the NAC solution could evaluate that system and provision the proper network access. An example would be a guest plugging in to a NAC-enabled port and being provisioned limited network privileges, such as Internet access only.
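The policy logic in this example reduces to a lookup keyed on who the user is and what the device was profiled as, with the switch port deliberately absent from the key. The Python sketch below is hypothetical: the policy table, the corporate-laptop profile name, and the access labels are illustrative, and a real NAC system evaluates far richer conditions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Endpoint:
    user: Optional[str]   # identity from 802.1X authentication, or None
    device_type: str      # result of device profiling
    authenticated: bool


# Hypothetical policy table: (user, profiled device type) -> access granted.
# The switch port is not part of the key, so access follows the user and device.
POLICIES = {
    ("raylin.muniz", "corporate-laptop"): "VLAN10",
}
GUEST_ACCESS = "GUEST-INTERNET-ONLY"
DENY = "DENY"


def authorize(endpoint: Endpoint) -> str:
    """Decide the access to provision for whatever just plugged in."""
    if not endpoint.authenticated:
        return GUEST_ACCESS  # unknown users get limited, Internet-only access
    return POLICIES.get((endpoint.user, endpoint.device_type), DENY)


print(authorize(Endpoint("raylin.muniz", "corporate-laptop", True)))  # VLAN10
print(authorize(Endpoint(None, "unknown", False)))                    # GUEST-INTERNET-ONLY
```

Denying an authenticated user on an unrecognized device type is one possible design choice; an organization might instead map that case to a restricted VLAN.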

The first step in deploying an access control strategy, regardless of whether port security or automated NAC is used, is capturing the current state of the network. This means identifying all devices that are currently connected to a network port. Capturing what is currently on the network is also important for compliance audits as well as network capacity validation. There are many approaches for capturing the current state of the network. The most basic method for collecting port information is identifying which ports are being used. For Cisco IOS devices, the show interfaces status command displays a summary of all ports’ connection status, similar to the output shown in Example 8-1.

Example 8-1 The show interfaces status Command

Switch# show interfaces status

Port      Name   Status       Vlan   Duplex   Speed   Type
Gi1/1            notconnect   1      auto     auto    No Gbic
Gi1/2            notconnect   1      auto     auto    No Gbic
Gi5/1            notconnect   1      auto     auto    10/100/1000-TX
Gi5/2            notconnect   1      auto     auto    10/100/1000-TX
Gi5/3            notconnect   1      auto     auto    10/100/1000-TX
Gi5/4            notconnect   1      auto     auto    10/100/1000-TX
Fa6/1            connected    1      a-full   a-100   10/100BaseTX
Fa6/2            connected    2      a-full   a-100   10/100BaseTX
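To turn output like Example 8-1 into an inventory of used ports, a script can collect the rows whose Status column reads connected. The following Python sketch assumes the text has already been captured from the switch and that the Name column is blank, as in the example; real output that includes port descriptions would need column-position parsing instead. The function name used_ports is hypothetical.

```python
# Sample text in the format of Example 8-1 (Name column left blank).
SAMPLE = """\
Port      Name   Status       Vlan   Duplex   Speed   Type
Gi1/1            notconnect   1      auto     auto    No Gbic
Gi5/1            notconnect   1      auto     auto    10/100/1000-TX
Fa6/1            connected    1      a-full   a-100   10/100BaseTX
Fa6/2            connected    2      a-full   a-100   10/100BaseTX
"""


def used_ports(status_output: str) -> list[tuple[str, str]]:
    """Return (port, vlan) pairs for ports whose status is 'connected'.

    Assumes the Name column is empty, so the first three whitespace-separated
    fields on each row are the port, the status, and the VLAN.
    """
    used = []
    for line in status_output.splitlines()[1:]:  # skip the header row
        fields = line.split()
        if len(fields) >= 3 and fields[1] == "connected":
            used.append((fields[0], fields[2]))
    return used


print(used_ports(SAMPLE))
```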

Another option is to use the show interface command, which provides a large amount of information, including whether the port is being used. To focus on whether the port is being used, you can add the pipe (|) filter after show interface to narrow the output down to the data of interest. An example would be the command show interface | i (FastEthernet|0 packets input). This command assumes the links of interest are FastEthernet interfaces. The i is short for include, which displays only the lines that match the regular expression that follows; the pipe character inside the parentheses separates alternative patterns, so a line is shown if it contains either FastEthernet or 0 packets input. The output of this command might look like that shown in Example 8-2, which is designed to specifically identify used ports.

Example 8-2 Output of the show interface | i (FastEthernet|0 packets input) Command

FastEthernet1/0/31 is up, line protocol is up (connected)
     95445640 packets input, 18990165053 bytes, 0 no buffer
FastEthernet1/0/32 is up, line protocol is up (connected)
FastEthernet1/0/33 is up, line protocol is up (connected)
FastEthernet1/0/34 is down, line protocol is down (notconnect)
     0 packets input, 0 bytes, 0 no buffer
FastEthernet1/0/35 is down, line protocol is down (notconnect)
FastEthernet1/0/36 is up, line protocol is up (connected)
FastEthernet1/0/37 is down, line protocol is down (notconnect)
     0 packets input, 0 bytes, 0 no buffer
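The include filter keeps any line in which the regular expression matches anywhere, which explains why the counter line for FastEthernet1/0/31 appears: "95445640 packets input" ends in "0 packets input". The Python sketch below mimics that line-by-line, unanchored matching on a short, hypothetical excerpt of show interface output; it illustrates the filtering idea and is not how IOS implements it internally.

```python
import re

# Abbreviated, hypothetical excerpt of unfiltered "show interface" output.
OUTPUT = """\
FastEthernet1/0/31 is up, line protocol is up (connected)
  Hardware is Fast Ethernet, address is 0011.2233.4455
     95445640 packets input, 18990165053 bytes, 0 no buffer
FastEthernet1/0/34 is down, line protocol is down (notconnect)
  Hardware is Fast Ethernet, address is 0011.2233.4456
     0 packets input, 0 bytes, 0 no buffer
"""


def include(text: str, pattern: str) -> list[str]:
    """Keep only the lines in which `pattern` matches anywhere (unanchored search)."""
    regex = re.compile(pattern)
    return [line for line in text.splitlines() if regex.search(line)]


for line in include(OUTPUT, r"(FastEthernet|0 packets input)"):
    print(line)
```

Note that the "Hardware is Fast Ethernet" lines are dropped because "Fast Ethernet" contains a space and therefore does not match FastEthernet.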

Another useful filter shows when each port last received or sent traffic, using the command show interface | i proto|Last in. The output might look like Example 8-3.

Example 8-3 The show interface | i proto|Last in Command

switch# show int | i proto|Last in
GigabitEthernet1/1 is down, line protocol is down (notconnect)
  Last input 6w6d, output 6w6d, output hang never
GigabitEthernet1/2 is down, line protocol is down (notconnect)
  Last input 21w1d, output 21w1d, output hang never
GigabitEthernet1/3 is up, line protocol is up (connected)
  Last input 00:00:00, output 00:00:24, output hang never
GigabitEthernet1/4 is up, line protocol is up (connected)
  Last input 00:00:58, output 00:00:24, output hang never
GigabitEthernet1/5 is down, line protocol is down (notconnect)
  Last input never, output never, output hang never

These network-based show commands are useful for identifying used ports; however, they do not explain what type of device is attached or who is using the device. Other protocols such as Cisco Discovery Protocol (CDP), Link Layer Discovery Protocol (LLDP), and Dynamic Host Configuration Protocol (DHCP) can be used to learn more about what is connected. CDP is used to learn information about directly connected Cisco equipment, such as the operating system, hardware platform, IP address, device type, and so on. Figure 8-4 shows the output of the show cdp neighbors command.

LLDP is a neighbor discovery protocol that has an advantage over CDP by being vendor neutral. LLDP-enabled devices send advertisements describing their identity and capabilities to their directly connected neighbors, and each device stores what it learns from its neighbors in an internal database. SNMP can then be used to access this information to build an inventory of the devices and applications connected to the network.
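LLDP advertisements are built from type-length-value (TLV) records defined in IEEE 802.1AB: each TLV begins with a 16-bit header whose upper 7 bits give the type and whose lower 9 bits give the length of the value that follows. The Python sketch below walks such a payload and names a few basic TLV types. The neighbor name access-sw1, the MAC address, and the port name are hypothetical, and the sample frame is constructed in the code rather than captured from a real device.

```python
import struct

# A few basic TLV types from IEEE 802.1AB; other types are reported by number.
TLV_NAMES = {0: "End of LLDPDU", 1: "Chassis ID", 2: "Port ID",
             3: "Time To Live", 5: "System Name"}


def parse_lldpdu(payload: bytes) -> list[tuple[str, bytes]]:
    """Split an LLDP payload into (TLV name, raw value) pairs."""
    tlvs = []
    offset = 0
    while offset + 2 <= len(payload):
        (header,) = struct.unpack_from("!H", payload, offset)
        tlv_type, length = header >> 9, header & 0x1FF  # 7-bit type, 9-bit length
        value = payload[offset + 2 : offset + 2 + length]
        tlvs.append((TLV_NAMES.get(tlv_type, f"Type {tlv_type}"), value))
        offset += 2 + length
        if tlv_type == 0:  # End of LLDPDU marks the last TLV
            break
    return tlvs


def make_tlv(tlv_type: int, value: bytes) -> bytes:
    """Build one TLV; used here only to create sample input."""
    return struct.pack("!H", (tlv_type << 9) | len(value)) + value


# Hypothetical advertisement from a neighbor switch.
frame = (
    make_tlv(1, b"\x04" + bytes.fromhex("001122334455"))  # chassis ID subtype 4: MAC address
    + make_tlv(2, b"\x05Gi1/0/1")                          # port ID subtype 5: interface name
    + make_tlv(3, struct.pack("!H", 120))                  # TTL in seconds
    + make_tlv(5, b"access-sw1")
    + make_tlv(0, b"")
)

for name, value in parse_lldpdu(frame):
    print(f"{name}: {value!r}")
```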

Other protocols such as DHCP and domain browsing are also used to identify routers, subnets, potential clients, and other connected devices. Typically, discovery tools harvest this type of information based on what is available to determine details of the device connected. Cisco Identity Services Engine is an example of a technology that uses various protocol probes to discover device types, as shown in Figure 8-5.

Many other discovery solutions on the market, such as Ipswitch’s WhatsUp Gold, SolarWinds, and OpManager, can leverage various protocols to determine the types of devices plugged in to a port. Also, port-scanning applications such as Nmap can be used to identify the devices connected to a network, as covered later in this chapter.

Once you have an idea of what devices are on the network, the next step is enforcing a basic policy. Typically, a basic policy’s goal is to give corporate devices and/or employees full network access while limiting guest users and denying other unauthorized devices. A basic policy does not include complicated configurations (such as multiple user policy types) or consider posture. The purpose is to introduce NAC concepts to the network slowly, both to avoid disrupting business processes and to let administrators become familiar with using NAC technology. Enforcing multiple policies during an initial NAC deployment is very rare and not recommended, because of the risk of impacting the business and creating a negative outlook on the overall security mission.

After a basic policy has been successfully deployed for a period of time, the remaining step in provisioning NAC is tuning the policy to be more granular in identifying device types, user groups, and what is provisioned. For example, a NAC policy could be developed for a specific device type such as employee mobile devices, which may have fewer access rights than corporate-issued devices. Another example would be segmenting critical devices or devices requiring unique services, such as Voice over IP phones. This step is typically deployed and tested slowly as the environment adopts the NAC technology.
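The progression from a basic policy to a tuned one can be pictured as an ordered rule list evaluated top to bottom, with anything unmatched denied. The Python sketch below is a hypothetical model, not any NAC product’s policy syntax: the user groups, device types, and access results are illustrative labels. The first and last rules form the basic policy; the mobile-device rule represents a later tuning step.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Endpoint:
    user_group: str   # hypothetical labels, e.g., "employee" or "guest"
    device_type: str  # hypothetical labels, e.g., "corporate-laptop" or "mobile"


# Ordered rules, first match wins; None means "any device type".
POLICY: list[tuple[str, Optional[str], str]] = [
    ("employee", "corporate-laptop", "VLAN10: full access"),   # basic policy
    ("employee", "mobile", "VLAN20: limited access"),          # added during tuning
    ("guest", None, "Internet only"),                          # basic policy
]


def authorize(endpoint: Endpoint) -> str:
    """Return the access provisioned for an endpoint under POLICY."""
    for group, device, access in POLICY:
        if group == endpoint.user_group and device in (None, endpoint.device_type):
            return access
    return "deny"  # unknown users or devices receive no network access


print(authorize(Endpoint("guest", "personal-laptop")))
```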

The SECOPS exam will not test you on configuring or deploying access control technology; however, it is important that you understand these concepts before considering deploying this technology into your network.

The list that follows highlights the key used port concepts:

Port security is a basic form of controlling what devices can plug in to a network port.

Basic port security uses MAC addresses, which are labor intensive to manage and can be bypassed using MAC spoofing.

NAC technology automates port security to simplify its management and adds security elements such as profiling and host posture checks.

The best practice for enabling a NAC solution is to discover what is on the network, establish a basic NAC policy, and then later tune that policy with more granular checks and policies.

Session Duration

Another important network topic is monitoring how long devices are connected to the network. Knowing this helps you learn how long users utilize network resources during different periods of the workday, identify when a critical system goes offline, and decide how to enforce usage policies, such as limiting network access to approved times. An example of limiting access could be restricting guest access to work hours. This section focuses on the session duration of devices connecting to a network rather than on the time users are logged in to a host system.

Session duration in network access terms is the total time between a user or device connecting to a network and later disconnecting from that network. The average session duration is the total duration of all sessions over a given period divided by the number of sessions in that period. Averaging a host’s session duration over time provides a baseline of how that system uses network resources, which makes it a very valuable tool for spotting anomalies that could represent a technical issue or a security breach. An example is noticing that, for the first time in eight months, a system starts connecting to other systems it has never connected to before. Such a change deserves investigation because malicious software is typically designed to spread through the network, and attackers tend to seek out other systems after compromising a network. Authorized yet “weird” behavior, or behavior outside of normal sessions, can also indicate an insider threat. The same approach can reveal whether any critical systems have left the network, indicating a problem such as internal network complications or power loss.
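The averaging and baselining described above can be sketched in a few lines of Python. The session lengths are hypothetical, and flagging a session that falls more than three standard deviations from the baseline mean is an assumed threshold chosen for illustration, not a rule from this chapter; real monitoring tools use a variety of baselining methods.

```python
from statistics import mean, stdev


def average_session(durations_min: list[float]) -> float:
    """Average session duration: total duration of all sessions / number of sessions."""
    return sum(durations_min) / len(durations_min)


def is_anomalous(new_duration: float, baseline: list[float], k: float = 3.0) -> bool:
    """Flag a session more than k standard deviations from the baseline mean (k is an assumption)."""
    mu, sigma = mean(baseline), stdev(baseline)
    return abs(new_duration - mu) > k * sigma


# Hypothetical workday session lengths, in minutes, for one host over two weeks.
baseline = [480, 470, 495, 460, 485, 490, 475, 480, 470, 488]

print(average_session(baseline))
print(is_anomalous(1440, baseline))  # a session lasting a full day
print(is_anomalous(482, baseline))   # a typical workday session
```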

Identifying sessions starts with knowing what devices are connected to the network. The “Used Ports” section of this chapter showed various methods for identifying used ports. Session duration is a little different in that you need to use SNMP or a port-monitoring solution to gather exact utilization data. Cisco IOS can show how long an IP address has been mapped to a MAC address using the show ip arp command, as shown in Figure 8-6. The Age column of this output gives a rough indication of how long the device has been seen on the network. As you can see in this example, two IP addresses have been seen for less than 20 minutes.
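A short script can pick out recently seen hosts from captured show ip arp output. The Python sketch below uses a hypothetical sample in the general layout of that command (Protocol, Address, Age in minutes, Hardware Addr, Type, Interface) and skips entries whose age is shown as a dash, which IOS uses for the router’s own interface addresses. Because an ARP entry’s age only approximates how long a host has been present, treat the result as a hint rather than an exact session length.

```python
# Hypothetical output in the layout of the IOS "show ip arp" command.
SAMPLE_ARP = """\
Protocol  Address          Age (min)  Hardware Addr   Type   Interface
Internet  10.1.1.1                -   0011.2233.4455  ARPA   Vlan1
Internet  10.1.1.20              12   0011.2233.4466  ARPA   Vlan1
Internet  10.1.1.35             185   0011.2233.4477  ARPA   Vlan1
Internet  10.1.1.42               7   0011.2233.4488  ARPA   Vlan1
"""


def recently_seen(arp_output: str, max_age_min: int = 20) -> list[tuple[str, str, int]]:
    """Return (IP, MAC, age) for ARP entries younger than max_age_min minutes."""
    recent = []
    for line in arp_output.splitlines():
        fields = line.split()
        # Skip the header row and the router's own addresses (age shown as "-").
        if len(fields) < 4 or fields[0] != "Internet" or fields[2] == "-":
            continue
        age = int(fields[2])
        if age < max_age_min:
            recent.append((fields[1], fields[3], age))
    return recent


print(recently_seen(SAMPLE_ARP))
```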

Identifying the session duration for the entire network using the command line is a challenging task. The industry typically uses tools that pull SNMP data and port logs to calculate how long a device has been connected. An example of a network-monitoring application with this capability is Cisco Prime LAN Manager. Other technologies, such as NetFlow and packet capture, can also be used to monitor sessions, as long as monitoring starts before the host connects to the network. The SECOPS exam won’t ask you about the available tools on the market for monitoring sessions because this is out of scope, and there are far too many to mention.
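Whatever the data source, a monitoring tool ultimately pairs each connect event with the matching disconnect event. The Python sketch below assumes link up/down events have already been extracted (for example, from syslog messages or SNMP traps) into hypothetical (timestamp, port, state) tuples. It also shows why monitoring must start before the host connects: a disconnect with no earlier connect cannot be turned into a session of known length.

```python
from datetime import datetime, timedelta


def sessions_from_events(events):
    """Pair link 'up' and 'down' events per port into completed sessions.

    events: (timestamp, port, state) tuples in chronological order.
    Returns (list of (port, start, duration), dict of ports still up -> start).
    """
    open_since = {}  # port -> time the current session started
    completed = []
    for ts, port, state in events:
        if state == "up":
            open_since.setdefault(port, ts)  # ignore duplicate 'up' events
        elif state == "down" and port in open_since:
            start = open_since.pop(port)
            completed.append((port, start, ts - start))
        # A 'down' with no earlier 'up' means monitoring began mid-session,
        # so the true session length is unknown and the event is skipped.
    return completed, open_since


t0 = datetime(2024, 1, 8, 8, 0)  # hypothetical start of monitoring
events = [
    (t0, "Gi1/0/5", "up"),
    (t0 + timedelta(minutes=30), "Gi1/0/7", "up"),
    (t0 + timedelta(hours=8, minutes=15), "Gi1/0/5", "down"),
    (t0 + timedelta(hours=9), "Gi1/0/9", "down"),  # connected before monitoring began
]

done, still_up = sessions_from_events(events)
for port, start, duration in done:
    print(port, start, duration)
print("still connected:", list(still_up))
```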

The list that follows highlights the key session duration concepts:

Session duration in network access terms is the total time between a user or device connecting to a network and later disconnecting from that network.

Identifying sessions starts with knowing what devices are connected to the network.

Many tools on the market leverage SNMP, NetFlow, packet captures, and so on to monitor the entire period during which a host is seen on the network.

Critical Asset Address Space

The last topic for the network section of this chapter involves controlling the asset address space. This is important to ensure that critical assets are provisioned network resources, to keep other devices from interfering with devices deemed of higher importance, and possibly to control the use of address space for security or cost purposes.

Address space can be either Internet Protocol version 4 (IPv4) or version 6 (IPv6). IPv4 uses 32-bit addresses divided into four 8-bit bytes (octets). An IPv4 address is written in dotted-decimal notation, as shown in Figure 8-7. Under classful addressing, the address is divided into a network part and a host part, and the value of the highest-order octet identifies the class of network. There are five classes of networks: Classes A, B, C, D, and E. Classes A, B, and C use different bit lengths for the network identifier (8, 16, and 24 bits, respectively), so each class leaves a different number of bits, and therefore a different capacity, for host addresses. Certain ranges of IP addresses defined by the Internet Engineering Task Force (IETF) and the Internet Assigned Numbers Authority (IANA) are restricted from general use and reserved for special purposes. Network address translation (NAT) was developed as a method to remap one IP address space into another by modifying network address information; however, NAT does not solve the underlying problem of growing demand for new IP addresses. Details about creating IPv4 networks are out of scope for this section of the book and for the SECOPS exam.
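The classful rules above can be expressed directly in code. The Python sketch below uses the standard library ipaddress module to read the first octet, map it to a class, and compute how many host addresses each of Classes A, B, and C provides (two addresses per network are reserved for the network and broadcast addresses). Classful addressing has since been largely replaced by CIDR, so this is a teaching illustration only; the is_private property shown at the end reflects the module’s built-in table of reserved ranges, such as the RFC 1918 private blocks.

```python
import ipaddress

NETWORK_BITS = {"A": 8, "B": 16, "C": 24}  # classful network ID lengths


def ipv4_class(address: str) -> str:
    """Return the classful network class (A-E) from the value of the first octet."""
    first_octet = int(ipaddress.IPv4Address(address)) >> 24
    if first_octet < 128:
        return "A"  # leading bit 0
    if first_octet < 192:
        return "B"  # leading bits 10
    if first_octet < 224:
        return "C"  # leading bits 110
    if first_octet < 240:
        return "D"  # leading bits 1110 (multicast)
    return "E"      # leading bits 1111 (reserved)


def usable_hosts(cls: str) -> int:
    """Host addresses per classful network, minus network and broadcast addresses."""
    return 2 ** (32 - NETWORK_BITS[cls]) - 2


for addr in ["10.1.2.3", "172.16.5.4", "192.168.1.10", "224.0.0.5"]:
    ip = ipaddress.IPv4Address(addr)
    print(addr, ipv4_class(addr), "private" if ip.is_private else "not private")
```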

To tackle the depletion of available IPv4 addresses, IPv6 was created as a replacement. It offers a much larger address space that ideally won’t be exhausted for many years. This also removes the need for NAT, which has its own inherent problems. IPv6 uses a 128-bit address format logically divided into a network prefix and a host identifier. The number of bits in the network prefix is represented by a prefix length, while the remaining bits are used for the host identifier. Figure 8-8 shows an example of an IPv6 address using the default 64-bit network prefix length. Adoption of IPv6 has been slow, and many administrators leverage IPv4-to-IPv6 transition techniques, such as running both protocols simultaneously (known as dual stacking) or translating between IPv4 and IPv6. The details for developing IPv6 address ranges are out of scope for this chapter and for the SECOPS exam.
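The split between network prefix and host identifier can be checked with Python’s standard ipaddress module. The sketch below uses an address from the 2001:db8::/32 range, which is reserved for documentation, rather than the address in Figure 8-8, and masks off the low 64 bits to obtain the host identifier for a /64 prefix.

```python
import ipaddress

# Documentation-range address with the default 64-bit prefix length.
iface = ipaddress.IPv6Interface("2001:db8:85a3::8a2e:370:7334/64")

prefix = iface.network                       # network prefix (first 64 bits)
host_id = int(iface.ip) & ((1 << 64) - 1)    # host identifier (last 64 bits)

print(prefix)                  # the /64 network the address belongs to
print(f"{host_id:016x}")       # the 64-bit host identifier in hex
print(prefix.num_addresses)    # 2**64 addresses in a single /64
print(2 ** 128 // 2 ** 32)     # how many times larger the IPv6 space is than IPv4's
```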

Regardless of the version of IP addressing being used, IP address management (IPAM) will be a topic to tackle. Factors that impact address management include the version of IP addresses deployed, DNS security measures, and DHCP support. IPAM can be broken down into three major areas of focus.

IP address inventory: Obtaining and defining the public and private IPv4 and IPv6 address space as well as allocating that address space to locations, subnets, devices, address pools, and users on the network.

Dynamic IP address services management: Managing the parameters associated with each address pool defined within the IP address space management function, as well as how DHCP servers supply relevant IP addresses and parameters to requesting systems. This function also includes managing the capacity of address pools to ensure dynamic IP addresses are available.

IP name services management: Managing Domain Name System (DNS) assignments so devices can access resources by name. This also includes other relevant DNS management tasks.

Each of these areas is critical to the proper operation of an IP-based network. Any device accessing the network, regardless of connection type, needs an IP address and the ability to access internal and/or external resources to be productive. Typically, this starts with an organization obtaining public IPv4 or IPv6 address space from an Internet service provider (ISP). Administrators must spend time planning to accommodate current and future IP address capacity requirements in user-accessible and mission-critical subnets on the network. Once those numbers are calculated, proper address allocation needs to be enforced. This means considering the routing infrastructure and avoiding issues such as duplicate IP address assignments, networks rendered unreachable by route summarization conflicts, and IP space rendered unusable by errors in allocating addresses hierarchically while still preserving address space for other parts of the network.
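Two of the allocation problems mentioned above, duplicate or overlapping assignments and running out of room when carving a parent block hierarchically, are easy to check programmatically. The Python sketch below uses the standard ipaddress module; the 10.20.0.0/16 block and the subnet lists are hypothetical planning inputs.

```python
import ipaddress
from itertools import combinations, islice


def find_overlaps(subnets: list[str]) -> list[tuple[str, str]]:
    """Return every pair of planned subnets whose address ranges overlap."""
    nets = [ipaddress.ip_network(s) for s in subnets]
    return [(str(a), str(b)) for a, b in combinations(nets, 2) if a.overlaps(b)]


def allocate(block: str, new_prefix: int, count: int) -> list[str]:
    """Carve `count` consecutive /new_prefix subnets out of a parent block."""
    parent = ipaddress.ip_network(block)
    subnets = [str(n) for n in islice(parent.subnets(new_prefix=new_prefix), count)]
    if len(subnets) < count:
        raise ValueError(f"{block} cannot hold {count} /{new_prefix} subnets")
    return subnets


print(allocate("10.20.0.0/16", 24, 3))
print(find_overlaps(["10.20.0.0/24", "10.20.1.0/24", "10.20.0.128/25"]))
```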

Address planning can be challenging and is best accomplished by using a centralized IP inventory database, DHCP, and DNS policy. This provides a single, holistic view of the entire address space deployed across the network, regardless of the number of locations and required address pools, DHCP servers, and DNS servers. DHCP automatically provisions hosts with an IP address and other related configuration, such as a subnet mask and default gateway. Centralizing DHCP can improve the accuracy and consistency of address pool allocation as well as IP reallocation, as needed for ongoing address pool capacity management.

The same centralized deployment practice should be enforced for domain name servers (DNS) responsible for resolving IP addresses to domain names, and vice versa. Leveraging a single DNS database simplifies deploying configuration parameters to the appropriate master or slave configuration as well as aggregating dynamic updates to keep all servers up to date. These and other strategies should make up your IP inventory assurance practice for enforcing the accuracy of IP inventory through periodic discovery, exception reporting, and selective database updates. IP assurance must be a continuous process to confirm the integrity of the IP inventory for effective IP planning.

Critical devices need additional measures to ensure other devices don’t impact their availability and functionality. Typically, two approaches are used to ensure critical devices don’t lose their IP address. One is to manually configure the IP information on those devices, and the other is to reserve those IP addresses in DHCP. Manually setting the IP address, sometimes called statically setting or hard coding the IP address, ensures that the critical system does not rely on an external system to provide its IP information. Reserving IP addresses for the MAC address of a critical system in DHCP can accomplish the same thing; however, there is a small risk, because the critical asset relies on the DHCP server to issue the correct IP address, and that server could go down or be compromised. The risk of static IP assignment is that a manually configured system has no awareness of how the IP address it is using affects the rest of the network. If another device has the same IP address, there will be an IP address conflict and thus network issues. Static assignments are also enforced manually, which means this approach carries a risk of address planning errors.

Once IP address sizing and deployment are complete, IP space management becomes the next, ongoing challenge, because various changes can occur that impact the IP address space. New locations may open or existing locations may close. New IT services may be requested, such as Voice over IP (VoIP) requiring a new dedicated IP range. New security requirements, such as network segmentation demands, may be pushed down from leadership. One change that many administrators are facing or will eventually face is converting IPv4 networks to IPv6, as networks slowly adapt to the future of the Internet. The key to maintaining an effective IP address space is staying on top of these and other requests.
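The interplay between DHCP reservations and static assignments can be modeled with a toy address pool. In the Python sketch below, which is a hypothetical model and not how any particular DHCP server is implemented, statically configured addresses are excluded from the pool so they are never handed out (avoiding the conflict described above), a critical server’s MAC address is tied to a reserved address, and pool utilization is reported for capacity monitoring. The subnet, MAC addresses, and class name are illustrative.

```python
import ipaddress


class DhcpPool:
    """Toy DHCP pool: MAC reservations first, then the lowest free address.

    Addresses configured statically on devices are listed in `excluded` so
    the pool never hands them out, which avoids IP address conflicts.
    """

    def __init__(self, subnet: str, excluded=()):
        skip = {ipaddress.ip_address(a) for a in excluded}
        self.free = [ip for ip in ipaddress.ip_network(subnet).hosts() if ip not in skip]
        self.size = len(self.free)  # assignable addresses in the pool
        self.reservations = {}      # MAC -> reserved IP (critical systems)
        self.leases = {}            # MAC -> IP currently leased

    def reserve(self, mac: str, address: str) -> None:
        ip = ipaddress.ip_address(address)
        self.free.remove(ip)  # ValueError if excluded or already in use
        self.reservations[mac] = ip

    def request(self, mac: str) -> str:
        if mac in self.reservations:
            ip = self.reservations[mac]
        elif mac in self.leases:
            ip = self.leases[mac]
        elif self.free:
            ip = self.free.pop(0)
        else:
            raise RuntimeError("address pool exhausted")
        self.leases[mac] = ip
        return str(ip)

    def utilization(self) -> float:
        in_use = set(self.reservations.values()) | set(self.leases.values())
        return len(in_use) / self.size


# 192.168.10.1 is statically configured on the router, so it is excluded.
pool = DhcpPool("192.168.10.0/29", excluded=["192.168.10.1"])
pool.reserve("aa:bb:cc:00:00:01", "192.168.10.6")  # critical server
print(pool.request("aa:bb:cc:00:00:01"), pool.request("aa:bb:cc:00:00:02"))
print(f"pool utilization: {pool.utilization():.0%}")
```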

Here is a summary of IP address inventory management best practices:

Use a centralized database for IP address inventory.

Use a centralized DHCP server configuration and include failover measures.

Document all IP subnet and address space assignments.

Use basic security practices to provide selective address assignment.

Track address space allocations in accordance with the routing topology.

Deploy consistent subnet addressing policies.

Develop network diagrams that include a naming convention for all devices using IP space.

Continuously monitor address and pool utilization as well as adapt to capacity issues.

Plan for IPv6 if using IPv4.

Host Profiling

Profiling hosts on the network is similar to profiling network behavior. The focus for the remaining topics in this chapter will be on viewing devices connected to the network versus the actual network itself. This can be valuable in identifying vulnerable systems, internal threats, what applications are installed on hosts, and so on. We will touch upon how to view details directly from the host; however, the main focus will be on profiling hosts as an outside entity by looking at a host’s network footprint.

Let’s start off by discussing how to view data from a network host and the applications it is using.

Listening Ports

The first thing people look at when attempting to learn about a host system over a network, whether they are system administrators, penetration testers, or malicious attackers, is which ports are “listening.” A listening port is a port held open by a running application in order to accept inbound connections. From a security perspective, a listening port may mean a vulnerable system that could be exploited. A worst-case scenario would be an unauthorized listening port on an exploited system that permits external access to a malicious party. Because most attackers will be outside your network, unauthorized listening ports are typically evidence of an intrusion.

Let’s look at the fundamentals behind ports: Messages associated with application protocols use TCP or UDP. Both of these employ port numbers to identify the specific process to which an Internet or other network message is to be forwarded when it arrives at a server. A port number is a 16-bit integer carried in the TCP or UDP header of each message. Port numbers are passed logically between the client and server transport layers and physically between the transport layer and the IP layer before they are forwarded on. A common example of this client/server model is web client software, such as a browser communicating with a web server listening on port 80. Because the field is 16 bits wide, port values range from 0 to 65535; port 0 is reserved, so usable ports range from 1 to 65535, and well-known server applications are generally assigned values below 1024.
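Both TCP and UDP headers begin with a 16-bit source port followed by a 16-bit destination port, in network (big-endian) byte order. The Python sketch below reads those two fields from raw header bytes using the standard struct module; the header is fabricated for illustration, with a hypothetical client port of 51514 talking to a web server on port 80.

```python
import struct


def ports_from_header(segment: bytes) -> tuple[int, int]:
    """Return (source port, destination port) from the start of a TCP or UDP header.

    Each port is an unsigned 16-bit big-endian field, so its value can only
    range from 0 to 65535.
    """
    src, dst = struct.unpack("!HH", segment[:4])
    return src, dst


# Fabricated header: ports followed by zero padding standing in for the rest.
header = struct.pack("!HH", 51514, 80) + bytes(16)
print(ports_from_header(header))
print(2 ** 16 - 1)  # largest value a 16-bit port field can hold
```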

The following is a list of well-known ports used by applications:

TCP 20 and 21: File Transfer Protocol (FTP)

TCP 22: Secure Shell (SSH)

TCP 23: Telnet

TCP 25: Simple Mail Transfer Protocol (SMTP)

TCP and UDP 53: Domain Name System (DNS)

UDP 69: Trivial File Transfer Protocol (TFTP)

TCP 79: Finger

TCP 80: Hypertext Transfer Protocol (HTTP)

TCP 110: Post Office Protocol v3 (POP3)

TCP 119: Network News Transfer Protocol (NNTP)

UDP 161 and 162: Simple Network Management Protocol (SNMP)

TCP 443: HTTP over Secure Sockets Layer/Transport Layer Security (HTTPS)

NOTE:

These are just industry guidelines, meaning administrators do not have to run the preceding services over these ports. Administrators typically follow these guidelines; however, any of these services can be configured to run over other ports.

There are two basic approaches for identifying listening ports on the network. The first approach is accessing a host and searching for ports in a listening state. This requires at least a minimal level of access to the host and authorization to run commands on it. This could also be done with authorized applications that are capable of showing all applications available on the host. The most common host-based tool for checking Windows and UNIX systems for listening ports is the netstat command. An example of looking for listening ports with netstat is netstat -an, as shown in Figure 8-9. As you can see, two applications are in the “LISTEN” state. Another host command to view similar data is lsof -i.
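Output from netstat -an can be reduced to a list of listening sockets with a short script. The Python sketch below parses hypothetical samples in the typical Linux and Windows layouts; the state is labeled LISTEN on UNIX-like systems and LISTENING on Windows, and UDP sockets have no listening state in this output. Column layouts vary between operating systems and versions, so the sketch simply takes the first field containing a colon as the local address.

```python
# Hypothetical "netstat -an" excerpts; real layouts vary by OS and version.
LINUX_SAMPLE = """\
Proto Recv-Q Send-Q Local Address           Foreign Address         State
tcp        0      0 0.0.0.0:22              0.0.0.0:*               LISTEN
tcp        0      0 127.0.0.1:631           0.0.0.0:*               LISTEN
tcp        0      0 192.168.1.5:22          192.168.1.20:51514      ESTABLISHED
tcp6       0      0 :::80                   :::*                    LISTEN
"""

WINDOWS_SAMPLE = """\
  Proto  Local Address          Foreign Address        State
  TCP    0.0.0.0:135            0.0.0.0:0              LISTENING
  TCP    [::]:445               [::]:0                 LISTENING
"""


def listening_ports(netstat_output: str) -> list[tuple[str, str, int]]:
    """Return (protocol, local address, port) for sockets in a listening state."""
    found = []
    for line in netstat_output.splitlines():
        fields = line.split()
        if not fields or fields[-1] not in ("LISTEN", "LISTENING"):
            continue
        local = next((f for f in fields if ":" in f), None)
        if local is None:
            continue
        host, _, port = local.rpartition(":")  # split on the last colon (handles IPv6)
        found.append((fields[0].lower(), host, int(port)))
    return found


print(listening_ports(LINUX_SAMPLE))
print(listening_ports(WINDOWS_SAMPLE))
```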

A second and more reliable approach to determining which ports are listening on a host is to scan the host as an outside evaluator with a port scanner application. A port scanner probes a host system running TCP/IP to determine which TCP and UDP ports are open and listening. One extremely popular tool for this is nmap, a port scanner that can determine whether ports are listening and provide many other details. Nmap uses its nmap-services database, which lists more than 2,200 well-known services, to report the service most likely running on each open port, and its version detection option (-sV) can probe the port further to fingerprint the application actually running on it.
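The simplest form of port scanning completes a full TCP handshake with each port and treats a successful connection as open, which is the same basic technique as the nmap TCP connect scan (-sT). The Python sketch below implements only that step with the standard socket module; unlike nmap, it does no service fingerprinting, UDP scanning, or OS detection. Only scan systems you are authorized to test.

```python
import socket


def tcp_connect_scan(host: str, ports, timeout: float = 0.5) -> list[int]:
    """Return the ports on `host` that accept a full TCP connection."""
    open_ports = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
            s.settimeout(timeout)
            # connect_ex returns 0 when the handshake succeeds instead of raising.
            if s.connect_ex((host, port)) == 0:
                open_ports.append(port)
    return open_ports
```

For example, tcp_connect_scan("127.0.0.1", range(1, 1025)) checks the first 1,024 TCP ports on the local machine.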

It is important to be aware that port scanners provide a best guess, and the results should be validated. For example, a security solution could reply with the wrong information, or an administrator could spoof information such as the version number of a vulnerable server to make it appear to a port scanner that the server is patched. Newer breach detection technologies, such as advanced honey pots, attempt to attract attackers who have successfully breached the network by leaving vulnerable ports open on systems in the network and then monitoring those systems for any connections. The concept is that attackers will most likely scan and connect to systems that appear vulnerable and will thus be tricked into believing the fake honey pot is a real vulnerable system. Figure 8-10 shows an example of using the nmap -sT -O localhost command (a TCP connect scan with operating system detection) to identify ports that are open or listening for TCP connections.

If attackers are able to identify a server with an available port, they can attempt to connect to that service, determine what software is running on the server, and check whether the identified software has known vulnerabilities that could potentially be exploited, as previously explained. This tactic can be effective against unadvertised servers, because many website administrators fail to adequately protect systems that may be considered “non-production” yet are still on the network. An example would be using a port scanner to identify servers running older software, such as an older version of Internet Information Services (IIS) that has known exploitable vulnerabilities. Penetration testing frameworks such as Metasploit include a library of exploits that can be matched to the results from a port scanner application. Based on the port scan in Figure 8-10, an attacker would be particularly interested in the open TCP ports listed with unknown services. This is how many cyber attacks are delivered: the attacker identifies applications on open ports, matches them to a known vulnerability, and exploits the vulnerability with the goal of delivering something such as malware, a remote access tool, or ransomware.

Another option for viewing “listening” ports on a host system is to use a network device such as a Cisco IOS router. A command similar to netstat on Cisco IOS devices is show control-plane host open-ports. A router’s control plane is responsible for handling traffic destined for the router itself, whereas the data plane is responsible for passing transit traffic. This means the output from the Cisco IOS command is similar to that of a Windows netstat command, as shown in Figure 8-11, which lists a few ports in the LISTEN state.

Best practice for securing listening and open ports is to periodically assess every host using network resources for open ports and running services that are either unintended or unnecessary. The goal is to reduce the risk of exposing vulnerable services and to identify exploited systems or malicious applications. Port scanners are very common and widely available for the Windows and UNIX platforms. Many of these programs are open source projects, such as nmap, and have well-established support communities. A risk evaluation should be applied to identified listening ports, because some services may be exploitable yet pose little practical risk in a given situation. An example would be a server inside a closed network without external access that has a listening port an attacker would never be able to reach.

The following list shows some of the known “bad” ports that should be secured:

1243/tcp: SubSeven server (default for V1.0-2.0)

6346/tcp: Gnutella

6667/tcp: Trinity intruder-to-master and master-to-daemon

6667/tcp: SubSeven server (default for V2.1 Icqfix and beyond)

12345/tcp: NetBus 1.x

12346/tcp: NetBus 1.x

16660/tcp: Stacheldraht intruder-to-master

18753/udp: Shaft master-to-daemon

20034/tcp: NetBus Pro

20432/tcp: Shaft intruder-to-master

20433/udp: Shaft daemon-to-master

27374/tcp: SubSeven server (default for V2.1-Defcon)

27444/udp: Trinoo master-to-daemon

27665/tcp: Trinoo intruder-to-master

31335/udp: Trinoo daemon-to-master

31337/tcp: Back Orifice

33270/tcp: Trinity master-to-daemon

33567/tcp: Backdoor rootshell via inetd (from Lion worm)

33568/tcp: Trojaned version of SSH (from Lion worm)

40421/tcp: Masters Paradise Trojan horse

60008/tcp: Backdoor rootshell via inetd (from Lion worm)

65000/tcp: Stacheldraht master-to-daemon
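During a periodic assessment, the ports found on each host can be compared both against a known-bad list like the one above and against an approved baseline for that host. The Python sketch below does both; it includes only a subset of the list, and the observed ports and baseline are hypothetical. Because any application can use any port, a match is a lead to investigate rather than proof that a Trojan is present.

```python
# Subset of the known "bad" ports listed above: (port, protocol) -> description.
KNOWN_BAD = {
    (1243, "tcp"): "SubSeven server",
    (12345, "tcp"): "NetBus 1.x",
    (27374, "tcp"): "SubSeven server",
    (27444, "udp"): "Trinoo master-to-daemon",
    (31337, "tcp"): "Back Orifice",
}


def assess(listening: set, approved: set) -> dict:
    """Split observed (port, protocol) pairs into known-bad and unapproved findings."""
    findings = {"known_bad": [], "unapproved": []}
    for item in sorted(listening):
        if item in KNOWN_BAD:
            findings["known_bad"].append((item, KNOWN_BAD[item]))
        elif item not in approved:
            findings["unapproved"].append(item)
    return findings


# Hypothetical scan result for one server and its approved baseline.
observed = {(22, "tcp"), (443, "tcp"), (31337, "tcp"), (8080, "tcp")}
baseline = {(22, "tcp"), (443, "tcp")}
print(assess(observed, baseline))
```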

One final best practice we’ll cover for protecting listening and open ports is implementing security solutions such as firewalls. The purpose of a firewall is to control traffic as it enters and leaves a network based on a set of rules. Part of that responsibility is protecting listening ports from unauthorized systems, for example, by preventing external attackers from scanning internal systems or connecting to listening ports. Firewall technology has come a long way, providing capabilities across the entire network protocol stack and the ability to evaluate the types of communication permitted. For example, older firewalls can permit or deny web traffic on ports 80 and 443, whereas current application layer firewalls can also permit or deny specific applications within that traffic, such as blocking YouTube videos embedded in a Facebook page, an option offered by most application layer firewalls. Firewalls are just one of the many tools available to protect listening ports. Best practice is to layer security defenses so that the network is not compromised if one method of protection is breached.
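The port-based filtering of older firewalls can be modeled as an ordered rule list: the first rule that matches the source address and destination port decides the outcome, and traffic that matches nothing is denied. The Python sketch below is a hypothetical model, not any vendor’s configuration language, and it does not attempt the application-level inspection that newer firewalls perform. The rules use documentation address ranges.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    action: str              # "permit" or "deny"
    source: str              # source network in CIDR notation
    dst_port: Optional[int]  # None matches any destination port


# Hypothetical inbound rules protecting a web server.
RULES = [
    Rule("deny", "198.51.100.0/24", None),  # a blocked source network
    Rule("permit", "0.0.0.0/0", 443),       # HTTPS from anywhere
    Rule("permit", "10.0.0.0/8", 22),       # SSH only from internal addresses
]


def evaluate(src_ip: str, dst_port: int, rules=RULES) -> str:
    """Return the action of the first matching rule, or 'deny' if none match."""
    src = ipaddress.ip_address(src_ip)
    for rule in rules:
        if src in ipaddress.ip_network(rule.source) and rule.dst_port in (None, dst_port):
            return rule.action
    return "deny"  # implicit deny protects every listening port not explicitly opened


print(evaluate("203.0.113.7", 443), evaluate("203.0.113.7", 22))
```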

The list that follows highlights the key listening port concepts:

A listening port is a port held open by a running application in order to accept inbound connections.

Port numbers are 16-bit values; usable ports range from 1 to 65535 (port 0 is reserved).

Netstat and nmap are popular tools for identifying listening ports.

Netstat can be run locally on a device, whereas nmap can be used to scan a range of IP addresses for listening ports.

Best practice for securing listening ports is to scan and evaluate any identified listening port and to implement layered security, such as combining a firewall with other defensive capabilities.
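The idea behind remotely scanning for listening ports can be sketched in a few lines of Python. This is a plain TCP connect scan, which assumes that a completed handshake means something is listening on the port. connect_scan() is a hypothetical helper, not part of nmap. It performs none of nmap's SYN scanning, UDP scanning, timing control, or service detection.

```python
import socket


def connect_scan(host, ports, timeout=0.5):
    """Return the sorted list of TCP ports on host that accept a connection.

    A successful connect() means the three-way handshake completed,
    so some application is listening on that port.
    """
    open_ports = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
            s.settimeout(timeout)
            # connect_ex returns 0 on success instead of raising an exception
            if s.connect_ex((host, port)) == 0:
                open_ports.append(port)
    return sorted(open_ports)


if __name__ == "__main__":
    # Check a few well-known ports on the local machine only.
    print(connect_scan("127.0.0.1", [22, 25, 80, 443]))
```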

Logged-in Users/Service Accounts

Identifying who is logged in to a system is important for knowing how the system will be used. Administrators typically have more access to various services than other users because their job requires those privileges. Human Resources staff may need more access rights than other employees to validate whether an employee is violating a policy. Guest users typically require very few access rights because they are considered a security risk by most organizations. In summary, best practice for provisioning access rights is to enforce the concept of least privilege, meaning to provision only the minimum access rights required to perform a job.
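Least privilege is often enforced as deny by default, where a user holds only the permissions explicitly granted to their role. The following minimal Python sketch assumes a hypothetical role table (ROLE_GRANTS) and hypothetical permission names. Real systems keep these grants in a directory service or the operating system, not in application code.

```python
# Hypothetical role grants; any permission not listed is denied by default.
ROLE_GRANTS = {
    "administrator": {"read_logs", "manage_users", "restart_services"},
    "hr": {"read_logs"},
    "guest": set(),
}


def is_allowed(role, permission):
    """Deny by default: allow only permissions explicitly granted to the role."""
    return permission in ROLE_GRANTS.get(role, set())


def unused_grants(role, permissions_used):
    """Return grants a role holds but never exercised (candidates for removal)."""
    return ROLE_GRANTS.get(role, set()) - set(permissions_used)
```

unused_grants() reflects one way to keep privileges minimal over time. Any right that a role never uses is a candidate for revocation.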

People can be logged in to a system in two ways. The first method is to be physically at a keyboard logged in to the system. The other method is to remotely access the system using a protocol such as Remote Desktop Protocol (RDP). Sometimes remote access is authorized and controlled, such as using a Citrix remote desktop solution to provide remote users access to the desktop, whereas other times it’s a malicious user who has planted a remote access tool (RAT) to gain unauthorized access to the host system. Identifying post-breach situations is just one of the many reasons why monitoring remote connections should be a priority for protecting your organization from cyber breaches.

Identifying who is logged in to a system can be accomplished by using a few approaches. For Windows machines, the first method involves using the Remote Desktop Services Manager suite. This approach requires the software to be installed. Once the software is running, an administrator can remotely access the host to verify who is logged in. Figure 8-12 shows an example of using the Remote Desktop Services Manager to remotely view that two users are logged in to a host system.

Another tool you can use to validate who is logged in to a Windows system is the PsLoggedOn application. For this application to work, it has to be downloaded and placed on the local computer that will be checking remote hosts. Once it’s installed, simply open a command prompt and execute the command C:\PsTools\psloggedon.exe \\HOST_TO_CONNECT. Figure 8-13 shows an example of using PsTools to connect to “server-a” and finding that two users are logged in to the host system.

One last method to remotely validate who is logged in to a Windows system is the Windows query command, executed as query user /server:server-a. Figure 8-14 shows an example of using this command to see who is logged in to server-a.

For Linux machines, various commands can show who is logged in to a system, such as w, who, users, whoami, and last followed by a username, as shown in Figure 8-15. Each command shows a slightly different set of information. The w, who, and users commands list current sessions, whoami prints the effective user of the current shell, and last lists the login history for the specified user. The whoami command is also available on Windows systems and displays the same information there.
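When these checks need to be scripted, the output of who can be parsed. The minimal Python sketch below assumes the common GNU coreutils layout: user, terminal, login time, and an optional remote host in parentheses. The format varies between systems, so parse_who() is a hypothetical helper rather than a robust parser. Graphical sessions may also show a local display such as (:0) in the host field.

```python
import re
import subprocess


def parse_who(output):
    """Parse `who` output into (user, terminal, host) tuples.

    host is the text in trailing parentheses, or None when there is none,
    which usually indicates a local console login.
    """
    sessions = []
    for line in output.splitlines():
        fields = line.split()
        if len(fields) < 2:
            continue  # skip blank or malformed lines
        match = re.search(r"\(([^)]*)\)\s*$", line)
        sessions.append((fields[0], fields[1], match.group(1) if match else None))
    return sessions


if __name__ == "__main__":
    try:
        out = subprocess.run(["who"], capture_output=True, text=True).stdout
    except FileNotFoundError:
        out = ""  # no `who` command on this system
    for user, tty, host in parse_who(out):
        print(user, tty, host or "local")
```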

Many administrative tools can be used to remotely access hosts so that the preceding commands can be issued to validate who is logged in to the system. One such tool is virtual network computing (VNC). This method requires three pieces. The first is a VNC server running on the host to be accessed. The second is a VNC viewer client on the system the administrator uses to connect. The final piece is the connection between the two, which uses the Remote Framebuffer (RFB) protocol and is often tunneled over SSH for security. SSH can also be used directly from one system to access another using the ssh remote_host or ssh remote_username@remote_host command if SSH is set up properly. Many other applications, both open source and commercial, can provide remote desktop access to host systems.

It is important to be aware that validating who is logged in to a host can reveal when the host has been compromised. According to the kill chain concept, attackers that breach a network look to establish a foothold by compromising one or more systems. Once they have access to a system, they seek out other systems by pivoting from system to system. In many cases, attackers want to find a system with more access rights so they can escalate their privilege level, meaning gain access to an administrator account, which typically can access critical systems. Security tools that monitor which users are logged in to systems can flag when a system associated with an ordinary employee accesses a system that is typically accessed only by administrator-level users. Such activity may indicate an internal attack through a compromised host. The industry calls this type of security breach detection, meaning technology that looks for post-compromise activity.
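The detection idea described above can be reduced to comparing new logins against a baseline of who normally logs in where. In this minimal Python sketch, the event format (user, server pairs), the function names, and the set of admin-only servers are all hypothetical. Commercial breach detection tools combine many more signals than this single check.

```python
from collections import defaultdict


def build_baseline(history):
    """Map each server to the set of users previously observed logging in to it."""
    baseline = defaultdict(set)
    for user, server in history:
        baseline[server].add(user)
    return baseline


def flag_unusual_logins(baseline, new_events, admin_only_servers):
    """Return (user, server) events where an unfamiliar user reaches an admin-only server."""
    alerts = []
    for user, server in new_events:
        if server in admin_only_servers and user not in baseline.get(server, set()):
            alerts.append((user, server))
    return alerts
```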

The list that follows highlights the key concepts covered in this section:

Employing least privilege means to provision the absolute minimum amount of access rights required to perform a job.

The two methods to log in to a host are locally and remotely.

Common methods for remotely accessing a host are using SSH and using a remote access server application such as VNC.

Running Processes

Now that we have covered identifying listening ports and checking which users are logged in to a host system, the next topic is identifying which processes are running on a host system. A running process is an instance of a computer program being executed. Understanding what is running on hosts has many benefits, such as identifying what is consuming resources, developing more granular security policies, and tuning how resources are distributed through QoS adjustments linked to identified applications. We will briefly look at identifying processes when you have access to the host system; however, the focus of this section is on identifying them from a remote system on the same network.

In Windows, one simple method for viewing the running processes when you have access to the host system is to open the Task Manager using the Ctrl+Shift+Esc keyboard shortcut, as shown in Figure 8-16.

A similar result can be achieved using the Windows command line by opening the command terminal with the cmd command and issuing the tasklist command, as shown in Figure 8-17.

For UNIX systems, the ps -e command displays results similar to those of the Windows tools previously covered. Figure 8-18 shows executing the ps -e command to display running processes on an OS X system.
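The same check can be scripted on Linux or OS X by reading the process list directly. This minimal Python sketch reads the Linux /proc filesystem when it is present. Otherwise it runs ps -e with the -o option to print only each PID and command name. list_processes() is a hypothetical helper. Some hardened systems may hide other users' processes from unprivileged accounts.

```python
import os
import subprocess


def list_processes():
    """Return a {pid: command_name} mapping of running processes."""
    procs = {}
    if os.path.isdir("/proc"):
        # Linux: every numeric directory in /proc is a process ID.
        for entry in os.listdir("/proc"):
            if not entry.isdigit():
                continue
            try:
                with open(f"/proc/{entry}/comm") as f:
                    procs[int(entry)] = f.read().strip()
            except OSError:
                continue  # the process exited or is not readable; skip it
    else:
        # Other UNIX systems (for example, OS X): ask ps for "PID COMMAND" lines
        # with the header suppressed by the trailing "=".
        out = subprocess.run(["ps", "-e", "-o", "pid=", "-o", "comm="],
                             capture_output=True, text=True, check=True).stdout
        for line in out.splitlines():
            pid, _, name = line.strip().partition(" ")
            if pid.isdigit():
                procs[int(pid)] = name.strip()
    return procs
```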

These approaches are very useful when you can log in to the host and have the privilege level to issue such commands. The focus for the SECOPS exam, however, is identifying these processes from an administrator system on the same network rather than administering the host directly. This requires evaluating hosts based on their traffic and available ports. Many ports are associated with well-known services, so seeing a specific port in use suggests that the associated process is running. For example, if port 25 is showing SMTP traffic, the host is expected to have a mail process running.
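Mapping a port to its conventional service is a simple lookup, which is why it is only a hint. The minimal Python sketch below first checks a small, hand-picked table of IANA well-known ports. It then falls back to the local services database through socket.getservbyport(). WELL_KNOWN_TCP and guess_service() are hypothetical names used for illustration.

```python
import socket

# A small, hand-picked table of well-known TCP ports (IANA assignments).
WELL_KNOWN_TCP = {
    22: "ssh",
    25: "smtp",
    53: "domain",
    80: "http",
    443: "https",
    631: "ipp",
    3306: "mysql",
}


def guess_service(port, proto="tcp"):
    """Guess a service from its port number alone.

    This is only a hint: any program can listen on any port.
    """
    if proto == "tcp" and port in WELL_KNOWN_TCP:
        return WELL_KNOWN_TCP[port]
    try:
        # Consult the local services database (/etc/services on UNIX-like systems).
        return socket.getservbyport(port, proto)
    except OSError:
        return "unknown"
```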

Identifying traffic from a host and the ports being used by the host can be handled using methods we previously covered, such as using a port scanner, having a detection tool inline, or reading traffic from a SPAN port. An example is using nmap commands that include application data, such as the nmap -sV command shown in Figure 8-19. This nmap command checks the port against more than 2,200 well-known services. It is important to note that a general nmap scan only provides a best guess for the service based on the port number, whereas the nmap -sV command interrogates the open ports using probes that the specific service understands to validate what is really running. This also holds true for identifying applications, which we will cover in the next section.
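A tiny banner-grabbing sketch in Python shows the difference between guessing by port and probing. Like nmap's NULL probe, it connects and waits for the service to speak first, then matches the reply against a signature table. The three signatures, grab_banner(), and identify_service() are hypothetical simplifications. Nmap's real probe database is far larger and also sends protocol-specific requests to services that stay silent.

```python
import re
import socket

# Hypothetical signature table matched against the first bytes a service sends.
BANNER_SIGNATURES = [
    (re.compile(rb"^SSH-\d\.\d+-"), "ssh"),                     # e.g. SSH-2.0-OpenSSH_7.4
    (re.compile(rb"^RFB \d{3}\.\d{3}\n"), "vnc"),               # e.g. RFB 003.008
    (re.compile(rb"^220[ -].*SMTP", re.IGNORECASE), "smtp"),  # e.g. 220 host ESMTP
]


def grab_banner(host, port, timeout=2.0, max_bytes=256):
    """Connect and return whatever the service sends first (may be b"")."""
    with socket.create_connection((host, port), timeout=timeout) as s:
        try:
            return s.recv(max_bytes)
        except socket.timeout:
            return b""  # many services (e.g. HTTP) wait for the client to speak first


def identify_service(banner):
    """Return the name of the first matching signature, or "unknown"."""
    for pattern, name in BANNER_SIGNATURES:
        if pattern.match(banner):
            return name
    return "unknown"
```

Because the match is on what the service says rather than on the port, an SSH or VNC server can be recognized even when it runs on a nonstandard port.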

The list that follows highlights the key concepts covered in this section:

A running process is an instance of a computer program being executed.

Identifying traffic and ports from a host can be accomplished by using a port scanner, having a detection tool inline, or reading traffic from a SPAN port.

Identifying running processes uses tools similar to those used for discovering listening ports.

Applications

The final topic for this chapter is detecting and monitoring host applications. An application is software that performs a specific task. Applications can be found on desktops, laptops, mobile devices, and so on. They run inside the operating system and range from simple tasks to complicated programs. Applications can be identified using the methods previously covered, such as identifying which protocols are seen by a scanner, the type of clients (such as a web browser or email client), and the systems they are communicating with (such as which web applications are being used).

Note

Applications operate at the top of the OSI and TCP/IP layer models, whereas traffic is sent by the transport and lower layers, as shown in Figure 8-20.

To view applications on a Windows system with access to the host, you can use the same methods we covered for viewing processes. The Task Manager is one option, as shown in Figure 8-21. Notice in this example I’m running two applications: a command terminal and the Google Chrome web browser.

For OS X systems, you can use Force Quit Applications (accessed with Command+Option+Escape), as shown in Figure 8-22, or the Activity Monitor tool.

Once again, these options for viewing applications are great if you have access to the host as well as the proper privilege rights to run those commands or applications; however, let’s look at identifying the applications as an outsider profiling a system on the same network.

The first tool to consider is a port scanner that can interrogate for more information than port data. Nmap version scanning (the -sV option) further interrogates open ports by probing for specific services, which tells nmap what is really running rather than just which ports are open. Even a basic scan run with the -v (verbose) option can display many details, including the following information showing which ports are open and the service associated with each port:

PORT     STATE SERVICE
80/tcp   open  http
631/tcp  open  ipp
3306/tcp open  mysql
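When scan results feed other tools, the port table can be extracted programmatically. This minimal Python sketch parses lines like the ones above from nmap's normal output. parse_nmap_ports() is a hypothetical helper. For anything beyond a quick script, nmap's XML output (-oX) is a more stable format to parse.

```python
import re

# Matches port lines such as "80/tcp   open  http" in nmap's normal output;
# any extra columns (such as VERSION from -sV) are ignored.
PORT_LINE = re.compile(r"^(\d+)/(tcp|udp)\s+(\S+)\s+(\S+)")


def parse_nmap_ports(text):
    """Return (port, protocol, state, service) tuples from nmap normal output."""
    results = []
    for line in text.splitlines():
        m = PORT_LINE.match(line.strip())
        if m:
            port, proto, state, service = m.groups()
            results.append((int(port), proto, state, service))
    return results
```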

A classification engine available in Cisco IOS software that can be used to identify applications is Network-Based Application Recognition (NBAR). When enabled on an IOS router interface, NBAR maps traffic ports to protocols and can also recognize traffic that doesn’t use a standard port, such as various peer-to-peer protocols. NBAR is typically used to identify traffic for QoS policies; however, you can also use the show ip nbar protocol-discovery command, as shown in Figure 8-23, to see which protocols and associated applications NBAR has identified.
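Recognizing applications that do not use a standard port can be sketched in Python. The approach checks the first payload bytes against known signatures, then falls back to the destination port. This is not how NBAR is implemented internally. The signature list, PORT_MAP, and classify() are hypothetical. The BitTorrent entry uses the peer handshake, which begins with the byte 19 followed by the string "BitTorrent protocol".

```python
# Hypothetical signatures: payload prefixes are checked before the port table,
# so an application is recognized even on a nonstandard port.
PAYLOAD_SIGNATURES = [
    (b"\x13BitTorrent protocol", "bittorrent"),  # BitTorrent peer handshake
    (b"SSH-", "ssh"),                              # SSH version banner
    ((b"GET ", b"POST ", b"HEAD "), "http"),      # common HTTP request methods
]

PORT_MAP = {22: "ssh", 25: "smtp", 53: "dns", 80: "http", 443: "https"}


def classify(dst_port, payload):
    """Classify a flow by its first payload bytes, falling back to the port."""
    for prefix, app in PAYLOAD_SIGNATURES:
        if payload.startswith(prefix):  # prefix may be bytes or a tuple of bytes
            return app
    return PORT_MAP.get(dst_port, "unknown")
```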

Many other tools with built-in application-detection capabilities are available. Most content filters and network proxies can provide application layer details, such as Cisco’s Web Security Appliance (WSA).

Even NetFlow can have application data added when using a Cisco StealthWatch Flow Sensor. The Flow Sensor adds detection of 900 applications while it converts raw packet data into NetFlow records. Application layer firewalls, such as Cisco Firepower (shown in Figure 8-24), also provide detailed application data.
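Conceptually, a flow sensor merges all packets that share the same 5-tuple into one record. The 5-tuple is the source and destination address, the source and destination port, and the protocol. This minimal Python sketch assumes a hypothetical Packet type whose app field has already been filled in by some classification step. Real NetFlow records also carry timestamps, TCP flags, and interface information.

```python
from collections import namedtuple

# Hypothetical packet summary; "app" is assumed to be set by a classifier.
Packet = namedtuple("Packet", "src dst sport dport proto size app")


def packets_to_flows(packets):
    """Aggregate packets into flow records keyed by the 5-tuple.

    Each record counts packets and bytes and keeps the application label.
    """
    flows = {}
    for p in packets:
        key = (p.src, p.dst, p.sport, p.dport, p.proto)
        rec = flows.setdefault(key, {"packets": 0, "bytes": 0, "app": p.app})
        rec["packets"] += 1
        rec["bytes"] += p.size
    return flows
```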