CHAPTER 3

Forecasting Performance Evaluation and Reporting

Process improvement begins with process measurement. But it can be a challenge to find the right metrics to motivate the desired behavior. A simple example is provided by Steve Morlidge (in an article later in this chapter) for the case of intermittent demand:

When 50% or more of the periods are zero, a forecast of zero every period will generate the lowest average absolute error—irrespective of the size of the nonzero values. Yet forecasting zero every period is probably the wrong thing to do for inventory planning and demand fulfillment.

There are dozens of available forecasting performance metrics. Some, like mean absolute percent error (MAPE), represent error as a percentage. Others, like mean absolute error (MAE), are scale dependent; that is, they report the error in the original units of the data. Relative-error metrics (such as Theil’s U or forecast value added (FVA)) compare performance versus a benchmark (typically a naïve model). Each metric has its place—a situation where it is suitable to use and informative. But there are also countless examples (many provided in the articles below) where particular metrics are unsuitable and lead decision makers to inappropriate conclusions.

After Len Tashman’s opening overview and tutorial on forecast accuracy measurement, this chapter provides a critical exploration of many specific metrics and methods for evaluating forecasting performance. It covers some innovative approaches to performance reporting—including the application of statistical process control methods to forecasting. And it concludes with the most fundamental—yet frequently unasked—question in any performance evaluation: Can you beat the naïve forecast?

3.1 Dos and Don’ts of Forecast Accuracy Measurement: A Tutorial1

Len Tashman

As a forecaster, you’ve acquired a good deal of knowledge about statistical measurements of accuracy, and you’ve applied accuracy metrics alongside a large dose of common sense. This tutorial is designed to confirm your use of appropriate practices in forecast accuracy measurement, and to suggest alternatives that may provide new and better insights.

Perhaps most important, we warn you of practices that can distort or even undermine your accuracy evaluations. You will see these listed below as taboos—errors and omissions that must be avoided.

The Most Basic Issue: Distinguish In-Sample Fit from Out-of-Sample Accuracy

Using a statistical model applied to your sales history of daily, weekly, monthly, or quarterly data, possibly modified by judgmental adjustments, you generate forecasts for a certain number of periods into the future. We’ll use month as the general time interval. The question, “How accurate is your model?”—a question that contributes to decisions on whether the model is reliable as a forecasting tool—has two distinct components:

- In-Sample or Fitting Accuracy: How closely does the model track (fit, reproduce) the historical data that were used to generate the forecasts? We call this component in-sample accuracy. If you used the most recent 36 months of history to fit your model, the metrics of in-sample fit reveal the proximity of the model’s estimates to the actual values over each of the past 36 months.

- Out-of-Sample or Forecasting Accuracy: How closely will the model predict activity for the months ahead? The essential distinction from fitting accuracy is that you are now predicting into an unknown future, as opposed to predicting historical activity whose measurements were known to you. This forecasting accuracy component is often called out-of-sample accuracy, since the future is necessarily outside the sample of historical data we have about the past.

The best practice is to calculate and report measurements of both fitting and forecasting accuracy. The differences in the figures can be dramatic, and the key is to avoid taboo #1.

Volumes of research tell us that you cannot judge forecasting accuracy by fitting accuracy. For example, if your average error in-sample is found to be 10%, it is very probable that forecast errors will average substantially more than 10%. More generally put, in-sample errors are liable to understate errors out of sample. The reason is that you have calibrated your model to the past but you cannot calibrate to a future that has yet to occur.

How serious can violation of Taboo #1 be? If in-sample errors average 10%, how much larger than 10% will forecast errors be? A bit, twice the 10% figure, five times this figure, or 100 times this figure? That depends upon how closely the near future tracks the recent past, but it would not be surprising to find that out-of-sample errors are more than double the magnitude of in-sample errors.

The point to remember is this: The use of in-sample figures as a guide to forecasting accuracy is a mistake (a) that is of a potentially major magnitude; (b) that occurs far too often in practice; and (c) that is perpetuated by omissions in some, and perhaps most, forecasting software programs (think Excel, for example). The lack of software support is one reason that this mistake persists.

So how do you keep the distinction between fitting and forecasting accuracy clearly delineated?

Assessing Forecast Accuracy

There are at least three approaches that can be used to measure forecasting accuracy. These are:

- Wait and see in real time

- Use holdout samples

- Create retrospective evaluations

While there are variations on these themes, it is worthwhile understanding their basic similarities and differences.

Real-Time Evaluations

A company commits to a forecast for “next month” on or before the last day of the current month. This is a forecast with a one-month lead-time, or one-month horizon. We call it a one-month-ahead forecast.

Suppose the May forecast is presented by April 30. Soon after May has elapsed, the activity level for this month is a known fact. The difference between that level and what had been forecast on April 30 is the forecast error for the month of May, a one-month-ahead forecast error.

The company has developed worksheets that show the actuals, forecasts, and errors-by-month over the past few years. They use these figures to compare alternative forecasting procedures and to see if accuracy is improving or deteriorating over time.

The real-time evaluation is “pure” in that forecasts for the next month do not utilize any information that becomes known after the month (May) begins. One disadvantage here is that there is more than a month’s lag before the next accuracy figure can be calculated.

The most critical lead-time for judging forecast accuracy is determined by the order/replenishment cycle. If it takes two months, on average, to obtain the resources to produce the product or service, then forecasting accuracy at two months ahead is the critical lead-time.

There is also an inconvenience to real-time evaluations. These normally must be done outside the forecasting tool, requiring creation of worksheets to track results. If the company wishes to learn how accurately it can forecast more than one month ahead—for example, forecasting with lead-times of two months, three months, or longer—it will need to create a separate worksheet for each lead-time.

Holdout Samples

Many software tools support holdout samples. They allow you to divide the historical data on an item, product, or family into two segments. The earlier segment serves as the fit or in-sample period; the fit-period data are used to estimate statistical models and determine their fitting accuracy. The more recent past is held out of the fit period to serve as the test, validation, or out-of-sample period: Since the test-period data have not been used in choosing or fitting the statistical models, they represent the future that the models are trying to forecast. Hence, a comparison of the forecasts against the test-period data is essentially a test of forecasting accuracy.

Peeking

Holdout samples permit you to obtain impressions of forecast accuracy without waiting for the future to materialize (they have another important virtue as well, discussed in the next section). One danger, however, is peeking, which is what occurs when a forecaster inspects the holdout sample to help choose a model. You can’t peek into the future, so peeking at the held-out data undermines the forecast-accuracy evaluation.

Another form of the peeking problem occurs when the forecaster experiments with different models and then chooses the one that best “forecasts” the holdout sample of data. This overfitting procedure is a no-no because it effectively converts the out-of-sample data into in-sample data. After all, how can you know how any particular model performed in the real future, without waiting for the future to arrive?

In short, if the holdout sample is to provide an untainted view of forecast accuracy, it must not be used for model selection.

Single Origin Evaluations

Let’s say you have monthly data for the most recent 4 years, and have divided this series into a fit period of the first 3 years, holding out the most recent 12 months to serve as the test period. The forecast origin would be the final month of year 3. From this origin, you forecast each of the 12 months of year 4. The result is a set of 12 forecasts, one each for lead-times 1–12.

What can you learn from these forecasts? Very little, actually, since you have only one data point on forecast accuracy for each lead-time. For example, you have one figure telling you how accurately the model predicted one month ahead. Judging accuracy from samples of size 1 is not prudent. Moreover, this one figure may be “corrupted” by occurrences unique to that time period.

Further, you will be tempted (and your software may enable you) to average the forecast errors over lead-times 1–12. Doing so gives you a metric that is a mélange of near-term and longer-term errors that have ceased to be linked to your replenishment cycle.

Rolling Origin Evaluations

The shortcomings of single-origin evaluations can be overcome in part by successively updating the forecast origin. This technique is also called a rolling-origin evaluation. In the previous example (4 years of monthly data, the first 3 serving as the fit period and year 4 as the test period), you begin the same way, by generating the 12 forecasts for the months of year 4 . . . but you don’t stop there.

You then move the first month of year 4 from the test period into the fit period, and refit the same statistical model to the expanded in-sample data. The updated model generates 11 forecasts, one each for the remaining months of year 4.

The process continues by updating the fit period to include the second month of year 4, then the third month, and so forth, until your holdout sample is exhausted (down to a single month). Look at the results:

- 12 data points on forecast accuracy for forecasting one month ahead

- 11 data points on forecast accuracy for forecasting two months ahead

- . . .

- 2 data points on forecast accuracy for forecasting 11 months ahead

- 1 data point on forecast accuracy for forecasting 12 months ahead.

If your critical lead-time is 2 months, you now have 11 data points for judging how accurately the statistical procedure will forecast two months ahead. An average of the 11 two-months-ahead forecast errors will be a valuable metric and one that does not succumb to Taboo #3.

Performing rolling-origin evaluations is feasible only if your software tool supports this technique. A software survey that Jim Hoover and I did in 2000 for the Principles of Forecasting project (Tashman and Hoover, 2001) found that few demand-planning tools, spreadsheet packages, and general statistical programs offered this support. However, dedicated business-forecasting software packages do tend to provide more adequate support for forecasting-accuracy evaluations.

How Much Data to Hold Out?

There are no hard and fast rules. Rather, it’s a balancing act between too large and too small a holdout sample. Too large a holdout sample and there is not enough data left in-sample to fit your statistical model. Too small a holdout sample and you don’t acquire enough data points to reliably judge forecasting accuracy.

If you are fortunate to have a long history—say, 48 months of data or more—you are free to make the decision on the holdout sample based on common sense. Normally, I hold out the final year (12 months), using the earlier years to fit the model. This gives a picture of how that model would have forecast each month of the past year.

If the items in question have a short replenishment cycle—2 months, let’s say—you’re interested mainly in the accuracy of 2-months-ahead forecasts. In this situation, I recommend holding out at least 4 months. In a rolling-origin evaluation, you’ll receive 4 data points on accuracy for one month ahead and 3 for two months ahead. (Had you held out only 2 months, you’d receive only 1 data point on accuracy for your 2-months-ahead forecast.) I call this the H+2 rule, where H is the forecast horizon determined by your replenishment cycle.

When you have only a short history, it is not feasible to use a holdout sample. But then statistical accuracy metrics based on short histories are not reliable to begin with.

Retrospective Evaluations

Real-time evaluations and holdout samples are two approaches to assessment of forecasting accuracy. Retrospective evaluation is a third. Here, you define a target month, say the most recent December. Then you record the forecasts for the target month that were made one month ago, two months ago, three months ago, and so forth. Subtracting each forecast from the actual December value gives you the error in a forecast made so many months prior. So-called backtracking grids or waterfall charts are used to display the retrospective forecast errors. An example can be seen at www.mcconnellchase.com/fd6.shtml.

It would be a good sign if the errors diminish as you approach the target month.

The retrospective evaluation, like the rolling-origin evaluation, allows you to group errors by lead-time. You do this by repeating the analysis for different target months and then collecting the forecast errors into one month before, two months before, and so forth. If your replenishment cycle is short, you need go back only a few months prior to each target.

Accuracy Metrics

The core of virtually all accuracy metrics is the difference between what the model forecast and the actual data point. Using A for the actual and F for the forecast, the difference is the forecast error.

The forecast error can be calculated as A – F (actual minus forecast) or F – A (forecast minus actual). Most textbooks and software present the A – F form, but there are plenty of fans of both methods. Greene and Tashman (2008) summarize the preferences between the two forms. Proponents of A – F cite convention—it is the more common representation—while advocates of F – A say it is more intuitive, in that a positive error F > A represents an overforecast and F < A an underforecast. With A – F, an overforecast is represented by a negative error, which could be confusing to some people.

However, the form really doesn’t matter when the concern is with accuracy rather than bias. Accuracy metrics are calculated on the basis of the difference between actual and forecast without regard to the direction of the difference. The directionless difference is called the absolute value of the error. Using absolute values prevents negative and positive errors from offsetting each other and focuses your attention on the size of the errors.

In contrast, metrics that assess bias—a tendency to misforecast in one direction—retain the sign of the error (+ or –) as an indicator of direction. Therefore, it is important to distinguish metrics that reveal bias from those that measure accuracy or average size of the errors.

The presence and severity of bias is more clearly revealed by a graph rather than a metric.

Pearson (2007) shows you how to create a Prediction-Realization Diagram. This single graphic reveals whether your forecasts are biased (and in what direction), how large your errors are (the accuracy issue), and if your forecasts are better than a naïve benchmark. Seeing all this in one graphic reveals patterns in your forecast errors and insights into how to improve your forecasting performance.

Classification of Accuracy Metrics

Hyndman (2006) classifies accuracy metrics into 4 types, but here I’m going to simplify his taxonomy into 3 categories:

- Basic metrics in the original units of the data (same as Hyndman’s scale dependent)

- Basic metrics in percentage form (same as Hyndman’s percentage error)

- Relative-error metrics (Hyndman’s relative and scale-free metrics)

I use the term basic metric to describe the accuracy of a set of forecasts for a single item from a single procedure or model. In a basic metric, aggregation is not an issue; that is, we are not averaging errors over many items.

In contrast to basic metrics, relative-error metrics compare the accuracy of a procedure against a designated benchmark procedure.

Aggregate-error metrics can be compiled from both basic and relative-error metrics.

Basic Metrics in the Original Units of the Data

Basic metrics reveal the average size of the error. The “original units” of the data will normally be volume units (# cases, # widgets) or monetary units (value of orders or sales).

The principal metric of this type is the mean of the absolute errors, symbolized normally as the MAD (mean absolute deviation) or MAE (mean absolute error). Recall that by “absolute” we mean that negative errors are not allowed to offset positive errors (that is, over- and underforecasts do not cancel). If we permitted negatives to offset positives, the result could well be an average close to zero, despite large errors overall.

A MAD of 350 cases tells us that the forecasts were off by 350 cases on the average.

An alternative to the MAD (MAE) that prevents cancellation of negatives and positives is the squared-error metric, variously called the RMSE (root mean square error), the standard deviation of the error (SDE), or standard error (SE). These metrics are more popular among statisticians than among forecasters, and are a step more challenging to interpret and explain. Nevertheless, they remain the most common basis for calculations of safety stocks for inventory management, principally because of the (questionable) tradition of basing safety-stock calculations on the bell-shaped Normal distribution.

MAPE: The Basic Metric in Percentage Form

The percentage version of the MAD is the MAPE (the mean of the absolute percentage errors).

A MAPE of 3.5% tells us that the forecasts were off by 3.5% on the average.

There is little question that the MAPE is the most commonly cited accuracy metric, because it seems so easy to interpret and understand. Moreover, since it is a percentage, it is scale free (not in units of widgets, currency, etc.) while the MAD, which is expressed in the units of the data, is therefore scale dependent.

A scale-free metric has two main virtues. First, it provides perspective on the size of the forecast errors to those unfamiliar with the units of the data. If I tell you that my forecast errors average 175 widgets, you really have no idea if this is large or small; but if I tell you that my errors average 2.7%, you have some basis for making a judgment.

Secondly, scale-free metrics are better for aggregating forecast errors of different items. If you sell both apples and oranges, each MAD is an average in its own fruit units, making aggregation silly unless the fruit units are converted to something like cases. But even if you sell two types of oranges, aggregation in the original data units will not be meaningful when the sales volume of one type dominates that of the other. In this case, the forecast error of the lower-volume item will be swamped by the forecast error of the higher-volume item. (If 90% of sales volume is of navel oranges and 10% of mandarin oranges, equally accurate procedures for forecasting the two kinds will yield errors that on average are 9 times greater for navel oranges.)

Clearly, the MAPE has important virtues. And, if both MAD and MAPE are reported, the size of the forecast errors can be understood in both the units of the data and in percentage form.

Still, while the MAPE is a near-universal metric for forecast accuracy, its drawbacks are poorly understood, and these can be so severe as to undermine the forecast accuracy assessment.

MAPE: The Issues

Many authors have warned about the use of the MAPE. A brief summary of the issues:

- Most companies calculate the MAPE by expressing the (absolute) forecast error as a percentage of the actual value A. Some, however, prefer a percentage of the forecast F; others, a percentage of the average of A and F; and a few use the higher of A or F. Greene and Tashman (2009) present the various explanations supporting each form.

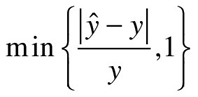

- The preference for use of the higher of A or F as the denominator may seem baffling; but, as by Hawitt (20102010), this form of the MAPE may be necessary if the forecaster wishes to report “forecast accuracy” rather than “forecast error.” This is the situation when you wish to report that your forecasts are 80% accurate rather than 20% in error on the average.

- Kolassa and Schutz (2007) describe three potential problems with the MAPE. One is the concern with forecast bias, which can lead us to inadvertently prefer methods that produce lower forecasts; a second is with the danger of using the MAPE to calculate aggregate error metrics across items; and the third is the effect on the MAPE of intermittent demands (zero orders in certain time periods). The authors explain that all three concerns can be overcome by use of an alternative metric to the standard MAPE called the MAD/MEAN ratio. I have long felt that the MAD/MEAN ratio is a superior metric to the MAPE, and recommend that you give this substitution serious consideration.

- The problem posed by intermittent demands is especially vexing. In the traditional definition of the MAPE—the absolute forecast error as a percentage of the actual A—a time period of zero orders (A = 0) means the error for that period is divided by zero, yielding an undefined result. In such cases, the MAPE cannot be calculated. Despite this, some software packages report a figure for the “MAPE” that is potentially misleading. Hoover (2006) proposes alternatives, including the MAD/MEAN ratio.

- Hyndman’s (2006) proposal for replacing the MAPE in the case of intermittent demands is the use of a scaled-error metric, which he calls the mean absolute scaled error or MASE. The MASE, he notes, is closely related to the MAD/MEAN ratio.

- Another issue is the aggregation problem: What metrics are appropriate for measurement of aggregate forecast error over a range (group, family, etc.) of items. Many companies calculate an aggregate error by starting with the MAPE of each item and then weighting the MAPE by the importance of the item in the group, but this procedure is problematic when the MAPE is problematic. Again, the MAD/MEAN ratio is a good alternative in this context as well.

Relative Error Metrics

The third category of error metrics is that of relative errors, the errors from a particular forecast method in relation to the errors from a benchmark method. As such, this type of metric, unlike basis metrics, can tell you whether a particular forecasting method has improved upon a benchmark. Hyndman (2006) provides an overview of some key relative-error metrics including his preferred metric, the MASE.

The main issue in devising a relative-error metric is the choice of benchmark. Many software packages use as a default benchmark the errors from a naïve model, one that always forecasts that next month will be the same as this month. A naïve forecast is a no-change forecast (another name used for the naïve model is the random walk). The ratio of the error from your forecasting method to that of the error from the naïve benchmark is called the relative absolute error. Averaging the relative absolute errors over the months of the forecast period yields an indication of the degree to which your method has improved on the naïve.

Of course, you could and should define your own benchmark; but then you’ll need to find out if your software does the required calculations. Too many software packages offer limited choices for accuracy metrics and do not permit variations that may interest you. More generally, the problem is that your software may not support best practices.

A relative-error metric not only can tell you how much your method improves on a benchmark; it provides a needed perspective for bad data situations. Bad data usually means high forecast errors, but high forecast errors do not necessarily mean that your forecast method has failed. If you compare your errors against the benchmark, you may find that you’ve still made progress, and that the source of the high error rate is not bad forecasting but bad data.

Benchmarking and Forecastability

Relative-error metrics represent one form of benchmarking, that in which the forecast accuracy of a model is compared to that of a benchmark model. Typically, the benchmark is a naïve model, one that forecasts “no change” from a base period.

Two other forms are more commonly employed. One is to benchmark against the accuracy of forecasts made for similar products or under similar conditions. Frequently, published surveys of forecast accuracy (from a sample of companies) are cited as the source of these benchmarks. Company names of course are not disclosed. This is external benchmarking.

In contrast, internal benchmarking refers to comparisons of forecasting accuracy over time, usually to determine whether improvements are being realized.

External Benchmarking

Kolassa (2008) has taken a critical look at these surveys and questions their value as benchmarks. Noting that “comparability” is the key in benchmarking, he identifies potential sources of incomparability in the product mix, time frame, granularity, and forecasting process. This article is worth careful consideration for the task of creating valid benchmarks.

Internal Benchmarking

Internal benchmarking is far more promising than external benchmarking, according to Hoover (2009) and Rieg (2008). Rieg develops a case study of internal benchmarking at a large automobile manufacturer in Germany. Using the MAD/MEAN ratio as the metric, he tracks the changes in forecasting accuracy over a 15-year period, being careful to distinguish organizational changes, which can be controlled, from changes in the forecasting environment, which are beyond the organization’s control.

Hoover provides a more global look at internal benchmarking. He first notes the obstacles that have inhibited corporate initiatives in tracking accuracy. He then presents an eight-step guide to the assessment of forecast accuracy improvement over time.

Forecastability

Forecastability takes benchmarking another step forward. Benchmarks give us a basis for comparing our forecasting performance against an internal or external standard. However, benchmarks do not tell us about the potential accuracy we can hope to achieve.

Forecastability concepts help define achievable accuracy goals.

Catt (2009) begins with a brief historical perspective on the concept of the data-generating process, the underlying process from which our observed data are derived. If this process is largely deterministic—the result of identifiable forces—it should be forecastable. If the process is essentially random—no identifiable causes of its behavior—it is unforecastable. Peter uses six data series to illustrate these fundamental aspects of forecastability. Now the question is what metrics are there to assess forecastability.

Several books, articles, and blogs have proposed the coefficient of variation as a forecastability metric. The coefficient of variation is the ratio of some measure of variation (e.g., the standard deviation) of the data to an average (normally the mean) of the data. It reveals something about the degree of variation around the average. The presumption made is that the more variable (volatile) the data series, the less forecastable it is; conversely, the more stable the data series, the easier it is to forecast.

Catt demonstrates, however, that the coefficient of variation does not account for behavioral aspects of the data other than trend and seasonality, and so has limitations in assessing forecastability. A far more reliable metric, he finds, is that of approximate entropy, which measures the degree of disorder in the data and can detect many patterns beyond mere trend and seasonality.

Yet, as Boylan (2009) notes, metrics based on variation and entropy are really measuring the stability-volatility of the data and not necessarily forecastability. For example, a stable series may nevertheless come from a data-generating process that is difficult to identify and hence difficult to forecast. Conversely, a volatile series may be predictable based on its correlation with other variables or upon qualitative information about the business environment. Still, knowing how stable-volatile a series is gives us a big head start, and can explain why some products are more accurately forecast than others.

Boylan argues that a forecastability metric should supply an upper and lower bound for forecast error. The upper bound is the largest degree of error that should occur, and is normally calculated as the error from a naïve model. After all, if your forecasts can’t improve on simple no-change forecasts, what have you accomplished? On this view, the relative-error metrics serve to tell us if and to what extent our forecast errors fall below the upper bound.

The lower bound of error represents the best accuracy we can hope to achieve. Although establishing a precise lower bound is elusive, Boylan describes various ways in which you can make the data more forecastable, including use of analogous series, aggregated series, correlated series, and qualitative information.

Kolassa (2009) compares the Catt stability metric with the Boylan forecastability bounds. He sees a great deal of merit in the entropy concept, pointing out its successful use in medical research, quantifying the stability in a patient’s heart rate. However, entropy is little understood in the forecasting community and is not currently supported by forecasting software. Hopefully, that will change, but he notes that we do need more research on the interrelation of entropy and forecast-error bounds.

These articles do not provide an ending to the forecastability story, but they do clarify the issues and help you avoid simplistic approaches.

Costs of Forecast Error

Forecast accuracy metrics do not reveal the financial impact of forecast error, which can be considerable. At the same time, we should recognize that improved forecast accuracy does not automatically translate into operational benefits (e.g., improved service levels, reduced inventory costs). The magnitude of the benefit depends upon the effectiveness of the forecasting and the planning processes. Moreover, there are costs to improving forecast accuracy, especially when doing so requires upgrades to systems, software, and training.

How can we determine the costs of forecast error and the costs and benefits of actions designed to reduce forecast error? A good starting point is the template provided by Catt (2007a). The cost of forecast error (CFE) calculation should incorporate both inventory costs (including safety stock) and the costs of poor service (stockouts).

The calculation requires (1) information or judgment calls about marginal costs in production and inventory, (2) a forecast-error measurement that results from a statistical forecast, and (3) the use of a statistical table (traditionally the Normal Distribution) to translate forecast errors into probabilities of stockouts.

The potential rewards from a CFE calculation can be large. First, the CFE helps guide decisions about optimal service level and safety stock, often preventing excessive inventory. Additionally, CFE calculations could reveal that systems upgrades may not be worth the investment cost.

Clarifications and enhancements to this CFE template are offered by Boylan (2007) and Willemain (2007). Boylan recommends that service-level targets be set strategically—at higher levels of the product hierarchy—than tactically at the item level. John also shows how you can get around the absence of good estimates of marginal costs by creating tradeoff curves and applying sensitivity analysis to cost estimates.

Willemain explains that the use of the normal distribution is not always justifiable, and can lead to excessive costs and poor service. Situations in which we really do need an alternative to the normal distribution—such as the bootstrap approach—include service parts, and short and intermittent demand histories. He also makes further suggestions for simplifying the cost assumptions required in the CFE calculation.

Catt’s (2007b) reply is to distinguish the cost inputs that can usually be extracted from the accounting system from those that require some subjectivity. He concurs with Boylan’s recommendation of the need for sensitivity analysis of the cost estimates and shows how the results can be displayed as a CFE surface plot. Such a plot may reveal that the CFE is highly sensitive to, say, the inventory carrying charge, but insensitive to the service level.

Software could and should facilitate the CFE calculation; however, Catt sadly notes that he has yet to find a package that does: “Vendors often promise great benefits but provide little evidence of them.”

And this leads us to our final taboo:

REFERENCES

- Boylan, J. (2007). Key assumptions in calculating the cost of forecast error. Foresight: International Journal of Applied Forecasting 8, 22–24.

- Boylan, J. (2009). Toward a more precise definition of forecastability. Foresight: International Journal of Applied Forecasting 13, 34–40.

- Catt, P. (2007a). Assessing the cost of forecast error: A practical example. Foresight: International Journal of Applied Forecasting 7, 5–10.

- Catt, P. (2007b). Reply to “Cost of Forecast Error” commentaries. Foresight 8, 29–30.

- Catt, P. (2009). Forecastability: Insights from physics, graphical decomposition, and information theory. Foresight: International Journal of Applied Forecasting 13, 24–33.

- Greene, K., and Tashman, L. (2008). Should we define forecast error as E = F – A or E = A – F? Foresight 10, 38–40.

- Greene, K., and Tashman, L. (2009). Percentage Error: What Denominator? Foresight 12, 36–40.

- Hawitt, D. (2010), Should you report forecast error or forecast accuracy? Foresight 19, Summer 2010, p. 46.

- Hoover, J. (2006). Measuring forecast accuracy: Omissions in today’s forecasting engines and demand planning software. Foresight: International Journal of Applied Forecasting 4, 32–35.

- Hoover, J. (2009). How to track forecast accuracy to guide forecast process improvement. Foresight 14, 17–23.

- Hyndman, R. (2006). Another look at forecast-accuracy metrics for intermittent demand. Foresight 4, 43–46.

- Kolassa, S., and Schütz, W. (2007). Advantages of the MAD/MEAN ratio over the MAPE. Foresight: International Journal of Applied Forecasting 6, 40–43.

- Kolassa, S. (2008). Can we obtain valid benchmarks from published surveys of forecast accuracy? Foresight 11, 6–14.

- Kolassa, S. (2009). How to assess forecastability. Foresight: International Journal of Applied Forecasting 13, 41–45.

- Pearson, R. (2007). An expanded prediction-realization diagram for assessing forecast errors. Foresight 7, 11–16.

- Rieg, R. (2008). Measuring improvement in forecast accuracy, a case study. Foresight: International Journal of Applied Forecasting 11, 15–20.

- Tashman, L., and Hoover, J. (2000). Diffusion of forecasting principles through software. In J. S.Armstrong (ed.), Principles of Forecasting 651–676.

- Willemain, T. (2007). Use of the normal distribution in calculating the cost of forecast error. Foresight: International Journal of Applied Forecasting 8, 25–26.

3.2 How to Track Forecast Accuracy to Guide Forecast Process Improvement2

Jim Hoover

Introduction

One of the more important tasks in supply-chain management is improving forecast accuracy. Because your investment in inventory is tied to it, forecast accuracy is critical to the bottom line. If you can improve accuracy across your range of SKUs, you can reduce the safety-stock levels needed to reach target fill rates.

I have seen a great deal of information in the forecasting literature on measuring forecasting accuracy for individual items at a point in time but see very little attention paid to the issues of tracking changes in forecasting accuracy over time, especially for the aggregate of items being forecast. Foresight has begun to address this topic with a case study from Robert Rieg (2008).

In practice, the portion of firms tracking aggregated accuracy is surprisingly small. Teresa McCarthy and colleagues (2006) reported that only 55% of the companies they surveyed believed that forecasting performance was being formally evaluated. When I asked the same question at a recent conference of forecasting practitioners, I found that approximately half of the participants indicated that their company tracked forecast accuracy as a key performance indicator; less than half reported that financial incentives were tied to forecast-accuracy measurement.

Obstacles to Tracking Accuracy

Why aren’t organizations formally tracking forecast accuracy? One reason is that forecasts are not always stored over time. Many supply-chain systems with roots in the 1960s and 1970s did not save prior-period forecasts because of the high cost of storage in that era. Technology advances have reduced storage costs and, while the underlying forecast applications have been re-hosted on new systems, they have not been updated to retain prior forecasts, thus forfeiting the possibility of tracking performance over time.

A second reason is that saving the history in a useful manner sometimes requires retention of the original customer-level demand data. These are the data that can later be rebuilt into different levels of distribution center activity, when DCs are added or removed. This additional requirement creates a much larger storage challenge than saving just the aggregated forecasts.

Third, there are companies that haven’t settled on a forecast-accuracy metric. While this may seem to be a simple task, the choice of metric depends on the nature of the demand data. For intermittent demands, popular metrics such as the Mean Absolute Percentage Error (MAPE) are inappropriate, as pointed out in Hoover (2006).

Finally, some companies don’t have processes in place that factor forecast-accuracy metrics into business decisions. So they lack the impetus to track accuracy.

Multistep Tracking Process

A process for effective tracking of forecasting accuracy has a number of key steps, as shown in Figure 3.1.

Figure 3.1 Key Steps in the Tracking Process

Step 1. Decide on the Forecast-Accuracy Metric

For many forecasters, the MAPE is the primary forecast-accuracy metric. Because the MAPE is scale-independent (since it is a percentage error, it is unit free), it can be used to assess and compare accuracy across a range of items. Kolassa and Schutz (2007) point out, however, that this virtue is somewhat mitigated when combining low- and high-volume items.

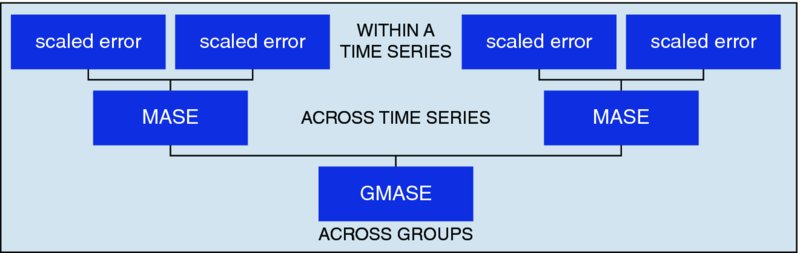

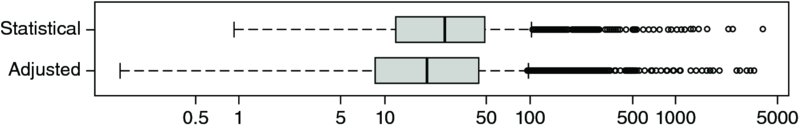

The MAPE is also a very problematic metric in certain situations, such as intermittent demands. This point was made in a feature section in Foresight entitled “Forecast-Accuracy Metrics for Inventory Control and Intermittent Demands” (Issue 4, June 2006). Proposed alternatives included the MAD/Mean ratio, a metric which overcomes many problems with low-demand SKUs and provides consistent measures across SKUs. Another metric is the Mean Absolute Scaled error, or MASE, which compares the error from a forecast model with the error resulting from a naïve method. Slightly more complex is GMASE, proposed by Valentin (2007), which is a weighted geometric mean of the individual MASEs calculated at the SKU level. Still other metrics are available, including those based on medians rather than means and using the percentage of forecasts that exceed an established error threshold.

In choosing an appropriate metric, there are two major considerations. The metric should be scale-independent so that it makes sense when applied to an aggregate across SKUs. Secondly, the metric should be intuitively understandable to management. The popularity of the MAPE is largely attributable to its intuitive interpretation as an average percentage error. The MAD-to-Mean is nearly as intuitive, measuring the average error as a percent of the average volume. Less intuitive are the MASE and GMASE.

I would recommend the more intuitive metrics, specifically MAD-to-Mean, because they are understandable to both management and forecasters. Using something as complicated as MASE or GMASE can leave some managers confused and frustrated, potentially leading to a lack of buy-in or commitment to the tracking metric.

Step 2. Determine the Level of Aggregation

The appropriate level of aggregation is the one where major business decisions on resource allocation, revenue generation, and inventory investment are made. This ensures that your forecast-accuracy tracking process is linked to the decisions that rely on the forecasts.

If you have SKUs stored both in retail sites and in a distribution center (DC), you will have the option to track forecast error at the individual retail site, at the DC, or at the overall aggregate level. If key business decisions (such as inventory investment and service level) are based on the aggregate-level SKU forecasts and you allocate that quantity down your supply chain, then you should assess forecast accuracy at the aggregate level. If you forecast by retail site and then aggregate the individual forecasts up to the DC or at the overall SKU aggregate, then you should be measuring forecasting accuracy at the individual site level. Again, the point is to track accuracy at the level where you make the important business decisions.

Additionally, you should consider tracking accuracy across like items. If you use one service-level calculation for fast-moving, continuous-demand items, and a second standard for slower- and intermittent-demand items, you should calculate separate error measures for the distinct groups.

Table 3.1 illustrates how the aggregation of the forecasts could be accomplished to calculate an average aggregate percent error for an individual time period.

Step 3. Decide Which Attributes of the Forecasting Process to Store

There are many options here, including:

- the actual demands

- the unadjusted statistical forecasts (before override or modifications)

- when manual overrides were made to the statistical forecast, and by whom

- when outliers were removed

- the method used to create the statistical forecast and the parameters of that method

- the forecaster responsible for that SKU

- when promotions or other special events occurred

- whether there was collaboration with customers or suppliers

- the weights applied when allocating forecasts down the supply chain

Table 3.1 Calculation of an Aggregate Percent Error

| SKUs at Store Location 1 | History Current Period | Forecast for Current Period | Error (History −Forecast) | Absolute Error | Absolute Percent Error |

| SKU 1 | 20 | 18 | 2 | 2 | 10.0% |

| SKU 2 | 10 | 15 | −5 | 5 | 50.0% |

| SKU 3 | 50 | 65 | −15 | 15 | 30.0% |

| SKU 4 | 5 | 2 | 3 | 3 | 60.0% |

| SKU 5 | 3 | 8 | −5 | 5 | 166.7% |

| SKU 6 | 220 | 180 | 40 | 40 | 18.2% |

| Average Error = 55.8% | |||||

Figure 3.2 Flowchart for Storing Attributes of a Forecasting Process

Choosing the right attributes facilitates a forecasting autopsy, which seeks explanations for failing to meet forecast-accuracy targets. For example, it can be useful to know if forecast errors were being driven by judgmental overrides to the statistical forecasts. To find this out requires that we store more than just the actual demands and final forecasts.

Figure 3.2 presents a flowchart illustrating the sequence of actions in storing key attributes. Please note that the best time to add these fields is when initially designing your accuracy-tracking system. It is more difficult and less useful to add them later, it will cost more money, and you will have to baseline your forecast autopsy results from the periods following any change in attributes. It is easier at the outset to store more data elements than you think you need, rather than adding them later.

Step 4. Apply Relevant Business Weights to the Accuracy Metric

George Orwell might have put it this way: “All forecasts are equal, but some are more equal than others.” The simple truth: You want better accuracy when forecasting those items that, for whatever reason, are more important than other items.

The forecast-accuracy metric can reflect the item’s importance through assignment of weights. Table 3.2 provides an illustration, using inventory holding costs to assign weights.

Table 3.2 Calculating a Weighted Average Percent

| SKUs at Store Location 1 | History Current Period | Forecast for Currant Period | Error (History-Forecast) | Absolute Error | Absolute Percent Error | Cost of Item | Inventory Holding Cost | Percentage of Total Holding Costs | Weighted APE Contribution | |

| SKU 1 | 20 | 18 | 2 | 2 | 10.0% | $50.00 | $900.00 | 5.3% | 0.5% | |

| SKU 2 | 10 | 15 | −5 | 5 | 50.0% | $50.00 | $750.00 | 4.4% | 2.2%. | |

| SKU 3 | 50 | 65 | −15 | 15 | 30.0% | $25.00 | $1,625.00 | 9.6% | 2.9% | |

| SKU 4 | 5 | 2 | 3 | 3 | 60.0% | $5.00 | $10.00 | 0.1% | 0.0% | |

| SKU 5 | 3 | 8 | −5 | 5 | 166.7% | $15.00 | $120.00 | 0.7% | 1.2% | |

| SKU 6 | 220 | 180 | 40 | 40 | 18.2% | $75.00 | $13,500.00 | 79.9% | 14.5% | |

| Weighted summary APE calculated from individual weights applied to SKU’s based on holding costs | Summarized Monthly APE = 21.4% Unweighted MAPE = 55.8% | |||||||||

As shown in this example, SKUs 3 and 6 have the larger weights and move the weighted APE metric down from the average of 55.8% (seen in Table 3.1) to 21.4%.

Use the weighting factor that makes the most sense from a business perspective to calculate your aggregated periodic forecast-accuracy metric. Here are some weighting factors to consider:

- Inventory holding costs

- Return on invested assets

- Expected sales levels

- Contribution margin of the item to business bottom line

- Customer-relationship metrics

- Expected service level

- “Never out” requirements (readiness-based)

- Inventory

Weighting permits the forecaster to prioritize efforts at forecast-accuracy improvement.

Step 5. Track the Aggregated Forecast-Accuracy Metric over Time

An aggregate forecast-accuracy metric is needed by top management for process review and financial reporting. This metric can serve as the basis for tracking process improvement over time. Similar to statistical process-control metrics, the forecast-accuracy metric will assess forecast improvement efforts and signal major shifts in the forecast environment and forecast-process effectiveness, both of which require positive forecast-management action.

Figure 3.3 illustrates the tracking of a forecast-error metric over time. An improvement process instituted in period 5 resulted in reduced errors in period 6.

Step 6. Target Items for Forecast Improvement

Forecasters may manage hundreds or thousands of items. How can they monitor all of the individual SKU forecasts to identify those most requiring improvement? Simply put, they can’t, but the weighting factors discussed in Step 4 reveal those items that have the largest impact on the aggregated forecast-accuracy metric (and the largest business effect). Table 3.3 illustrates how to identify the forecast with the biggest impact from the earlier example.

You can see that SKU 6 has the largest impact on the weighted APE tracking metric. Even though SKU 4 has the second-highest error rate of all of the SKUs, it has very little effect on the aggregated metric.

Figure 3.3 Illustration of a Tracking Signal

Step 7. Apply Best Forecasting Practices

Once you have identified those items where forecast improvement should be concentrated, you have numerous factors to guide you. Did you:

- Apply the principles of forecasting (Armstrong, 2001)?

- Try automatic forecasting methods and settings?

- Analyze the gains or losses from manual overrides?

- Identify product life-cycle patterns?

- Determine adjustments that should have been made (e.g., promotions)?

- Evaluate individual forecaster performance?

- Assess environmental changes (recession)?

As Robert Reig reported in his case study of forecast accuracy over time (2008), significant changes in the environment may radically affect forecast accuracy. Events like the current economic recession, the entry of new competition into the market space of a SKU, government intervention (e.g., the recent tomato salmonella scare), or transportation interruptions can all dramatically change the accuracy of your forecasts. While the change might not be the forecaster’s “fault,” tracking accuracy enables a rapid response to deteriorating performance.

Table 3.3 Targets for Forecast Improvement

Step 8. Repeat Steps 4 through 7 Each Period

All of the factors in Step 7 form a deliberative, continuous responsibility for the forecasting team. With the proper metrics in place, forecasters can be held accountable for the items under their purview. Steps 4–7 should be repeated each period, so that the aggregated forecast-accuracy metric is continually updated for management and new targets for improvement emerge.

Conclusions and Recommendations

Forecast accuracy has a major impact on business costs and profits. The forecasting process must be evaluated by individual and aggregated forecast-accuracy metrics. Tracking these metrics over time is critical to driving process improvement.

See if your company has included forecast accuracy as a key performance indicator for management. If it has not, create a plan to begin recording accuracy at the aggregated level, and sell the idea to management. Build a tracking database that saves the key attributes of the forecasting process. Doing so will permit forecasting autopsies, which drive improvement efforts and prioritization of forecaster workload. See if you have weighted the forecasts to include the relative business impact, and make sure you have a structured approach to improving the individual and aggregated forecast accuracy over time. The data gathered in a good tracking process should lead to any number of improved business outcomes.

REFERENCES

- Armstrong, J. S. (ed.) (2001). Principles of Forecasting. Boston: Kluwer Academic Publishers.

- Hoover, J. (2006). Measuring forecast accuracy: Omissions in today’s forecasting engines and demand planning software. Foresight: International Journal of Applied Forecasting 4, 32–35.

- Kolassa, S., and W. Schütz (2007). Advantages of the MAD/Mean ratio over the MAPE. Foresight: International Journal of Applied Forecasting 6, 40–43.

- McCarthy, T., D. Davis, L. Glolicic, and J. Mentzer (2006). The evolution of sales forecasting management: A 20-year longitudinal study of forecasting practices. Journal of Forecasting 25, 303–324.

- Rieg, R. (2008). Measuring improvement in forecast accuracy, a case study. Foresight: International Journal of Applied Forecasting 11, 15–20.

- Valentin, L. (2007). Use scaled errors instead of percentage errors in forecast evaluations. Foresight: International Journal of Applied Forecasting 7, 17–22.

3.3 A “Softer” Approach to the Measurement of Forecast Accuracy3

John Boylan

The Complement of Mean Absolute Percent Error

Recently, I was invited to talk on new developments in forecasting to a Supply-Chain Planning Forum of a manufacturing company with facilities across Europe. I had met the group supply-chain director previously, but not the senior members of his team. To get better acquainted, I arrived on the evening before the forum.

In informal discussion, it soon became clear that forecast-accuracy measurement was a hot topic for the company. Documentation was being written on the subject, and the managers thought my arrival was very timely. I made a mental note to add some more slides on accuracy measurement and asked if they had already prepared some draft documentation. They had, and this was duly provided for me just before I turned in for the night.

In the documents, there was a proposal to define forecast accuracy (FA) as the complement of mean absolute percentage error (MAPE):

where MAPE is found by working out the error of each forecast as a percentage of the actual value (ignoring the sign if the error is negative), and then calculating the overall mean. If the value of FA was negative, it would be forced to zero, to give a scale of 0 to 100.

What would your advice be?

Forecast Researchers and Practitioners: Different Needs and Perspectives

I know how some feel about this topic, as there’s been a recent discussion thread on forecast accuracy in the International Institute of Forecasters “Linked In” group. Keenan Wong, demand-planning analyst at Kraft Food, Toronto, wondered, “If 1 – Forecast Error gives me forecast accuracy, does 1 – MAPE give me mean absolute percent accuracy?” The question sparked a lively discussion, with over 20 comments at the time of this writing. I want to focus on just two, as they summarize the tensions in my own mind:

- Len Tashman responded: “Both forms of the ‘1 minus’ offer only very casual meanings of accuracy. Technically, they mean nothing—and 1 –MAPE is frequently misunderstood to mean the percentage of time the forecast is on the mark.”

- Alec Finney commented: “A very powerful way of defining forecast accuracy is to agree on a written definition with key stakeholders. Ultimately, it’s not about formulae, numerators, denominators, etc., but about an easy-to-understand, transparent indicator.”

Both of these comments contain significant truths, and yet they come from very different perspectives. Can these viewpoints possibly be reconciled? I believe that they can.

A good starting point is a comment by Hans Levenbach, also from the discussion group: “Accuracy needs to be defined in terms of the context of use, with practical meaning in mind for users.” I think it is instructive to look at the needs of two groups of users—forecasting researchers and forecasting practitioners—to see how they are similar and how they vary.

The first requirement for the forecasting researcher is that accuracy metrics should not be unduly influenced by either abnormally large or small observations (outliers). If they are so influenced, then research results do not generalize to other situations. Instead, the results would depend on the vagaries of outliers being present or absent from datasets. This is an example of where the needs of researchers and practitioners coincide. The practitioner may not need to generalize from one collection of time series to another, but does need to generalize from findings in the past to recommendations for the future.

A second requirement for the forecasting researcher is scale independence. After the first M-Competition, which compared a range of forecasting methods on 1,001 real-world time-series, it was found that the overall results according to some measures depended very heavily on less than 1% of the series, typically those with the highest volumes. From a researcher’s perspective, this is a real issue: Again, the results may not generalize from one collection of time series to another. Researchers typically get around this problem by dividing errors by actual values (or means of actual values). Thus, an error of 10% for a very low-volume item receives the same weight as an error of 10% for a very high-volume item.

This is a good example of where the needs of researchers and practitioners may not coincide. The practitioner is likely to say that the forecast error of a high-value, high-volume item should not receive the same weight as the forecast error of a low-value, low-volume item. (Exceptions arise when the forecast accuracy of a low-value item is important because its availability allows the sale of a related high-value item.) Consideration of value-related importance of forecast accuracy has led some practitioners to seek alternative measures, such as weighted MAPEs.

This discussion leads me to two conclusions:

- When designing forecast-accuracy measures for practical application, we should not ignore the insights that have been gained by forecasting researchers.

- Nevertheless, the requirements of forecasting researchers and practitioners are not identical. We must begin with the needs of the practitioner when choosing an error measure for a particular practical application.

The Soft Systems Approach

An insightful way of looking at forecasting-systems design is through the lens of Soft Systems Methodology (SSM), an approach developed principally by Peter Checkland. It is well known in the UK operational research community, but less so in other countries. A good introduction can be found in the book Learning for Action (Checkland and Poulter, 2006).

A summary of the SSM approach, in the context of forecasting systems, is shown in Figure 3.4.

Figure 3.4 Soft Systems Methodology Applied to Forecasting

Relevant Systems and Root Definitions

SSM starts by asking a group of managers, “What relevant systems do you wish to investigate?” This simple question is worth pondering. I was involved in a study a decade ago (Boylan and Williams, 2001) in which the managers concluded there were three systems of interest: (i) HR Planning System; (ii) Marketing Planning System; and (iii) Financial Planning System. It then became clear to the managers that all three systems need the support of a fourth system, namely a Forecasting System.

SSM requires managers to debate the intended purpose of systems and to describe the relevant systems in a succinct root definition. The managers agreed that the root definition for HR Planning would be:

A system, owned by the Board, and operated out of Corporate Services, which delivers information about production and productivity to team leaders, so that new employees can be started at the right time to absorb forecasted extra business.

Root definitions may appear bland, rather like mission statements. However, the main benefit is not the end product but the process by which managers debate what a system is for, how it should be informed by forecasts, and then come to an agreement (or at least some accommodation) on the system and its purpose. In the HR planning example, the implication of the root definition is that planning should be informed by forecasts of extra business, production, and productivity. The root definition was a product of its time, when demand was buoyant, but could be easily adapted to take into account more difficult market conditions, when decisions need to be made about not replacing departing employees or seeking redundancies.

Effectiveness Measures and Accuracy Measures

The root definition offers a guide not only to the required forecasts, but also to the purpose of the forecasts. For HR planning, the purpose was “so that new employees can be started at the right time to absorb forecasted extra business.” In Soft Systems Methodology, this statement of purpose helps to specify the metrics by which the system should be measured, in three main categories:

- System effectiveness measures whether the system is giving the desired effect. In the example, the question is whether the HR planning system enables extra business to be absorbed. Appropriate effectiveness metrics would reflect the managers’ priorities, including measures like “business turned away,” “delays in completing business orders,” and “cost of employees hired.” These measures are influenced by forecast accuracy and have been described as “accuracy implication metrics” (Boylan and Syntetos, 2006).

- System efficiency measures the cost of resources to make the system work (strictly, the ratio of outputs to inputs). This has received relatively little attention in the forecasting literature but is an important issue for practitioners. Robert Fildes and colleagues (2009) found that small adjustments had a negligible effect on the accuracy of computer-system-generated forecasts. The proportion of employee time spent on such small adjustments would offer a useful measure of efficiency (or inefficiency!).

- System efficacy measures whether the system works at an operational level. In our HR example, efficacy measures would include the timeliness and accuracy of forecasts. The accuracy metrics should be chosen so that they have a direct bearing on the system-performance measures. The exact relationship between forecast accuracy measures and effectiveness measures often cannot be expressed using a simple formula. However, computer-based simulations can help us understand how different error metrics can influence measures of system effectiveness.

It is sometimes asked why measures of forecast accuracy are needed if we have measures of system effectiveness. After all, it’s the business impact of forecasts that is most important to the practitioner. While this is true, forecast accuracy is vital for diagnosis of system problems. Suppose we find that additional staff is being taken on, but not quickly enough to absorb the new business. Then we can turn to measures such as the mean error (which measures forecast bias) to see if the forecasts are consistently too low, and whether another forecast method would be able to detect and predict the trend more accurately.

In a supply chain context, the first type of monitor often relates to stock-holding or service-level measures. These may be expressed in terms of total system cost or service-level measures such as fill rates, reflecting the priorities of the company. When system performance begins to deteriorate in terms of these metrics, then diagnosis is necessary. If the reason for poorer system performance relates to forecasting, rather than ordering policy, then we need to examine forecast accuracy. Suppose that stock levels appear to be too low, with too many stock-outs, and that the system is based on order-up-to levels set at the 95% quantile of demand, calculated from forecasts of the mean and standard deviation of demand. A diagnostic check of forecast accuracy relating to these quantities may reveal why the quantile estimates are too low, and remedial action can be taken.

Using a Structured Approach in Practice

I should stress that Soft Systems Methodology is just one structured approach that can be used by managers to think through their needs and to specify forecast-accuracy measures accordingly. Others are available, too. The main benefit of a participative, structured approach is to encourage managers to gain greater understanding of effectiveness measures and forecast-accuracy measures that are most appropriate for their organization.

Let’s return here to our quotations from Len Tashman and Alec Finney. They both have understanding at the heart of their comments. Len is concerned about managers’ misunderstanding of metrics, and Alec wants to promote easy-to-understand indicators. From my experience, the greater the participation by managers in the high-level designs of systems, the better their understanding.

Soft Systems Methodology is quite demanding and challenging of the managers who participate in the process. They must agree on the relevant systems, hammer out written root definitions, and specify measures of system effectiveness. This requires open debate, which may or may not be facilitated by an independent party familiar with SSM. The stage of debating metrics of forecast accuracy poses an additional challenge: understanding how accuracy metrics have a bearing on effectiveness. If the managers are already savvy in such matters, they will be ready to face this additional challenge. If not, it may be beneficial to use a facilitator who is an expert in forecasting methods and error metrics. The facilitator should desist from playing a dominant role, but be well placed to challenge the specification of measures that would be unduly affected by outliers, suffer from “division by zero” problems, or have other technical shortcomings.

This approach allows for genuine growth in understanding and ownership of measures that have been agreed on by managers, as suggested by Alec. The involvement of an expert facilitator will avoid the sort of problems highlighted by Len.

Postscript: Advice on the Complement of MAPE

Returning to the incident prompting these reflections, I thought long and hard about how to advise a company intending to use the “Complement of MAPE” as its error measure. There was insufficient time to go back to first principles, and to ask them to specify the relevant systems, root definitions, and measures of effectiveness. It would be inappropriate for me, as a visiting speaker not acting in a full consulting capacity, to propose a set of alternative measures, especially without the necessary background of the systems supported by the company’s forecasts. Still, I felt that I should not let the proposed measure go unchallenged.

In my talk, I gave examples where the forecast error was so large as to be greater than the actual value itself. I asked if this was realistic for some stock-keeping units in the company and was assured that it was. I then pointed out that using their definition would result in a forecast accuracy of zero, whether the error was just greater than the actual value or far exceeded it. This gave the group pause, and they are currently reviewing their metrics.

My recommendation for this company—indeed, for any company—is not to adopt standard recommendations such as “use Mean Absolute Percentage Error.” Rather, by working backwards from first principles, involving the key stakeholders in the process, it should be possible to agree on system-effectiveness measures that are relevant to the company and, in turn, to forecast-error measures that have a direct bearing on system effectiveness.

REFERENCES

- Boylan J. E., and A. A. Syntetos (2006). Accuracy and accuracy-implication metrics for intermittent demand. Foresight 4 (Summer), 39–42.

- Boylan, J. E., and Williams, M. A. (2001). Introducing forecasting and monitoring systems to an SME: The role of Soft Systems Methodology. In M. G. Nicholls, S. Clarke, and B. Lehaney (Eds.), Mixed-Mode Modelling: Mixing Methodologies for Organizational Intervention. Dordrecht: Kluwer Academic Publishers.

- Checkland, P., and J. Poulter (2006). Learning for Action: A Short Definitive Account of Soft Systems Methodology and Its Use for Practitioners, Teachers and Students. Hoboken, NJ: John Wiley & Sons.

- Fildes, R. A., P. Goodwin, M. Lawrence, and K. Nikolopoulos (2009). Effective forecasting and judgmental adjustments: An empirical evaluation and strategies for improvement in supply-chain planning. International Journal of Forecasting 25, 3–23.

- Hawitt, D. (2010). Should you report forecast error or forecast accuracy? Foresight 18 (Summer), 46.

- Hoover, J., and M. Little (2011). Two commentaries on forecast error vs. forecast accuracy. Foresight 18 (Spring), 45–46.

3.4 Measuring Forecast Accuracy4

Rob Hyndman

Everyone wants to know how accurate their forecasts are. Does your forecasting method give good forecasts? Are they better than the competitor methods?

There are many ways of measuring the accuracy of forecasts, and the answers to these questions depends on what is being forecast, what accuracy measure is used, and what data set is used for computing the accuracy measure. In this article, I will summarize the most important and useful approaches.

Training and Test Sets

It is important to evaluate forecast accuracy using genuine forecasts. That is, it is invalid to look at how well a model fits the historical data; the accuracy of forecasts can only be determined by considering how well a model performs on new data that were not used when estimating the model. When choosing models, it is common to use a portion of the available data for testing, and use the rest of the data for estimating (or “training”) the model. Then the testing data can be used to measure how well the model is likely to forecast on new data.

Figure 3.5 A time series is often divided into training data (used to estimate the model) and test data (used to evaluate the forecasts).

The size of the test data set is typically about 20% of the total sample, although this value depends on how long the sample is and how far ahead you want to forecast. The size of the test set should ideally be at least as large as the maximum forecast horizon required.

The following points should be noted:

- A model that fits the data well does not necessarily forecast well.

- A perfect fit can always be obtained by using a model with enough parameters.

- Overfitting a model to data is as bad as failing to identify the systematic pattern in the data.

Some references describe the test data as the “hold-out set” because these data are “held out” of the data used for fitting. Other references call the training data the “in-sample data” and the test data the “out-of-sample data.”

Forecast Accuracy Measures

Suppose our data set is denoted by y1 , . . . , yT, and we split it into two sections: the training data (y1 , . . . , yN) and the test data (yN+1 , . . . , yT ). To check the accuracy of our forecasting method, we will estimate the parameters using the training data, and forecast the next T − N observations. These forecasts can then be compared to the test data.

The h-step-ahead forecast can be written as N+h|N. The “hat” notation indicates that it is an estimate rather than an observed value, and the subscript indicates that we are estimating N+h using all the data observed up to and including time N.

The forecast errors are the difference between the actual values in the test set and the forecasts produced using only the data in the training set. Thus

These errors are on the same scale as the data. For example, if yt is sales volume in kilograms, then et is also in kilograms. Accuracy measures that are based directly on et are therefore scale-dependent and cannot be used to make comparisons between series that are on different scales. The two most commonly used scale-dependent measures are based on the absolute errors or squared errors:

When comparing forecast methods on a single data set, the MAE is popular as it is easy to understand and compute. The percentage error is given by pt = 100et/yt. Percentage errors have the advantage of being scale-independent, and so are frequently used to compare forecast performance between different data sets. The most commonly used measure is:

Measures based on percentage errors have the disadvantage of being infinite or undefined if yt = 0 for any observation in the test set, and having extreme values when any yt is close to zero. Another problem with percentage errors that is often overlooked is that they assume a scale based on quantity. If yt is measured in dollars, or kilograms, or some other quantity, percentages make sense. On the other hand, a percentage error makes no sense when measuring the accuracy of temperature forecasts on the Fahrenheit or Celsius scales, because these are not measuring a quantity. One way to think about it is that percentage errors only make sense if changing the scale does not change the percentage. Changing yt from kilograms to pounds will give the same percentages, but changing yt from Fahrenheit to Celsius will give different percentages. Scaled errors were proposed by Hyndman and Koehler (2006) as an alternative to using percentage errors when comparing forecast accuracy across series on different scales. A scaled error is given by qt = et/Q where Q is a scaling statistic computed on the training data. For a nonseasonal time series, a useful way to define the scaling statistic is the mean absolute difference between consecutive observations:

That is, Q is the MAE for naïve forecasts computed on the training data. Because the numerator and denominator both involve values on the scale of the original data, qt is independent of the scale of the data. A scaled error is less than one if it arises from a better forecast than the average naïve forecast computed on the training data. Conversely, it is greater than one if the forecast is worse than the average naïve forecast computed on the training data. For seasonal time series, a scaling statistic can be defined using seasonal naïve forecasts:

The mean absolute scaled error is simply

The value of Q is calculated using the training data because it is important to get a stable measure of the scale of the data. The training set is usually much larger than the test set, and so allows a better estimate of Q. Figure 3.6 shows forecasts for quarterly Australian beer production (data source: Australian Bureau of Statistics, Cat. No. 8301.0.55.001). An ARIMA model was estimated on the training data (data from 1992 to 2006), and forecasts for the next 14 quarters were produced. The actual values for the period 2007–2010 are also shown. The forecast accuracy measures are computed in Table 3.4. The scaling constant for the MASE statistic was Q = 14.55 (based on the training data 1992–2006). Figure 3.6 Forecasts of Australian quarterly beer production using an ARIMA model applied to data up to the end of 2006. The thin line shows actual values (in the training and test data sets) while the thick line shows the forecasts. Table 3.4 Accuracy Measures Computed from ARIMA Forecasts for the 14 Observations in the Test DataScale-Dependent Errors

![]()

Percentage Errors

![]()

Scaled Errors

![]()

Example: Australian Quarterly Beer Production

Actual

Forecast

Error

Percent Error

2007 Ql

427

423.69

3.31

0.78

2007 Q2

383

386.88

−3.88

−1.01

2007 Q3

394

404.71

−10.71

−2.72

2007 Q4

473

483.59

−10.59

−2.24

200S Ql

420

423.81

−3.81

−0.91

200S Q2

390

385.42

4.58

1.17

200S Q3

410

403.25

6.75

1.65

2008 Q4

488

482.13

5.87

1.20

2009 Ql

415

422.35

−7.35

−1.77

2009 Q2

398

383.96

14.04

3.53

2009 Q3

419

401.79

17.21

4.11

2009 Q4

488

480.67

7.33

1.50

2010 Ql

414

420.89

−6.89

−1.66

2010 Q2

374

382.50

−8.50

−2.27

MAE

7.92

RMSE

8.82

MAPE

1.89%

MASE

0.54

Time-Series Cross-Validation

For short time series, we do not want to limit the available data by splitting some off in a test set. Also, if the test set is small, the conclusions we draw from the forecast accuracy measures may not be very reliable. One solution to these problems is to use time-series cross-validation.

In this approach, we use many different training sets, each one containing one more observation than the previous one. Figure 3.7 shows the series of training sets (in black) and test sets (in gray). The forecast accuracy measures are calculated on each test set and the results are averaged across all test sets (adjusting for their different sizes).

A variation on this approach focuses on a single forecast horizon for each test set. Figure 3.8 shows a series of test sets containing only one observation in each case. Then the calculation of accuracy measures is for one-step forecasts, rather than averaging across several forecast horizons.

Figure 3.7 In time series cross-validation, a series of training and test sets are used. Each training set (black) contains one more observation than the previous one, and consequently each test set (gray) has one fewer observations than the previous one.

Figure 3.8 Time-series cross-validation based on one-step forecasts. The black points are training sets, the gray points are test sets, and the light-gray points are ignored.

In any of these cross-validation approaches, we need a minimum size for the training set because it is often not possible to do any meaningful forecasting if there is not enough data in the training set to estimate our chosen model. The minimum size of the training set depends on the complexity of the model we want to use.

Suppose k observations are required to produce a reliable forecast. Then the process works as follows:

- Select the observation at time k + i for the test set, and use the observations at times 1, 2, . . . , k + i − 1 to estimate the forecasting model. Compute the error on the forecast for time k + i.

- Repeat the above step for i = 1, 2, . . . , T − k where T is the total number of observations.

- Compute the forecast accuracy measures based on the errors obtained.

This procedure is sometimes known as evaluation on a “rolling forecasting origin” because the “origin” (k + i − 1) at which the forecast is based rolls forward in time.

With time-series forecasting, one-step forecasts may not be as relevant as multistep forecasts. In this case, the cross-validation procedure based on a rolling forecasting origin can be modified to allow multistep errors to be used. Suppose we are interested in models that produce good h-step-ahead forecasts: