11

Bayesian Stochastic Blockmodeling

Tiago P. Peixoto

Department of Mathematical Sciences and Centre for Networks and Collective Behaviour, University of Bath, United Kingdom, and ISI Foundation, Turin, Italy

This chapter provides a self-contained introduction to the use of Bayesian inference to extract large-scale modular structures from network data, based on the stochastic blockmodel (SBM), as well as its degree-corrected and overlapping generalizations. We focus on nonparametric formulations that allow their inference in a manner that prevents overfitting and enables model selection. We discuss aspects of the choice of priors, in particular how to avoid underfitting via increased Bayesian hierarchies, and we contrast the task of sampling network partitions from the posterior distribution with finding the single point estimate that maximizes it, while describing efficient algorithms to perform either one. We also show how inferring the SBM can be used to predict missing and spurious links, and shed light on the fundamental limitations of the detectability of modular structures in networks.

11.1 Introduction

Over the past decade and a half there has been an ever-increasing demand to analyze network data, in particular those stemming from social, biological, and technological systems. Often these systems are very large, comprising millions or even billions of nodes and edges, such as the World Wide Web, and the global-level social interactions among humans. A particular challenge that arises is how to describe the large-scale structures of these systems in a way that abstracts away from low-level details, allowing us to focus instead on “the big picture.” Differently from systems that are naturally embedded in some low-dimensional space – such as the population density of cities or the physiology of organisms – we are unable just to “look” at a network and readily extract its most salient features. This has prompted much of activity in developing algorithmic approaches to extract such global information in a well-defined manner, many of which are described in the remaining chapters of this book. Most of them operate on a rather simple ansatz, where we try to divide the network into “building blocks,” which then can be described at an aggregate level in a simplified manner. The majority of such methods go under the name “community detection,” “network clustering” or “blockmodeling.” In this chapter we consider the situation where the ultimate objective when analyzing network data in this way is to model it, i.e. we want to make statements about possible generative mechanisms that are responsible for the network formation. This overall aim sets us in a well-defined path, where we get to formulate probabilistic models for network structure, and use principled and robust methods of statistical inference to fit our models to data. Central to this approach is the ability to distinguish structure from randomness, so that we do not fool ourselves into believing that there are elaborate structures in our data which are in fact just the outcome of stochastic fluctuations, which tends to be the Achilles' heel of alternative nonstatistical approaches. In addition to providing a description of the data, the models we infer can also be used to generalize from observations, and make statements about what has not yet been observed, yielding something more tangible than mere interpretations. In what follows we will give an introduction to this inference approach, which includes recent developments that allow us to perform it in a consistent, versatile and efficient manner.

11.2 Structure Versus Randomness in Networks

If we observe a random string of characters we will eventually encounter every possible substring, provided the string is long enough. This leads to the famous thought experiment of a large number of monkeys with typewriters: assuming that they type randomly, for a sufficiently large number of monkeys any output can be observed, including, for example, the very text you are reading. Therefore, if we are ever faced with this situation, we should not be surprised if a such a text is in fact produced and, most importantly, we should not offer its simian author a place in a university department, as this occurrence is unlikely to be repeated. However, this example is of little practical relevance, as the number of monkeys necessary to type the text “blockmodeling” by chance is already of the order of ![]() , and there are simply not that many monkeys.

, and there are simply not that many monkeys.

Networks, however, are different from random strings. The network analogue of a random string is an Erdős-Rényi random graph [22] where each possible edge can occur with the same probability. But differently from a random string, a random graph can contain a wealth of structure before it becomes astronomically large, particularly if we search for it. An example of this is shown in Figure 11.1 for a modest network of 5000 nodes, where its adjacency matrix is visualized using three different node orderings. Two of the orderings seem to reveal patterns of large-scale connections that are tantalizingly clear, and indeed would be eagerly captured by many network clustering methods [39]. In particular, they seem to show groupings of nodes that have distinct probabilities of connections to each other, in direct contradiction to the actual process that generated the network, where all connections had the same probability of occurring. What makes matters even worse is that Figure 11.1 shows only a very small subset of all orderings that have similar patterns, but are otherwise very distinct from each other. Naturally, in the same way we should not confuse a monkey with a proper scientist in our previous example, we should not use any of these node groupings to explain why the network has its structure. Doing so should be considering overfitting it, i.e. mistaking random fluctuations for generative structure, yielding an overly complicated and ultimately wrong explanation for the data.

Figure 11.1 The three panels show the same adjacency matrix, with the only difference between them being the ordering of the nodes. The different orderings show seemingly clear, albeit very distinct, patterns of modular structure. However, the adjacency matrix in question corresponds to an instance of a fully random Erdős–Rényi model, where each edge has the same probability  of occurring, with

of occurring, with  . Although the patterns seen in the second and third panels are not mere fabrications – as they are really there in the network – they are also not meaningful descriptions of this network, since they arise purely out of random fluctuations. Therefore, the node groups that are identified via these patterns bear no relation to the generative process that produced the data. In other words, the second and third panels correspond each to an overfit of the data, where stochastic fluctuations are misrepresented as underlying structure. This pitfall can lead to misleading interpretations of results from clustering methods that do not account for statistical significance.

. Although the patterns seen in the second and third panels are not mere fabrications – as they are really there in the network – they are also not meaningful descriptions of this network, since they arise purely out of random fluctuations. Therefore, the node groups that are identified via these patterns bear no relation to the generative process that produced the data. In other words, the second and third panels correspond each to an overfit of the data, where stochastic fluctuations are misrepresented as underlying structure. This pitfall can lead to misleading interpretations of results from clustering methods that do not account for statistical significance.

The remedy to this problem is to think probabilistically. We need to ascribe to each possible explanation of the data a probability that it is correct, which takes into account modeling assumptions, the statistical evidence available in the data, as well any source of prior information we may have. Imbued in the whole procedure must be the principle of parsimony – or Occam's razor – where a simpler model is preferred if the evidence is not sufficient to justify a more complicated one.

In order to follow this path, before we look at any network data, we must first look in the “forward” direction, and decide which mechanisms generate networks in the first place. Based on this, we will finally be able to look “backwards,” and tell which particular mechanism generated a given observed network.

11.3 The Stochastic Blockmodel

As mentioned in the introduction, we wish to decompose networks into “building blocks” by grouping together nodes that have a similar role in the network. From a generative point of view, we wish to work with models that are based on a partition of ![]() nodes into

nodes into ![]() such building blocks, given by the vector

such building blocks, given by the vector ![]() with entries

with entries

specifying the group membership of node ![]() . We wish to construct a generative model that takes this division of the nodes as parameters and generates networks with a probability

. We wish to construct a generative model that takes this division of the nodes as parameters and generates networks with a probability

where ![]() is the adjacency matrix. But what shape should

is the adjacency matrix. But what shape should ![]() have? If we wish to impose that nodes that belong to the same group are statistically indistinguishable, our ensemble of networks should be fully characterized by the number of edges that connects nodes of two groups

have? If we wish to impose that nodes that belong to the same group are statistically indistinguishable, our ensemble of networks should be fully characterized by the number of edges that connects nodes of two groups ![]() and

and ![]() ,

,

or twice that number if ![]() . If we take these as conserved quantities, the ensemble that reflects our maximal indifference towards any other aspect is the one that maximizes the entropy [48]

. If we take these as conserved quantities, the ensemble that reflects our maximal indifference towards any other aspect is the one that maximizes the entropy [48]

subject to the constraint of Equation (11.1). If we relax somewhat our requirements, such that Equation (11.1) is obeyed only for expectations then we can obtain our model using the method of Lagrange multipliers, using the Lagrangian function

where ![]() are constants independent of

are constants independent of ![]() , and

, and ![]() and

and ![]() are multipliers that enforce our desired constraints and normalization, respectively. Obtaining the saddle point

are multipliers that enforce our desired constraints and normalization, respectively. Obtaining the saddle point ![]() ,

, ![]() and

and ![]() gives us the maximum entropy ensemble with the desired properties. If we constrain ourselves to simple graphs, i.e.

gives us the maximum entropy ensemble with the desired properties. If we constrain ourselves to simple graphs, i.e. ![]() , without self-loops, we have as our maximum entropy model

, without self-loops, we have as our maximum entropy model

with ![]() being the probability of an edge existing between any two nodes belonging to groups

being the probability of an edge existing between any two nodes belonging to groups ![]() and

and ![]() . This model is called the stochastic blockmodel (SBM), and has its roots in the social sciences and statistics [44,72,100,105], but has appeared repeatedly in the literature under a variety of different names [8–10,12,17,102]. By selecting the probabilities

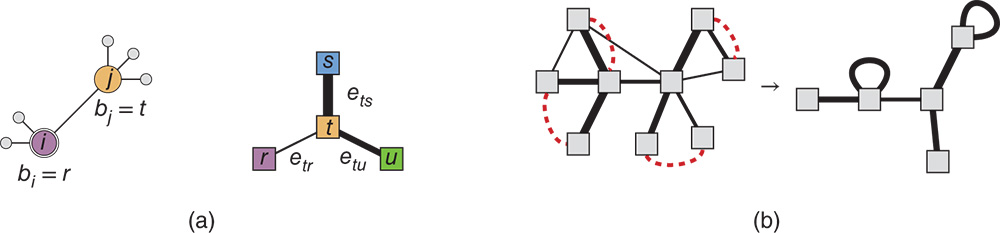

. This model is called the stochastic blockmodel (SBM), and has its roots in the social sciences and statistics [44,72,100,105], but has appeared repeatedly in the literature under a variety of different names [8–10,12,17,102]. By selecting the probabilities ![]() appropriately, we can achieve arbitrary mixing patterns between the groups of nodes, as illustrated in Figure 11.2. We stress that while the SBM can perfectly accommodate the usual “community structure” pattern [25], i.e. when the diagonal entries of

appropriately, we can achieve arbitrary mixing patterns between the groups of nodes, as illustrated in Figure 11.2. We stress that while the SBM can perfectly accommodate the usual “community structure” pattern [25], i.e. when the diagonal entries of ![]() are dominant, it can equally well describe a large variety of other patterns, such as bipartiteness, core-periphery, and many others.

are dominant, it can equally well describe a large variety of other patterns, such as bipartiteness, core-periphery, and many others.

Figure 11.2 SBM: (a) the matrix of probabilities between groups  defines the large-scale structure of generated networks and (b) a sampled network corresponding to (a), where the node colors indicate the group membership.

defines the large-scale structure of generated networks and (b) a sampled network corresponding to (a), where the node colors indicate the group membership.

Instead of simple graphs, we may consider multigraphs by allowing multiple edges between nodes, i.e. ![]() . Repeating the same procedure, we obtain in this case

. Repeating the same procedure, we obtain in this case

with ![]() being the average number of edges existing between any two nodes belonging to group

being the average number of edges existing between any two nodes belonging to group ![]() and

and ![]() . Whereas the placement of edges in Equation (11.4) is given by a Bernoulli distribution, in Equation (11.5) it is given by a geometric distribution, reflecting the different nature of both kinds of networks. Although these models are not the same, there is in fact little difference between the networks they generate in the sparse limit given by

. Whereas the placement of edges in Equation (11.4) is given by a Bernoulli distribution, in Equation (11.5) it is given by a geometric distribution, reflecting the different nature of both kinds of networks. Although these models are not the same, there is in fact little difference between the networks they generate in the sparse limit given by ![]() with

with ![]() . We see this by noticing how their log-probabilities become asymptotically identical in this limit, i.e.

. We see this by noticing how their log-probabilities become asymptotically identical in this limit, i.e.

Therefore, since most networks that we are likely to encounter are sparse [66], it does not matter which model we use, and we may prefer whatever is more convenient for our calculations. With this in mind, we may consider yet another variant, which uses instead a Poisson distribution to sample edges [50],

where now we also allow for self-loops. Like the geometric model, the Poisson model generates multigraphs, and it is easy to verify that it also leads to Equation (11.7) in the sparse limit. This model is easier to use in some of the calculations that we are going to make, in particular when we consider important extensions of the SBM, therefore we will focus on it.1

The model above generates undirected networks. It can be very easily modified to generate directed networks instead, by making ![]() an asymmetric matrix, and adjusting the model likelihood accordingly. The same is true for all model variations that are going to be used in the following sections. However, for the sake of conciseness we will focus only on the undirected case. We point out that the corresponding expressions for the directed case are readily available in the literature (e.g. [78,84,85]).

an asymmetric matrix, and adjusting the model likelihood accordingly. The same is true for all model variations that are going to be used in the following sections. However, for the sake of conciseness we will focus only on the undirected case. We point out that the corresponding expressions for the directed case are readily available in the literature (e.g. [78,84,85]).

Now that we have defined how networks with prescribed modular structure are generated, we need to develop the reverse procedure, i.e. how to infer the modular structure from data.

11.4 Bayesian Inference: The Posterior Probability of Partitions

Instead of generating networks, our nominal task is to determine which partition ![]() generated an observed network

generated an observed network ![]() , assuming this was done via the SBM. In other words, we want to obtain the probability

, assuming this was done via the SBM. In other words, we want to obtain the probability ![]() that a node partition

that a node partition ![]() was responsible for a network

was responsible for a network ![]() . By evoking elementary properties of conditional probabilities, we can write this probability as

. By evoking elementary properties of conditional probabilities, we can write this probability as

with

being the marginal likelihood integrated over the remaining model parameters, and

which is called the evidence, i.e. the total probability of the data under the model, which serves as a normalization constant in Equation (11.9). Equation (11.9) is known as Bayes' rule, and far from being only a simple mathematical step, it encodes how our prior beliefs about the model, i.e. before we observe any data – in the above represented by the prior distributions ![]() and

and ![]() – are affected by the observation, yielding the so-called posterior distribution

– are affected by the observation, yielding the so-called posterior distribution ![]() . The overall approach outlined above has been proposed to the problem of network inference by several authors [5,16,37,41,43,51,64,65,71,79,84,85,89,93,95,109], with different implementations that vary in some superficial details in the model specification, approximations used, and in particular in the choice of priors. Here we will not review or compare all approaches in detail, but rather focus on the most important aspects, while choosing a particular path that makes exact calculations possible.

. The overall approach outlined above has been proposed to the problem of network inference by several authors [5,16,37,41,43,51,64,65,71,79,84,85,89,93,95,109], with different implementations that vary in some superficial details in the model specification, approximations used, and in particular in the choice of priors. Here we will not review or compare all approaches in detail, but rather focus on the most important aspects, while choosing a particular path that makes exact calculations possible.

The prior probabilities are a crucial element of the inference procedure, as they will affect the shape of the posterior distribution and, ultimately, our inference results. In more traditional scenarios, the choice of priors would be guided by previous observations of data that are believed to come from the same model. However, this is not an applicable scenario when considering networks, which are typically singletons, i.e. they are unique objects, instead of coming from a population (e.g. there is only one internet, one network of trade between countries, etc).2 In the absence of such empirical prior information, we should try as much as possible to be guided by well-defined principles and reasonable assumptions about our data, rather than ad hoc choices. A central proposition we will be using is the principle of maximum indifference about the model before we observe any data. This will lead us to so-called uninformative priors,3 which are maximum entropy distributions that ascribe the same probability to each possible parameter combination [48]. These priors have the property that they do not bias the posterior distribution in any particular way, and thus let the data “speak for itself.” But as we will see in the following, the naive application of this principle will lead to adverse effects in many cases, and upon closer inspection we will often be able to identify aspects of the model that we should not be agnostic about. Instead, a more meaningful approach will be to describe higher-order aspects of the model with their own models. This can be done in a manner that preserves the unbiased nature of our results, while being able to provide a more faithful representation of the data.

We begin by choosing the prior for the partition, ![]() . The most direct uninformative prior is the “flat” distribution where all partitions into at most

. The most direct uninformative prior is the “flat” distribution where all partitions into at most ![]() groups are equally likely, namely

groups are equally likely, namely

where ![]() are the ordered Bell numbers [99], given by

are the ordered Bell numbers [99], given by

where ![]() are the Stirling numbers of the second kind [98], which count the number of ways to partition a set of size

are the Stirling numbers of the second kind [98], which count the number of ways to partition a set of size ![]() into

into ![]() indistinguishable and nonempty groups (the

indistinguishable and nonempty groups (the ![]() in the above equation recovers the distinguishability of the groups, which we require). However, upon closer inspection we often find that such flat distributions are not a good choice. In this particular case, since there are many more partitions into

in the above equation recovers the distinguishability of the groups, which we require). However, upon closer inspection we often find that such flat distributions are not a good choice. In this particular case, since there are many more partitions into ![]() groups than there are into

groups than there are into ![]() groups (if

groups (if ![]() is sufficiently smaller than

is sufficiently smaller than ![]() ), Equation (11.12) will typically prefer partitions with a number of groups that is comparable to the number of nodes. Therefore, this uniform assumption seems to betray the principle of parsimony that we stated in the introduction, since it favors large models with many groups, before we even observe the data.4 Instead, we may wish to be agnostic about the number of groups itself, by first sampling it from its own uninformative distribution

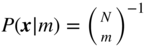

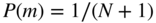

), Equation (11.12) will typically prefer partitions with a number of groups that is comparable to the number of nodes. Therefore, this uniform assumption seems to betray the principle of parsimony that we stated in the introduction, since it favors large models with many groups, before we even observe the data.4 Instead, we may wish to be agnostic about the number of groups itself, by first sampling it from its own uninformative distribution ![]() , and then sampling the partition conditioned on it

, and then sampling the partition conditioned on it

since ![]() is the number of ways to partition

is the number of ways to partition ![]() nodes into

nodes into ![]() labelled groups.5 Since

labelled groups.5 Since ![]() is a parameter of our model, the number of groups

is a parameter of our model, the number of groups ![]() is a called a hyperparameter, and its distribution

is a called a hyperparameter, and its distribution ![]() is called a hyperprior. But once more, upon closer inspection we can identify further problems: If we sample from Equation (11.14), most partitions of the nodes will occupy all the groups approximately equally, i.e. all group sizes will be the approximately the same. Is this something we want to assume before observing any data? Instead, we may wish to be agnostic about this aspect as well, and choose to sample first the distribution of group sizes

is called a hyperprior. But once more, upon closer inspection we can identify further problems: If we sample from Equation (11.14), most partitions of the nodes will occupy all the groups approximately equally, i.e. all group sizes will be the approximately the same. Is this something we want to assume before observing any data? Instead, we may wish to be agnostic about this aspect as well, and choose to sample first the distribution of group sizes ![]() , where

, where ![]() is the number of nodes in group

is the number of nodes in group ![]() , forbidding empty groups,

, forbidding empty groups,

since ![]() is the number of ways to divide

is the number of ways to divide ![]() nonzero counts into

nonzero counts into ![]() nonempty bins. Given these randomly sampled sizes as a constraint, we sample the partition with a uniform probability

nonempty bins. Given these randomly sampled sizes as a constraint, we sample the partition with a uniform probability

This gives us finally

At this point the reader may wonder if there is any particular reason to stop here. Certainly we can find some higher-order aspect of the group sizes ![]() that we may wish to be agnostic about, and introduce a hyperhyperprior, and so on, indefinitely. The reason why we should not keep recursively being more and more agnostic about higher-order aspects of our model is that it brings increasingly diminishing returns. In this particular case, if we assume that the individual group sizes are sufficiently large, we obtain asymptotically

that we may wish to be agnostic about, and introduce a hyperhyperprior, and so on, indefinitely. The reason why we should not keep recursively being more and more agnostic about higher-order aspects of our model is that it brings increasingly diminishing returns. In this particular case, if we assume that the individual group sizes are sufficiently large, we obtain asymptotically

where ![]() is the entropy of the group size distribution. The value

is the entropy of the group size distribution. The value ![]() is an information-theoretical limit that cannot be surpassed, regardless of how we choose

is an information-theoretical limit that cannot be surpassed, regardless of how we choose ![]() . Therefore, the most we can optimize by being more refined is a marginal factor

. Therefore, the most we can optimize by being more refined is a marginal factor ![]() in the log-probability, which would amount to little practical difference in most cases.

in the log-probability, which would amount to little practical difference in most cases.

In the above, we went from a purely flat uninformative prior distribution for ![]() to a Bayesian hierarchy with three levels, where we sample first the number of groups, the groups sizes, and then finally the partition. In each of the levels we used maximum entropy distributions that are constrained by parameters that are themselves sampled from their own distributions at a higher level. In doing so, we removed some intrinsic assumptions about the model (in this case, number and sizes of groups), thereby postponing any decision on them until we observe the data. This will be a general strategy we will use for the remaining model parameters.

to a Bayesian hierarchy with three levels, where we sample first the number of groups, the groups sizes, and then finally the partition. In each of the levels we used maximum entropy distributions that are constrained by parameters that are themselves sampled from their own distributions at a higher level. In doing so, we removed some intrinsic assumptions about the model (in this case, number and sizes of groups), thereby postponing any decision on them until we observe the data. This will be a general strategy we will use for the remaining model parameters.

Having dealt with ![]() , this leaves us with the prior for the group to group connections,

, this leaves us with the prior for the group to group connections, ![]() . A good starting point is an uninformative prior conditioned on a global average,

. A good starting point is an uninformative prior conditioned on a global average, ![]() , which will determine the expected density of the network. For a continuous variable

, which will determine the expected density of the network. For a continuous variable ![]() , the maximum entropy distribution with a constrained average

, the maximum entropy distribution with a constrained average ![]() is the exponential,

is the exponential, ![]() . Therefore, for

. Therefore, for ![]() we have

we have

with ![]() determining the expected total number of edges,6 where we have assumed the local average

determining the expected total number of edges,6 where we have assumed the local average ![]() , such that that the expected number of edges

, such that that the expected number of edges ![]() will be equal to

will be equal to ![]() , irrespective of the group sizes

, irrespective of the group sizes ![]() and

and ![]() [85]. Combining this with Equation (11.8), we can compute the integrated marginal likelihood of Equation (11.10) as

[85]. Combining this with Equation (11.8), we can compute the integrated marginal likelihood of Equation (11.10) as

Just as with the node partition, the uninformative assumption of Equation (11.19) also leads to its own problems, but we postpone dealing with them to Section 11.6. For now, we have everything we need to write the posterior distribution, with the exception of the model evidence ![]() given by Equation (11.11). Unfortunately, since it involves a sum over all possible partitions, it is not tractable to compute the evidence exactly. However, since it is just a normalization constant, we will not need to determine it when optimizing or sampling from the posterior, as we will see in Section 11.8. The numerator of Equation (11.9), which comprises of the terms that we can compute exactly, already contains all the information we need to proceed with the inference, and also has a special interpretation, as we will see in the next section.

given by Equation (11.11). Unfortunately, since it involves a sum over all possible partitions, it is not tractable to compute the evidence exactly. However, since it is just a normalization constant, we will not need to determine it when optimizing or sampling from the posterior, as we will see in Section 11.8. The numerator of Equation (11.9), which comprises of the terms that we can compute exactly, already contains all the information we need to proceed with the inference, and also has a special interpretation, as we will see in the next section.

The posterior of Equation (11.9) will put low probabilities on partitions that are not backed by sufficient statistical evidence in the network structure, and it will not lead us to spurious partitions such as those depicted in Figure 11.1. Inferring partitions from this posterior amounts to a so-called nonparametric approach; not because it lacks the estimation of parameters, but because the number of parameters itself, a.k.a. the order or dimension of the model, will be inferred as well. More specifically, the number of groups ![]() itself will be an outcome of the inference procedure, which will be chosen in order to accommodate the structure in the data, without overfitting. The precise reasons why the latter is guaranteed might not be immediately obvious to those unfamiliar with Bayesian inference. In the following section we will provide an explanation by making a straightforward connection with information theory. The connection is based on a different interpretation of our model, which allows us to introduce some important improvements.

itself will be an outcome of the inference procedure, which will be chosen in order to accommodate the structure in the data, without overfitting. The precise reasons why the latter is guaranteed might not be immediately obvious to those unfamiliar with Bayesian inference. In the following section we will provide an explanation by making a straightforward connection with information theory. The connection is based on a different interpretation of our model, which allows us to introduce some important improvements.

11.5 Microcanonical Models and the Minimum Description Length Principle

We can re-interpret the integrated marginal likelihood of Equation (11.20) as the joint likelihood of a microcanonical model given by7

where

and ![]() is the matrix of edge counts between groups. The term “microcanonical” – borrowed from statistical physics – means that model parameters correspond to “hard” constraints that are strictly imposed on the ensemble, as opposed to “soft” constraints that are obeyed only on average. In the particular case above,

is the matrix of edge counts between groups. The term “microcanonical” – borrowed from statistical physics – means that model parameters correspond to “hard” constraints that are strictly imposed on the ensemble, as opposed to “soft” constraints that are obeyed only on average. In the particular case above, ![]() is the probability of generating a multigraph

is the probability of generating a multigraph ![]() where Equation (11.1) is always fulfilled, i.e. the total number of edges between groups

where Equation (11.1) is always fulfilled, i.e. the total number of edges between groups ![]() and

and ![]() is always exactly

is always exactly ![]() , without any fluctuation allowed between samples (see [85] for a combinatorial derivation). This contrasts with the parameter

, without any fluctuation allowed between samples (see [85] for a combinatorial derivation). This contrasts with the parameter ![]() in Equation (11.8), which determines only the average number of edges between groups, which fluctuates between samples. Conversely, the prior for the edge counts

in Equation (11.8), which determines only the average number of edges between groups, which fluctuates between samples. Conversely, the prior for the edge counts ![]() is a mixture of geometric distributions with average

is a mixture of geometric distributions with average ![]() , which does allow the edge counts to fluctuate, guaranteeing the overall equivalence. The fact that Equation (11.21) holds is rather remarkable, since it means that – at least for the basic priors we used – these two kinds of model (“canonical” and microcanonical) cannot be distinguished from data, since their marginal likelihoods (and hence the posterior probability) are identical.8

, which does allow the edge counts to fluctuate, guaranteeing the overall equivalence. The fact that Equation (11.21) holds is rather remarkable, since it means that – at least for the basic priors we used – these two kinds of model (“canonical” and microcanonical) cannot be distinguished from data, since their marginal likelihoods (and hence the posterior probability) are identical.8

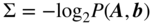

With this microcanonical interpretation in mind, we may frame the posterior probability in an information-theoretical manner as follows. If a discrete variable ![]() occurs with a probability mass

occurs with a probability mass ![]() , the asymptotic amount of information necessary to describe it is

, the asymptotic amount of information necessary to describe it is ![]() (if we choose bits as the unit of measurement), by using an optimal lossless coding scheme such as Huffman's algorithm [57]. With this in mind, we may write the numerator of the posterior distribution in Equation (11.9) as

(if we choose bits as the unit of measurement), by using an optimal lossless coding scheme such as Huffman's algorithm [57]. With this in mind, we may write the numerator of the posterior distribution in Equation (11.9) as

where the quantity

is called the description length of the data [35,91]. It corresponds to the asymptotic amount of information necessary to encode the data ![]() together with the model parameters

together with the model parameters ![]() and

and ![]() . Therefore, if we find a network partition that maximizes the posterior distribution of Equation (11.20), we are also automatically finding one which minimizes the description length.9 With this, we can see how the Bayesian approach outlined above prevents overfitting: As the size of the model increases (via a larger number of occupied groups), it will constrain itself better to the data, and the amount of information necessary to describe it when the model is known,

. Therefore, if we find a network partition that maximizes the posterior distribution of Equation (11.20), we are also automatically finding one which minimizes the description length.9 With this, we can see how the Bayesian approach outlined above prevents overfitting: As the size of the model increases (via a larger number of occupied groups), it will constrain itself better to the data, and the amount of information necessary to describe it when the model is known, ![]() , will decrease. At the same time, the amount of information necessary to describe the model itself,

, will decrease. At the same time, the amount of information necessary to describe the model itself, ![]() , will increase as it becomes more complex. Therefore, the latter will function as a penalty10 that prevents the model from becoming overly complex, and the optimal choice will amount to a proper balance between both terms.11 Among other things, this approach will allow us to properly estimate the dimension of the model – represented by the number of groups

, will increase as it becomes more complex. Therefore, the latter will function as a penalty10 that prevents the model from becoming overly complex, and the optimal choice will amount to a proper balance between both terms.11 Among other things, this approach will allow us to properly estimate the dimension of the model – represented by the number of groups ![]() – in a parsimonious way.

– in a parsimonious way.

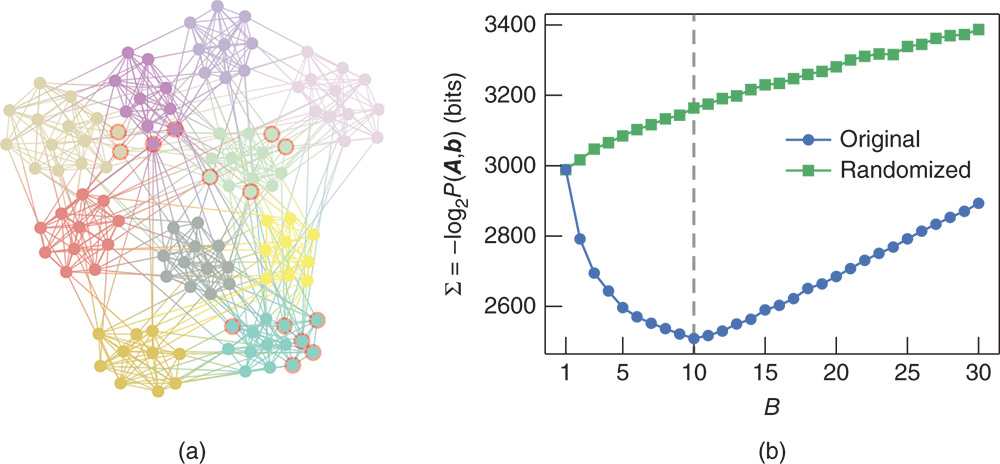

Figure 11.3 Bayesian inference of the SBM for a network of American college football teams [30]. (a) The partition that maximizes the posterior probability of Equation (11.9), or, equivalently, minimizes the description length of Equation (11.24). Nodes marked in red are not classified according to the known division into “conferences.” (b) Description length as a function of the number of groups of the corresponding optimal partition, for both the original and randomized data.

We now illustrate this approach with a real-world dataset of American college football teams [30], where a node is a team and an edge exists if two teams play against each other in a season. If we find the partition that maximizes the posterior distribution, we uncover ![]() groups, as can be seen in Figure 11.3a. If we compare this partition with the known division of the teams into “conferences” [23,24], we find that they match with a high degree of precision, with the exception of only a few nodes.12 In Figure 11.3b we show the description length of the optimal partitions if we constrain them to have a pre-specified number of groups, which allows us to see how the approach penalizes both too simple and too complex models, with a global minimum at

groups, as can be seen in Figure 11.3a. If we compare this partition with the known division of the teams into “conferences” [23,24], we find that they match with a high degree of precision, with the exception of only a few nodes.12 In Figure 11.3b we show the description length of the optimal partitions if we constrain them to have a pre-specified number of groups, which allows us to see how the approach penalizes both too simple and too complex models, with a global minimum at ![]() , corresponding to the most compressive partition. Importantly, if we now randomize the network, by placing all its edges in a completely random fashion, we obtain instead a trivial partition into

, corresponding to the most compressive partition. Importantly, if we now randomize the network, by placing all its edges in a completely random fashion, we obtain instead a trivial partition into ![]() group, indicating that the best model for this data is indeed a fully random graph. Hence, we see that this approach completely avoids the pitfall discussed in Section 11.2 and does not identify groups in fully random networks, and that the division shown in Figure 11.3a points to a statistically significant structure in the data that cannot be explained simply by random fluctuations.

group, indicating that the best model for this data is indeed a fully random graph. Hence, we see that this approach completely avoids the pitfall discussed in Section 11.2 and does not identify groups in fully random networks, and that the division shown in Figure 11.3a points to a statistically significant structure in the data that cannot be explained simply by random fluctuations.

11.6 The “Resolution Limit” Underfitting Problem and the Nested SBM

Although the Bayesian approach outlined above is in general protected against overfitting, it is still susceptible to underfitting, i.e. when we mistake statistically significant structure for randomness, resulting in the inference of an overly simplistic model. This happens whenever there is a large discrepancy between our prior assumptions and what is observed in the data. We illustrate this problem with a simple example. Consider a network formed of 64 isolated cliques of size 10, as shown in Figure 11.4a. If we employ the approach described in the previous section, and maximize the posterior of Equation (11.9), we obtain a partition into ![]() groups, where each group is composed of two cliques. This is a fairly unsatisfying characterization of this network, and also somewhat perplexing, since the probability that the inferred SBM will generate the observed network, i.e. each of the 32 groups will simultaneously and spontaneously split in two disjoint cliques, is vanishingly small. Indeed, intuitively it seems we should do significantly better with this rather obvious example, and that the best fit would be to put each of the cliques in their own group. In order to see what went wrong, we need to revisit our prior assumptions, in particular our choice for

groups, where each group is composed of two cliques. This is a fairly unsatisfying characterization of this network, and also somewhat perplexing, since the probability that the inferred SBM will generate the observed network, i.e. each of the 32 groups will simultaneously and spontaneously split in two disjoint cliques, is vanishingly small. Indeed, intuitively it seems we should do significantly better with this rather obvious example, and that the best fit would be to put each of the cliques in their own group. In order to see what went wrong, we need to revisit our prior assumptions, in particular our choice for ![]() in Equation (11.19) or, equivalently, our choice of

in Equation (11.19) or, equivalently, our choice of ![]() in Equation (11.23) for the microcanonical formulation. In both cases, they correspond to uninformative priors, which put approximately equal weight on all allowed types of large-scale structures. As argued before, this seems reasonable at first, since we should not bias our model before we observe the data. However, the implication of this choice is that we expect a priori the structure of the network at the aggregate group level, i.e. considering only the groups and the edges between them (not the individual nodes) to be fully random. This is indeed not the case in the simple example of Figure 11.4, and in fact it is unlikely to be the case for most networks that we encounter, which will probably be structured at a higher level as well. The unfavorable outcome of the uninformative assumption can also be seen by inspecting its effect on the description length of Equation (11.24). If we revisit our simple model with

in Equation (11.23) for the microcanonical formulation. In both cases, they correspond to uninformative priors, which put approximately equal weight on all allowed types of large-scale structures. As argued before, this seems reasonable at first, since we should not bias our model before we observe the data. However, the implication of this choice is that we expect a priori the structure of the network at the aggregate group level, i.e. considering only the groups and the edges between them (not the individual nodes) to be fully random. This is indeed not the case in the simple example of Figure 11.4, and in fact it is unlikely to be the case for most networks that we encounter, which will probably be structured at a higher level as well. The unfavorable outcome of the uninformative assumption can also be seen by inspecting its effect on the description length of Equation (11.24). If we revisit our simple model with ![]() cliques of size

cliques of size ![]() , grouped uniformly into

, grouped uniformly into ![]() groups of size

groups of size ![]() , and we assume that these values are sufficiently large so that Stirling's factorial approximation

, and we assume that these values are sufficiently large so that Stirling's factorial approximation ![]() can be used, the description length becomes

can be used, the description length becomes

Figure 11.4 Inference of the SBM on a simple artificial network composed of 64 cliques of size 10, illustrating the underfitting problem. (a) The partition that maximizes the posterior probability of Equation (11.9) or, equivalently, minimizes the description length of Equation (11.24). The 64 cliques are grouped into 32 groups composed of two cliques each. (b) Minimum description length as a function of the number of groups of the corresponding partition, both for the SBM and its nested variant, which is less susceptible to underfitting, and puts all 64 cliques in their own groups.

where ![]() is the total number of nodes and

is the total number of nodes and ![]() is the total number of edges, and we have omitted terms that do not depend on

is the total number of edges, and we have omitted terms that do not depend on ![]() . From this, we see that if we increase the number of groups

. From this, we see that if we increase the number of groups ![]() , this incurs a quadratic penalty in the description length given by the second term of Equation (11.27), which originates precisely from our expression of

, this incurs a quadratic penalty in the description length given by the second term of Equation (11.27), which originates precisely from our expression of ![]() : it corresponds to the amount of information necessary to describe all entries of a symmetric

: it corresponds to the amount of information necessary to describe all entries of a symmetric ![]() matrix that takes independent values between 0 and

matrix that takes independent values between 0 and ![]() . Indeed, a slightly more careful analysis of the scaling of the description length [79,85] reveals that this approach is unable to uncover a number of groups that is larger than

. Indeed, a slightly more careful analysis of the scaling of the description length [79,85] reveals that this approach is unable to uncover a number of groups that is larger than ![]() , even if their existence is obvious, as in our example of Figure 11.4.13

, even if their existence is obvious, as in our example of Figure 11.4.13

Trying to avoid this limitation might seem like a conundrum, since replacing the uninformative prior for ![]() amounts to making a more definite statement on the most likely large-scale structures that we expect to find, which we might hesitate to stipulate, as this is precisely what we want to discover from the data in the first place, and we want to remain unbiased. Luckily, there is in fact a general approach available to us to deal with this problem: we postpone our decision about the higher-order aspects of the model until we observe the data. In fact, we already saw this approach in action when we decided on the prior for the partitions; we do so by replacing the uninformative prior with a parametric distribution, whose parameters are in turn modelled by a another distribution, i.e. a hyperprior. The parameters of the prior then become latent variables that are learned from data, allowing us to uncover further structures, while remaining unbiased.

amounts to making a more definite statement on the most likely large-scale structures that we expect to find, which we might hesitate to stipulate, as this is precisely what we want to discover from the data in the first place, and we want to remain unbiased. Luckily, there is in fact a general approach available to us to deal with this problem: we postpone our decision about the higher-order aspects of the model until we observe the data. In fact, we already saw this approach in action when we decided on the prior for the partitions; we do so by replacing the uninformative prior with a parametric distribution, whose parameters are in turn modelled by a another distribution, i.e. a hyperprior. The parameters of the prior then become latent variables that are learned from data, allowing us to uncover further structures, while remaining unbiased.

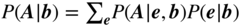

The microcanonical formulation allows us to proceed in this direction in a straightforward manner, as we can interpret the matrix of edge counts ![]() as the adjacency matrix of a multigraph where each of the groups is represented as a single node. Within this interpretation, an elegant solution presents itself, where we describe the matrix

as the adjacency matrix of a multigraph where each of the groups is represented as a single node. Within this interpretation, an elegant solution presents itself, where we describe the matrix ![]() with another SBM, i.e. we partition each of the groups into meta-groups and the edges between groups are placed according to the edge counts between meta-groups. For this second SBM, we can proceed in the same manner and model it by a third SBM, and so on, forming a nested hierarchy, as illustrated in Figure 11.5 [82]. More precisely, if we denote by

with another SBM, i.e. we partition each of the groups into meta-groups and the edges between groups are placed according to the edge counts between meta-groups. For this second SBM, we can proceed in the same manner and model it by a third SBM, and so on, forming a nested hierarchy, as illustrated in Figure 11.5 [82]. More precisely, if we denote by ![]() ,

, ![]() and

and ![]() the number of groups, the partition and the matrix of edge counts at level

the number of groups, the partition and the matrix of edge counts at level ![]() , we have

, we have

with ![]() counting the number of

counting the number of ![]() -combinations with repetitions from a set of size

-combinations with repetitions from a set of size ![]() . Equation (11.28) is the likelihood of a maximum-entropy multigraph SBM, i.e. every multigraph occurs with the same probability, provided they fulfill the imposed constraints14 [78]. The prior for the partitions is again given by Equation (11.17),

. Equation (11.28) is the likelihood of a maximum-entropy multigraph SBM, i.e. every multigraph occurs with the same probability, provided they fulfill the imposed constraints14 [78]. The prior for the partitions is again given by Equation (11.17),

with ![]() , so that the joint probability of the data, edge counts, and the hierarchical partition

, so that the joint probability of the data, edge counts, and the hierarchical partition ![]() becomes

becomes

where we impose the boundary conditions ![]() and

and ![]() . We can treat the hierarchy depth

. We can treat the hierarchy depth ![]() as a latent variable as well, by placing a prior on it

as a latent variable as well, by placing a prior on it ![]() , where

, where ![]() is the maximum value allowed. But since this only contributes to an overall multiplicative constant, it has no effect on the posterior distribution, and thus can be omitted. If we impose

is the maximum value allowed. But since this only contributes to an overall multiplicative constant, it has no effect on the posterior distribution, and thus can be omitted. If we impose ![]() , we recover the uninformative prior for

, we recover the uninformative prior for ![]() ,

,

which is different from Equation (11.23) only in that the number of edges ![]() is not allowed to fluctuate.15 The inference of this model is done in the same manner as the uninformative one, by obtaining the posterior distribution of the hierarchical partition

is not allowed to fluctuate.15 The inference of this model is done in the same manner as the uninformative one, by obtaining the posterior distribution of the hierarchical partition

and the description length is given analogously by

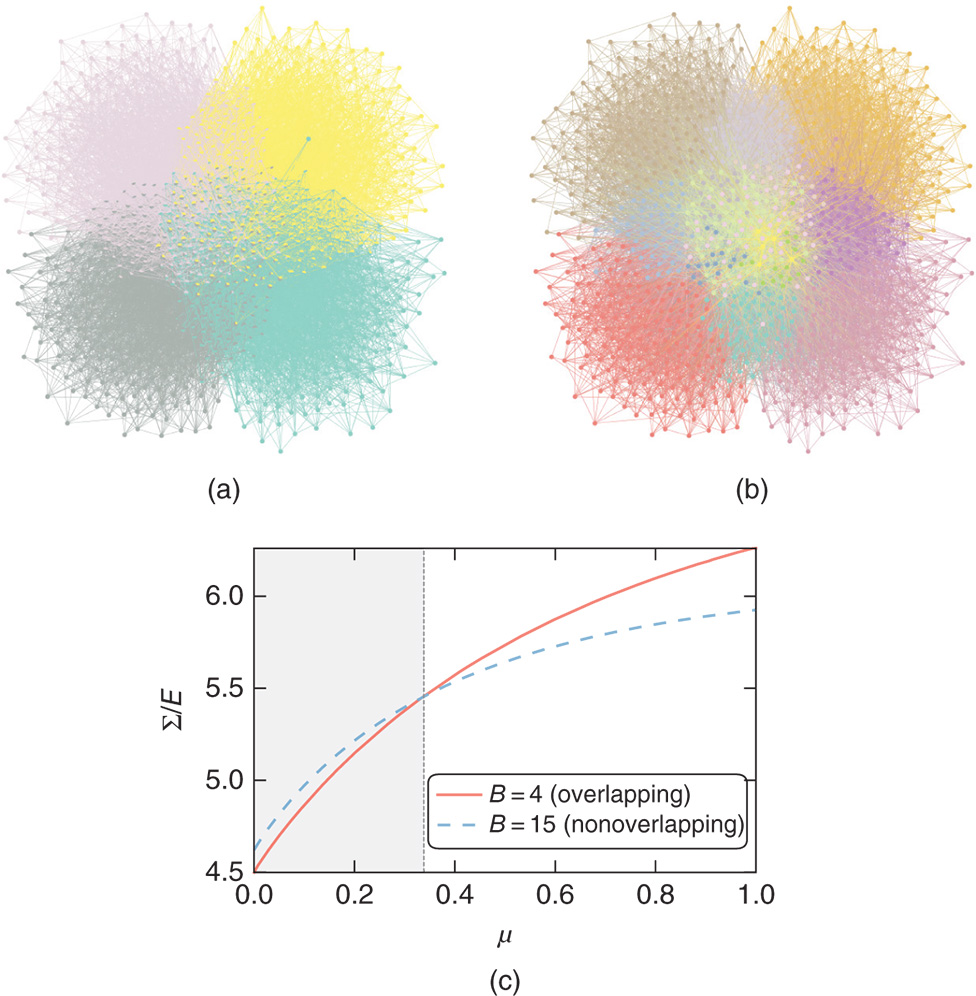

This approach has a series of advantages; in particular, we remain a priori agnostic with respect to what kind of large-scale structure is present in the network, having constrained ourselves simply in that it can be represented as a SBM at a higher level, and with the uninformative prior as a special case. Despite this, we are able to overcome the underfitting problem encountered with the uninformative approach: if we apply this model to the example of Figure 11.4, we can successfully distinguish all 64 cliques, and provide a lower overall description length for the data, as can be seen in Figure 11.4b. More generally, by investigating the properties of the model likelihood, it is possible to show that the maximum number of groups that can be uncovered with this model scales as ![]() , which is significantly larger than the limit with uninformative priors [82,85]. The difference between both approaches manifests itself very often in practice, as shown in Figure 11.5b, where systematic underfitting is observed for a wide variety of network datasets, which disappears with the nested model, as seen in Figure 11.5c. Crucially, we achieve this decreased tendency to underfit without sacrificing our protection against overfitting: Despite the more elaborate model specification, the inference of the nested SBM is completely nonparametric, and the same Bayesian and information-theoretical principles still hold. Furthermore, as we have already mentioned, the uninformative case is a special case of the nested SBM, i.e. when

, which is significantly larger than the limit with uninformative priors [82,85]. The difference between both approaches manifests itself very often in practice, as shown in Figure 11.5b, where systematic underfitting is observed for a wide variety of network datasets, which disappears with the nested model, as seen in Figure 11.5c. Crucially, we achieve this decreased tendency to underfit without sacrificing our protection against overfitting: Despite the more elaborate model specification, the inference of the nested SBM is completely nonparametric, and the same Bayesian and information-theoretical principles still hold. Furthermore, as we have already mentioned, the uninformative case is a special case of the nested SBM, i.e. when ![]() , and hence it can only improve the inference (e.g. by reducing the description length), with no drawbacks. We stress that the number of hierarchy levels, as with any other dimension of the model, such as the number of groups in each level, is inferred from data and does not need to be determined a priori.

, and hence it can only improve the inference (e.g. by reducing the description length), with no drawbacks. We stress that the number of hierarchy levels, as with any other dimension of the model, such as the number of groups in each level, is inferred from data and does not need to be determined a priori.

Figure 11.5 (a) Diagrammatic representation of the nested SBM described in the text, with  levels, adapted from [82]. (b) Average group sizes

levels, adapted from [82]. (b) Average group sizes  obtained with the SBM using uninformative priors, for a variety of empirical networks, listed in [82]. The dashed line shows a slope

obtained with the SBM using uninformative priors, for a variety of empirical networks, listed in [82]. The dashed line shows a slope  , highlighting the systematic underfitting problem. (c) The same as in (b), but using the nested SBM, where the underfitting has virtually disappeared, with datasets randomly scattered in the allowed range.

, highlighting the systematic underfitting problem. (c) The same as in (b), but using the nested SBM, where the underfitting has virtually disappeared, with datasets randomly scattered in the allowed range.

In addition to the above, the nested model also gives us the capacity of describing the data at multiple scales, which could potentially exhibit different mixing patterns. This is particularly useful for large networks, where the SBM might still give us a very complex description, which becomes easier to interpret if we concentrate first on the upper levels of the hierarchy. A good example is the result obtained for the internet topology at the autonomous systems level, shown in Figure 11.6. The lowest level of the hierarchy shows a division into a large number of groups, with a fairly complicated structure, whereas the higher levels show an increasingly simplified picture, culminating in a core-periphery organization as the dominating pattern.

Figure 11.6 Fit of the (degree-corrected) nested SBM for the internet topology at the autonomous systems level, adapted from [82]. The hierarchical division reveals a core-periphery organization at the higher levels, where most routes go through a relatively small number of nodes (shown in the inset and in the map). The lower levels reveal a more detailed picture, where a large number of groups of nodes are identified according to their routing patterns (amounting largely to distinct geographical regions). The layout is obtained with an edge bundling algorithm by Holten [45], which uses the hierarchical partition to route the edges.

11.7 Model Variations

Varying the number of groups and building hierarchies is not the only way we have of adapting the complexity of the model to the data. We may also change the internal structure of the model, and how the division into groups affects the placement of edges. In fact, the basic ansatz of the SBM is very versatile, and many variations have been proposed in the literature. In this section we review two important ones – SBMs with degree correction and group overlap – and review other model flavors in a summarized manner.

Before we go further into the model variations, we point out that the multiplicity of models is a strength of the inference approach. This is different from the broader field of network clustering, where a large number of available algorithms often yield conflicting results for the same data, leaving practitioners lost in how to select between them [32,46]. Instead, within the inference framework we can in fact compare different models in a principled manner and select the best one according to the statistical evidence available. We proceed with a general outline of the model selection procedure before following with specific model variations.

11.7.1 Model Selection

Suppose we define two versions of the SBM, labeled ![]() and

and ![]() , each with their own posterior distribution of partitions,

, each with their own posterior distribution of partitions, ![]() and

and ![]() . Suppose we find the most likely partitions

. Suppose we find the most likely partitions ![]() and

and ![]() , according to

, according to ![]() and

and ![]() , respectively. How do we decide which partition is more representative of the data? The consistent approach is to obtain the so-called posterior odds ratio [48,49]

, respectively. How do we decide which partition is more representative of the data? The consistent approach is to obtain the so-called posterior odds ratio [48,49]

where ![]() is our prior belief that variant

is our prior belief that variant ![]() is valid. A value of

is valid. A value of ![]() indicates that the choice

indicates that the choice ![]() is

is ![]() times more plausible as an explanation for the data than the alternative,

times more plausible as an explanation for the data than the alternative, ![]() . If we are a priori agnostic with respect to which model flavor is best, i.e.

. If we are a priori agnostic with respect to which model flavor is best, i.e. ![]() , we have then

, we have then

where ![]() is the description length difference between both choices. Hence, we should generally prefer the model choice that is most compressive, i.e. with the smallest description length. However, if the value of

is the description length difference between both choices. Hence, we should generally prefer the model choice that is most compressive, i.e. with the smallest description length. However, if the value of ![]() is close to 1, we should refrain from forcefully rejecting the alternative, as the evidence in data would not be strongly decisive either way. In other words the actual value of

is close to 1, we should refrain from forcefully rejecting the alternative, as the evidence in data would not be strongly decisive either way. In other words the actual value of ![]() gives us the confidence with which we can choose the preferred model. The final decision, however, is subjective, since it depends on what we might consider plausible. A value of

gives us the confidence with which we can choose the preferred model. The final decision, however, is subjective, since it depends on what we might consider plausible. A value of ![]() , for example, typically cannot be used to forcefully reject the alternative hypothesis, whereas a value of

, for example, typically cannot be used to forcefully reject the alternative hypothesis, whereas a value of ![]() might.

might.

An alternative test we can make is to decide which model class is most representative of the data, when averaged over all possible partitions. In this case, we proceed in an analogous way by computing the posterior odds ratio

where

is the model evidence. When ![]() ,

, ![]() is called the Bayes factor, with an interpretation analogous to

is called the Bayes factor, with an interpretation analogous to ![]() above, but where the statement is made with respect to all possible partitions, not only the most likely one. Unfortunately, as mentioned previously, the evidence

above, but where the statement is made with respect to all possible partitions, not only the most likely one. Unfortunately, as mentioned previously, the evidence ![]() cannot be computed exactly for the models we are interested in, making this criterion more difficult to employ in practice (although approximations have been proposed, see e.g. [85]). We return to the issue of when it should we optimize or sample from the posterior distribution in Section 11.9, and hence which of the two criteria should be used.

cannot be computed exactly for the models we are interested in, making this criterion more difficult to employ in practice (although approximations have been proposed, see e.g. [85]). We return to the issue of when it should we optimize or sample from the posterior distribution in Section 11.9, and hence which of the two criteria should be used.

11.7.2 Degree Correction

The underlying assumption of all variants of the SBM considered so far is that nodes that belong to the same group are statistically equivalent. As it turns out, this fundamental aspect results in a very unrealistic property. Namely, this generative process implies that all nodes that belong to the same group receive on average the same number of edges. However, a common property of many empirical networks is that they have very heterogeneous degrees, often broadly distributed over several orders of magnitudes [66]. Therefore, in order for this property to be reproduced by the SBM, it is necessary to group nodes according to their degree, which may lead to some seemingly odd results. An example of this was given in [50] and is shown in Figure 11.7a. It corresponds to a fit of the SBM to a network of political blogs recorded during the 2004 American presidential election campaign [2], where an edge exists between two blogs if one links to the other. If we guide ourselves by the layout of the figure, we identify two assortative groups, which happen to be those aligned with the Republican and Democratic parties. However, inside each group there is a significant variation in degree, with a few nodes with many connections and many with very few. Because of what just has been explained, if we perform a fit of the SBM using only ![]() groups, it prefers to cluster the nodes into high-degree and low-degree groups, completely ignoring the party alliance.16 Arguably, this is a bad fit of this network, since – similarly to the underfitting example of Figure 11.4 – the probability of the fitted SBM generating a network with such a party structure is vanishingly small. In order to solve this undesired behavior, Karrer and Newman [50] proposed a modified model, which they dubbed the degree-corrected SBM (DC-SBM). In this variation, each node

groups, it prefers to cluster the nodes into high-degree and low-degree groups, completely ignoring the party alliance.16 Arguably, this is a bad fit of this network, since – similarly to the underfitting example of Figure 11.4 – the probability of the fitted SBM generating a network with such a party structure is vanishingly small. In order to solve this undesired behavior, Karrer and Newman [50] proposed a modified model, which they dubbed the degree-corrected SBM (DC-SBM). In this variation, each node ![]() is attributed with a parameter

is attributed with a parameter ![]() that controls its expected degree, independently of its group membership. Given this extra set of parameters, a network is generated with probability

that controls its expected degree, independently of its group membership. Given this extra set of parameters, a network is generated with probability

where ![]() again controls the expected number of edges between groups

again controls the expected number of edges between groups ![]() and

and ![]() . Note that since the parameters

. Note that since the parameters ![]() and

and ![]() always appear multiplying each other in the likelihood, their individual values may be arbitrarily scaled, provided their products remain the same. If we choose the parametrization

always appear multiplying each other in the likelihood, their individual values may be arbitrarily scaled, provided their products remain the same. If we choose the parametrization ![]() for every group

for every group ![]() , then they acquire a simple interpretation:

, then they acquire a simple interpretation: ![]() is the expected number of edges between groups

is the expected number of edges between groups ![]() ans

ans ![]() ,

, ![]() , and

, and ![]() is proportional to the expected degree of node

is proportional to the expected degree of node ![]() ,

, ![]() .

.

When inferring this model from the political blogs data – again forcing ![]() – we obtain a much more satisfying result, where the two political factions are neatly identified, as seen in Figure 11.7b. As this model is capable of fully decoupling the community structure from the degrees, which are captured separately by the parameters

– we obtain a much more satisfying result, where the two political factions are neatly identified, as seen in Figure 11.7b. As this model is capable of fully decoupling the community structure from the degrees, which are captured separately by the parameters ![]() and

and ![]() , respectively, the degree heterogeneity of the network does not interfere with the identification of the political factions.

, respectively, the degree heterogeneity of the network does not interfere with the identification of the political factions.

Based on the above example, and on the knowledge that most networks possess heterogeneous degrees, we could expect the DC-SBM to provide a better fit for most of them. However, before we jump to this conclusion, we must first acknowledge that the seemingly increased quality of fit obtained with the SBM came at the expense of adding an extra set of parameters, ![]() [110]. However intuitive we might judge the improvement brought on by degree correction, simply adding more parameters to a model is an almost sure recipe for overfitting. Therefore, a more prudent approach is once more to frame the inference problem in a Bayesian way, by focusing on the posterior distribution

[110]. However intuitive we might judge the improvement brought on by degree correction, simply adding more parameters to a model is an almost sure recipe for overfitting. Therefore, a more prudent approach is once more to frame the inference problem in a Bayesian way, by focusing on the posterior distribution ![]() , and on the description length. For this, we must include a prior for the node propensities

, and on the description length. For this, we must include a prior for the node propensities ![]() . The uninformative choice is the one which ascribes the same probability to all possible choices,

. The uninformative choice is the one which ascribes the same probability to all possible choices,

Figure 11.7 Inferred partition for a network of political blogs [2] using (a) the SBM and (b) the DC-SBM, in both cases forcing  groups. The node sizes are proportional to the node degrees. The SBM divides the network into low and high-degree groups, whereas the DC-SBM prefers the division into political factions.

groups. The node sizes are proportional to the node degrees. The SBM divides the network into low and high-degree groups, whereas the DC-SBM prefers the division into political factions.

Using again an uninformative prior for ![]() ,

,

with ![]() , the marginal likelihood now becomes

, the marginal likelihood now becomes

where ![]() is the degree of node

is the degree of node ![]() , which can be used in the same way to obtain a posterior for

, which can be used in the same way to obtain a posterior for ![]() , via Equation (11.9). Once more, the model above is equivalent to a microcanonical formulation [85], given by

, via Equation (11.9). Once more, the model above is equivalent to a microcanonical formulation [85], given by

with

and ![]() given by Equation (11.23). In the model above,

given by Equation (11.23). In the model above, ![]() is the probability of generating a multigraph where the edge counts between groups as well as the degrees

is the probability of generating a multigraph where the edge counts between groups as well as the degrees ![]() are fixed to specific values (see Figure 11.8).17 The prior

are fixed to specific values (see Figure 11.8).17 The prior ![]() is the uniform probability of generating a degree sequence, where all possibilities that satisfy the constraints imposed by the edge counts

is the uniform probability of generating a degree sequence, where all possibilities that satisfy the constraints imposed by the edge counts ![]() , namely

, namely ![]() , occur with the same probability. The description length of this model is then given by

, occur with the same probability. The description length of this model is then given by

Because uninformative priors were used to derive the above equations, we are once more subject to the same underfitting problem described previously. Luckily, from the microcanonical model we can again derive a nested DC-SBM, by replacing ![]() by a nested sequence of SBMs, exactly in the same was as was done before [82,85]. We also have the opportunity of replacing the uninformative prior for the degrees in Equation (11.44) with a more realistic option. As was argued in [85], degree sequences generated by Equation (11.44) result in exponential degree distributions, which are not quite as heterogeneous as what is often encountered in practice. A more refined approach, which is already familiar to us at this point, is to increase the Bayesian hierarchy and choose a prior that is conditioned on a higher-order aspect of the data, in this case the frequency of degrees, i.e.

by a nested sequence of SBMs, exactly in the same was as was done before [82,85]. We also have the opportunity of replacing the uninformative prior for the degrees in Equation (11.44) with a more realistic option. As was argued in [85], degree sequences generated by Equation (11.44) result in exponential degree distributions, which are not quite as heterogeneous as what is often encountered in practice. A more refined approach, which is already familiar to us at this point, is to increase the Bayesian hierarchy and choose a prior that is conditioned on a higher-order aspect of the data, in this case the frequency of degrees, i.e.

where ![]() , with

, with ![]() being the number of nodes of degree

being the number of nodes of degree ![]() in group

in group ![]() . In the above,

. In the above, ![]() is a uniform distribution of frequencies and

is a uniform distribution of frequencies and ![]() generates the degrees according to the sampled frequencies (we omit the respective expressions for brevity, and refer to [85] instead). Thus, this model is capable of using regularities in the degree distribution to inform the division into groups and is generally capable of better fits than the uniform model of Equation (11.44).

generates the degrees according to the sampled frequencies (we omit the respective expressions for brevity, and refer to [85] instead). Thus, this model is capable of using regularities in the degree distribution to inform the division into groups and is generally capable of better fits than the uniform model of Equation (11.44).

Figure 11.8 Illustration of the generative process of the microcanonical DC-SBM. Given a partition of the nodes, the edge counts between groups are sampled (a), followed by the degrees of the nodes (b) and finally the network itself (c). Adapted from [85].

Figure 11.9 Most likely hierarchical partitions of a network of political blogs [2], according to the three model variants considered, as well as the inferred number of groups  at the bottom of the hierarchy, and the description length

at the bottom of the hierarchy, and the description length  : (a) NDC-SBM,

: (a) NDC-SBM,  ,

,  bits, (b) DC-SBM,

bits, (b) DC-SBM,  ,

,  bits, (c) DC-SBM with the degree prior of Equation (11.46),

bits, (c) DC-SBM with the degree prior of Equation (11.46),  ,

,  bits. The nodes circled in blue were classified as “liberals” and the remaining ones as “conservatives” in [2] based on the blog contents. Adapted from [85].

bits. The nodes circled in blue were classified as “liberals” and the remaining ones as “conservatives” in [2] based on the blog contents. Adapted from [85].

If we apply this nonparametric approach to the same political blog network of Adamic and Glance [2], we find a much more detailed picture of its structure, revealing many more than two groups, as shown in Figure 11.9, for three model variants: the nested SBM, the nested DC-SBM, and the nested DC-SBM with the degree prior of Equation (11.46). All three model variants are in fact capable of identifying the same Republican/Democrat division at the topmost hierarchical level, showing that the non-degree-corrected SBM is not as inept in capturing this aspect of the data as the result obtained by forcing ![]() might suggest. However, the internal divisions of both factions that they uncover are distinct from each other. If we inspect the obtained values of the description length with each model we see that the DC-SBM (in particular when using Equation (11.46)) results in a smaller value, indicating that it better captures the structure of the data, despite the increased number of parameters. Indeed, a systematic analysis carried out in [85] showed that the DC-SBM does in fact yield shorter description lengths for a majority of empirical datasets, thus ultimately confirming the original intuition behind the model formulation.

might suggest. However, the internal divisions of both factions that they uncover are distinct from each other. If we inspect the obtained values of the description length with each model we see that the DC-SBM (in particular when using Equation (11.46)) results in a smaller value, indicating that it better captures the structure of the data, despite the increased number of parameters. Indeed, a systematic analysis carried out in [85] showed that the DC-SBM does in fact yield shorter description lengths for a majority of empirical datasets, thus ultimately confirming the original intuition behind the model formulation.

11.7.3 Group Overlaps

Another way we can change the internal structure of the model is to allow the groups to overlap, i.e. we allow a node to belong to more than one group at the same time. The connection patterns of the nodes are then assumed to be a mixture of the “pure” groups, which results in a richer type of model [5]. Following Ball et al. [7], we can adapt the Poisson formulation to overlapping SBMs in a straightforward manner,

with

where ![]() is the probability with which node

is the probability with which node ![]() is chosen from group

is chosen from group ![]() , so that

, so that ![]() , and

, and ![]() is once more the expected number of edges between groups

is once more the expected number of edges between groups ![]() and

and ![]() . The parameters

. The parameters ![]() replace the disjoint partition

replace the disjoint partition ![]() we have been using so far by a “soft” clustering into overlapping categories.18 Note, however, that this model is a direct generalization of the non-overlapping DC-SBM of Equation (11.38), which is recovered simply by choosing

we have been using so far by a “soft” clustering into overlapping categories.18 Note, however, that this model is a direct generalization of the non-overlapping DC-SBM of Equation (11.38), which is recovered simply by choosing ![]() . The Bayesian formulation can also be performed by using an uninformative prior for

. The Bayesian formulation can also be performed by using an uninformative prior for ![]() ,

,

in addition to the same prior for ![]() in Equation (11.40). Unfortunately, computing the marginal likelihood using Equation (11.47) directly,

in Equation (11.40). Unfortunately, computing the marginal likelihood using Equation (11.47) directly,

is not tractable, which prevents us from obtaining the posterior ![]() . Instead, it is more useful to consider the auxiliary labelled matrix, or tensor,