Chapter 2. Algorithms for Bias Mitigation

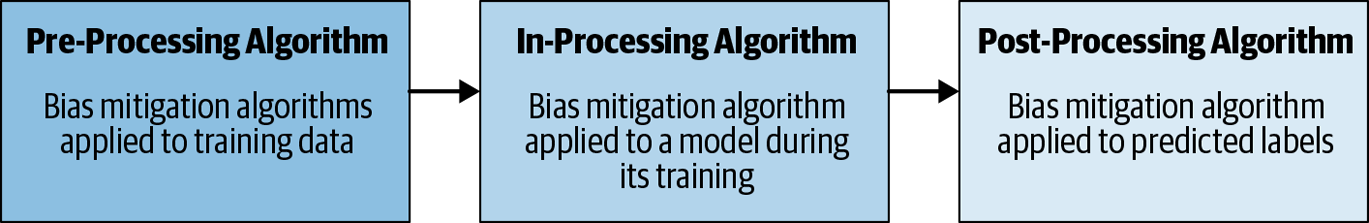

We can measure data and model fairness at different points in the machine learning pipeline. In this chapter, we look at the pre-processing, in-processing, and post-processing categories of bias mitigation algorithms.

Most Bias Starts with Your Data

AIF360’s bias mitigation algorithms are categorized based on where in the machine learning pipeline they are deployed, as illustrated in Figure 2-1. As a general guideline, you can use its pre-processing algorithms if you can modify the training data. You can use in-processing algorithms if you can change the learning procedure for a machine learning model. If you need to treat the learned model as a black box and cannot modify the training data or learning algorithm, you will need to use the post-processing algorithms.
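The rule of thumb above can be written as a small decision function. The helper `choose_mitigation_stage` and its parameters are hypothetical and are not part of AIF360. The sketch checks the options in pipeline order, on the assumption that pre-processing is preferred whenever the training data can be modified.

```python
def choose_mitigation_stage(can_modify_training_data: bool,
                            can_modify_learning_algorithm: bool) -> str:
    """Pick a bias mitigation category from what you are able to change.

    Hypothetical helper (not part of AIF360) encoding the guideline above.
    """
    if can_modify_training_data:
        # You control the data: mitigate before training.
        return "pre-processing"
    if can_modify_learning_algorithm:
        # The data is fixed, but you control how the model learns.
        return "in-processing"
    # The model is a black box: only its output predictions can be adjusted.
    return "post-processing"


print(choose_mitigation_stage(False, False))  # post-processing
```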

Figure 2-1. Where can you intervene in the pipeline?

Pre-Processing Algorithms

Pre-processing is the optimal time to mitigate bias, given that most bias is intrinsic to the data. With pre-processing algorithms, you attempt to reduce bias by manipulating the training data before training the model. Although this is conceptually simple, there are two key issues to consider. First, data can be biased in complex ways, so it is difficult for an algorithm to transform a dataset into a new one that is both accurate and unbiased. Second, there can be legal issues: in some cases, training the decision algorithm on transformed rather than raw data can violate antidiscrimination laws.

The following are the pre-processing algorithms in AIF360 as of early 2020:

- Reweighing Pre-Processing: Assigns different weights to the training samples in each (group, label) combination to ensure fairness before classification. Because it does not change any feature or label values, it is ideal when you cannot modify the data values themselves.

- Optimized Pre-Processing: Learns a probabilistic transformation that edits the features and labels in the data with group fairness, individual distortion, and data fidelity constraints and objectives.

- Learning Fair Representations: Finds a latent representation that encodes the data well but obfuscates information about protected attributes.

- Disparate-Impact Remover: Edits feature values to increase group fairness while preserving rank ordering within groups.
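To make the reweighing idea concrete, here is a minimal plain-Python sketch (not AIF360's implementation) of the weighting scheme reweighing is built on: each (group, label) combination gets the weight P(group) * P(label) / P(group, label), so combinations that are rarer than independence would predict are weighted up and overrepresented ones are weighted down. The function names and the toy data are hypothetical.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Return one weight per sample: P(group) * P(label) / P(group, label)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


def favorable_rate(groups, labels, weights, group, favorable=1):
    """Weighted share of favorable labels within one group."""
    in_group = [(y, w) for g, y, w in zip(groups, labels, weights) if g == group]
    total = sum(w for _, w in in_group)
    return sum(w for y, w in in_group if y == favorable) / total


# Toy data (hypothetical): group "a" receives the favorable label 1 far more often.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]

weights = reweighing_weights(groups, labels)
unit = [1.0] * len(labels)  # unweighted baseline
for grp in ("a", "b"):
    print(grp,
          favorable_rate(groups, labels, unit, grp),
          round(favorable_rate(groups, labels, weights, grp), 3))
```

Without weights the favorable rates are 0.75 for group "a" and 0.25 for group "b"; with the weights both come out at 0.5, and no feature or label value has been changed.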

If your application requires transparency about the transformation, Disparate-Impact Remover and Optimized Pre-Processing are ideal.
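The rank-preserving repair behind Disparate-Impact Remover can be sketched for a single numeric feature. In this simplified version (an assumption, not AIF360's code), each value is mapped to its quantile within its own group, the target is the median across groups of the values at that quantile, and a hypothetical `repair_level` between 0 and 1 interpolates between the original and the fully repaired value. Every step is monotone within a group, so rank ordering inside each group is preserved, and the transformation stays easy to inspect.

```python
from bisect import bisect_left
from statistics import median


def value_at_quantile(sorted_values, q):
    """Nearest-rank value at quantile q in [0, 1) of an already sorted list."""
    idx = min(int(q * len(sorted_values)), len(sorted_values) - 1)
    return sorted_values[idx]


def repair_feature(values, groups, repair_level=1.0):
    """Move each group's distribution of one feature toward a common target.

    repair_level=0 leaves values unchanged; repair_level=1 fully aligns the groups.
    """
    by_group = {}
    for v, g in zip(values, groups):
        by_group.setdefault(g, []).append(v)
    sorted_by_group = {g: sorted(vs) for g, vs in by_group.items()}

    repaired = []
    for v, g in zip(values, groups):
        own = sorted_by_group[g]
        # Quantile of v within its own group (ties share the same quantile).
        q = bisect_left(own, v) / len(own)
        # Common target: median across groups of the value at that quantile.
        target = median([value_at_quantile(s, q) for s in sorted_by_group.values()])
        repaired.append((1 - repair_level) * v + repair_level * target)
    return repaired


# Hypothetical feature whose distribution differs sharply by group.
values = [1, 2, 3, 4, 5, 6, 7, 8]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(repair_feature(values, groups))       # [3.0, 4.0, 5.0, 6.0, 3.0, 4.0, 5.0, 6.0]
print(repair_feature(values, groups, 0.5))  # [2.0, 3.0, 4.0, 5.0, 4.0, 5.0, 6.0, 7.0]
```

With full repair the two groups end up with identical value distributions; with half repair the gap between them shrinks while each group keeps its internal order.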

In-Processing Algorithms

You can incorporate fairness into the training algorithm itself by using in-processing algorithms that penalize unwanted bias. In-processing works by adding fairness constraints (e.g., older people should be accepted at the same rate as younger people) that influence the loss function of the training algorithm. Here are some of the most widely used in-processing algorithms in AIF360:

- Adversarial Debiasing: Learns a classifier that maximizes prediction accuracy while simultaneously reducing an adversary’s ability to determine the protected attribute from the predictions. This approach leads to a fairer classifier because the predictions carry little group discrimination information that the adversary can exploit.

- Prejudice Remover: Adds a discrimination-aware regularization term to the learning objective.

- Meta Fair Classifier: Takes the fairness metric as part of the input and returns a classifier optimized for the metric.
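The in-processing idea of adding a fairness term to the loss function can be illustrated with a one-feature logistic regression trained by gradient descent. The penalty used here, `eta` times the squared gap between the groups' mean predicted scores, is a deliberately simple stand-in chosen for illustration; Prejudice Remover's actual regularizer is based on a prejudice index rather than this squared gap. All names, data, and hyperparameters are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def mean(xs):
    return sum(xs) / len(xs)


def train_fair_logreg(xs, ys, groups, eta=0.0, lr=0.1, epochs=5000):
    """One-feature logistic regression minimizing mean log loss + eta * gap**2.

    gap = mean predicted score of group 0 - mean predicted score of group 1.
    """
    w, b = 0.0, 0.0
    g0 = [i for i, g in enumerate(groups) if g == 0]
    g1 = [i for i, g in enumerate(groups) if g == 1]
    for _ in range(epochs):
        p = [sigmoid(w * x + b) for x in xs]
        # Gradient of the mean log loss.
        grad_w = mean([(p[i] - ys[i]) * xs[i] for i in range(len(xs))])
        grad_b = mean([p[i] - ys[i] for i in range(len(xs))])
        # Gradient of the fairness penalty; d(sigmoid)/dz = p * (1 - p).
        gap = mean([p[i] for i in g0]) - mean([p[i] for i in g1])
        dgap_w = (mean([p[i] * (1 - p[i]) * xs[i] for i in g0])
                  - mean([p[i] * (1 - p[i]) * xs[i] for i in g1]))
        dgap_b = (mean([p[i] * (1 - p[i]) for i in g0])
                  - mean([p[i] * (1 - p[i]) for i in g1]))
        grad_w += eta * 2 * gap * dgap_w
        grad_b += eta * 2 * gap * dgap_b
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def score_gap(w, b, xs, groups):
    """Absolute difference in mean predicted score between groups 0 and 1."""
    s0 = [sigmoid(w * x + b) for x, g in zip(xs, groups) if g == 0]
    s1 = [sigmoid(w * x + b) for x, g in zip(xs, groups) if g == 1]
    return abs(mean(s0) - mean(s1))


# Hypothetical data: the feature is correlated with group membership.
xs = [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5]
groups = [0, 0, 0, 0, 1, 1, 1, 1]
ys = [0, 0, 1, 0, 1, 0, 1, 1]

for eta in (0.0, 5.0):
    w, b = train_fair_logreg(xs, ys, groups, eta=eta)
    print(f"eta={eta}: w={w:.3f}, score gap={score_gap(w, b, xs, groups):.3f}")
```

Raising `eta` trades some fit to the labels for a smaller gap between the groups' scores, which is the basic trade-off every in-processing method has to manage.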

Post-Processing Algorithms

In some instances, a user might have access only to black-box models, so post-processing algorithms must be used. AIF360’s post-processing algorithms (see Figure 2-2) reduce bias by manipulating the output predictions after the model has been trained. Post-processing algorithms are easy to apply to existing classifiers without retraining. However, a key challenge in post-processing is finding techniques that reduce bias while maintaining model accuracy. AIF360’s post-processing algorithms include the following:

- Equalized Odds: Solves a linear program to find probabilities with which to change output labels to optimize equalized odds.

- Calibrated Equalized Odds: Optimizes over calibrated classifier score outputs to find probabilities with which to change output labels with an equalized odds objective.

- Reject Option Classification: Within a confidence band around the decision boundary, where uncertainty is highest, gives favorable outcomes to unprivileged groups and unfavorable outcomes to privileged groups.
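Equalized Odds and Calibrated Equalized Odds both aim to make true positive rates (TPR) and false positive rates (FPR) match across groups, so it helps to measure how far apart those rates are before and after applying either one. The plain-Python helper below is hypothetical (not AIF360's metric code); it computes the unprivileged-minus-privileged gaps, both of which are zero when equalized odds holds exactly. It assumes each group contains both positive and negative true labels.

```python
def group_rates(y_true, y_pred, groups, group):
    """Return (TPR, FPR) for the samples belonging to one group."""
    tp = fn = fp = tn = 0
    for t, p, g in zip(y_true, y_pred, groups):
        if g != group:
            continue
        if t == 1:
            if p == 1:
                tp += 1
            else:
                fn += 1
        elif p == 1:
            fp += 1
        else:
            tn += 1
    return tp / (tp + fn), fp / (fp + tn)


def equalized_odds_gaps(y_true, y_pred, groups, unprivileged, privileged):
    """(TPR gap, FPR gap), each computed as unprivileged minus privileged."""
    tpr_u, fpr_u = group_rates(y_true, y_pred, groups, unprivileged)
    tpr_p, fpr_p = group_rates(y_true, y_pred, groups, privileged)
    return tpr_u - tpr_p, fpr_u - fpr_p


# Hypothetical labels and predictions for a privileged ("p") and unprivileged ("u") group.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["p", "p", "p", "p", "u", "u", "u", "u"]
print(equalized_odds_gaps(y_true, y_pred, groups, "u", "p"))  # (-0.5, -0.5)
```

Here the unprivileged group has both a lower TPR (qualified members are missed more often) and a lower FPR; a post-processor targeting equalized odds would change output labels to drive both gaps toward zero.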

Figure 2-2. Algorithms in the machine learning pipeline
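Reject option classification can be sketched in a few lines. Given scores from an existing classifier, predictions whose score lies within `margin` of the decision `threshold` are treated as uncertain: inside that band, unprivileged instances receive the favorable label and privileged instances the unfavorable one, while all other predictions keep the classifier's decision. The function name and the fixed `threshold` and `margin` values are hypothetical; in practice they would be tuned, for example on validation data, against a fairness metric.

```python
def reject_option_classify(scores, groups, unprivileged, threshold=0.5, margin=0.1):
    """Relabel uncertain predictions near the decision boundary in favor of the unprivileged group."""
    labels = []
    for score, group in zip(scores, groups):
        if abs(score - threshold) <= margin:
            # Critical region: the classifier is least certain here.
            labels.append(1 if group == unprivileged else 0)
        else:
            # Confident predictions keep the classifier's original decision.
            labels.append(1 if score > threshold else 0)
    return labels


# Hypothetical scores from a black-box model; "u" is the unprivileged group.
scores = [0.95, 0.55, 0.45, 0.20, 0.90, 0.55, 0.45, 0.10]
groups = ["p", "p", "p", "p", "u", "u", "u", "u"]
print(reject_option_classify(scores, groups, "u"))  # [1, 0, 0, 0, 1, 1, 1, 0]
```

Compared with plain thresholding at 0.5, which gives `[1, 1, 0, 0, 1, 1, 0, 0]`, only predictions inside the band can change (here, two of them flip), and the underlying model is never retrained.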

Continuous Pipeline Measurement

The probability distribution governing data can change over time, causing the training data distribution to drift away from the actual data distribution. This is known as dataset drift or dataset shift. It can also be difficult to obtain enough labeled training data to model the current data distribution correctly. Training models on mismatched data can severely degrade prediction accuracy and performance, so bias measurement and mitigation should be integrated into your continuous pipeline measurements, in the same way that functional testing is integrated with each application change.
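One way to wire this into a pipeline is a check that runs on every new batch, alongside the functional tests, and reports both input drift and a bias metric. The sketch below assumes a population stability index (PSI) for drift on one numeric feature and disparate impact (the favorable-outcome rate of the unprivileged group divided by that of the privileged group) for bias. The bin edges, the 0.2 PSI limit, and the 0.8 to 1.25 disparate-impact range are common rules of thumb used here as assumptions, and every function name is hypothetical.

```python
import math
from bisect import bisect_right


def psi(reference, current, edges, eps=1e-6):
    """Population stability index of `current` against `reference`, binned by `edges`."""
    def proportions(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            counts[bisect_right(edges, v)] += 1
        # Floor empty bins at eps so the logarithm stays defined.
        return [max(c / len(values), eps) for c in counts]

    ref, cur = proportions(reference), proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


def disparate_impact(y_pred, groups, unprivileged, privileged, favorable=1):
    """Favorable-outcome rate of the unprivileged group over that of the privileged group."""
    def rate(group):
        preds = [p for p, g in zip(y_pred, groups) if g == group]
        return sum(p == favorable for p in preds) / len(preds)

    privileged_rate = rate(privileged)
    # Guard against division by zero when the privileged group gets no favorable outcomes.
    return math.inf if privileged_rate == 0 else rate(unprivileged) / privileged_rate


def pipeline_check(reference, current, edges, y_pred, groups, unprivileged, privileged,
                   psi_limit=0.2, di_range=(0.8, 1.25)):
    """Return a list of alert messages; an empty list means the batch passed."""
    alerts = []
    drift = psi(reference, current, edges)
    if drift > psi_limit:
        alerts.append(f"dataset drift: PSI={drift:.3f}")
    di = disparate_impact(y_pred, groups, unprivileged, privileged)
    if not di_range[0] <= di <= di_range[1]:
        alerts.append(f"disparate impact out of range: {di:.2f}")
    return alerts


# Hypothetical batches: training-time feature values and a shifted new batch.
reference = [1, 2, 3, 4, 5, 6, 7, 8]
shifted = [6, 7, 8, 9, 10, 11, 12, 13]
edges = [2.5, 5.5]
groups = ["p", "p", "p", "p", "u", "u", "u", "u"]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
for alert in pipeline_check(reference, shifted, edges, y_pred, groups, "u", "p"):
    print(alert)
```

Failing the build on a non-empty alert list treats a fairness regression the same way as a failing functional test.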