13

Analyzing Fake News with Transformers

We were all born thinking that the Earth was flat. As babies, we crawled on flat surfaces. As kindergarten children, we played on flat playgrounds. In elementary school, we sat in flat classrooms. Then, our parents and teachers told us that the Earth was round and that the people on the other side of it were upside down. It took us quite a while to understand why they did not fall off the Earth. Even today, when we see a beautiful sunset, we still see the “sun set,” and not the Earth rotating away from the sun!

It takes time and effort to figure out what is fake news and what isn’t. Like children, we have to work our way through something we perceive as fake news.

This chapter will tackle some of the topics that create tensions. We will check the facts on topics such as climate change, gun control, and Donald Trump’s Tweets. We will analyze Tweets, Facebook posts, and other sources of information.

Our goal is certainly not to judge anybody or anything. Fake news involves both opinions and facts. News often depends on the perception of facts by local culture. We will provide ideas and tools to help others gather more information on a topic and find their way in the jungle of information we receive daily.

We will be focusing on ethical methods, not the performance of transformers. We will not be using a GPT-3 engine for this reason. We are not replacing human judgment. Instead, we are providing tools for humans to make their own judgments manually. GPT-3 engines have attained human-level performance for many tasks. However, we should leave moral and ethical decision making to humans.

We will begin by defining the path that leads us to react emotionally and rationally to fake news.

We will then define some methods to identify fake news with transformers and heuristics.

We will be using the resources we built in the previous chapters to understand and explain fake news. We will not judge. We will provide transformer models that explain the news. Some might prefer to create a universal absolute transformer model to detect and assert that a message is fake news.

I choose to educate users with transformers, not to lecture them. This approach is my opinion, not a fact!

This chapter covers the following topics:

- Cognitive dissonance

- Emotional reactions to fake news

- A behavioral representation of fake news

- A rational approach to fake news

- A fake news resolution roadmap

- Applying sentiment analysis transformer tasks to social media

- Analyzing gun control perceptions with NER and SRL

- Using information extracted by transformers to find reliable websites

- Using transformers to produce results for educational purposes

- How to read former President Trump’s Tweets with an objective but critical eye

Our first step will be to explore the emotional and rational reactions to fake news.

Emotional reactions to fake news

Human behavior has a tremendous influence on our social, cultural, and economic decisions. Our emotions influence our economy as much as, if not more than, rational thinking. Behavioral economics drives our decision-making process. We buy consumer goods that we physically need and satisfy our emotional desires. We might even buy a smartphone in the heat of the moment, although it exceeds our budget.

Our emotional and rational reactions to fake news depend on whether we think slowly or react quickly to incoming information. Daniel Kahneman described this process in his research and book, Thinking, Fast and Slow (2013).

He and Vernon L. Smith were awarded the Nobel Memorial Prize in Economic Sciences for behavioral economics research. Behavior drives decisions we previously thought were rational. Unfortunately, many of our decisions are based on emotions, not reason.

Let’s translate these concepts into a behavioral flowchart applied to fake news.

Cognitive dissonance triggers emotional reactions

Cognitive dissonance drives fake news up to the top ranks of Twitter, Facebook, and other social media platforms. If everybody agrees with the content of a Tweet, nothing will happen. If somebody writes a Tweet saying, “Climate change is important,” nobody will react.

We enter a state of cognitive dissonance when tensions build up between contradictory thoughts in our minds. As a result, we become nervous and agitated, and the tension wears us down like a short-circuit in a toaster.

We have many examples to think about. Is wearing a mask with COVID-19 necessary when we are outdoors? Are lockdowns a good or bad thing? Are the coronavirus vaccines effective? Or are coronavirus vaccines dangerous? Cognitive dissonance is like a musician that keeps making mistakes while playing a simple song. It drives us crazy!

The fake news syndrome increases cognitive dissonance exponentially! One expert will assert that vaccines are safe, and another that we need to be careful. One expert says that wearing a mask outside is useless, and another one asserts on a news channel that we must wear one! Each side accuses the other of fake news!

It appears that a significant portion of what one side calls fake news is the other side’s truth.

We are in 2022, and the US Republicans and Democrats are still unable to agree on national election rules in the wake of the 2020 presidential elections, or the organization of the upcoming elections.

We could go on and find scores of other topics by just opening one newspaper and then reading the opposite view in another! Nevertheless, some common-sense premises for this chapter can be drawn from these examples:

- Finding a transformer model that automatically detects fake news makes no sense. In the world of social media and multicultural expression, each group feels that it knows the truth and that the other group is expressing fake news.

- Trying to express our view as being the truth from one culture to another makes no sense. In a global world, cultures vary in each country, each continent, and everywhere in social media.

- Fake news as an absolute is a myth.

- We need to find a better definition of fake news.

My opinion (not a fact, of course!) is that fake news is a state of cognitive dissonance that can only be resolved by cognitive reasoning. Thus, resolving the problem of fake news is exactly like trying to resolve a conflict between two parties or within our own minds.

In this chapter and life, my recommendation is to analyze each conflictual tension by deconstructing the conflict and ideas with transformer models. We are not “combating fake news,” “finding inner peace,” or pretending to use transformers to find “the absolute truth to oppose fake news.”

We use transformers to obtain a deeper understanding of a sequence of words (a message) to form a more profound and broader opinion on a topic.

Once that is done, let the lucky user of transformer models obtain a better vision and opinion.

To do this, I designed the chapter as a classroom exercise we can use for ourselves and others. Transformers are a great way to deepen our understanding of language sequences, form broader opinions, and develop our cognitive abilities.

Let’s start by seeing what happens when somebody posts a conflictual Tweet.

Analyzing a conflictual Tweet

The following Tweet is a message posted on Twitter (I paraphrased it). The Tweets shown in this chapter are in raw dataset format, not the Twitter interface display. You can be sure that many people would disagree with the content if a leading political figure or famous actor tweeted it:

Climate change is bogus. It's a plot by the liberals to take the economy down.

It would trigger emotional reactions. Tweets would pile up on all sides. It would go viral and trend!

Let’s run the Tweet on transformer tools to understand how this Tweet could create a cognitive dissonance storm in somebody’s mind.

Open Fake_News.ipynb, the notebook we will be using in this section.

If you have trouble running this notebook on Google Colab, try running the Fake_News_Analsis_with_ChatGPT.ipynb notebook instead.

We will begin with resources from the Allen Institute for AI. We will run the RoBERTa transformer model we used for sentiment analysis in Chapter 12, Detecting Customer Emotions to Make Predictions.

We will first install allennlp-models:

!pip install allennlp==1.0.0 allennlp-models==1.0.0

AllenNLP releases new versions regularly. Version 2.4.0 exists at the time of writing but adds nothing of value to the examples in this chapter. Stochastic algorithms, or models that have been updated, can produce different outputs from one run to another.

We then run the next cell with Bash to analyze the output of the Tweet in detail (information on the model and the output):

!echo '{"sentence":"Climate change is bogus. It'\''s a plot by the liberals to take the economy down."}' | allennlp predict https://storage.googleapis.com/allennlp-public-models/sst-roberta-large-2020.06.08.tar.gz -

The output shows that the Tweet is negative. The positive value is near 0, and the negative value is near 1:

"probs": [0.0008486526785418391, 0.999151349067688]

The output might vary from one run to the other since transformers are stochastic algorithms.
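The two values in probs can be turned into a label with a few lines of Python. The sketch below assumes, based on the outputs shown in this chapter, that the first value is the positive score and the second is the negative score. label_from_probs is a hypothetical helper, not part of AllenNLP:

```python
import json

# Assumption: index 0 is the positive score and index 1 the negative score,
# as suggested by the outputs shown in this chapter.
LABELS = ("positive", "negative")

def label_from_probs(probs):
    """Return the label with the highest probability and that probability."""
    best = max(range(len(probs)), key=lambda i: probs[i])
    return LABELS[best], probs[best]

# The "probs" line copied from the output above, wrapped in braces to form JSON.
output = json.loads('{"probs": [0.0008486526785418391, 0.999151349067688]}')
label, score = label_from_probs(output["probs"])
print(label, round(score, 4))
```

The same helper can be applied to any other probs line in this chapter, as long as the order of the two scores stays the same.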

We will now go to https://allennlp.org/ to get a visual representation of the analysis.

The output might change from one run to another. Transformer models are continuously trained and updated. Our goal in this chapter is to focus on the reasoning of transformer models.

We select Sentiment Analysis (https://demo.allennlp.org/sentiment-analysis) and choose the RoBERTa large model to run the analysis.

We obtain the same negative result. However, we can investigate further and see what words influenced RoBERTa’s decision.

Go to Model Interpretations.

Interpreting the model will provide insights into how a result was obtained. We can choose one or look into three options:

- Simple Gradient Visualization: This approach provides two visualizations. The first one computes the gradient of the score of a class related to the input. The second one is a saliency (main features) map inferred from the class and input.

- Integrated Gradient Visualization: This model requires no changes to a neural network. The motivation is to design calls to the gradient used to generate an attribution of the predictions of a neural network to its inputs.

- Smooth Gradient Visualization: This approach computes the gradients using the output predictions and the input. The goal is to identify the features of the input. However, noise is added to improve the interpretation.
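To make the idea behind the simple gradient option more concrete, here is a toy sketch in plain Python. It multiplies each token's embedding by the gradient of the class score with respect to that embedding, sums over the embedding dimensions, and normalizes the absolute values so that they add up to 1. The embeddings and gradients are invented numbers for illustration only. A real run would take them from the RoBERTa model, and AllenNLP's own implementation may differ in its details:

```python
def simple_gradient_saliency(embeddings, gradients):
    """Score each token by |sum(gradient * embedding)|, normalized to sum to 1."""
    raw = [
        abs(sum(g * e for g, e in zip(grad, emb)))
        for emb, grad in zip(embeddings, gradients)
    ]
    total = sum(raw) or 1.0  # avoid dividing by zero when every score is 0
    return [value / total for value in raw]

# Hypothetical 2-dimensional embeddings and gradients (not real model values).
tokens = ["climate", "is", "bogus"]
embeddings = [[0.1, 0.0], [0.0, 0.2], [0.9, -0.5]]
gradients = [[1.0, -1.0], [1.0, -1.0], [1.0, -1.0]]

saliency = simple_gradient_saliency(embeddings, gradients)
top_token = tokens[saliency.index(max(saliency))]
print(list(zip(tokens, saliency)), top_token)
```

The token with the largest normalized score is the one the visualization would highlight most strongly.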

In this section, go to Model Interpretations and then click on Simple Gradient Visualization and on Interpret Prediction to obtain the following representation:

Figure 13.1: Visualizing the top 3 most important words

is + bogus + plot mostly influenced the negative prediction.

At this point, you may be wondering why we are looking at such a simple example to explain cognitive dissonance. The explanation comes from the following Tweet.

A staunch Republican wrote the first Tweet. Let’s call the member Jaybird65. To his surprise, a fellow Republican tweeted the following Tweet:

I am a Republican and think that climate change consciousness is a great thing!

This Tweet came from a member we will call Hunt78. Let’s run this sentence in Fake_News.ipynb:

!echo '{"sentence":"I am a Republican and think that climate change consciousness is a great thing!"}' | allennlp predict https://storage.googleapis.com/allennlp-public-models/sst-roberta-large-2020.06.08.tar.gz -

The output is positive, of course:

"probs": [0.9994876384735107, 0.0005123814917169511]

A cognitive dissonance storm is building up in Jaybird65's mind. He likes Hunt78 but disagrees. His mind storm is intensifying! If you read the subsequent Tweets that ensue between Jaybird65 and Hunt78, you would discover some surprising facts that hurt Jaybird65's feelings:

- Jaybird65 and Hunt78 obviously know each other.

- If you go to their respective Twitter accounts, you will see that they are both hunters.

- You can see that they are both staunch Republicans.

Jaybird65's initial Tweet came from his reaction to an article in the New York Times stating that climate change was destroying the planet.

Jaybird65 is quite puzzled. He can see that Hunt78 is a Republican like him. He is also a hunter. So how can Hunt78 believe in climate change?

This Twitter thread goes on for a massive number of raging Tweets.

However, we can see that the roots of fake news discussions lie in emotional reactions to the news. A rational approach to climate change would simply be:

- No matter what the cause is, the climate is changing.

- We do not need to take the economy down to change humans.

- We need to continue to build electric cars, more walking space in large cities, and better agricultural habits. We just need to do business in new ways that will most probably generate revenue.

But emotions are strong in humans!

Let’s represent the process that leads from news to emotional and rational reactions.

Behavioral representation of fake news

Fake news starts with emotional reactions, builds up, and often leads to personal attacks.

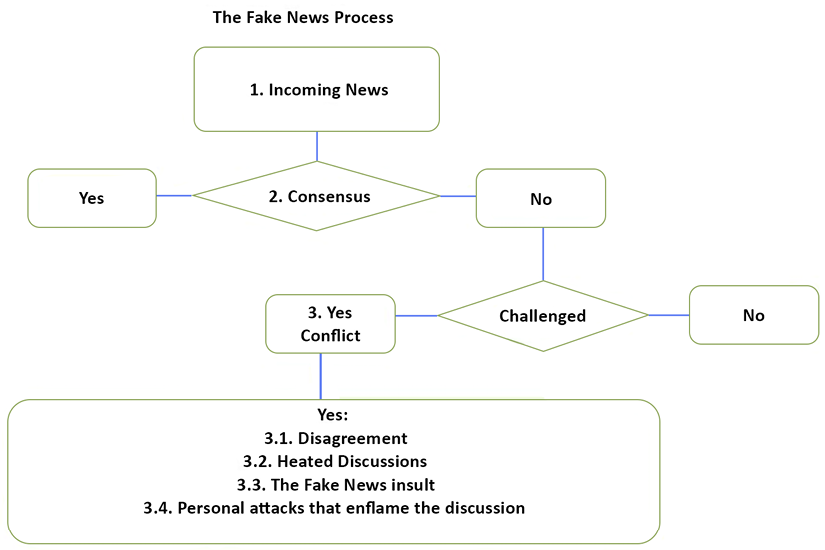

Figure 13.2 represents the three-phase emotional reaction path to fake news when cognitive dissonance clogs our thinking process:

Phase 1: Incoming News

Two persons or groups of persons react to the news they obtained through their respective media: Facebook, Twitter, other social media, TV, radio, websites. Each source of information contains biased opinions.

Phase 2: Consensus

The two persons or groups of persons can agree or disagree. If they disagree, we will enter phase 3, during which the conflict might rage.

If they agree, the consensus stops the heat from building up, and the news is accepted as real news. However, even if all parties believe the news they are receiving is not fake, it does not mean that it is not fake. Here are some examples showing that news labeled as not fake can still be fake:

- In the early 12th century, most people in Europe agreed that Earth was the center of the universe and that the solar system rotated around the Earth.

- In 1900, most people believed that there would never be such a thing as an airplane that would fly over oceans.

- In January 2020, most Europeans believed that COVID-19 was a virus impacting only China and not a global pandemic.

The bottom line is that a consensus between two parties or even a society as a whole does not mean that the incoming news is true or false. If two parties disagree, this will lead to a conflict:

Figure 13.2: Representation of the path from news to a fake news conflict

Let’s face it. On social media, members usually converge with others that have the same ideas and rarely change their minds no matter what. This representation shows that a person will often stick to their opinion expressed in a Tweet, and the conflict escalates as soon as somebody challenges their message!

Phase 3: Conflict

A fake news conflict can be divided into four phases:

- 3.1: The conflict begins with a disagreement. Each party will Tweet or post messages on Facebook or other platforms. After a few exchanges, the conflict might wear out because each party is not interested in the topic.

- 3.2: If we go back to the climate change discussion between Jaybird65 and Hunt78, we know things can get nasty. The conversation is heating up!

- 3.3: At one point, inevitably, the arguments of one party will become fake news. Jaybird65 will get angry, show it in numerous Tweets, and say that climate change due to humans is fake news. Hunt78 will get angry and say that denying humans’ contribution to climate change is fake news.

- 3.4: These discussions often end in personal attacks. Godwin’s law often enters the conversation even if we don’t know how it got there. Godwin’s law states that as an online discussion grows longer, a comparison involving Hitler or the Nazis becomes increasingly likely. In practice, one party finds the worst reference possible to describe the other party at some point in the conversation. It sometimes comes out as “You liberals are like Hitler trying to force our economy down with climate change.” This type of message can be seen on Twitter, Facebook, and other platforms. It even appears in real-time chats during presidential speeches on climate change.

Is there a rational approach to these discussions that could soothe both parties, calm them down, and at least reach a middle-ground consensus to move forward?

Let’s try to build a rational approach with transformers and heuristics.

A rational approach to fake news

Transformers are among the most powerful NLP tools available. This section will first define a method that can take two parties engaged in conflict over fake news from an emotional level to a rational level.

We will then use transformer tools and heuristics. We will run transformer samples on gun control and former President Trump’s Tweets during the COVID-19 pandemic. We will also describe heuristics that could be implemented with classical functions.

You can implement these transformer NLP tasks or other tasks of your choice. In any case, the roadmap and method can help teachers, parents, friends, co-workers, and anybody seeking the truth. Thus, your work will always be worthwhile!

Let’s begin with the roadmap of a rational approach to fake news that includes transformers.

Defining a fake news resolution roadmap

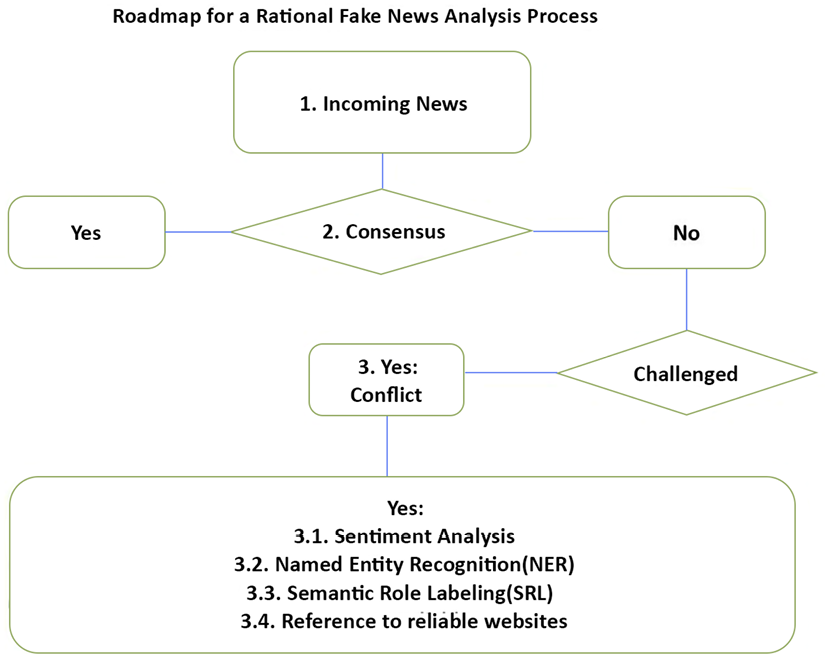

Figure 13.3 defines a roadmap for a rational fake news analysis process. The process contains transformer NLP tasks and traditional functions:

Figure 13.3: Going from emotional reactions to fake news to rational representations

We see that a rational process will nearly always begin once an emotional reaction has begun. The rational process must kick in as soon as possible to avoid building up emotional reactions that could interrupt the discussion.

Phase 3 now contains four tools:

- 3.1: Sentiment Analysis to analyze the top-ranking “emotional” positive or negative words. We will use AllenNLP resources to run a RoBERTa large transformer in our Fake_News.ipynb notebook. We will use AllenNLP’s visual tools to visualize the keywords and explanations. We introduced sentiment analysis in Chapter 12, Detecting Customer Emotions to Make Predictions.

- 3.2: Named Entity Recognition (NER) to extract entities from social media messages for Phase 3.4. We described NER in Chapter 11, Let Your Data Do the Talking: Story, Questions, and Answers. We will use Hugging Face’s BERT transformer model for the task. In addition, we will use AllenNLP.org’s visual tools to visualize the entities and explanations.

- 3.3: Semantic Role Labeling (SRL) to label verbs from social media messages for Phase 3.4. We described SRL in Chapter 10, Semantic Role Labeling with BERT-Based Transformers. We will use AllenNLP’s BERT model in Fake_News.ipynb. We will use AllenNLP.org’s visual tools to visualize the output of the labeling task.

- 3.4: References to reliable websites will be described to show how classical coding can help.

Let’s begin with gun control.

The gun control debate

The Second Amendment of the Constitution of the United States asserts the following rights:

A well regulated Militia, being necessary to the security of a free State, the right of the people to keep and bear Arms, shall not be infringed.

America has been divided on this subject for decades:

- On the one hand, many argue that it is their right to bear firearms, and they do not want to endure gun control. They argue that it is fake news to contend that possessing weapons creates violence.

- On the other hand, many argue that bearing firearms is dangerous and that without gun control, the US will remain a violent country. They argue that it’s fake news to contend that it is not dangerous to carry weapons.

We need to help each party. Let’s begin with sentiment analysis.

Sentiment analysis

If you read Tweets, Facebook messages, YouTube chats during a speech, or any other social media, you will see that the parties are fighting a raging battle. You do not need a TV show. You can just eat your popcorn as the Tweet battles tear the parties apart!

Let’s take a Tweet from one side and a Facebook message from the opposing side. I changed the members’ names and paraphrased the text (not a bad idea considering the insults in the messages). Let’s start with the pro-gun Tweet:

Pro-guns analysis

This Tweet is the honest opinion of a person:

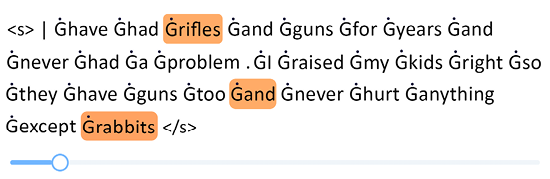

Afirst78: I have had rifles and guns for years and never had a problem. I raised my kids right so they have guns too and never hurt anything except rabbits.

Let’s run this in Fake_News.ipynb:

!echo '{"sentence": "I have had rifles and guns for years and never had a problem. I raised my kids right so they have guns too and never hurt anything except rabbits."}' | allennlp predict https://storage.googleapis.com/allennlp-public-models/sst-roberta-large-2020.06.08.tar.gz -

The prediction is positive:

prediction: {"logits": [1.9383275508880615, -1.6191326379776], "probs": [0.9722791910171509, 0.02772079035639763]
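The probs values in this output are consistent with a softmax applied to the logits. The short sketch below recomputes them from the logits copied from the prediction. The softmax helper is written here for illustration and is not taken from the notebook:

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Logits copied from the prediction shown above.
logits = [1.9383275508880615, -1.6191326379776]
probs = softmax(logits)
print(probs)
```

The recomputed values should agree with the reported probs to within rounding, which is a quick way to check that the first score is the one the model favors.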

We will now visualize the result on AllenNLP. Simple Gradient Visualization provides an explanation:

Figure 13.4: Simple Gradient Visualization of a sentence

The explanation shows that sentiment analysis on the Tweet by Afirst78 highlights rifles + and + rabbits.

Results may vary at each run or over time. This is because transformer models are continuously trained and updated. However, the focus in this chapter is on the process, not a specific result.

We will pick up ideas and functions at each step. Fake_News_FUNCTION_1 is the first function in this section:

Fake_News_FUNCTION_1: rifles + and + rabbits can be extracted and noted for further analysis. We can see that “rifles” are not “dangerous” in this example.

We will now analyze NYS99's view that guns must be controlled.

Gun control analysis

NYS99: "I have heard gunshots all my life in my neighborhood, have lost many friends, and am afraid to go out at night."

Let’s first run the analysis in Fake_News.ipynb:

!echo '{"sentence": "I have heard gunshots all my life in my neighborhood, have lost many friends, and am afraid to go out at night."}' | allennlp predict https://storage.googleapis.com/allennlp-public-models/sst-roberta-large-2020.06.08.tar.gz -

The result is naturally negative:

prediction: {"logits": [-1.3564586639404297, 0.5901418924331665], "probs": [0.12492450326681137, 0.8750754594802856]

Let’s find the keywords using AllenNLP online. We run the sample and can see that Smooth Gradient Visualization highlights the following:

Figure 13.5: Smooth Gradient Visualization of a sentence

The keyword afraid stands out for function 2 of this section. We now know that “afraid” is associated with “guns.”

We can see that the model has problems interpreting these cognitive dissonances. Our human critical thinking is still necessary!

Fake_News_FUNCTION_2: afraid and guns (the topic) can be extracted and noted for further analysis.

If we now put our two functions side by side, we can clearly understand why the two parties are fighting each other:

- Fake_News_FUNCTION_1: rifles + and + rabbits. Afirst78 probably lives in a mid-western state in the US. Many of these states have small populations, are very quiet, and enjoy low crime rates. Afirst78 may never have traveled to a major city, enjoying the pleasure of a quiet life in the country.

- Fake_News_FUNCTION_2: afraid + the topic guns. NYS99 probably lives in a big city or a greater area of a major US city. Crime rates are often high, and violence is a daily phenomenon. NYS99 may never have traveled to a mid-western state and seen how Afirst78 lives.

These two honest but strong views show why we need to implement solutions like those described in this chapter.

Better information is the key to fewer fake news battles.

We will follow our process and apply named entity recognition to our sentence.

Named entity recognition (NER)

This chapter shows that by using several transformer methods, the user will benefit from a broader perception of a message through different angles. An HTML page could sum up this chapter’s transformer methods and even contain other transformer tasks in production mode.

We must now apply our process to the Tweet and Facebook message, although we can see no entities in the messages. However, the program does not know that. We will only run the first message to illustrate this step of the process.

We will first install Hugging Face transformers:

!pip install -q transformers

from transformers import pipeline

from transformers import AutoTokenizer, AutoModelForSequenceClassification,AutoModel

Now, we can run the first message:

nlp_token_class = pipeline('ner')

nlp_token_class('I have had rifles and guns for years and never had a problem. I raised my kids right so they have guns too and never hurt anything except rabbits.')

The output produces no result since there are no entities. However, this doesn’t mean it should be taken out of the pipeline. Another sentence might contain the name of the location of a person providing clues on the culture in that area.
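Since the result can be an empty list, any code that consumes the NER output should handle that case. Here is a minimal sketch. group_entities is a hypothetical helper, and the sample result is hand-written for illustration, assuming that each item returned by the pipeline is a dictionary with word, entity, and score keys:

```python
from collections import defaultdict

def group_entities(ner_output, min_score=0.5):
    """Group recognized words by entity type, e.g. {'LOC': ['Montana']}."""
    grouped = defaultdict(list)
    for item in ner_output:
        if item["score"] < min_score:
            continue  # skip low-confidence predictions
        # Strip the B-/I- prefix so 'B-LOC' and 'I-LOC' share one key.
        entity_type = item["entity"].split("-")[-1]
        grouped[entity_type].append(item["word"])
    return dict(grouped)

# An empty list, as produced for the pro-gun message above.
print(group_entities([]))

# Hand-written sample (not real model output) for a message naming a place.
sample = [{"word": "Montana", "entity": "B-LOC", "score": 0.99}]
print(group_entities(sample))
```

An empty dictionary simply means that this step contributes nothing for that message, and the pipeline can move on to the next task.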

Let’s check the model we are using before we move on:

nlp_token_class.model.config

The output shows that the model uses 9 labels and a hidden size of 1,024, which is the dimension of the representations that pass through the attention layers:

BertConfig {

"_num_labels": 9,

"architectures": [

"BertForTokenClassification"

],

"attention_probs_dropout_prob": 0.1,

"directionality": "bidi",

"hidden_act": "gelu",

"hidden_dropout_prob": 0.1,

"hidden_size": 1024,

"id2label": {

"0": "O",

"1": "B-MISC",

"2": "I-MISC",

"3": "B-PER",

"4": "I-PER",

"5": "B-ORG",

"6": "I-ORG",

"7": "B-LOC",

"8": "I-LOC"

},

We are using a BERT 24-layer transformer model. If you wish to explore the architecture, run nlp_token_class.model.
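The 9 labels follow the usual B-/I- tagging convention: one O label for tokens outside any entity, plus a beginning (B-) and an inside (I-) label for each entity type. The following sketch uses the id2label mapping shown in the configuration output to check that arithmetic:

```python
# The id2label mapping copied from the configuration output above.
id2label = {
    0: "O", 1: "B-MISC", 2: "I-MISC", 3: "B-PER", 4: "I-PER",
    5: "B-ORG", 6: "I-ORG", 7: "B-LOC", 8: "I-LOC",
}

# Collect the entity types by stripping the B-/I- prefixes.
entity_types = sorted(
    {label.split("-")[1] for label in id2label.values() if label != "O"}
)
print(entity_types)

# 1 "O" label + 2 labels (B- and I-) per entity type = 9 labels.
assert 1 + 2 * len(entity_types) == len(id2label)
```

The model can therefore recognize four kinds of entities: locations, miscellaneous names, organizations, and persons.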

We will now run SRL on the messages.

Semantic Role Labeling (SRL)

We will continue to run Fake_News.ipynb cell by cell in the order found in the notebook. We will examine both points of view.

Let’s start with a pro-gun perspective.

Pro-guns SRL

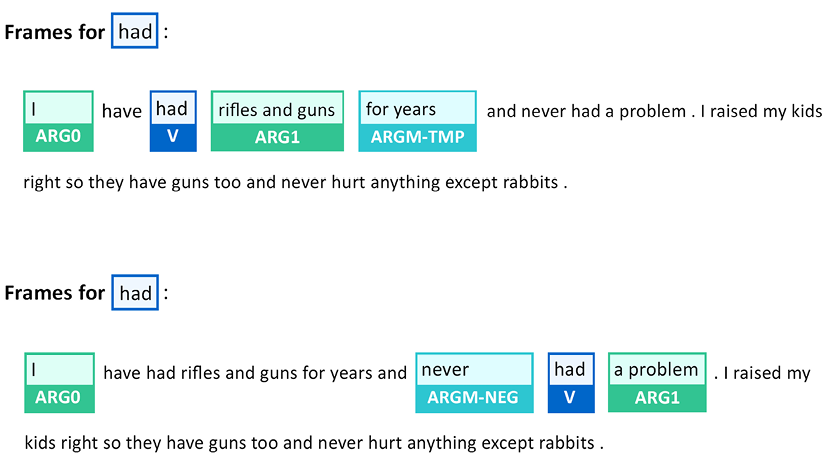

We will first run the following cell in Fake_News.ipynb:

!echo '{"sentence": "I have had rifles and guns for years and never had a problem. I raised my kids right so they have guns too and never hurt anything except rabbits."}' | allennlp predict https://storage.googleapis.com/allennlp-public-models/bert-base-srl-2020.03.24.tar.gz -

The output is very detailed and can be useful if you wish to investigate or parse the labels in detail, as shown in this excerpt:

prediction: {"verbs": [{"verb": "had", "description": "[ARG0: I] have [V: had] [ARG1: rifles and guns] [ARGM-TMP: for years] and never had a problem ...

Now let’s go into visual detail on AllenNLP in the Semantic Role Labeling section. We first run the SRL task for this message. The first verb, had, shows that Afirst78 is an experienced gun owner:

Figure 13.6: SRL for the verb “had”

The arguments of had sum up Afirst78's experience: I + rifles and guns + for years.

The second frame for had adds the information I + never + had + a problem.

The arguments of raised display Afirst78's parental experience:

Figure 13.7: SRL verb and arguments for the verb “raised”

The arguments explain many pro-gun positions: my kids + …have guns too and never hurt anything.

The results may vary from one run to another or when the model is updated, but the process remains the same.

We can add what we found here to our collection of functions with some parsing:

Fake_News_FUNCTION_3: I + rifles and guns + for years

Fake_News_FUNCTION_4: my kids + have guns too and never hurt anything
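The parsing step could be sketched as follows. The code reads the bracketed description string from the SRL output excerpt shown earlier in this section and turns it into a dictionary of labels and text. parse_srl_description is a hypothetical helper, and the regular expression assumes that descriptions always use the [LABEL: text] form seen in that excerpt:

```python
import re

# Matches bracketed spans such as "[ARG1: rifles and guns]".
SPAN = re.compile(r"\[([^\]:]+): ([^\]]+)\]")

def parse_srl_description(description):
    """Return a {label: text} dictionary for one SRL frame description."""
    return {label: text for label, text in SPAN.findall(description)}

# The part of the description shown in the SRL output excerpt.
description = "[ARG0: I] have [V: had] [ARG1: rifles and guns] [ARGM-TMP: for years]"
frame = parse_srl_description(description)

# Keep the arguments of interest, in the order used for the function.
function_3 = [frame["ARG0"], frame["ARG1"], frame["ARGM-TMP"]]
print(" + ".join(function_3))
```

Words outside the brackets, such as have, are ignored, so only the labeled arguments end up in the function.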

Now let’s explore the gun control message.

Gun control SRL

We will first run the Facebook message in Fake_News.ipynb. We will just continue to run the notebook cell by cell in the order they were created in the notebook:

!echo '{"sentence": "I have heard gunshots all my life in my neighborhood, have lost many friends, and am afraid to go out at night."}' | allennlp predict https://storage.googleapis.com/allennlp-public-models/bert-base-srl-2020.03.24.tar.gz -

The result labels the key verbs in the sequence in detail, as shown in the following excerpt:

prediction: {"verbs": [{"verb": "heard", "description": "[ARG0: I] have [V: heard] [ARG1: gunshots all my life in my neighborhood]"

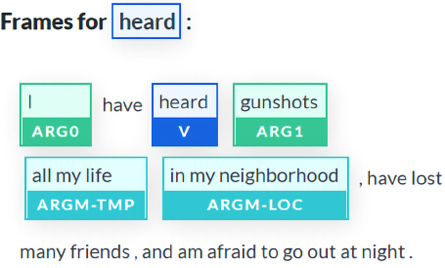

We continue to apply our process, go to AllenNLP, and then to the Semantic Role Labeling section. We enter the sentence and run the transformer model. The verb heard shows the tough reality of this message:

Figure 13.8: SRL representation of the verb “heard”

We can quickly parse the words for our fifth function:

Fake_News_FUNCTION_5: heard + gunshots + all my life

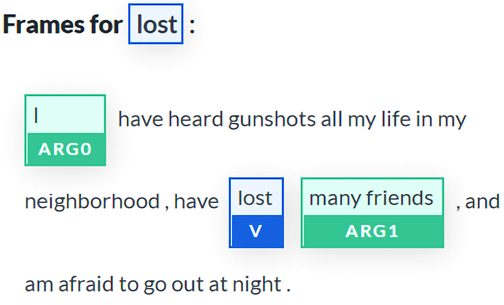

The verb lost shows significant arguments related to it:

Figure 13.9: SRL representation of the verb “lost”

We have what we need for our sixth function:

Fake_News_FUNCTION_6: lost + many + friends

It is good to suggest reference sites to the user once different transformer models have clarified each aspect of a message.

Reference sites

We have run the transformers on NLP tasks and described traditional heuristic hard coding that needs to be developed to parse the data and generate six functions.

Bear in mind that the results may vary from one run to another. The six functions were generated at different times and provided slightly different results from the previous section. However, the main ideas remain the same. Let’s now focus on these six functions.

- Pro-guns: Fake_News_FUNCTION_1: never + problem + guns

- Gun control: Fake_News_FUNCTION_2: heard + afraid + guns

- Pro-guns: Fake_News_FUNCTION_3: I + rifles and guns + for years

- Pro-guns: Fake_News_FUNCTION_4: my kids + have guns + never hurt anything

- Gun control: Fake_News_FUNCTION_5: heard + gunshots + all my life

- Gun control: Fake_News_FUNCTION_6: lost + many + friends

Let’s reorganize the list and separate both perspectives and draw some conclusions to decide our actions.

Pro-guns and gun control

The pro-gun arguments are honest, but they show that there is a lack of information on what is going on in major cities in the US:

- Pro-guns: Fake_News_FUNCTION_1: never + problem + guns

- Pro-guns: Fake_News_FUNCTION_3: I + rifles and guns + for years

- Pro-guns: Fake_News_FUNCTION_4: my kids + have guns + never hurt anything

The gun control arguments are honest, but they show that there is a lack of information on how large quiet areas of the Midwest can be:

- Gun control: Fake_News_FUNCTION_2: heard + afraid + guns

- Gun control: Fake_News_FUNCTION_5: heard + gunshots + all my life

- Gun control: Fake_News_FUNCTION_6: lost + many + friends

Each function can be developed to inform the other party.

For example, let’s take FUNCTION1 and express it in pseudocode:

Def FUNCTION1:

call FUNCTIONs 2+5+6 Keywords and simplify

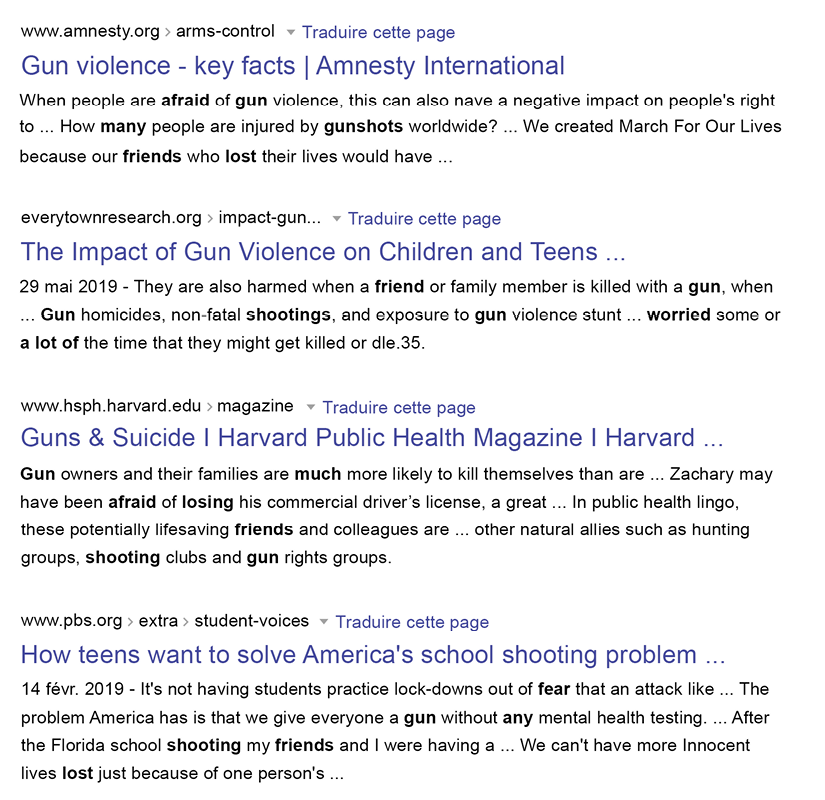

Google search=afraid guns lost many friends gunshots

The goal of the process is:

- First, run transformer models to deconstruct and explain the messages. Using an NLP transformer is like a mathematical calculator. It can produce good results, but it takes a free-thinking human mind to interpret them!

- Then, ask a human user trained in NLP to be proactive, search, and read more widely.

Transformer models help users understand messages more deeply; they don’t think for them! We are trying to help users, not lecture or brainwash them!

Parsing would be required to process the results of the functions. However, if we had hundreds of social media messages, we could automatically let our program do the whole job.
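As a rough illustration of how a program could automate this step, the sketch below builds a search query for one side from the keywords of the other side's functions. The FUNCTIONS table copies the six functions listed in this section. The stop-word list, the build_query helper, and the way the search URL is assembled are assumptions made for this example, so the resulting query will not match the hand-simplified one word for word:

```python
from urllib.parse import urlencode

# Keywords of the six functions, copied from the list in this section.
FUNCTIONS = {
    1: ("pro-guns", ["never", "problem", "guns"]),
    2: ("gun control", ["heard", "afraid", "guns"]),
    3: ("pro-guns", ["I", "rifles and guns", "for years"]),
    4: ("pro-guns", ["my kids", "have guns", "never hurt anything"]),
    5: ("gun control", ["heard", "gunshots", "all my life"]),
    6: ("gun control", ["lost", "many", "friends"]),
}

# Assumption: a tiny hand-picked stop-word list stands in for "simplify".
STOP_WORDS = {"i", "my", "all", "for", "have", "and"}

def build_query(side):
    """Join the de-duplicated keywords of every function on the given side."""
    seen, words = set(), []
    for _, (owner, keywords) in sorted(FUNCTIONS.items()):
        if owner != side:
            continue
        for word in " ".join(keywords).lower().split():
            if word not in STOP_WORDS and word not in seen:
                seen.add(word)
                words.append(word)
    return " ".join(words)

# FUNCTION1 informs the pro-gun side with the gun control keywords (2+5+6).
query = build_query("gun control")
url = "https://www.google.com/search?" + urlencode({"q": query})
print(query)
print(url)
```

Calling build_query("pro-guns") produces the mirror-image query for the gun control side. With hundreds of messages, the same loop could run over every extracted function instead of the six entered by hand.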

The links will change as Google modifies its searches. However, the first links that appear are interesting to show to pro-gun advocates:

Figure 13.10: Guns and violence

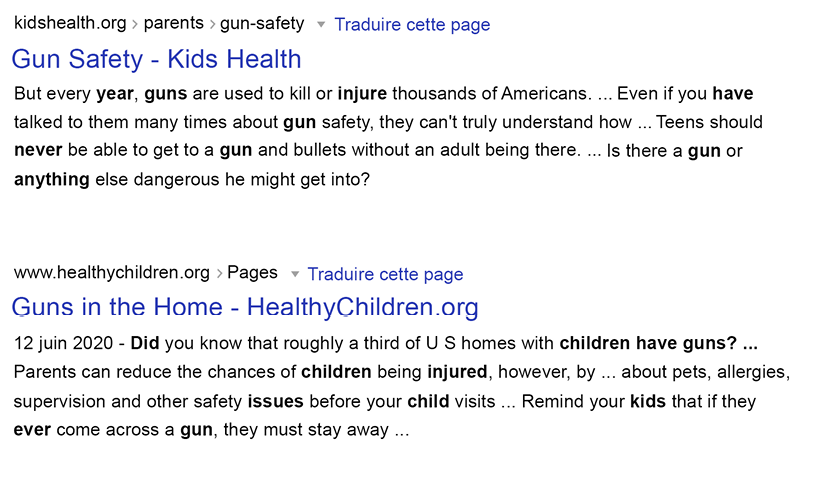

Let’s imagine we are running a search on behalf of gun control advocates with the following pseudocode:

Def FUNCTION2:

call FUNCTIONs 1+3+4 Keywords and simplify

Google search=never problem guns for years kids never hurt anything

The Google search returned no clear positive results in favor of pro-gun advocates. The most interesting ones are neutral and educational:

Figure 13.11: Gun safety

You could run automatic searches on Amazon’s bookstore, magazines, and other educational material.

Most importantly, it is essential for people with opposing ideas to talk to each other without getting into a fight. Understanding each other is the best way to develop empathy on both sides.

One might be tempted to trust social media companies. I recommend never letting a third party act as a proxy for your own thinking. Use transformer models to deconstruct messages but remain proactive!

A consensus on this topic could be to agree on following safety guidelines for gun possession. For example, one can choose either not to have guns at home or to lock them up safely so that children do not have access to them.

Let’s move on to COVID-19 and former President Trump’s Tweets.

COVID-19 and former President Trump’s Tweets

No matter your political opinion, there is so much being said by Donald Trump and about Donald Trump that it would take a book in itself to analyze all of the information! This is a technical, not a political book, so we will analyze the Tweets scientifically.

We described an educational approach to fake news in the Gun control section of this chapter. We do not need to go through the whole process again.

We implemented and ran AllenNLP’s SRL task with a BERT model in our Fake_News.ipynb notebook in the Gun control section.

In this section, we will focus on the logic of fake news. We will run the BERT model on SRL and visualize the results on AllenNLP’s website.

Now, let’s go through some presidential Tweets on COVID-19.

Semantic Role Labeling (SRL)

SRL is an excellent educational tool for all of us. We tend to read Tweets passively and listen to what others say about them. Breaking messages down with SRL is a good way to develop the analytical skills needed to distinguish fake from accurate information on social media.

I recommend using SRL transformers for educational purposes in class. A young student can enter a Tweet and analyze each verb and its arguments. It could help younger generations become active readers on social media.

We will first analyze a relatively uncontroversial Tweet and then a conflictual Tweet.

Let’s analyze the latest Tweet found on July 4 while writing this book. I took the name of the person who is referred to as a “Black American” out and paraphrased some of the former President’s text:

X is a great American, is hospitalized with coronavirus, and has requested prayer. Would you join me in praying for him today, as well as all those who are suffering from COVID-19?

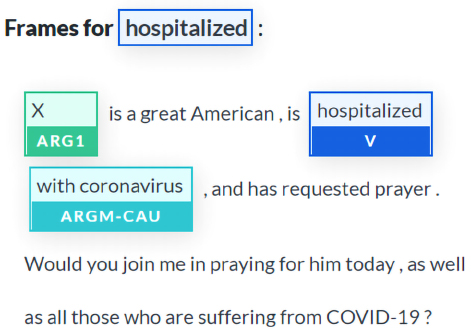

Let’s go to AllenNLP’s Semantic Role Labeling section, run the sentence, and look at the result. The verb hospitalized shows that the author of the Tweet is staying close to the facts:

Figure 13.12: SRL arguments of the verb “hospitalized”

The message is simple: X + hospitalized + with coronavirus.
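To see how such a message can be read off an SRL result, here is a minimal sketch. It assumes the SRL output provides one BIO-style tag per word for each verb (for example, `B-ARG1`, `I-ARG1`, `B-V`, `O`). The function name `srl_frame` is hypothetical, and the tags below are hand-written for illustration. They are not the actual output of the model.

```python
def srl_frame(words, tags):
    """Group BIO-tagged words into an ordered list of (role, text) spans."""
    spans = []
    for word, tag in zip(words, tags):
        if tag == "O":
            continue  # word is outside every argument of this verb
        prefix, role = tag.split("-", 1)
        # Start a new span on "B-" or when the role changes unexpectedly.
        if prefix == "B" or not spans or spans[-1][0] != role:
            spans.append([role, [word]])
        else:
            spans[-1][1].append(word)
    return [(role, " ".join(tokens)) for role, tokens in spans]

# Hand-written tags for illustration only; NOT real model output.
words = ["X", "is", "hospitalized", "with", "coronavirus"]
tags = ["B-ARG1", "O", "B-V", "B-ARG2", "I-ARG2"]

frame = srl_frame(words, tags)
message = " + ".join(text for _, text in frame)
print(message)
```

Reducing a sentence to its verb and arguments in this way makes it easier to compare what a Tweet states with what it only implies.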

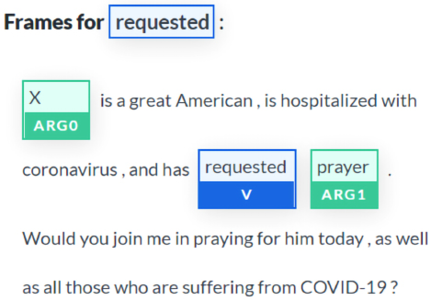

The verb requested shows that the message is becoming political:

Figure 13.13: SRL arguments of the verb “requested”

We don’t know whether the person asked the former President to pray or whether the former President decided to make himself the center of the request.

A good exercise would be to display an HTML page and ask the users what they think. For example, the users could be asked to look at the results of the SRL task and answer the following two questions:

- Was former President Trump asked to pray, or did he divert a request made to others for political reasons?

- Is the fact that former President Trump states that he was indirectly asked to pray for X fake news or not?
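Such a page could be generated with a few lines of Python. The following is a minimal sketch, assuming the SRL results have already been reduced to short text lines. The function name `build_quiz_page` and the page layout are hypothetical, and the frame strings are hand-written from the sentence rather than copied from the model output.

```python
from html import escape

def build_quiz_page(tweet, frames, questions):
    """Return a minimal HTML page showing SRL frames and open questions."""
    frame_items = "\n".join(f"<li>{escape(f)}</li>" for f in frames)
    question_items = "\n".join(
        f'<li>{escape(q)}<br><textarea name="q{i}"></textarea></li>'
        for i, q in enumerate(questions, start=1)
    )
    return (
        "<!DOCTYPE html>\n<html><body>\n"
        f"<h1>What do you think?</h1>\n<p>{escape(tweet)}</p>\n"
        f"<h2>SRL results</h2>\n<ul>\n{frame_items}\n</ul>\n"
        f"<h2>Questions</h2>\n<ol>\n{question_items}\n</ol>\n"
        "</body></html>\n"
    )

page = build_quiz_page(
    tweet="X is a great American, is hospitalized with coronavirus, "
          "and has requested prayer.",
    # Hand-written frames for illustration; not real model output.
    frames=["X + hospitalized + with coronavirus", "X + requested + prayer"],
    questions=[
        "Was former President Trump asked to pray, or did he divert "
        "a request made to others for political reasons?",
        "Is the statement that he was indirectly asked to pray for X "
        "fake news or not?",
    ],
)
print(page)
```

The page only collects answers. It deliberately does not score them, since the point of the exercise is for users to decide for themselves.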

You can think about it and decide for yourself!

Let’s have a look at a Tweet that was banned from Twitter. I took the names out, paraphrased it, and toned it down. Still, when we run it on AllenNLP and visualize the results, we get some surprising SRL outputs.

Here is the toned-down and paraphrased Tweet:

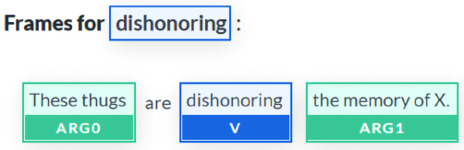

These thugs are dishonoring the memory of X.

When the looting starts, actions must be taken.

Although I suppressed the main part of the original Tweet, we can see that the SRL task shows the bad associations made in the Tweet:

Figure 13.14: SRL arguments of the verb “dishonoring”

An educational approach to this would be to explain that we should not associate the arguments thugs, memory, and looting. They do not fit together at all.

An important exercise would be to ask a user why the SRL arguments do not fit together.

I recommend many such exercises so that the transformer model users develop SRL skills to have a critical view of any topic presented to them.

Critical thinking is the best way to stop the propagation of the fake news pandemic!

We have gone through rational approaches to fake news with transformers, heuristics, and instructive websites. However, in the end, a lot of the heat in fake news debates boils down to emotional and irrational reactions.

In a world of opinion, you will never find an entirely objective transformer model that detects fake news since opposing sides never agree on what the truth is in the first place! One side will agree with the transformer model’s output. Another will say that the model is biased and built by enemies of their opinion!

The best approach is listening to others and keeping the heat down!

Before we go

This chapter focused more on applying transformers to a problem than on finding a silver bullet transformer model, which does not exist.

You have two main options to solve an NLP problem: find new transformer models or create reliable, durable methods to implement transformer models.

We will now conclude the chapter and move on to interpreting transformer models.

Summary

Fake news begins deep inside our emotional history as humans. When an event occurs, emotions take over to help us react quickly to a situation. We are hardwired to react strongly when we are threatened.

Fake news spurs strong reactions. We fear that this news could temporarily or permanently damage our lives. Many of us believe climate change could eradicate human life from Earth. Others believe that if we react too strongly to climate change, we might destroy our economies and break society down. Some of us believe that guns are dangerous. Others remind us that the Second Amendment of the United States Constitution gives us the right to possess a gun in the US.

We went through other raging conflicts over COVID-19, former President Trump, and climate change. In each case, we saw that emotional reactions are the fastest ones to build up into conflicts.

We then designed a roadmap to take the emotional perception of fake news to a rational level. We used some transformer NLP tasks to show that it is possible to find key information in Tweets, Facebook messages, and other media.

We used news perceived by some as real news and others as fake news to create a rationale for teachers, parents, friends, co-workers, or just people talking. We added classical software functions to help us on the way.

At this point, you have a toolkit of transformer models, NLP tasks, and sample datasets in your hands.

You can use artificial intelligence for the good of humanity. It’s up to you to take these transformer tools and ideas and implement them to make the world a better place for all.

A good way to understand transformers is to visualize their internal process. We will analyze how a transformer gradually builds a representation of a sequence in the next chapter, Interpreting Black Box Transformer Models.

Questions

- News labeled as fake news is always fake. (True/False)

- News that everybody agrees with is always accurate. (True/False)

- Transformers can be used to run sentiment analysis on Tweets. (True/False)

- Key entities can be extracted from Facebook messages with a DistilBERT model running NER. (True/False)

- Key verbs can be identified from YouTube chats with BERT-based models running SRL. (True/False)

- Emotional reactions are a natural first response to fake news. (True/False)

- A rational approach to fake news can help clarify one’s position. (True/False)

- Connecting transformers to reliable websites can help somebody understand why some news is fake. (True/False)

- Transformers can make summaries of reliable websites to help us understand some of the topics labeled as fake news. (True/False)

- You can change the world if you use AI for the good of us all. (True/False)

References

- Daniel Kahneman, 2013, Thinking, Fast and Slow

- Hugging Face pipelines: https://huggingface.co/transformers/main_classes/pipelines.html

- The Allen Institute for AI: https://allennlp.org/

Join our book’s Discord space

Join the book’s Discord workspace:

https://www.packt.link/Transformers