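- The first step, as always, is importing the necessary modules:

```python
import tensorflow as tf
import numpy as np
# MNIST loader bundled with TensorFlow 1.x (removed in TensorFlow 2)
from tensorflow.examples.tutorials.mnist import input_data
import matplotlib.pyplot as plt
import math

# Jupyter magic: display plots inline in the notebook
%matplotlib inline
```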

- Load the input data:

```python
mnist = input_data.read_data_sets("MNIST_data/")
trX, trY, teX, teY = mnist.train.images, mnist.train.labels, mnist.test.images, mnist.test.labels
```
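The loader returns each image as a flat vector of 784 (28 x 28) pixel intensities in [0, 1], while the convolutional layers below expect a 4-D tensor of shape (batch, height, width, channels). The following is a minimal NumPy sketch of that conversion. A random array stands in for `mnist.train.images`, and the batch of 5 images is an arbitrary assumption:

```python
import numpy as np

h_in, w_in = 28, 28

# Stand-in for a slice of mnist.train.images: 5 flat vectors of 784 floats in [0, 1)
flat_batch = np.random.rand(5, h_in * w_in).astype(np.float32)

# Convert to NHWC layout (batch, height, width, channels) with one grayscale channel
images = flat_batch.reshape((-1, h_in, w_in, 1))
print(images.shape)  # (5, 28, 28, 1)

# Row-major reshape: pixel (r, c) of an image comes from flat index r * w_in + c
assert images[2, 3, 4, 0] == flat_batch[2, 3 * w_in + 4]
```

The training loop below performs the same `reshape((-1, h_in, w_in, 1))` on every mini-batch.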

- Define the network parameters. Here, we also calculate the spatial dimensions of the output of each max-pool layer; we need this information to upsample the image in the decoder network:

```python
# Network parameters
h_in, w_in = 28, 28  # Image height and width
k = 3  # Convolution kernel size
p = 2  # Max-pool window size
s = 2  # Max-pool stride
filters = {1: 32, 2: 32, 3: 16}  # Number of filters per encoder layer (mirrored in the decoder)
activation_fn = tf.nn.relu

# Change in image dimensions after each max-pool ('same' padding rounds up)
h_l2, w_l2 = int(np.ceil(float(h_in) / float(s))), int(np.ceil(float(w_in) / float(s)))  # Height and width: second encoder/decoder layer
h_l3, w_l3 = int(np.ceil(float(h_l2) / float(s))), int(np.ceil(float(w_l2) / float(s)))  # Height and width: third encoder/decoder layer
```
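With `padding='same'`, a pooling layer with stride `s` produces ceil(n / s) outputs along each axis, which is why the code above rounds up. The following short pure-Python sketch traces the resulting sizes through the three encoder pools. The helper name `same_pool_size` is hypothetical:

```python
import math

def same_pool_size(n, stride):
    """Output length of a 'same'-padded pooling layer along one axis."""
    return math.ceil(n / stride)

sizes = [28]
for _ in range(3):  # three max-pool layers in the encoder
    sizes.append(same_pool_size(sizes[-1], 2))

print(sizes)  # [28, 14, 7, 4]
```

Because 7 is odd, doubling the 4 x 4 code would give 8 x 8 rather than 7 x 7. This is why the decoder resizes to the stored sizes `h_l3, w_l3` and `h_l2, w_l2` instead of applying a fixed upsampling factor.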

- Create placeholders for the input (noisy images) and the target (the corresponding clean images):

```python
X_noisy = tf.placeholder(tf.float32, (None, h_in, w_in, 1), name='inputs')
X = tf.placeholder(tf.float32, (None, h_in, w_in, 1), name='targets')
```

- Build the Encoder and Decoder networks:

```python
### Encoder
conv1 = tf.layers.conv2d(X_noisy, filters[1], (k, k), padding='same', activation=activation_fn)
# Output size h_in x w_in x filters[1]
maxpool1 = tf.layers.max_pooling2d(conv1, (p, p), (s, s), padding='same')
# Output size h_l2 x w_l2 x filters[1]
conv2 = tf.layers.conv2d(maxpool1, filters[2], (k, k), padding='same', activation=activation_fn)
# Output size h_l2 x w_l2 x filters[2]
maxpool2 = tf.layers.max_pooling2d(conv2, (p, p), (s, s), padding='same')
# Output size h_l3 x w_l3 x filters[2]
conv3 = tf.layers.conv2d(maxpool2, filters[3], (k, k), padding='same', activation=activation_fn)
# Output size h_l3 x w_l3 x filters[3]
encoded = tf.layers.max_pooling2d(conv3, (p, p), (s, s), padding='same')
# Output size ceil(h_l3/s) x ceil(w_l3/s) x filters[3], i.e. 4 x 4 x 16 for MNIST

### Decoder
upsample1 = tf.image.resize_nearest_neighbor(encoded, (h_l3, w_l3))
# Output size h_l3 x w_l3 x filters[3]
conv4 = tf.layers.conv2d(upsample1, filters[3], (k, k), padding='same', activation=activation_fn)
# Output size h_l3 x w_l3 x filters[3]
upsample2 = tf.image.resize_nearest_neighbor(conv4, (h_l2, w_l2))
# Output size h_l2 x w_l2 x filters[3]
conv5 = tf.layers.conv2d(upsample2, filters[2], (k, k), padding='same', activation=activation_fn)
# Output size h_l2 x w_l2 x filters[2]
upsample3 = tf.image.resize_nearest_neighbor(conv5, (h_in, w_in))
# Output size h_in x w_in x filters[2]
conv6 = tf.layers.conv2d(upsample3, filters[1], (k, k), padding='same', activation=activation_fn)
# Output size h_in x w_in x filters[1]
logits = tf.layers.conv2d(conv6, 1, (k, k), padding='same', activation=None)
# Output size h_in x w_in x 1
decoded = tf.nn.sigmoid(logits, name='decoded')
```
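The following minimal NumPy sketch makes the shape changes concrete for a single 7 x 7 channel. It shows a 2 x 2, stride-2 max pool with 'same' padding, followed by nearest-neighbour resizing back to 7 x 7. It illustrates the idea but is not TensorFlow's implementation. The helper names are hypothetical. The resize uses the index mapping floor(i * in / out), which is an assumption: TensorFlow's exact rounding depends on the version and on flags such as `align_corners`.

```python
import numpy as np

def max_pool_2x2_same(x):
    """2x2 max pool, stride 2, on an (H, W) array; odd sizes are padded at the end with -inf."""
    h, w = x.shape
    ph, pw = h % 2, w % 2
    padded = np.pad(x, ((0, ph), (0, pw)), mode='constant', constant_values=-np.inf)
    H, W = padded.shape
    # Group pixels into non-overlapping 2x2 windows and take the max of each window
    return padded.reshape(H // 2, 2, W // 2, 2).max(axis=(1, 3))

def resize_nearest(x, out_h, out_w):
    """Nearest-neighbour resize of an (H, W) array using floor(i * in / out) indexing."""
    in_h, in_w = x.shape
    rows = (np.arange(out_h) * in_h) // out_h
    cols = (np.arange(out_w) * in_w) // out_w
    return x[rows[:, None], cols[None, :]]

x = np.arange(49, dtype=np.float32).reshape(7, 7)
pooled = max_pool_2x2_same(x)            # 7 x 7 -> 4 x 4
restored = resize_nearest(pooled, 7, 7)  # 4 x 4 -> 7 x 7
print(pooled.shape, restored.shape)  # (4, 4) (7, 7)
```

Nearest-neighbour resizing only copies values. The convolution that follows each `resize_nearest_neighbor` call in the decoder is what learns to refine the upsampled feature maps.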

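- Define the loss and the optimizer:

```python
# Per-pixel sigmoid cross-entropy between the reconstruction logits and the clean targets
loss = tf.nn.sigmoid_cross_entropy_with_logits(labels=X, logits=logits)
# Average over all pixels and all images in the batch
cost = tf.reduce_mean(loss)
# Adam optimizer with a learning rate of 0.001
opt = tf.train.AdamOptimizer(0.001).minimize(cost)
```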
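`tf.nn.sigmoid_cross_entropy_with_logits` applies the sigmoid internally, which is why the loss is computed from `logits` rather than from `decoded`. The TensorFlow documentation gives the numerically stable form max(x, 0) - x * z + log(1 + exp(-|x|)) for logits x and labels z. The following NumPy sketch compares that form with the direct formula. The sample arrays are arbitrary:

```python
import numpy as np

def sigmoid_xent_stable(logits, labels):
    """Element-wise sigmoid cross-entropy in the numerically stable form."""
    x, z = logits, labels
    return np.maximum(x, 0) - x * z + np.log1p(np.exp(-np.abs(x)))

def sigmoid_xent_naive(logits, labels):
    """Same quantity written directly: -z*log(p) - (1-z)*log(1-p) with p = sigmoid(x)."""
    p = 1.0 / (1.0 + np.exp(-logits))
    return -labels * np.log(p) - (1 - labels) * np.log(1 - p)

logits = np.array([-3.0, -0.5, 0.0, 2.0, 4.0])
labels = np.array([0.0, 0.2, 0.5, 0.9, 1.0])  # pixel targets in [0, 1], like MNIST intensities

print(np.allclose(sigmoid_xent_stable(logits, labels), sigmoid_xent_naive(logits, labels)))  # True

# For a large logit, the naive form hits log(0) and returns inf; the stable form stays finite
print(sigmoid_xent_stable(np.array([100.0]), np.array([0.0])))  # [100.]
```

The labels here are the clean pixel intensities rather than 0/1 classes. The loss is still well defined, but its minimum value is not zero for intermediate gray levels.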

- Start the session:

```python
sess = tf.Session()
```

- Train the model, feeding noisy images as input and the original images as targets:

```python
epochs = 10
batch_size = 100
# Sets how much noise we add to the MNIST images
noise_factor = 0.5

sess.run(tf.global_variables_initializer())
err = []

for i in range(epochs):
    for ii in range(mnist.train.num_examples // batch_size):
        batch = mnist.train.next_batch(batch_size)
        # Get images from the batch
        imgs = batch[0].reshape((-1, h_in, w_in, 1))
        # Add random noise to the input images
        noisy_imgs = imgs + noise_factor * np.random.randn(*imgs.shape)
        # Clip the images to be between 0 and 1
        noisy_imgs = np.clip(noisy_imgs, 0., 1.)
        # Noisy images as inputs, original images as targets
        batch_cost, _ = sess.run([cost, opt], feed_dict={X_noisy: noisy_imgs, X: imgs})
        err.append(batch_cost)
        if ii % 100 == 0:
            print("Epoch: {0}/{1}... Training loss {2}".format(i + 1, epochs, batch_cost))
```
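The noise step in the loop can be factored into a small helper. The following is a minimal NumPy sketch, assuming the same Gaussian noise scaled by `noise_factor` and clipping to [0, 1]. The function name `add_gaussian_noise` and the seeded generator are illustrative choices, not part of the original code.

```python
import numpy as np

def add_gaussian_noise(imgs, noise_factor=0.5, rng=None):
    """Return a noisy copy of imgs: add N(0, 1) noise scaled by noise_factor, then clip to [0, 1]."""
    rng = np.random.default_rng() if rng is None else rng
    noisy = imgs + noise_factor * rng.standard_normal(imgs.shape)
    return np.clip(noisy, 0.0, 1.0)

rng = np.random.default_rng(0)
clean = rng.random((4, 28, 28, 1))  # stand-in batch in [0, 1)
noisy = add_gaussian_noise(clean, noise_factor=0.5, rng=rng)

print(noisy.shape, noisy.min() >= 0.0, noisy.max() <= 1.0)  # (4, 28, 28, 1) True True
```

Only the network input is corrupted. The clean images remain the training targets, and this is what makes the model a denoising autoencoder.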

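- Plot the training loss of the network as it learns. The loss is recorded once per mini-batch, so the x-axis counts iterations rather than epochs:

```python
plt.plot(err)
plt.xlabel('Iterations (mini-batches)')
plt.ylabel('Cross Entropy Loss')
```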
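With the default MNIST split of 55,000 training images and `batch_size = 100`, each epoch adds 550 entries to `err`. To plot one point per epoch instead, the per-batch losses can be averaged epoch by epoch. The following is a minimal NumPy sketch that uses synthetic loss values in place of `err`:

```python
import numpy as np

epochs, batches_per_epoch = 10, 550

# Synthetic stand-in for err: a decaying curve with a little noise, one value per batch
rng = np.random.default_rng(0)
steps = np.arange(epochs * batches_per_epoch)
err = 0.1 + 0.5 * np.exp(-steps / 500) + 0.01 * rng.standard_normal(steps.size)

# One row per epoch, then average across that epoch's batches
epoch_loss = np.asarray(err).reshape(epochs, batches_per_epoch).mean(axis=1)
print(epoch_loss.shape)  # (10,)
```

`epoch_loss` could then be passed to `plt.plot(range(1, epochs + 1), epoch_loss)`, and in that case an x-axis label of 'epochs' would be accurate.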

The plot is as follows:

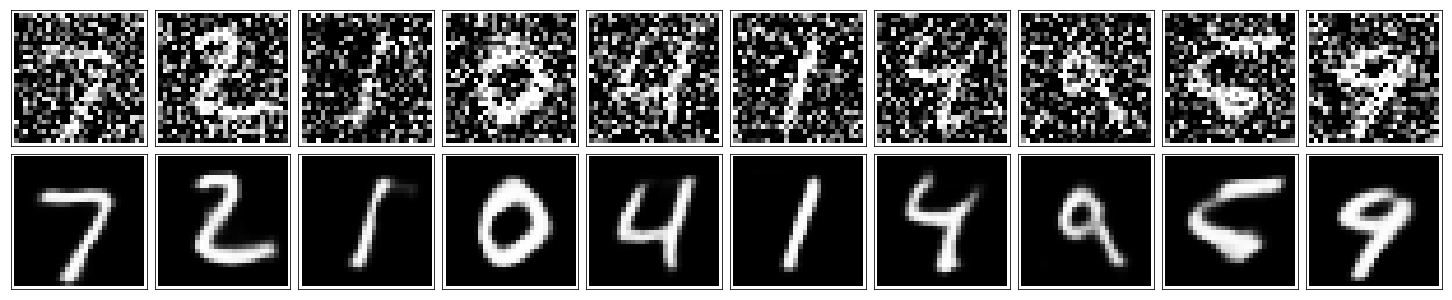

- Finally, let's see the reconstructed images:

```python
# Top row: noisy test inputs; bottom row: their reconstructions
fig, axes = plt.subplots(nrows=2, ncols=10, sharex=True, sharey=True, figsize=(20, 4))

in_imgs = mnist.test.images[:10]
noisy_imgs = in_imgs + noise_factor * np.random.randn(*in_imgs.shape)
noisy_imgs = np.clip(noisy_imgs, 0., 1.)

reconstructed = sess.run(decoded, feed_dict={X_noisy: noisy_imgs.reshape((10, 28, 28, 1))})

for images, row in zip([noisy_imgs, reconstructed], axes):
    for img, ax in zip(images, row):
        ax.imshow(img.reshape((28, 28)), cmap='Greys_r')
        ax.get_xaxis().set_visible(False)
        ax.get_yaxis().set_visible(False)
```

Here is the output of the preceding code:

- Close the session:

```python
sess.close()
```