Chapter 7

Cloning and Data Sharing

THE SNOWPRO CORE EXAM TOPICS COVERED IN THIS CHAPTER INCLUDE THE FOLLOWING:

Snowflake's unique architecture, reliance on micro-partitions, excellent cloud services layer, and metadata management underpin several novel features. These features include zero-copy cloning and data sharing without moving the data. This chapter describes zero-copy cloning and how it works before taking you through some hands-on exercises on cloning. The chapter then describes data sharing and how it works, and describes the three high-level approaches to data sharing in Snowflake.

Zero-Copy Cloning

Cloning, or zero-copy cloning, is a feature provided by Snowflake that allows users to create a clone of a table, schema, or database without physically copying the data. Cloning provides immediate benefits by reducing the need for extra storage and fast speed of the copy operation. Since cloning does not physically copy data, the process is much faster than a traditional copy operation. It does not require additional storage space because there is no physical copying of data.

A snapshot of the data present in the cloning source is taken and is made available in the cloned object. The source object and the cloned object are independent of each other, and you can perform data modification operations on either object without affecting the other.

Although the CLONE command can create a copy of various Snowflake objects, we will focus on cloning tables, schemas, and databases in this chapter. The following object types are supported for cloning.

Objects that contain data:

- Databases

- Schemas

- Tables

- Streams

Other objects supported for cloning:

- Stages

- File formats

- Sequences

- TasksStreams

The objects that contain data are cloned using the zero-copy cloning technique, which we discuss in detail in this chapter.

How Zero-Copy Cloning Works

Let's discuss how zero-copy cloning works under the covers. In Chapter 2, “Snowflake Architecture,” you learned that Snowflake stores its data in the form of micro-partitions. Micro-partitions are the reason zero-copy cloning is so fast and efficient. Snowflake does not copy any micro-partitions; instead, within its metadata, it points the cloned table to the existing micro-partitions. This concept is illustrated in Figure 7.1. The figure illustrates that the cloned table, T2, was cloned from the source table, T1, which has three micro-partitions. When initially cloned, T2 shares T1's micro-partitions. Because this is a metadata-only operation, there is no data movement or additional storage requirements. The operation is very fast and does not require a running virtual warehouse.

FIGURE 7.1 Zero-copy cloning and micro-partitions

Earlier, you also learned that the source object and the cloned object are independent of each other. You can perform data modification operations to either object without affecting the other. This is because Snowflake is tracking the micro-partitions in the metadata for each table independently. Snowflake updates the micro-partition information for only the updated table when either table is updated.

This concept is illustrated in Figure 7.2, which builds on the example shown in Figure 7.1. Here, data in the source table, T1, has been updated. Because of the update, one of the micro-partitions is no longer part of the table and is shown deemphasized in the figure. However, note that the micro-partition which is no longer part of table T1 is still part of the cloned table, T2. Finally, an additional micro-partition is added to T1, shown as the highlighted micro-partition in Figure 7.2.

FIGURE 7.2 Updates to the source table in a cloning scenario

It is worth noting that there is no additional storage requirement for the cloned table when a table is cloned. However, as new data is inserted or updates are performed to either table, micro-partitions may be added or removed. If new micro-partitions are added, then additional storage costs are charged.

Figure 7.3 illustrates the scenario when data in a cloned table is changed. As shown in the figure, data in the cloned table, T2, was updated, resulting in a new micro-partition to be written out. Consequently, one of the micro-partitions in the source table, T1, is no longer pointed to by the cloned table.

FIGURE 7.3 Updates to the cloned table in a cloning scenario

Cloning is a great feature for making copies of data for debugging purposes or for standing up replicas of whole production environments. It minimizes the need for additional storage requirements for cloned objects. Additional storage is only required when data is inserted or updated in either the source or the cloned table, resulting in additional micro-partitions to be written out and consequently additional storage and charges.

Database, Schema, and Table Cloning

Although cloning can be performed for various objects, this chapter focuses on database, schema, and table cloning. Zero-copy cloning is not limited to tables but can be performed even at a schema or database level. When a complete schema or database is cloned, all objects in that schema are made available in the cloned schema.

Databases, schemas, and tables can be cloned using the CLONE syntax in the CREATE statement. The basic syntax for cloning a database, schema, or table is as follows. Note that a simplified syntax is shown here. For a complete syntax, please refer to Snowflake's documentation.

CREATE { DATABASE | SCHEMA | TABLE } <object_name>CLONE <source:object_name>;

Cloning a Table

Exercise 7.1 demonstrates the cloning of a table and how fast the operation is compared to a regular copy operation. The exercise also demonstrates that updates can be made to a cloned table independent of the source.

We cloned a table using the zero-copy cloning method, a metadata operation that is fast to complete. As opposed to physical copying of data, zero-copy cloning of a table doesn't move any data but instead points to the same micro-partitions as the source table. However, from the time the table was cloned, the two tables can exist independently—that is, updates, inserts, and deletes can be performed to either table, and they do not impact the other table.

Cloning a Database

Exercise 7.2 tackles the cloning of a complete database, demonstrating that database child objects like schemas and tables are recursively cloned when a database is cloned.

We cloned a complete database in this exercise. Cloning a database results in all child schemas of that database and objects contained inside those schemas being cloned recursively.

Cloning with Time Travel

Cloning can be combined with Time Travel to create a clone of a table, a database, or a schema as they existed at a point in time. Cloning combined with Time Travel is a powerful feature that can be used for standing up testing environments at a point in time for debugging or testing purposes. You can clone an entire production environment through this feature while traveling back in time. For example, you can create a replica of production one month ago and use it to debug old data issues that may have escaped detection. The syntax for cloning with Time Travel is similar to the usual cloning syntax, except it includes the Time Travel extensions in the syntax, as shown here:

CREATE { DATABASE | SCHEMA | TABLE } <object_name>CLONE <source:object_name>[ { AT | BEFORE } ( { TIMESTAMP => <timestamp> | OFFSET => <time_difference> | STATEMENT => <id> } ) ]

Exercise 7.3 demonstrates the concept of cloning a table while traveling back in time. In this exercise, you create a table, populate it with some data, update the data, and then clone the table as it existed before the update was run.

In this exercise, we cloned a table while traveling back in time, demonstrating that we can make clones of tables as they existed at a point in time. Cloning with Time Travel is a novel feature that simplifies standing up testing environments and helps debug and fix data issues that may have occurred over time.

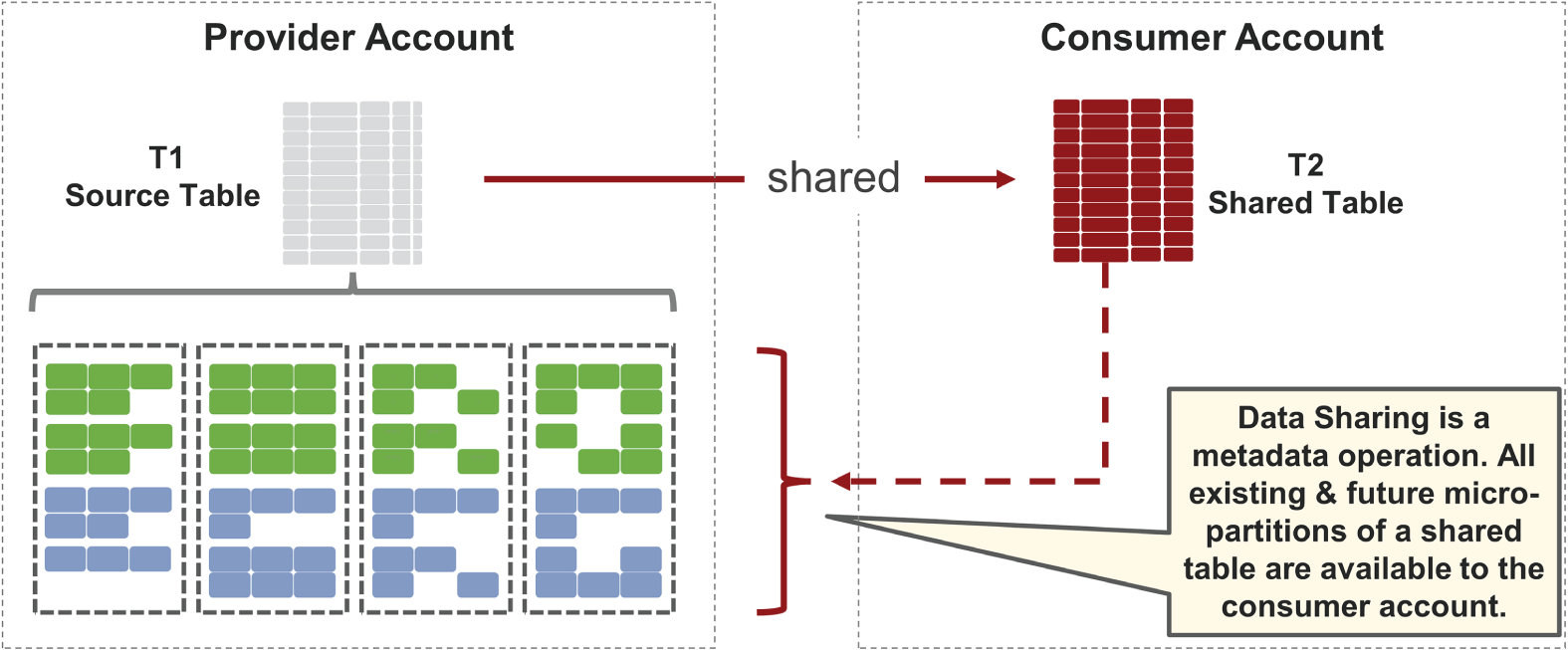

Secure Data Sharing in Snowflake

Like Time Travel and Cloning, Secure Data Sharing does not move data; instead, it is a metadata operation. Secure Data Sharing is performed through Snowflake's cloud services and the metadata layer. There is no movement or copying of data during a data sharing operation; instead, the shared table references the original table and its underlying micro-partitions. This ensures that a sharing operation is almost instantaneous and that any updates in the source table are automatically and immediately reflected in the shared table. Figure 7.4 illustrates the concept of Secure Data Sharing and how it is achieved. Data is shared by a data provider, any Snowflake account that creates a share and makes it available to other accounts to consume. A data consumer is any account that creates a database from the share and consumes data shared by the provider.

There is no data movement during data sharing since the source table's micro-partitions are referred to in the cloned table. Therefore, there are no additional storage requirements either, which means there are no storage costs for the consumer.

FIGURE 7.4 Data sharing and micro-partitions

Snowflake Offerings for Data Sharing

Snowflake provides three types of product offerings for Secure Data Sharing, all of which utilize the sharing mechanisms discussed in the preceding section. The Secure Data Sharing product offerings provided by Snowflake are as follows:

- Direct Sharing Account-to-account data sharing.

- Snowflake Data Marketplace Consume free and paid datasets provided by various data providers. It's possible to publish your own datasets as well.

- Data Exchange A private data marketplace created and controlled by the customer to publish and consume data internally and with limited external parties.

Direct Sharing

Direct Sharing is the simplest form of data sharing used to share data from one account to another. Direct sharing uses the concept of data providers, data consumers, and the share object to share data with other Snowflake accounts. As explained previously, no actual data is copied or transferred between accounts during sharing. The sharing is achieved through metadata operations in the cloud services layer. Therefore, the data consumer incurs no additional storage costs because the provider account stores and pays for the data storage. However, the consumer account must pay for the compute resources used to run queries on the shared data.

Let's discuss a few key data sharing concepts through the high-level process for Direct Sharing, shown in Figure 7.5.

FIGURE 7.5 Direct Sharing

A Provider account is where the data sharing journey begins. Any Snowflake account can be a data provider that can share one or more objects with one or more Snowflake accounts.

A data provider starts by creating a Share object. A Share object can be thought of as a container of all the information required for sharing an object. Each Share object contains the following:

- Objects that are being shared, such as tables. Tables can be added to a share by granting the share

SELECTaccess to the tables. - Database and schema containing the shared object. The Share object must be provided

USAGEaccess to the schema and the shared object database. - One or more Snowflake accounts with which data is being shared, also known as consumers.

Once a consumer's account number is added to a share, the share starts appearing in the consumer account. The consumer then creates a read-only database on the Share object and can see all of the shared objects under that read-only database.

The high-level flow for Direct Sharing is shown in Figure 7.6.

FIGURE 7.6 Process flow for direct data sharing

The following types of objects can be shared through direct data sharing:

- Tables

- External tables

- Secure views

- Secure materialized views

- Secure UDFs

Sharing a Table with Another Snowflake Account

Exercise 7.4 demonstrates sharing of a table with another Snowflake account. We create a new Share object, add a new table to that Share object, add the necessary grants, and make the Share object available to a consumer. Then, as the consumer account we create a read-only database using the share and can then consume the shared data.

Notice that you need two Snowflake accounts for this exercise, one to act as a data provider and the other as a data consumer. For this exercise, we suggest that the two accounts you use be on the same cloud provider and in the same region. Although Snowflake supports data sharing across regions and cloud providers, it requires data replication to be set up, which is beyond the scope of this chapter.

Note that we use the ACCOUNTADMIN role for creating and managing the share. Alternatively, a role with the CREATE SHARE privilege can be used.

In the preceding exercise, we shared a table from one Snowflake account to another by creating a Share object, adding a table to the share, and adding a consumer account.

Sharing Tables That Exist in Different Databases

If you need to share data from multiple tables that exist in different databases, creating a secure view is an option. Since multiple databases cannot be added to a single share, Snowflake's suggested approach is to create secure views in a single database. The views can point to different tables in different databases. The secure views may also be used to join data from different tables and present the data as a single view for sharing. The concept is illustrated in Figure 7.7. The figure illustrates four tables shared from three different databases. A new database is created solely for sharing to share these tables optimally. Several secure views are created in this new database, pointing directly to a table or joining data from multiple tables. This shared database and the secure views are then shared with the consumer accounts.

FIGURE 7.7 Sharing secure views

Sharing secure views is almost identical to sharing a table; however, an additional step is required to provide the Share object with the REFERENCE USAGE privilege on the databases underlying the secure views. For example, in the scenario shown in Figure 7.7, the Share object needs to have REFERENCE USAGE granted on the DB 1, DB 2, and DB 3 databases. The syntax for a reference usage grant follows:

GRANT REFERENCE USAGE ON DATABASE <database_name> TO SHARE <share_name>;

Sharing with a Non-Snowflake User

We have so far discussed sharing data with other Snowflake accounts. It is possible in Snowflake to share data with a non-Snowflake user by creating an account for the non-Snowflake user. This reader account is created by the data provider for sharing. The data provider then creates an initial administrator user and a virtual warehouse in the new reader account. The sharing process is the same as sharing with any other Snowflake account. The concept of data sharing through a reader account is illustrated in Figure 7.8.

FIGURE 7.8 Secure Data Sharing with non-Snowflake users

Because the data provider creates the reader account, all reader account's compute costs are billed to the provider account. Any virtual warehouse usage by the reader account is added to the compute costs of the provider account.

Snowflake Data Marketplace

Snowflake Data Marketplace is a marketplace for discovering and accessing third-party datasets that have been made available by various organizations. These third-party datasets are often offered for a cost but they can sometimes be available for free. Once you have obtained access to a dataset, the dataset is available in your Snowflake account just like any other table, and you can join the dataset with existing tables. Snowflake accounts can also publish datasets to Snowflake Data Marketplace and monetize them.

Snowflake Data Marketplace uses the same underlying principles that underpin data sharing and were explained earlier in this chapter.

The Snowflake Data Marketplace is accessible only through the new Snowflake web interface, using the Marketplace icon under Data in the left navigation pane. The classic Snowflake interface also has a button for the Marketplace and redirects to the new interface. The Data Marketplace interface is shown in Figure 7.9, displaying a subset of free datasets available.

FIGURE 7.9 Snowflake Data Marketplace interface

As the user proceeds through various steps of obtaining data, they are asked the database name under which the shared data will be available. The concept, illustrated in Figure 7.10, is similar to how a read-only database is required to be created during direct data sharing.

FIGURE 7.10 Get Data button in the Data Marketplace

Snowflake Data Marketplace has two types of data listings:

- Standard Data Listings Provide immediate access to the published dataset. This type of listing is used for providing generic data that any organization can use.

- Personalized Data Listings Provide customized datasets that can be requested and are provided on demand. Personalized data listings may produce data specific to each consumer.

Data Exchange

Data Exchange is your own private data sharing hub that can be used to share data among a selected group of invite-only members. The account owning the Data Exchange is responsible for inviting members and specifying whether they can share, consume, or do both. The concept of Data Exchange is illustrated in Figure 7.11, demonstrating that the owning account creates and controls a Data Exchange hub. Member accounts can be set to either consume, publish, or both. The result is a hub where members specified by the owning account can exchange and share data.

The purpose of Data Exchange is to allow enterprises to share data with trusted partners, suppliers, vendors, and customers. Data Exchange functionality is available to all Snowflake accounts except VPS Snowflake accounts. The functionality may not be enabled by default, and, if not enabled, requires a Snowflake support request to enable.

FIGURE 7.11 Data Exchange

Summary

This chapter discussed cloning and data sharing, two novel features provided by Snowflake. You can create instant copies of tables, schemas, and databases by using zero-copy cloning without additional storage costs. You can also combine cloning with Time Travel. The chapter included two cloning exercises to demonstrate these concepts.

This chapter also described data sharing in Snowflake. Like cloning, data sharing is also almost instantaneous and doesn't move data. We practiced the steps for direct data sharing with another account and discussed Data Marketplace and Data Exchange, two other mechanisms for data sharing.

Exam Essentials

- Be familiar with zero-copy cloning and how it works. Snowflake's zero-copy cloning functionality lets users make clones of tables, schemas, and databases without physically copying data. Cloning reduces the demand for extra storage and speeds up the copy process. Because cloning does not duplicate data physically, it is substantially faster than physical copying. The cloned object contains a snapshot of the data in the cloning source. The source and cloned objects are independent, so you may modify data on either without impacting the other. Micro-partitions are the reason behind rapid and efficient zero-copy cloning. No micro-partitions are copied, but metadata points the cloned table to the existing micro-partitions. Additional storage is not required for the cloned table when cloning a table. However, as new data is stored or updated in either table, micro-partitions may be created or deleted, and additional storage may be required.

Although cloning is a fast operation, as a metadata process, it may not be instantaneous. Large tables with millions of micro-partitions may take longer to clone while updating metadata. Cloning and Time Travel can be used together to generate a clone of a table, database, or schema at a specific point in time. Named internal stages and external tables are some objects that can't be cloned. Cloning a database copies all its child schemas and objects; however, Snowpipes that reference an internal named stage are not copied. A cloned object does not inherit any of the parent object's privileges. However, when you clone a database or schema child objects inherit the rights.

- Understand data sharing in Snowflake. Data sharing, like Time Travel and cloning, is a metadata operation. Snowflakes cloud services and the metadata layer facilitate data sharing. During data sharing, no data is moved or copied; instead, the shared table refers to the original table and its micro-partitions. That way, any changes in the source table are automatically mirrored in the shared table.

Snowflake's data sharing products include Direct Sharing, Snowflake Data Marketplace, and Data Exchange. Direct Sharing is the simplest method of sharing data across accounts. The provider account stores and pays for the data; the consumer incurs no additional storage expenses. The consumer, however, pays for the compute resources required to conduct queries on shared data.

Any Snowflake account can be a data provider, sharing data with other Snowflake accounts. A data provider first creates a share, a container for all the data necessary to share an object. Share objects contain shared objects, the shared object's database, schema, and one or more Snowflake accounts (consumers) with whom data is shared. The consumer sees the shared objects by creating a read-only database on the share object. Only the ACCOUNTADMIN role or roles with the

CREATE SHAREprivilege can create a share. Similarly, only the ACCOUNTADMIN role or roles specifically allowed theIMPORT SHAREprivilege can create a read-only database on the share as a consumer account.It is possible in Snowflake to share data with a non-Snowflake user, which is done by creating a reader account. Because the data provider creates and owns the reader account, all the reader account's compute costs are billed to the provider account.

Snowflake Data Marketplace allows users to search for and access third-party datasets made public by various organizations. Datasets are typically supplied for a fee or for free. Once accessed, a dataset becomes a table in your Snowflake account, and you may join it with other tables. Snowflake accounts may also sell datasets on the Data Marketplace.

Data Exchange is a private data sharing hub where you may share data with a small number of accounts. The Data Exchange owner invites members and specifies whether they can share, consume, or do both.

Review Questions

- Which of the following are true regarding cloned tables? (Select all that apply.)

- Cloning physically copies data from one table to another.

- A cloned table does not contribute to the overall storage.

- Cloning is a metadata operation.

- Cloning is almost instantaneous.

- True or False: Cloning is a metadata operation.

- True

- False

- Which of the following can be cloned? (Select all that apply.)

- Databases

- Schemas

- Tables

- True or False: After cloning, any updates to the cloned table automatically update the source table.

- True

- False

- Which of the following are true regarding cloning? (Select all that apply.)

- Cloning a database does not copy any of its child schemas or their objects.

- Cloning a database copies all of its child schemas and their objects.

- A database cannot be cloned.

- If a database or schema is cloned, child object privileges are copied.

- Which of the following is correct regarding cloning? (Select all that apply.)

- Internal named stages cannot be cloned.

- A Snowflake account can be cloned.

- When a database or schema is cloned, any Snowpipes that reference an internal named stage are not cloned.

- Which of the following are Snowflake's product offerings for Secure Data Sharing? (Select all that apply.)

- Direct Sharing

- Data Monetization

- Snowflake Data Marketplace

- Data Exchange

- A data provider has shared a set of tables with a consumer through a share. The consumer has created a read-only database on the share and can successfully see and read the table data. If the data provider adds new tables to the share, which of the following statements apply?

- The consumer is required to drop and re-create the database on the share to see the new tables.

- The new tables automatically appear in the read-only database.

- It is not possible to add tables to a share after it has been shared with a consumer.

- A data provider has shared a set of tables with a data consumer who is a Snowflake customer. Who is billed for the compute usage when the consumer runs queries on the shared data?

- The data provider

- The data consumer

- A data provider has shared a set of tables with a non-Snowflake user. The data provider created a reader account to enable the sharing. Who is billed for the compute usage when the consumer runs queries on the shared data?

- The data provider

- The data consumer

- Is it possible to add multiple consuming accounts to a single share?

- Yes

- Only if you are an Enterprise customer

- No

- Only when using AWS as the cloud provider

- Which of the following roles can create a Share in Snowflake? (Select all that apply.)

- ACCOUNTADMIN

- SECURITYADMIN

- SYSADMIN

- A role that has been granted the

CREATE SHAREprivilege

- Which of the following roles can create a read-only database from a share in Snowflake? (Select all that apply.)

- ACCOUNTADMIN

- SECURITYADMIN

- SYSADMIN

- A role that has been granted the

IMPORT SHAREprivilege

- Which of the following is true regarding Data Exchange in Snowflake? (Select all that apply.)

- Data Exchange is your own private data sharing hub where you can share data with an invite-only group of people and organizations.

- Participating members can share, consume, or do both, depending on their privileges.

- The account owning the Data Exchange is responsible for inviting members.

- Data Exchange allows businesses to share data among a limited set of trustworthy partners, suppliers, vendors, and customers.

- Which of the following is true regarding Data Marketplace in Snowflake? (Select all that apply.)

- Snowflake accounts can also publish and monetize datasets on the Marketplace.

- Snowflake Data Marketplace is a marketplace for discovering and gaining access to third-party datasets made available by various organizations.

- The third-party datasets on Data Marketplace are always free.

- Except for VPS Snowflake accounts, all Snowflake accounts have access to the Snowflake Data Marketplace.