Chapter 4. Building Tests

When Martin originally wrote Refactoring, tests were anything but mainstream. However, even back then he knew: If you want to refactor, the essential precondition is having solid tests. Even if you are fortunate enough to have a tool that can automate the refactorings, you still need tests.

Since the days of the original Refactoring book, creating self-testing code has become a much more common activity. And, while this book isn’t about testing, if you want to refactor, you must have tests.

The Value of Self-Testing Code

If you look at how most programmers who do not write self-testing code spend their time, you’ll find that writing code is actually quite a small fraction of it. Some time is spent figuring out what ought to be going on, some time is spent designing, but most time is spent debugging. Those programmers can tell a story of a bug that took a whole day (or more) to find. Fixing the bug is usually pretty quick, but finding it is a nightmare. Some stand by this style of development; however, I’ve found life to be much easier if I have a test suite to lean on.

The event that started Martin on the road to self-testing code was a talk at OOPSLA in 1992. Someone (I think it was Dave Thomas, coauthor of The Pragmatic Programmer) said offhandedly, “Classes should contain their own tests.” And so began the early days of self-testing code.

Since those days, it has become standard practice to follow the Red/Green/Refactor cycle. In short, you write a failing test, make it pass, and then refactor the code to the best of your ability. You repeat this cycle many times a day, at least once for each new feature. As you add features to the system, you build a regression suite that verifies that the application runs as expected. When developing in this manner, you can complete large refactorings with confidence that you haven’t broken existing features of the system. The result is a tremendous productivity gain. Additionally, I find I hardly ever spend more than a few minutes a day debugging.

Of course, it is not so easy to persuade others to follow this route. Tests themselves are a lot of extra code to write. Unless you have actually experienced the way it speeds programming, self-testing does not seem to make sense. This is not helped by the fact that many people have never learned to write tests or even to think about tests.

As the Red/Green/Refactor movement advocates, one of the most useful times to write tests is before you start programming. When you need to add a feature, begin by writing the test. This isn’t as backward as it sounds. By writing the test, you are asking yourself what needs to be done to add the function. Writing the test also makes you concentrate on the interface rather than the implementation (which is always a good thing). It also means you have a clear point at which you are done coding: when the test passes.
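A minimal sketch of one pass through the cycle follows. The word_count method and the test names are hypothetical, invented for illustration, and the comments mark where each Red/Green/Refactor step happens.

```ruby
require 'test/unit'

# Step 1 (red): the tests are written first. Running this file before
# word_count exists reports an error (NoMethodError) for each test.
class WordCountTest < Test::Unit::TestCase
  def test_counts_words_separated_by_whitespace
    assert_equal 3, word_count("red green refactor")
  end

  def test_empty_string_has_no_words
    assert_equal 0, word_count("")
  end
end

# Step 2 (green): the simplest hypothetical implementation that passes.
# Step 3 (refactor): tidy it up, rerunning the tests after each change.
def word_count(text)
  text.split.size
end
```

Running the file before the method is defined shows red; adding the one-line method turns it green, after which you are free to refactor with the tests watching your back.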

This notion of frequent testing is an important part of extreme programming [Beck, XP]. The name conjures up notions of programmers who are fast and loose hackers. But extreme programmers are dedicated testers. They want to develop software as fast as possible, and they know that tests help you to go as fast as you possibly can.

That’s enough of the polemic. Although I believe everyone would benefit by writing self-testing code, it is not the point of this book. This book is about refactoring. Refactoring requires tests. If you want to refactor, you have to write tests. This chapter gives you a start in doing this for Ruby. This is not a testing book, so I’m not going to go into much detail. But with testing I’ve found that a remarkably small amount can have surprisingly big benefits.

As with everything else in this book, I describe the testing approach using examples. When I develop code, I write the tests as I go. But often when I’m working with people on refactoring, we have a body of non-self-testing code to work on. So first we have to make the code self-testing before we refactor.

The standard Ruby idiom for testing is to build separate test classes that work in a framework to make testing easier. The most popular framework is Test::Unit, and it is part of the Ruby standard library.

The Test::Unit Testing Framework

A number of testing frameworks are available in Ruby. The original was Test::Unit, an open-source testing framework developed by Nathaniel Talbott. The framework is simple, yet it allows you to do all the key things you need for testing. In this chapter we use this framework to develop tests for some IO classes. We considered using RSpec (another popular testing framework) for the test examples, but decided against it because we felt that Test::Unit examples offer a lower barrier to entry for readers.

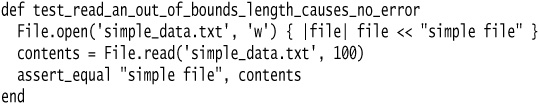

To begin, I’m going to write some tests for Ruby’s File class. I wouldn’t normally write tests for a language class (I’d hope that the author of the language has taken care of that), but it will serve as a good example. First I create a FileTest class. Any class that contains tests must subclass the TestCase class from the testing framework. The framework uses the composite pattern [Gang of Four] and groups all the tests into a suite (see Figure 4.1). This makes it easy to run all the tests as one suite automatically.

Figure 4.1 The composite structure of tests.

My first job is to set up the test data. Because I’m reading a file I need to set up a test file, as follows:

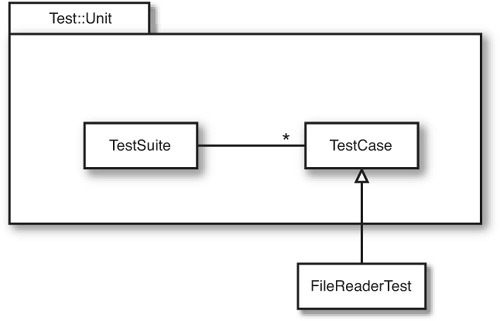

Now that I have the test fixture in place, I can start writing tests. The first is to test the read method. To do this I read the entire file and then check that the fourth character is the character I expect.

The assert_equal method does the automatic checking. If the expected value is equal to the actual value, all is well. Otherwise the framework records a failure. I show how the framework reports that later.
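The pieces described so far (the TestCase subclass, the setup fixture, and the assert_equal check) fit together roughly as in the following sketch. It assumes a hypothetical fixture file in the system temp directory containing the ten characters abcdefghij. One trap worth knowing: FileTest is already the name of a core Ruby module, so `class FileTest < Test::Unit::TestCase` raises a TypeError; the sketch uses the hypothetical name FileReadTest instead.

```ruby
require 'test/unit'
require 'tmpdir'

# FileTest is already a core Ruby module, so the test class needs another
# name; FileReadTest is a hypothetical choice.
class FileReadTest < Test::Unit::TestCase
  # Hypothetical fixture path and contents.
  TEST_FILE = File.join(Dir.tmpdir, "refactoring_read_test.txt")

  # Runs before each test method, so every test starts with a fresh file.
  def setup
    File.write(TEST_FILE, "abcdefghij")
  end

  # Runs after each test method, whether it passed or not.
  def teardown
    File.delete(TEST_FILE) if File.exist?(TEST_FILE)
  end

  def test_read_4th_character
    contents = File.read(TEST_FILE)
    # assert_equal takes the expected value first, then the actual value.
    assert_equal "d", contents[3]
  end
end
```

Saved as file_test.rb, it runs with the same `ruby file_test.rb` command.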

To execute the test, simply use Ruby to run the file.

ruby file_test.rb

You can look at the Test::Unit source code to see how it finds and runs the tests. I just treat it as magic.

It’s easy to run a group of tests simply by requiring each test case.
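Requiring each test file by hand means one line per file. A sketch that instead picks up every file ending in _test.rb in a directory is shown here; the helper name require_test_files and the _test.rb naming convention are assumptions, not part of Test::Unit.

```ruby
# Hypothetical helper for an all_tests.rb file. Each test file requires
# 'test/unit' itself, so once the files are loaded, Test::Unit runs every
# TestCase subclass they define when the process exits.
def require_test_files(dir)
  files = Dir.glob(File.join(dir, "*_test.rb")).sort
  files.each { |file| require File.expand_path(file) }
  files
end
```

In all_tests.rb, a single call such as `require_test_files(__dir__)` would then replace the list of individual requires.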


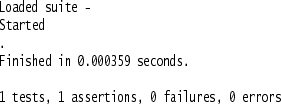

The preceding code creates the test suite, and when I run it, I see:

Test::Unit prints a period for each test that runs (so you can see progress). It tells you how long the tests have taken to run. It then says the number of tests, assertions, failures, and errors. I can run a thousand tests, and if all goes well, I’ll see that. This simple feedback is essential to self-testing code. Without it you’ll never run the tests often enough. With it you can run masses of tests and see the results immediately.

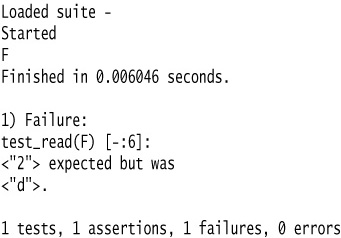

What happens if something goes wrong? I’ll demonstrate by putting in a deliberate bug, as follows:

The result looks like this:

Again I’ll mention that when I’m writing tests, I start by making them fail. With existing code I either change it to make it fail (if I can touch the code) or put an incorrect expected value in the assertion. I do this because I like to prove to myself that the test does actually run and the test is actually testing what it’s supposed to (which is why I prefer changing the tested code if I can). This may be paranoia, but you can really confuse yourself when tests are testing something other than what you think they are testing.

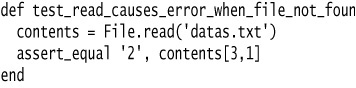

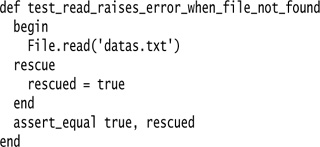

In addition to catching failures (assertions coming out false), the framework also catches errors (unexpected exceptions). If I attempt to open a file that doesn’t exist, I should get an exception. I can test this with:

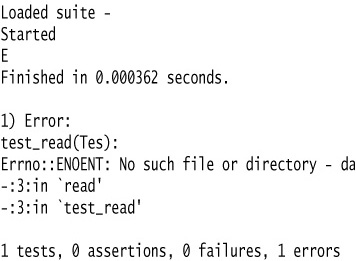

If I run this I get:

It is useful to differentiate failures and errors, because they tend to turn up differently and the debugging process is different.

Developer and Quality Assurance Tests

This framework is used for developer tests, so I should mention the difference between developer tests and quality assurance (QA) tests. The tests I’m talking about are developer tests. I write them to improve my productivity as a programmer. Making the quality assurance department happy is just a side effect.

Quality assurance tests are a different animal. They are written to ensure the software as a whole works. They provide quality assurance to the customer and don’t care about programmer productivity. They should be developed by a different team, one that delights in finding bugs. This team uses heavyweight tools and techniques to help them do this.

These functional tests typically treat the whole system as a black box as much as possible. In a GUI-based system, they operate through the GUI. In a file or database update program, the tests just look at how the data is changed for certain inputs.

When quality assurance testers, or users, find a bug in the software, at least two things are needed to fix it. Of course you have to change the production code to remove the bug. But you should also add a developer test that exposes the bug. Indeed, when I get a bug report, I begin by writing a developer test that causes the bug to surface. I write more than one test if I need to narrow the scope of the bug, or if there may be related failures. I use the developer tests to help pin down the bug and to ensure that a similar bug doesn’t get past my developer tests again.

Tip

When you get a bug report, start by writing a unit test that exposes the bug.
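A sketch of that habit, built on a hypothetical bug report: suppose parse_price("1,200") returned 1, because String#to_i stops at the first character that is not a digit. The test that reproduces the report is written first; the fix comes only after it has been seen to fail. The method and test names are invented for illustration.

```ruby
require 'test/unit'

# Hypothetical method from the bug report. The buggy version was
# text.to_i, which turns "1,200" into 1; the fix drops the separators.
def parse_price(text)
  text.delete(",").to_i
end

class ParsePriceTest < Test::Unit::TestCase
  # Written first, to make the reported bug surface.
  def test_price_with_thousands_separator
    assert_equal 1200, parse_price("1,200")
  end

  # A related case, to narrow the scope of the bug.
  def test_price_without_separator
    assert_equal 35, parse_price("35")
  end
end
```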

The Test::Unit framework is designed for writing developer tests. Quality assurance tests often are performed with other tools. GUI-based test tools are good examples. Often, however, you write your own application-specific test tools that make it easier to manage test-cases than do GUI scripts alone. You can perform quality assurance tests with Test::Unit, but it’s usually not the most efficient way. For refactoring purposes, I count on the developer tests—the programmer’s friend.

Adding More Tests

Now we should continue adding more tests. The style I follow is to look at all the things the class should do and test each one of them for any conditions that might cause the class to fail. This is not the same as “test every public method,” which some programmers advocate. Testing should be risk driven; remember, you are trying to find bugs now or in the future. So I don’t test accessors that just read and write. Because they are so simple, I’m not likely to find a bug there.

This is important because trying to write too many tests usually leads to not writing enough. I’ve often read books on testing, and my reaction has been to shy away from the mountain of stuff I have to do to test. This is counterproductive, because it makes you think that to test you have to do a lot of work. You get many benefits from testing even if you do only a little testing. The key is to test the areas that you are most worried about going wrong. That way you get the most benefit for your testing effort.

Tip

It is better to write and run incomplete tests than not to run complete tests.

At the moment I’m looking at the read method. What else should it do? One thing its documentation says is that it can return just a specified number of bytes. Let’s test it.
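A sketch of that test, assuming a hypothetical fixture file containing the ten characters abcdefghij. File.read accepts an optional length argument and returns at most that many bytes from the start of the file.

```ruby
require 'test/unit'
require 'tmpdir'

class FileReadTest < Test::Unit::TestCase
  # Hypothetical fixture path and contents.
  TEST_FILE = File.join(Dir.tmpdir, "refactoring_length_test.txt")

  def setup
    File.write(TEST_FILE, "abcdefghij")
  end

  def teardown
    File.delete(TEST_FILE) if File.exist?(TEST_FILE)
  end

  def test_read_4th_character
    assert_equal "d", File.read(TEST_FILE)[3]
  end

  # Reads only the first three bytes of the file.
  def test_read_specified_length
    assert_equal "abc", File.read(TEST_FILE, 3)
  end
end
```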

Running the test file causes each of its tests (the two test methods) to run. It’s important to write isolated tests that do not depend on each other, because there’s no guarantee about the order in which the test runner will run them. You wouldn’t want to get test failures where the code was actually correct but your test depended on a previous test having run.

Test::Unit identifies each test by finding all the methods that begin with the “test_” prefix. Following this convention means that each test I write is automatically added to the suite.
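To see the convention at work, the sketch below mixes a test method with an ordinary helper. Only test_addition matches the prefix, so it is the only method Test::Unit runs as a test; the class and method names are hypothetical.

```ruby
require 'test/unit'

class PrefixConventionTest < Test::Unit::TestCase
  # Collected as a test: the name starts with "test_".
  def test_addition
    assert_equal 4, add(2, 2)
  end

  private

  # Not collected: an ordinary helper without the prefix.
  def add(a, b)
    a + b
  end
end
```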

Tip

Think of the boundary conditions under which things might go wrong and concentrate your tests there.

Part of looking for boundaries is looking for special conditions that can cause the test to fail. For files, empty files are always a good choice:

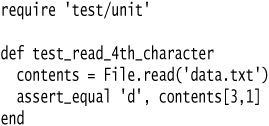
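One way to write the empty-file check, assuming a hypothetical fixture path in the system temp directory: reading a file with no contents should give back an empty string rather than nil or an exception.

```ruby
require 'test/unit'
require 'tmpdir'

class EmptyFileTest < Test::Unit::TestCase
  # Hypothetical fixture path; the file is created with no contents.
  EMPTY_FILE = File.join(Dir.tmpdir, "refactoring_empty_test.txt")

  def setup
    File.write(EMPTY_FILE, "")
  end

  def teardown
    File.delete(EMPTY_FILE) if File.exist?(EMPTY_FILE)
  end

  def test_read_empty_file_returns_empty_string
    assert_equal 0, File.size(EMPTY_FILE)
    assert_equal "", File.read(EMPTY_FILE)
  end
end
```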

What happens if you attempt to read a length larger than the length of the file? The entire file should be returned with no error. I can easily add another test to ensure that:
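A sketch of that test, assuming a hypothetical fixture containing the ten characters abcdefghij; asking File.read for 1,000 bytes should return all ten without raising.

```ruby
require 'test/unit'
require 'tmpdir'

class FileReadTest < Test::Unit::TestCase
  # Hypothetical fixture path and contents.
  TEST_FILE = File.join(Dir.tmpdir, "refactoring_long_read_test.txt")

  def setup
    File.write(TEST_FILE, "abcdefghij")
  end

  def teardown
    File.delete(TEST_FILE) if File.exist?(TEST_FILE)
  end

  # The requested length is far past the end of the 10-byte file.
  def test_read_more_than_file_length
    assert_equal "abcdefghij", File.read(TEST_FILE, 1000)
  end
end
```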

Notice how I’m playing the part of an enemy of the code. I’m actively thinking about how I can break it. I find that state of mind to be both productive and fun. It indulges the mean-spirited part of my psyche.

When you are doing tests, don’t forget to check that expected errors occur properly. If you try to read a file that doesn’t exist, you should get an exception. This too should be tested:

In fact, testing that exceptions are correctly raised is common enough that Test::Unit has an assert_raises method designed for exactly that.
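Both styles are sketched here. The first checks for the exception by hand with begin/rescue and flunk; the second uses assert_raise, which Test::Unit provides alongside the assert_raises spelling. The missing file path is hypothetical; File.read raises Errno::ENOENT when the path does not exist.

```ruby
require 'test/unit'
require 'tmpdir'

class MissingFileTest < Test::Unit::TestCase
  # Hypothetical path that setup makes sure does not exist.
  MISSING_FILE = File.join(Dir.tmpdir, "refactoring_no_such_file.txt")

  def setup
    File.delete(MISSING_FILE) if File.exist?(MISSING_FILE)
  end

  # Checking by hand: reaching flunk means no exception was raised.
  def test_read_missing_file_by_hand
    begin
      File.read(MISSING_FILE)
      flunk "expected Errno::ENOENT"
    rescue Errno::ENOENT
      # Expected: the file does not exist.
    end
  end

  # The same check with the framework's dedicated assertion.
  def test_read_missing_file_with_assert_raise
    assert_raise(Errno::ENOENT) { File.read(MISSING_FILE) }
  end
end
```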

Tip

Don’t forget to test that exceptions are raised when things are expected to go wrong.

Fleshing out the tests continues along these lines. It takes a while to go through the public methods of some classes to do this, but in the process you get to really understand the interface of the class. In particular, it helps to think about error conditions and boundary conditions. That’s another advantage of writing tests as you write code, or even before you write the production code.

When do you stop? I’m sure you have heard many times that you cannot prove a program has no bugs by testing. That’s true but does not affect the ability of testing to speed up programming. I’ve seen various proposals for rules to ensure you have tested every combination of everything. It’s worth taking a look at these, but don’t let them get to you. There is a point of diminishing returns with testing, and there is the danger that by trying to write too many tests, you become discouraged and end up not writing any. You should concentrate on where the risk is. Look at the code and see where it becomes complex. Look at the function and consider the likely areas of error. Your tests will not find every bug, but as you refactor you will understand the program better and thus find more bugs. Although I always start refactoring with a test suite, I invariably add to it as I go along.

Tip

Don’t let the fear that testing can’t catch all bugs stop you from writing the tests that will catch most bugs.

One of the tricky things about objects is that inheritance and polymorphism can make testing harder, because there are many combinations to test. If you have three classes that collaborate and each has three subclasses, you have nine alternatives but twenty-seven combinations. I don’t always try to test all the combinations possible, but I do try to test each alternative. It boils down to the risk in the combinations. If the alternatives are reasonably independent of each other, I’m not likely to try each combination. There’s always a risk that I’ll miss something, but it is better to spend a reasonable time to catch most bugs than to spend ages trying to catch them all.
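The arithmetic behind those numbers, as a small sketch with hypothetical classes A, B, and C, each having three subclasses: testing each alternative means one test per subclass (3 + 3 + 3), while testing every combination means one test per way of picking one subclass of each (3 × 3 × 3).

```ruby
# Hypothetical subclasses of three collaborating classes A, B, and C.
subclasses = [
  %w[A1 A2 A3],
  %w[B1 B2 B3],
  %w[C1 C2 C3]
]

# One test per alternative: every subclass appears in at least one test.
alternative_count = subclasses.sum(&:size)

# One test per combination: every (A, B, C) triple gets its own test.
combinations = subclasses[0].product(*subclasses[1..-1])
combination_count = combinations.size

puts "alternatives: #{alternative_count}"  # prints "alternatives: 9"
puts "combinations: #{combination_count}"  # prints "combinations: 27"
```

The gap widens quickly: adding a fourth class with three subclasses raises the alternatives to 12 but the combinations to 81.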

A difference between test code and production code is that it is okay to copy and edit test code. When dealing with combinations and alternatives, I often do that. I begin by writing a test for a “regular pay event” scenario. Next I write a test for a “seniority” scenario, and then one for a “disabled before the end of the year” scenario. After those tests are passing, I create test scenarios without “seniority” and “disabled before the end of the year,” and so on. With simple alternatives like that on top of a reasonable test structure, I can generate tests quickly.
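A sketch of that copy-and-edit style, built around a hypothetical annual_pay method invented for illustration: a base salary prorated by the months worked (so becoming disabled partway through the year reduces it), plus a flat seniority bonus. Each test after the first started as a copy of an earlier one with a single input changed.

```ruby
require 'test/unit'

# Hypothetical payroll rule, not from any real system: prorate the base
# by months worked, then add a flat bonus for seniority.
def annual_pay(base:, seniority: false, months_worked: 12)
  amount = base * months_worked / 12
  amount += 1_000 if seniority
  amount
end

class AnnualPayTest < Test::Unit::TestCase
  def test_regular_pay
    assert_equal 12_000, annual_pay(base: 12_000)
  end

  # Copied from test_regular_pay, with seniority switched on.
  def test_regular_pay_with_seniority
    assert_equal 13_000, annual_pay(base: 12_000, seniority: true)
  end

  # Copied again: disabled after six months, still with seniority.
  def test_disabled_before_end_of_year_with_seniority
    assert_equal 7_000, annual_pay(base: 12_000, seniority: true, months_worked: 6)
  end

  # The same scenario without seniority.
  def test_disabled_before_end_of_year_without_seniority
    assert_equal 6_000, annual_pay(base: 12_000, months_worked: 6)
  end
end
```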

I hope I have given you a feel for writing tests. I can say a lot more on this topic, but that would obscure the key message. Build a good bug detector and run it frequently. It is a wonderful tool for any development and is a precondition for refactoring.