In corpus linguistics, categorizing or tagging text into word classes or lexical categories is considered the second step in the NLP pipeline, after tokenization. We all studied parts of speech in elementary school, where we were introduced to nouns, pronouns, verbs, adjectives, and their roles in English grammar. These word classes are not just the pillars of grammar; they are also pivotal in many language processing tasks. The process of categorizing and labeling words with their parts of speech is known as part-of-speech (POS) tagging, or simply tagging.

The goal of this chapter is to equip you with the tools and the associated knowledge about different tagging, chunking, and entailment approaches and their usage in natural language processing.

Earlier chapters focused on basic text processing; this chapter builds on those concepts to explain different approaches to tagging text with lexical categories, chunking methods, statistical analysis of corpus data, and textual entailment.

In this chapter, we will cover the following topics:

- Parts of speech tagging

- Hidden Markov Models for POS tagging

- Collocation and contingency tables

- Feature extraction

- Log-linear models

- Textual entailment

In text mining, we tend to view free text as a bag of tokens (words or n-grams). This approach is quite useful for quantitative analysis, search, and information retrieval. However, a bag of tokens discards much of the information contained in free text, such as sentence structure, word order, and context. These are some of the properties of natural language that humans use to interpret the meaning of a text. NLP is a field focused on understanding free text: it attempts to understand a document as completely as a human reader would.
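A tiny illustration of what a bag of tokens throws away: the two sentences below mean opposite things, yet their token counts are identical. This is a minimal base R sketch; the helper `bag_of_tokens()` is hypothetical and simply splits on whitespace and counts tokens with `table()`.

```r
# Two sentences with opposite meanings built from the same words
s1 <- "the dog bites the man"
s2 <- "the man bites the dog"

# Hypothetical helper: split on whitespace and count each token.
# table() sorts its names, so the result ignores word order by design.
bag_of_tokens <- function(text) {
  tokens <- strsplit(text, "\\s+")[[1]]
  counts <- table(tokens)
  setNames(as.integer(counts), names(counts))
}

b1 <- bag_of_tokens(s1)
b2 <- bag_of_tokens(s2)
print(b1)
identical(b1, b2)  # the two bags are indistinguishable
```

Any model that only sees `b1` and `b2` cannot tell who bit whom, which is exactly the structural information that tagging and parsing try to recover.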

POS tagging is a prerequisite for, and one of the most important steps in, text analysis. POS tagging annotates each word with the right POS tag based on the context in which it appears: a POS tagger categorizes words according to their role in the sentence and the order in which they appear, so the same word can receive different tags in different sentences. POS tags therefore carry information about a word's grammatical function, which in turn helps pin down its meaning. Taggers use a set of basic categories; some basic tags are noun, verb, adjective, number, and proper noun. POS tagging is also important for information extraction and sentiment analysis.
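To make the context dependence concrete, the following sketch stores two hand-tagged sentences as word/tag rows and looks up the tags assigned to "book". The tags were assigned by hand using Penn Treebank conventions for illustration; they are not output from openNLP or any other tagger.

```r
# Hand-assigned Penn Treebank tags for illustration (not tagger output)
tagged <- data.frame(
  sentence = c(1, 1, 1, 1, 1, 1, 2, 2, 2, 2),
  word     = c("I", "want", "to", "book", "a", "flight",
               "I", "bought", "a", "book"),
  tag      = c("PRP", "VBP", "TO", "VB", "DT", "NN",
               "PRP", "VBD", "DT", "NN"),
  stringsAsFactors = FALSE
)

# The same token, "book", carries a different tag in each sentence
book_rows <- tagged[tagged$word == "book", c("sentence", "tag")]
print(book_rows)
```

In the first sentence "book" is a verb (VB); in the second it is a noun (NN). A tagger has to make this decision from the surrounding words.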

Let us see how to tag text with the R openNLP package.

The part-of-speech tagger tags each token with its corresponding part of speech, using lexical statistics, context, meaning, and the token's position relative to adjacent tokens. The same token may receive different syntactic labels depending on the context. Some tokens may also be labeled with the X tag (in the Universal POS tag set), which is used for tokens that do not fit any other category, such as abbreviations or misspelled words. POS tagging helps a great deal in resolving lexical ambiguity. The R openNLP package provides POS tagging functions based on maximum entropy models:

```r
library("NLP")
library("openNLP")
library("openNLPdata")
```

Let's take a simple and short text as shown in the following code:

```r
s <- "Pierre Vinken , 61 years old , will join the board as a nonexecutive director Nov. 29 . Mr. Vinken is chairman of Elsevier N.V. , the Dutch publishing group ."
# Convert to an NLP String object so it can later be indexed by annotations
str <- as.String(s)
```

First, we annotate the sentences using the function Maxent_Sent_Token_Annotator(). Different models can be used for different languages; the default is language = "en", which uses the default English model shipped with openNLPdata, en-sent.bin:

```r
# Sentence detector; probs = TRUE adds a probability for each detected sentence
sentAnnotator <- Maxent_Sent_Token_Annotator(language = "en", probs = TRUE, model = NULL)
```

The model argument is a character string giving the path to the Maxent model file to use; NULL means that the default model file for the given language is used.

You can explore the available model files at:

http://opennlp.sourceforge.net/models-1.5/

```r
annotated_sentence <- annotate(s, sentAnnotator)
annotated_sentence
```

The output of the preceding code is shown in the following screenshot. Let's look at how to interpret it:

- The id column simply numbers the detected sentences

- The start column gives the character offset at which the sentence starts

- The end column gives the character offset at which the sentence ends

- The features column gives the confidence level, or probability, of each detected sentence

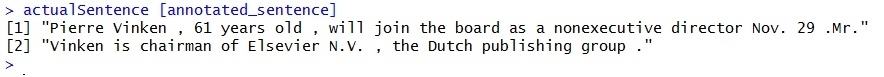

To map the annotations back to the actual text, that is, to extract the sentences themselves, we can index the String object with the sentence annotation, as shown in the following code:

```r
actualSentence <- str
# Subsetting a String by an annotation returns the annotated spans of text
actualSentence[annotated_sentence]
```
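Conceptually, indexing the text by a sentence annotation amounts to taking the substrings between each start and end offset. The base R sketch below assumes a short hypothetical text with hand-computed offsets and uses `substring()` in place of the NLP package's String indexing. For contrast, it also shows that a naive split after every period breaks on the abbreviation "Mr.", which is one reason a trained sentence detector is used.

```r
# Hypothetical text with two sentences
txt <- "Mr. Vinken is chairman. He is 61 years old."

# Hand-computed 1-based, inclusive character offsets, mirroring the
# id/start/end columns of a sentence annotation
spans <- data.frame(id = 1:2, type = "sentence",
                    start = c(1L, 25L), end = c(23L, 43L))

# Extract each sentence from its offsets
sentences <- substring(txt, spans$start, spans$end)
print(sentences)

# Naive alternative: split after every period followed by whitespace.
# This wrongly splits off "Mr." as a sentence of its own.
naive <- strsplit(txt, "(?<=\\.)\\s+", perl = TRUE)[[1]]
print(naive)
```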

Once we have annotated the sentences, we can move on to annotating the words. We annotate each word by passing the sentence annotation to the word annotator Maxent_Word_Token_Annotator(), as shown in the following code. Again, different models can be used for different languages; the default language is en, which uses the model shipped with openNLPdata, en-token.bin:

```r
wordAnnotator <- Maxent_Word_Token_Annotator(language = "en", probs = TRUE, model = NULL)
# The word annotator needs the existing sentence annotation as its third argument
annotated_word <- annotate(s, wordAnnotator, annotated_sentence)
```

We have to pass the sentence annotation to the word annotator. If the sentence annotator has not been run, that is, if the sentences are not annotated, the word annotator will produce an error, as shown in the following screenshot:

```r
annotated_word
head(annotated_word)
```

Let's look at the output shown here and understand how to interpret it:

- The id column numbers the detected sentences and words

- The start column gives the character offset at which the word starts

- The end column gives the character offset at which the word ends

- The features column gives the confidence level, or probability, of each detected word
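The start/end convention for words can be sketched the same way. The example below is a simplified stand-in: it tokenizes on whitespace with `gregexpr()` rather than using the Maxent word model, which only works because the hypothetical input is already space-separated, like the Penn Treebank-style sample text in this section.

```r
# Hypothetical, already space-separated input (Penn Treebank style)
txt <- "Mr. Vinken is chairman ."

# Find every run of non-space characters; gregexpr() returns the 1-based
# start positions, with the match lengths in the "match.length" attribute
m <- gregexpr("\\S+", txt)[[1]]

word_spans <- data.frame(
  id    = seq_along(m),
  type  = "word",
  start = as.integer(m),
  end   = as.integer(m) + as.integer(attr(m, "match.length")) - 1L
)
word_spans$token <- substring(txt, word_spans$start, word_spans$end)
print(word_spans)
```

On raw, untokenized text, a whitespace split would leave punctuation attached to words (for example "chairman."), which is the kind of decision the trained word tokenizer makes for us.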

Now we are going to POS-tag the sentence to which we have applied both the sentence and word annotators.

Again, different models can be used for different languages. The default is en, which uses the model shipped with openNLPdata, en-pos-maxent.bin.

If needed, we can load a different model as shown in the following code:

```r
# Install the additional English models (including the perceptron POS model)
install.packages("openNLPmodels.en", repos = "http://datacube.wu.ac.at/", type = "source")
library("openNLPmodels.en")

# Load the perceptron-based POS model instead of the default maxent model
pos_token_annotator_model <- Maxent_POS_Tag_Annotator(language = "en", probs = TRUE,
    model = system.file("models", "en-pos-perceptron.bin", package = "openNLPmodels.en"))
```

For this example, we will go ahead with the default model. First, the POS model must be loaded into memory from disk or another source:

```r
pos_tag_annotator <- Maxent_POS_Tag_Annotator(language = "en", probs = TRUE, model = NULL)
pos_tag_annotator
```

The POS tagger is now ready to tag data. It expects tokenized input, so we pass the text together with the annotation that already holds the sentence and word tokens:

```r
posTaggedSentence <- annotate(s, pos_tag_annotator, annotated_word)
posTaggedSentence
head(posTaggedSentence)
```

From the following screenshot, we can see that each word carries one part-of-speech tag, the start and end indices of the word, and the confidence score of each tag:

Let's concentrate on the distribution of POS tags for word tokens:

```r
# Keep only the word annotations (drop the sentence annotations)
posTaggedWords <- subset(posTaggedSentence, type == "word")
```

Extract only the POS tags from the features of the word annotations:

```r
tags <- sapply(posTaggedWords$features, `[[`, "POS")
tags
```
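Passing the extraction function `[[` to `sapply()` works because each annotation's features are stored as a named list. The following sketch imitates that layout with a plain data frame holding a list column; the data frame `ann` is a hypothetical stand-in, not the NLP package's Annotation class, and its tags were assigned by hand.

```r
# Hypothetical stand-in for a merged annotation: one row per span
ann <- data.frame(
  id    = 1:4,
  type  = c("sentence", "word", "word", "word"),
  start = c(1L, 1L, 5L, 12L),
  end   = c(24L, 3L, 10L, 13L),
  stringsAsFactors = FALSE
)
# List column: each span carries its own named list of features
# (empty for the sentence here; word tags assigned by hand, not by a model)
ann$features <- list(
  list(),
  list(POS = "NNP"),
  list(POS = "NNP"),
  list(POS = "VBZ")
)

# Same two steps as in the text: keep word rows, then pull "POS" from each list
words <- subset(ann, type == "word")
tags <- sapply(words$features, `[[`, "POS")
print(tags)
```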

```r
table(tags)
```

In the following screenshot, we can see how many times each tag occurs in the sentence, for example, how many "noun, proper, singular" and "noun, common, plural" tokens are present, and many more:

Let's extract word/POS pairs to make it more readable:

```r
sprintf("%s -- %s", str[posTaggedWords], tags)
```

This prints all the words and their tags side by side for easy reading, as shown in the following screenshot:
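The word/tag pairing and the tag counts can also be reproduced on a hand-tagged toy example, which is handy for checking formatting code without loading a model. The sketch below assumes hand-assigned Penn Treebank tags for five tokens; besides the `--` format used above, it builds the word/TAG slash format common in tagged corpora.

```r
# Hand-assigned Penn Treebank tags for a toy sentence (not model output)
words <- c("Mr.", "Vinken", "is", "chairman", ".")
tags  <- c("NNP", "NNP", "VBZ", "NN", ".")

# Side-by-side pairs, in the same "word -- tag" format as above
pairs <- sprintf("%s -- %s", words, tags)
print(pairs)

# Word/TAG slash format, one string for the whole sentence
slashed <- paste(words, tags, sep = "/", collapse = " ")
print(slashed)

# Frequency of each tag, as with table(tags) earlier
tag_counts <- table(tags)
print(tag_counts)
```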

The tag set used by the English POS model is the Penn Treebank tag set. The following is a list of tags and their descriptions:

Table reference: http://www.comp.leeds.ac.uk/amalgam/tagsets/upenn.html.

Some of the common tags are as follows:

| Number | Tag | Description |
|---|---|---|
| 1 | CC | Coordinating conjunction |
| 2 | CD | Cardinal number |
| 3 | DT | Determiner |
| 4 | EX | Existential there |
| 5 | FW | Foreign word |
| 6 | IN | Preposition or subordinating conjunction |
| 7 | JJ | Adjective |
| 12 | NN | Noun, singular or mass |
| 13 | NNS | Noun, plural |
| 14 | NNP | Proper noun, singular |
| 15 | NNPS | Proper noun, plural |
| 16 | PDT | Predeterminer |
| 17 | POS | Possessive ending |
| 18 | PRP | Personal pronoun |
| 19 | PRP$ | Possessive pronoun |
| 25 | TO | to |
| 26 | UH | Interjection |
| 27 | VB | Verb, base form |
| 28 | VBD | Verb, past tense |
| 33 | WDT | Wh-determiner |
| 34 | WP | Wh-pronoun |
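Because the table maps tags to descriptions, a named character vector is enough to translate tagger output into readable labels. The sketch below is a minimal illustration with a hypothetical vector of tags; the lookup `penn_desc` covers only tags from the table above, and the coarse noun/verb grouping by tag prefix is a simplification introduced here, not part of the Penn Treebank specification.

```r
# Lookup table built from a few rows of the table above
penn_desc <- c(
  DT  = "Determiner",
  IN  = "Preposition or subordinating conjunction",
  NN  = "Noun, singular or mass",
  NNS = "Noun, plural",
  NNP = "Proper noun, singular",
  VB  = "Verb, base form",
  VBD = "Verb, past tense"
)

# Hypothetical tagger output
tags <- c("NNP", "VBD", "DT", "NNS", "IN", "NN")

# Translate each tag to its description
descriptions <- unname(penn_desc[tags])
print(descriptions)

# Simplified coarse grouping by tag prefix (an illustration only)
coarse <- ifelse(startsWith(tags, "NN"), "noun",
                 ifelse(startsWith(tags, "VB"), "verb", "other"))
print(coarse)
```

Tags missing from `penn_desc` would come back as `NA`, so a real lookup should cover the full tag set.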

We can use a tag dictionary, which specifies the tags each token can have. Using a tag dictionary has two advantages: first, inappropriate tags cannot be assigned to tokens in the dictionary, and second, the beam search algorithm has to consider fewer possibilities and can therefore search faster. Various pre-trained POS models for OpenNLP can be found at: