Performance considerations for managing distributed workloads

In this chapter, we provide an overview of the specific performance considerations for managing workloads that originate from distributed platforms. We limit the concept of distributed workloads to the direct interfaces between distributed platforms and IMS, with an emphasis on online transactions and database access through the IMS Open Database architecture.

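The chapter contains the following topics:

•7.1, “General considerations”

•7.2, “TCP/IP connectivity through IMS Connect”

•7.3, “Open Database”

•7.4, “Synchronous callout”

•7.5, “Identifying and managing performance blind spots”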

7.1 General considerations

Real-time connectivity between IMS and distributed platforms is more critical today than at any other time in the history of IMS. This connectivity presents a number of challenges:

•How do you account for the difference between the business conception of a transaction and the IMS-centric view of a transaction?

•How do you monitor performance in a way that is meaningful to the business?

•How do you help application developers on distributed platforms optimize their applications for performance?

There are also concerns at the organizational level:

•How do you triage and assign problems that can exist across multiple stakeholders?

•How do you create meaningful test and production checks for performance?

•How do you communicate performance issues (and successes) to parts of your organization that are not familiar with IMS?

An effective performance management strategy accounts for these challenges by understanding the types of workloads that must be handled and how to monitor the various components that provide connectivity to IMS. One of these core components is IMS Connect, which provides direct TCP/IP connectivity to IMS transactions and data.

7.2 TCP/IP connectivity through IMS Connect

IMS Connect provides a TCP/IP gateway to IMS transactions (through OTMA) and to IMS databases (through the IMS Open Database Manager). Because Open Database access is discussed in 7.3, “Open Database”, this section covers only transactional requests.

In this context, IMS Connect connects TCP/IP clients to IMS applications. It provides a simple pathway for you to create non-SNA (and often non-mainframe) applications that connect to IMS applications, and it is the pathway that other products, such as WebSphere Application Server and the IMS SOAP Gateway, use to connect to IMS.

IMS Connect clients communicate with IMS Connect by using a protocol that is specific to IMS Connect: the IMS Request Message (IRM) protocol. IMS Connect then uses message exits to perform the following functions:

•Convert IRM requests to OTMA.

•Convert IMS Transaction Resource Adapter (ITRA) requests to OTMA. These are requests originating from Java clients running under WebSphere.

•Translate between EBCDIC and ASCII if required.

•Provide user authentication and authorization.
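To make the ASCII/EBCDIC translation step concrete, the following minimal Python sketch shows how the same transaction code has different byte values on each side. It assumes EBCDIC code page 037 (Python's built-in `cp037` codec). The code page that a real IMS Connect exit uses depends on the exit and the installation, and the helper names `to_ebcdic` and `from_ebcdic` are hypothetical.

```python
def to_ebcdic(text: str, codepage: str = "cp037") -> bytes:
    """Encode client text as EBCDIC bytes (code page 037 assumed)."""
    return text.encode(codepage)


def from_ebcdic(data: bytes, codepage: str = "cp037") -> str:
    """Decode EBCDIC bytes coming back from IMS into text."""
    return data.decode(codepage)


trancode = "IMSTRANS"
ascii_bytes = trancode.encode("ascii")
ebcdic_bytes = to_ebcdic(trancode)

# Same characters, different byte values: 'I' is 0x49 in ASCII but 0xC9 in EBCDIC.
print(ascii_bytes.hex())   # 494d535452414e53
print(ebcdic_bytes.hex())  # c9d4e2e3d9c1d5e2

# The round trip must be lossless for the payload to survive translation.
assert from_ebcdic(ebcdic_bytes) == trancode
```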

In addition to these functions, IMS Connect can be customized to provide site-specific functions such as additional security services, routing, and handling of proprietary payload formats.

7.2.1 Possible performance benefits of IMS Connect

IMS Connect can help you reduce network management costs by using the more widely available TCP/IP infrastructure and skill sets. It can also simplify your enterprise architecture by providing a more direct means of connecting to IMS.

In the broader context of the IMS Connect ecosystem, many IMS users find that migrating to IMS Connect provides significantly improved transaction performance, more predictable scaling, and greater levels of availability.

This chapter explores ways in which these customers achieved these outcomes.

7.2.2 Routing messages to improve performance

The IMS Connect architecture provides several opportunities to use parallelism to improve transaction performance, throughput, and reliability. You can increase the number of the following items:

•Ports that service a given IMS Connect instance

•IMS Connect instances that serve a given IMS

•TCBs between a given IMS Connect and IMS

The actual benefits (if any) of these approaches depend on factors such as the use of security, the type of message payload, the dominant programming model, and other resource considerations. Ultimately, choosing an approach is a matter of experimentation and monitoring.

Increasing the number of IMS Connect instances

Increasing the number of IMS Connect instances makes it easier to provide additional parallel pathways into OTMA. You can use a product such as Sysplex Distributor to distribute incoming TCP/IP session requests across the IMS Connect instances.

The disadvantages of multiple IMS Connect instances are the increased management overhead and the resources used by the additional address spaces.

Because IMS Connect processing itself typically uses little CPU, you might be able to achieve similar benefits by using multiple OTMA TCBs (see “Increasing the number of OTMA TCBs”).

Increasing the number of OTMA TCBs

Each message that arrives at IMS Connect specifies a target data store, which maps to an XCF TMEMBER (that is, an IMS system). If OTMA is a bottleneck, this part of transaction processing offers room for improving transaction performance.

To improve parallelism here, you can create multiple data store definitions that each point to the same XCF TMEMBER, and then distribute messages between these data stores by using any of the following approaches:

•Client side: Implement a battery of target data stores on the client, each of which maps to the same underlying IMS instance. The client rotates through these target data store values across its input messages.

•Customized user message exit: Customize the IMS Connect message exit to map a single logical data store into multiple target data stores.

•Available tool: Use an IMS Tool such as IMS Connect Extensions for z/OS to define routing rules that spread messages across the data stores.
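Whichever approach you use, the core logic maps one logical data store ID onto several physical data store IDs that all point to the same IMS. The following Python sketch illustrates that mapping with simple round-robin rotation. The data store names (`IMSA`, `IMSA1` to `IMSA3`) are hypothetical, and a real IMS Connect message exit or IMS Connect Extensions for z/OS would implement this differently.

```python
from itertools import cycle


class DatastoreRouter:
    """Spread one logical data store over several physical data stores.

    All physical data stores are assumed to map to the same XCF TMEMBER
    (the same IMS), so they differ only in the OTMA TCB that serves them.
    """

    def __init__(self, mapping: dict[str, list[str]]):
        # One independent round-robin iterator per logical data store.
        self._rotors = {logical: cycle(physical) for logical, physical in mapping.items()}

    def route(self, logical_id: str) -> str:
        rotor = self._rotors.get(logical_id)
        if rotor is None:
            # IDs that have no mapping pass through unchanged.
            return logical_id
        return next(rotor)


router = DatastoreRouter({"IMSA": ["IMSA1", "IMSA2", "IMSA3"]})
print([router.route("IMSA") for _ in range(4)])  # ['IMSA1', 'IMSA2', 'IMSA3', 'IMSA1']
print(router.route("IMSB"))                      # IMSB
```

Passing unmapped IDs through unchanged is a design choice in this sketch. It lets clients that already target physical data stores keep working.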

The main advantage of the first two approaches is that no additional tooling is necessary. The disadvantage is that such schemes are hard to maintain over the long term. With client-side batteries in particular, any change to the list of data stores must be rolled out to every client and requires coordination between the distributed and IMS teams. It can also introduce intermittent errors.

Of these two approaches, customizing the message exit is better. The exit is easier to manage centrally, and it lets distributed clients keep a simple programming model.

To customize the exits, you can use two tables that are available to IMS Connect message exits:

•The INIT TABLE, which allows user data to be stored

•The DATASTORE TABLE, which contains data store IDs, statuses, and, optionally, user data.

You can then customize the user initialization exit routine (HWSUINIT) to load the tables, obtain the needed storage, and add any user data that your routing scheme requires. Modifying the message exit is more difficult if transaction responses must be acknowledged. In that case, the exit must remember how each transaction was routed so that the acknowledgement is sent to the same data store. Using commit mode 1, sync level NONE transactions does not completely remove this requirement, because the remote client might still need to acknowledge some IMS messages that it receives.
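The "remember the original routing" requirement can be illustrated with a small Python sketch. It rotates transactions across data stores but records which data store each client's transaction went to, so that the client's acknowledgement follows the same path. Keying the table by client ID, and assuming one outstanding transaction per client, are assumptions of this sketch. All names are hypothetical.

```python
from itertools import cycle


class AffinityRouter:
    """Round-robin routing that remembers where each transaction went.

    When the client later acknowledges a response, the acknowledgement
    must go to the same data store that handled the original transaction.
    """

    def __init__(self, physical: list[str]):
        self._rotor = cycle(physical)
        # Hypothetical client ID -> data store used for its outstanding transaction.
        self._pending: dict[str, str] = {}

    def route_transaction(self, client_id: str) -> str:
        datastore = next(self._rotor)
        self._pending[client_id] = datastore
        return datastore

    def route_ack(self, client_id: str) -> str:
        # Return the remembered data store and forget the entry.
        # A KeyError means an ACK arrived with no outstanding transaction.
        return self._pending.pop(client_id)


r = AffinityRouter(["IMSA1", "IMSA2"])
first = r.route_transaction("CLIENT01")   # IMSA1
second = r.route_transaction("CLIENT02")  # IMSA2
print(r.route_ack("CLIENT01"), r.route_ack("CLIENT02"))  # IMSA1 IMSA2
```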

Alternatively, you can use a tool such as IMS Connect Extensions for z/OS. The advantage of this tool is that routing definitions are simple to create and easy to change, including in real time.

As shown in Figure 7-1, with IMS Connect Extensions for z/OS, you can do the following tasks:

•Map a single DESTID value into multiple values, each mapping to a separate TCB.

•Provide a degree of redundancy. If an IMS system fails, all of the data stores that map to it also become unavailable. IMS Connect Extensions for z/OS can detect this condition and fall back to a data store on a remote system.
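The fallback behavior described above can be sketched in Python as follows. A data store is skipped when it is inactive, and the remote list is used only when no local data store is active. The data structure, the first-active selection policy, and the names are assumptions for illustration. They do not describe how IMS Connect Extensions for z/OS is implemented.

```python
from dataclasses import dataclass


@dataclass
class Datastore:
    name: str
    ims: str              # the IMS system (XCF TMEMBER) this data store maps to
    active: bool = True


def pick_datastore(local: list[Datastore], fallback: list[Datastore]) -> Datastore:
    """Return the first active local data store, else the first active fallback."""
    for ds in local:
        if ds.active:
            return ds
    for ds in fallback:
        if ds.active:
            return ds
    raise RuntimeError("no active data store available")


local = [Datastore("IMSA1", "IMSA"), Datastore("IMSA2", "IMSA")]
remote = [Datastore("IMSB1", "IMSB")]

# When IMSA fails, every data store that maps to it becomes unavailable.
for ds in local:
    ds.active = False
print(pick_datastore(local, remote).name)  # IMSB1
```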

Figure 7-1 Parallelism can help improve OTMA performance

Figure 7-2 shows how to define this configuration in IMS Connect Extensions for z/OS.

Figure 7-2 Specifying routing rules to improve parallelism

7.2.3 Recording IMS Connect events

Recording IMS Connect activity adds CPU and I/O overhead. The extent of the overhead depends on site-specific factors and is difficult to predict. Event collection can become a significant proportion of IMS Connect CPU utilization, but it is typically only an incidental factor in the overall resource usage of the transaction.

In practice, many customers find that the benefits of event collection for reporting and problem determination outweigh its cost in CPU utilization. However, this recording overhead is an additional reason to minimize the number of IMS Connect systems that process messages.

7.2.4 Real-time capacity management

Another aspect of managing TCP/IP performance is to let the capacity of the IMS systems dictate how IMS Connect distributes messages. This approach helps tune performance, improve availability, and optimize resource utilization.

Consider the following scenarios:

•Send the majority of messages to the local IMS system to improve performance and availability but route some messages to a remote IMS to use its spare capacity.

•Send the majority of messages to the LPAR with the greatest capacity.

•Redirect messages from the default IMS to another IMS, because the performance of the default IMS is degraded or because you want to stop it for maintenance.

These kinds of scenarios can be implemented through more sophisticated routing schemes (those that account for system capacity). By using IMS Connect Extensions for z/OS, you can add a capacity weight to each target data store. The capacity weight dictates the probability that IMS Connect Extensions for z/OS will pick a particular data store relative to other data stores.

A key advantage of using IMS Connect Extensions for z/OS is that you can change these capacity weights in real time.
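Capacity-weighted routing can be illustrated with weighted random selection. The following Python sketch assumes that a data store's chance of being picked is its weight divided by the sum of all weights, and that a weight of 0 takes the data store out of rotation. These are assumptions about the general technique, not a description of IMS Connect Extensions for z/OS internals. The data store names and weights are hypothetical.

```python
import random
from collections import Counter


def pick_weighted(weights: dict[str, int], rng: random.Random) -> str:
    """Choose a data store with probability proportional to its capacity weight.

    A weight of 0 removes a data store from selection (for example, while
    its IMS is stopped for maintenance).
    """
    eligible = {name: w for name, w in weights.items() if w > 0}
    if not eligible:
        raise ValueError("all capacity weights are zero")
    names = list(eligible)
    return rng.choices(names, weights=[eligible[n] for n in names], k=1)[0]


weights = {"IMSA": 3, "IMSB": 1, "IMSC": 0}   # hypothetical weights
rng = random.Random(42)                        # seeded for a repeatable demo
counts = Counter(pick_weighted(weights, rng) for _ in range(10_000))
print(counts)  # roughly 3:1 between IMSA and IMSB; IMSC is never chosen
```

Because the weights are read on every call, changing an entry in the `weights` dictionary takes effect for the next message. This loosely mirrors the real-time weight changes described above.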

7.2.5 Message expiration and its impact on performance

For IMS Connect clients that access IMS TM, you can specify timeout values in the TCP/IP and DATASTORE configuration statements, and also in the IRM header of input messages.

On the TCP/IP configuration statement, the TIMEOUT keyword controls two aspects of communication wait time:

•How long IMS Connect keeps a connection open if the client does not send any input after the connection is first established

•For input messages that do not specify a timeout interval in the IRM, how long IMS Connect waits for a response from IMS before notifying the client of the timeout and returning the socket connection to a RECV state

IMS Connect can also adjust the expiration time for IMS transactions to match the timeout value of the socket connection on which the transaction is submitted.

If an expiration time is specified in IMS, transactions can expire and be discarded if IMS does not process them before the expiration time is exceeded. The expiration time is set in the IMS definition of the transaction by the EXPRTIME parameter on either the TRANSACT stage-1 system definition macro or either of the dynamic resource definition type-2 commands, CREATE TRAN or UPDATE TRAN. A transaction expiration time set by IMS Connect overrides any transaction expiration time specified in the definition of the transaction in IMS.

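Setting the expiration time to match the socket timeout is generally preferred. With this setting, IMS does not spend resources processing transactions whose clients have already given up waiting.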
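The precedence rules in this section can be summarized in a short Python sketch. A timeout in the IRM takes precedence over the TCP/IP TIMEOUT value. When IMS Connect matches the transaction expiration to the socket timeout, that value overrides EXPRTIME. The function name and the use of plain seconds are simplifying assumptions, because the real keywords have their own units and encodings. The sketch also ignores the second role of TIMEOUT, which covers an idle new connection.

```python
from typing import Optional


def effective_timeouts(tcpip_timeout: float,
                       irm_timeout: Optional[float],
                       exprtime: Optional[float],
                       match_expiration: bool) -> tuple[float, Optional[float]]:
    """Return (socket wait time, transaction expiration time), in seconds.

    - A timeout specified in the IRM takes precedence over TCPIP TIMEOUT.
    - If IMS Connect matches expiration to the socket timeout, that value
      overrides any EXPRTIME from the IMS transaction definition.
    """
    socket_wait = irm_timeout if irm_timeout is not None else tcpip_timeout
    expiration = socket_wait if match_expiration else exprtime
    return socket_wait, expiration


print(effective_timeouts(60.0, 10.0, 120.0, True))   # (10.0, 10.0)
print(effective_timeouts(60.0, None, 120.0, False))  # (60.0, 120.0)
```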

Choosing the timeout value itself might require some tuning:

•A timeout value that is too short adds the overhead of clients needlessly reconnecting.

•A timeout value that is too long needlessly holds resources for transactions that are unlikely to complete.

Depending on the nature of the workload, either error can have a significant impact on performance.

We prefer setting the timeout value in the IRM, because it gives more flexibility to change the setting over time and to vary it by connecting client. Alternatively, you can use IMS Connect Extensions for z/OS to set these values for you. The benefits are that you do not need to modify clients and that you can adjust these settings dynamically. Figure 7-3 shows these settings.

Figure 7-3 Setting transaction timer options and activating transaction expiration

7.2.6 User ID caching

With IMS 12, you can cache user IDs and passwords. Caching helps improve performance and reduce processing overhead if you perform user ID validation in IMS Connect. When caching is active, IMS Connect caches user credentials and does not need to call IBM RACF® to re-authenticate the same user.

You activate user ID caching in the HWSCFGxx member by using the RACF, UIDCACHE, and UIDAGE parameters.

While IMS Connect is running, you can issue a type-2 command to refresh the details of a specific user:

UPDATE IMSCON TYPE(RACFUID) NAME(userid) OPTION(REFRESH)

You can also enable or disable the cache while IMS Connect is running by issuing the following type-2 command:

UPDATE IMSCON TYPE(CONFIG) SET(UIDCACHE(ON | OFF))
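The following Python sketch shows the general idea of an aged credential cache. A successful check is remembered for a maximum age, similar in spirit to UIDAGE, and the expensive external authentication, which RACF performs for IMS Connect, runs only on a miss, an expired entry, or after a refresh. The class, its parameters, and the treatment of the age as seconds are assumptions for illustration and do not reproduce IMS Connect behavior. The clock can be injected so that the aging can be tested.

```python
import hashlib
import hmac
import time
from typing import Callable


def _digest(password: str) -> bytes:
    # Cache only a hash, never the password itself (a production cache
    # would use a salted, deliberately slow hash).
    return hashlib.sha256(password.encode("utf-8")).digest()


class CredentialCache:
    """Cache successful user ID checks for at most `max_age` seconds."""

    def __init__(self, authenticate: Callable[[str, str], bool], max_age: float,
                 clock: Callable[[], float] = time.monotonic):
        self._authenticate = authenticate   # stands in for the external (RACF) call
        self._max_age = max_age
        self._clock = clock
        self._entries: dict[str, tuple[bytes, float]] = {}  # user -> (hash, cached at)
        self.enabled = True                 # loosely analogous to UIDCACHE(ON | OFF)

    def check(self, user: str, password: str) -> bool:
        now = self._clock()
        entry = self._entries.get(user)
        if self.enabled and entry is not None:
            cached_hash, cached_at = entry
            if hmac.compare_digest(cached_hash, _digest(password)) and now - cached_at < self._max_age:
                return True                 # served from cache, no external call
        ok = self._authenticate(user, password)
        if ok and self.enabled:
            self._entries[user] = (_digest(password), now)
        return ok

    def refresh(self, user: str) -> None:
        # Loosely analogous to UPDATE IMSCON TYPE(RACFUID) NAME(userid) OPTION(REFRESH).
        self._entries.pop(user, None)
```

For example, two successive `check("USER1", "secret")` calls within the age limit invoke the `authenticate` callable only once. A call after the age expires, or after `refresh("USER1")`, invokes it again.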

If you have IMS Connect Extensions for z/OS, you can cache user IDs with any supported IMS version. In this case, use only IMS Connect Extensions for z/OS for user validation. That is, if you activate IMS Connect Extensions for z/OS security, disable IMS Connect validation.

If you are using IMS 12, the main advantage of IMS Connect Extensions for z/OS is that it provides additional security options, such as validating the port and IP address that a client uses to connect to a given IMS Connect instance.

7.2.7 Specifying NODELAYACK

A delayed TCP acknowledgement (ACK delay) can contribute significantly to total end-to-end response time. The effect is particularly important for clients that issue multiple writes for a single message, because a later write can wait until the earlier one is acknowledged.

Figure 7-4 demonstrates the issue. It shows the delay that is introduced for an IMS Connect input message when the TCP/IP ACK delay setting is left at its default. In the figure, port 7771 uses the default ACK delay of approximately 250 ms, whereas port 7772 specifies NODELAYACK.

Figure 7-4 The effect of ACK delay on overall IMS Connect performance

Figure 7-5 shows a report that is produced by IMS Performance Analyzer for z/OS from the IMS Connect Extensions for z/OS journal event records. This example of the IMS Connect trace report shows significant IMS Connect events and the elapsed time delta between them. The first event for an IMS Connect transaction is normally the READ PREPARE event. This trace is for port 7771, and it shows a time delta of 265 ms between the READ PREPARE and READ SOCKET events. This time is close to the ACK DELAY default of 250 ms.

Because this transaction is a SYNCLEVEL=CONFIRM transaction, the ACK delay also affects the client acknowledgement. The time delta between the first and second READ SOCKET events, where the second reads the acknowledgement, is 265 ms. Again, this number is close to the ACK DELAY default.

Figure 7-5 Report demonstrating slow performance from ACK delay

Figure 7-6 shows the same IMS Performance Analyzer for z/OS report, but for port 7772, which was defined with NODELAYACK. The elapsed time between the READ PREPARE and READ SOCKET events is now less than 1 ms. Similarly, the time to read the client's ACK is close to 1 ms.
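The check that the reports above support can be automated. The following Python sketch measures the gap from READ PREPARE to the next READ SOCKET event on each port and flags gaps close to the roughly 250 ms ACK delay default. The trace records are hypothetical values chosen to mirror the 265 ms and sub-millisecond deltas described above. The 80% threshold is an arbitrary heuristic, not an IBM recommendation.

```python
from datetime import datetime, timedelta

# Hypothetical trace records: (port, event name, timestamp).
TRACE = [
    (7771, "READ PREPARE", "13:57:52.100000"),
    (7771, "READ SOCKET",  "13:57:52.365000"),
    (7772, "READ PREPARE", "13:57:53.100000"),
    (7772, "READ SOCKET",  "13:57:53.100500"),
]

ACK_DELAY_MS = 250  # approximate default ACK delay cited in the text


def prepare_to_read_ms(trace, port):
    """Milliseconds from READ PREPARE to the next READ SOCKET on a port."""
    start = None
    for p, event, stamp in trace:
        if p != port:
            continue
        t = datetime.strptime(stamp, "%H:%M:%S.%f")
        if event == "READ PREPARE":
            start = t
        elif event == "READ SOCKET" and start is not None:
            return (t - start) / timedelta(milliseconds=1)
    return None


for port in (7771, 7772):
    ms = prepare_to_read_ms(TRACE, port)
    suspect = ms is not None and ms >= 0.8 * ACK_DELAY_MS
    print(port, ms, "possible ACK delay" if suspect else "ok")
```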

Figure 7-6 Report demonstrating improved performance with NODELAYACK

7.3 Open Database

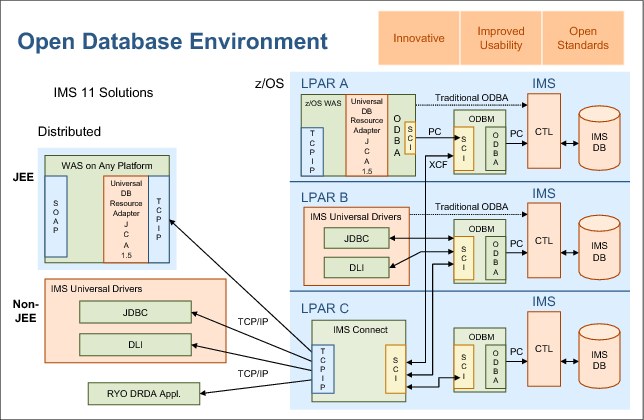

Open Database, introduced in IMS 11, is a significant innovation that greatly simplifies access to IMS data from distributed platforms. Although Open Database supports a number of architectures, including local connectivity, this section focuses solely on distributed access.

In the distributed architecture discussed here, Open Database calls involve two components. IMS Connect acts as the TCP/IP front end and as the DRDA Application Server (AS). A new address space, the Open Database Manager (ODBM), manages the requests and forwards them to IMS.

From a functional perspective, with Open Database, programmers on distributed platforms can access IMS data in a standards-based way by using either DL/I or SQL. See Figure 7-7.

Figure 7-7 Open Database distributed architecture

7.3.1 Challenges of Open Database performance management

The Open Database architecture presents a number of new performance challenges that you must address:

•Access to IMS databases by programmers who are not familiar with IMS

•DL/I call trees that emerge from SQL queries and that have unexpected performance implications

•Multiple calls for the same logical request, which cannot easily be tied together

•Performance that depends on multiple address spaces, such as ODBM and IMS Connect

7.3.2 Open Database call-flows and timings

Open Database requests are based on open standards and can therefore be analyzed by standard tools. Figure 7-8 shows a Wireshark analysis of a DRDA request to IMS. Note the calls generated by the client, and the timings for each response.

Figure 7-8 Wireshark analysis of a DRDA request

Also note the following points:

•Although Wireshark identifies each of the stages in the call flow and understands many of the code points, several code points are IMS-specific.

•Wireshark can show the DRDA flow but cannot interpret what is being requested.
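Because DRDA is an open standard, a request stream can also be walked with ordinary code. The following Python sketch assumes the basic DSS layout as we understand it from the DRDA specification. A 6-byte header contains a 2-byte length, the 0xD0 marker, a format byte, and a 2-byte request correlator. It is followed by a DDM object that begins with its own 2-byte length and 2-byte code point. The sketch handles only the simple case of one command per DSS, with no continuation or extended-length segments, and the buffers it builds are empty illustrative commands. Code points that the sketch does not know, such as IMS-specific ones, are shown only as hex, which mirrors the Wireshark limitation noted above.

```python
import struct

# A few well-known DDM request code points (not an exhaustive list).
CODE_POINTS = {0x1041: "EXCSAT", 0x106D: "ACCSEC", 0x106E: "SECCHK", 0x2001: "ACCRDB"}


def walk_dss(buffer: bytes):
    """Yield (correlator, code point name) for each DSS in a DRDA buffer."""
    offset = 0
    while offset + 10 <= len(buffer):
        length, magic, _fmt, correlator = struct.unpack_from(">HBBH", buffer, offset)
        if magic != 0xD0 or length < 10:
            raise ValueError(f"not a DSS header at offset {offset}")
        _ddm_length, code_point = struct.unpack_from(">HH", buffer, offset + 6)
        yield correlator, CODE_POINTS.get(code_point, f"0x{code_point:04X}")
        offset += length


def make_dss(correlator: int, code_point: int, payload: bytes = b"") -> bytes:
    """Build an illustrative request DSS (format byte 0x01, no chaining flags)."""
    ddm = struct.pack(">HH", 4 + len(payload), code_point) + payload
    return struct.pack(">HBBH", 6 + len(ddm), 0xD0, 0x01, correlator) + ddm


buf = make_dss(1, 0x1041) + make_dss(2, 0x106D)
print(list(walk_dss(buf)))  # [(1, 'EXCSAT'), (2, 'ACCSEC')]
```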

Open Database (DRDA) analysis using IMS Connect Extensions for z/OS data

IMS Connect Extensions for z/OS data, formatted by IMS Problem Investigator for z/OS, provides the following features:

•IMS Connect Extensions for z/OS event journaling provides a comprehensive trace of every Distributed Relational Database Architecture (DRDA) call that is issued by the application (Figure 7-9).

Figure 7-9 IMS Problem Investigator: ODBM Open Database formatting

•Provides easy-to-read formatting of all DRDA code points, for both the open standard (Figure 7-10) and for IMS (Figure 7-11).

Figure 7-10 IMS Problem Investigator: DRDA open source code-point formatting

Figure 7-11 IMS Problem Investigator: DRDA IMS code-point formatting

•Tracks application calls that are associated with a single thread to identify bottlenecks.

•Analyzes DL/I call results including I/O and feedback areas (Figure 7-12).

Figure 7-12 IMS Problem Investigator: DL/I call analysis

•Merges the IMS Connect Extensions for z/OS journal with the IMS log to see the complete end-to-end picture of the session thread of a distributed transaction (Figure 7-13).

Figure 7-13 IMS Problem Investigator: Merged IMS log and connect extension journal
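Merging the IMS Connect journal with the IMS log is, at its core, an interleaving of two time-ordered streams followed by a filter on one session thread. The following Python sketch shows that technique with `heapq.merge`. The event fields, the shared thread correlator, and the assumption that both sources use the same clock are hypothetical simplifications of what IMS Problem Investigator for z/OS does.

```python
import heapq
from typing import Iterable, NamedTuple


class Event(NamedTuple):
    timestamp: float   # seconds; both sources assumed to share one clock
    source: str        # "IMS log" or "Connect journal"
    thread: str        # hypothetical correlator shared by both sources
    name: str


def merged_thread(ims_log: Iterable[Event], journal: Iterable[Event], thread: str) -> list[Event]:
    """Interleave two time-ordered event streams and keep one session thread."""
    merged = heapq.merge(ims_log, journal, key=lambda e: e.timestamp)
    return [e for e in merged if e.thread == thread]


ims = [Event(1.002, "IMS log", "T1", "DL/I GU"), Event(1.004, "IMS log", "T2", "DL/I GU")]
cex = [Event(1.000, "Connect journal", "T1", "DRDA request received"),
       Event(1.010, "Connect journal", "T1", "DRDA response sent")]

for e in merged_thread(ims, cex, "T1"):
    print(f"{e.timestamp:.3f} {e.source:16s} {e.name}")
```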

7.4 Synchronous callout

Synchronous callout allows an IMS application to call out to a web service, receive a response, and continue processing the transaction. As a feature, synchronous callout allows IMS to participate in a service-oriented architecture (SOA).

As shown in Figure 7-14, a callout is processed by IMS Connect and passed to a web service through the IMS SOAP Gateway, WebSphere, or a custom application.

Figure 7-14 A synchronous callout allows an IMS transaction to reach web services

As shown in Figure 7-15, the call flow for a synchronous callout involves a number of steps. From a performance perspective, the RESUME TPIPE that IMS Connect uses to first initialize a connection with a web service is not significant. However, the actual callout flow can significantly affect performance.

Moreover, understanding and reporting synchronous callout performance requires information from additional points in the transaction flow. For example, if the synchronous callout takes a long time to complete, how do you eliminate IMS and IMS Connect as potential sources of the problem?

Figure 7-15 A synchronous callout consists of multiple interrelated steps

IMS Connect provides communication between IMS and the callout server. Understanding and analyzing synchronous callout requires information from both IMS and IMS Connect.
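With timestamps from both IMS and IMS Connect, a callout can be split into legs, which shows whether IMS and IMS Connect can be eliminated as the source of a delay. The following Python sketch assumes four hypothetical measurement points: the IMS application issuing the callout (the DL/I ICAL call), IMS Connect sending the request, IMS Connect receiving the reply, and the application resuming. The field names and sample values are illustrative, not real data.

```python
from dataclasses import dataclass


@dataclass
class CalloutTimes:
    """Hypothetical timestamps (seconds) for one synchronous callout."""
    ims_request: float    # IMS application issues the callout (ICAL)
    connect_send: float   # IMS Connect sends the request to the callout server
    connect_reply: float  # IMS Connect receives the server's response
    ims_resume: float     # IMS application receives the response


def breakdown(t: CalloutTimes) -> dict[str, float]:
    """Split total callout time into IMS-side, external-server, and return legs."""
    return {
        "IMS to IMS Connect": t.connect_send - t.ims_request,
        "callout server": t.connect_reply - t.connect_send,
        "IMS Connect to IMS": t.ims_resume - t.connect_reply,
    }


parts = breakdown(CalloutTimes(0.000, 0.004, 1.204, 1.207))
total = sum(parts.values())
for leg, secs in parts.items():
    print(f"{leg:20s} {secs:6.3f}s {100 * secs / total:5.1f}%")
```

If nearly all of the time falls in the "callout server" leg, as in this illustrative case, IMS and IMS Connect are unlikely to be the source of the delay.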

Using the data that is provided by IMS Connect Extensions for z/OS or your own collection in IMS, you can report on the synchronous callout performance by using IMS Performance Analyzer for z/OS.

Figure 7-16 shows an IMS Performance Analyzer for z/OS report. The synchronous callout response time accounts for a large percentage of the overall processing time, which indicates a delay in the callout.

Figure 7-16 Report showing synchronous callout performance

Although the previous report shows the synchronous callout characteristics, performance tuning requires a deeper investigation into the callout.

As Figure 7-17 shows, you can use IMS Problem Investigator for z/OS to display the flow of a single synchronous callout, and use an elapsed time view to identify timing delays in the lifecycle of the synchronous callout request.

Figure 7-17 Synchronous callout event flow

7.5 Identifying and managing performance blind spots

The scenarios and issues raised in this chapter share a common theme: the more complicated a transaction is, the more likely it is to involve areas for which you have limited performance information. Traditional IMS performance reporting centers on the time between when IMS receives the input message and when IMS puts the reply on the output queue. This view can be insufficient, because in many scenarios the client experiences significant performance problems even though input-to-output queue processing in IMS is optimal. See Figure 7-18.

Figure 7-18 Distributed workloads change the perspective of performance problems

You can often locate a performance issue by eliminating the alternative explanations for which you do have performance information.

For example, in Figure 7-19, IMS response times are fast and IMS Connect response times are slow. However, IMS Connect processing before the message enters OTMA, and after it leaves, is still fast. Therefore, OTMA is the source of the problem.

Although the report contains no direct measurement of OTMA performance, OTMA performance can be deduced from the measurements at the transaction end points. Without IMS Connect information, however, diagnosing the performance problem and tracing it to its source becomes more difficult.

Figure 7-19 shows that OTMA is responsible for the majority of processing time.

IMS Performance Analyzer, Combined tran list (LIST0001). Printed at 19:33:38 12Dec2007; data from 13.57.52 12Dec2007. Elapsed times are in seconds.

| CON Tran Start | Trancode | OTMA | CON Resp Time | PreOTMA Time | OTMAproc Time | IMS Tran Start | InputQ Time | Process Time | Total IMS Time | PostOTMA Time |
|---|---|---|---|---|---|---|---|---|---|---|
| 13.57.52.714 | IMSTRANS | CONNECT | 1.810 | 0.000 | 1.803 | 13.57.54.517 | 0.000 | 0.001 | 0.001 | 0.006 |
| 13.57.52.964 | IMSTRANS | CONNECT | 1.575 | 0.000 | 1.574 | 13.57.54.538 | 0.000 | 0.001 | 0.001 | 0.000 |
| 13.57.52.972 | IMSTRANS | CONNECT | 1.588 | 0.000 | 1.588 | 13.57.54.548 | 0.009 | 0.002 | 0.011 | 0.000 |
| 13.57.53.091 | IMSTRANS | CONNECT | 1.716 | 0.002 | 1.714 | 13.57.54.806 | 0.000 | 0.001 | 0.001 | 0.000 |
| 13.57.53.567 | IMSTRANS | CONNECT | 1.839 | 0.000 | 1.839 | 13.57.55.403 | 0.000 | 0.002 | 0.002 | 0.000 |
| 13.57.54.044 | IMSTRANS | CONNECT | 1.800 | 0.000 | 1.799 | 13.57.55.836 | 0.006 | 0.001 | 0.007 | 0.001 |

Figure 7-19 IMS performance is optimal; most of the time is spent in IMS Connect
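The deduction described in this section can be checked with simple arithmetic on the rows of Figure 7-19. Within millisecond rounding, each row satisfies CON Resp = PreOTMA + OTMAproc + PostOTMA. The IMS Tran Start timestamps suggest that OTMAproc also contains the IMS time. That containment is our inference from the numbers, not documented report semantics. The following Python sketch subtracts the Total IMS time to estimate the time attributable to OTMA alone. Even if OTMAproc excluded the IMS time, the conclusion would not change, because IMS time is at most 11 ms in every row.

```python
# Rows from Figure 7-19: (CON Resp, PreOTMA, OTMAproc, Total IMS, PostOTMA), in seconds.
ROWS = [
    (1.810, 0.000, 1.803, 0.001, 0.006),
    (1.575, 0.000, 1.574, 0.001, 0.000),
    (1.588, 0.000, 1.588, 0.011, 0.000),
    (1.716, 0.002, 1.714, 0.001, 0.000),
    (1.839, 0.000, 1.839, 0.002, 0.000),
    (1.800, 0.000, 1.799, 0.007, 0.001),
]

for resp, pre, otma, ims, post in ROWS:
    # The IMS Connect phases add up to the response time, within 2 ms of rounding.
    assert abs((pre + otma + post) - resp) <= 0.002
    otma_only = otma - ims  # assumes OTMAproc includes the IMS time
    print(f"resp {resp:.3f}s  OTMA excluding IMS {otma_only:.3f}s  "
          f"({100 * otma_only / resp:.1f}% of response time)")
```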
