Transparent Cloud Tiering

In this chapter, we describe how Transparent Cloud Tiering (TCT) extends the tiering process a step further, by adding cloud object storage as another tier.

This chapter includes the following topics:

3.1 Transparent Cloud Tiering overview

DS8000 TCT provides the framework that enables z/OS applications to move data to cloud object storage with minimum host I/O workload. The host application initiates the data movement, but the actual data transfer is performed by the DS8000. In this section, we provide a high-level overview of how TCT works.

Note: At the time of writing, there are three use cases for TCT:

•The Hierarchical Storage Manager (HSM) component of z/OS Data Facility Storage Management Subsystem (DFSMS) (DFSMShsm or HSM) can migrate and recall data sets to and from object storage.

•You can use DFSMShsm full volume dump (FVD) to dump to and restore from cloud object storage.

•You can use a DFSMSdss data set dump or FVD to back up and restore data to and from cloud object storage.

HSM can migrate data to cloud object storage instead of its traditional Migration Levels 1 or 2 (ML1 or ML2). You can call HSM manually, or define rules for automatic migration. You can also use HSM to dump full volumes to cloud object storage instead of tape devices. When HSM migrates or dumps data to cloud storage, it creates and runs a dump job for the DFSMS data mover service (DFSMSdss). For DFSMSdss FVD and restore, you can run the DFSMSdss commands manually or in a batch job. In both cases, DFSMSdss then initiates the move of the data:

1. It creates metadata objects that describe the data set and are needed to rebuild it in a recall.

2. It sends these metadata objects to the cloud storage.

3. It sends instructions to the DS8000 to compose and send the extent objects that contain the actual customer data to cloud storage. The DS8000 creates separate extent objects for each volume that the data is stored on.

Note: Originally, the DS8000 created one extent object for each volume:

•One extent object for each volume that a data set is allocated on for HSM migrations.

•One extent object for each volume that is backed up with DFSMSdss FVD.

With recent code releases and TS7700 as object storage target, the extent data is split into objects of 2 GiB to improve parallelism and error recovery.
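The effect of the 2 GiB split on the number of extent objects is simple arithmetic. The following minimal sketch is illustrative only: the function name is hypothetical, and it assumes that every object except possibly the last one is exactly 2 GiB, which the text does not state explicitly.

```python
GIB = 1024 ** 3
OBJECT_SIZE = 2 * GIB  # split size for TS7700 targets with recent code releases


def extent_object_count(extent_bytes: int, split: bool = True) -> int:
    """Return how many extent objects the extent data of one volume produces.

    split=False models the original behavior (one object per volume).
    split=True models the 2 GiB split (assumption: only the last object
    can be smaller than 2 GiB).
    """
    if extent_bytes <= 0:
        return 0
    if not split:
        return 1
    # Integer ceiling division avoids floating-point rounding.
    return (extent_bytes + OBJECT_SIZE - 1) // OBJECT_SIZE


# 5 GiB of extent data on one volume: 2 GiB + 2 GiB + 1 GiB
print(extent_object_count(5 * GIB))         # 3
print(extent_object_count(5 * GIB, False))  # 1
```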

HSM maintains a record in its Control Data Set (CDS) that indicates whether a data set is migrated to cloud storage. When recalling a data set, HSM uses this record to compose a restore job definition for DFSMSdss, which initiates the data movement back from cloud storage to active DS8000 volumes:

1. It reads the metadata objects that describe the data set.

2. It prepares the restoration of the data by selecting a volume and allocating space.

3. It sends instructions to the DS8000 to retrieve and store the extent objects that contain the actual customer data.
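The division of work in the migrate and recall sequences can be sketched with a small in-memory model. This is not how DFSMSdss or the DS8000 are implemented: the `ObjectStore` class, the function names, and the object names are hypothetical, and volume selection and space allocation are left out. The sketch shows only who writes and reads which kind of object, and in which order.

```python
from dataclasses import dataclass, field


@dataclass
class ObjectStore:
    """In-memory stand-in for a cloud object storage target."""
    objects: dict = field(default_factory=dict)
    log: list = field(default_factory=list)

    def put(self, name, data, sender):
        self.objects[name] = data
        self.log.append((sender, "PUT", name))

    def get(self, name, receiver):
        self.log.append((receiver, "GET", name))
        return self.objects[name]


def migrate(store, dsname, extents_by_volume):
    # Steps 1 and 2: DFSMSdss creates the metadata and sends it to the cloud.
    meta = {"volumes": sorted(extents_by_volume)}
    store.put(f"{dsname}/meta", meta, "DFSMSdss")
    # Step 3: when instructed by DFSMSdss, the DS8000 sends one extent
    # object for each volume that holds part of the data set.
    for volser, data in extents_by_volume.items():
        store.put(f"{dsname}/{volser}/extents", data, "DS8000")


def recall(store, dsname):
    # Step 1: DFSMSdss reads the metadata that describes the data set.
    meta = store.get(f"{dsname}/meta", "DFSMSdss")
    # Step 2 (volume selection and space allocation) is omitted here.
    # Step 3: the DS8000 retrieves the extent objects.
    return {v: store.get(f"{dsname}/{v}/extents", "DS8000")
            for v in meta["volumes"]}


store = ObjectStore()
source = {"VOL001": b"first part", "VOL002": b"second part"}
migrate(store, "PROD.DATA", source)
assert recall(store, "PROD.DATA") == source
for entry in store.log:
    print(entry)
```

The log shows that the host handles only the small metadata object, while the DS8000 moves the extent objects that contain the customer data.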

For more information about the DFSMSdss operations and metadata objects, see 3.3, “Storing and retrieving data by using DFSMS” on page 24.

DS8000 TCT supports several cloud storage target types:

•Swift: the OpenStack cloud object storage implementation, public or on-premises.

•IBM Cloud Object Storage in the public IBM cloud or as on-premises cloud object storage solution.

•Amazon Web Services Simple Storage Service (AWS S3) in the Amazon public cloud.

•IBM Virtual Tape Server TS7700 as cloud object storage.

To create metadata objects, DFSMS must communicate with the cloud object storage solution. Depending on the target type, this process happens in one of two ways:

•For the Swift target type, DFSMS communicates directly to the cloud storage.

•For all other target types, the DS8000 Hardware Management Console (HMC) acts as a cloud proxy. DFSMS sends commands and objects to the HMC. The HMC passes them on to the cloud storage by using the appropriate protocol for the target type.
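The two communication paths for metadata objects can be summarized as a lookup by target type. The following sketch is only a reading aid: the enumeration names are hypothetical labels, not DS8000 or DFSMS configuration keywords.

```python
from enum import Enum


class TargetType(Enum):
    SWIFT = "Swift"
    IBM_COS = "IBM Cloud Object Storage"
    AWS_S3 = "AWS S3"
    TS7700 = "TS7700"


def metadata_path(target: TargetType) -> str:
    """Return the endpoint that DFSMS talks to for metadata objects."""
    if target is TargetType.SWIFT:
        # DFSMS communicates with the cloud storage directly.
        return "cloud storage (direct)"
    # For all other target types, the DS8000 HMC acts as cloud proxy.
    return "DS8000 HMC (cloud proxy)"


for target in TargetType:
    print(f"{target.value}: {metadata_path(target)}")
```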

3.2 Transparent Cloud Tiering data flow

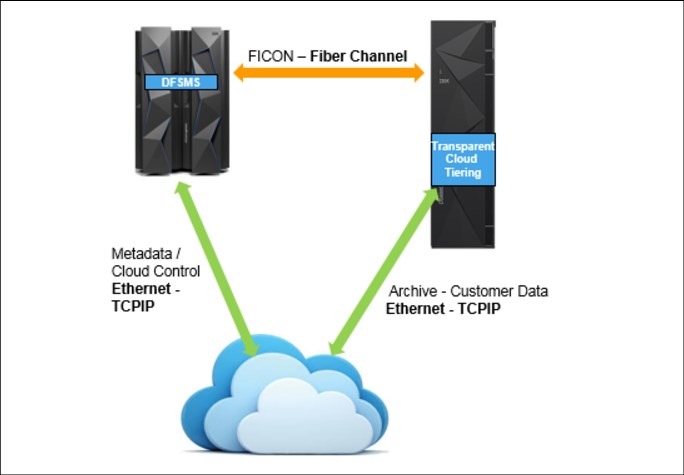

Traditional data movement during archive or backup operations is performed over the FICON infrastructure only. The host application reads data from the direct access storage device controller and writes it to a tape controller, and vice versa. With TCT, there are several data flows and connections between the host (z/OS DFSMS), the storage controller (DS8000) and the cloud target.

3.2.1 DS8000 cloud connection

The DS8000 connects to the cloud object storage targets through TCP/IP by using Ethernet ports in each of the two internal servers. You can either use the onboard ports that are available in each server, or purchase and connect a separate pair of more powerful Ethernet controllers. Connect the ports that you intend to use for TCT to the networks that extend to the cloud targets. Both DS8000 internal servers must be able to access all defined cloud targets.

The DS8000 uses these connections to send and retrieve the extent objects that contain the actual customer data. In cloud proxy mode (see “Other cloud target types” on page 23), it also transfers cloud requests and objects on behalf of DFSMS and HSM.

When storing or retrieving data from the cloud, the system uses the Ethernet ports of the internal server that owns the logical subsystem (LSS) that is associated with the request. This approach can lead to unbalanced migrations and recalls if most of the data is on a specific LSS.

Defining storage groups with volumes from LSSs that are equally distributed over both internal servers can reduce the risk of having an unbalanced link usage.
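To check how evenly a storage group is spread over the two internal servers, you can count its volumes by owning server. The following sketch assumes that even-numbered LSSs are owned by internal server 0 and odd-numbered LSSs by internal server 1; verify this mapping for your configuration. The function names and the sample LSS numbers are hypothetical.

```python
from collections import Counter


def server_for_lss(lss_id: int) -> int:
    # Assumption: even LSS -> internal server 0, odd LSS -> internal server 1.
    return lss_id % 2


def volumes_per_server(volume_lss_ids):
    """Count the volumes of a storage group by owning internal server."""
    counts = Counter(server_for_lss(lss) for lss in volume_lss_ids)
    return {0: counts.get(0, 0), 1: counts.get(1, 0)}


# LSS of each volume in a hypothetical storage group
storage_group = [0x10, 0x10, 0x12, 0x14, 0x11]
print(volumes_per_server(storage_group))  # {0: 4, 1: 1}
```

In this example, four of five volumes are owned by the same internal server, so most TCT traffic for this storage group would use the Ethernet ports of that server.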

The instructions that cause the DS8000 to initiate the transfer of an object to or from cloud storage come from DFSMS and are transmitted over the FICON connection between z/OS and the DS8000.

3.2.2 DFSMS cloud connection

DFSMS must be able to communicate with the cloud object storage to store and retrieve the metadata objects that it needs to identify, describe, and reconstruct the migrated data sets. For this situation, you define a Network Connection for each cloud object storage target in the DFSMS management application (Interactive Storage Management Facility (ISMF)). HSM also uses this connection for maintenance purposes, such as removing objects that are not used anymore, or for reporting and auditing.

The communication between the mainframe and the cloud uses a Representational State Transfer (REST) interface. REST is a lightweight, scalable protocol that uses the HTTP standard. The z/OS Web Enablement Toolkit (WETK) provides the necessary support for secure HTTPS communication between endpoints by using the Secure Sockets Layer (SSL) protocol or the Transport Layer Security (TLS) protocol.

To access the cloud object storage, DFSMSdss requires cloud credentials, such as a user ID and password. The user IDs (or equivalent credentials) are included in the DFSMS Network Connection constructs. The matching password must be provided to DFSMSdss for each TCT operation.

There is a common method to store and manage cloud storage credentials securely for DFSMSdss and HSM. It uses the DFSMS Cloud Data Access (CDA) framework, which in turn relies on the IBM Integrated Cryptographic Service Facility (ICSF). For HSM, there is a second method, in which HSM manages the credentials itself. We call this method the legacy method. We describe both ways in Chapter 8, “Managing cloud credentials” on page 79.

Note: Any user with access to the cloud user ID and password has full access to the data, from z/OS or from any other system. Ensure that only authorized and required personnel can access this information.

Swift cloud type

For Swift cloud object storage targets, DFSMS sends and receives metadata directly to and from the cloud storage by using the z/OS WETK. It uses FICON in-band communication to instruct the DS8000 to move the actual extent objects with the customer data to and from cloud storage. The DS8000 sends and receives these objects through a TCP/IP connection to the cloud storage solution. The communication flow for Swift type cloud object storage is illustrated in Figure 3-1 on page 23.

Figure 3-1 Cloud communication with the Swift API

For the DFSMS cloud connection definition, you need information about your cloud storage environment, including the endpoint URL and credentials, such as username and password.

Other cloud target types

The integration between z/OS DFSMS and the other cloud target types (Amazon S3, IBM Cloud Object Storage, and TS7700) is different from the one used for Swift. DFSMS does not communicate with the cloud storage directly.

DFSMS uses the DS8000 as cloud proxy instead, as shown in Figure 3-2. The DFSMS cloud connection definition points to the DS8000 HMC, and uses DS8000 credentials (username and password). It sends cloud commands and metadata objects to the HMC. The HMC passes them on to the DS8000 itself, which is connected to the cloud storage.

Figure 3-2 Cloud communication with Amazon S3 and IBM Cloud Object Storage APIs

3.3 Storing and retrieving data by using DFSMS

From a z/OS perspective, cloud object storage is an auxiliary storage option, but unlike tapes, it does not provide a block-level I/O interface. Instead, it provides only a simple HTTP get-and-put interface that works at the object level.
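The difference to block-level I/O becomes clearer when you look at what an object-level request contains: a method, a URL that names the complete object, and, for a put, the complete object as the body. The following sketch builds such requests with the Python standard library without sending them. It is an illustration only: DFSMS uses the z/OS WETK rather than Python, the endpoint, container, and object names are hypothetical, and authentication is omitted.

```python
from urllib.parse import quote
from urllib.request import Request


def object_url(endpoint: str, container: str, name: str) -> str:
    # Slashes in the object name are kept. They are a naming convention,
    # not a directory structure.
    return f"{endpoint.rstrip('/')}/{quote(container)}/{quote(name)}"


def put_request(endpoint: str, container: str, name: str, data: bytes) -> Request:
    """Build a request that stores a complete object."""
    return Request(object_url(endpoint, container, name), data=data, method="PUT")


def get_request(endpoint: str, container: str, name: str) -> Request:
    """Build a request that retrieves a complete object."""
    return Request(object_url(endpoint, container, name), method="GET")


# Hypothetical endpoint and names; the requests are built but never sent.
req = put_request("https://cloud.example.com/v1/acct", "container1",
                  "objectprefix/HDR", b"metadata")
print(req.get_method(), req.full_url)
```

There is no seek or partial update in this interface. Changing an object means replacing it with a new put.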

DFSMSdss is the data mover that stores and retrieves data to and from object storage. The number of objects for each data set or volume can vary, based on the following factors:

•The number of volumes that a data set is on. For each volume that the data set is stored on, an extent object and an extent metadata object are created.

•When Virtual Storage Access Method (VSAM) data sets are migrated to cloud, each component has its own object, meaning a key-sequenced data set (KSDS) has at least one object for the data component and another for the index. The same concept is applied to alternative indexes.

Also, several metadata objects are created to store information about the data set, the dumped volume, and the application invoking TCT.

To maintain some structure in the potentially large number of objects, HSM uses containers. By default, a new container is created every 92 days. The container name reflects the creation date and the HSMplex name. Within the container, HSM addresses the data sets with automatically created unique object prefixes. For more information, see 9.2, “Cloud container management” on page 92.
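The container rollover can be illustrated with date arithmetic. The following sketch rests on two assumptions that the text does not confirm: containers roll over at fixed 92-day intervals counted from the creation of the first container, and the container name has the hypothetical format shown in `container_name`. The actual HSM logic and naming format might differ.

```python
from datetime import date, timedelta

CONTAINER_DAYS = 92  # default interval named in the text


def container_start(first_container: date, migration_day: date) -> date:
    """Return the creation date of the container that covers migration_day.

    Assumption: fixed 92-day intervals counted from the first container.
    """
    if migration_day < first_container:
        raise ValueError("migration_day precedes the first container")
    periods = (migration_day - first_container).days // CONTAINER_DAYS
    return first_container + timedelta(days=periods * CONTAINER_DAYS)


def container_name(hsmplex: str, created: date) -> str:
    # Hypothetical format. The text says only that the name reflects the
    # creation date and the HSMplex name.
    return f"{hsmplex}.{created:%Y%j}"


start = container_start(date(2024, 1, 1), date(2024, 4, 15))
print(start, container_name("PLEX1", start))
```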

Note: If you use DFSMSdss directly to dump and restore data, you must maintain container and object prefix naming yourself.

Table 3-1 lists some objects that are created as part of the DFSMSdss data set dump process.

Table 3-1 Created objects

| Object name | Description |
|---|---|
| objectprefix/HDR | Metadata object that contains ADRTAPB prefix. |
| objectprefix/DTPDSNLnnnnnnn | Metadata object that contains a list of data set names that are successfully dumped. n = list sequence in hexadecimal. Note: This object differs from dump processing that uses OUTDD, where the list consists of possibly dumped data sets. For cloud processing, this list includes data sets that were successfully dumped. |
| objectprefix/dsname/DTPDSHDR | Metadata object that contains data set dumped. If necessary, this object also contains DTCDFATT and DTDSAIR. |
| objectprefix/dsname/DTPVOLDnn/desc/META | Metadata object that contains attributes of the data set dumped. desc = descriptor: NVSM = NONVSAM, DATA = VSAM Data Component, INDX = VSAM Index Component. nn = volume sequence in decimal; 'nn' is determined from the DTDNVOL field in DTDSHDR. |
| objectprefix/dsname/DTPSPHDR | Metadata object that contains Sphere information. If necessary, this object also contains DTSAIXS, DTSINFO, and DTSPATHD. Present if DTDSPER area in DTDSHDR is ON. |
| objectprefix/dsname/DTPVOLDnn/desc/EXTENTS | Data object. This object contains the data that is found within the extents for the source data set on a per volume basis. desc = descriptor: NVSM = NONVSAM, DATA = VSAM Data Component, INDX = VSAM Index Component. |
| objectprefix/dsname/APPMETA | Application metadata object that is provided by application in EIOPTION31 and provided to application in EIOPTION32. |

After DFSMSdss creates the metadata objects, DS8000 TCT creates and stores the extent (data) objects. In a data set dump, a data object consists of the extents of the data set that are on the source volume. This process is repeated for every source volume where parts of the data set are stored. For a DFSMSdss FVD, all allocated extents of a volume are stored in one extent object.

After all volumes for a data set are processed (where Data Storage Services (DSS) successfully stored all the necessary metadata and data objects), DSS creates an extra application metadata object. DFSMSdss supports one application metadata object for each data set that is backed up.
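The naming scheme in Table 3-1 can be sketched as a function that lists the per-data-set object names for one dump. The function name is hypothetical, and the sketch makes two assumptions that go beyond the table: nn is written as a two-digit, zero-padded decimal that starts at 01, and an indexed VSAM data set has a data and an index component on every volume. The dump-level objects (HDR and DTPDSNLnnnnnnn) and the optional DTPSPHDR object are left out.

```python
def dump_object_names(prefix, dsname, volumes, vsam_indexed=False):
    """List the object names for one dumped data set, following Table 3-1.

    Assumptions: nn is a zero-padded two-digit decimal that starts at 01,
    and an indexed VSAM data set has DATA and INDX components per volume.
    """
    descs = ["DATA", "INDX"] if vsam_indexed else ["NVSM"]
    base = f"{prefix}/{dsname}"
    names = [f"{base}/DTPDSHDR"]
    for seq in range(1, volumes + 1):
        for desc in descs:
            names.append(f"{base}/DTPVOLD{seq:02d}/{desc}/META")
            names.append(f"{base}/DTPVOLD{seq:02d}/{desc}/EXTENTS")
    # The application metadata object is created last, after all
    # volumes of the data set are processed successfully.
    names.append(f"{base}/APPMETA")
    return names


for name in dump_object_names("objectprefix", "PROD.PAYROLL.DATA", volumes=2):
    print(name)
```

A non-VSAM data set that spans two volumes produces six objects in this model: one header, two metadata and two extent objects, and one application metadata object.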

There are some considerations that you must account for when planning to use TCT:

•Because data movement is offloaded to the DS8000, a data set cannot be manipulated as it is dumped or restored. For example, DFSMSdss cannot do validation processing for indexed VSAM data sets, compress a partitioned data set (PDS) on RESTORE, or reblock data sets, as would be possible during traditional dump and restore operations.

•TCT cannot move data that is already migrated or dumped to (virtual) tape or an HSM maintenance level (Migration Level 1 (ML1) or Migration Level 2 (ML2)). If you want to relocate such data to cloud object storage, you must read it by using traditional methods, and then backup or migrate with TCT.

•Data that was migrated or dumped to cloud with TCT can be restored only to volumes on a TCT capable DS8000. If you have a multi-vendor direct access storage device environment, or run a mix of DS8000 generations, the volume allocation for a restore operation must use only TCT-capable volumes.

Note: If you have more than one DS8000 attached to your system, make sure that all of them have access to migrate and recall data from the cloud.

3.4 Transparent Cloud Tiering and disaster recovery

Having a working disaster recovery (DR) solution is vital to maintaining the highest levels of system availability. These solutions can range from the simplest volume dump to tape and tape movement management, to high availability (HA) multi-target Peer to Peer Remote Copy (PPRC) and IBM HyperSwap® solutions. Using TCT and storing data in cloud storage can affect your DR strategy. You must review and adapt your DR plans, documentation, procedures, and tools.

Some of the steps required to recover your migrated data after a disaster include, but are not limited to:

•Network connectivity

Make sure that your DR site has network access to the cloud environment, which might include configuring proxy, firewall, and other network settings to secure your connection.

•Cloud configuration

Your DR DS8000 must be configured with the information necessary to access the cloud storage, including certificates to allow SSL connections. You also might need to set up your z/OS to connect to the cloud environment, depending on your configuration.

•User ID administration

You might also need to create the user ID and password on your DR DS8000 if you use Amazon S3 or IBM Cloud Object Storage clouds. Update your z/OS to connect to the new DS8000, and define the user ID in your storage.

•Bandwidth

Keep in mind that during a disaster, a large number of data set recalls might be requested, such as migrated image copies, and other data sets used only for DR purposes. If these data sets are stored in the cloud, make sure to have enough bandwidth available in your recovery site to avoid recovery delays that are related to network issues.
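A rough estimate of the recall time helps to size the network at the recovery site. The following sketch divides the amount of data by the usable link rate. The function name and the default efficiency factor of 0.7 are assumptions for illustration. Measure the real throughput between your DS8000 and the cloud target before you rely on such numbers.

```python
def recall_hours(data_tib: float, link_gbps: float, efficiency: float = 0.7) -> float:
    """Estimate the hours needed to recall data_tib over a link of link_gbps.

    efficiency is the assumed fraction of the line rate that is usable.
    """
    if link_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("link_gbps must be > 0 and efficiency in (0, 1]")
    bits = data_tib * 1024 ** 4 * 8
    seconds = bits / (link_gbps * 1e9 * efficiency)
    return seconds / 3600


# 50 TiB of DR-only data over an aggregate of 10 Gbps
print(f"{recall_hours(50, 10):.1f} hours")
```

With these sample values, the recall takes more than 17 hours, which might already exceed a recovery time objective.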

3.5 Transparent Cloud Tiering and DS8000 Copy Services

Many DR solutions are based on DS8000 Copy Services for data replication. TCT supports all DS8000 data replication technologies (Copy Services), except z/OS Global Mirror, also known as Extended Remote Copy (XRC). In the following sections, we describe how TCT and the various Copy Services solutions interact.

3.5.1 IBM FlashCopy

As shown in Figure 3-3, you can use TCT to migrate data from IBM FlashCopy® source and target volumes. It does not matter whether a FlashCopy is issued with or without background copy or whether the background copy is still ongoing.

Figure 3-3 TCT migration with FlashCopy

A potential use case for TCT migration from a FlashCopy target volume is the migration of an IBM Db2® image copy that was created with FlashCopy.

Recalling data with TCT currently works only from FlashCopy source volumes, as shown in Figure 3-4. A TCT recall is treated like regular host write I/O, and any data that is overwritten by the recall operation is backed up to the FlashCopy target to preserve the point in time data of the FlashCopy.

Figure 3-4 TCT recall with FlashCopy

3.5.2 Metro Mirror

With TCT, you can migrate and recall data from volumes that are Metro Mirror primaries, as shown in Figure 3-5.

Figure 3-5 TCT with Metro Mirror

As with FlashCopy, TCT recalls are treated the same way as regular host I/O operations, and Metro Mirror replicates recalled data to the secondary volumes. Make sure that your secondary DS8000 is connected to the same cloud object storage targets as the primary. This way, you can continue to migrate and recall after a recovery to the secondary.

Note: If you use TCT with S3, IBM COS, or TS7700 as cloud target type, you might need to change the DFSMS cloud definition after a recovery to the secondary DS8000. You can define only one DS8000 HMC as the cloud proxy, and the one you use might be unavailable after a failure in the primary site.

3.5.3 Metro Mirror with HyperSwap

HyperSwap is a z/OS high availability function. It switches I/O operations seamlessly from the Metro Mirror primary volumes to the secondaries. HyperSwaps can be triggered manually or automatically, for example in case of a primary volume I/O error. HyperSwap needs one of the following management interfaces:

•IBM Globally Dispersed Parallel Sysplex (GDPS)

•IBM Copy Services Manager (CSM)

Note: If you want to learn more about HyperSwap for z/OS, you can refer to the following IBM Redbooks publications:

•IBM GDPS: An Introduction to Concepts and Capabilities, SG246374

•Best Practices for DS8000 and z/OS HyperSwap with Copy Services Manager, SG248431

DS8000 TCT operations can conflict with HyperSwap operations. A HyperSwap can occur while a TCT operation is ongoing, or vice versa. Because a HyperSwap changes the volumes that are accessed for I/O, it affects running TCT operations.

With one exception, the TCT operation will be interrupted by the HyperSwap and fail when a HyperSwap and TCT operation coincide:

•In case TCT was writing to cloud object storage, the complete operation fails and must be re-issued by the user.

•In case TCT was reading from cloud object storage, the operation is automatically re-driven by DFSMS.

•When a planned HyperSwap is initiated in a GDPS managed HyperSwap configuration, GDPS will wait for a maximum of 10 seconds to allow the completion of an ongoing TCT operation. If a TCT operation persists beyond these 10 seconds, the planned HyperSwap will fail.
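The three cases in the list can be expressed as a small decision sketch. The function names and return strings are hypothetical. The code models only the outcomes that are described in the list, not the internal logic of DFSMS or GDPS.

```python
GDPS_WAIT_SECONDS = 10  # maximum wait for a planned, GDPS managed HyperSwap


def tct_outcome(direction: str) -> str:
    """Outcome for a TCT operation that is interrupted by a HyperSwap."""
    if direction == "write":   # migrate or dump to cloud object storage
        return "failed: reissue manually"
    if direction == "read":    # recall or restore from cloud object storage
        return "re-driven automatically by DFSMS"
    raise ValueError("direction must be 'write' or 'read'")


def planned_gdps_hyperswap(tct_remaining_seconds: float) -> str:
    """Outcome of a planned HyperSwap while a TCT operation is running."""
    if tct_remaining_seconds <= GDPS_WAIT_SECONDS:
        return "HyperSwap proceeds after the TCT operation completes"
    return "planned HyperSwap fails"


print(tct_outcome("write"))
print(tct_outcome("read"))
print(planned_gdps_hyperswap(4))
print(planned_gdps_hyperswap(30))
```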

3.5.4 Global Mirror

As with Metro Mirror, TCT also supports migrate and recall operations to and from Global Mirror primary volumes, as illustrated in Figure 3-6.

Figure 3-6 TCT and Global Mirror

TCT recalls are treated the same way as regular host I/O operations, and Global Mirror replicates recalled data to the remote site. If you must continue TCT migrate and recall operations after a recovery to the remote site, your remote DS8000 and host systems must be connected to the same cloud storage as the primary site.

Note: z/OS Global Mirror, also known as XRC, is not supported by TCT.

3.5.5 Multi-site data replication

TCT allows migrate and recall operations in all supported combinations of DS8000 Copy Services:

•Cascaded Metro Global Mirror

•Multi-Target Metro Mirror - Metro Mirror

•Multi-Target Metro Mirror - Global Mirror

•4-site Metro Mirror - Global Mirror combinations with remote Global Copy

All implications regarding TCT operations after recovery that are described for the 2-site configurations are valid for multi-site, too.

3.6 DS8900F multi-cloud support

Before Release 9.2, the DS8000 supported only a single cloud connection. With the introduction of multi-cloud support, you can now define up to eight cloud connections to a DS8000, which provides more flexibility and opens the TCT solution for a range of new use cases, such as the following ones:

•Use the TS7700 to define multiple object storage locations and replication options by using TS7700 Object Policies.

•Multiple TS7700 GRIDs: Connect to multiple GRIDs, for example, to separate production from test GRID environments.

•Public versus private cloud for different types of data: For example, keeping confidential data onsite while other data can move to a public cloud.

•Performance differentiation: Keep more active backups or frequently recalled data onsite on the TS7700, and move unreferenced, colder data to public clouds.

•Application separation: Separate HSM and DSS workloads, or maintain individual credentials for applications.

•Managed service providers: Provide clients with backup and archive solutions that are tailored to each client that is served by a single DS8000.

•Test environments: Take advantage of having multiple clouds for testing various types of clouds without reconfiguration.

Note: The DS8000 cloud configuration process is different for single and multi-cloud definitions. For more information, see 6.1, “Configuring the IBM DS8000 for TCT” on page 50.

3.7 Transparent Cloud Tiering encryption

TCT object storage can be located outside of your own data center, maybe even in a public cloud outside of your country or on another continent. To protect the migrated data from unauthorized access, even in potentially insecure storage locations, TCT provides encryption capability.

When TCT encryption is enabled, data is encrypted by the DS8000 internal servers when it is about to be transferred over the network. TCT uses IBM Power hardware accelerated 256-bit AES encryption at full line speed, without impact on I/O performance. The data remains encrypted in the cloud storage. When recalled, the data is decrypted when it is received back in the DS8000.

If the data set is already encrypted by data set level encryption, DFSMS informs the DS8000, and TCT encryption will not encrypt again.

Restriction: TCT encryption as described here is not supported by TS7700. However, encryption for data in flight is possible by using the TS7700 secure data transfer feature (see 3.8, “Transparent Cloud Tiering secure data transfer with TS7700” on page 32).

TCT encryption relies on external key servers for key management: It uses the industry standard Key Management Interoperability Protocol (KMIP). TCT encryption does not require a specific license and can be used with or independently from data at rest encryption.

In HA and DR scenarios that use Metro Mirror or Global Mirror, all DS8000 systems must be connected to the same cloud object storage, and all must be configured for TCT encryption. Every DS8000 must be added to the TCT encryption group of the key manager. This way any DS8000 in the DR configuration can decrypt the data in the cloud, even if it was encrypted by another one.

At the time of writing, the following key management solutions are supported for TCT encryption:

•IBM Security® Guardium® Key Lifecycle Manager (GKLM) 4.1 or later in multi-master or master-clone configurations:

– IBM Security Guardium Key Lifecycle Manager Traditional Edition

– IBM Security Guardium Key Lifecycle Manager Container Edition

Earlier versions of GKLM and its predecessor products might be supported, but they have different requirements.

•Gemalto SafeNet KeySecure

•Thales Vormetric Data Security Manager

•Thales CipherTrust Manager

For more details about TCT encryption and implementation instructions, see IBM DS8000 Encryption for Data at Rest, Transparent Cloud Tiering, and Endpoint Security (DS8000 Release 9.2), REDP-4500.

3.8 Transparent Cloud Tiering secure data transfer with TS7700

If you use a TS7700 Virtualization Engine as the object storage target, TCT supports encryption of the data that transfers over the IP network. Encryption is applied as the data is sent to or retrieved from the target. This approach is pure TLS and in-flight encryption only: Data is encrypted as it is sent out to the network, and decrypted when it is received by the target port. You do not need an external key manager because the data does not have to be decrypted when it is retrieved from the object storage target.

If the requirements for TCT secure data transfer are fulfilled, you can enable it without further configuration (see Chapter 4, “Requirements” on page 37 and “Connecting to a TS7700 Virtualization Engine” on page 57).

Note: TCT uses IBM Power® hardware-accelerated functions for encryption and compression. Both functions work with line speed and do not affect performance.

3.9 Transparent Cloud Tiering compression with TS7700

If you use a TS7700 Virtualization Engine as the object storage target, TCT also supports compression of the data that is stored on the object storage. Using compression, you can reduce the transfer time and required space on the object storage target. Data that is already compressed or encrypted with native z/OS methods is not compressed again. DFSMS detects that data is already encrypted or compressed and informs the DS8900F not to do compression for this transfer.

If the requirements for TCT compression are fulfilled, you can turn it on without further configuration (see Chapter 4, “Requirements” on page 37 and 7.5, “Enabling TCT compression with a TS7700” on page 77).

At the time of this writing, no compression is performed by the DS8000 during data migration for any of the other object storage targets. However, your data might already be compressed or encrypted when originally stored on the DS8000. Such data is offloaded to cloud in its original condition: compressed or encrypted. (Compression or encryption by the host is typically done by zEDC or pervasive encryption.)
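The rules for TCT encryption, secure data transfer, and compression from the last three sections can be combined into one decision sketch. The function and parameter names are hypothetical, and the target type is reduced to a simple string. The sketch shows which of the enabled functions the DS8000 applies to a transfer. It does not model the configuration steps or requirements.

```python
def transfer_options(target, already_encrypted, already_compressed,
                     tct_encryption=False, secure_transfer=False,
                     compression=False):
    """Return which DS8000 functions apply to one TCT transfer.

    The three keyword flags state whether a function is enabled at all.
    The result states whether it is applied to this transfer.
    """
    is_ts7700 = target == "TS7700"
    return {
        # TCT encryption is not supported with TS7700 and is skipped for
        # data sets that already use data set level encryption.
        "tct_encrypt": tct_encryption and not is_ts7700
                       and not already_encrypted,
        # Secure data transfer (in-flight TLS) is a TS7700 function.
        "secure_data_transfer": secure_transfer and is_ts7700,
        # Compression is a TS7700 function and is skipped for data that
        # is already compressed or encrypted.
        "compress": compression and is_ts7700
                    and not (already_encrypted or already_compressed),
    }


print(transfer_options("TS7700", already_encrypted=False,
                       already_compressed=True,
                       secure_transfer=True, compression=True))
print(transfer_options("AWS S3", already_encrypted=False,
                       already_compressed=False, tct_encryption=True))
```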

3.10 Selecting data to store in a cloud

When you decide to implement TCT, you also must plan for who can use this cloud and the type of data that you want to store. Selecting the right data to offload to the cloud frees on-premises storage that you can allocate to other critical data.

As described in this chapter, the cloud should be considered an auxiliary storage option within a z/OS system, meaning that no data that requires online or immediate access should be moved to the cloud.

If you use DFSMShsm as the application to migrate and restore data to and from the cloud, container and object management is automated. If you decide to use or allow DFSMSdss direct dump and restore with TCT, you must plan and implement ways for manual container and object management.
