C H A P T E R 13

Migrating to Exadata

So the day is finally here. Your Exadata Database Machine is installed, configured, tuned, tweaked and ready to go. By now you’ve probably invested many, many hours learning about Exadata, proving its value to the company, and planning how you will make the most of this powerful database platform. No doubt it has been a long road to travel but you aren’t there quite yet. Now the real work begins—migration.

This was a much more difficult chapter to write than we expected. We can’t count all the migrations we’ve been through over the years. But when we considered all the various versions of Oracle, the migration tools available, and how they have changed from one version to the next, it became clear that we needed to narrow the scope somewhat. So to keep this interesting and save a few trees we’ll be focusing on version 11.2 Enterprise Edition for a majority of this chapter. Along the way we’ll point out ways to make the most of the features available in previous versions of the Oracle database.

There are many methods, tools, and techniques for migrating your database from legacy hardware to Exadata, but generally speaking they fall into two broad categories: physical migration and logical migration. While there are several factors that determine which method is best, the decision making process is usually dominated by one factor, the available down time to complete the move. The good news is that there are several strategies to help you get there. Each method comes with its own pro’s and con’s. In this chapter we’re going to dig into each of these methods. We’ll talk about reasons you might use one over the other, the relative advantages and disadvantages, and what common pitfalls you should watch out for.

![]() Note: Migrating your applications to Oracle Exadata from non-Oracle platforms is out of the scope of this book, so it won’t be covered here.

Note: Migrating your applications to Oracle Exadata from non-Oracle platforms is out of the scope of this book, so it won’t be covered here.

![]() Kevin Says: During Technical Review I approached this chapter with skepticism. In my thinking, the idea of an entire chapter dedicated to migrating a database to the Exadata Database Machine seemed like a waste of space. Allow me to explain.

Kevin Says: During Technical Review I approached this chapter with skepticism. In my thinking, the idea of an entire chapter dedicated to migrating a database to the Exadata Database Machine seemed like a waste of space. Allow me to explain.

I routinely remind people that unless Exadata Hybrid Columnar Compression (EHCC) is being used, there is no difference between data segments stored in Exadata Storage Server cells and those in conventional storage. The Oracle Database software deployed in the Exadata Database Machine is Oracle Database 11g—with a very small amount of code that knows how to compress data into EHCC form and otherwise interface with Exadata via iDB. I conducted my first review pass of this chapter with the mindset of an imaginary customer who, for whatever reason, finds no need for EHCC. With that mindset I expected this chapter to be nothing more than an overview of database migration concepts, an occasional reference to Oracle product documentation, and perhaps, a paragraph or two of special handling considerations for EHCC. However, after my first reading of the chapter I felt compelled to add this note to the reader. Even if you know everything there is to know about database migration, in the context of Oracle database, I encourage you to read this chapter. Having said that, I still wish to reinforce the principle that there is no difference between a database stored in Exadata Storage Server cells and one stored in conventional storage—unless HCC is involved. To end up with an Oracle database stored in Exadata Storage Server cells, you have to flow the data through the database grid using the same tools used for any other migration to Oracle. However, I believe that the principles conveyed in this chapter will be quite helpful in any migration scenario to Oracle. I consider that a bonus!

Migration Strategies

Once you have a good understanding what Exadata is, and how it works, you are ready to start thinking about how you are going to get your database moved. Migration strategies fall into two general categories, logical migration and physical migration. Logical migration involves extracting the data from one database and loading it into another. Physical migration refers to lifting the database, block by block, from one database server and moving it to another. The data access characteristics of your database are a key consideration when deciding which migration method is best. This is primarily because of the way the data is accessed on Exadata. OLTP databases tend to use single block reads and update data across all tables, whereas Data Warehouse (DW) databases are typically optimized for full table scans and only update current data. Exadata uses Flash Cache on the storage cells to optimize single block reads and improve the overall performance for OLTP databases. For DW databases Exadata uses Smart Scan technology to optimize full table scans. The details of these two optimization methods are covered in Chapters 2 and 5. Logical migration allows you the opportunity to make changes to your database to optimize it for the Exadata platform. Such changes might include resizing extents, implementing or redesigning your current partitioning schemes, and compressing tables using HCC. These are all very important storage considerations for large tables and especially so for DW databases. Because OLTP applications tend to update data throughout the database, HCC compression is not a good fit and would actually degrade performance. And while large extents (4MB and 8MB+) are beneficial for DW databases, they are less advantageous for OLTP databases, which use mostly index-based access and “random” single-block reads. Physical migration, by its very nature, allows no changes to be made to the storage parameters for tables and indexes in the database, while logical migration allows much more flexibility in redefining storage, compression, partitioning and more.

Logical Migration

Regardless of the technology used, logical migration consists of extracting objects from the source database and reloading them into a target database. Even though logical migration strategies tend to be more complicated than physical strategies, they are usually preferable because of the following advantages:

Staged Migration :Tables and partitions that are no longer taking updates can be moved outside of the migration window, reducing the volume to be moved during the final cut over.

Selective Migration: Often times the source database has obsolete user accounts and database objects that are no longer needed. With the logical method these objects may be simply omitted from the migration. The old database may be kept around for awhile in case you later decide you need something that didn’t get migrated.

Platform Differences: Data is converted to target database block size automatically. Big-endian to little-endian conversion is handled automatically.

Exadata hybrid columnar compression (HCC) can be configured before data is moved. That is the tables may be defined with HCC in the Exadata database so that data is compressed as it is loaded into the new database.

Extent Sizing: Target tables, partitions, and indexes may be pre-created with optimal extent sizes (multiples of 4MB) before the data is moved.

Allows Merging of Databases: This is particularly important when Exadata is used as a consolidation platform. If your Exadata is model V2 or X2-2, memory on the database servers may be a somewhat limiting factor. V2 database servers are configured with 72G of RAM each, while X2-2 comes with 96G RAM per server. This is a lot of memory when dealing with 10 or fewer moderate to large sized databases. But it is becoming fairly common to see 15 or more databases on a server. For example, one of us worked on a project where Exadata was used to host PeopleSoft HR and Financials databases. The implementer requested 15 databases for this effort. Add to this the 10 databases in their plan for other applications and SGA memory became a real concern. The solution of course is to merge these separate databases together allowing them to share memory more efficiently. This may or may not be a difficult task depending on how contained the databases are at the schema level.

If using the “create table as select” method or “insert into as select” method (CTAS or IAS) over a database link, then the data may also be sorted as it is loaded into the target database to improve index efficiency, optimize for Exadata Storage Indexes and achieve better compression ratios.

There are basically two approaches for logical migration. One involves extracting data from the source database and loading it into the target database. We’ll call this the “Extract and Load” method. Tools commonly used in this approach are Data Pump, Export/Import, and CTAS (or IAS) through a database link. The other method is to replicate the source database during normal business operations. When the time comes to switch to the new database, replication is cancelled and client applications are redirected to the new database. We’ll refer to this as the “Replication-Based” method. Tools commonly used in the Replication-Based method are Oracle Streams, Oracle Data Guard (Logical Standby), and Oracle Golden Gate. It is also possible to use a combination of physical and logical migration, such as copying (mostly) read only tablespaces over well ahead of the final cut-over and applying changes to them via some replication method like Streams.

Extract and Load

Generally speaking, the Extract and Load method requires the most downtime of all the migration strategies. This is because once the extract begins, and for the duration of the migration, all DML activity must be brought to a stop. Data warehouse environments are the exception to the rule, because data is typically organized in an “age-in/age-out” fashion. Since data is typically partitioned by date range, static data is separated from data that is still undergoing change. This “read only” data may be migrated ahead of time, outside the final migration window; perhaps even during business hours. The biggest advantage of the Extract and Load strategy is its simplicity. Most DBAs have used Data Pump or CTAS for one reason or another, so the tool set is familiar. Another big advantage is the control it gives you. One of the great new features Exadata brings to the table is Hybrid Columnar Compression (HCC). Since you have complete control over how the data is loaded into the target database, it is a relatively simple task to employ HCC to compress tables as they are loaded in. Extract and Load also allows you to implement partitioning or change partitioning strategies. Loading data using CTAS allows you to sort data as it is loaded, which improves the efficiency of Exadata’s storage indexes. One could argue that all these things could be done post migration, and that is true. But why move the data twice when it can be incorporated into the migration process itself? In some situations it may not even be possible to fit the data onto the platform without applying compression. In the next few sections we will cover several approaches for performing Extract and Load migrations.

![]() Kevin Says: The point about ordered loading of data is a very important topic. It is true that this may not always be an option, but the benefit can go even further than the improved storage index efficiency already mentioned. Ordered loading can increase the compression ratio of Exadata Hybrid Columnar Compression as well. In spite of the sophisticated load-time compression techniques employed by the HCC feature, the fundamentals can never be forgotten. In the case of compression, like-values always compress more deeply. It is worth considering whether this approach fits into the workflow and opportunity window for data loading.

Kevin Says: The point about ordered loading of data is a very important topic. It is true that this may not always be an option, but the benefit can go even further than the improved storage index efficiency already mentioned. Ordered loading can increase the compression ratio of Exadata Hybrid Columnar Compression as well. In spite of the sophisticated load-time compression techniques employed by the HCC feature, the fundamentals can never be forgotten. In the case of compression, like-values always compress more deeply. It is worth considering whether this approach fits into the workflow and opportunity window for data loading.

Data Pump

Data Pump is an excellent tool for moving large quantities of data between databases. Data Pump consists of two programs, expdp and impdp. The expdp command is used to extract database objects out of the source database. It can be used to dump the contents of an entire database or, more selectively, by schema or by table. Like its predecessor Export (exp), Data Pump extracts data and saves it into a portable data file. This file can then be copied to Exadata and loaded into the target database using the impdp command. Data Pump made its first appearance in Oracle 10g, so if your database is version 9i or earlier, you will need to use the old Export/Import (exp/imp) instead. Export and Import have been around since Oracle 7, and although they are getting a little long in the tooth they are still very effective tools for migrating data and objects from one database to another. And, even though Oracle has been talking about dropping exp and imp for years now, they are still part of the base 11.2 install. First we’ll talk about Data Pump and how it can be used to migrate to Exadata. After that we can take a quick look at ways to migrate older databases using Export and Import. Keep in mind that new features and parameters are added to Data Pump with each major release. Check the Oracle documentation for capabilities and features specific to your database version.

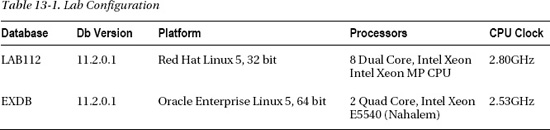

From time to time in this chapter we’ll make reference to tests and timings we saw in our lab. Table 13-1 shows some of the relevant characteristics of the servers and databases we used for these tests. The LAB112 database is the source database and EXDB is the target (Exadata) database. It is an Exadata V2 quarter rack configuration.

Here is a breakdown of the segments in my test database.

SEGMENT_TYPE MBYTES

-------------------- ------------

CLUSTER 63

INDEX 13,137

INDEX PARTITION 236

LOBINDEX 48

LOBSEGMENT 290

TABLE 20,662

TABLE PARTITION 1,768

TYPE2 UNDO 142Now, let’s take a look at some of the Data Pump parameters you’ll want to know about. Here are some of the key parameters that are useful for migrating databases.

COMPRESSION: Data Pump compression is a relatively new feature. In 10g you had the ability to compress metadata, but in 11g this capability was extended to table data as well. Valid options areALL,DATA_ONLY,METADATA_ONLYandNONE. Using theCOMPRESSION=ALLoption Data Pump reduced the size of our export from 13.4G to 2.5G, a compression ratio of over 5 times. That’s a pretty significant savings in storage. When we ran the test with compression turned on, we fully expected it to slow down the export, but instead it actually reduced our export time from 39 minutes to just over 9 minutes. This won’t always be the case, of course. On our test system the export was clearly I/O-bound. But it does point out that compression can significantly reduce the storage requirements for exporting your database without necessarily slowing down the process. Unfortunately, the ability to compress table data on the fly was not introduced until release 11gR1. If your database is 10g and you need to compress your dumpfiles before transferring them to Exadata, you will need to do that using external tools like gzip, zip, or compress. Note that the use of the dataCOMPRESSIONoption in Data Pump requires Oracle Advanced Compression licenses.

FLASHBACK_TIME,FLASHBACK_SCN: Believe it or not, by default Data Pump does not guarantee the read consistency of your export. To export a read-consistent image of your database you must use either theFLASHBACK_SCNor theFLASHBACK_TIMEparameter. If you useFLASHBACK_TIME, Data Pump looks up the nearest System Change Number (SCN) corresponding to the time you specified and exports all data as of that SCN.FLASHBACK_TIMEcan be passed in to Data Pump as follows:FLASHBACK_TIME="to_timestamp('05-SEP-2010 21:00:00','DD-MON-YYYY HH24:MI:SS')"If you choose to use

FLASHBACK_SCN, you can get the current SCN of your database by running the following query:SQL> select current_scn from v$database;

FULL,SCHEMAS,TABLES: These options are mutually exclusive and specify whether the export will be for the full database, a selection of schemas, or a selection of individual tables. Note that certain schemas, like SYS, MDSYS, CTXSYS, and DBSNMP, are never exported when doing a full database export.

PARALLEL: ThePARALLELparameter instructs Data Pump to split the work up into multiple parts and run them concurrently.PARALLELcan vastly improve the performance of the export process.

NETWORK_LINK: This parameter specifies a database link in the target database to be used for the export. It allows you to export a database from a remote server, pull the data directly through the network via database link (in the target database), and land the files on an Exadata file system. We see this as more of a convenience than anything else, as it saves you the extra step of transporting the dumpfiles manually at the end of the export. It is used by Grid Control to automate the migration process using the “Import From Database” process. Using this method for manual migration doesn’t make much sense— if you are going to copy the data over a database link anyway, why not load it to target tables directly, using CTAS or direct-path insert, instead of dumping it to disk and reloading back later on?

Now let’s turn our attention to the import process. Schema-level import is usually preferable when migrating databases. It allows you to break the process up into smaller, more manageable parts. This is not always the case, and there are times when a full database import is the better choice. Most of the tasks we will talk about here apply to both schema-level and full database imports, As we go along, we’ll note any exceptions you will need to be aware of. If you choose not to do a full database import, be aware that system objects including roles, public synonyms, profiles, public database links, system privileges, and others will not be imported. You will need to extract the DDL for these objects using the SQLFILE parameter and a FULL=Y import. You can then execute the DDL into the target database to create them. Let’s take a look at some of the parameters useful for migrating databases.

REMAP_SCHEMA: As the name implies, this parameter tells Data Pump to change the ownership of objects from one schema to another during the course of the import. This is particularly useful for resolving schema conflicts when merging multiple databases into one Exadata database.

REMAP_DATAFILE: Datafiles can be renamed dynamically during the import process using this parameter. This allows ASM to automatically organize and name the datafiles according to Oracle Managed Files (OMF) rules.

REMAP_TABLESPACE: This option changes the tablespace name reference for segments from one tablespace to another. It is useful when you want to physically relocate tables from one tablespace to another during the import.

SCHEMAS: List of schemas to import.

SQLFILE: Instead of importing anything into the database, Object definitions (DDL) are written to an SQL script. This can be quite useful for pre-building objects if you want to make changes to their physical structure, such as partitioning or using HCC compression.

TABLE_EXISTS_ACTION: The action to take if the imported object already exists. Valid keywords areAPPEND,REPLACE,[SKIP], andTRUNCATE.

TABLES: A list of tables to import. For example,TABLES=KSO.SKEW, RJOHNSON.TEST

TRANSFORM: This parameter allows you to make changes to segment attributes in object-creation DDL statements, like storage attributes. This provides a convenient way to optimize extent sizes for tables when they are created in Exadata.

Before you begin importing schemas into your Exadata database, be aware that Data Pump only creates tablespaces automatically when a full database import is done. So if you are importing at the schema or table level you will need to create your tablespaces manually. To do this, generate the DDL for tablespaces using the parameters FULL=yes and SQLFILE={your_sql_script}. This produces a script with the DDL for all objects in the dumpfile, (including datafiles). One thing you may notice about the CREATE TABLESPACE DDL is that the datafile file names are fully qualified. This isn’t at all what we want, because it circumvents OMF and creates hard-coded file names that cannot be managed by the database. The REMAP_DATAFILE parameter allows you to rename your datafiles to reflect the ASM disk groups in your Exadata database. The syntax looks something like this:

REMAP_DATAFILE='/u02/oradata/LAB112/example01.dbf':'+DATA'One final note before we move on to Export/Import. Character set translation between the source and target databases is done automatically with Data Pump. Make sure the character set of the source database is a subset of the target database, or something may be lost in translation. For example, it’s okay if your source database is US7ASCII (7 bit) and the target database is WE8ISO8859P1 (8 bit). But migrating between different 8-bit character sets or going from 8 bit to 7 bit may cause special characters to be dropped.

Export and Import

If the database you are migrating to Exadata is a release prior to version 10g, Data Pump won’t be an option. Instead you will need to work with its predecessors, Export (exp) and Import (imp). Export/Import features haven’t changed much since Oracle 9.2, but if you are migrating from a previous release, you will notice that some features may be missing. Hopefully you aren’t still supporting 8i databases, or God forbid 7.x, but not to worry. Even though some options like FLASHBACK_SCN and PARALLEL are not options in these older releases, there are ways to work around these missing features.

PARALLEL is strictly a Data Pump feature but you can still parallelize database exports by running concurrent schema exports. This is a much less convenient way of “parallelizing” your export process. If you have to parallelize your export process in this way you will have to do the work of figuring out which schemas, grouped together, are fairly equal in size to minimize the time it takes for all of them to complete.

COMPRESSION is another feature missing from Export. This has never been much of an issue for DBAs supporting Unix/Linux platforms. These systems provide the ability to redirect the output from Export through the compress or gzip commands by means of a named pipe, something like this (the $ sign is the shell prompt, of course):

$ mkfifo exp.dmp

$ ls -l exp.dmp

prw-rw-r-- 1 rjohnson dba 0 Oct 2 15:17 exp.dmp

$ cat exp.dmp | gzip -c > my_compressed_export.dmp.gz &

$ exp system file=exp.dmp owner=rjohnson consistent=y compress=n statistics=none log=my_compressed_export.log

$ ls -l my_compressed_export.*

-rw-rw-r-- 1 rjohnson dba 3134148165 Oct 2 22:32 my_compressed_export.dmp.gz

-rw-rw-r-- 1 rjohnson dba 1432 Oct 2 22:32 my_compressed_export.logThe REMAP_TABLESPACE parameter is not available in Export/Import. To work around this you will have to generate a SQL file using the INDEXFILE parameter which produces a SQL script like Data Pump’s SQLFILE parameter. You can then modify tablespace references and pre-create segments in the new tablespace as needed. Using the IGNORE parameter will allow Import to simply perform an insert into the tables you manually created ahead of time. The REMAP_SCHEMA parameter takes on a slightly different form in Import. To change the name of a schema during import, use the FROMUSER and TOUSER parameters.

There is one limitation with Export/Import that cannot be escaped. Import does not support Exadata Hybrid Columnar Compression (HCC). Our tests show that when importing data using Import, the best table compression you can expect to get is about what you would get with tables compressed for OLTP (also known in 11g as “Advanced Compression”). It doesn’t matter if a table is configured for any one of the four HCC compression modes available on Exadata, (Query Low/High and Archive Low/High). This is because HCC compression can only occur if the data is direct-path inserted, using syntax like insert /*+ APPEND */, for example. According the Exadata User’s Guide, “Conventional inserts and updates are supported,” but “result in a less compressed format, and reduced compression ratio.” This “reduced compression ratio” is actually the same as the OLTP compression provided by the Advanced Compression option, which HCC falls back to for normal inserts. By the way, Import will not complain or issue any warnings to this effect. It will simply import the data at a much lower compression rate, silently eating up far more storage than you planned or expected. There is nothing you can do about it other than rebuild the affected tables after the import is complete. The important thing to understand is that you cannot exploit Exadata’s HCC compression using Export/Import.

The Export/Import approach also does not support Transparent Data Encryption (TDE). If your database uses TDE you will need to use Data Pump to migrate this data. If you are importing at the schema level, system objects like roles, public synonyms, profiles, public database links, system privileges, and others will not be imported. System objects like these can be extracted by doing a full database import and with the INDEXFILE parameter to extract the DDL to create these objects. This step is where the most mistakes are made. It is a tedious process and careful attention must be given so that nothing falls through the cracks. Fortunately, there are third-party tools that do a very good job of comparing two databases and showing you where you’ve missed something. Most of these tools, like TOAD and DB Change Manager from Embarcadero, also provide a feature to synchronize the object definitions across to the new database.

If you are still thinking about using Export/Import, note that as the data loading with Import doesn’t use direct-path load inserts, it will have much higher CPU usage overhead due to undo and redo generation and buffer cache management. You would also have to use a proper BUFFER parameter for array inserts (you’ll want to insert thousands of rows at a time) and use COMMIT=Y (which will commit after every buffer insert) so you wouldn’t fill up the undo segments with one huge insert transaction.

When to Use Data Pump or Export/Import

Data Pump and Export/Import are volume-sensitive operations. That is, the time it takes to move your database will be directly tied to its size and the bandwidth of your network. For OLTP applications this is downtime. As such, it is better suited for smaller OLTP databases. It is also well suited for migrating large DW databases, where read-only data is separated from read-write data. Take a look at the downtime requirements of your application and run a few tests to determine whether Data Pump is a good fit. Another benefit of Data Pump and Export/Import is that they allow you to copy over all the objects in your application schemas easily, relieving you from manually having to copy over PL/SQL packages, views, sequence definitions, and so on. It is not unusual to use Export/Import for migrating small tables and all other schema objects, while the largest tables are migrated using a different method.

What to Watch Out for when Using Data Pump or Export/Import

Character-set differences between the source and target databases are supported, but if you are converting character sets make sure the character set of the source database is a subset of the target. If you are importing at the schema level, check to be sure you are not leaving behind any system objects, like roles and public synonyms, or database links. Remember that HCC is only supported in Data Pump. Be sure you use the consistency parameters of Export or Data Pump to ensure that your data is exported in a read-consistent manner. Don’t forget to take into account the load you are putting on the network.

Data Pump and Export/Import methods also require you to have some temporary disk space (both in the source and target server) for holding the dumpfiles. Note that using Data Pump’s table data compression option requires you to have Oracle Advanced Compression licenses both for the source and target database (only the metadata_only compression option is included in the Enterprise Edition license).

Copying Data over a Database Link

When extracting and copying very large amounts of data—many terabytes—between databases, database links may be your best option. Unlike the DataPump option, with database links you will read your data once (from the source), transfer it immediately over the network, and write it once (into the target database). With Data Pump, Oracle would have to read the data from source, then write it to a dumpfile, and then you’ll transfer the file with some file-transfer tool (or do the network copy operation using NFS), read the dumpfile in the target database, and then write it into the target database tables. In addition to all the extra disk I/O done for writing and reading the dumpfiles, you would need extra disk space for holding these dumpfiles during the migration. Now you might say “Hold on, DataPump does have the NETWORK_LINK option and the ability to transfer data directly over database links.” Yes that’s true, but unfortunately when using impdp with database links, Data Pump performs conventional INSERT AS SELECTs, not direct path inserts. And this means that the inserts will be much slower, generate lots of redo and undo, and possibly run out of undo space. And more importantly, conventional path IAS does not compress data with HCC compression (but resorts to regular OLTP compression instead if HCC is enabled for the table). So this makes the DataPump file-less import over database links virtually useless for loading lots of data into Exadata fast. You would have to use your own direct path IAS statements with APPEND hints to get the benefits of direct path loads and maximum performance out of file-less transfer over database links.

![]() Kevin Says: Add to this list of possible dumpfile transfer techniques the capability known as Injecting Files with Database File System (DBFS). The DBFS client (

Kevin Says: Add to this list of possible dumpfile transfer techniques the capability known as Injecting Files with Database File System (DBFS). The DBFS client (dbfs_client) has been ported to all Linux and Unix platforms and can be used to copy files into the Exadata Database Machine even if the DBFS file system is not mounted on the hosts of the Exadata Database Machine nor on the sending system. It is a mount-free approach. Oracle Documentation provides clear examples of how to use the built-in copy command that dbfs_client supports. The data flows from the dbfs_client executable over SQL*Net and is inserted directly into SecureFile LOBs. The transfer is much more efficient than the NFS protocol and is secure. Most Exadata Database Machine deployments maintain DBFS file systems, so there is less of a need for local disk capacity used as a staging area.

There are some cases where moving your data through database links may not perform as well as the DataPump approach. If you have a slow network link between the source and target (migrations between remote data centers, perhaps) then you may benefit more from compressing the dumpfiles, while database links over Oracle Net won’t do as aggressive compression as, for example, gzip can do. Oracle Net (SQL*Net) does simple compression by de-duplicating common column values within an array of rows when sent over SQL*Net. This is why the number of bytes transferred over SQL*Net may show a smaller value than the total size of raw data.

Transferring the data of a single table over a database link is very easy. In the target (Exadata) database you’ll need to create a database link pointing to the source database, then just issue either a CTAS or INSERT SELECT command over the database link:

CREATE DATABASE LINK sourcedb

CONNECT TO source_user

IDENTIFIED BY source_password

USING 'tns_alias';

CREATE TABLE fact AS SELECT * FROM fact@sourcedb;This example will create the table structure and copy the data, but it won’t create any other objects such as indexes, triggers or constraints for the table. These objects must be manually created later, either by running the DDL scripts or by doing a metadata-only import of the schema objects.

![]() Note: When creating the database link, you can specify the database link’s TNS connect string directly, with a USING clause, like this:

Note: When creating the database link, you can specify the database link’s TNS connect string directly, with a USING clause, like this:

CREATE DATABASE LINK ... USING '(DESCRIPTION = (ADDRESS = (PROTOCOL = TCP)(HO/ST =

localhost)(PORT = 1521)) (CONNECT_DATA = (SERVER = DEDICATED) (SERVICE_NAME = ORA10G)))'That way, you don’t have to set up tnsnames.ora entries in the database server.

Another option is to use INSERT SELECT for loading data into an existing table structure. We want to bypass the buffer cache, and the undo-generation-and-redo-logging mechanism for this bulk data load, so we can use the APPEND hint to make this a direct path load insert:

INSERT /*+ APPEND */ INTO fact SELECT * FROM fact@sourcedb;

COMMIT;We’re assuming here that the database is in NOARCHIVELOG mode during the migration, so we haven’t set any logging attributes for the table being loaded. In NOARCHIVELOG mode all bulk operations (such as INSERT APPEND, index REBUILD and ALTER TABLE MOVE) are automatically NOLOGGING.

If your database must be in ARCHIVELOG mode during such data loading, but you still want to perform the loading of some tables without logging, then you can just temporarily turn off logging for those tables for the duration of the load:

ALTER TABLE fact NOLOGGING;

INSERT /*+ APPEND */ INTO fact SELECT * FROM fact@sourcedb;

ALTER TABLE fact LOGGING;Of course if your database or tablespace containing this table is marked FORCE LOGGING, then logging will still occur, despite any table-level NOLOGGING attributes.

Achieving High-Throughput CTAS or IAS over a Database Link

While the previous examples are simple, they may not give you the expected throughput, especially when the source database server isn’t in the same LAN as the target. The database links and the underlying TCP protocol must be tuned for high throughput data transfer. The data transfer speed is limited obviously by your networking equipment throughput and is also dependent on the network round-trip time (RTT) between the source and target database.

When moving tens of terabytes of data in a short time, you obviously need a lot of network throughput capacity. You must have such capacity from end to end, from your source database to the target Exadata cluster. This means that your source server must be able to send data as fast as your Exadata cluster has to receive it, and any networking equipment (switches, routers) in between must also be able to handle that, in addition to all other traffic that has to flow through them. Dealing with corporate network topology and network hardware configuration is a very wide topic and out of the scope of this book, but we’ll touch the subject of the network hardware built in to Exadata database servers here.

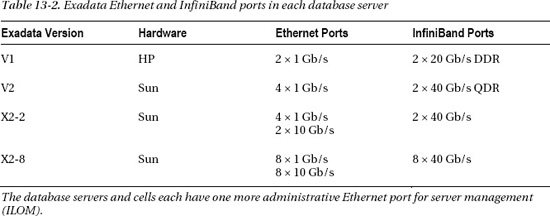

In addition to the InfiniBand ports, Exadata clusters also have built-in Ethernet ports. Table 13-2 lists all the Ethernet and InfiniBand ports.

Note that this table shows the number of network ports per database server. So, while Exadata V2 does not have any 10GbE ports, it still has 4 × 1GbE ports per database server. With 8 database servers in a full rack, this would add up to 32 × 1 GbE ports, giving you a maximum theoretical throughput of 32 gigabits per second when using only Ethernet ports. With various overheads, 3 gigabytes per second of transfer speed would theoretically still be achievable if you manage to put all of the network ports equally into use and there are no other bottlenecks. This would mean that you have to either bond the network interfaces or route the data transfer of different datasets via different network interfaces. Different dblinks’ connections can be routed via different IPs or DataPump dumpfiles transferred via different routes.

This already sounds complicated, that’s why companies migrating to Exadata often used the high-throughput bonded InfiniBand links for migrating large datasets with low downtime. Unfortunately, the existing database networking infrastructure in most companies does not include InfiniBand (in old big iron servers). The standard usually is a number of switched and bonded 1 GbE Ethernet ports or 10 GbE ports in some cases. That’s why, for Exadata V1/V2 migrations, you would have had to either install an InfiniBand card into your source server or use a switch capable of both handling the source Ethernet traffic and flowing it on to the target Exadata InfiniBand network.

Luckily the new Exadata X2-2 and X2-8 releases both have 10 GbE ports included in them, so you don’t need to go through the hassle of getting your old servers InfiniBand-enabled anymore and can resort to 10 GbE connections (if your old servers or network switches have 10GbE Ethernet cards in place). Probably by the time this book comes out, nobody plans large-scale migrations to Exadata V1 and V2 anymore.

![]() Note: Remember that if you do not have huge data transfer requirements within a very low downtime window, then the issues described here may not be problems for you at all. You would want to pick the easiest and simplest data transfer method that gets the job done within the required time window. It’s important to know the amount of data to be transferred in advance and test the actual transfer speeds in advance to see whether you would fit into the planned downtime. If your dblink transfer or dumpfile copy operation is too slow, then you can use free tools like iPerf (

Note: Remember that if you do not have huge data transfer requirements within a very low downtime window, then the issues described here may not be problems for you at all. You would want to pick the easiest and simplest data transfer method that gets the job done within the required time window. It’s important to know the amount of data to be transferred in advance and test the actual transfer speeds in advance to see whether you would fit into the planned downtime. If your dblink transfer or dumpfile copy operation is too slow, then you can use free tools like iPerf (http://iperf.sourceforge.net) to test out your network throughput between source and target servers. If the dblinks or datapump dumpfile transfer is significantly worse than iPerf’s results, there must be a configuration bottleneck somewhere.

This leads us to software configuration topics for high network throughput for database migrations. This chapter does not aim to be a network tuning reference, but we would like to explain some challenges we’ve seen. Getting the Oracle database links throughput right involves changing multiple settings and requires manual parallelization. Hopefully this section will help you avoid reinventing the wheel when dealing with huge datasets and low downtime requirements.

In addition to the need for sufficient throughput capacity at the network hardware level, there are three major software configuration settings that affect Oracle’s data transfer speed:

- Fetch array size (

arraysize) - TCP send and receive buffer sizes

- Oracle Net Session Data Unit (SDU) size

With regular application connections, the fetch array size has to be set to a high value, ranging from hundreds to thousands, if you are transferring lots of rows. Otherwise, if Oracle sends too few rows out at a time, most of the transfer time may end up being spent waiting for SQL*Net packet ping-pong between the client and server.

However, with database links, Oracle is smart enough to automatically set the fetch array size to the maximum—it transfers 32767 rows at a time. So, we don’t need to tune it ourselves.

Tuning TCP Buffer Sizes

The TCP send and receive buffer sizes are configured at the operating-system level, so every O/S has different settings for it. In order to achieve higher throughput, the TCP buffer sizes have to be increased in both ends of the connection. We’ll cover a Linux and Solaris example here; read your O/S networking documentation if you need to do this on other platforms. We often use the Pittsburgh Supercomputing Center’s “Enabling High Performance Data Transfers” page for reference (http://www.psc.edu/networking/projects/tcptune/). First, you’ll need to determine the maximum buffer size TCP (per connection) in your system.

On the Exadata servers, just keep the settings for TCP buffer sizes as they were set during standard Exadata install. On Exadata the TCP stack has already been changed from generic Linux defaults. Do not configure Exadata servers settings based on generic database documentation (such as the “Oracle Database Quick Installation Guide for Linux”).

However, on the source system, which is about to send large amounts of data, the default TCP buffer sizes may become a bottleneck. You should add these lines to /etc/sysctl.conf if they’re not there already:

net.core.rmem_default = 262144

net.core.rmem_max = 1048576

net.core.wmem_default = 262144

net.core.wmem_max = 4194304Then issue a sysctl –p command to apply these values into the running kernel.

The maximum read and write buffer sizes per TCP connection will be set to 1MB and 4MB respectively, but the default starting value for buffers is 256kB for each. Linux kernels 2.4.27 (and higher) and 2.6.17 (and higher) can automatically tune the actual buffer sizes from default values up to allowed maximums during runtime. If these parameters are not set, Linux kernel (2.6.18) defaults to 128kB buffer sizes, which may become a bottleneck when transferring large amounts of data. The write buffer has been configured bigger as the source data would be sending (writing) the large amounts of data, the amount of data received will be much smaller.

The optimal buffer sizes are dependent on your network roundtrip time (RTT) and the network link maximum throughput (or desired throughput, whichever is lower). The optimal buffer size value can be calculated using the Bandwidth*Delay product (BDP) formula, also explained in the “Enabling High Performance Data Transfers” document mentioned earlier in this section. Note that changing the kernel parameters shown earlier means a global change within the server, and if your database server has a lot of processes running, the memory usage may rise thanks to the increased buffer sizes. So you might not want to increase these parameters until the actual migration happens.

Here’s an example from another O/S type. On Solaris 8 and newer versions, the default send and receive buffer size is 48KB. This would result in even poorer throughput compared to Linux’s default 128KB. On Solaris you can check the max buffer size and max TCP congestion window with the following commands:

$ ndd /dev/tcp tcp_max_buf

1048576

$ ndd /dev/tcp tcp_cwnd_max

1048576This output shows that the maximum (send or receive) buffer size in the Solaris O/S is 1MB. This can be changed with the ndd –set command, but you’ll need root privileges for that:

# ndd - set/dev/tcp tcp_max_buf 4194304

# ndd - set/dev/tcp tcp_cwnd_max 4194304This, however just sets the maximum TCP buffer sizes (and the TCP congestion window) per connection, but not the default buffer sizes, which a new connection would actually get. The default buffer sizes can be read this way:

$ ndd /dev/tcp tcp_xmit_hiwat

49152

$ ndd /dev/tcp tcp_recv_hiwat

49152Both the default send buffer (xmit means transmit) and receive buffer sizes are 48KB. To change these defaults to 4MB each, we would run these commands:

# ndd -set /dev/tcp tcp_recv_hiwat 4194304

# ndd -set /dev/tcp tcp_xmit_hiwat 4194304Note that these settings are not persistent, they will be lost after a reboot. If you want to persist these settings, you should add a startup script into rc3.d (or some rc.local equivalent), which would re-run the previous commands. Another option would be to put these values to /etc/system, but starting from Solaris 10 the use of the global /etc/system settings is not encouraged. Yet another approach would be to put the values into a Solaris Service Management Framework (SMF) manifest file, but that is out of the scope of this book.

If the source server is still going to be actively in use (in production) during the data transfer, then think twice before increasing the default buffer sizes globally. While you could potentially make the bulk data transfer much faster for everybody with larger buffer sizes, your server memory usage would also grow (TCP buffers live in kernel memory) and you could run into memory shortage issues.

This is where a more sophisticated way for changing buffer sizes becomes very useful. Solaris allows you to set the default send and receive buffer sizes at route level. So, assuming that the target server is in a different subnet from most other production servers, you can configure the buffer sizes for a specific route only.

Let’s check the current routes first:

# netstat -rn

Routing Table: IPv4

Destination Gateway Flags Ref Use Interface

-------------------- -------------------- ----- ----- ---------- ---------

default 192.168.77.2 UG 1 0 e1000g0

default 172.16.191.1 UG 1 1

172.16.0.0 172.16.191.51 U 1 2 e1000g1

192.168.77.0 192.168.77.128 U 1 3 e1000g0

224.0.0.0 192.168.77.128 U 1 0 e1000g0

127.0.0.1 127.0.0.1 UH 1 82 lo0Let’s assume that that the target Exadata server uses subnet 192.168.77.0, and one of the servers has IP 192.168.77.123. You can use the route get command in the Solaris machine to see if there are any existing route-specific settings:

# route get 192.168.77.123

route to: 192.168.77.123

destination: 192.168.77.0

mask: 255.255.255.0

interface: e1000g0

flags: <UP,DONE>

recvpipe sendpipe ssthresh rtt,ms rttvar,ms hopcount mtu expire

0 0 0 0 0 0 1500 0The recvpipe and sendpipe values are zero; they use whatever are the O/S system-wide defaults. Let’s change the route settings now and check the settings with route get again:

# route change -net 192.168.77.0 -recvpipe 4194304 -sendpipe 4194304

change net 192.168.77.0

# route get 192.168.77.123

route to: 192.168.77.123

destination: 192.168.77.0

mask: 255.255.255.0

interface: e1000g0

flags: <UP,DONE>

recvpipe sendpipe ssthresh rtt,ms rttvar,ms hopcount mtu expire

4194304 4194304 0 0 0 0 1500 0Now all connections between the source server and that subnet would request both send and receive socket buffer size 4 MB, but connections using other routes would continue using default values.

Note that this route change setting isn’t persistent across reboots, so you would need to get the sysadmin to add this command in a Solaris (or SMF service) startup file.

Before you change any of these socket buffer setting at the O/S level, there’s some good news if your source database is Oracle 10g or newer. Starting from Oracle 10g, it is possible to make Oracle request a custom buffer size itself (up to the tcp_max_buf limit) when a new process is started. You can do this by changing the listener.ora on the server side (source database) and tnsnames.ora (or the raw TNS connect string in database link definition) in the target database side. The target database acts as the client in the database link connection pointing from target to source. This is well documented in the Optimizing Performance section of the Oracle Database Net Services Administrator’s Guide, section “Configuring I/O Buffer Size.”

Additionally, you can reduce the number of syscalls Oracle uses for sending network data, by increasing the Oracle Net Session Data Unit (SDU) size. This requires either a change in listener.ora or setting the default SDU size in server-side sqlnet.ora. Read the Oracle documentation for more details. Here is the simplest way to enable higher SDU and network buffer in Oracle versions 10g and newer.

Add the following line to the source database’s sqlnet.ora:

DEFAULT_SDU_SIZE=32767Make sure the source database’s listener.ora contains statements like the following example:

LISTENER =

(DESCRIPTION_LIST =

(DESCRIPTION =

(ADDRESS = (PROTOCOL = IPC)(KEY = EXTPROC1521))

(ADDRESS =

(PROTOCOL = TCP)

(HO/ST = solaris01)

(PORT = 1521)

(SEND_BUF_SIZE=4194304)

(RECV_BUF_SIZE=1048576)

)

)

)The target Exadata server’s tnsnames.ora would then look like this example:

SOL102 =

(DESCRIPTION =

(SDU=32767)

(ADDRESS =

(PROTOCOL = TCP)

(HO/ST = solaris01)

(PORT = 1521)

(SEND_BUF_SIZE=1048576)

(RECV_BUF_SIZE=4194304)

)

(CONNECT_DATA =

(SERVER = DEDICATED)

(SERVICE_NAME = SOL102)

)

)With these settings, when the database link connection is initiated in the target (Exadata) database, the tnsnames.ora connection string additions will make the target Oracle database request a larger TCP buffer size for its connection. Thanks to the SDU setting in the target database’s tnsnames.ora and the source database’s sqlnet.ora, the target database will negotiate the maximum SDU size possible—32767 bytes.

![]() Note: If you have done SQL*Net performance tuning in old Oracle versions, you may remember another SQL*Net parameter:

Note: If you have done SQL*Net performance tuning in old Oracle versions, you may remember another SQL*Net parameter: TDU (Transmission Data Unit size). This parameter is obsolete and is ignored starting with Oracle Net8 (Oracle 8.0).

It is possible to ask for different sizes for send and receive buffers. This is because during the data transfer the bulk of data will move from source to target direction. Only some acknowledgement and “fetch more” packets are sent in the other direction. That’s why we’ve configured the send buffer larger in the source database (listener.ora) as the source will do mostly sending. On the target side (tnsnames.ora), we’ve configured the receive buffer larger as the target database will do mostly receiving. Note that these buffer sizes are still limited by the O/S-level maximum buffer size settings (net.core.rmem_max and net.core.wmem_max parameters in /etc/sysctl.conf in Linux and tcp_max_buf kernel setting in Solaris).

Parallelizing Data Load

If you choose the extract-load approach for your migration, there’s one more bottleneck to overcome in case you plan to use Exadata Hybrid Columnar Compression (EHCC). You probably want to use EHCC to save the storage space and also get better data scanning performance (compressed data means fewer bytes to read from disk). Note that faster scanning may not make your queries significantly faster if most of your query execution time is spent in operations other than data access, like sorting, grouping, joining and any expensive functions called either in the SELECT list or filter conditions. However, EHCC compression requires many more CPU cycles than the classic block-level de-duplication, as the final compression in EHCC is performed with heavy algorithms (LZO, ZLib or BZip, depending on the compression level). Also, while decompression can happen either in the storage cell or database layer, the compression of data can happen only in the database layer. So, if you load lots of data into a EHCC-compressed table using a single session, you will be bottlenecked by the single CPU you’re using. Therefore you’ll need to parallelize the data load to take advantage of all the database layer’s CPUs to get the data load done faster.

Sounds simple—we’ll just add a PARALLEL flag to the target table or a PARALLEL hint into the query, and we should be all set, right? Unfortunately things are more complex than that. There are a couple of issues to solve; one of them is easy, but the other one requires some effort.

Issue 1—Making Sure the Data Load Is Performed in Parallel

The problem here is that while parallel Query and DDL are enabled by default for any session, the parallel DML is not. Therefore, parallel CTAS statements will run in parallel from end to end, but the loading part of parallel IAS statements will be done in serial! The query part (SELECT) will be performed in parallel, as the slaves pass the data to the single Query Coordinator and the QC is the single process, which is doing the data loading (including the CPU-intensive compression).

This problem is simple to fix, though; you’ll just need to enable parallel DML in your session. Let’s check the parallel execution flags in our session first:

SQL> SELECT pq_status, pdml_status, pddl_status, pdml_enabled

2> FROM v$session WHERE sid = SYS_CONTEXT('userenv','sid'),

PQ_STATUS PDML_STATUS PDDL_STATUS PDML_ENABLED

--------- ----------- ----------- ---------------

ENABLED DISABLED ENABLED NOThe parallel DML is disabled in the current session. The PDML_ENABLED column is there for backward compatibility. Let’s enable PDML:

SQL> ALTER SESSION ENABLE PARALLEL DML;

Session altered.

SQL> SELECT pq_status, pdml_status, pddl_status, pdml_enabled

2> FROM v$session WHERE sid = SYS_CONTEXT('userenv','sid'),

PQ_STATUS PDML_STATUS PDDL_STATUS PDML_ENABLED

--------- ----------- ----------- ---------------

ENABLED ENABLED ENABLED YESAfter enabling parallel DML, the INSERT AS SELECTs are able to use parallel slaves for the loading part of the IAS statements.

Here’s one important thing to watch out for, regarding parallel inserts. In the next example we have started a new session (thus the PDML is disabled in it), and we’re issuing a parallel insert statement. We have added a “statement-level” PARALLEL hint into both insert and query blocks, and the explained execution plan output (the DBMS_XPLAN package) shows us that parallelism is used. However, this execution plan would be very slow loading into a compressed table, as the parallelism is enabled only for the query (SELECT) part, not the data loading part!

Pay attention to where the actual data loading happens—in the LOAD AS SELECT operator in the execution plan tree. This LOAD AS SELECT, however, resides above the PX COORDINATOR row source (this is the row source that can pull rows and other information from slaves into QC). Also, in line 3 you see the P->S operator, which means that any rows passed up the execution plan tree from line 3 are received by a serial process (QC).

SQL> INSERT /*+ APPEND PARALLEL(16) */ INTO t2 SELECT /*+ PARALLEL(16) */ * FROM t1

---------------------------------------------------------------------------------------

| Id | Operation | Name | Rows | TQ |IN-OUT| PQ Distrib |

---------------------------------------------------------------------------------------

| 0 | INSERT STATEMENT | | 8000K| | | |

| 1 | LOAD AS SELECT | T2 | | | | |

| 2 | PX COORDINATOR | | | | | |

| 3 | PX SEND QC (RANDOM) | :TQ10000 | 8000K| Q1,00 | P->S | QC (RAND) |

| 4 | PX BLOCK ITERATOR | | 8000K| Q1,00 | PCWC | |

| 5 | TABLE ACCESS STORAGE FULL| T1 | 8000K| Q1,00 | PCWP | |

---------------------------------------------------------------------------------------

Note

-----

- Degree of Parallelism is 16 because of hint

16 rows selected.The message “Degree of Parallelism is 16 because of hint” means that a request for running some part of the query with parallel degree 16 was understood by Oracle and this degree was used in CBO calculations, when optimizing the execution plan. However, as explained above, this doesn’t mean that this parallelism was used throughout the whole execution plan. It’s important to check whether the actual data loading work (LOAD AS SELECT) is done by the single QC or by PX slaves.

Let’s see what happens when we enable parallel DML:

SQL> ALTER SESSION ENABLE PARALLEL DML;

Session altered.

SQL> INSERT /*+ APPEND PARALLEL(16) */ INTO t2 SELECT /*+ PARALLEL(16) */ * FROM t1

---------------------------------------------------------------------------------------

| Id | Operation | Name | Rows | TQ |IN-OUT| PQ Distrib |

---------------------------------------------------------------------------------------

| 0 | INSERT STATEMENT | | 8000K| | | |

| 1 | PX COORDINATOR | | | | | |

| 2 | PX SEND QC (RANDOM) | :TQ10000 | 8000K| Q1,00 | P->S | QC (RAND) |

| 3 | LOAD AS SELECT | T2 | | Q1,00 | PCWP | |

| 4 | PX BLOCK ITERATOR | | 8000K| Q1,00 | PCWC | |

| 5 | TABLE ACCESS STORAGE FULL| T1 | 8000K| Q1,00 | PCWP | |

---------------------------------------------------------------------------------------

Note

-----

- Degree of Parallelism is 16 because of hintNow, compare this plan to the previous one. They are different. In this case the LOAD AS SELECT operator has moved down the execution plan tree, it’s not a parent of PX COORDINATOR anymore. How you can read this simple execution plan is that the TABLE ACCESS STORAGE FULL sends rows to PX BLOCK ITERATOR (which is the row-source who actually calls the TABLE ACCESS and passes it the next range of data blocks to read). PX BLOCK ITERATOR then sends rows back to LOAD AS SELECT, which then immediately loads the rows to the inserted table, without passing them to QC at all. All the SELECT and LOAD work is done within the same slave, there’s no interprocess communication needed. How do we know that? It is because the IN-OUT column says PCWP (Parallel operation, Combined With Parent) for both operations, and the TQ value for both of the operations is the same (Q1,00). This indicates that parallel execution slaves do perform all these steps, under the same Table Queue node, without passing the data around between slave sets.

The proper execution plan when reading data from a database link looks like this:

SQL> INSERT /*+ APPEND PARALLEL(16) */ INTO tmp

2> SELECT /*+ PARALLEL(16) */ * FROM dba_source@srcdb

-----------------------------------------------------------------------------------

| Id | Operation | Name | Rows | TQ/Ins |IN-OUT| PQ Distrib |

-----------------------------------------------------------------------------------

| 0 | INSERT STATEMENT | | 211K| | | |

| 1 | PX COORDINATOR | | | | | |

| 2 | PX SEND QC (RANDOM) | :TQ10001 | 211K| Q1,01 | P->S | QC (RAND) |

| 3 | LOAD AS SELECT | TMP | | Q1,01 | PCWP | |

| 4 | PX RECEIVE | | 211K| Q1,01 | PCWP | |

| 5 | PX SEND ROUND-ROBIN| :TQ10000 | 211K| | S->P | RND-ROBIN |

| 6 | REMOTE | DBA_SOURCE | 211K| SRCDB | R->S | |

-----------------------------------------------------------------------------------

Remote SQL Information (identified by operation id):

----------------------------------------------------

6 - SELECT /*+ OPAQUE_TRANSFORM SHARED (16) SHARED (16) */

"OWNER","NAME","TYPE","LINE","TEXT"

FROM "DBA_SOURCE" "DBA_SOURCE" (accessing 'SRCDB' )

Note

-----

Degree of Parallelism is 16 because of hintIn this example, because we enabled parallel DML at the session level, the data loading is done in parallel; the LOAD AS SELECT is a parallel operation (the IN-OUT column shows PCWP) executed within PX slaves and not the QC.

Note that DBMS_XPLAN shows the SQL statement for sending to the remote server over the database link (in the Remote SQL Information section above). Instead of sending PARALLEL hints to the remote server, an undocumented SHARED hint is sent, which is an alias of the PARALLEL hint.

This was the easier issue to fix. If your data volumes are really big, then there is another problem to solve with database links, and it’s explained below.

Issue 2—Achieving Fully Parallel Network Data Transfer

Another issue with database links and parallel execution is that even if you manage to run parallel execution on both ends of the link, it is the query coordinators that actually open the database link and do the network transfer. The PX slaves don’t somehow magically open their own database link connections to the other database, all traffic flows through the single query coordinator of a query. So you can run your CTAS/IAS statement with hundreds of PX slaves—but you’ll still have only a single database link connection doing network transfer. While you can optimize the network throughput by increasing the TCP buffer and SDU sizes, there’s still a limit of how much data the QC process (on a single CPU) is able to ingest.

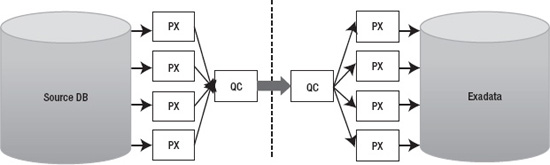

Figure 13-1 illustrates how the data flows through a single query coordinators despite all the parallel execution.

Figure 13-1. Data flow is single-threaded through query coordinators.

In addition to the single QC database link bottleneck, sending data (messages) between the QC and PX slaves takes some extra CPU time. This is where fine-tuning the parallel_execution_message_size parameter has helped a little in the past, but starting from Oracle 11.2 its value defaults to 16KB anyway, so there probably won’t be any significant benefit in adjusting this further. And if you are doing parallel data loads across multiple nodes in a RAC instance, there will still be a single QC per query with a single network connection. So, if QC runs in node 1 and the parallel slaves in node 2, the QC will have to send the data it fetches across the dblink to the PX slaves over the RAC interconnect as PX messages.

So if you choose to use database links with parallel slaves, you should run multiple separate queries in different instances and force the PX slaves to be in the same instance as the QC, using the following command:

SQL> ALTER SESSION SET parallel_force_local = TRUE;

Session altered.That way you will avoid at least the RAC inter-instance traffic and remote messaging CPU overhead, but the QC to PX slave intra-instance messaging (row distribution) overhead still remains here.

Now, despite all these optimizations and migrating different large tables using parallel queries in different instances, you may still find that a single database link (and query coordinator) does not provide enough throughput to migrate the largest fact tables of your database within your downtime. As stated earlier, the database links approach is best used for the few huge tables in your database, and all the rest can be exported/imported with Data Pump. Perhaps you only have couple of huge fact tables, but if you have eight RAC instances in the full rack Exadata cluster, how could you make all the instances transfer and load data efficiently? You would want to have at least one parallel query with its query coordinator and database link per instance and likely multiple such queries if a single QC process can’t pull and distribute data fast enough.

The obvious solution here is to take advantage of partitioning, as your large multi-terabyte tables are likely partitioned in the source database anyway. So you can copy the huge table in multiple separate parts. However there are a couple of problems associated with this approach.

The first issue is that when performing the usual direct path load insert (which is needed for Hybrid Columnar Compression and for NOLOGGING loads), your session would lock the table it inserts into exclusively for itself. Nobody else can modify nor insert into that table while there is an uncommitted direct path load transaction active against that table. Note that others can still read that table, as SELECT statements don’t take enqueue locks on tables they select from.

So, how to work around this concurrency issue? Luckily Oracle INSERT syntax allows you to specify the exact partition or subpartition where you want to insert, by its name:

INSERT /*+ APPEND */

INTO

fact PARTITION ( Y20080101 )

SELECT

*

FROM

fact@sourcedb

WHERE

order_date >= TO_DATE('20080101 ', 'YYYYMMDD ')

AND order_date < TO_DATE('20080102', 'YYYYMMDD ')With this syntax, the direct-path insert statement would lock only the specified partition, and other sessions could freely insert into other partitions of the same table. Oracle would still perform partition key checking, to ensure that data wouldn’t be loaded into wrong partitions. If you attempt to insert an invalid partition key value into a partition, Oracle returns the error message:

ORA-14401: inserted partition key is outside specified partition.Note that we did not use the PARTITION ( partition_name ) syntax in the SELECT part of the query. The problem here is that the query generator (unparser), which composes the SQL statement to be sent over the database link, does not support the PARTITION syntax. If you try it, you will get an error:

ORA-14100: partition extended table name cannot refer to a remote object.That’s why we are relying on the partition pruning on the source database side—we just write the filter predicates in the WHERE condition so that only the data in the partition of interest would be returned. In the example just shown, the source table is range-partitioned by order_date column; and thanks to the WHERE clause passed to the source database, the partition pruning optimization in that database will only scan through the required partition and not the whole table.

Note that we are not using the BETWEEN clause in this example, as it includes both values in the range specified in the WHERE clause, whereas Oracle Partitioning option’s “values less than” clause excludes the value specified in DDL from the partition’s value range.

It is also possible to use subpartition-scope insert syntax, to load into a single subpartition (thus locking only a single subpartition at time). This is useful when even a single partition of data is too large to be loaded fast enough via a single process/database link, allowing you to split your data into even smaller pieces:

INSERT /*+ APPEND */

INTO

fact SUBPARTITION ( Y20080101_SP01 )

SELECT

*

FROM

fact@sourcedb

WHERE

order_date >= TO_DATE('20080101 ', 'YYYYMMDD ')

AND order_date < TO_DATE('20080102', 'YYYYMMDD ')

AND ORA_HASH(customer_id, 63, 0) + 1 = 1In this example the source table is still range-partitioned by order_date, but it is hash partitioned to 64 subpartitions. As it’s not possible to send the SUBPARTITION clause through the database link, either, we have used the ORA_HASH function to fetch only the rows belonging to the first hash subpartition of 64 total subpartitions. The ORA_HASH SQL function uses the same kgghash() function internally, which is used for distributing rows to hash partitions and subpartitions. If we had 128 subpartitions, we would change the 63 in the SQL syntax to 127 (n – 1).

As we would need to transfer all the subpartitions, we would copy other subpartitions in parallel, depending on the server load of course, by running slight variations of the above query and changing only the target subpartition name and the ORA_HASH output to corresponding subpartition position.

INSERT /*+ APPEND */

INTO

fact SUBPARTITION ( Y20080101_SP02 )

SELECT

*

FROM

fact@sourcedb

WHERE

order_date >= TO_DATE('20080101 ', 'YYYYMMDD ')

AND order_date < TO_DATE('20080102', 'YYYYMMDD ')

AND ORA_HASH(customer_id, 63, 0) + 1 = 2And so you’ll need to run a total of 64 versions of this script:

... INTO fact SUBPARTITION ( Y20080101_SP03 ) ... WHERE ORA_HASH(customer_id, 63, 0) + 1 = 3

...

... INTO fact SUBPARTITION ( Y20080101_SP04 ) ... WHERE ORA_HASH(customer_id, 63, 0) + 1 = 4

...

... INTO fact SUBPARTITION ( Y20080101_SP64 ) ... WHERE ORA_HASH(customer_id, 63, 0) + 1 = 64

...If your table’s hash subpartition numbering scheme in the subpartition name doesn’t correspond to the real subpartition position (the ORA_HASH return value), then you’ll need to query DBA_TAB_SUBPARTITIONS and find the correct subpartition_name using the SUBPARTITION_PO/SITION column.

There’s one more catch though. While the order_date predicate will be used for partition pruning in the source database, the ORA_HASH function won’t—the query execution engine just doesn’t know how to use the ORA_HASH predicate for subpartition pruning. In other words, the above query will read all 64 subpartitions of a specified range partition, then the ORA_HASH function will be applied to every row fetched and the rows with non-matching ORA_HASH result will be thrown away. So, if you have 64 sessions, each trying to read one subpartition with the above method, each of them would end up scanning through all subpartitions under this range partition, this means 64 × 64 = 4096 subpartition scans.

We have worked around this problem by creating views on the source table in the source database. We would create a view for each subpartition, using a script, of course. The view names would follow a naming convention such as V_FACT_Y2008010_SP01, and each view would contain the SUBPARTITION ( xyz ) clause in the view’s SELECT statement. Remember, these views would be created in the source database, so there won’t be an issue with database links syntax restriction. And when it’s time to migrate, the insert-into-subpartition statements executed in the target Exadata database would reference appropriate views depending on which subpartition is required. This means that some large fact tables would have thousands of views on them. The views may be in a separate schema, as long as the schema owner has read rights on the source table. Also, you probably don’t have to use this trick on all partitions of the table, as if your largest tables are time-partitioned by some order_date or similar, then you can probably transfer much of the old partitions before the downtime window, so you won’t need to use such extreme measures.

The techniques just discussed may seem quite complicated and time consuming, but if you have tens or hundreds of terabytes of raw data to extract, transfer, and compress, and all this has to happen very fast, such measures are going to be useful. We have used these techniques for migrating VLDB data warehouses up to 100TB in size (compressed with old-fashioned block compression) with raw data sets exceeding a quarter of a petabyte. However, we need to optimize our own time too, so read the next section about when it is feasible to go with database links and when it is not.

When to Use CTAS or IAS over Database Links

If you have allocated plenty of downtime, and the database to be migrated isn’t too big, and you don’t want to do major reorganization of your database schemas, then you probably don’t have to use data load over database links. A full DataPump export/import is much easier if you have the downtime and disk space available; everything can be exported and imported with a simple command.

However, when you are migrating VLDBs with low downtime windows, database links can provide one performance advantage—with database links you don’t have to dump the data to a disk file just to copy it over and reload it back in the other server. Also, you don’t need any intermediate disk space for keeping dumps when using database links. With database links you read the data from disk once (in the source database), transfer it over the wire, and write it to disk once in the target.

Transferring lots of small tables may actually be faster with the Export/Import or Data Pump method. Also, the other schema objects (views, sequences, PL/SQL, and so on) have to be somehow migrated anyway. So it’s a good idea to transfer only the large tables over database links and use Export/Import or Data Pump for migrating everything else. And by the way, Data Pump has a nice EXCLUDE: parameter you can use for excluding the large manually transferred tables from an export job.

What to Watch Out For When Copying Tables over Database Links

Copying tables during business hours can impact performance on the source database. It can also put a load on the network, sometimes even when dedicated network hardware is installed for reducing the impact of high-throughput data transfer during production time.

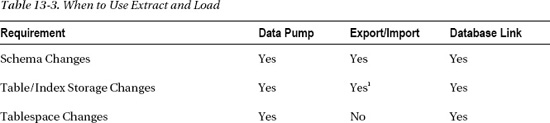

Table 13-3 shows a summary of the capabilities of each of these Extract and Load methods.

Replication-Based Migration

Generally speaking, replication-based migration is done by creating a copy of the source database and then keeping it in sync by applying changes to the copy, or “target database.” There are two very different methods for applying these changes. Physical replication ships archived redo logs to the target where it is applied to the database using its internal recovery mechanisms. This is called “Redo Apply”. We’ll talk more about how that works in the “Physical Migration” section later in this chapter. With logical replication, changes to the source database are extracted as SQL statements and executed on the target database. The technique of using SQL statements to replicate changes from source to target is called SQL Apply. Back in the 90,s when Oracle 7 and 8 were all the rage, it was common practice among DBAs to use snapshots to keep tables in a remote database in sync with master tables in the source database. These snapshots used triggers to capture changes to the master table and execute them on the target. Snapshot logs were used to queue up these changes when the target tables were unavailable so they could be executed at a later time. It was a simple form of logical replication. Of course, this was fine for a handful of tables but it became unwieldy when replicating groups of tables or entire schemas. In later releases Oracle wrapped some manageability features around this technology and branded it ”Simple Replication.” Even back in the early days of database replication there were companies that figured out how to mine database redo logs to capture DML more efficiently and less intrusively than triggers could. Today there are several products on the market that do a very good job of using this “log mining” technique to replicate databases. The advantage logical replication has over physical replication is in its flexibility. For example, logical replication allows the target to be available for read access. In some cases it also allows you to implement table compression and partitioning in the target database. In the next few sections we’ll discuss several tools that support replication-based migration.

Oracle Streams and Golden Gate

Oracle Streams is included in the base RDBMS product and was introduced as a new feature in version 9i. Golden Gate is a product recently acquired by Oracle. Both products replicate data in much the same way. A copy of the source database is created (the target) and started up. This may be done from a full database backup using Recovery Manager or by using Data Pump to instantiate SELECT schemas. Then changes in the source database are extracted, or mined, from the redo logs. The changes are then converted to equivalent DML and DDL statements and executed in the target database. Oracle calls these steps Capture, Stage, and Apply.

Regardless of which product you use, the target database remains online and available for applications to use while replication is running. New tables may be created in the target schema or in other schemas. Any restrictions on the target are limited to the tables being replicated. This is particularly useful when your migration strategy involves consolidation—taking schemas from multiple source databases and consolidating them into one database. Because of its extremely high performance and scalability, most companies use Exadata for database consolidation to at least some degree, so this is a very useful feature. If you read between the lines, you realize that this means you can migrate multiple databases concurrently.

Another capability of both Streams and Golden Gate is the ability to do data transformation. This is not something we would normally associate with database migration, but it is available should you need it. Since Streams uses SQL Apply to propagate data changes to the target, you can implement changes to the target tables to improve efficiencies. For example, you can convert conventional target tables to partitioned tables. You can also add or drop indexes and change extent sizes to optimize for Exadata. Be aware that even though replication provides this capability, it can get messy. If you are planning a lot of changes to the source tables consider implementing them before you begin replication.

Tables in the target database may be compressed using Exadata HCC. Be prepared to test performance when inserting data and using HCC compression. It is a CPU-intensive process, much more so than Basic and OLTP compression. However, the rewards are pretty significant in terms of storage savings and query performance, depending on the nature of the query of course. If conventional inserts or updates are executed on HCC-compressed tables, Oracle switches to OLTP compression (for the affected rows) and your compression ratio drops significantly. Direct path inserts can be done by implementing the insert append hint as follows:

insert /*+ append */ into my_hcc_table select ...;

insert /*+ append_values */ into my_hcc_table values ( <array of rows> );Note that the append_values hint only works from Oracle 11gR2 onwards.