4

Syntax Sphere

4.1. Basic syntactic concepts

4.1.1. Delimitation of the field of syntax

Syntax is a key branch of linguistics. It focuses on the scientific study of the structure of the sentence as an independent unit. The word order, the dependency relationships between these words and, in some languages, the relationships of agreement as well as the case marking, are among the points that attract the attention of most of the researchers. The final objective of syntax is to produce a formal description of underlying regularities with regard to sentence organization and to determine the principles that govern the combination and dependency relationships of words and word sequences within the sentence.

Syntax, which is actually at the heart of linguistics, maintains fairly close relationships with the other branches of linguistics, including phonology, morphology and semantics.

With phonology and more particularly with prosody, the relationships are well-known. For example, the syntactic process of emphasis, which manifests itself in the form of dislocation (Yesterday evening, John came to see me), or clefting (It is John who came to see me yesterday evening) is systematically accompanied by a particular intonation (see [NES 07, INK 90] for an introduction to these issues).

Compared to morphology, syntax is distinguished by the fact that it focuses on the relationships between words, whereas morphology focuses on variations of word forms. Note that some linguistic currents consider that the morpheme is the basic unit of syntax. This leads us to consider that the processes of word creation and sentence construction are of the same nature. So, in this case we refer to morphosyntax.

Essentially formal, syntax focuses on the linguistic form of the sentence without giving paramount importance to the meaning, which is the object of study for semantics. If we want to simplify, we can say that syntax focuses on the relationships between linguistic signs, while semantics focuses on the relationships between these signs and those signified by them, as well as on the overall meaning of the sentence that will be produced by syntax. However, the boundaries of the two disciplines are not very clear. In fact, it is being widely accepted that the complementarity of these two sources of knowledge is indispensable to be able to correctly understand a sentence, particularly in the case of syntactic ambiguities or semantic anomalies (see [ANT 94, MAH 95], for a review of these studies in the field of psycholinguistics and NLP).

4.1.2. The concept of grammaticality

As linguistics is a descriptive and non-normative discipline, it is appropriate to begin with a clarification of the descriptive concept. In the NLP field, grammar is not a set of rules that a speaker must follow as its production is considered to be well-formed (normative grammar), but rather a description of the syntactic phenomena used by any linguistic community at a given time. This description is, therefore, used as a reference to distinguish what is said from what is not said.

According to Chomsky, grammar is a device capable of carrying out grammaticality judgments, that is to classify input units sequences (lexical strings) in two groups: the correctly and the incorrectly formed strings (see Figure 4.1). It is this device which characterizes the competence of an average speaker.

Figure 4.1. The role of grammar according to Chomsky

This original conception of grammar has important implications on the concept of grammaticality. On one hand, grammaticality is different from the concept of frequency of use of a phenomenon within a linguistic community, made evident by the number of occurrence(s) of the phenomenon in question, in a corpus which is considered to be representative of the language of this community. Let us take a look at the sentence [4.1]:

The dotted lines in the sentence [4.1] can be replaced by several words that would be both syntactically and semantically acceptable, including spatial, infrared and black. Furthermore, although the words table (noun) and temporal (adjective) are statistically unlikely in this context, only the adjective temporal allows us to create a grammatical sentence:

The group of sentences [4.2] leads us to the second important distinction between grammaticality and interpretability. In fact, sentence (a) is interpretable, both grammatically and semantically, whereas sentence (b) is syntactically acceptable but not semantically interpretable. Finally, sentence (c) is neither grammatically nor semantically interpretable.

Note that grammaticality is not a necessary condition for comprehension. Although agrammatical, the famous sentence: Me Tarzan, You Jane, is quite understandable.

4.1.3. Syntactic constituents

Parsing consists of the decomposition of sentences in major syntactic units and of the identification of dependency relationships. This analysis often leads to a graphical representation in the form of a box according to the original approach proposed by the American linguist Charles Francis Hockett [HOC 58]. Generally, it takes the form of a tree diagram. As we will see later, this type of analysis has a strong power of explanation and particularly takes into account the syntactic ambiguities. But the question arises as to what is the nature of the constituents of a sentence. Is a constituent a word or a word sequence which has a particular syntactic role within the sentence?

This is the question which we will try to answer.

4.1.3.1. Words

As we have seen in the sphere of words, in spite of the problems related to its definition, the word is recognized more or less explicitly as a syntactical unit by different theories from different currents. In addition, many NLP applications presuppose a linguistic material in which words are labeled. That is why we believe that it is useful to begin with a classification of words according to their syntactic categories, commonly known as parts of speech.

Some linguists deny the existence of linguistic units which are higher than words. That is why they assume that syntactic relationships are limited to the dependencies between the words in the sentence. In the tradition of the Slavic language, the French linguist Lucien Tesnière has laid the foundations of a linguistic theory known as dependency grammar [TES 59]. Recovered, developed and applied in the works of several linguists throughout the world [HAY 60, MEL 88, HDU 84, HUD 00, SLE 91], dependency grammar is still a minority current in modern syntax.

From a formal point of view, a dependency tree (or a stemma), according to Tesnière’s terminology, is a graph or a tree that represents the syntactic dependencies between the words of a sentence. Thus, dependency grammar is based on these explicit- or implicit-dependency relationships rather than on a precise theoretical framework. The shapes of the trees vary according to specific theories in spite of shared theoretical foundations.

For example, the arguments of a verb (subject, object, adverbials, etc.) are all syntactic functions, represented by arcs, which come from the verb. In the grammatical framework of Word Grammar (WG) by Hudson, we have four relationships: pre-adjoint, post-adjoint, subject and complement (see Figure 4.2).

Figure 4.2. Relationships in the framework of formalism, WG [HUD 10]

Like other dependency formalisms, WG bestows a central place to the verb which has the pivotal role in the entire sentence (see example in Figure 4.3).

Figure 4.3. Analysis of a simple sentence by the formalism of WG

The adoption of the word as the central unit of syntactic analysis has several advantages. In fact, on one hand, it is easier to establish relationships with phonological levels, such as binding phenomena and semantics with semantic roles. On the other hand, it is particularly suitable for processing phenomena such as discontinuity which are, among others, observed in German [KRO 01]. As for the disadvantages, we can note the difficulty of processing phenomena such as coordination which often involves word blocks and not simple words.

A discussion of the formal equivalence of dependency grammars with the formalisms based on superior constituents is proposed in [KAH 12]. We should also note that parsing modules based on dependency grammars have been developed for several languages including English [SLE 91], French [CAN 10] and Arabic [MAR 13].

4.1.3.2. Clauses

The concept of clause is transdisciplinary to the extent that it is used in a variety of disciplines such as linguistics and logic. In the field of logic, it is a utterance which accepts a truth value: it can be either true or false.

In the field of linguistics, it refers to sequences of words containing at least a subject and a verb predicate explicitly or implicitly present (in the case of an ellipsis). A sentence can, generally, be divided into several clauses each contained in the other. A more detailed discussion of these issues follows later in section 4.1.3.6.

4.1.3.3. Phrase

A phrase, according to classical terminology, is a word or a consecutive word sequence which has a specific syntactic role and which we can, consequently, associate with a single category. This unit is defined based on the criterion of substitutability. It is, thus, located among the higher unit of syntax, i.e. the sentence and the word. However, some believe that this concept, as relevant as it is, does not justify by itself that we devote to it a fulllevel of analysis, because the information that a phrase conveys is already present at the level of the words that comprise it (see the previous section).

Each phrase has a kernel of which it inherits the category and the function. A kernel can be a simple word or a compound; it can even be another phrase. Sometimes, a phrase has two or several kernels which are either coordinated or juxtaposed [4.3]:

One of the specificities of the phrase is that it is a recursive unit. In other words, a phrase can have as a unit another phrase as in [4.4], where the prepositional phrase (of the village) are included in the principal phrase, the doctor of the village:

The question that now arises is: how can we identify a phrase with the help of rigorous linguistic tests? In fact, several experiments or linguistic tests allow us to highlight the unitary character of a word sequence, such as commutation, ellipsis, coordination, shift, topicalization, clefting and negation.

Commutation or substitution is the simplest test which consists of replacing a series of words by a single word without changing the meaning or the grammaticality. Pronominalization is the most well-known form of this process as in [4.5]:

Ellipsis is one of these tests, as it allows us to substitute a word sequence with a zero element. In French, noun phrases can be elided, unless they are subjects or obligatory complements, prepositional phrases, as well as phrasal complements (see [4.6]):

As we can see in [4.6], we replace only the complement noun phrase of an indirect object with a null element (a) and we also replace the verb phrase and its complement with a null element (b).

In the case of discursive ellipses, responses to partial questions can be achieved with an ellipsis. In the group of responses [4.7], case (a) represents the possibility of a response without omitting the subject and the object noun phrases. Response (b) provides a possible response with only the complement noun phrase. Response (c) gives us a case of a truncated constituent, while response (d) gives us an example of a response with a very long and, therefore, not acceptable sequence as a constituent.

Coordination can also serve as a test to identify units, because the basic principle of coordination is to coordinate only the elements of the same category and whose result is an element which keeps the category of coordinated elements. Let us look at the examples [4.8]:

As we can see in example [4.8], we can coordinate noun phrases (a), verb phrases (b) or entire sentences (c, d and e).

Dislocations or shifts consist of changing the location of a set of words that form a constituent. There is a variety of shift types, such as topicalization, clefting, pseudo-clefting, interrogative movement, shift of the most important constituent to the right.

Topicalization is the dislocation of a constituent at the head of the sentence by means of a separator such as a comma:

In the series of example [4.9], we have a shift of the noun phrase phonetics in (b) while a shift of the verb phrase write a poem in (d).

Interrogative movement is also a common case of the interrogative that is to separate the complement noun phrase from the object as in [4.10]:

Clefting consists of highlighting a constituent of the sentence CONST by delimiting it, respectively, by a presentative and a relative. Two main types of clefting exist in French: clefting on the subject and clefting on the object. To these, we can also add pseudo-clefting. The patterns that follow these three types are presented in Table 4.1.

Table 4.1. Clefting patterns

Type |

Pattern |

Examples |

Clefting on the subject |

It is CONST. that Y. |

It is John that will go to the market. It is the neighborhood postman that brings the letters every day. |

Clefting on the object |

It is X that CONST. |

It is carrots that John will buy from the market tomorrow evening. It is tomorrow evening that John will buy carrots from the market. |

Pseudo-clefting |

What X has done, it is CONST. |

What he asked the school principal, it is a question. To whom he asked a question, it is the journalist from Washington Post. To whom he asked a question, it is to the school director. |

Restrictive negation (which is sometimes called exceptive) can also be used as a test, because only a constituent can be the focus of the restrictive negation. In fact, from a semantics point of view, it is not truly a negation but rather a restriction that excludes from its scope everything that follows. As we can see in Table 4.2, these excluded elements are constituents which have varied roles.

Table 4.2. Examples of restrictive negation

Example |

Excluded constituent |

The newly arrived worker says only nonsense. |

NP/Direct object complement |

I am only a poor man. |

NP/Subject attribute |

She eats only in the evening with her best friends. |

NP/Adverbial phrase |

She eats in the evening only with her best friends. |

PP/Indirect object complement |

It is only the young from Toulon that says nonsense. |

VP |

He only says nonsense. |

VP |

Note that sometimes restrictive negation excludes more than one constituent at a time as in [4.9] even if, in this case, we can consider the relative act as a genitive construction:

Another test consists of separating a constituent from its neighbor by using the adverbs only or even whose role is to draw the line between the two constituents. Let us look at the examples [4.12]:

As we note in group [4.12], this test does not allow us to identify only a single boundary of a constituent. Consequently, when the delimited element is at the head of the sentence as in (a). On the contrary, in one of the contexts as in the case of (b), we must perform other tests for complete identification.

4.1.3.4. Chunks

Proposed by Steven Abney, these are the smallest word sequences to which we can associate a category as a nominal or verb phrase [ABN 91a]. Unlike phrases, chunks or segments are non-recursive units (they must not have a constituent of the same nature). That is why some as [TRO 09] prefer to call them nuclear phrases or kernel phrases. Just like phrases, chunks typically have a keyword (the head) which is surrounded by a constellation of satellite words (functional). For example, in the tree in the meadow, there are, in fact, two separate chunks: the tree and in the meadow. A more comprehensive example of the analysis is provided in Figure 4.4.

Figure 4.4. Example of an analysis by chunks [ABN 91a]

Although it does not offer a fundamentally different conception on the theoretical level, the adoption of chunks as a unit of analysis has two advantages. On the one hand, it allows us to identify more easily the prosodic and syntactic parallels, because the concept of a “chunk” is also prosodically anchored. On the other hand, the simplification of syntactic units has opened new roads in the field of the robust parsing (see section 4.4.10).

4.1.3.5. Construction

Unlike other linguistic formalisms which establish a clear boundary between morphemes and constituents of higher rank as a syntactical unit, the construction grammar (CG) argues that the two can coexist within the same theoretical framework [FIL 88] (see [GOL 03, YAN 03] for an introduction). According to this concept, grammar is seen as a network of construction families. The basic constructions in this CG are very close to those of the HPSG formalism that we are going to present in detail in section 4.3.2.

4.1.3.6. Sentence

As the objective of syntax is to study the structure of the sentence of which it is the privileged unit, it seems important to understand the structure of this unit. The simplest definition of a sentence is based on spelling criteria according to which it is a word sequence that begins with a capital letter and ends with a full stop. From a syntactic point of view, sentence decomposes in phrases, typically a noun phrase and a verb phrase for a simple sentence. Similarly, it consists of a single clause in the case of a simple sentence or of several clauses in the case of a complex sentence. We distinguish between several types of complex sentences based on the nature of the relationships that link the clauses. These are the following three: coordination, juxtaposition and subordination.

Coordination consists of the comparison of at least two clauses within a sentence by means of a coordinating conjunction, such as and, or, but, neither, etc. (see [4.13]):

Juxtaposition consists of using two joint clauses by a punctuation mark that does not mark the end of a sentence [4.14]. It is, according to some, a particular case of coordination to the extent that we can, in most cases, replace the comma with a coordinating conjunction without changing the nature of the syntactic or semantic relationships of the clauses:

The relationship of subordination implies a relationship of domination between a main clause which serves as the framework in the sentence and a dependent clause which is called subordinate. Often linked by a complementizer which can be a subordinating conjunction (that, when, as, etc.) or a relative pronoun (who, what, when, if, etc.), the subordinating clauses are sometimes juxtaposed without the presence of a subordinating element that connects them as in [4.15]:

Traditionally, we distinguish between two types of subordinates, namely the completives [4.16] and the relatives [4.16] and [4.16]:

In the case of completives, the subordinate plays the role of a completive to the verb of the main clause. Thus, the completive in [4.16a] has the same role as the complement to the direct object of [4.16b]. With regard to relatives, they complement a noun phrase in the main clause that we call antecedent. For example, John and all songs are, respectively, the antecedents of relative subordinate clauses in [4.16c] and [4.16d].

4.1.4. Syntactic typology of topology and agreement

Topology concerns the order in which the words are arranged within the sentence. In general, topology allows us to know the function of an argument according to its position in relation to the verb [LAZ 94]. For example, French is a language with the order SVO (subject-verb-object). Other languages are of the SOV type, such as German, Japanese, Armenian, Turkish and Urdu, whereas languages such as Arabic and Hawaiian are of the VSO type. Note that, depending on the language, this order can vary from fixed to totally variable. We will refer to [MUL 08] for a more complete presentation of the word order in French.

The relationship of agreement consists of a morphological change that affects a given word due to its dependency on another word. They are a reflection of the privileged syntactic links that exist between the constituents of the sentence. In French, it is a mechanism according to which a given noun or pronoun exerts a formal constraint on the pronouns which represent it, on the verbs of which it is a subject, on the adjectives or past participles which relate to it [DUB 94].

4.1.5. Syntactic ambiguity

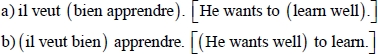

Syntactic ambiguity, which is sometimes called amphibology, concerns the possibility to associate at least two different analyses with a single sentence. Unlike lexical ambiguities, the source of the syntactic ambiguity is not the polysemy of the words that make up the sentence, but rather the differences of dependency relationships that the constituents of the sentence can have. Therefore, we refer to attachment ambiguity of which the most notable case is the attachment of the prepositional phrase see Figure 4.5 for an example.

Figure 4.5. Example of attachment ambiguity of a prepositional phrase

The attachment of the adjective to the nominal group can sometimes cause an ambiguity of syntactic analysis as in [4.17]:

Coordination consists of coordinating two or several elements that have the same syntactic nature. In this situation, there may be an ambiguity when there are two coordinating conjunctions of which we cannot delimit the scope. For example, in the sentence [4.18], we have two conjunctions and and or without knowing whether each of these conjunctions focuses on a simple element (tea, coffee) or on the result of the other conjunction: (sugar and coffee) and (tea or coffee):

Ambiguity can also focus on the attachment of the adverb as in the French examples [4.19]:

In group [4.19], in case (a) the adverb bien (well) depends on the verb apprendre (learn), whereas in case (b) this same adverb depends on the semi-auxiliary veut (wants) with which it also forms a construction of collocation type.

Obviously, syntactic ambiguity directly affects the semantic interpretation of a sentence. In fact, sometimes, multiple analyses that allow the syntax are all semantically interpretable as in the sentences [4.19] and [4.17]; and sometimes there is only a subset of these analyses that is semantically interpretable as in the sentence [4.20]:

We note in the group [4.20] that only structure (a) where the adjective expired qualifies the noun drug can receive a semantic interpretation, whereas (b) where the adjective expired qualifies the noun atherosclerosis does not have a particular meaning. With this example, we return to the concept of grammaticality which was discussed at the beginning of this section.

4.1.6. Syntactic specificities of spontaneous oral language

In the field of speech, quite a considerable number of studies has focused on phonetic and phonological aspects. However, syntax, which is a central discipline in linguistics, is the only one to remain subject to the reign of the scripturocentrism as highlighted in [KER 96]. In fact, syntactic studies have focused primarily on the written word while neglecting the oral dimension, which was considered as an impoverished and sometimes deviant form of writing. The lack of linguistic resources due to the difficulties of collecting and transcribing spoken dialogues (see [BLA 87] for a general review of these issues), as well as the relatively limited importance of the syntactic processing of the spoken language before the 1990s are some other reasons for this delay.

4.1.6.1. Topology in spoken language

Spoken language does not seem to obey the same standard as the written text regarding word order. For example, the utterances of Table 4.3 are perfectly possible in a spoken conversation or in a pseudo-writing used in discussion forums on the Internet, while they are not acceptable in a standard written text:

Table 4.3. A few examples of variation of the word order at the oral framework

Example |

Structure |

Order of elements |

My notebook I forgot it at home |

Anteposition of an NP |

OSV |

At 200 meters you will find a pharmacy |

Anteposition of an PP |

OSVO |

Me my father I love him very much |

Double marking |

SOSVO |

The question that arises is to know what is the importance of these cases in terms of frequency in the spoken conversations and then to know whether this frequency depends on the syntactic context (i.e. is it more important in a syntactic context C1 than in another syntactic context C2?). [ANT 01] have tried to answer these questions in their study which is based on three corpora of spoken French1. Thus, these researchers have shown that in ordinary situations the finalized language respects the privileged sequencing.

4.1.6.2. Agreement in gender and number

According to the constructions, agreement is often respected in the spoken language, but not always. For example, non-respect of the agreement between the noun and/or its adjectives is very rare, while non-agreement in gender between the attribute and the word to which it relates is very frequent [4.21] [SAU 72]:

4.1.6.3. Extragrammaticalities of the oral language

Extragrammaticalities, unexpected structural elements, spontaneities and disfluencies are among other terms that have been proposed in the literature to designate the spontaneous phenomena of the spoken language such as hesitation, repetition, self-correction, etc. [LIC 94, SHR 94, HEE 97, COR 99, MCK 98]. Each of these terms has its motivation. The term “extragrammaticality” which has been adopted by [CAR 83] seems to be the most appropriate, because it is sufficiently general and specific to cover the different spontaneous phenomena of the spoken language which do not depend directly on the syntax of the language. Three key phenomena are distinguished within extragrammaticalities: repetitions, self-corrections and false starts.

Repetition is the repetition of a word or a series of words. It is defined on purely morphological criteria. Consequently, the formulation and the paraphrase of a utterance or a segment (where we repeat two segments that have the same meaning) are not considered as repetitions: it would be a Paris Delhi flight rather than a flight a domestic flight.

Repetition is not always a redundancy. It can also have a communicative function. For example, when a speaker is not sure if his message (or a part of his message) will be perceived clearly by its audience because of a poor articulation, a noise in the channel, etc., he repeats it. In addition, repetition is a quite common pragmatic means to mark an affirmation or an insistence as in the utterance [4.22]:

In the utterance 4.22, the repetition of the word yes has an affirmative function.

Self-correction is to replace a word or a series of words with others in order to modify or correct the meaning of the utterance. Self-correction is not completely random and often focuses on a segment that can have one or several phrases [COR 99]. That is why it is frequently accompanied by a partial repetition of the corrected segment. Let us look at the utterance [4.23]:

In this utterance, self-correction is performed by repeating the segment I have and by replacing the word a with the word the. We note that the two words have the same morphological category (definite article) and the same syntactic function (determiner).

A false start is to abandon what has been said and starting over with another utterance. Syntactically, this is manifested with the succession of an incomplete (or an incorrectly formed) segment and with a complete segment. Let us look at the utterance [4.24]:

Unlike self-correction, there is no analogy between the replaced segment and the rest of the utterance. Thus, we can notice in the example [4.24] that the abandoned segment it is at has almost no relationship with this is taken…. This form of extragrammaticality is the most difficult to process given that the detection criteria (essentially the incompleteness of a segment) are very vague and can lead to many problems both of overgeneration and of undergeneration.

4.2. Elements of formal syntax

4.2.1. Syntax trees and rewrite rules

After reviewing the different constituents of a sentence and the subtleties of the relationships that may exist between these constituents, we will now address the question of the analysis of the sentence by assembling the pieces of the puzzle. To do this, we will begin with phrases and end with complex sentences.

The idea to use rules to describe the syntax of a particular language goes back to the beginning of the past century. It was formalized in the 1950s, particularly with [CHO 56, BAC 59].

A syntax tree is a non-oriented (there is not a predetermined direction to traverse the tree) and acyclic (we cannot traverse the tree and then return to the starting point) graph consisting of nodes connected by arcs. A node represents a constituent or a morphological or syntactic category connected by an arc with the dominant node. Each constituent must be directly dominated by the corresponding category. Inspired by family relationships, the dominant node is called “parent node” and the dominated node is called “child node”. Similarly, the highest node of a tree (the one that dominates all other nodes) is called the “root node” of this tree, whereas the lowest nodes in the hierarchy and which, therefore, do not dominate other nodes are called “leaf nodes”. In syntax trees, each word is dominated by its morphological category and phrases by their syntactic categories. Logically, the S (Sentence) is the root node of these trees and the words of the analyzed sentence are the leaf nodes.

Let us begin with the noun phrases. As we have seen, a noun phrase can consist of a proper noun only in a common noun surrounded by a wide range of varied satellite words. Let us look at the phrases in Table 4.4.

Table 4.4. Examples of noun phrases and their morphological sequences

Noun phrase |

Sequence of categories |

John |

NP |

My small red car |

Det Adj N Adj |

The house of the family |

Det N prep Det N |

As we can see in Table 4.4, each noun phrase is provided with the sequence of morphological categories of which it is composed. This sequence provides quite important information in order to understand the syntactic structure of these phrases, but it is, however, not sufficient because it omits the dependency relationships between these categories. To fill this lack of information, syntax trees can shed light on both the order of constituents and their hierarchy. Let us examine the syntax trees of the phrases provided in Figure 4.6.

Figure 4.6. Syntax trees of some noun phrases

As we note in Figure 4.6, all the trees have an NP as a root node (the highest node) since they all correspond to noun phrases. Tree (a) is the simplest one since it is a proper noun which is capable of achieving a noun phrase without another constituent. In tree (b), the kernel of the phrase, the noun car, is qualified by an anteposed adjective small and by a postponed adjective red. Tree (c) shows how a prepositional phrase acts as a genitive construction. This prepositional phrase consists in turn of a preposition and a noun phrase.

A rewrite rule is an equation between symbols of two types: terminal and non-terminal symbols. Terminal symbols are symbols that cannot be replaced by other symbols and which correspond to words or morphemes of the language in question. Non-terminal symbols are symbols that can be replaced by terminal symbols or other non-terminal symbols. In grammar, they correspond to morphological categories such as noun, verb and adjective or to syntactic categories such as NP, VP, PP, etc.

The transformation of the syntax trees shown in Figure 4.6 in rewrite rules provides the grammar in Figure 4.7.

Figure 4.7. Grammar for the structures as shown in Figure 4.6

Let us look at the noun phrase of the Figure 4.8. It is a noun phrase which includes an adverb and an adjective. What is specific in the phrase is that the adverb does not modify the noun but rather the adjective which in turn qualifies the noun. To understand this dependency relationship, the creation of an adjective phrase seems to be necessary.

Figure 4.8. Syntax trees and rewrite rules of an adjective phrase

One of the specificities of natural languages is the production capacity of an infinite number of sentences. Among the sources of this generativity is the ability to emphatically repeat the same element especially in the spoken language. Let us look at the sentence [4.25], where we can repeat the adjective an indefinite number of times:

This poses a problem because we have to repeat the same rule each time with an increase in the number of symbols (see grammar in Figure 4.9).

Figure 4.9. Grammar for the structures presented in Figure 4.8

An elegant and practical solution to this problem is to use recursive rules that contain the same non-terminal symbol, both in its left and right hand sides (grammar in Figure 4.10).

Figure 4.10. Grammar for the noun phrase with a recursion

According to the first rule of the mini grammar in Figure 4.10, a noun phrase consists of a determiner, a noun and an adjective phrase. According to our second rule, an adjective phrase consists of an indefinite number of adjectives: it adds an adjective and is called the rule of the adjective phrase. According to the third rule, the adjective phrase can consist of a single adjective and has the function to stop the looping to infinity of the second rule.

The adverbial phrase has for a kernel an adverb which can be modified by another adverb as in [4.26]:

Note that in order to avoid circularity in the analysis, we must distinguish the adverbs of degree (very, little, too, etc.) from other adverbs in the rewrite rules. This provides the rules of the form: AdvP → AdvDeg Adv.

The verb phrase is, as we have seen, the kernel of the sentence because it is the bridge that connects the subject with potential complements. Let us look at the sentences [4.27] with different complements:

These three sentences are analyzed with the syntax tree of the Figure 4.11.

Figure 4.11. Examples of VP with different complement types

Complex sentences is another type of syntactic phenomena which deserves to be examined in the framework of syntactic grammar. What is significant at this level is to be able to represent the dependencies of the clauses within the sentence.

Completives consist of adding a subordinate which acts as a complement in relation to the verb of the main clause. Thus, the overall structure of the sentence remains the same regardless of the type of the complement. The question is: how should this phrasal complement be represented? To answer this question, we can imagine that the complex sentence has a phrase of a particular type (SPh (Sentence Phrase)) which begins with a complementizer (comp) followed by the sentence. Figure 4.12 shows a parallel between a phrasal complement and a pronominal complement (ordinary NP).

Figure 4.12. Analysis of two types of sentences with two types of complements

The processing of relatives is similar to the processing of cleft sentences to the extent that we consider the subordinates as phrasal complements. Naturally, in the case of relative subordinates, the attachment is performed at the noun phrase as in Figure 4.13.

Coordinated sentences consist of two or several clauses (simple sentences) connected with a coordinating conjunction. The analysis of this type of structures is quite simple since it implies a symmetry of the coordinated constituents dominated by an element that has the same category as the coordinated elements. This rule applies both at the level of constituents and at the level of the entire sentence (see Figure 4.14).

Figure 4.13. Example of analysis of two relative sentences

Figure 4.14. Examples of the coordination of two phrases and two sentences

We should also note that syntax trees are a very good way of highlighting the syntactical ambiguity which is manifested by the allocation of at least two valid syntax trees for the same syntactic unit (see Figure 4.15).

Figure 4.15. Two syntax tree for a syntactically ambiguous sentence

At the end of this section, it seems necessary to note that phrase structure grammars, despite their simplicity and efficiency, are not a perfect solution to understand all syntactic phenomena. In fact, some linguistic cases pose serious problems for the phrase structure model [4.28]:

4.2.2. Languages and formal grammars

Formal language is a set of symbol strings of finite length, constructed on the basis of a given vocabulary (alphabet) and which is sometimes constrained by rules that are specific to this language (see [WEH 97, XAV 05] for a detailed introduction). Vocabulary, which is conventionally represented by lowercase letters of the Latin alphabet, corresponds to the words of the language which are the produced strings. To describe a formal language, the simplest way is to list all the strings produced by this language. For example, L1= {a, ab, ba, b}. The problem is that formal languages often produce an infinite number of strings whose listing is impossible. This requires the use of a formulation which characterizes the strings without having to list them all.

Let us look at L2, a formal language which includes all non-null sequences of the symbol a: L2 = {a, aa, aaa, aaaa, ….}. As it is impossible to list all the words of the language, we can use an expression of the form: {ai│i ≥1} where no limit is imposed on the maximum value of i, so as to be capable of generating an infinite number of strings. For a linguistic initiation to phrase structure and formal grammars, we refer to the books by Lélia Picabia and Maurice Gross [PIC 75, GRO 12].

When we refer to formal language, it is intuitive to evoke the concept of formal grammar. In fact, a formal language can be seen as the product of a grammar that describes it. Formally, such a grammar is defined by a quadruplet: G = (VN, VT, S, S) where:

- – VN: the non-terminal vocabulary;

- – VT: this vocabulary brings together all of the terminals of the grammar, which are commonly called the words of the language;

- – P: the set of the rewrite rules of grammar (production rules);

- – S: sometimes called an axiom, it is a special element of the set VN which corresponds to well-formed sentences.

Note that the sets VN and VT are disjointed (their intersection is zero) and that their union forms the vocabulary V of the grammar. In addition, V* denotes the set of all the strings of finite length which are formed by the concatenation of elements taken from the vocabulary including the null string and V+ is equal to V* except for the fact that it does not contain the empty string: V+ = V*− {Φ}.

Rewrite rules have the following form: α→β where α ![]() V+ ( however, it contains a non-terminal element) β

V+ ( however, it contains a non-terminal element) β ![]() V *.

V *.

Conventionally, lowercase letters at the beginning of the Latin alphabet are used to represent the terminal elements a, b, c, etc. We also use lowercase letters at the end of the Latin alphabet to represent strings of terminal elements w, x, z, etc. and the uppercase letters A, E, C, etc. to represent non-terminal elements. Finally, lowercase Greek letters represent the strings of terminal and non-terminal elements α, β, γ, δ, etc.

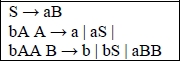

Let us consider a formal grammar G = (VN, VT, P, S) with:

The language defined by this grammar has the following form: L (G) = a*b. This means that the strings which are acceptable by this language are formed by a sequence of zero or more occurrences of a followed by a single occurrence of b: b, ab, aab, aaab, aaaab, etc.

To generate these language strings from the grammar, we begin with the special symbol S. We apply the set of rules which have the letter “S” in their left-hand side until there are no more terminals in the input string. The process to obtain strings from a grammar is called derivation.

Thus, in our grammar, the simplest derivation is to apply the rule #2 to replace the symbol S with the terminal b: S => b. Similarly, we can derive the sentence aab by the successive application of the rule #1 twice and the rule #2 only once: S => aS => aaS => aab.

4.2.3. Hierarchy of languages (Chomsky–Schützenberger)

After having presented languages and formal grammars, we can rightfully ask the following questions. What is the expressive power of a particular grammar? In other words, can a grammar G1 describe all the languages produced by a grammar G2? Can it describe other languages? How can we decide whether two languages described by two different formalisms are formally equivalent? To answer all these questions, the American linguist Noam Chomsky and the French mathematician Marcel Paul Schützenberger have proposed a framework for classifying the formal grammars and the languages they generate according to their complexity [CHO 56, CHO 63]. As this framework is capable of characterizing all recursively enumerable languages, it is commonly referred to as the hierarchy of languages or Chomsky hierarchy. It is a typology which includes four types of grammars, each included in the higher type and numbered between 0 and 4.

Type-0 grammar, which is also called unrestricted grammar, is the most general form of the grammar because it allows us to transform an arbitrary non null number of symbols into an arbitrary number of symbols (potentially zero symbols). It refers to grammar which accepts, for example, the rules whose right-hand side is longer than their left-hand side, as the rule: A N E → a N.

Although they are the most general, these types of grammar are the least useful for linguists and computer scientists. The languages generated by a grammar of this type are called recursively enumerable languages and the tool to recognize them is the Turing machine2.

Type-1 grammar, which is also called context-sensitive grammar, are types of grammars whose rules follow the following pattern: αAβ → αγβ.

With a non-terminal symbol A and sequences of terminal or non-terminal symbols α, γ and β knowing that α and β can be empty unlike γ. Another characteristic of these grammars is that they do not accept rules whose right-hand side is longer than the left-hand side.

The typical language generated by this type of grammars is of the form: an bn cn. This language can be generated by the grammar shown in Figure 4.17.

Figure 4.17. Grammar for the language an bn cn

The grammar in Figure 4.17 allows us to generate strings such as: abc, aabbcc, aaabbbccc, etc.

Figure 4.18. Syntax tree for the strings: abc and aabbcc

As we can see in Figure 4.18, the derivation of the abc string is performed in a direct way with a single rule: S → abc, whereas the derivation of the aabbcc string requires contextual rules such as: bEc → bbcc.

Type-1 grammar has been used for syntactic and morphological analysis particularly with augmented transition networks.

Type-2 grammar, which is called context-free grammar or context free grammar (CFG) is a type of grammar in which all rules follow the pattern: A → γ. With a non-terminal symbol A and a sequence of terminal and non-terminal symbols γ. The typical language generated by Type-2 grammar is: anbn (see Figure 4.19 for an example).

Figure 4.19. The derivation of strings: ab, aabb, aaabbb

To obtain the string ab in tree [4.19a], it is sufficient to apply the first rule: S → a B and then we replace “B” with “b” with the rule B → b. In tree (b), we apply the rule: B → a B b only once, whereas in tree (c) we must apply it twice.

Thanks to its simplicity, Type-2 grammar is quite commonly used, particularly for parsing. The automaton which allows us to recognize this type is the recursive transition network (RTN). As we will see in section 4.4.2, there is a multitude of parsing algorithms of various types to perform parsing of natural languages with context-free grammar.

Several forms, which are called normal, have been proposed to simplify Type-2 grammar without reducing their generative capacities. Among these forms, those of Chomsky and those of Greibach deserve to be addressed. Note that these normal forms retain ambiguity. This means that an ambiguous sentence generated by a Type-2 G grammar is also ambiguous in languages G’ and G’’, which are the normal forms of this grammar according to Chomsky and Greibach formats, respectively. Finally, note that the equivalence between a CFG and its standardized form is low because, although both generate exactly the same language, they do not perform the analysis in the same way.

Chomsky’s normal form imposes a clear separation between the derivation of terminal elements and the derivation of non-terminal elements. Thus, a grammar is said to be in Chomsky normal form if its rules follow the three following patterns: A →B C, A → a, S → ϵ.

Where A, B and C are non-terminals other than the special symbol of the grammar S. a is a terminal and ϵ represents the empty symbol whose use is only allowed when the language generated by the grammar is considered as a well-formed string. The constraints imposed by this normal form are that all structures (syntax trees) associated with sentences generated by a grammar in Chomsky normal form are strictly binary in their non-terminal part.

If we go back to the grammar in Figure 4.20, we note that only the rule: B→ a B b violates the diagrams imposed by Chomsky’s normal form, because its right-hand side contains more than two symbols. Thus, to make our grammar compatible with Chomsky’s normal form, we make the changes provided in Figure 4.20.

Figure 4.20. Example of a grammar in Chomsky normal form with examples of syntax trees

Note that in linguistic grammars, to avoid the three-branches rules as the one provided in Figure 4.21: NP → Det N PP, some assume the existence of a unit equivalent to a simple phrase that is called group: noun, verb, adjective, etc. (see Figure 4.21).

Figure 4.21. Syntax tree of an NP in Chomsky normal form

Grammar is said to be in Greibach normal form if all of its rules follow the two following patterns [GRE 65]: A→a and A → aB1 B2. . . Bn,

Let us consider the grammar in Figure 4.22 for an example of the grammar in Greibach normal form.

Figure 4.22. Example of grammar in Greibach normal form

It should be noted that there are algorithms to convert any Type-2 grammar in a grammar in Chomsky or Greibach normal form. We should also note that beyond their theoretical interest, some parsing algorithms require standardized grammar.

Type-3 grammar, which is sometimes called regular grammar, is a type of grammar whose rules follow the two following patterns: A → a and A → a B.

Where A and B are non-terminal symbols, whereas a is a terminal symbol. This is a typical G grammar of regular languages which generates the language: L (G) = anbm with n, m > 0 (see Figure 4.23).

Figure 4.23. Regular grammar that generates the language anbm

Finally, we should mention that it is formally proved that the languages generated by a regular grammar can also be generated by an finite-state automaton.

A particular form of regular grammar has been named “Type-4 grammar”. The rules in this grammar follow the diagram: A → a. In other words, no non-terminal symbol is allowed in this type of grammar. This grammar, which is of limited usefulness, is used to represent the lexicon of a given language.

Table 4.5 summarizes the properties of the types of grammar that we have just reviewed.

Table 4.5. Summary of formal grammars

| Type | Form of rules | Typical example | Equivalent model |

| 0 | α → β (no limits) | Any calculable function | Turing machine |

| 1 | αAβ → αγβ | anbncn | Augmented Transition Networks (ATN) |

| 2 | A → γ | anbn | Recursive Transition Networks (RTN) |

| 3 | A → a A → aB |

a* = an | Finite-state automata |

The presentation of the different categories of formal languages and their principal characteristics leads us to the following fundamental question: what type of grammar is able to represent all the subtleties of natural languages? To answer this question, we are going to discuss the limits of each type based on Type-3 grammar.

From a syntactic point of view, natural languages are not regular because some complex sentences have a self-embedded structure which requires Type-2 grammar for their processing (examples [4.29]):

To understand the differences between the abstract structures of complex sentences, let us look at Figure 4.24.

Figure 4.24. Types of branching in complex sentences

The syntax of natural languages is not independent of context because, in a language such as French, there is a multitude of phenomena, whose realization requires the consideration of the context. For example, we can mention the following: agreement, unbounded dependencies and passive voice.

Agreement exists between certain words or phrases in person, number and gender. In a noun phrase, the noun, the adjective and the determiner agree in gender and number. In a verb phrase, the subject and the verb agree in person and number. To integrate the constraints of agreement in a Type-2 grammar, we must diversify the non-terminals and then create as many rules for the possible combinations. The rule S → NP VP will be declined in six different rules as in the context-free grammar shown in Figure 4.25.

Figure 4.25. Type-2 grammar modified to account for the agreement

This solution significantly reduces the generative power of grammar and makes its management very difficult in practice, because non-terminals are difficult to be read by humans. Some cases of agreement are even more complicated, as the agreement between the post-verbal attributive adjective of an infinitive and the subject noun phrase [4.30]:

Type-2 grammar does not allow us to specify the sub-categorization of a verb predicate (the required complements) or to require that the verb does not have complements. Here a possible solution is also to vary categories. These types of grammar do not allow the expression of structural generalizations such as the relationship between the passive and the active voice.

4.2.4. Feature structures and unification

The formalisms that we have just seen allow us to represent the information on the hierarchical dependencies of the constituents and their order. We have seen that with the grammar in Figure 4.25, it is quite important to take into account the relationships of agreement between these different constituents with a simple phrase structure grammar. The most current solution in the field of linguistics to solve this problem is to enrich the linguistic units with feature structures (FS), which contain information of different natures (morphological, syntactic and semantic) and to express the possible correlations among them. They also allow us to refine the constraints at the lexicon level and, thus, to simplify the rules of grammar. For more information on feature structures and unification, we refer to [SHI 86, CHO 91, WEH 97, SAG 03, FRA 12].

As we have seen in the sphere of speech, the first use of features in modern linguistics was proposed in the field of phonology, particularly with studies by Roman Jacobson, Noam Chomsky and Morris Halle. Since the 1980s, FSs have been adopted in the field of syntax, particularly in the framework of formalisms based on unification. It is a family of linguistic formalisms that allows us to specify lexical and syntactic information. The main innovation of these formalisms lies on two points: the use of features for encoding of information and the unification operation for the construction of higher constituents than the word level.

The unification operation is applied on features in order to test, compare or combine the information they contain. The origin of the unification concept is double: on the one hand, it comes from the studies on the logic programming language “Prolog” [COL 78, CLO 81] and on the other hand, it is the result of studies in theoretical and computational linguistics on the functional unification grammar (FUG) [KAY 83], the lexical functional grammar (LFG) [BRE 82, KAP 83] and the generalized phrase structure grammar (GPSG) [GAZ 85].

We distinguish two types of feature structures: atomic feature structures in which all features have a simple value and complex feature structures (CFS) where features can have other feature structures as a value. Below is the structural feature of the noun “house” and of the verb “love” which are shown in Figure 4.26. For example, the verb (FS b) has two features: an atomic feature VerbType and a complex feature Agreement.

Figure 4.26. Feature structures of the noun “house” and of the verb “love”

Beyond the words, all other levels, such as phrases, clauses and sentences, can be enriched by CFSs. To clarify the enrichment of supralexical units with features, let us take a simple sentence such as [4.31]:

As there is no consensus as to the necessary features for the analysis of a sentence, the linguistic theories which adopt the features as a mode of expression of linguistic properties differ on this point. Thus, we can adopt the following features to analyze the sentence [4.31]:

- – category: specifies the grammatical category of the sentence: S;

- – head: corresponds to the head of the sentence which is the verb and specifies the features: tense, voice, and number;

- – subject: noun phrase which is an argument of the verb;

- – object: noun phrase which is an argument of the verb.

This provides the CFS presented in Figure 4.27.

Figure 4.27. CFS of a simple sentence

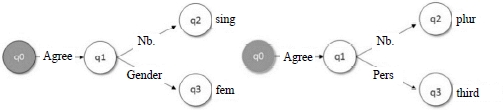

A variant of the feature structures was proposed by Aït-Kaci [AIT 84] where each structural feature has a type which limits the features that can be included, as well as the values that the atomic features can have. This concept is comparable to the types in the framework of the object-oriented programming. For example, the complex feature subject can understand the category and agreement features and the atomic feature Nb. can have the values: sing and plur.

We should also note that CFSs can take the form of feature graphs, such as the examples of the feature structures presented in Figure 4.28.

Figure 4.28. Feature graphs for the agreement feature for the words “house” and “love”

As we see in Figure 4.28, feature graphs are oriented, as we cannot navigate in the direction of the arrow (or arc). As these graphs are a data structure whose mathematical properties are well-known, this allows us to improve our understanding of CFS properties. Such a graph can be seen as a quadruplet: {Q, q’, δ, θ}. Let us look at the graph (a) in Figure 4.28 as an example, to make our explanation more concrete.

- – Q is a finite set of nodes: Q = {q0, q1, q2, q3};

- – q’ ϵ Q is the initial state of the graph q’ = q0. As we can note, in Figure 4.28, the initial state of this graph is colored to differentiate it from other states;

- – δ represents a partial function in the graph such as: δ(q0, Agreement)= q1, δ(q1, Nb.)= q2, δ(q1, Gender)= q3;

- – θ represents a leaf that corresponds to a feature. Thus: θ(q2)=sing, θ(q3)=fem.

The representation of the features in the form of a graph leads us to the path concept which is intimately associated with this. A path is a sequence of features used to specify a particular component of a structural feature. The paths that interest us are those that connect the initial element and a leaf. If we accept that a path is part of our CFS: π ϵ CFS, we can say that our function δ(q, π) provides the value of path π from the node q. Now, if two different paths begin with the initial state of the graph and ultimately lead to the same node, we then say that there is a reentrancy relationship between these two paths. Formally, we can express this as: δ(q0, π) = δ(q0, π’) and π≠π’. By extension, a structural feature is called reentrant if it contains two features that share a common value. It is appropriate to distinguish the reentrancy of cases where two different paths lead to two different features which occasionally have the same value. In this kind of case, we refer to paths of similar values. Let us consider the examples of CFSs in Figure 4.29 to clarify this distinction.

Figure 4.29. Example of structures of shared value and of a reentrant structure

As we can see in Figure 4.29, structures of similar values consist of an occasional resemblance between the values of different features (q3 and q4). This means that it is not a constraint imposed by grammar but a simple coincidence. A current example is when two noun phrases, subject and object direct, share the same features of agreement in gender and number. By contrast, the reentrant features are the shared product of two different paths of the same feature (q4) as in the constraints on the agreement between two different constituents as the subject noun phrase and the verb phrase.

In matrix form, reentrant features are marked with specific indices. Thus, the symbol ![]() in Figure 4.30 means that the features f and g must have the same value.

in Figure 4.30 means that the features f and g must have the same value.

Figure 4.30. Example of structures of shared value and of a reentrant structure

If we approach the issue of the comparison of two feature structures, a key question arises: are there any CFSs which are more generic than others? To answer this question, the concepts of subsumption and extension have been proposed.

Subsumption is a partial order relationship that we can define on feature structures and which focuses both on the compatibility and the relative specificity of the information they contain. It is noted with the help of the symbol: ⊆. Let us look at the two feature structures FS and FS’. We say that FS is a subsumption of FS’ if all information contained in FS is also present in FS’.

In Figure 4.31, we have three CFSs that maintain the following subsumption relationships: a ⊆ b and a ⊆ c and b ⊆ c. In other words, as the CFS a is the least specific and consequently more abstract, it subsumes the structures b and c which are more generic. Obviously, the subsumption between these structures would not exist without the compatibility between these three structures. If we go back to Figure 4.26, the feature of agreement of the structure (a) does not subsume the feature of agreement of (b) and vice versa, because of the incompatibility between the two features: the feature gender in (a) and the feature pers in (b).

Figure 4.31. Examples of feature structures with subsumption relationships

Extension is the inverse relationship of subsumption. It can be defined in the following manner: let us consider two structures FS and FS’. We can say that FS’ is an extension of FS, if and only if: all atomic features of FS’ are present in FS with the same values, and if for all non-atomic features ti, in FS’, there is an atomic feature ti’ in FS such that the value of ti’ is an extension of the value of the feature ti.

Naturally, all feature structures are not in a relationship of extension or subsumption. In fact, some CFSs can contain different but compatible information, such as the relationship between the object and the subject in matrix (c) as shown in the Figure 4.25. In addition, the structures “subject” and “head” are both different and incompatible.

We have seen that the syntactic analysis process consists of combining the representations of syntactic units to achieve a representation of the structure of the sentence. We have also seen examples of syntactic operations which allow us to combine words and phrases, etc. The question which arises at this stage is: how can we combine the representations of constituents enriched with features? The answer to this question is to use the unification operation. It is an operation which determines, from a set of compatible structures, a structure that contains all the information present in each of the members of the set and nothing else. The unification of the two feature structures FS1 and FS2 has, as a result, the smallest structure FS3 which is an extension both of FS and FS’. If such a structure does not exist, then the unification is indefinite. Symbolized by the union operator U, unification can also be reformulated:

Note that the unification operation is both commutative and associative:

As we can see in Figure 4.32, unification can be performed in quite varied configurations. In case (a), it is clear that the unification of a structural feature with itself is possible. Case (b) shows how we can unify the two different structures to obtain a richer structure. Case (c) shows that logically the unification of two incompatible feature structures is impossible because it leads to an inconsistency. In case (d), we see that the null structure acts as a neutral element and unifies with all structures without modifying them. Case (e) shows how we can perform unification with reentrant features. Finally, in case (f), we see a unification operation of more complex linguistic structures.

It should be noted that there are several generic tools that provide implementations of the unification process and which are available to the community. Among the best-known we can mention: PATRII, Prolog, and NLTK.

The logic programming language “Prolog”, as we have seen, is the tool which inspired the first studies and is still valid. In fact, the unification of terms which are provided natively in Prolog significantly reduces the application development time.

PATRII is another tool available to the community in the field of unification. Originally proposed in SRI International by Stuart Shieber, PATRII is both a formalism and a programming environment written in Prolog [SHI 87]. It is possible to use PATRII to implement a limited variety of formalisms. It is based on Type-2 grammars with which FS are associated.

Figure 4.32. Examples of unifications

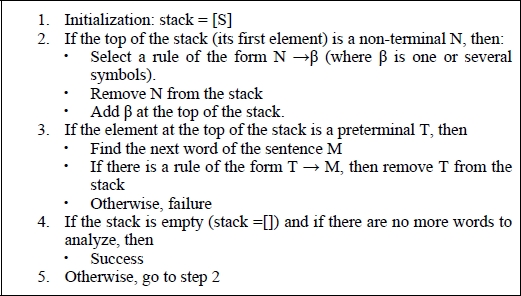

4.2.5. Definite clause grammar

Definite clause grammar (DCG) is a logical representation of linguistic grammars. The first form of this grammar, which was called “metamorphosis grammar”, was introduced in 1978 at the University of Marseille following Alain Colmerauer’s studies, of which the first application was on automatic translation. Afterward, David Warren and Fernando Pereira of the University of Edinburgh proposed a particular case of metamorphosis grammars which were named DCG [PER 80]. DCGs were created to develop and test grammars on the computer, particularly with the logic programming language “Prolog” and more recently with the language “Mercury”. From a functional point of view, DCGs allow us to analyze and generate string lists. Let us look at the DCG in Figure 4.33.

Figure 4.33. DCG Grammar

The first note that we can make in respect of the grammar in Figure 4.31 is that its format is very close to the format of the ordinary Type-2 grammars, apart from a few small details. For example, non-terminals do not begin with a capital letter. As the latter is the indication of a variable according to the syntax of Prolog, terminals are provided in square brackets which are used in Prolog to designate lists. We also note that DCG allows the writing of recursive rules (the p symbol exists in the left and the right-hand side of the rule).

It is also necessary to add that DCGs can be extended to enrich the structures with features (see grammar in Figure 4.34).

Figure 4.34. DCG enriched with FS

Several extensions have been proposed to improve DCGs including XGs by Pereira [PER 81], the definite clause translation grammars (DCTGs) by [ABR 84] and the multi-modal definite clause grammars (MM-DCGs) by [SHI 95].

4.3. Syntactic formalisms

Given the interest in syntax, a considerable number of theories in this field have been introduced. Different reasons are behind this diversity, including the disagreement on the main units of analysis (morpheme, word or phrase), the necessary knowledge to describe these units, as well as the dependency relationships between them.

In this section, we retained three formalisms, including two that are based on unification: X-bar, HPSG and LTAG.

4.3.1. X-bar

Before introducing the X-bar theory, let us begin with a critical assessment of the phrase structure model presented in section 4.2.1. Let us look at the sentences [4.32] and the rewrite rule and the syntax tree of the sentence [4.32a] shown in Figure 4.35:

Figure 4.35. Rewrite rule and syntax tree of a complex noun phrase

The sentence [4.32a] mentions a pharmacist in the city of Aleppo who has a blue shirt and the sentence [4.32b] mentions a reportage of Istanbul about the Mediterranean. Intuitively, we have the impression that the two prepositional phrases in the two cases do not have the same importance. To demonstrate this, it is sufficient to perform a permutation between the two phrases in each of the sentences. This provides the sentences [4.32c] and [4.32d] which are not semantically equivalent, initial sentences ([4.32a] and [4.32b]) whose grammaticality is questionable. Thus, we can conclude that the two prepositional phrases do not have the same status. However, the plain phrase structure analysis proposed in Figure 4.35 assigns equal weight to them. Consequently, a revision of our model seems necessary in order to account for these syntactic subtleties.

Moreover, in the framework of a universal approach to the modeling of the language, we observe that the rules that we have proposed up to now are specific to the grammars of European languages such as English or French and do not necessarily apply to other languages such as Arabic, Pashto or Portuguese.

These considerations, among others, led to the proposal in 1970 of a modified model of the generative and transformational grammar which was named X-bar theory. Initiated by Noam Chomsky, this theory has been developed afterwards by Ray Jackendoff [CHO 70, JAC 77]. It allows us to impose restrictions on the class of possible grammatical categories while allowing a parallel of these latter elements thanks to metarules (generalization of several rules). It is also a strong hypothesis on the structure of constituents across languages. It rests on two strong hypotheses:

- – all phrases, regardless of their categorical nature, have the same structure;

- – this structure is the same for all languages, regardless of the word order.

The term X-bar is explained as follows. The letter X corresponds to a variable in the general diagram of the structure in constituents, which applies to all syntactic categories (N, V, A, S, Adv., etc.). The term bar refers to the notation adopted by this formalism to differentiate the fundamental levels in the analysis. They are noted with one or two bars above the categorical symbol of the head. Usually, for typographical reasons, we replace the notation bar with a prime notation. N”, V”, A” and S” are thus notational variants respectively of NP, VP, ITS, PP. The notation that we have adopted and the alternatives in the literature are presented in Table 4.6.

Table 4.6. Adopted notation and variants in the literature

| Level | Our notation | Alternatives |

| Phrase level | NP | N”, N", |

| Intermediate level | N’ | |

| Head/Word level | N | N0 |

In the framework of the X-bar theory, a phrase is defined as the maximum projection of a head. The head of a phrase is a unique element of zero rank, word or morpheme, which is of the same category as the phrase as in the grammar of the Figure 4.36.

Figure 4.36. Examples of phrases with their heads

This leads us to a generalized and unique form for all rules: SX → X SY, where X and Y are variables. Furthermore, according to the X-bar theory, a phrase accepts only three analysis levels:

- – level 0 (X) = head;

- – level 1 (X) = head + complement(s);

- – level 2 (X) = specifier + [head + complement(s)].

The specifier is usually an element of zero rank, but sometimes a phrase can have this role: NP (spec(my beautiful) N0 (flowers)). The specifier is a categorical property of the head word. For example, the determiner is a property of the category of nouns. Similarly, the complement is a lexical property of the head word: taking two object complements by the verb give is a lexical property of this specific verb, not a categorical property of the verb, in general. Let us examine the diagrams of the general structures of the phrases provided in Figure 4.37.

Figure 4.37. Diagrams of the two basic rules

In the two diagrams shown in Figure 4.37, we have the symbols X, Y and Z which represent the categories, Spec (X) which corresponds to a specifier of and Comp(X) for complement of. The categories specifier and complement designate the types of constituents. Thus, we have:

- – Spec N = D (Det);

- – Spec V = Vaux;

- – Spec A = DEG (degree);

- – Comp X’ = N”.

The hierarchical structure of the two syntactic relationships specifier and complement induces a constraint on the variation of order within the phrases. The X-bar theory predicts that the complement can be located to the left or to the right of the head and that the determiner is placed to the left of the “head+complement” group, or to the right of this group.

If we go back to our starting noun phrase a pharmacist from Aleppo with the blue shirt or even a variant of this phrase with an antepozed adjective to the noun phrase such as good, we obtain the analyses provided in Figure 4.38.

Figure 4.38. Examples of noun phrases

As we observe, the analyses proposed in Figure 4.38 take into account the functional difference between the two prepositional phrases. Similarly, the adjective responsible with respect to the head is processed as a specifier of the head of the phrase.

Although they appear to be acceptable, some researchers have doubted the adaptation of the analyses proposed in Figure 4.39 with respect to the principles of the X-bar theory. In fact, one of the principles, which form the basis of this theory, is to consider that all constituents, other than the head, must themselves be phrases. It is rather motivated by reasons related to the elegance of the theory than by linguistic principles. In the case of a noun phrase, this means that the only constituent that is not phrasal is the noun. However, in the proposed analysis, the determiner is analyzed similarly to the head. To resolve this problem, some have proposed an original approach which is to consider that NP depends in reality upon a determiner phrase whose head is the determiner [ABN 87]. The diagram of an NP according to this approach is provided in Figure 4.39.

Figure 4.39. Diagram and example of a determiner phrase according to [ABN 87]

The processing of the verb phrase follows the standard diagram as can be seen in Figure 4.40.

After having shown how we analyze noun, verb and prepositional phrases in the framework of the X-bar theory, it is time to proceed with the analysis of an entire sentence. We have seen that it is generally easy to adapt the conventional analyses of constituents in the framework of the X-bar theory, but this is less obvious in terms of the sentence itself. Consider the conventional rewrite rule for the sentence: S → NP VP. The question that arises is to determine if the root of the sentence S is a projection of V (the head of VP) or a projection of N (the head of the subject NP). Two syntactic phenomena deserve to be examined prior to decide. Firstly, we must report the phenomenon of agreement between the subject NP and the VP in our sentence analysis. To do this, it would be useful to postulate the existence of a node between the subject NP and the VP, which would establish the link of agreement between these two phrases. Secondly, we can observe differences in the behavior of infinitive verbs and conjugated verbs. The two differ in the place they occupy with respect to the negation markers, certain adverbs and quantifiers. These differences confirm the special relationship which connects the conjugate verb with the subject noun phrase since it does not allow an interruption by these particular words. Invariably, the infinitive verb has no relationship of agreement with the subject (see [ROB 02] for some examples in French).

Figure 4.40. Example of the processing of a verb phrase with the X-bar theory

To account for the relationship between the subject NP and the VP, we can use an intermediate node between these two constituents. The role of this node is to convey the necessary information to the agreement of the verb AGG with the subject, as well as to provide the tense T. This node is called Inflectional Phrase (IP). We can now postulate that the sentence is a maximum projection of IP and that its head is, therefore, IP (see Figure 4.41).

Figure 4.41. Diagram and example of analysis of entire sentences

The analysis of complex sentences is similar, in principle, to the analysis that we have already seen in the previous section. We assume the existence of a phrasal complement (PC) (see Figure 4.2).

Figure 4.42. Analysis of a completive subordinate

Despite its elegance and its scientific interest, the X-bar theory has several limitations. Among them, we should mention the problem of infinitives and proper nouns, where it is difficult to identify the head.

The step that has followed the X-bar theory in the generative syntax was the proposal of the government and binding (GB) theory by Noam Chomsky at the beginning of the 1980s [CHO 81]. We refer to [POL 98, ROB 02, CAR 06, DEN 13] for an introduction to this formalism, of which we will present only the general features.

To account for the different aspects of the language, GB is designed in a modular fashion involving a set of principles of which the most important are:

- – government principle: this principle describes the phenomena of reaction. It addresses all conditions and constraints between the governors and those being governed;

- – instantiation criteria of thematic roles: each lexical head associates the syntactic roles with their arguments (theta roles). Each argument of the sentence must receive a role and each and every role must be distributed. To receive a case, there must be a lexical or governed entity;

- – binding principle: this principle describes the phenomena of co-referential anaphora.

In spite of its high complexity, GB formalism has been the subject of several operations in different application contexts [SHA 93, WEH 97, BOU 98]. GB formalism has, in turn, been the subject of amendments in the framework of Chomsky’s minimalist program [CHO 95].

Finally, it is probably necessary to add that some concepts of the X-bar theory have also found their place in the theories related to generative grammar, including the HPSG formalism, to which we will devote the next section.

4.3.2. Head-driven phrase structure grammar

4.3.2.1. Fundamental principles

Head-driven phrase structure grammar (HPSG) was originally proposed to combine the different levels of linguistic knowledge: phonetic, syntactic and semantic. This formalism is presented as an alternative to the transformational model [POL 87, POL 96, POL 97]. Although it is considered as a generative approach to syntax, HPSG is inspired by several formalisms which come from several theoretical currents, including the generalized phrase structure grammar (GPSG), the lexical functional grammar (LFG) and the categorial grammar (CG). In addition, there are notable similarities between HPSG and construction grammar (CG) which can be noted. In fact, although CG focuses on essentially cognitive postulates, it has several points in common with HPSG, particularly with regard to the flexibility in the processing unit, as well as the representation of linguistic knowledge within these units [FIL 88] (see [GOL 03] and [YAN 03]). The legacy of these unification grammars, heirs themselves of several studies in artificial intelligence and cognitive sciences on the representation and the processing of knowledge, makes HPSG particularly suitable for IT implementations, which explains its popularity in the NLP community.

The processing architecture in the HPSG formalism is based on the single analysis level and does not therefore assume levels distributed as in the LFG formalism with the double structures: functional structure and structure of constituents. This is the same concerning the transformational model which assumes the existence of a deep structure and of a surface structure, or even as the GB model that attempts to explain the syntactic phenomena with the movement mechanism.

From a linguistic point of view, HPSG is composed of the following elements:

- – a lexicon: which groups the basic words which are, in turn, complex objects;

- – lexical rules: for derivative words;

- – immediate dominance patterns: for structures of constituents;

- – rules of linear precedence: which allow us to specify word order;

- – a set of grammatical principles: which allow us to express generalizations about linguistic objects.

Since the complete presentation of a formalism as rich as the HPSG is difficult to achieve in a small section such as this, we refer the reader to the articles by [BLA 95, DES 03], as well as to Anne Abeillé’s book [ABE 93] (in particular, the chapters on the GPSG and HPSG formalisms). We also refer to Ivan Sag’s book and his collaborators [SAG 03] which constituted the primary source of information for this section.

4.3.2.2. Feature structures