Chapter 3: Threat Modeling

Kubernetes is a large ecosystem comprising multiple components such as kube-apiserver, etcd, kube-scheduler, kubelet, and more. In the first chapter, we highlighted the basic functionality of different Kubernetes components. In the default configuration, interactions between Kubernetes components result in threats that developers and cluster administrators should be aware of. Additionally, deploying applications in Kubernetes introduces new entities that the application interacts with, adding new threat actors and attack surfaces to the threat model of the application.

In this chapter, we will start with a brief introduction to threat modeling and discuss component interactions within the Kubernetes ecosystem. We will look at the threats in the default Kubernetes configuration. Finally, we will talk about how threat modeling an application in the Kubernetes ecosystem introduces additional threat actors and attack surfaces.

The goal of this chapter is to help you understand that the default Kubernetes configuration is not sufficient to protect your deployed application from attackers. Kubernetes is a constantly evolving, community-maintained platform, and the severity of a threat varies with every environment, so some of the threats highlighted in this chapter do not have mitigations.

This chapter aims to highlight the threats in the Kubernetes ecosystem, which includes the Kubernetes components and workloads in a Kubernetes cluster, so developers and DevOps engineers understand the risks of their deployments and have a risk mitigation plan in place for the known threats. In this chapter, we will cover the following topics:

- Introduction to threat modeling

- Component interactions

- Threat actors in Kubernetes environments

- The Kubernetes components/objects threat model

- Threat modeling applications in Kubernetes

Introduction to threat modeling

Threat modeling is a process of analyzing the system as a whole during the design phase of the software development life cycle (SDLC) to identify risks to the system proactively. Threat modeling is used to think about security requirements early in the development cycle to reduce the severity of risks from the start. Threat modeling involves identifying threats, understanding the effects of each threat, and finally developing a mitigation strategy for every threat. Threat modeling aims to highlight the risks in an ecosystem as a simple matrix with the likelihood and impact of the risk and a corresponding risk mitigation strategy if it exists.

After a successful threat modeling session, you're able to define the following:

- Asset: A property of an ecosystem that you need to protect.

- Security control: A property of a system that protects the asset against identified risks. These are either safeguards or countermeasures against the risk to the asset.

- Threat actor: An entity or organization, such as a script kiddie, nation-state attacker, or hacktivist, that exploits risks.

- Attack surface: The part of the system that the threat actor is interacting with. It includes the entry point of the threat actor into the system.

- Threat: The risk to the asset.

- Mitigation: Mitigation defines how to reduce the likelihood and impact of a threat to an asset.
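The terms above can be captured as one row of a risk matrix. The following is a minimal sketch, not a standard schema: the `Level` scale, the `ThreatEntry` fields, the likelihood-times-impact score, and the two example rows (including their ratings) are all assumptions made for illustration.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import List, Optional


class Level(IntEnum):
    """Hypothetical three-point scale for likelihood and impact."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass(frozen=True)
class ThreatEntry:
    """One row of a threat model, using the terms defined above."""
    asset: str
    threat: str
    threat_actor: str
    attack_surface: str
    likelihood: Level
    impact: Level
    security_control: Optional[str] = None
    mitigation: Optional[str] = None  # None when no mitigation exists

    @property
    def risk_score(self) -> int:
        # Assumed scoring rule: likelihood multiplied by impact (1 to 9).
        return int(self.likelihood) * int(self.impact)


def rank_by_risk(entries: List[ThreatEntry]) -> List[ThreatEntry]:
    """Return the entries with the highest risk score first."""
    return sorted(entries, key=lambda e: e.risk_score, reverse=True)


# Illustrative rows only; the ratings are invented for this example.
EXAMPLE_ENTRIES = [
    ThreatEntry(
        asset="web application",
        threat="Service is flooded with requests",
        threat_actor="script kiddie",
        attack_surface="public load balancer",
        likelihood=Level.MEDIUM,
        impact=Level.MEDIUM,
    ),
    ThreatEntry(
        asset="etcd",
        threat="Stored cluster data is read in plaintext",
        threat_actor="attacker with access to the etcd host",
        attack_surface="etcd data directory",
        likelihood=Level.MEDIUM,
        impact=Level.HIGH,
        mitigation="Enable encryption at rest",
    ),
]
```

Calling `rank_by_risk(EXAMPLE_ENTRIES)` puts the etcd row first, which is the ordering a team would use to decide which mitigation to work on first.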

The industry usually follows one of the following approaches to threat modeling:

- STRIDE: The STRIDE model was published by Microsoft in 1999. It is an acronym for Spoofing, Tampering, Repudiation, Information Disclosure, Denial of Service, and Elevation of Privilege. STRIDE models threats to a system to answer the question, 'What can go wrong with the system?'

- PASTA: Process for Attack Simulation and Threat Analysis is a risk-centric approach to threat modeling. PASTA looks at the system from the attacker's perspective, and business and technical teams use the result to develop asset-centric mitigation strategies.

- VAST: Visual, Agile, and Simple Threat modeling aims to integrate threat modeling across application and infrastructure development with SDLC and agile software development. Its visualization scheme gives actionable outputs to all stakeholders, such as developers, architects, security researchers, and business executives.

There are other approaches to threat modeling, but the preceding three are the most used within the industry.
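As an illustration of how STRIDE turns "What can go wrong with the system?" into concrete questions, here is a minimal sketch. The mapping of each category to the security property it violates is the commonly cited one (with the last category named Elevation of Privilege); the `Stride` enum and the `checklist` helper are hypothetical names used only for this example.

```python
from enum import Enum
from typing import List


class Stride(Enum):
    """STRIDE categories, each mapped to the security property it violates."""
    SPOOFING = "authentication"
    TAMPERING = "integrity"
    REPUDIATION = "non-repudiation"
    INFORMATION_DISCLOSURE = "confidentiality"
    DENIAL_OF_SERVICE = "availability"
    ELEVATION_OF_PRIVILEGE = "authorization"


def acronym() -> str:
    """Build the acronym from the first letter of each category name."""
    return "".join(category.name[0] for category in Stride)


def checklist(component: str) -> List[str]:
    """Generate one 'what can go wrong' question per category."""
    return [
        f"{component}: how could {c.name.replace('_', ' ').lower()} "
        f"break {c.value}?"
        for c in Stride
    ]
```

For example, `checklist("kube-apiserver")` returns six questions, one per category, that can seed a threat modeling session for that component.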

Threat modeling can be an infinitely long task if the scope for the threat model is not well defined. Before starting to identify threats in an ecosystem, it is important that the architecture and workings of each component, and the interactions between components, are clearly understood.

In previous chapters, we have already looked in detail at the basic functionality of every Kubernetes component. Now, we will look at the interactions between different components in Kubernetes before investigating the threats within the Kubernetes ecosystem.

Component interactions

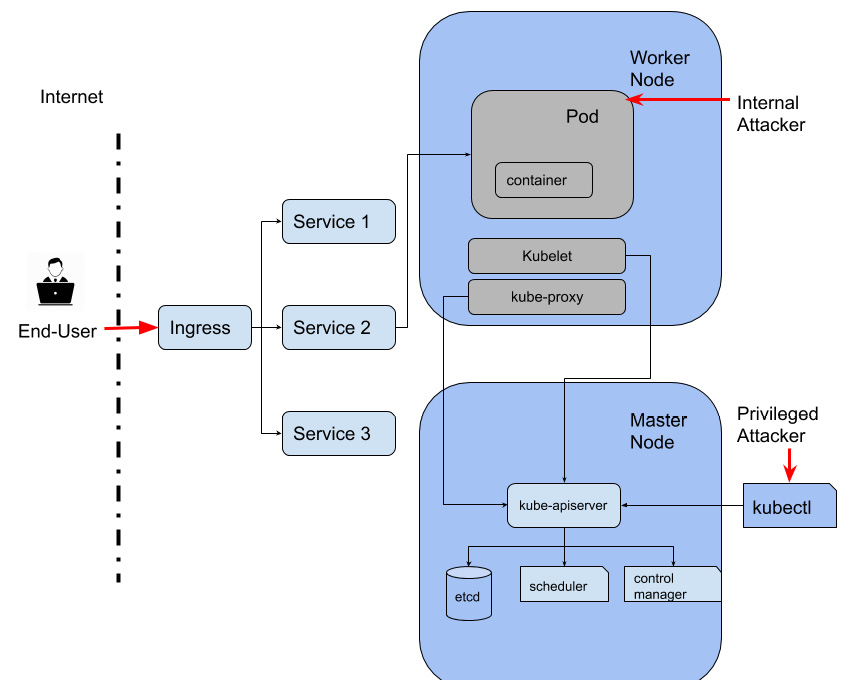

Kubernetes components work collaboratively to ensure the microservices running inside the cluster are functioning as expected. If you deploy a microservice as a DaemonSet, then the Kubernetes components will make sure there will be one pod running the microservice in every node, no more, no less. So what happens behind the scenes? Let's look at a diagram to show the components' interaction at a high level:

Figure 3.1 – Interactions between Kubernetes components

A quick recap on what these components do:

- kube-apiserver: The Kubernetes API server (kube-apiserver) is a control plane component that validates and configures data for objects.

- etcd: etcd is a high-availability key-value store used to store data such as configuration, state, and metadata.

- kube-scheduler: kube-scheduler is a default scheduler for Kubernetes. It watches for newly created pods and assigns the pods to nodes.

- kube-controller-manager: The Kubernetes controller manager is a combination of the core controllers that watch for state updates and make changes to the cluster accordingly.

- cloud-controller-manager: The cloud controller manager runs controllers to interact with the underlying cloud providers.

- kubelet: kubelet registers the node with the API server and monitors the pods created using PodSpecs to ensure that the pods and containers are healthy.

It is worth noting that only kube-apiserver communicates with etcd. Other Kubernetes components such as kube-scheduler, kube-controller-manager, and cloud-controller-manager interact with kube-apiserver running on the master nodes in order to fulfill their responsibilities. On the worker nodes, both kubelet and kube-proxy communicate with kube-apiserver.
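The communication rule in the preceding paragraph can be written down as a small directed graph, which is a handy starting point for spotting flows that should not exist. This is a sketch under stated assumptions: it models only the flows named in that paragraph, and `EXPECTED_FLOWS`, `clients_of`, and `unexpected_flows` are hypothetical names, not part of any Kubernetes API.

```python
from typing import Dict, List, Set, Tuple

# Caller -> components it is expected to call, as described in the text.
EXPECTED_FLOWS: Dict[str, Set[str]] = {
    "kube-apiserver": {"etcd"},
    "kube-scheduler": {"kube-apiserver"},
    "kube-controller-manager": {"kube-apiserver"},
    "cloud-controller-manager": {"kube-apiserver"},
    "kubelet": {"kube-apiserver"},
    "kube-proxy": {"kube-apiserver"},
}


def clients_of(target: str) -> List[str]:
    """List every component that is expected to call the target."""
    return sorted(src for src, dsts in EXPECTED_FLOWS.items() if target in dsts)


def unexpected_flows(observed: List[Tuple[str, str]]) -> List[Tuple[str, str]]:
    """Return observed (caller, callee) pairs that the model does not allow."""
    return [
        (src, dst)
        for src, dst in observed
        if dst not in EXPECTED_FLOWS.get(src, set())
    ]
```

Here `clients_of("etcd")` returns only `kube-apiserver`, and a flow such as `("kubelet", "etcd")` passed to `unexpected_flows` is reported because no component other than kube-apiserver should reach etcd.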

Let's use a DaemonSet creation as an example to show how these components talk to each other:

Figure 3.2 – Creating a DaemonSet in Kubernetes

To create a DaemonSet, the following steps take place:

1. The user sends a request to kube-apiserver to create a DaemonSet workload via HTTPS.

2. After authentication, authorization, and object validation, kube-apiserver creates the workload object information for the DaemonSet in the etcd database. Neither data in transit nor at rest is encrypted by default in etcd.

3. The DaemonSet controller detects that a new DaemonSet object has been created, and then sends a pod creation request to kube-apiserver. Note that a DaemonSet basically means the microservice will run inside a pod on every node.

4. kube-apiserver repeats the actions in step 2 and creates the workload object information for pods in the etcd database.

5. kube-scheduler detects that a new pod has been created, then decides which node to run the pod on based on the node selection criteria. After that, kube-scheduler sends a request to kube-apiserver to record which node the pod will run on.

6. kube-apiserver receives the request from kube-scheduler and then updates etcd with the pod's node assignment information.

7. The kubelet running on the worker node detects the new pod that is assigned to this node, then sends a request to the Container Runtime Interface (CRI) components, such as Docker, to start a container. After that, the kubelet sends the pod's status back to kube-apiserver.

8. kube-apiserver receives the pod's status information from the kubelet on the target node, then updates the etcd database with the pod status.

9. Once the pods (from the DaemonSet) are created, the pods are able to communicate with other Kubernetes components and the microservice should be up and running.
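The sequence above can be traced with a toy simulation. This is a deliberately simplified sketch, not how Kubernetes is implemented: watches are collapsed into direct function calls, authentication and validation are skipped, the container runtime is not modeled, and every class, function, and key name here is hypothetical. What it preserves is the point that every state change passes through kube-apiserver before it reaches etcd.

```python
from typing import Dict, List


class ApiServer:
    """Stand-in for kube-apiserver; the dict plays the role of etcd."""

    def __init__(self) -> None:
        self.etcd: Dict[str, dict] = {}
        self.log: List[str] = []

    def write(self, caller: str, key: str, value: dict) -> None:
        # Authentication, authorization, and validation are omitted here.
        self.etcd[key] = value
        self.log.append(f"{caller} -> kube-apiserver -> etcd: {key}")

    def list(self, prefix: str) -> Dict[str, dict]:
        # Return a copy so callers can write while iterating over the result.
        return {k: v for k, v in self.etcd.items() if k.startswith(prefix)}


def daemonset_controller(api: ApiServer, nodes: List[str]) -> None:
    """Request one pod per node for every DaemonSet object."""
    for key in api.list("daemonsets/"):
        name = key.split("/", 1)[1]
        for node in nodes:
            pod_key = f"pods/{name}-{node}"
            if pod_key not in api.etcd:
                pod = {"target": node, "node": None, "status": "Pending"}
                api.write("daemonset-controller", pod_key, pod)


def scheduler(api: ApiServer) -> None:
    """Assign each unscheduled pod to its target node."""
    for key, pod in api.list("pods/").items():
        if pod["node"] is None:
            api.write("kube-scheduler", key, {**pod, "node": pod["target"]})


def kubelet(api: ApiServer, node: str) -> None:
    """Start pending pods assigned to this node and report their status."""
    for key, pod in api.list("pods/").items():
        if pod["node"] == node and pod["status"] == "Pending":
            # A real kubelet would ask the container runtime to start containers.
            api.write(f"kubelet@{node}", key, {**pod, "status": "Running"})


def create_daemonset(name: str, nodes: List[str]) -> ApiServer:
    api = ApiServer()
    api.write("user", f"daemonsets/{name}", {"name": name})
    daemonset_controller(api, nodes)
    scheduler(api)
    for node in nodes:
        kubelet(api, node)
    return api
```

Running `create_daemonset("logger", ["node-a", "node-b"])` and printing `api.log` shows each actor's request in order, which makes it easy to see where an attacker who can impersonate one of these callers could tamper with the flow.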

Note that not all communication between components is secure by default. It depends on the configuration of those components. We will cover this in more detail in Chapter 6, Securing Cluster Components.

Threat actors in Kubernetes environments

A threat actor is an entity or code executing in the system that the asset should be protected from. From a defense standpoint, you first need to understand who your potential enemies are, or your defense strategy will be too vague. Threat actors in Kubernetes environments can be broadly classified into three categories:

- End user: An entity that can connect to the application. The entry point for this actor is usually the load balancer or ingress. Sometimes, pods, containers, or NodePorts may be directly exposed to the internet, adding more entry points for the end user.

- Internal attacker: An entity that has limited access inside the Kubernetes cluster. Malicious containers or pods spawned within the cluster are examples of internal attackers.

- Privileged attacker: An entity that has administrator access inside the Kubernetes cluster. Infrastructure administrators, compromised kube-apiserver instances, and malicious nodes are all examples of privileged attackers.

Examples of threat actors include script kiddies, hacktivists, and nation-state actors. All these actors fall into the three aforementioned categories, depending on where in the system the actor exists.

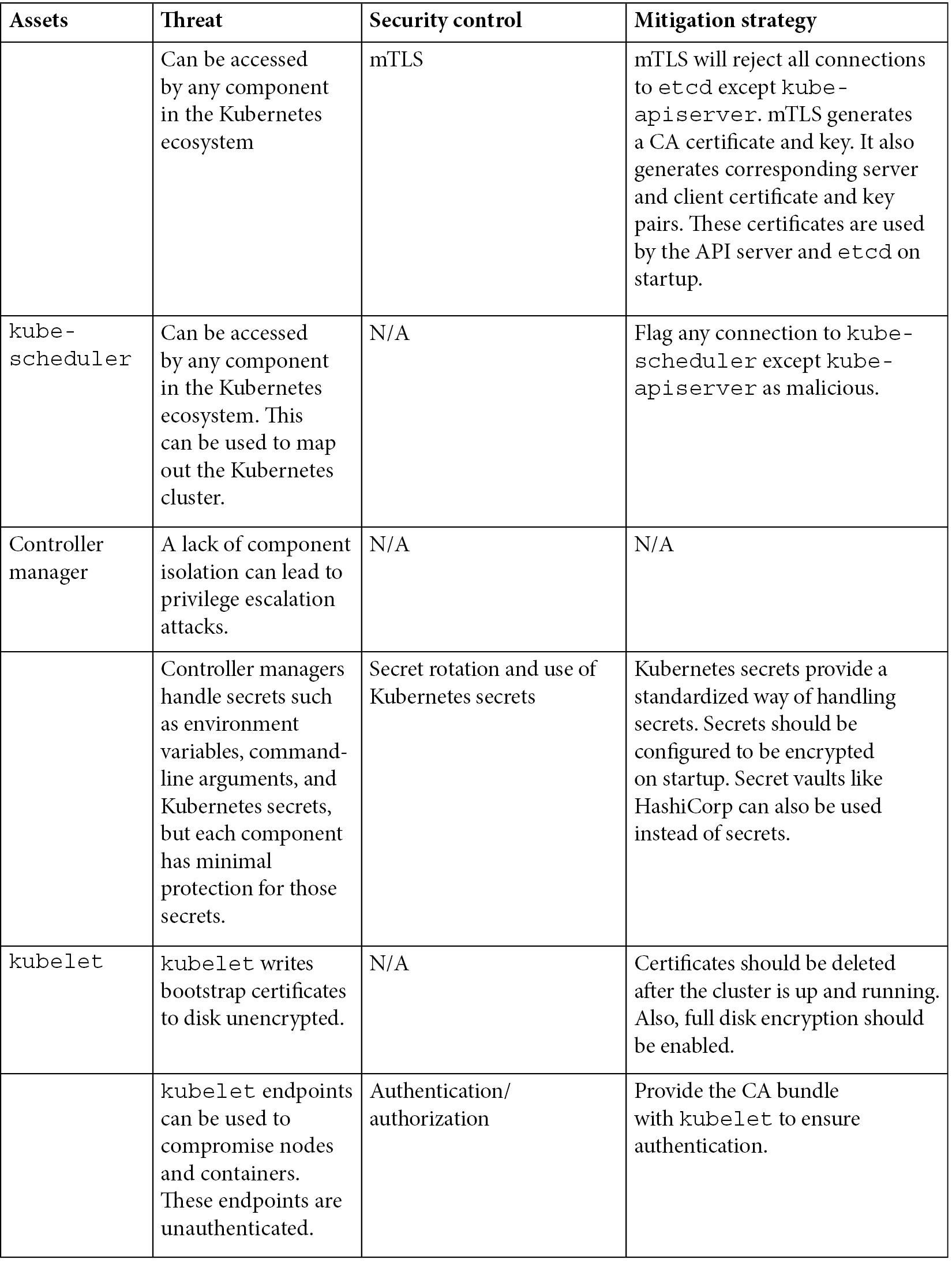

The following diagram highlights the different actors in the Kubernetes ecosystem:

Figure 3.3 – Threat actors in Kubernetes environments

As you can see in this diagram, the end user generally interacts with the HTTP/HTTPS routes exposed by the ingress controller, the load balancer, or the pods. The end user is the least privileged. The internal attacker, on the other hand, has limited access to resources within the cluster. The privileged attacker is the most privileged and has the ability to modify the cluster. These three categories of attackers help determine the severity of a threat. A threat that an end user can carry out has a higher severity than a threat that requires a privileged attacker, because the end user needs the least access to exploit it. Although these roles seem isolated in the diagram, an attacker can change from an end user to an internal attacker using an elevation of privilege attack.
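The relationship between actor category and severity can be sketched as follows. The ordering of the three categories comes from the text; the concrete labels "high", "medium", and "low" are assumptions chosen for illustration (the text only states that end-user threats rank above privileged-attacker threats), and `Actor`, `severity`, and `can_exploit` are hypothetical names.

```python
from enum import IntEnum


class Actor(IntEnum):
    """Threat actor categories, ordered from least to most privileged."""
    END_USER = 1
    INTERNAL_ATTACKER = 2
    PRIVILEGED_ATTACKER = 3


# Assumed labels: the less privilege a threat requires, the more severe it is.
SEVERITY_BY_MINIMUM_ACTOR = {
    Actor.END_USER: "high",
    Actor.INTERNAL_ATTACKER: "medium",
    Actor.PRIVILEGED_ATTACKER: "low",
}


def severity(minimum_actor: Actor) -> str:
    """Rate a threat by the least privileged actor able to carry it out."""
    return SEVERITY_BY_MINIMUM_ACTOR[minimum_actor]


def can_exploit(actor: Actor, minimum_actor: Actor) -> bool:
    """An actor can exploit a threat that needs its privilege level or less."""
    return actor >= minimum_actor
```

An elevation of privilege attack corresponds to an actor moving up this ordering, for example from `Actor.END_USER` to `Actor.INTERNAL_ATTACKER`, after which `can_exploit` returns `True` for threats that were previously out of reach.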

Threats in Kubernetes clusters

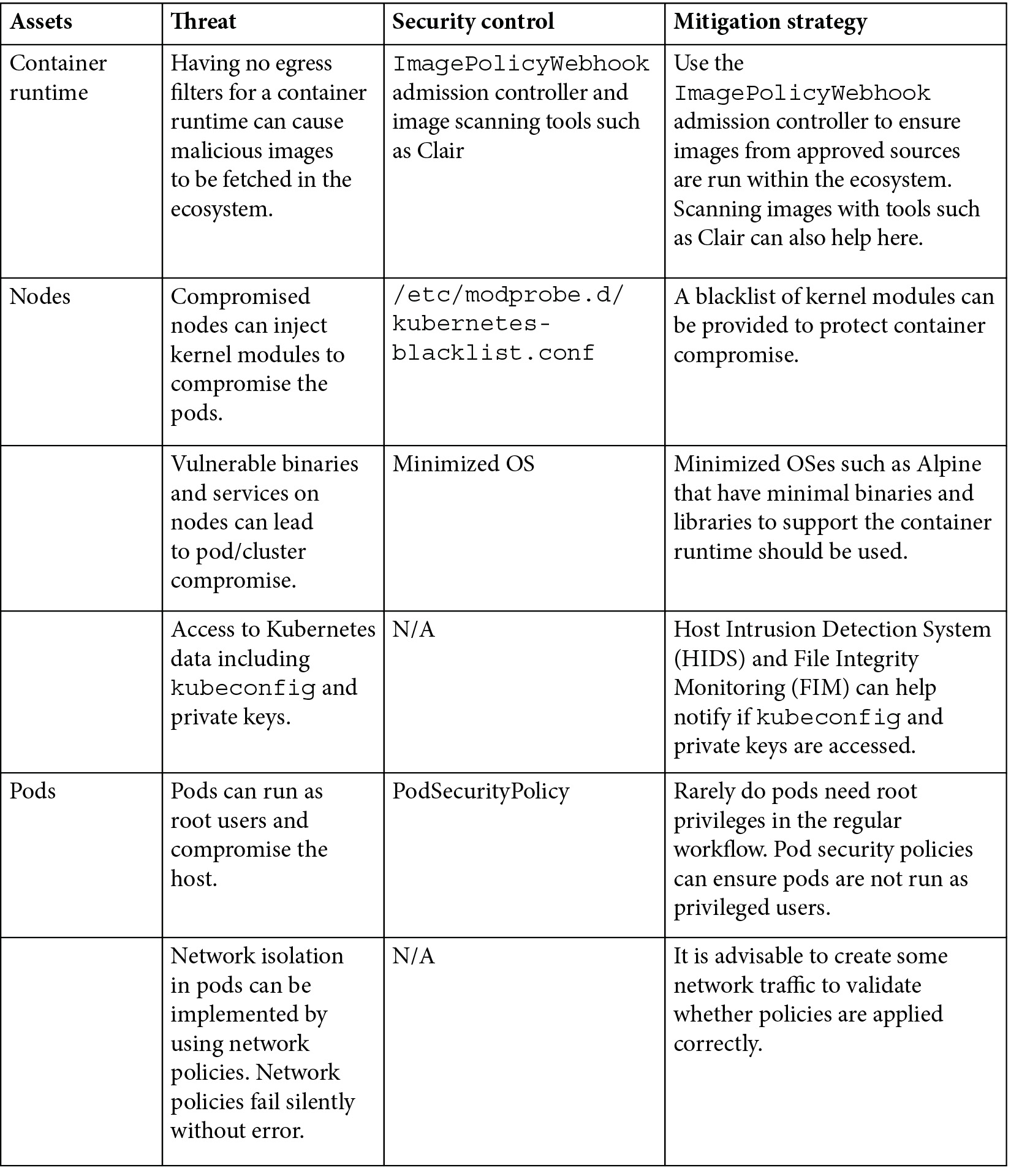

With our new understanding of Kubernetes components and threat actors, we're moving on to the journey of threat modeling a Kubernetes cluster. In the following table, we cover the major Kubernetes components, nodes, and pods. Nodes and pods are the fundamental Kubernetes objects that run workloads. Note that all these components are assets and should be protected from threats. Any of these components getting compromised could lead to the next step of an attack, such as privilege escalation. Also, note that kube-apiserver and etcd are the brain and heart of a Kubernetes cluster. If either of them were to get compromised, that would be game over.

The following table highlights the threats in the default Kubernetes configuration. This table also highlights how developers and cluster administrators can protect their assets from these threats:

This table only highlights some of the threats. There are more threats, which will be covered in later chapters. We hope the preceding table will inspire you to think out loud about what needs to be protected and how to protect it in your Kubernetes cluster.

Threat modeling applications in Kubernetes

Now that we have looked at threats in a Kubernetes cluster, let's discuss how threat modeling differs for an application deployed on Kubernetes. Deploying in Kubernetes makes the threat model more complex: it introduces additional considerations, assets, threat actors, and security controls that need to be taken into account before investigating the threats to the deployed application.

Let's look at a simple example of a three-tier web application:

Figure 3.4 – Threat model of a traditional web application

The same application looks a little different in the Kubernetes environment:

Figure 3.5 – Threat model of the three-tier web application in Kubernetes

As shown in the previous diagram, the web server, application server, and databases are all running inside pods. Let's do a high-level comparison of threat modeling between traditional web architecture and cloud-native architecture:

To summarize the preceding comparison, you will find that more assets need to be protected in a cloud-native architecture, and you will face more threat actors in this space. Kubernetes provides more security controls, but it also adds more complexity. More security controls don't necessarily mean more security. Remember: complexity is the enemy of security.

Summary

In this chapter, we started by introducing the basic concepts of threat modeling. We discussed the important assets, threats, and threat actors in Kubernetes environments. We discussed different security controls and mitigation strategies to improve the security posture of your Kubernetes cluster.

Then we walked through application threat modeling, taking into consideration applications deployed in Kubernetes, and compared it to the traditional threat modeling of monolithic applications. The complexity introduced by the Kubernetes design makes threat modeling more complicated, as we've shown: there are more assets to protect and more threat actors. And more security controls don't necessarily mean more security.

You should keep in mind that although threat modeling can be a long and complex process, it is worth doing to understand the security posture of your environment. To better secure your Kubernetes cluster, you need to do application threat modeling and infrastructure threat modeling together.

In the next chapter, to help you take the security of your Kubernetes cluster to the next level, we will talk about the principle of least privilege and how to implement it in the Kubernetes cluster.

Questions

- When do you start threat modeling your application?

- What are the different threat actors in Kubernetes environments?

- Name one of the most severe threats to the default Kubernetes deployment.

- Why is threat modeling more difficult in a Kubernetes environment?

- How does the attack surface of deployments in Kubernetes compare to deployments in traditional architectures?

Further reading

Trail of Bits and Atredis Partners have done a good job on Kubernetes components' threat modeling. Their whitepaper highlights in detail the threats in each Kubernetes component. You can find the whitepaper at https://github.com/kubernetes/community/blob/master/wg-security-audit/findings/Kubernetes%20Threat%20Model.pdf.

Note that the intent, scope, and approach of threat modeling were different for the preceding whitepaper, so its results will look a little different.