Chapter 2. Design and implement a storage and data strategy

In this section, we’ll look at most of the various methods of handling data and state in Microsoft Azure. All of the different data options can be somewhat overwhelming. For the last several decades, application state was primarily stored in a relational database system, like Microsoft SQL Server. Microsoft Azure has non-relational storage products, like Azure Storage Tables, Azure CosmosDB, and Azure Redis Cache. You might ask yourself which data product do you choose? What are the differences between each one? How do I get started if I have little or no experience with one? This chapter will explain the differences between relational data stores, file storage, and JSON document storage. It will also help you get started with the various Azure data products.

Skills in this chapter:

![]() Skill 2.1: Implement Azure Storage blobs and Azure files

Skill 2.1: Implement Azure Storage blobs and Azure files

![]() Skill 2.2: Implement Azure Storage tables and queues

Skill 2.2: Implement Azure Storage tables and queues

![]() Skill 2.3. Manage access and monitor storage

Skill 2.3. Manage access and monitor storage

![]() Skill 2.4: Implement Azure SQL Databases

Skill 2.4: Implement Azure SQL Databases

![]() Skill 2.5: Implement Azure Cosmos DB

Skill 2.5: Implement Azure Cosmos DB

![]() Skill 2.6: Implement Redis caching

Skill 2.6: Implement Redis caching

![]() Skill 2.7: Implement Azure Search

Skill 2.7: Implement Azure Search

Skill 2.1: Implement Azure Storage blobs and Azure files

File storage is incredibly useful in a wide variety of solutions for your organization. Whether storing sensor data from refrigeration trucks that check in every few minutes, storing resumes as PDFs for your company website, or storing SQL Server backup files to comply with a retention policy. Microsoft Azure provides several methods of storing files, including Azure Storage blobs and Azure Files. We will look at the differences between these products and teach you how to begin using each one.

Azure Storage blobs

Azure Storage blobs are the perfect product to use when you have files that you’re storing using a custom application. Other developers might also write applications that store files in Azure Storage blobs, which is the storage location for many Microsoft Azure products, like Azure HDInsight, Azure VMs, and Azure Data Lake Analytics. Azure Storage blobs should not be used as a file location for users directly, like a corporate shared drive. Azure Storage blobs provide client libraries and a REST interface that allows unstructured data to be stored and accessed at a massive scale in block blobs.

Create a blob storage account

Sign in to the Azure portal.

Click the green plus symbol on the left side.

On the Hub menu, select New > Storage > Storage account–blob, file, table, queue.

Click Create.

Enter a name for your storage account.

For most of the options, you can choose the defaults.

Specify the Resource Manager deployment model. You should choose an Azure Resource Manager deployment. This is the newest deployment API. Classic deployment will eventually be retired.

Your application is typically made up of many components, for instance a website and a database. These components are not separate entities, but one application. You want to deploy and monitor them as a group, called a resource group. Azure Resource Manager enables you to work with the resources in your solution as a group.

Select the General Purpose type of storage account.

There are two types of storage accounts: General purpose or Blob storage. General purpose storage type allows you to store tables, queues, and blobs all-in-one storage. Blob storage is just for blobs. The difference is that Blob storage has hot and cold tiers for performance and pricing and a few other features just for Blob storage. We’ll choose General Purpose so we can use table storage later.

Under performance, specify the standard storage method. Standard storage uses magnetic disks that are lower performing than Premium storage. Premium storage uses solid-state drives.

Storage service encryption will encrypt your data at rest. This might slow data access, but will satisfy security audit requirements.

Secure transfer required will force the client application to use SSL in their data transfers.

You can choose several types of replication options. Select the replication option for the storage account.

The data in your Microsoft Azure storage account is always replicated to ensure durability and high availability. Replication copies your data, either within the same data center, or to a second data center, depending on which replication option you choose. For replication, choose carefully, as this will affect pricing. The most affordable option is Locally Redundant Storage (LRS).

Select the subscription in which you want to create the new storage account.

Specify a new resource group or select an existing resource group. Resource groups allow you to keep components of an application in the same area for performance and management. It is highly recommended that you use a resource group. All service placed in a resource group will be logically organized together in the portal. In addition, all of the services in that resource group can be deleted as a unit.

Select the geographic location for your storage account. Try to choose one that is geographically close to you to reduce latency and improve performance.

Click Create to create the storage account.

Once created, you will have two components that allow you to interact with your Azure Storage account via an SDK. SDKs exist for several languages, including C#, JavaScript, and Python. In this module, we’ll focus on using the SDK in C#. Those two components are the URI and the access key. The URI will look like this: http://{your storage account name from step 4}.blob.core.windows.net.

Your access key will look like this: KEsm421/uwSiel3dipSGGL124K0124SxoHAXq3jk124vuCjw35124fHRIk142WIbxbTmQrzIQdM4K5Zyf9ZvUg==

Read and change data

First, let’s use the Azure SDK for .NET to load data into your storage account.

Create a console application.

Use Nuget Package Manager to install WindowsAzure.Storage.

In the Using section, add a using to Microsoft.WindowsAzure.Storage and Microsoft.WindowsAzure.Storage.Blob.

Create a storage account in your application like this:

CloudStorageAccount storageAccount;

storageAccount =

CloudStorageAccount.Parse("DefaultEndpointsProtocol=https;AccountName={your

storage account name};AccountKey={your storage key}");Azure Storage blobs are organized with containers. Each storage account can have an unlimited amount of containers. Think of containers like folders, but they are very flat with no sub-containers. In order to load blobs into an Azure Storage account, you must first choose the container.

Create a container using the following code:

CloudBlobClient blobClient = storageAccount.CreateCloudBlobClient();

CloudBlobContainer container = blobClient.GetContainerReference("democontainerblo

ckblob");

try

{

await container.CreateIfNotExistsAsync();

}

catch (StorageException ex)

{

Console.WriteLine(ex.Message);

Console.ReadLine();

throw;

}In the following code, you need to set the path of the file you want to upload using the ImageToUpload variable.

const string ImageToUpload = @"C: empHelloWorld.png";

CloudBlockBlob blockBlob = container.GetBlockBlobReference("HelloWorld.png");

// Create or overwrite the "myblob" blob with contents from a local file.

using (var fileStream = System.IO.File.OpenRead(ImageToUpload))

{

blockBlob.UploadFromStream(fileStream);

}Every blob has an individual URI. By default, you can gain access to that blob as long as you have the storage account name and the access key. We can change the default by changing the Access Policy of the Azure Storage blob container. By default, containers are set to private. They can be changed to either blob or container. When set to Public Container, no credentials are required to access the container and its blobs. When set to Public Blob, only blobs can be accessed without credentials if the full URL is known. We can read that blob using the following code:

foreach (IListBlobItem blob in container.ListBlobs())

{

Console.WriteLine("- {0} (type: {1})", blob.Uri, blob.GetType());

}

Note how we use the container to list the blobs to get the URI. We also have all of the information necessary to download the blob in the future.

Set metadata on a container

Metadata is useful in Azure Storage blobs. It can be used to set content types for web artifacts or it can be used to determine when files have been updated. There are two different types of metadata in Azure Storage Blobs: System Properties and User-defined Metadata. System properties give you information about access, file types, and more. Some of them are read-only. User-defined metadata is a key-value pair that you specify for your application. Maybe you need to make a note of the source, or the time the file was processed. Data like that is perfect for user-defined metadata.

Blobs and containers have metadata attached to them. There are two forms of metadata:

![]() System properties metadata

System properties metadata

![]() User-defined metadata

User-defined metadata

System properties can influence how the blob behaves, while user-defined metadata is your own set of name/value pairs that your applications can use. A container has only read-only system properties, while blobs have both read-only and read-write properties.

Setting user-defined metadata

To set user-defined metadata for a container, get the container reference using GetContainerReference(), and then use the Metadata member to set values. After setting all the desired values, call SetMetadata() to persist the values, as in the following example:

CloudBlobClient blobClient = storageAccount.CreateCloudBlobClient();

CloudBlobContainer container =

blobClient.GetContainerReference("democontainerblockblob");

container.Metadata.Add("counter", "100");container.SetMetadata();

Reading user-defined metadata

To read user-defined metadata for a container, get the container reference using GetContainerReference(), and then use the Metadata member to retrieve a dictionary of values and access them by key, as in the following example:

container.FetchAttributes();

foreach (var metadataItem in container.Metadata)

{

Console.WriteLine(" Key: {0}", metadataItem.Key);

Console.WriteLine(" Value: {0}", metadataItem.Value);

}

Reading system properties

To read a container’s system properties, first get a reference to the container using GetContainerReference(), and then use the Properties member to retrieve values. The following code illustrates accessing container system properties:

container = blobClient.GetContainerReference("democontainerblockblob");

container.FetchAttributes();

Console.WriteLine("LastModifiedUTC: " + container.Properties.LastModified);

Console.WriteLine("ETag: " + container.Properties.ETag);

There are three types of blobs used in Azure Storage Blobs: Block, Append, and Page. Block blobs are used to upload large files. They are comprised of blocks, each with its own block ID. Because the blob is divided up in blocks, it allows for easy updating or resending when transferring large files. You can insert, replace, or delete an existing block in any order. Once a block is updated, added, or removed, the list of blocks needs to be committed for the file to actually record the update.

Page blobs are comprised of 512-byte pages that are optimized for random read and write operations. Writes happen in place and are immediately committed. Page blobs are good for VHDs in Azure VMs and other files that have frequent, random access.

Append blobs are optimized for append operations. Append blobs are good for logging and streaming data. When you modify an append blob, blocks are added to the end of the blob.

In most cases, block blobs will be the type you will use. Block blobs are perfect for text files, images, and videos.

A previous section demonstrated how to interact with a block blob. Here’s how to write a page blob:

string pageBlobName = "random";

CloudPageBlob pageBlob = container.GetPageBlobReference(pageBlobName);

await pageBlob.CreateAsync(512 * 2 /*size*/); // size needs to be multiple of 512 bytes

byte[] samplePagedata = new byte[512];

Random random = new Random();

random.NextBytes(samplePagedata);

await pageBlob.UploadFromByteArrayAsync(samplePagedata, 0, samplePagedata.Length);

To read a page blob, use the following code:

int bytesRead = await pageBlob.DownloadRangeToByteArrayAsync(samplePagedata,

0, 0, samplePagedata.Count());

You can stream blobs by downloading to a stream using the DownloadToStream() API method. The advantage of this is that it avoids loading the entire blob into memory, for example before saving it to a file or returning it to a web request.

Secure access to blob storage implies a secure connection for data transfer and controlled access through authentication and authorization.

Azure Storage supports both HTTP and secure HTTPS requests. For data transfer security, you should always use HTTPS connections. To authorize access to content, you can authenticate in three different ways to your storage account and content:

![]() Shared Key Constructed from a set of fields related to the request. Computed with a SHA-256 algorithm and encoded in Base64.

Shared Key Constructed from a set of fields related to the request. Computed with a SHA-256 algorithm and encoded in Base64.

![]() Shared Key Lite Similar to Shared Key, but compatible with previous versions of Azure Storage. This provides backwards compatibility with code that was written against versions prior to 19 September 2009. This allows for migration to newer versions with minimal changes.

Shared Key Lite Similar to Shared Key, but compatible with previous versions of Azure Storage. This provides backwards compatibility with code that was written against versions prior to 19 September 2009. This allows for migration to newer versions with minimal changes.

![]() Shared Access Signature Grants restricted access rights to containers and blobs. You can provide a shared access signature to users you don’t trust with your storage account key. You can give them a shared access signature that will grant them specific permissions to the resource for a specified amount of time. This is discussed in a later section.

Shared Access Signature Grants restricted access rights to containers and blobs. You can provide a shared access signature to users you don’t trust with your storage account key. You can give them a shared access signature that will grant them specific permissions to the resource for a specified amount of time. This is discussed in a later section.

To interact with blob storage content authenticated with the account key, you can use the Storage Client Library as illustrated in earlier sections. When you create an instance of the CloudStorageAccount using the account name and key, each call to interact with blob storage will be secured, as shown in the following code:

string accountName = "ACCOUNTNAME";

string accountKey = "ACCOUNTKEY";

CloudStorageAccount storageAccount = new CloudStorageAccount(new

StorageCredentials(accountName, accountKey), true);

It is possible to copy blobs between storage accounts. You may want to do this to create a point-in-time backup of your blobs before a dangerous update or operation. You may also want to do this if you’re migrating files from one account to another one. You cannot change blob types during an async copy operation. Block blobs will stay block blobs. Any files with the same name on the destination account will be overwritten.

Blob copy operations are truly asynchronous. When you call the API and get a success message, this means the copy operation has been successfully scheduled. The success message will be returned after checking the permissions on the source and destination accounts.

You can perform a copy in conjunction with the Shared Access Signature method of gaining permissions to the account. We’ll cover that security method in a later topic.

A Content Delivery Network (CDN) is used to cache static files to different parts of the world. For instance, let’s say you were developing an online catalog for a retail organization with a global audience. Your main website was hosted in western United States. Users of the application in Florida complain of slowness while users in Washington state compliment you for how fast it is. A CDN would be a perfect solution for serving files close to the users, without the added latency of going across country. Once files are hosted in an Azure Storage Account, a configured CDN will store and replicate those files for you without any added management. The CDN cache is perfect for style sheets, documents, images, JavaScript files, packages, and HTML pages.

After creating an Azure Storage Account like you did earlier, you must configure it for use with the Azure CDN service. Once that is done, you can call the files from the CDN inside the application.

To enable the CDN for the storage account, follow these steps:

In the Storage Account navigation pane, find Azure CDN towards the bottom. Click on it.

Create a new CDN endpoint by filling out the form that popped up.

Azure CDN is hosted by two different CDN networks. These are partner companies that actually host and replicate the data. Choosing a correct network will affect the features available to you and the price you pay. No matter which tier you use, you will only be billed through the Microsoft Azure Portal, not through the third-party. There are three pricing tiers:

Premium Verizon The most expensive tier. This tier offers advanced real-time analytics so you can know what users are hitting what content and when.

Premium Verizon The most expensive tier. This tier offers advanced real-time analytics so you can know what users are hitting what content and when. Standard Verizon The standard CDN offering on Verizon’s network.

Standard Verizon The standard CDN offering on Verizon’s network. Standard Akamai The standard CDN offering on Akamai’s network.

Standard Akamai The standard CDN offering on Akamai’s network.Specify a Profile and an endpoint name. After the CDN endpoint is created, it will appear on the list above.

Once this is done, you can configure the CDN if needed. For instance, you can use a custom domain name is it looks like your content is coming from your website.

Once the CDN endpoint is created, you can reference your files using a path similar to the following:

Error! Hyperlink reference not valid.>

If a file needs to be replaced or removed, you can delete it from the Azure Storage blob container. Remember that the file is being cached in the CDN. It will be removed or updated when the Time-to-Live (TTL) expires. If no cache expiry period is specified, it will be cached in the CDN for seven days. You set the TTL is the web application by using the clientCache element in the web.config file. Remember when you place that in the web.config file it affects all folders and subfolders for that application.

Design blob hierarchies

Azure Storage blobs are stored in containers, which are very flat. This means that you cannot have child containers contained inside a parent container. This can lead to organizational confusion for users who rely on folders and subfolders to organize files.

A hierarchy can be replicated by naming the files something that’s similar to a folder structure. For instance, you can have a storage account named “sally.” Your container could be named “pictures.” Your file could be named “product1mainFrontPanel.jpg.” The URI to your file would look like this: http://sally.blob.core.windows.net/pictures/product1/mainFrontPanel.jpg

In this manner, a folder/subfolder relationship can be maintained. This might prove useful in migrating legacy applications over to Azure.

Configure custom domains

The default endpoint for Azure Storage blobs is: (Storage Account Name).blob.core.windows.net. Using the default can negatively affect SEO. You might also not want to make it obvious that you are hosting your files in Azure. To obfuscate this, you can configure Azure Storage to respond to a custom domain. To do this, follow these steps:

Navigate to your storage account in the Azure portal.

On the navigation pane, find BLOB SERVICE. Click Custom Domain.

Check the Use Indirect CNAME Validation check box. We use this method because it does not incur any downtime for your application or website.

Log on to your DNS provider. Add a CName record with the subdomain alias that includes the Asverify subdomain. For example, if you are holding pictures in your blob storage account and you want to note that in the URL, then the CName would be Asverify.pictures (your custom domain including the .com or .edu, etc.) Then provide the hostname for the CNAME alias, which would also include Asverify. If we follow the earlier example of pictures, the hostname URL would be sverify.pictures.blob.core.windows.net. The hostname to use appears in #2 of the Custom domain blade in the Azure portal from the previous step.

In the text box on the Custom domain blade, enter the name of your custom domain, but without the Asverify. In our example, it would be pictures.(your custom domain including the .com or .edu, etc.) .

Select Save.

Now return to your DNS provider’s website and create another CNAME record that maps your subdomain to your blob service endpoint. In our example, we can make pictures.(your custom domain) point to pictures.blob.core.windows.net.

Now you can delete the azverify CName now that it has been verified by Azure.

Why did we go through the azverify steps? We were allowing Azure to recognize that you own that custom domain before doing the redirection. This allows the CNAME to work with no downtime.

In the previous example, we referenced a file like this: http://sally.blob.core.windows.net/pictures/product1/mainFrontPanel.jpg.

With the custom domain, it would now look like this: http://pictures.(your custom domain)/pictures/product1/mainFrontPanel.jpg.

Scale blob storage

We can scale blob storage both in terms of storage capacity and performance. Each Azure subscription can have 200 storage accounts, with 500TB of capacity each. That means that each Azure subscription can have 100 petabytes of data in it without creating another subscription.

An individual block blob can have 50,000 100MB blocks with a total size of 4.75TB. An append blob has a max size of 195GB. A page blob has a max size of 8TB.

In order to scale performance, we have several features available to us. We can implement an Azure CDN to enable geo-caching to keep blobs close to the users. We can implement read access geo-redundant storage and offload some of the reads to another geographic location (thus creating a mini-CDN that will be slower, but cheaper).

Azure Storage blobs (and tables, queues, and files, too) have an amazing feature. By far, the most expensive services for most cloud vendors is compute time. You pay for how many and how fast the processors are in the service you are using. Azure Storage doesn’t charge for compute. It only charges for disk space used and network bandwidth (which is a fairly nominal charge). Azure Storage blobs are partitioned by storage account name + container name + blob name. This means that each blob is retrieved by one and only one server. Many small files will perform better in Azure Storage than one large file. Blobs use containers for logical grouping, but each blob can be retrieved by different compute resources, even if they are in the same container.

Azure files

Azure file storage provides a way for applications to share storage accessible via SMB 2.1 protocol. It is particularly useful for VMs and cloud services as a mounted share, and applications can use the File Storage API to access file storage.

Implement blob leasing

You can create a lock on a blob for write and delete operations. The lock can be between 15 and 60 seconds or it can be infinite. To write to a blob with an active lease, the client must include the active lease ID with the request.

When a client requests a lease, a lease ID is returned. The client may then use this lease ID to renew, change, or release the lease. When the lease is active, the lease ID must be included to write to the blob, set any meta data, add to the blob (through append), copy the blob, or delete the blob. You may still read a blob that has an active lease ID to another client and without using the lease ID.

The code to acquire a lease looks like the following example (assuming the blockBlob variable was instantiated earlier):

TimeSpan? leaseTime = TimeSpan.FromSeconds(60);

string leaseID = blockBlob.AcquireLease(leaseTime, null);

Create connections to files from on-premises or cloudbased Windows or, Linux machines

Azure Files can be used to replace on-premise file servers or NAS devices. You can connect to Azure Files using Windows, Linux, or MacOS.

You can mount an Azure File share using Windows File Explorer, PowerShell, or the Command Prompt. To use File Explorer, follow these steps:

Open File Explorer

Under the computer menu, click Map Network Drive (see Figure 2-1).

Copy the UNC path from the Connect pane in the Azure portal, as shown in Figure 2-2.

Select the drive letter and enter the UNC path.

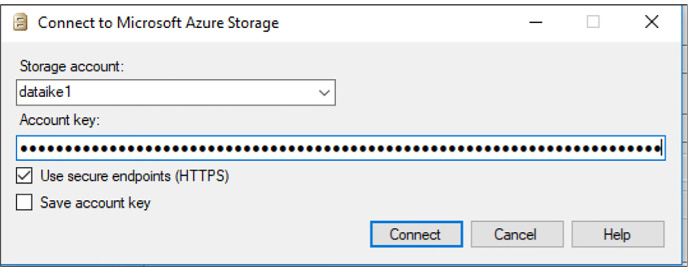

Use the storage account name prepended with Azure as the username and the Storage Account Key as the password (see Figure 2-3).

The PowerShell code to map a drive to Azure Files looks like this:

$acctKey = ConvertTo-SecureString -String "<storage-account-key>" -AsPlainText

-Force

$credential = New-Object System.Management.Automation.PSCredential -ArgumentList

"Azure<storage-account-name>", $acctKey

New-PSDrive -Name <desired-drive-letter> -PSProvider FileSystem -Root

"\<storage-account-name>.file.core.windows.net<share-name>" -Credential $credential

To map a drive using a command prompt, use a command that looks like this:

net use <desired-drive-letter>: \<storage-account-name>.file.core.windows.net

<share-name> <storage-account-key> /user:Azure<storage-account-name>

To use Azure Files on a Linux machine, first install the cifs-utils package. Then create a folder for a mount point using mkdir. Afterwards, use the mount command with code similar to the following:

sudo mount -t cifs //<storage-account-name>.file.core.windows.net/<share-name>

./mymountpoint -o vers=2.1,username=<storage-account-name>,password=<storage-

account-key>,dir_mode=0777,file_mode=0777,serverino

Shard large datasets

Each blob is held in a container in Azure Storage. You can use containers to group related blobs that have the same security requirements. The partition key of a blob is account name + container name + blob name. Each blob can have its own partition if load on the blob demands it. A single blob can only be served by a single server. If sharding is needed, you need to create multiple blobs.

Skill 2.2: Implement Azure Storage tables, queues, and Azure Cosmos DB Table API

Azure Tables are used to store simple tabular data at petabyte scale on Microsoft Azure. Azure Queue storage is used to provide messaging between application components so they can be de-coupled and scale under heavy load.

Azure Table Storage

Azure Tables are simple tables filled with rows and columns. They are a key-value database solution, which references how the data is stored and retrieved, not how complex the table can be. Tables store data as a collection of entities. Each entity has a property. Azure Tables can have 255 properties (or columns to hijack the relational vocabulary). The total entity size (or row size) cannot exceed 1MB. That might seem small initially, but 1MB can store a lot of tabular data per entity. Azure Tables are similar to Azure Storage blobs, in that you are not charged for compute time for inserting, updating, or retrieving your data. You are only charged for the total storage of your data.

Azure Tables are stored in the same storage account as Azure Storage blobs discussed earlier. Where blobs organize data based on container, Azure Tables organize data based on table name. Entities that are functionally the same should be stored in the same table. For example, all customers should be stored in the Customers table, while their orders should be stored in the Orders table.

Azure Tables store entities based on a partition key and a row key. Partition keys are the partition boundary. All entities stored with the same PartitionKey property are grouped into the same partition and are served by the same partition server. Choosing the correct partition key is a key responsibility of the Azure developer. Having a few partitions will improve scalability, as it will increase the number of partition servers handling your requests. Having too many partitions, however, will affect how you do batch operations like batch updates or large data retrieval. We will discuss this further at the end of this section.

Later in this chapter, we will discuss Azure SQL Database. Azure SQL Database also allows you to store tabular data. Why would you use Azure Tables vs Azure SQL Database? Why have two products that have similar functions? Well, actually they are very different.

Azure Tables service does not enforce any schema for tables. It simply stores the properties of your entity based on the partition key and the row key. If the data in the entity matches the data in your object model, your object is populated with the right values when the data is retrieved. Developers need to enforce the schema on the client side. All business logic for your application should be inside the application and not expected to be enforced in Azure Tables. Azure SQL Database also has an incredible amount of features that Azure Tables do not have including: stored procedures, triggers, indexes, constraints, functions, default values, row and column level security, SQL injection detection, and much, much more.

If Azure Tables are missing all of these features, why is the service so popular among developers? As we said earlier, you are not charged for compute resources when using Azure Tables, and you are charged in Azure SQL DB. This makes Azure Tables extremely affordable for large datasets. If we effectively use table partitioning, Azure Tables will also scale very well without sacrificing performance.

Now that you have a good overview of Azure Tables, let’s dive right in and look at using it. If you’ve been following along through Azure Storage blobs, some of this code will be familiar to you.

Using basic CRUD operations

In this section, you learn how to access table storage programmatically.

Creating a table

Create a C# console application.

In your app.config file, add an entry under the Configuration element, replacing the account name and key with your own storage account details:

<configuration>

<appSettings>

<add key="StorageConnectionString" value="DefaultEndpointsProtocol=

https;AccountName=<your account name>;AccountKey=<your account key>" />

</appSettings>

</configuration>

Use NuGet to obtain the Microsoft.WindowsAzure.Storage.dll. An easy way to do this is by using the following command in the NuGet console:

Install-package windowsazure.storage

Add the following using statements to the top of your Program.cs file:

using Microsoft.WindowsAzure.Storage;

using Microsoft.WindowsAzure.Storage.Auth;

using Microsoft.WindowsAzure.Storage.Table;

using Microsoft.WindowsAzure;

using System.Configuration;Add a reference to System.Configuration.

Type the following command to retrieve your connection string in the Main function of Program.cs:

var storageAccount =CloudStorageAccount.Parse

( ConfigurationManager.AppSettings["StorageConnectionString"]);Use the following command to create a table if one doesn’t already exist:

CloudTableClient tableClient = storageAccount.CreateCloudTableClient();

CloudTable table = tableClient.GetTableReference("orders");

table.CreateIfNotExists();

Inserting records

To add entries to a table, you create objects based on the TableEntity base class and serialize them into the table using the Storage Client Library. The following properties are provided for you in this base class:

![]() Partition Key Used to partition data across storage infrastructure

Partition Key Used to partition data across storage infrastructure

![]() Row Key Unique identifier in a partition

Row Key Unique identifier in a partition

![]() Timestamp Time of last update maintained by Azure Storage

Timestamp Time of last update maintained by Azure Storage

![]() ETag Used internally to provide optimistic concurrency

ETag Used internally to provide optimistic concurrency

The combination of partition key and row key must be unique within the table. This combination is used for load balancing and scaling, as well as for querying and sorting entities.

Follow these steps to add code that inserts records:

Add a class to your project, and then add the following code to it:

using System;

using Microsoft.WindowsAzure.Storage.Table;

public class OrderEntity : TableEntity

{

public OrderEntity(string customerName, string orderDate)

{

this.PartitionKey = customerName;

this.RowKey = orderDate;

}

public OrderEntity() { }

public string OrderNumber { get; set; }

public DateTime RequiredDate { get; set; }

public DateTime ShippedDate { get; set; }

public string Status { get; set; }

}Add the following code to the console program to insert a record:

CloudTableClient tableClient = storageAccount.CreateCloudTableClient();

CloudTable table = tableClient.GetTableReference("orders");

OrderEntity newOrder = new OrderEntity("Archer", "20141216");

newOrder.OrderNumber = "101";

newOrder.ShippedDate = Convert.ToDateTime("12/18/2017");

newOrder.RequiredDate = Convert.ToDateTime("12/14/2017");

newOrder.Status = "shipped";

TableOperation insertOperation = TableOperation.Insert(newOrder);

table.Execute(insertOperation);

Inserting multiple records in a transaction

You can group inserts and other operations into a single batch transaction. All operations in the batch must take place on the same partition. You can have up to 100 entities in a batch. The total batch payload size cannot be greater than four MBs.

The following code illustrates how to insert several records as part of a single transaction. This is done after creating a storage account object and table.:

TableBatchOperation batchOperation = new TableBatchOperation();

OrderEntity newOrder1 = new OrderEntity("Lana", "20141217");

newOrder1.OrderNumber = "102";

newOrder1.ShippedDate = Convert.ToDateTime("1/1/1900");

newOrder1.RequiredDate = Convert.ToDateTime("1/1/1900");

newOrder1.Status = "pending";

OrderEntity newOrder2 = new OrderEntity("Lana", "20141218");

newOrder2.OrderNumber = "103";

newOrder2.ShippedDate = Convert.ToDateTime("1/1/1900");

newOrder2.RequiredDate = Convert.ToDateTime("12/25/2014");

newOrder2.Status = "open";

OrderEntity newOrder3 = new OrderEntity("Lana", "20141219");

newOrder3.OrderNumber = "103";

newOrder3.ShippedDate = Convert.ToDateTime("12/17/2014");

newOrder3.RequiredDate = Convert.ToDateTime("12/17/2014");

newOrder3.Status = "shipped";

batchOperation.Insert(newOrder1);

batchOperation.Insert(newOrder2);

batchOperation.Insert(newOrder3);

table.ExecuteBatch(batchOperation);

Getting records in a partition

You can select all of the entities in a partition or a range of entities by partition and row key. Wherever possible, you should try to query with the partition key and row key. Querying entities by other properties does not work well because it launches a scan of the entire table.

Within a table, entities are ordered within the partition key. Within a partition, entities are ordered by the row key. RowKey is a string property, so sorting is handled as a string sort. If you are using a date value for your RowKey property use the following order: year, month, day. For instance, use 20140108 for January 8, 2014.

The following code requests all records within a partition using the PartitionKey property to query:

TableQuery<OrderEntity> query = new TableQuery<OrderEntity>().Where(

TableQuery.GenerateFilterCondition("PartitionKey", QueryComparisons.Equal, "Lana"));

foreach (OrderEntity entity in table.ExecuteQuery(query))

{

Console.WriteLine("{0}, {1} {2} {3}", entity.PartitionKey, entity.RowKey,

entity.Status, entity.RequiredDate);

}

Console.ReadKey();

Updating records

One technique you can use to update a record is to use InsertOrReplace(). This creates the record if one does not already exist or updates an existing record, based on the partition key and the row key. In this example, we retrieve a record we inserted during the batch insert example, change the status and shippedDate property and then execute an InsertOrReplace operation:

TableOperation retrieveOperation = TableOperation.Retrieve<OrderEntity>("Lana",

"20141217");

TableResult retrievedResult = table.Execute(retrieveOperation);

OrderEntity updateEntity = (OrderEntity)retrievedResult.Result;

if (updateEntity != null)

{

updateEntity.Status = "shipped";

updateEntity.ShippedDate = Convert.ToDateTime("12/20/2014");

TableOperation insertOrReplaceOperation = TableOperation.

InsertOrReplace(updateEntity);

table.Execute(insertOrReplaceOperation);

}

Deleting a record

To delete a record, first retrieve the record as shown in earlier examples, and then delete it with code, such as assuming deleteEntity is declared and populated similar to how we created one earlier:

TableOperation deleteOperation = TableOperation.Delete(deleteEntity);

table.Execute(deleteOperation);

Console.WriteLine("Entity deleted.");

Querying using ODATA

The Storage API for tables supports OData, which exposes a simple query interface for interacting with table data. Table storage does not support anonymous access, so you must supply credentials using the account key or a Shared Access Signature (SAS) (discussed in “Manage Access”) before you can perform requests using OData.

To query what tables you have created, provide credentials, and issue a GET request as follows:

https://myaccount.table.core.windows.net/Tables

To query the entities in a specific table, provide credentials, and issue a GET request formatted as follows:

https://<your account name>.table.core.windows.net/<your table

name>(PartitionKey=’<partition-key>’,RowKey=’<row-key>’)?$select=

<comma separated

property names>

Designing, managing, and scaling table partitions

The Azure Table service can scale to handle massive amounts of structured data and billions of records. To handle that amount, tables are partitioned. The partition key is the unit of scale for storage tables. The table service will spread your table to multiple servers and key all rows with the same partition key co-located. Thus, the partition key is an important grouping, not only for querying but also for scalability.

There are three types of partition keys to choose from:

![]() Single value There is one partition key for the entire table. This favors a small number of entities. It also makes batch transactions easier since batch transactions need to share a partition key to run without error. It does not scale well for large tables since all rows will be on the same partition server.

Single value There is one partition key for the entire table. This favors a small number of entities. It also makes batch transactions easier since batch transactions need to share a partition key to run without error. It does not scale well for large tables since all rows will be on the same partition server.

![]() Multiple values This might place each partition on its own partition server. If the partition size is smaller, it’s easier for Azure to load balance the partitions. Partitions might get slower as the number of entities increases. This might make further partitioning necessary at some point.

Multiple values This might place each partition on its own partition server. If the partition size is smaller, it’s easier for Azure to load balance the partitions. Partitions might get slower as the number of entities increases. This might make further partitioning necessary at some point.

![]() Unique values This is many small partitions. This is highly scalable, but batch transactions are not possible.

Unique values This is many small partitions. This is highly scalable, but batch transactions are not possible.

For query performance, you should use the partition key and row key together when possible. This leads to an exact row match. The next best thing is to have an exact partition match with a row range. It is best to avoid scanning the entire table.

Azure Storage Queues

The Azure Storage Queue service provides a mechanism for reliable inter-application messaging to support asynchronous distributed application workflows. This section covers a few fundamental features of the Queue service for adding messages to a queue, processing those messages individually or in a batch, and scaling the service.

Adding messages to a queue

You can access your storage queues and add messages to a queue using many storage browsing tools; however, it is more likely you will add messages programmatically as part of your application workflow.

The following code demonstrates how to add messages to a queue. In order to use it, you will need a using statement for Microsoft.WindowsAzure.Storage.Queue. You can also create a queue in the portal called, “queue:”

CloudQueueClient queueClient = storageAccount.CreateCloudQueueClient();

//This code assumes you have a queue called "queue" already. If you don’t have one, you

should call queue.CreateIfNotExists();

CloudQueue queue = queueClient.GetQueueReference("queue");

queue.AddMessage(new CloudQueueMessage("Queued message 1"));

queue.AddMessage(new CloudQueueMessage("Queued message 2"));

queue.AddMessage(new CloudQueueMessage("Queued message 3"));

In the Azure Portal, you can browse to your storage account, browse to Queues, click the queue in the list and see the above messages.

Processing messages

Messages are typically published by a separate application in the system from the application that listens to the queue and processes messages. As shown in the previous section, you can create a CloudQueue reference and then proceed to call GetMessage() to de-queue the next available message from the queue as follows:

CloudQueueMessage message = queue.GetMessage(new TimeSpan(0, 5, 0));

if (message != null)

{

string theMessage = message.AsString;

// your processing code goes here

}

Retrieving a batch of messages

A queue listener can be implemented as single-threaded (processing one message at a time) or multi-threaded (processing messages in a batch on separate threads). You can retrieve up to 32 messages from a queue using the GetMessages() method to process multiple messages in parallel. As discussed in the previous sections, create a CloudQueue reference, and then proceed to call GetMessages(). Specify the number of items to de-queue up to 32 (this number can exceed the number of items in the queue) as follows:

IEnumerable<CloudQueueMessage> batch = queue.GetMessages(10, new TimeSpan(0, 5, 0));

foreach (CloudQueueMessage batchMessage in batch)

{

Console.WriteLine(batchMessage.AsString);

}

Scaling queues

When working with Azure Storage queues, you need to consider a few scalability issues, including the messaging throughput of the queue itself and the design topology for processing messages and scaling out as needed.

Each individual queue has a target of approximately 20,000 messages per second (assuming a message is within 1 KB). You can partition your application to use multiple queues to increase this throughput value.

As for processing messages, it is more cost effective and efficient to pull multiple messages from the queue for processing in parallel on a single compute node; however, this depends on the type of processing and resources required. Scaling out compute nodes to increase processing throughput is usually also required.

You can configure VMs or cloud services to auto-scale by queue. You can specify the average number of messages to be processed per instance, and the auto-scale algorithm will queue to run scale actions to increase or decrease available instances accordingly.

Choose between Azure Storage Tables and Azure Cosmos DB Table API

Azure Cosmos DB is a cloud-hosted, NoSQL database that allows different data models to be implemented. NoSQL databases can be key/value stores, table stores, and graph stores (along with several others). Azure Cosmos DB has different engines that accommodate these different models. Azure Cosmos DB Table API is a key value store that is very similar to Azure Storage Tables.

The main differences between these products are:

![]() Azure Cosmos DB is much faster, with latency lower than 10ms on reads and 15ms on writes at any scale.

Azure Cosmos DB is much faster, with latency lower than 10ms on reads and 15ms on writes at any scale.

![]() Azure Table Storage only supports a single region with one optional readable secondary for high availability. Azure Cosmos DB supports over 30 regions.

Azure Table Storage only supports a single region with one optional readable secondary for high availability. Azure Cosmos DB supports over 30 regions.

![]() Azure Table Storage only indexes the partition key and the row key. Azure Cosmos DB automatically indexes all properties.

Azure Table Storage only indexes the partition key and the row key. Azure Cosmos DB automatically indexes all properties.

![]() Azure Table Storage only supports strong or eventual consistency. Consistency refers to how up to date the data is that you read and weather you see the latest writes from other users. Stronger consistency means less overall throughput and concurrent performance while having more up to date data. Eventual consistency allows for high concurrent throughput but you might see older data. Azure Cosmos DB supports five different consistency models and allows those models to be specified at the session level. This means that one user or feature might have a different consistency level than a different user or feature.

Azure Table Storage only supports strong or eventual consistency. Consistency refers to how up to date the data is that you read and weather you see the latest writes from other users. Stronger consistency means less overall throughput and concurrent performance while having more up to date data. Eventual consistency allows for high concurrent throughput but you might see older data. Azure Cosmos DB supports five different consistency models and allows those models to be specified at the session level. This means that one user or feature might have a different consistency level than a different user or feature.

![]() Azure Table Storage only charges you for the storage fees, not for compute fees. This makes Azure Table Storage very affordable. Azure Cosmos DB charges for a Request Unit (RU) which really is a way for a PAAS product to charge for compute fees. If you need more RUs, you can scale them up. This makes Cosmos DB significantly more expensive than Azure Storage Tables.

Azure Table Storage only charges you for the storage fees, not for compute fees. This makes Azure Table Storage very affordable. Azure Cosmos DB charges for a Request Unit (RU) which really is a way for a PAAS product to charge for compute fees. If you need more RUs, you can scale them up. This makes Cosmos DB significantly more expensive than Azure Storage Tables.

Skill 2.3: Manage access and monitor storage

We have already learned how Azure Storage allows access through access keys, but what happens if we want to gain access to specific resources without giving keys to the entire storage account? In this topic, we’ll introduce security issues that may arise and how to solve them.

Azure Storage has a built-in analytics feature called Azure Storage Analytics used for collecting metrics and logging storage request activity. You enable Storage Analytics Metrics to collect aggregate transaction and capacity data, and you enable Storage Analytics Logging to capture successful and failed request attempts to your storage account. This section covers how to enable monitoring and logging, control logging levels, set retention policies, and analyze the logs.

Generate shared access signatures

By default, storage resources are protected at the service level. Only authenticated callers can access tables and queues. Blob containers and blobs can optionally be exposed for anonymous access, but you would typically allow anonymous access only to individual blobs. To authenticate to any storage service, a primary or secondary key is used, but this grants the caller access to all actions on the storage account.

An SAS is used to delegate access to specific storage account resources without enabling access to the entire account. An SAS token lets you control the lifetime by setting the start and expiration time of the signature, the resources you are granting access to, and the permissions being granted.

The following is a list of operations supported by SAS:

![]() Reading or writing blobs, blob properties, and blob metadata

Reading or writing blobs, blob properties, and blob metadata

![]() Leasing or creating a snapshot of a blob

Leasing or creating a snapshot of a blob

![]() Listing blobs in a container

Listing blobs in a container

![]() Deleting a blob

Deleting a blob

![]() Adding, updating, or deleting table entities

Adding, updating, or deleting table entities

![]() Querying tables

Querying tables

![]() Processing queue messages (read and delete)

Processing queue messages (read and delete)

![]() Adding and updating queue messages

Adding and updating queue messages

![]() Retrieving queue metadata

Retrieving queue metadata

This section covers creating an SAS token to access storage services using the Storage Client Library.

Creating an SAS token (Blobs)

The following code shows how to create an SAS token for a blob container. Note that it is created with a start time and an expiration time. It is then applied to a blob container:

SharedAccessBlobPolicy sasPolicy = new SharedAccessBlobPolicy();

sasPolicy.SharedAccessExpiryTime = DateTime.UtcNow.AddHours(1);

sasPolicy.SharedAccessStartTime = DateTime.UtcNow.Subtract(new TimeSpan(0, 5, 0));

sasPolicy.Permissions = SharedAccessBlobPermissions.Read | SharedAccessBlobPermissions.

Write | SharedAccessBlobPermissions.Delete | SharedAccessBlobPermissions.List;

CloudBlobContainer files = blobClient.GetContainerReference("files");

string sasContainerToken = files.GetSharedAccessSignature(sasPolicy);

The SAS token grants read, write, delete, and list permissions to the container (rwdl). It looks like this:

?sv=2014-02-14&sr=c&sig=B6bi4xKkdgOXhWg3RWIDO5peekq%2FRjvnuo5o41hj1pA%3D&st=2014

-12-24T14%3A16%3A07Z&se=2014-12-24T15%3A21%3A07Z&sp=rwdl

You can use this token as follows to gain access to the blob container without a storage account key:

StorageCredentials creds = new StorageCredentials(sasContainerToken);

CloudStorageAccount accountWithSAS = new CloudStorageAccount(accountSAS, "account-name",

endpointSuffix: null, useHttps: true);

CloudBlobClientCloudBlobContainer sasFiles = sasClient.GetContainerReference("files");

With this container reference, if you have write permissions, you can interact with the container as you normally would assuming you have the correct permissions.

Creating an SAS token (Queues)

Assuming the same account reference as created in the previous section, the following code shows how to create an SAS token for a queue:

CloudQueueClient queueClient = account.CreateCloudQueueClient();

CloudQueue queue = queueClient.GetQueueReference("queue");

SharedAccessQueuePolicy sasPolicy = new SharedAccessQueuePolicy();

sasPolicy.SharedAccessExpiryTime = DateTime.UtcNow.AddHours(1);

sasPolicy.Permissions = SharedAccessQueuePermissions.Read |

SharedAccessQueuePermissions.Add | SharedAccessQueuePermissions.Update |

SharedAccessQueuePermissions.ProcessMessages;

sasPolicy.SharedAccessStartTime = DateTime.UtcNow.Subtract(new TimeSpan(0, 5, 0));

string sasToken = queue.GetSharedAccessSignature(sasPolicy);

The SAS token grants read, add, update, and process messages permissions to the container (raup). It looks like this:

?sv=2014-02-14&sig=wE5oAUYHcGJ8chwyZZd3Byp5jK1Po8uKu2t%2FYzQsIhY%3D&st=2014-12-2 4T14%3A23%3A22Z&se=2014-12-24T15%3A28%3A22Z&sp=raup

You can use this token as follows to gain access to the queue and add messages:

StorageCredentials creds = new StorageCredentials(sasContainerToken);

CloudQueueClient sasClient = new CloudQueueClient(new

Uri("https://dataike1.queue.core.windows.net/"), creds);

CloudQueue sasQueue = sasClient.GetQueueReference("queue");

sasQueue.AddMessage(new CloudQueueMessage("new message"));

Console.ReadKey();

Creating an SAS token (Tables)

The following code shows how to create an SAS token for a table:

SharedAccessTablePolicy sasPolicy = new SharedAccessTablePolicy();

sasPolicy.SharedAccessExpiryTime = DateTime.UtcNow.AddHours(1);

sasPolicy.Permissions = SharedAccessTablePermissions.Query |

SharedAccessTablePermissions.Add | SharedAccessTablePermissions.Update |

SharedAccessTablePermissions.Delete;

sasPolicy.SharedAccessStartTime = DateTime.UtcNow.Subtract(new TimeSpan(0, 5, 0));

string sasToken = table.GetSharedAccessSignature(sasPolicy);

The SAS token grants query, add, update, and delete permissions to the container (raud). It looks like this:

?sv=2014-02-14&tn=%24logs&sig=dsnI7RBA1xYQVr%2FTlpDEZMO2H8YtSGwtyUUntVmxstA%3D&s

t=2014-12-24T14%3A48%3A09Z&se=2014-12-24T15%3A53%3A09Z&sp=raud

Renewing an SAS token

SAS tokens have a limited period of validity based on the start and expiration times requested. You should limit the duration of an SAS token to limit access to controlled periods of time. You can extend access to the same application or user by issuing new SAS tokens on request. This should be done with appropriate authentication and authorization in place.

Validating data

When you extend write access to storage resources with SAS, the contents of those resources can potentially be made corrupt or even be tampered with by a malicious party, particularly if the SAS was leaked. Be sure to validate system use of all resources exposed with SAS keys.

Create stored access policies

Stored access policies provide greater control over how you grant access to storage resources using SAS tokens. With a stored access policy, you can do the following after releasing an SAS token for resource access:

![]() Change the start and end time for a signature’s validity

Change the start and end time for a signature’s validity

![]() Control permissions for the signature

Control permissions for the signature

![]() Revoke access

Revoke access

The stored access policy can be used to control all issued SAS tokens that are based on the policy. For a step-by-step tutorial for creating and testing stored access policies for blobs, queues, and tables, see http://azure.microsoft.com/en-us/documentation/articles/storage-dotnet-shared-access-signature-part-2.

Regenerate storage account keys

When you create a storage account, two 512-bit storage access keys are generated for authentication to the storage account. This makes it possible to regenerate keys without impacting application access to storage.

The process for managing keys typically follows this pattern:

When you create your storage account, the primary and secondary keys are generated for you. You typically use the primary key when you first deploy applications that access the storage account.

When it is time to regenerate keys, you first switch all application configurations to use the secondary key.

Next, you regenerate the primary key, and switch all application configurations to use this primary key.

Next, you regenerate the secondary key.

Regenerating storage account keys

To regenerate storage account keys using the portal, complete the following steps:

Navigate to the management portal accessed via https://portal.azure.com.

Select your storage account from your dashboard or your All Resources list.

Click the Keys box.

On the Manage Keys blade, click Regenerate Primary or Regenerate Secondary on the command bar, depending on which key you want to regenerate.

In the confirmation dialog box, click Yes to confirm the key regeneration.

Configure and use Cross-Origin Resource Sharing

Cross-Origin Resource Sharing (CORS) enables web applications running in the browser to call web APIs that are hosted by a different domain. Azure Storage blobs, tables, and queues all support CORS to allow for access to the Storage API from the browser. By default, CORS is disabled, but you can explicitly enable it for a specific storage service within your storage account.

Configure storage metrics

Storage Analytics metrics provide insight into transactions and capacity for your storage accounts. You can think of them as the equivalent of Windows Performance Monitor counters. By default, storage metrics are not enabled, but you can enable them through the management portal, using Windows PowerShell, or by calling the management API directly.

When you configure storage metrics for a storage account, tables are generated to store the output of metrics collection. You determine the level of metrics collection for transactions and the retention level for each service: Blob, Table, and Queue.

Transaction metrics record request access to each service for the storage account. You specify the interval for metric collection (hourly or by minute). In addition, there are two levels of metrics collection:

![]() Service level These metrics include aggregate statistics for all requests, aggregated at the specified interval. Even if no requests are made to the service, an aggregate entry is created for the interval, indicating no requests for that period.

Service level These metrics include aggregate statistics for all requests, aggregated at the specified interval. Even if no requests are made to the service, an aggregate entry is created for the interval, indicating no requests for that period.

![]() API level These metrics record every request to each service only if a request is made within the hour interval.

API level These metrics record every request to each service only if a request is made within the hour interval.

Capacity metrics are only recorded for the Blob service for the account. Metrics include total storage in bytes, the container count, and the object count (committed and uncommitted).

Table 2-1 summarizes the tables automatically created for the storage account when Storage Analytics metrics are enabled.

TABLE 2-1 Storage metrics tables

METRICS |

TABLE NAMES |

Hourly metrics |

$MetricsHourPrimaryTransactionsBlob $MetricsHourPrimaryTransactionsTable $MetricsHourPrimaryTransactionsQueue $MetricsHourPrimaryTransactionsFile |

Minute metrics (cannot set through the management portal) |

$MetricsMinutePrimaryTransactionsBlob $MetricsMinutePrimaryTransactionsTable $MetricsMinutePrimaryTransactionsQueue $MetricsMinutePrimaryTransactionsFile |

Capacity (only for the Blob service) |

$MetricsCapacityBlob |

Retention can be configured for each service in the storage account. By default, Storage Analytics will not delete any metrics data. When the shared 20-terabyte limit is reached, new data cannot be written until space is freed. This limit is independent of the storage limit of the account. You can specify a retention period from 0 to 365 days. Metrics data is automatically deleted when the retention period is reached for the entry.

When metrics are disabled, existing metrics that have been collected are persisted up to their retention policy.

Configuring storage metrics and retention

To enable storage metrics and associated retention levels for Blob, Table, and Queue services in the portal, follow these steps:

Navigate to the management portal accessed via https://portal.azure.com.

Select your storage account from your dashboard or your All resources list.

Scroll down to the Usage section, and click the Capacity graph check box.

On the Metric blade, click Diagnostics Settings on the command bar.

Click the On button under Status. This shows the options for metrics and logging.

If this storage account uses blobs, select Blob Aggregate Metrics to enable service level metrics. Select Blob Per API Metrics for API level metrics.

If this storage account uses blobs, select Blob Aggregate Metrics to enable service level metrics. Select Blob Per API Metrics for API level metrics. If this storage account uses tables, select Table Aggregate Metrics to enable service level metrics. Select Table Per API Metrics for API level metrics.

If this storage account uses tables, select Table Aggregate Metrics to enable service level metrics. Select Table Per API Metrics for API level metrics. If this storage account uses queues, select Queue Aggregate Metrics to enable service level metrics. Select Queue Per API Metrics for API level metrics.

If this storage account uses queues, select Queue Aggregate Metrics to enable service level metrics. Select Queue Per API Metrics for API level metrics.

Provide a value for retention according to your retention policy. Through the portal, this will apply to all services. It will also apply to Storage Analytics Logging if that is enabled. Select one of the available retention settings from the slider-bar, or enter a number from 0 to 365.

Analyze storage metrics

Storage Analytics metrics are collected in tables as discussed in the previous section. You can access the tables directly to analyze metrics, but you can also review metrics in both Azure management portals. This section discusses various ways to access metrics and review or analyze them.

Monitor metrics

At the time of this writing, the portal features for monitoring metrics is limited to some predefined metrics, including total requests, total egress, average latency, and availability (see Figure 2-4). Click each box to see a Metric blade that provides additional detail.

To monitor the metrics available in the portal, complete the following steps:

Navigate to the management portal accessed via https://portal.azure.com.

Select your storage account from your dashboard or your All Resources list.

Scroll down to the Monitor section, and view the monitoring boxes summarizing statistics. You’ll see TotalRequests, TotalEgress, AverageE2ELatency, and AvailabilityToday by default.

Click each metric box to view additional details for each metric. You’ll see metrics for blobs, tables, and queues if all three metrics are being collected.

Configure Storage Analytics Logging

Storage Analytics Logging provides details about successful and failed requests to each storage service that has activity across the account’s blobs, tables, and queues. By default, storage logging is not enabled, but you can enable it through the management portal, by using Windows PowerShell, or by calling the management API directly.

When you configure Storage Analytics Logging for a storage account, a blob container named $logs is automatically created to store the output of the logs. You choose which services you want to log for the storage account. You can log any or all of the Blob, Table, or Queue servicesLogs are created only for those services that have activity, so you will not be charged if you enable logging for a service that has no requests. The logs are stored as block blobs as requests are logged and are periodically committed so that they are available as blobs.

Retention can be configured for each service in the storage account. By default, Storage Analytics will not delete any logging data. When the shared 20-terabyte limit is reached, new data cannot be written until space is freed. This limit is independent of the storage limit of the account. You can specify a retention period from 0 to 365 days. Logging data is automatically deleted when the retention period is reached for the entry.

Set retention policies and logging levels To enable storage logging and associated retention levels for Blob, Table, and Queue services in the portal, follow these steps:

Navigate to the management portal accessed via https://portal.azure.com.

Select your storage account from your dashboard or your All resources list.

Under the Metrics section, click Diagnostics.

Click the On button under Status. This shows the options for enabling monitoring features.

If this storage account uses blobs, select Blob Logs to log all activity.

If this storage account uses tables, select Table Logs to log all activity.

If this storage account uses queues, select Queue Logs to log all activity.

Provide a value for retention according to your retention policy. Through the portal, this will apply to all services. It will also apply to Storage Analytics Metrics if that is enabled. Select one of the available retention settings from the drop-down list, or enter a number from 0 to 365.

Enable client-side logging

You can enable client-side logging using Microsoft Azure storage libraries to log activity from client applications to your storage accounts. For information on the .NET Storage Client Library, see: http://msdn.microsoft.com/en-us/library/azure/dn782839.aspx. For information on the Storage SDK for Java, see: http://msdn.microsoft.com/en-us/library/azure/dn782844.aspx.

Analyze logs

Logs are stored as block blobs in delimited text format. When you access the container, you can download logs for review and analysis using any tool compatible with that format. Within the logs, you’ll find entries for authenticated and anonymous requests, as listed in Table 2-2.

TABLE 2-2 Authenticated and anonymous logs

Request type |

Logged requests |

Authenticated requests |

|

Anonymous requests |

|

Logs include status messages and operation logs. Status message columns include those shown in Table 2-3. Some status messages are also reported with storage metrics data. There are many operation logs for the Blob, Table, and Queue services.

TABLE 2-3 Information included in logged status messages

Column |

Description |

Status Message |

Indicates a value for the type of status message, indicating type of success or failure |

Description |

Describes the status, including any HTTP verbs or status codes |

Billable |

Indicates whether the request was billable |

Availability |

Indicates whether the request is included in the availability calculation for storage metrics |

Finding your logs

When storage logging is configured, log data is saved to blobs in the $logs container created for your storage account. You can’t see this container by listing containers, but you can navigate directly to the container to access, view, or download the logs.

To view analytics logs produced for a storage account, do the following:

Using a storage browsing tool, navigate to the $logs container within the storage account you have enabled Storage Analytics Logging for using this convention: https://<accountname>.blob.core.windows.net/$logs.

View the list of log files with the convention <servicetype>/YYYY/MM/DD/HHMM/<counter>.log.

Select the log file you want to review, and download it using the storage browsing tool.

View logs with Microsoft Excel

Storage logs are recorded in a delimited format so that you can use any compatible tool to view logs. To view logs data in Excel, follow these steps:

Open Excel, and on the Data menu, click From Text.

Find the log file and click Import.

During import, select Delimited format. Select Semicolon as the only delimiter, and Double-Quote (“) as the text qualifier.

Analyze logs

After you load your logs into a viewer like Excel, you can analyze and gather information such as the following:

![]() Number of requests from a specific IP range

Number of requests from a specific IP range

![]() Which tables or containers are being accessed and the frequency of those requests

Which tables or containers are being accessed and the frequency of those requests

![]() Which user issued a request, in particular, any requests of concern

Which user issued a request, in particular, any requests of concern

![]() Slow requests

Slow requests

![]() How many times a particular blob is being accessed with an SAS URL

How many times a particular blob is being accessed with an SAS URL

![]() Details to assist in investigating network errors

Details to assist in investigating network errors

Skill 2.4: Implement Azure SQL databases

In this section, you learn about Microsoft Azure SQL Database, a PaaS offering for relational data.

Choosing the appropriate database tier and performance level

Choosing a SQL Database tier used to be simply a matter of storage space. Recently, Microsoft added new tiers that also affect the performance of SQL Database. This tiered pricing is called Service Tiers. There are three service tiers to choose from, and while they still each have restrictions on storage space, they also have some differences that might affect your choice. The major difference is in a measurement called database throughput units (DTUs). A DTU is a blended measure of CPU, memory, disk reads, and disk writes. Because SQL Database is a shared resource with other Azure customers, sometimes performance is not stable or predictable. As you go up in performance tiers, you also get better predictability in performance.

![]() Basic Basic tier is meant for light workloads. There is only one performance level of the basic service tier. This level is good for small use, new projects, testing, development, or learning.

Basic Basic tier is meant for light workloads. There is only one performance level of the basic service tier. This level is good for small use, new projects, testing, development, or learning.

![]() Standard Standard tier is used for most production online transaction processing (OLTP) databases. The performance is more predictable than the basic tier. In addition, there are four performance levels under this tier, levels S0 to S3 (S4 – S12 are currently in preview).

Standard Standard tier is used for most production online transaction processing (OLTP) databases. The performance is more predictable than the basic tier. In addition, there are four performance levels under this tier, levels S0 to S3 (S4 – S12 are currently in preview).

![]() Premium Premium tier continues to scale at the same level as the standard tier. In addition, performance is typically measured in seconds. For instance, the basic tier can handle 16,600 transactions per hour. The standard/S2 level can handle 2,570 transactions per minute. The top tier of premium can handle 735 transactions per second. That translates to 2,645,000 per hour in basic tier terminology.

Premium Premium tier continues to scale at the same level as the standard tier. In addition, performance is typically measured in seconds. For instance, the basic tier can handle 16,600 transactions per hour. The standard/S2 level can handle 2,570 transactions per minute. The top tier of premium can handle 735 transactions per second. That translates to 2,645,000 per hour in basic tier terminology.

There are many similarities between the various tiers. Each tier has a 99.99 percent uptime SLA, backup and restore capabilities, access to the same tooling, and the same database engine features. Fortunately, the levels are adjustable, and you can change your tier as your scaling requirements change.

The management portal can help you select the appropriate level. You can review the metrics on the Metrics tab to see the current load of your database and decide whether to scale up or down.

Click the SQL database you want to monitor.

Click the DTU tab, as shown in Figure 2-5.

Add the following metrics:

CPU Percentage

CPU Percentage Physical Data Reads Percentage

Physical Data Reads Percentage Log Writes Percentage

Log Writes Percentage

All three of these metrics are shown relative to the DTU of your database. If you reach 80 percent of your performance metrics, it’s time to consider increasing your service tier or performance level. If you’re consistently below 10 percent of the DTU, you might consider decreasing your service tier or performance level. Be aware of momentary spikes in usage when making your choice.

In addition, you can configure an email alert for when your metrics are 80 percent of your selected DTU by completing the following steps:

Click the metric.

Click Add Rule.

The first page of the Create Alert Rule dialog box is shown in Figure 2-6. Add a name and description, and then click the right arrow.

Scroll down for the rest of the page of the Create Alert Rule dialog box, shown in Figure 2-7, select the condition and the threshold value.

Select your alert evaluation window. An email will be generated if the event happens over a specific duration. You should indicate at least 10 minutes.

Select the action. You can choose to send an email either to the service administrator(s) or to a specific email address.

Configuring and performing point in time recovery

Azure SQL Database does a full backup every week, a differential backup each day, and an incremental log backup every five minutes. The incremental log backup allows for a point in time restore, which means the database can be restored to any specific time of day. This means that if you accidentally delete a customer’s table from your database, you will be able to recover it with minimal data loss if you know the timeframe to restore from that has the most recent copy.

The length of time it takes to do a restore varies. The further away you get from the last differential backup determines the longer the restore operation takes because there are more log backups to restore. When you restore a new database, the service tier stays the same, but the performance level changes to the minimum level of that tier.

Depending on your service tier, you will have different backup retention periods. Basic retains backups for 7 days. Standard and premium retains for 35 days.

You can restore a database that was deleted as long as you are within the retention period. Follow these steps to restore a database:

Select the database you want to restore, and then click Restore.

The Restore dialog box opens, as shown in Figure 2-8.

Select a database name.

Select a restore point. You can use the slider bar or manually enter a date and time.

You can also restore a deleted database. Click on the SQL Server (not the database) that once held the database you wish to restore. Select the Deleted Databases tab, as shown in Figure 2-9.

Select the database you want to restore.

Click Restore as you did in Step 1.

Specify a database name for the new database.

Click Submit.

Enabling geo-replication

Every Azure SQL Database subscription has built-in redundancy. Three copies of your data are stored across fault domains in the datacenter to protect against server and hardware failure. This is built in to the subscription price and is not configurable.

In addition, you can configure active geo-replication. This allows your data to be replicated between Azure data centers. Active geo-replication has the following benefits:

![]() Database-level disaster recovery goes quickly when you’ve replicated transactions to databases on different SQL Database servers in the same or different regions.

Database-level disaster recovery goes quickly when you’ve replicated transactions to databases on different SQL Database servers in the same or different regions.

![]() You can fail over to a different data center in the event of a natural disaster or other intentionally malicious act.

You can fail over to a different data center in the event of a natural disaster or other intentionally malicious act.

![]() Online secondary databases are readable, and they can be used as load balancers for read-only workloads such as reporting.

Online secondary databases are readable, and they can be used as load balancers for read-only workloads such as reporting.