Chapter 4. Design and implement Azure PaaS compute and web and mobile services

The Azure platform provides a rich set of Platform-as-a-Service (PaaS) capabilities for hosting web applications and services. The platform approach provides more than just a host for running your application logic; it also includes robust mechanisms for managing all aspects of your web application lifecycle, from configuring continuous and staged deployments to managing runtime configuration, monitoring health and diagnostic data, and of course, helping with scale and resilience. Azure App Service includes a number of features to manage web applications and services, including Web Apps, Logic Apps, Mobile Apps, and API Apps. API Management provides additional features with first-class integration to APIs hosted in Azure. Azure Functions and Azure Service Fabric enable modern microservices architectures for your solutions, in addition to several third-party platforms that can be provisioned via Azure Quickstart Templates. These key features are of prime importance to the modern web application, and this chapter explores how to leverage them.

Skills in this chapter:

Skill 4.1: Design Azure App Service Web Apps

Skill 4.2: Design Azure App Service API Apps

Skill 4.3: Develop Azure App Service Logic Apps

Skill 4.4: Develop Azure App Service Mobile Apps

Skill 4.5: Implement API Management

Skill 4.6: Implement Azure Functions and WebJobs

Skill 4.7: Design and implement Azure Service Fabric Apps

Skill 4.8: Design and implement third-party Platform as a Service (PaaS)

Skill 4.9: Design and implement DevOps

Skill 4.1: Design Azure App Service Web Apps

Azure App Service Web Apps (or just Web Apps) provides a managed service for hosting your web applications and APIs, with infrastructure services such as security, load balancing, and scaling provided as part of the service. In addition, Web Apps has an integrated DevOps experience from code repositories and from Docker image repositories. You pay for compute resources according to your App Service plan and scale settings. This section covers key considerations for designing and deploying your applications as Web Apps.

Define and manage App Service plans

An App Service plan defines the supported feature set and capacity of a group of virtual machine resources that are hosting one or more web apps, logic apps, mobile apps, or API apps (this section discusses web apps specifically, and the other resources are covered in later sections in this chapter).

Each App Service plan is configured with a pricing tier (for example, Free, Shared, Basic, and Standard), and each tier describes its own set of capabilities and cost. An App Service plan is unique to the region, resource group, and subscription. In other words, two web apps can participate in the same App Service plan only when they are created in the same subscription, resource group, and region (with the same pricing tier requirements).
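The co-location rule described above can be pictured with a small Python sketch. The `PlanScope` type and the sample values are hypothetical names introduced only for illustration; the check simply encodes the rule as this section states it, and it is not an Azure API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PlanScope:
    """Hypothetical record of where an App Service plan (or app) lives."""
    subscription: str
    resource_group: str
    region: str


def can_share_plan(app_a: PlanScope, app_b: PlanScope) -> bool:
    """Per the rule in the text, all three scope values must match."""
    return app_a == app_b


# Illustrative values only.
west = PlanScope("sub-1", "rg-web", "westus")
east = PlanScope("sub-1", "rg-web", "eastus")

print(can_share_plan(west, west))  # same subscription, group, and region
print(can_share_plan(west, east))  # region differs
```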

This section describes how to create a new App Service plan without creating a web app, and how to create a new App Service plan while creating a web app. It also reviews some of the settings that can be useful for managing the App Service plan.

Creating a new App Service plan

To create a new App Service plan in the portal, complete the following steps:

Navigate to the portal accessed via https://portal.azure.com.

Select New on the command bar.

Within the Marketplace (Figure 4-1) search text box, type App Service Plan and press Enter.

Select App Service Plan from the results.

On the App Service Plan blade, select Create.

On the New App Service Plan blade (Figure 4-2), provide a name for your App Service plan, choose the subscription, resource group, operating system (Windows or Linux), and location into which you want to deploy. You should also confirm and select the desired pricing tier.

Click Create to create the new App Service plan.

Following the creation of the new App Service plan, you can create a new web app and associate this with the previously created App Service plan. Or, as discussed in the next section, you can create a new App Service plan as you create a new web app.

Creating a new Web App and App Service plan

To create a new Web App and a new App Service plan in the portal, complete the following steps:

Navigate to the portal accessed via https://portal.azure.com.

Select New on the command bar.

Within the Marketplace list (Figure 4-3), select the Web + Mobile option.

On the Web + Mobile blade, select Web App.

On the Web App blade (Figure 4-4), provide an app name, choose the subscription, resource group, operating system (Windows or Linux), and choose a setting for Application Insights. You also select the App Service plan into which you want to deploy.

When you click the App Service plan selection, you can choose an existing App Service plan, or create a new App Service plan. To create a new App Service plan, click Create New from the App Service Plan blade.

From the New App Service Plan blade (Figure 4-5), choose a name for the App Service plan, select a location, and select a pricing tier. Click OK and the new App Service plan is created with these settings.

From the Web App blade, click Create to create the web app and associate it with the new App Service plan.
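The same two resources can also be created from a script. The sketch below only assembles Azure CLI argument lists for `az appservice plan create` and `az webapp create`; it does not run them. The resource names, region, and pricing tier are placeholder assumptions, and you should confirm the flags against the CLI version you have installed.

```python
from typing import List


def plan_create_args(name: str, group: str, location: str,
                     sku: str, linux: bool = False) -> List[str]:
    """Build the argument list for creating an App Service plan."""
    args = ["az", "appservice", "plan", "create",
            "--name", name,
            "--resource-group", group,
            "--location", location,
            "--sku", sku]
    if linux:
        # Linux plans are requested with an extra switch.
        args.append("--is-linux")
    return args


def webapp_create_args(name: str, group: str, plan: str) -> List[str]:
    """Build the argument list for creating a web app in an existing plan."""
    return ["az", "webapp", "create",
            "--name", name,
            "--resource-group", group,
            "--plan", plan]


# Placeholder names; substitute your own before running the commands.
print(" ".join(plan_create_args("demo-plan", "demo-rg", "westus", "S1")))
print(" ".join(webapp_create_args("demo-webapp", "demo-rg", "demo-plan")))
```

Passing a list like this to `subprocess.run` avoids shell quoting issues, which is why the sketch builds lists rather than one command string.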

Review App Service plan settings

Once you’ve created a new App Service plan, you can select the App Service plan in the portal and manage relevant settings including managing web apps and adjusting scale.

To manage an App Service plan, complete the following steps:

Navigate to the portal accessed via https://portal.azure.com.

Select More Services on the command bar.

In the filter text box, type App Service Plans, and select App Service Plans (Figure 4-6).

Review the list of App Service plans (Figure 4-7). Note the number of apps deployed to each is shown in the list. You can also see the pricing tiers. Select an App Service plan from the list to navigate to the App Service Plan blade.

From the left navigation pane, select Apps to view the apps that are deployed to the App Service plan (Figure 4-8). You can select from the list of apps to navigate to the app blade and manage its settings.

From the left navigation pane, select Scale Up to choose a new pricing tier for the App Service plan.

From the left navigation pane, select Scale Out to increase or decrease the number of instances of the App Service plan, or to configure Autoscale settings.

Configure Web App settings

Azure Web Apps provide a comprehensive collection of settings that you can adjust to establish the environment in which your web application runs, as well as tools to define and manage the values of settings used by your web application code. You can configure the following groups of settings for your applications:

Application type and library versions

Load balancing

Slot management

Debugging

App settings and connection strings

IIS related settings

To manage Web App settings follow these steps:

Navigate to the blade of your web app in the portal accessed via https://portal.azure.com.

Select the Application settings tab from the left navigation pane. The settings blade appears to the right.

Choose from the general settings required for the application:

Choose the required language support from .NET Framework, PHP, Java, or Python, and their associated versions.

Choose between 32-bit and 64-bit runtime execution.

Enable Web Sockets if you are building a web application that leverages this feature from the browser.

Choose Always On if you do not want the web application to be unloaded when idle. This reduces the load time required for the next request and is a required setting for WebJobs to run effectively.

Choose the type of managed pipeline for IIS. Integrated is the more modern pipeline and Classic would only be used for legacy applications (Figure 4-9).

Choose your setting for ARR affinity (Figure 4-10). If you choose to enable ARR affinity your users will be tied to a particular host machine (creating a sticky session) for the duration of their session. If you disable this, your application will not create a sticky session and your application is expected to support load balancing between machines within a session.

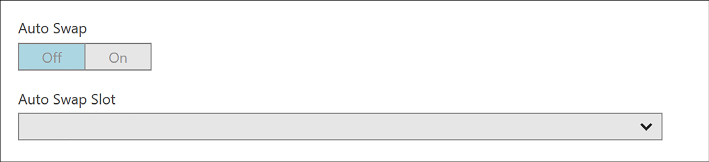

When you first create your web app, the auto swap settings are not available to configure. You must first create a new slot, and from the slot you may configure auto swap to another slot (Figure 4-11).

Enable remote debugging (Figure 4-12) if you run into situations where deployed applications are not functioning as expected. You can enable remote debugging for Visual Studio versions 2012, 2013, 2015, and 2017.

Configure the app settings required for your application. These app settings (Figure 4-13) override any settings matching the same name from your application.

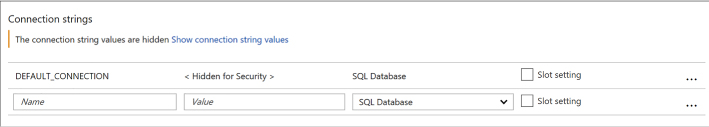

Configure any connection strings for your application (Figure 4-14). These connection string settings override any settings matching the same key name from your application configuration. After you create connection strings, save them, and later return to the Application settings blade, their values are hidden until you choose to show them again.

Configure IIS settings related to default documents, handlers, and virtual applications and directories required for your application (Figure 4-15). This allows you to control these IIS features related to your application.
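From application code, app settings and connection strings configured in the portal are typically read as environment variables, with connection strings exposed under a type prefix such as `SQLAZURECONNSTR_` or `CUSTOMCONNSTR_`. The following Python sketch shows one way to prefer a host-provided value over a local default. The setting names (`GREETING`, `MainDb`) and the helper functions are hypothetical.

```python
import os

# Values that would normally ship in the application's own configuration file.
LOCAL_DEFAULTS = {"GREETING": "hello from local config"}


def get_setting(name):
    """Return the host-provided value if present, else the local default."""
    return os.environ.get(name, LOCAL_DEFAULTS.get(name))


def get_connection_string(name, kind="SQLAZURE"):
    """Look up <KIND>CONNSTR_<name>; returns None when it is not configured."""
    return os.environ.get(f"{kind}CONNSTR_{name}")


if __name__ == "__main__":
    # Output depends on the environment the script runs in.
    print(get_setting("GREETING"))
    print(get_connection_string("MainDb"))
```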

Configure Web App certificates and custom domains

When you first create your web app, it is accessible through the subdomain you specified in the web app creation process, where it takes the form <yourwebappname>.azurewebsites.net. To map to a more user-friendly domain name (such as contoso.com), you must set up a custom domain name.

If your website uses HTTPS to secure communication with the browser, it relies on Transport Layer Security (TLS), more commonly (but less accurately) referred to in the industry as Secure Sockets Layer (SSL), and you need an SSL certificate. With Azure Web Apps, you can use an SSL certificate with your web app in one of two ways:

You can use the “built-in” wildcard SSL certificate that is associated with the *.azurewebsites.net domain.

More commonly you use a certificate you purchase for your custom domain from a third-party certificate authority.

Mapping custom domain names

Web Apps support mapping to a custom domain that you purchase from a third-party registrar either by mapping the custom domain name to the virtual IP address of your website or by mapping it to the <yourwebappname>.azurewebsites.net address of your website. This mapping is captured in domain name system (DNS) records that are maintained by your domain registrar. Two types of DNS records effectively express this purpose:

A records (or, address records) map your domain name to the IP address of your website.

CNAME records (or, alias records) map a subdomain of your custom domain name to the canonical name of your website, expressed as <yourwebappname>.azurewebsites.net.

Table 4-1 shows some common scenarios along with the type of record, the typical record name, and an example value based on the requirements of the mapping.

TABLE 4-1 Mapping domain name requirements to DNS record types, names, and values

| Requirement | Type of Record | Record Name | Record Value |
| --- | --- | --- | --- |
| contoso.com should map to my web app IP address | A | @ | 138.91.240.81 (IP address) |
| contoso.com and all subdomains demo.contoso.com and www.contoso.com should map to my web app IP address | A | * | 138.91.240.81 (IP address) |
| www.contoso.com should map to my web app IP address | A | www | 138.91.240.81 (IP address) |
| www.contoso.com should map to my web app canonical name in Azure | CNAME | www | Canonical name in Azure |

Note that whereas A records enable you to map the root of the domain (like contoso.com) and provide a wildcard mapping for all subdomains below the root (like www.contoso.com and demo.contoso.com), CNAME records enable you to map only subdomains (like the www in www.contoso.com).
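The choice between the two record types can be sketched as a small Python function. This is an illustration of the rules above under simplifying assumptions (no wildcard handling), and `record_for`, `DnsRecord`, and the sample app name are hypothetical.

```python
from typing import NamedTuple, Optional


class DnsRecord(NamedTuple):
    type: str
    name: str
    value: str


def record_for(hostname: str, root: str, app_name: str,
               ip: Optional[str] = None) -> DnsRecord:
    """Pick a DNS record for hostname under root using the rules above."""
    if hostname == root:
        # The root of the domain can only be mapped with an A record.
        if ip is None:
            raise ValueError("the root domain needs an A record and an IP address")
        return DnsRecord("A", "@", ip)
    if not hostname.endswith("." + root):
        raise ValueError(f"{hostname} is not a subdomain of {root}")
    subdomain = hostname[: -len(root) - 1]
    if ip is not None:
        return DnsRecord("A", subdomain, ip)
    # Without an IP address, alias the subdomain to the canonical Azure name.
    return DnsRecord("CNAME", subdomain, f"{app_name}.azurewebsites.net")


print(record_for("contoso.com", "contoso.com", "mywebapp", ip="138.91.240.81"))
print(record_for("www.contoso.com", "contoso.com", "mywebapp"))
```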

Configuring a custom domain

To configure a custom domain, you need access to your domain name registrar setup for the domain while also editing configuration for your web app in the Azure portal.

These are the high-level steps for creating a custom domain name for your web app:

Navigate to the blade of your web app in the portal accessed via https://portal.azure.com.

Ensure your web app uses an App Service plan that supports custom domains.

Click Custom Domains from the left navigation pane.

On the Custom Domains blade (Figure 4-16) note the external IP address of your web app.

Select Add Hostname to open the Add Hostname blade. Enter the hostname and click Validate for the portal to validate the state of the registrar setup with respect to your web app. You can then choose to set up an A record or CNAME record (Figure 4-17).

To set up an A record, select A Record and follow the instructions provided in the blade. It guides you through the following steps for an A record setup:

You first add a TXT record at your domain name registrar, pointing to the default Azure domain for your web app, to verify you own the domain name. The new TXT record should point to <yourwebappname>.azurewebsites.net.

In addition, you add an A record pointing to the IP address shown in the blade, for your web app.

To set up a CNAME record, select CNAME record, and follow the instructions provided in the blade.

If using a CNAME record, following the instructions provided by your domain name registrar, add a new CNAME record with the name of the subdomain, and for the value, specify your web app’s default Azure domain with <yourwebappname>.azurewebsites.net.

Save your DNS changes. Note that it may take some time for the changes to propagate across DNS. In most cases, your changes are visible within minutes, but in some cases, it may take up to 48 hours. You can check the status of your DNS changes by doing a DNS lookup using third-party websites like http://mxtoolbox.com/DNSLookup.aspx.

After completing the domain name registrar setup, from the Custom Domains blade, click Add Hostname again to configure your custom domain. Enter the domain name and select Validate again. If validation has passed, select Add Hostname to complete the assignment.

Configuring SSL certificates

To configure SSL certificates for your custom domain, you first need to have access to an SSL certificate that includes your custom domain name, including the CNAME if it is not a wildcard certificate.

To assign an SSL certificate to your web app, follow these steps:

Navigate to the blade of your web app in the portal accessed via https://portal.azure.com.

Click SSL certificates from the left navigation pane.

From the SSL certificates blade (Figure 4-18), you can import an existing App Service certificate or upload a new certificate.

You can then select Add Binding to set up the correct binding. You can set up bindings that point at your naked domain (contoso.com), or to a particular CNAME (www.contoso.com, demo.contoso.com), so long as the certificate supports it.

You can choose between Server Name Indication (SNI) or IP based SSL when you create the binding for your custom domain (Figure 4-19).
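A binding only works when the certificate actually names the hostname. The following Python sketch approximates the usual matching rule, in which a wildcard entry covers exactly one left-most label. It is a simplified illustration, not the validation logic the portal runs, and `cert_covers` is a hypothetical helper.

```python
from typing import Iterable


def cert_covers(hostname: str, cert_names: Iterable[str]) -> bool:
    """Return True if any certificate name matches the hostname."""
    host = hostname.lower()
    for name in cert_names:
        name = name.lower()
        if name == host:
            return True
        if name.startswith("*."):
            suffix = name[1:]  # for example ".contoso.com"
            if host.endswith(suffix):
                prefix = host[: -len(suffix)]
                # A wildcard stands in for a single label, so "a.b" is rejected.
                if prefix and "." not in prefix:
                    return True
    return False


print(cert_covers("www.contoso.com", ["*.contoso.com"]))
print(cert_covers("contoso.com", ["*.contoso.com"]))
```

Under this rule a wildcard certificate for *.contoso.com does not cover the naked domain, which is why such certificates usually list contoso.com as an additional name.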

Manage Web Apps by using the API, Azure PowerShell, and Xplat-CLI

In addition to configuring and managing Web Apps via the Azure portal, programmatic or script-based access is available for much of this functionality and can satisfy many development requirements.

The options for this include the following:

Azure Resource Manager (ARM): Azure Resource Manager provides a consistent management layer for the management tasks you can perform using Azure PowerShell, Azure CLI, Azure portal, REST API, and other development tools. For more information on this see https://docs.microsoft.com/en-us/azure/azure-resource-manager/.

REST API: The REST API enables you to deploy and manage Azure infrastructure resources using HTTP requests and JSON payloads. For more details on this see https://docs.microsoft.com/en-us/rest/api/resources/.

Azure PowerShell: Azure PowerShell provides cmdlets for interacting with Azure Resource Manager to manage infrastructure resources. The PowerShell modules can be installed on Windows, macOS, or Linux. For additional details see https://docs.microsoft.com/en-us/powershell/azure/overview.

Azure CLI: Azure CLI (also known as XplatCLI) is a command line experience for managing Azure resources. This is an open source SDK that works on Windows, macOS, and Linux platforms to create, manage, and monitor web apps. For details see https://docs.microsoft.com/en-us/cli/azure/overview.
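To give a feel for the REST option, the sketch below builds the Resource Manager URL that addresses a single web app. The subscription ID, resource names, and especially the `api-version` value are assumptions for illustration; check the REST reference for the versions currently supported. A real request also needs an Azure Active Directory bearer token, which is omitted here.

```python
from urllib.parse import quote

ARM_ENDPOINT = "https://management.azure.com"


def web_app_url(subscription_id: str, resource_group: str, app_name: str,
                api_version: str = "2016-08-01") -> str:
    """Build the resource URL for a web app (a Microsoft.Web/sites resource)."""
    return (
        f"{ARM_ENDPOINT}/subscriptions/{quote(subscription_id)}"
        f"/resourceGroups/{quote(resource_group)}"
        f"/providers/Microsoft.Web/sites/{quote(app_name)}"
        f"?api-version={api_version}"
    )


# Placeholder identifiers only.
print(web_app_url("00000000-0000-0000-0000-000000000000", "demo-rg", "demo-webapp"))
```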

Implement diagnostics, monitoring, and analytics

Without diagnostics, monitoring, and analytics, you cannot effectively investigate the cause of a failure, nor can you proactively prevent potential problems before your users experience them. Web Apps provide multiple forms of logs, features for monitoring availability and automatically sending email alerts when the availability crosses a threshold, features for monitoring your web app resource usage, and integration with Azure Analytics via Application Insights.

Configure diagnostics logs

A web app can produce many different types of logs, each focused on presenting a particular source and format of diagnostic data. The following list describes each of these logs:

Event Log: The equivalent of the logs typically found in the Windows Event Log on a Windows Server machine, this is a single XML file on the local file system of the web application. In the context of web apps, the Event Log is particularly useful for capturing unhandled exceptions that may have escaped the application’s exception handling logic and surfaced to the web server. Only one XML file is created per web app.

Web server logs: Web server logs are textual files that create a text entry for each HTTP request to the web app.

Detailed error message logs: These HTML files are generated by the web server and log the error messages for failed requests that result in an HTTP status code of 400 or higher. One error message is captured per HTML file.

Failed request tracing logs: In addition to the error message (captured by detailed error message logs), the stack trace that led to a failed HTTP request is captured in these XML documents that are presented with an XSL style sheet for in-browser consumption. One failed request trace is captured per XML file.

Application diagnostic logs: These text-based trace logs are created by web application code in a manner specific to the platform the application is built in using logging or tracing utilities.

To enable these diagnostic settings from the Azure portal, follow these steps:

Navigate to the blade of your web app in the portal accessed via https://portal.azure.com.

Select the Diagnostics Logs tab from the left navigation pane. The Diagnostics Logs blade (Figure 4-20) will appear to the right. From this blade you can choose to configure the following:

Enable application logging to the file system for easy access through the portal.

Enable storing application logs to blob storage for longer term access.

Enable Web Server logging to the file system or to blob storage for longer term access.

Enable logging detailed error messages.

Enable logging failed request messages.

If you enable file system logs for application and Web Server logs, you can view those from the Log Streaming tab (Figure 4-21).

You can access more advanced debugging and diagnostics tools from the Advanced Tools tab (Figure 4-22).

Table 4-2 describes where to find each type of log when retrieving diagnostic data stored in the web app’s local file system. The Log Files folder is physically located at `D:\home\LogFiles`.

TABLE 4-2 Locations of the various logs on the web app’s local file system

| Log Type | Location |
| --- | --- |
| Event Log | `\LogFiles\eventlog.xml` |
| Web server logs | `\LogFiles\http\RawLogs\*.log` |
| Detailed error message logs | `\LogFiles\DetailedErrors\ErrorPage######.htm` |
| Failed request tracing logs | `\LogFiles\W3SVC*\*.xml` |
| Application diagnostic logs (.NET) | `\LogFiles\Application\*.txt` |
| Deployment logs | `\LogFiles\Git`. This folder contains logs generated by the internal deployment processes used by Azure web apps, as well as logs for Git deployments |
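Once downloaded, web server logs can be processed with a few lines of code. They are written in the W3C extended log format, where a `#Fields:` directive names the columns. The Python sketch below counts requests per status class. The sample log is made up and uses a shortened field list; real files carry more columns.

```python
from collections import Counter

# Hypothetical sample with a reduced set of fields.
SAMPLE_LOG = """\
#Fields: date time cs-method cs-uri-stem sc-status time-taken
2018-01-01 10:00:00 GET / 200 120
2018-01-01 10:00:01 GET /missing 404 15
2018-01-01 10:00:02 POST /api/orders 500 900
"""


def status_classes(log_text: str) -> Counter:
    """Count requests per status class (2xx, 4xx, ...) in a W3C-style log."""
    fields, counts = [], Counter()
    for line in log_text.splitlines():
        if line.startswith("#Fields:"):
            # Column names follow the directive, separated by spaces.
            fields = line.split()[1:]
        elif line and not line.startswith("#") and fields:
            row = dict(zip(fields, line.split()))
            counts[row["sc-status"][0] + "xx"] += 1
    return counts


print(status_classes(SAMPLE_LOG))
```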

Configure endpoint monitoring

App Services provide features for monitoring your applications directly from the Azure portal. There are many metrics available for monitoring, as listed in Table 4-3.

TABLE 4-3 List of available metrics that are monitored for your web apps

| Metric | Description |
| --- | --- |
| Average Response Time | The average time taken for the app to serve requests in ms. |
| Average memory working set | The average amount of memory in MiBs used by the app. |
| CPU Time | The amount of CPU in seconds consumed by the app. |
| Data In | The amount of incoming bandwidth consumed by the app in MiBs. |
| Data Out | The amount of outgoing bandwidth consumed by the app in MiBs. |
| Http 2xx | Count of requests resulting in an HTTP status code >= 200 but < 300. |
| Http 3xx | Count of requests resulting in an HTTP status code >= 300 but < 400. |
| Http 401 | Count of requests resulting in HTTP 401 status code. |
| Http 403 | Count of requests resulting in HTTP 403 status code. |
| Http 404 | Count of requests resulting in HTTP 404 status code. |
| Http 406 | Count of requests resulting in HTTP 406 status code. |
| Http 4xx | Count of requests resulting in an HTTP status code >= 400 but < 500. |
| Http Server Errors | Count of requests resulting in an HTTP status code >= 500 but < 600. |
| Memory working set | Current amount of memory used by the app in MiBs. |
| Requests | Total number of requests regardless of their resulting HTTP status code. |

You can monitor metrics from the portal and customize which metrics should be shown by following these steps:

Navigate to the blade of your web app in the portal accessed via https://portal.azure.com.

Select the Overview tab from the left navigation pane. This pane shows a few default charts for metrics including server errors, data in and out, requests, and average response time (Figures 4-23 and 4-24).

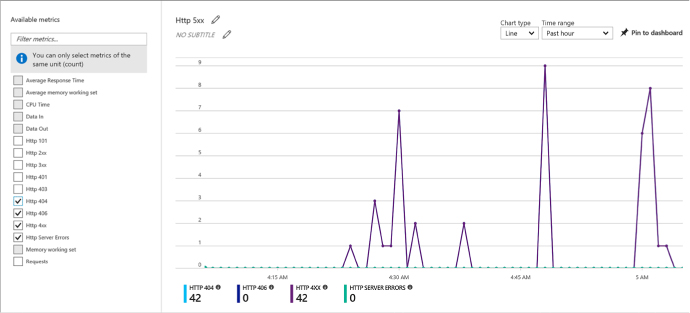

You can customize the metrics (Figure 4-25) shown by creating new graphs and pinning those to your dashboard.

Click one of the graphs. You’ll be taken to edit the metrics blade for the graph, limited to compatible metrics for the selection.

Select the metrics to add or remove from the graph.

Save the graph to the dashboard. You can now navigate to your portal dashboard to view the selected metrics without having to navigate to the web app directly. From here you can also edit the graph by selecting it, editing metrics, and saving back to the same pinned graph.

You can also add alerts for metrics. From the Metrics blade click Add Metric alert from the command bar at the top of the blade. This takes you to the Add Rule blade (Figure 4-26) where you can configure the alert. To configure an alert for slow requests, as an example, do the following:

Provide a name for the rule.

Optionally change the subscription, resource group, and resource; they default to those of the current web app.

Choose Metrics for the alert type.

Choose the metric from the drop-down list (Figure 4-27), in this case Average Response Time with a condition greater than a threshold of 2 seconds over a 15 minute period.

From the same blade you can also indicate who to notify, configure a web hook, or even configure a Logic App to produce a workflow based on the alert.

Click OK to complete the alert configuration.

You can view the alerts from the Alerts tab of the navigation pane.
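The slow-request rule configured above boils down to comparing an average over a time window with a threshold. The following Python sketch illustrates that idea with the same numbers (2 seconds over 15 minutes). How Azure aggregates samples internally is not described in this chapter, so the windowing logic and the `alert_fires` helper are assumptions.

```python
from statistics import mean


def alert_fires(samples, now, threshold_s=2.0, window_s=15 * 60):
    """Return True if the mean response time in the window exceeds the threshold.

    samples is a list of (timestamp_seconds, response_time_seconds) pairs.
    """
    recent = [value for stamp, value in samples if now - window_s < stamp <= now]
    return bool(recent) and mean(recent) > threshold_s


# Made-up measurements: the first one falls outside the 15 minute window.
slow = [(0, 5.0), (1000, 2.5), (1500, 3.0)]
fast = [(1000, 1.0), (1500, 2.0)]

print(alert_fires(slow, now=1800))
print(alert_fires(fast, now=1800))
```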

Design and configure Web Apps for scale and resilience

App Services provide various mechanisms to scale your web apps up and down by adjusting the number of instances serving requests and by adjusting the instance size. You can, for example, increase the number of instances (scale out) to support the load you experience during business hours, but then decrease (scale in) the number of instances during less busy hours to save costs. Web Apps enable you to scale the instance count manually, automatically via a schedule, or automatically according to key performance metrics. Within a datacenter, Azure load balances traffic between all of your Web Apps instances using a round-robin approach.

You can also scale a web app by deploying to multiple regions around the world and then utilizing Microsoft Azure Traffic Manager to direct web app traffic to the appropriate region based on a round robin strategy or according to performance (approximating the latency perceived by clients of your application). Alternatively, you can configure Traffic Manager to use the alternate regions as targets for failover if the primary region becomes unavailable.
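The three routing strategies mentioned here differ only in how an endpoint is chosen. The Python sketch below models each choice in a few lines. It is a conceptual illustration with made-up region names and latencies, not a description of how Traffic Manager is implemented.

```python
from itertools import cycle


def failover(endpoints):
    """Return the first healthy endpoint from a priority-ordered list."""
    for name, healthy in endpoints:
        if healthy:
            return name
    return None


def performance(latency_ms):
    """Return the region with the lowest measured latency."""
    return min(latency_ms, key=latency_ms.get)


def round_robin(names):
    """Return an iterator that hands out endpoints in turn, forever."""
    return cycle(names)


print(failover([("westus", False), ("eastus", True)]))
print(performance({"westus": 80, "northeurope": 35}))
rotation = round_robin(["westus", "eastus"])
print(next(rotation), next(rotation), next(rotation))
```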

In addition to scaling instance counts, you can manually adjust your instance size (scale up or down). For example, you can scale up your web app to utilize more powerful VMs that have more RAM and more CPU cores to serve applications that are more demanding of memory consumption or CPU utilization, or scale down your VMs if you later discover your requirements are not as great.

To scale your web app, follow these steps:

Navigate to the blade of your web app in the portal accessed via https://portal.azure.com.

Select the App Service plan tab from the left navigation pane. This takes you to the App Service Plan blade.

Select the Scale Up tab from the left navigation pane and you’ll be taken to a blade to select the new pricing tier for your web app VMs.

Select the Scale Out tab and you’ll be taken to the Scale Out blade to choose the number of instances to scale out or in to (Figure 4-28).

If you select Enable autoscale, you can create conditions based on metrics and rules in order for the site to automatically adjust instance count.
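An autoscale condition pairs a metric threshold with an instance-count change, bounded by a minimum and maximum. The sketch below models one evaluation of such a rule in Python. The CPU thresholds, the step of one instance, and the limits are illustrative assumptions rather than Azure defaults.

```python
def next_instance_count(current, avg_cpu, *, minimum=1, maximum=10,
                        scale_out_above=70.0, scale_in_below=30.0):
    """Return the instance count after applying one autoscale evaluation."""
    if avg_cpu > scale_out_above:
        current += 1  # scale out under heavy load
    elif avg_cpu < scale_in_below:
        current -= 1  # scale in when mostly idle
    # Never leave the configured instance limits.
    return max(minimum, min(maximum, current))


print(next_instance_count(2, 85.0))
print(next_instance_count(2, 10.0))
print(next_instance_count(3, 50.0))
```

Keeping a gap between the scale-out and scale-in thresholds helps avoid flapping, where the instance count bounces up and down around a single value.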

Skill 4.2: Design Azure App Service API Apps

Azure API Apps provide a quick and easy way to create and consume scalable RESTful APIs, using the language of your choice, in the cloud. As part of the Azure infrastructure, you can integrate API Apps with many Azure services such as API Management, Logic Apps, Functions, and many more. Securing your APIs can be done with a few clicks, whether you are using Azure Active Directory, OAuth, or social networks for single sign-on.

If you have existing APIs written in .NET, Node.js, Java, Python, or PHP, they can be brought into App Services as API Apps. When you need to consume these APIs, enable CORS support so you can access them from any client. Swagger support makes generating client code to use your API simple. Once you have your API App set up, and clients are consuming it, it is important to know how to monitor it to detect any issues early on.

Create and deploy API Apps

There are different ways you can create and deploy API Apps, depending on the language and development environment of choice. For instance, if you are using Visual Studio, you can create a new API Apps project and publish to a new API app, which provisions the service in Azure. If you are not using Visual Studio, you can provision a new API App service using the Azure portal, Azure CLI, or PowerShell.

Creating a new API App from the portal

To create a new API app in the portal, complete the following steps:

Navigate to the portal accessed via https://portal.azure.com.

Select New on the command bar.

Within the Marketplace (Figure 4-29) search text box, type API App, and press Enter.

Select API App from the results.

On the API App blade, select Create.

On the Create API App blade, choose your Azure subscription, select a Resource Group, select or create an App Service Plan, select whether you want to enable Application Insights, and then click Create.

Creating and deploying a new API app with Visual Studio 2017

Visual Studio 2017 comes preconfigured with the ability to create an API app when you have installed both the ASP.NET and web development workload and the Azure development workload. Follow these steps to create a new API app with Visual Studio 2017:

Launch Visual Studio, and then select File > New > Project.

In the New Project dialog, select ASP.NET Web Application (.NET Framework) within the Cloud category (Figure 4-30). Provide a name and location for your new project, and then click OK.

FIGURE 4-30 The ASP.NET Web Application Cloud project type

Select the Azure API App template (Figure 4-31), and then click OK.

Visual Studio creates a new API App project within the specified directory, adding useful NuGet packages, such as:

Newtonsoft.Json for deserializing requests and serializing responses to and from your API app.

Swashbuckle to add Swagger for rich discovery and documentation for your API REST endpoints.

In addition, Web API and Swagger configuration classes are created in the project’s App_Start folder. From this point, all you need to do to deploy your API app is complete your controller actions and publish from Visual Studio.

Follow these steps to deploy your API app from Visual Studio:

Right-click your project in the Visual Studio Solution Explorer (Figure 4-32), then click Publish.

In the Publish dialog (Figure 4-33), select the Create New option underneath Microsoft Azure App Service, and then click Publish. This creates a new API app in Azure and publishes your solution to it. You could alternately select the Select Existing option to publish to an existing API App service.

In the Create App Service dialog (Figure 4-34), provide a unique App name, select your Azure subscription and resource group, select or create an App Service Plan, and then click Create.

When your API app has finished publishing, it opens in a new browser window. When the page is displayed, navigate to the /swagger path to view your generated API details and to try out the REST methods, for example http://<YOUR-API-APP>.azurewebsites.net/swagger/ (Figure 4-35).

Automate API discovery by using Swashbuckle

Swagger is a popular, open source framework backed by a large ecosystem of tools that helps you design, build, document, and consume your RESTful APIs. The previous section included a screenshot of the Swagger page generated for an API App. This was generated by the Swashbuckle NuGet package.

The core component of Swagger is the Swagger Specification, which is the API description metadata in the form of a JSON or YAML file. The specification creates the RESTful contract for your API, detailing all its resources and operations in a human and machine-readable format to simplify development, discovery, and integration with other services. This is a standardized OpenAPI Specification (OAS) for defining RESTful interfaces, which makes the generated metadata valuable when working with a wide range of consumers. The consumers that can read the Swagger API metadata include several Microsoft services, such as Microsoft PowerApps, Microsoft Flow, and Logic Apps. This means that when you publish your API App service with Swagger, these services and more immediately know how to interact with your API endpoints with no further effort on your part.

Beyond making your API App easier for other services to use, Swagger metadata makes it easier for other developers to consume your API endpoints. The API explorer that comes with swagger-ui lets other developers (and you) test the endpoints and see the format of the data that must be sent and the data that is returned.
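To make the shape of this metadata concrete, the following Python sketch assembles a minimal Swagger 2.0 document for a contacts API and serializes it to JSON. It is an illustration only: the title, host name, and Contact fields are assumptions made up for the example, and the output is not captured from Swashbuckle. Only the top-level layout (swagger, info, paths, definitions) follows the Swagger 2.0 format.

```python
import json


def build_contacts_spec(host: str) -> dict:
    """Return a minimal Swagger 2.0 document for a hypothetical contacts API."""
    return {
        "swagger": "2.0",
        "info": {"title": "Contacts API (illustrative)", "version": "v1"},
        "host": host,
        "schemes": ["https"],
        "paths": {
            "/contacts/{id}": {
                "get": {
                    "operationId": "GetContactById",
                    "produces": ["application/json"],
                    "parameters": [
                        {"name": "id", "in": "path", "required": True, "type": "integer"}
                    ],
                    "responses": {
                        "200": {
                            "description": "OK",
                            "schema": {"$ref": "#/definitions/Contact"},
                        },
                        "404": {"description": "Contact not found"},
                    },
                }
            }
        },
        "definitions": {
            # Hypothetical model; the real Contact type is not shown in this chapter.
            "Contact": {
                "type": "object",
                "properties": {"id": {"type": "integer"}, "name": {"type": "string"}},
            }
        },
    }


# The host name below is a placeholder, not a real API app.
spec = build_contacts_spec("example-api-app.azurewebsites.net")
spec_json = json.dumps(spec, indent=2)
print(spec_json)
```

Each entry under paths describes one endpoint, and each HTTP method beneath it describes one operation, which is the information that tools such as swagger-ui read to render their explorer.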

Generating this Swagger metadata manually can be a very tedious process. If you build your API using ASP.NET or ASP.NET Core, you can use the Swashbuckle NuGet package to automatically do this for you, saving a lot of time initially creating the metadata, and maintaining it. In addition to its Swagger metadata generator engine, Swashbuckle also contains an embedded version of swagger-ui, which it will automatically serve up once Swashbuckle is installed.

Use Swashbuckle in your API App project

Swashbuckle is provided by way of a set of NuGet packages: Swashbuckle and Swashbuckle.Core. When you create a new API App project using the Visual Studio template, these NuGet packages are already included. If you don’t have them installed, follow these steps to add Swashbuckle to your API App project:

Install the Swashbuckle NuGet package, which includes Swashbuckle.Core as a dependency, by using the following command from the NuGet Package Manager Console:

Install-Package Swashbuckle

The NuGet package also installs a bootstrapper (App_Start/SwaggerConfig.cs) that enables the Swagger routes on app start-up using WebActivatorEx. You can configure Swashbuckle’s options by modifying the GlobalConfiguration.Configuration.EnableSwagger extension method in SwaggerConfig.cs. For example, to exclude API actions that are marked as Obsolete, add the following configuration:

public static void Register()
{
    var thisAssembly = typeof(SwaggerConfig).Assembly;

    GlobalConfiguration.Configuration
        .EnableSwagger(c =>
        {
            …
            …
            // Set this flag to omit descriptions for any actions
            // decorated with the Obsolete attribute
            c.IgnoreObsoleteActions();
            …
            …
        });
}

Modify your project’s controller actions to include Swagger attributes to aid the generator in building your Swagger metadata. Listing 4-1 illustrates the use of the SwaggerResponseAttribute at each controller method.

Swashbuckle is now configured to generate Swagger metadata for your API endpoints with a simple UI to explore that metadata. For example, the controller in Listing 4-1 may produce the UI shown in Figure 4-36.

LISTING 4-1 C# code showing Swagger attributes added to the API App’s controller actions

/// <summary>
/// Gets the list of contacts
/// </summary>
/// <returns>The contacts</returns>
[HttpGet]
[SwaggerResponse(HttpStatusCode.OK,
    Type = typeof(IEnumerable<Contact>))]
[Route("~/contacts")]
public async Task<IEnumerable<Contact>> Get()
{
    …
}

/// <summary>
/// Gets a specific contact
/// </summary>
/// <param name="id">Identifier for the contact</param>
/// <returns>The requested contact</returns>
[HttpGet]
// The action returns a single Contact, so the documented type matches it
[SwaggerResponse(HttpStatusCode.OK,
    Description = "OK",
    Type = typeof(Contact))]
// A 404 response carries no contact payload, so no Type is declared
[SwaggerResponse(HttpStatusCode.NotFound,
    Description = "Contact not found")]
[SwaggerOperation("GetContactById")]
[Route("~/contacts/{id}")]
public async Task<Contact> Get([FromUri] int id)
{
    …
}

/// <summary>
/// Creates a new contact
/// </summary>
/// <param name="contact">The new contact</param>
/// <returns>The saved contact</returns>
[HttpPost]
[SwaggerResponse(HttpStatusCode.Created,
    Description = "Created",
    Type = typeof(Contact))]
[Route("~/contacts")]
public async Task<Contact> Post([FromBody] Contact contact)
{
    …
}

You can test any of the API methods by selecting it from the list. Here we selected the /contacts/{id} GET method and tested it by entering a value of 2 in the id parameter, and clicking the Try It Out! button. Notice that Swagger details the return model schema, shows a Curl command and a Request URL for invoking the method, and shows the actual response body after clicking the button (Figure 4-37).

Enable CORS to allow clients to consume API and Swagger interface

Before clients, such as other web services or client code generators, can consume your API endpoints and Swagger interface, you need to enable CORS on the API App in Azure. To enable CORS, follow these steps:

Navigate to the portal accessed via https://portal.azure.com.

Open your API App service. You can find this by navigating to the Resource Group in which you published your service.

Select CORS from the left-hand menu (Figure 4-38). Enter one or more allowed origins, then select Save. To allow all origins, enter an asterisk (*) in the Allowed Origins field and remove all other origins from the list.
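The effect of the Allowed Origins list can be sketched in a few lines of Python. This is a simplified illustration of how a CORS origin check works in general, not App Service's actual implementation; the function name and the sample origins are assumptions made for the example.

```python
from typing import Optional, Sequence


def allow_origin_header(request_origin: str, allowed_origins: Sequence[str]) -> Optional[str]:
    """Return the Access-Control-Allow-Origin value for a request, or None to omit the header."""
    # A single asterisk means every origin is allowed.
    if "*" in allowed_origins:
        return "*"
    # Simplification: compare origins case-insensitively and ignore a trailing slash.
    normalized = {origin.rstrip("/").lower() for origin in allowed_origins}
    candidate = request_origin.rstrip("/").lower()
    return request_origin if candidate in normalized else None


# Hypothetical origins used only for this example.
allowed = ["https://contoso.example", "http://localhost:3000"]
listed = allow_origin_header("https://contoso.example", allowed)
unlisted = allow_origin_header("https://other.example", allowed)
wildcard = allow_origin_header("https://other.example", ["*"])
print(listed, unlisted, wildcard)
```

When the header is omitted, the browser blocks the calling page from reading the response, which is why a client hosted on an unlisted origin cannot use the API or its Swagger definition.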

Use Swagger API metadata to generate client code for an API app

There are tools available to generate client code for your API Apps that have Swagger API definitions, like the swagger.io online editor. The previous section demonstrated how you can automatically generate the Swagger API metadata, using the Swashbuckle NuGet package.

To generate client code for your API app that has Swagger API metadata, follow these steps:

Find your Swagger 2.0 API definition document by navigating to http://<your-api-app>.azurewebsites.net/swagger/docs/v1 (v1 is the API version). Alternately, you can find it by navigating to the Azure portal, opening your API App service, and selecting API definition from the left-hand menu. This displays your Swagger 2.0 API definition URL (Figure 4-39).

Navigate to https://editor.swagger.io to use the Swagger.io Online Editor.

Select File > Import URL. Enter your Swagger 2.0 metadata URL in the dialog box and click OK (Figure 4-40).

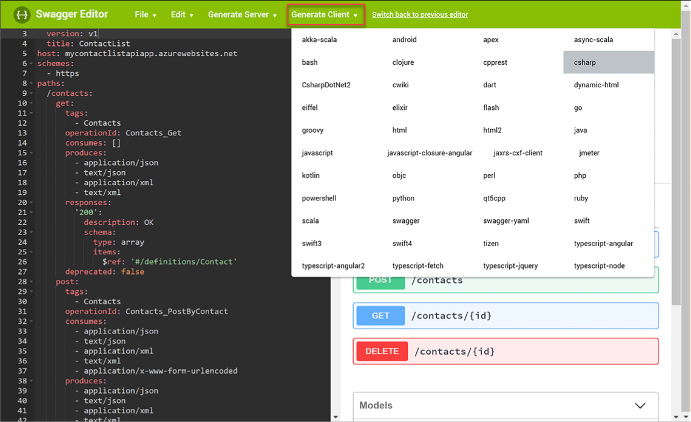

After a few moments, your Swagger metadata appears on the left-hand side of the editor, and the discovered API endpoints will be displayed on the right. Verify that all desired API endpoints appear, and then select Generate Client from the top menu. Select the desired language or platform for the generated client app. This initiates a download of a zip file containing the client app (Figure 4-41).
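A client generator works by walking the paths section of the Swagger document and emitting one method per operation. The Python sketch below shows only that first step, listing the operations it finds. The sample document is a hand-written assumption that loosely mirrors Listing 4-1 (the operationId values for the /contacts operations are invented), and list_operations is a hypothetical helper, not part of any Swagger tool.

```python
HTTP_METHODS = ("get", "put", "post", "delete", "options", "head", "patch")


def list_operations(spec: dict) -> list:
    """Return (METHOD, path, operationId) tuples for every operation in a Swagger 2.0 document."""
    operations = []
    for path, path_item in sorted(spec.get("paths", {}).items()):
        for method in HTTP_METHODS:
            operation = path_item.get(method)
            if operation is None:
                continue
            # operationId is optional in Swagger 2.0, so fall back to a derived name.
            name = operation.get("operationId", f"{method}_{path}")
            operations.append((method.upper(), path, name))
    return operations


# Hand-written sample document; a real one would be downloaded from /swagger/docs/v1.
sample_spec = {
    "swagger": "2.0",
    "info": {"title": "Contacts API (illustrative)", "version": "v1"},
    "paths": {
        "/contacts": {
            "get": {"operationId": "Contacts_Get", "responses": {"200": {"description": "OK"}}},
            "post": {"operationId": "Contacts_Post", "responses": {"201": {"description": "Created"}}},
        },
        "/contacts/{id}": {
            "get": {"operationId": "GetContactById", "responses": {"200": {"description": "OK"}}},
        },
    },
}

operations = list_operations(sample_spec)
for method, path, name in operations:
    print(f"{method:5} {path:16} -> {name}")
```

The operationId is typically what a generator uses as the method name in the client, which is one reason to set it explicitly with the SwaggerOperation attribute.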

Monitor API Apps

App Service, under which API Apps reside, provides built-in monitoring capabilities, such as resource quotas and metrics. You can also set up alerts and automatic scaling based on these metrics. In addition, Azure provides built-in diagnostics to assist with debugging an App Service web or API app. A combination of the monitoring capabilities and logging should provide you with the information you need to monitor the health of your API app, and determine whether it is able to meet capacity demands.

Using quotas and metrics

API Apps are subject to certain limits on the resources they can use. The limits are defined by the App Service plan associated with the app. If the application is hosted in a Free or Shared plan, the limits on the resources the app can use are defined by Quotas, as discussed earlier for Web Apps.

If you exceed the CPU or bandwidth quota, your app responds with a 403 HTTP error, so it’s best to keep an eye on your resource usage. Exceeding the memory quota causes an application reset, and exceeding the filesystem quota causes write operations to fail, even to logs. If you need to increase or remove any of these quotas, you can upgrade your App Service plan.

The metrics you can view for your apps are the same as those shown earlier in Table 4-3. As with Web Apps, metrics are accessed from the Overview blade of your API App within the Azure portal by clicking one of the metrics charts, such as Requests or Average Response Time. Once you click a chart, you can customize it by selecting Edit chart. From here you can change the time range, chart type, and metrics to display.

Enable and review diagnostics logs

By default, when you provision a new API App, diagnostics logs are disabled. These are detailed server logs you can use to troubleshoot and debug your app. To enable diagnostics logging, perform the following steps:

Navigate to the portal accessed via https://portal.azure.com.

Open your API App service. You can find this by navigating to the Resource Group in which you published your service.

Select Diagnostics logs from the left-hand menu (Figure 4-42). Turn on any logs you wish to capture. When you enable application diagnostics, you also choose the Level. This setting filters the captured information to error, warning, or informational messages. Setting it to verbose logs all information produced by the application. This blade is also where you retrieve the FTP information for downloading the logs.

You can download the diagnostics logs via FTP, or they can be downloaded as a zip archive by using PowerShell or the Azure CLI.

The types of logs and structure for accessing logs follow that described for Web Apps and shown in Table 4-2.

Skill 4.3: Develop Azure App Service Logic Apps

Azure Logic Apps is a fully managed iPaaS (integration Platform as a Service) that helps you simplify and implement scalable integrations and workflows in the cloud. As such, you don’t have to worry about infrastructure, management, scalability, and availability because all of that is taken care of for you. Its Logic App Designer gives you a nice way to model and automate your process visually, as a series of steps known as a workflow. At its core, it allows you to quickly integrate with many services and protocols, inside of Azure, outside of Azure, as well as on-premises. When you create a Logic App, you start out with a trigger, like ‘When an email arrives at this account,’ and then you act on that trigger with many combinations of actions, condition logic, and conversions.

Create a Logic App connecting SaaS services

One of the strengths of Logic Apps is its ability to connect a large number of SaaS (Software as a Service) services to create your own custom workflows. In this example, we will connect Twitter with an Outlook.com or hosted Office 365 mailbox to email certain tweets as they arrive.

To create a new Logic App in the portal, complete the following steps:

Navigate to the portal accessed via https://portal.azure.com.

Select New on the command bar.

Select Enterprise Integration, then Logic App (Figure 4-43).

Provide a unique name, select a resource group and location, check Pin To Dashboard, and then click Create (Figure 4-44).

Follow the above steps to create new Logic Apps as needed in the remaining segments for this skill.

Once the Logic App has been provisioned, open it to view the Logic Apps Designer. This is where you design or modify your Logic App. You can select from a series of commonly used triggers, or from several templates you can use as a starting point. The following steps show how to create one from scratch.

Select Blank Logic App under Templates.

All Logic Apps start with a trigger. Search the list for Twitter, and then select it.

Click Sign in to create a connection to Twitter with your Twitter account. A dialog will appear where you sign in and authorize the Logic App to access your account.

In the Twitter trigger form on the designer (Figure 4-45), enter your search text to return certain tweets (such as #nasa), and select an interval and frequency, establishing how often you wish to check for items, returning all tweets during that time span.

Select the + New Step button, and then choose Add An Action.

Type outlook in the search box, and then select Office 365 Outlook (Send An Email) from the results. Alternately, you can select Outlook.com from the list (Figure 4-46).

Click Sign In to create a connection to your Office 365 Outlook account (Figure 4-47).

In the Send An Email form, provide values for the email recipient, the subject of the email, and the body. In each of these fields, you can select parameters from the Twitter Connector, such as the tweet’s text and who posted it.

Click Save in the Logic Apps Designer menu. Your Logic App is now live. If you wish to test right away and not wait for your trigger interval, click Run.

Create a Logic App with B2B capabilities

Logic Apps support business-to-business (B2B) workflows and communication through the Enterprise Integration Pack. This allows organizations to exchange messages electronically, even if they use different protocols and formats. Enterprise integration allows you to store all your artifacts in one place, within your integration account, and secure messages through encryption and digital signatures. To access these artifacts from a logic app, you must first link it to your integration account. Your integration account needs both Partner and Agreement artifacts prior to creating B2B workflows for your logic app.

Create an integration account

To get started with the Enterprise Integration Pack so you can create B2B workflows, you must first create an integration account, following these steps:

Navigate to the portal accessed via https://portal.azure.com.

Select More Services on the command bar.

In the filter box, type integration, and then select Integration Accounts in the results list (Figure 4-48).

At the top of the Integration Accounts blade, select + Add.

Provide a name for your Integration Account (Figure 4-49), select your resource group, location, and a pricing tier. Once validation has passed, click Create.

Add partners to your integration account

Partners are entities that participate in B2B transactions and exchange messages with each other. Before you can create partners that represent you and another organization in these transactions, you must share with each other the information that identifies and validates the messages you each send. These message details are called agreements. After you have discussed these details and are ready to start your business relationship, you can create partners in your integration account to represent you both. You need at least two partners in your integration account to create an agreement. Your organization must be the host partner, and the other partners are guests. Guest partners can be outside organizations, or even a department in your own organization.

To add a partner to your integration account, follow these steps:

Navigate to the portal accessed via https://portal.azure.com.

Select More Services on the command bar.

In the filter box, type integration, then select Integration Accounts in the results list.

Select your integration account, and then select the Partners tile.

In the Partners blade, select + Add.

Provide a name for your partner (Figure 4-50), select a Qualifier, and then enter a Value to help identify documents that transfer through your apps. When finished, click OK.

After a few moments, the new partner (Figure 4-51) will appear in your list of partners.

Add an agreement

Now that you have partners associated with your integration account, you can allow them to communicate seamlessly using industry standard protocols through agreements. These agreements are based on the type of information exchanged, and through which protocol or transport standards they will communicate: AS2, X12, or EDIFACT.

Follow these steps to create an AS2 agreement:

Navigate to the portal accessed via https://portal.azure.com.

Select More Services on the command bar.

In the filter box, type integration, and then select Integration Accounts in the results list (Figure 4-52).

Select your integration account, and then select the Agreements tile.

In the Agreements blade, select + Add.

Provide a name for your agreement and select AS2 for the agreement type. Now select the Host Partner, Host Identity, Guest Partner, and Guest Identity. You can override send and receive settings as desired. Click OK.

Link your Logic app to your Enterprise Integration account

You will need to link your Logic app to your integration account so you can create B2B workflows using the partners and agreements you’ve created in your integration account. You must make sure that both the integration account and Logic app are in the same Azure region before linking.

To link, follow these steps:

Navigate to the portal accessed via https://portal.azure.com.

Select More Services on the command bar.

In the filter box, type logic, and then select Logic Apps in the results list.

Select your logic app, and then select Workflow settings.

In the Workflow settings blade, select your integration account from the select list, and click Save (Figure 4-53).

Use B2B features to receive data in a Logic App

After creating an integration account, adding partners and agreements to it, and linking it to a Logic app, you can now create a B2B workflow using the Enterprise Integration Pack, following these steps:

Open the Logic App Designer on the Logic app that has a linked integration account.

Select Blank Logic App under Templates.

Search for “http request” in the trigger filter, and then select Request (When an HTTP request is received) from the list of results (Figure 4-54).

Select the + New Step button, and then choose Add An Action.

Type as2 in the search box, and then select AS2 (Decode AS2 Message) from the results (Figure 4-55).

In the form that follows, provide a connection name, and then select your integration account, and click Create (Figure 4-56).

Add the Body that you want to use as input. In this example, we selected the body of the HTTP request that triggers the Logic app. Add the required Headers for AS2. In this example, we selected the headers of the HTTP request that triggers the Logic app (Figure 4-57).

Select the + New Step button, and then choose Add An Action.

Type x12 in the search box, and then select X12 (Decode X12 Message) from the results (Figure 4-58).

In the form that follows, provide a connection name, and then select your integration account as before, and click Create (Figure 4-59).

The input for this new action is the output for the previous AS2 action. Because the actual message content is JSON-formatted and base64-encoded, you must specify an expression as the input. To do this, you type the following into the X12 Flat File Message to Decode field: @base64ToString(body('Decode_AS2_Message')?['AS2Message']?['Content'])

Select the + New Step button, and then choose Add An Action (Figure 4-60).

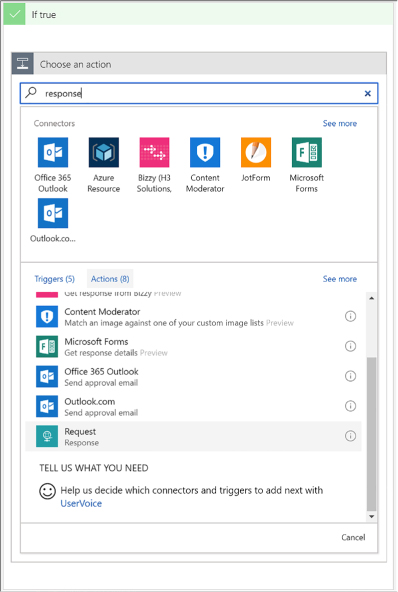

Type response in the search box, and then select Request (Response) from the results.

The response body should include the MDN from the output of the Decode AS2 Message action (Figure 4-61). To do this, type the following into the Body field: @base64ToString(body('Decode_AS2_message')?['OutgoingMdn']?['Content'])

Click Save in the Logic Apps Designer menu.
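The two expressions used in these steps combine property navigation with base64 decoding. As a rough mental model only, the Python sketch below imitates them: safe_get stands in for the ?[...] operator, which yields null rather than failing when a property is missing, and base64_to_string stands in for base64ToString. Both helper names and the sample action output are assumptions for illustration, not the Logic Apps implementation.

```python
import base64
from typing import Any


def safe_get(value: Any, key: str) -> Any:
    """Imitate ?[key]: return None instead of raising when a level is missing."""
    if isinstance(value, dict):
        return value.get(key)
    return None


def base64_to_string(encoded: str) -> str:
    """Imitate base64ToString: decode base64 text into a UTF-8 string."""
    return base64.b64decode(encoded).decode("utf-8")


# Hypothetical output of the Decode AS2 Message action with a made-up payload.
original_text = "sample X12 payload"
decode_as2_output = {
    "AS2Message": {
        "Content": base64.b64encode(original_text.encode("utf-8")).decode("ascii")
    }
}

# Equivalent in spirit to body('Decode_AS2_Message')?['AS2Message']?['Content']
content = safe_get(safe_get(decode_as2_output, "AS2Message"), "Content")
decoded = base64_to_string(content)
print(decoded)

# Navigating a missing property yields None rather than an error.
missing = safe_get(safe_get({}, "AS2Message"), "Content")
print(missing)
```

The decoding step is needed because the AS2 action hands its content over in base64 form, while the X12 decoder and the HTTP response expect plain text.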

Create a Logic App with XML capabilities

Oftentimes, businesses send and receive data between one or more organizations in XML format. Due to the dynamic nature of XML documents, schemas are used to confirm that the documents received are valid and are in the correct format. Schemas are also used to transform data from one format to another. Transforms are also known as maps, which consist of source and target XML schemas. When you link your logic app with an integration account, the schema and map artifacts within enable your Logic app to use these Enterprise Integration Pack XML capabilities.

The XML features included with the Enterprise Integration pack are:

XML validation Used to validate incoming and outgoing XML messages against a specific schema.

XML transform Used to convert data from one format to another.

Flat file encoding/decoding Used to encode XML content as a flat file prior to sending, or to decode flat file content into XML.

XPath Used to extract specific properties from a message, using an xpath expression.

Add schemas to your integration account

Since schemas are used to validate and transform XML messages, you must add one or more to your integration account before working with the Enterprise Integration Pack XML features within your linked logic app. To add a new schema, follow these steps:

Navigate to the portal accessed via https://portal.azure.com.

Select More Services on the command bar.

In the filter box, type integration, and then select Integration Accounts in the results list (Figure 4-62).

Select your integration account, and then select the Schemas tile.

In the Schemas blade, select + Add.

Provide a name for your schema and select whether it is a small file (<= 2MB) or a large file (> 2MB). If it is a small file, you can upload it here. If you select Large file, then you need to provide a publicly accessible URI to the file. In this case, we’re uploading a small file. Click the Browse button underneath Schema to select a local XSD file to upload. Click OK.

Add maps to your Integration account

When you want your Logic app to transform data from one format to another, you first add a map (an XSLT file) to your linked Integration account.

To add a new map, follow these steps:

Navigate to the portal accessed via https://portal.azure.com.

Select More Services on the command bar.

In the filter box, type integration, then select Integration Accounts in the results list.

Select your integration account, and then select the Maps tile.

In the Maps blade, select + Add.

Provide a name for your map and click the Browse button underneath Map to select a local XSLT file to upload. Click OK (Figure 4-63).

Add XML capabilities to the linked Logic App

After adding an XML schema and map to the Integration account, you are ready to use the Enterprise Integration Pack’s XML validation, XPath Extract, and Transform XML operations in a Logic App.

Once your Logic App has been linked to the Integration account with these artifacts, follow these steps to use the XML capabilities in your Logic App:

Open the Logic App Designer on the Logic app that has a linked Integration account.

Select Blank Logic App under Templates.

Search for “http request” in the trigger filter, and then select Request (When An HTTP Request Is Received) from the list of results (Figure 4-64).

Select the + New Step button, and then choose Add An Action.

Type xml in the search box, and then select XML (XML Validation) from the results.

In the form that follows, select the Body parameter from the HTTP request trigger for the Content value. Select the Order schema in the Schema Name select list, which is the schema we added to the Integration account (Figure 4-65).

Select the + New Step button, and then choose Add An Action.

Type xml in the search box, and then select Transform XML from the results (Figure 4-66).

In the form that follows, select the Body parameter from the HTTP request trigger for the Content value. Select the SAPOrderMap map in the Map select list, which is the map we added to the Integration account (Figure 4-67).

Select the + New Step button, and then choose Add A Condition. In the Condition form that appears, select the Edit In Advanced Mode link, and then type in your XPath expression. In our case, we type in the following (Figure 4-68): @equals(xpath(xml(body('Transform_XML')), 'string(count(/.))'), '1')

In the “If true” condition block beneath, select Add An Action. Search for “response,” and then select Request (Response) from the resulting list of actions (Figure 4-69).

In the Response form, select the Transformed XML parameter from the previous Transform XML step. This returns a 200 HTTP response containing the transformed XML (an SAP order) within the body (Figure 4-70).

Click Save in the Logic Apps Designer menu.

Trigger a Logic App from another app

There are many triggers that can be added to a Logic App. Triggers are what start the workflow. To trigger, or call, your Logic Apps from another app, you most commonly use triggers that create HTTP endpoints. These triggers are widely used because REST-based calls are simple to make from practically any web-enabled development platform.

These are the triggers that create HTTP endpoints:

Request Responds to incoming HTTP requests to start the Logic App’s workflow in real time. Very versatile, in that it can be called from any web-based application, external webhook events, even from another Logic App with a request and response action.

HTTP Webhook Event-based trigger that does not rely on polling for new items. Register subscribe and unsubscribe methods with a callback URL used to trigger the logic app. Whenever your external app or service makes an HTTP POST to the callback URL, the logic app fires, and includes any data passed into the request.

API Connection Webhook The API connection trigger is similar to the HTTP trigger in its basic functionality. However, the parameters for identifying the action are slightly different.
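The subscribe and unsubscribe contract behind webhook triggers can be pictured with a small simulation. The Python sketch below models the external service side only: it stores callback URLs, forgets them on unsubscribe, and posts a payload to each one when an event occurs. EventSource, fake_post, and the callback URL are hypothetical names invented for the example, and the HTTP POST is replaced by a function that records the call so the sketch runs without a network.

```python
from typing import Callable, List, Tuple


class EventSource:
    """A hypothetical external service that supports webhook subscriptions."""

    def __init__(self, post: Callable[[str, dict], None]) -> None:
        self._post = post  # function that performs the HTTP POST
        self._callbacks: List[str] = []

    def subscribe(self, callback_url: str) -> None:
        """Remember a callback URL so later events can be delivered to it."""
        if callback_url not in self._callbacks:
            self._callbacks.append(callback_url)

    def unsubscribe(self, callback_url: str) -> None:
        """Forget a callback URL so no further events are delivered to it."""
        if callback_url in self._callbacks:
            self._callbacks.remove(callback_url)

    def raise_event(self, payload: dict) -> int:
        """POST the payload to every registered callback; return how many were notified."""
        for url in self._callbacks:
            self._post(url, payload)
        return len(self._callbacks)


# Stand-in for the network: record each POST instead of sending it.
received: List[Tuple[str, dict]] = []


def fake_post(url: str, payload: dict) -> None:
    received.append((url, payload))


callback = "https://logic-app.example/callback?sig=placeholder"  # hypothetical URL
source = EventSource(fake_post)
source.subscribe(callback)
first = source.raise_event({"orderId": 42})
source.unsubscribe(callback)
second = source.raise_event({"orderId": 43})
print(first, second, received)
```

Because the service pushes events to the callback URL, the logic app does no polling, which is the distinction the HTTP Webhook description draws.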

Create an HTTP endpoint for your logic app

To create an HTTP endpoint to receive incoming requests for a Request Trigger, follow these steps:

Open the Logic App Designer on the logic app to which you will be adding an HTTP endpoint.

Select Blank Logic App under Templates.

Search for “http request” in the trigger filter, and then select Request (When An HTTP Request Is Received) from the list of results.

You can optionally enter a JSON schema for the payload, or data, that you expect to be sent to the trigger. This schema can be added to the Request Body JSON Schema field. To generate the schema, select the Use Sample Payload To Generate Schema link at the bottom of the form. This displays a dialog where you can type in or paste a sample JSON payload. This generates the schema when you click Done. The advantage to having a schema defined is that the designer will use the schema to generate tokens that your logic app can use to consume, parse, and pass data from the trigger through your workflow (Figure 4-71).

Click Save in the Logic Apps Designer menu.

After saving, the HTTP POST URL is generated on the Request trigger (Figure 4-72). This is the URL your app or service uses to trigger your logic app. The URL contains a Shared Access Signature (SAS) key used to authenticate the incoming requests.
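Calling the generated endpoint from another app amounts to an HTTP POST with a JSON body. The Python sketch below builds such a request with the standard library and stops short of sending it. The URL is a placeholder rather than a real endpoint, the payload fields are invented, and build_trigger_request is a hypothetical helper name.

```python
import json
import urllib.request


def build_trigger_request(callback_url: str, payload: dict) -> urllib.request.Request:
    """Build (but do not send) the HTTP POST that starts a Request-triggered logic app."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        callback_url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Placeholder URL: copy the real one, including its signature, from the trigger after saving.
url = "https://example.invalid/logic-app-trigger?sig=placeholder"
request = build_trigger_request(url, {"orderId": 42, "customer": "Contoso"})
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))

# To actually invoke the workflow, send the request:
# with urllib.request.urlopen(request) as response:
#     print(response.status)
```

Because the signature in the query string is what authenticates the caller, the full URL should be stored and handled like any other secret.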

Create custom and long-running actions

You can create your own APIs that provide custom actions and triggers. Because these are web-based APIs that use REST HTTP endpoints, you can build them in any language framework like .NET, Node.js, or Java. You can also host your APIs on Azure App Service as either web apps or API apps. However, API apps are preferred because they will make it easier to build, host, and consume your APIs used by Logic Apps. Another recommendation is to provide an OpenAPI (previously Swagger) specification to describe your RESTful API endpoints, their operations, and parameters. This makes it much easier to reference your custom API from a logic app workflow because all of the endpoints are selectable within the designer. You can use libraries like Swashbuckle to automatically generate the OpenAPI (Swagger) file for you.

If your custom API has long-running tasks to perform, it is more than likely that your logic app will time out waiting for the operation to complete. This is because Logic Apps will only wait around two minutes before timing out a request. If your long-running task takes several minutes, or hours to complete, you need to implement a REST-based async pattern on your API. These types of patterns are already fully supported natively by the Logic Apps workflow engine, so you don’t need to worry about the implementation there.

Long-running action patterns

Your custom API operations serve as endpoints for the actions in your Logic App’s workflow. At a basic level, the endpoints accept an HTTP request and return an HTTP response within the Logic App’s request timeout limit. When your custom action executes a long-running operation that will exceed this timeout, you can follow either the asynchronous polling pattern or the asynchronous webhook pattern. These patterns allow your logic app to wait for these long-running tasks to finish.

Asynchronous polling

The way the asynchronous polling pattern works is as follows:

When your API receives the initial request to start work, it starts a new thread with the long-running task, and immediately returns an HTTP Response “202 Accepted” with a location header. This immediate response prevents the request from timing out, and causes the workflow engine to start polling for changes.

The location header points to the URL that the Logic Apps engine uses to check the status of the long-running job. By default, the engine checks every 20 seconds, but you can also add a “Retry-After” header to specify the number of seconds until the next poll.

After the allotted time (20 seconds), the engine polls the URL on the location header. If the long-running job is still going, you should return another “202 Accepted” with a location header. If the job has completed, you should return a “200 OK” along with any relevant data. This is what the Logic Apps engine will continue the workflow with.
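The three steps above can be sketched with a small in-memory simulation. This is not a real web API: the `start_job`, `check_status`, and `poll_until_complete` functions, the `/jobs/{id}/status` path, and the countdown that stands in for the background task are all assumptions made for illustration. Each function returns a `(status, headers, body)` tuple in place of an HTTP response.

```python
import itertools

# In-memory job table: job_id -> status checks remaining before the job is "done".
# A real API would track a background task here instead of a countdown.
_jobs = {}
_ids = itertools.count(1)


def start_job(polls_until_done: int = 2):
    """Initial request: start the work and answer 202 Accepted immediately."""
    job_id = next(_ids)
    _jobs[job_id] = polls_until_done
    headers = {"Location": f"/jobs/{job_id}/status", "Retry-After": "5"}
    return 202, headers, None


def check_status(job_id: int):
    """Status endpoint that the Location header points to."""
    if _jobs[job_id] > 0:
        _jobs[job_id] -= 1  # still running: answer 202 with a location header again
        headers = {"Location": f"/jobs/{job_id}/status", "Retry-After": "5"}
        return 202, headers, None
    return 200, {}, {"jobId": job_id, "result": "done"}


def poll_until_complete(job_id: int, max_polls: int = 10):
    """Stand-in for the workflow engine: re-check the status until it is 200."""
    for attempt in range(1, max_polls + 1):
        status, headers, body = check_status(job_id)
        if status == 200:
            return attempt, body
        # A real caller would wait headers["Retry-After"] seconds before retrying.
    raise TimeoutError("job did not finish within max_polls")


status, headers, _ = start_job(polls_until_done=2)
job_id = int(headers["Location"].split("/")[2])
attempts, body = poll_until_complete(job_id)
```

In this run the first two status checks return 202 and the third returns 200, so the simulated engine continues the workflow with the body of the third response.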

Asynchronous Webhooks

The asynchronous webhook pattern works by creating two endpoints on your API controller:

Subscribe The Logic Apps engine calls the subscribe endpoint defined in the workflow action for your API. Included in this call is a callback URL created by the logic app that your API stores for when work is complete. When your long-running task is complete, your API calls back with an HTTP POST method to the URL, along with any returned content and headers, as input to the logic app.

Unsubscribe This endpoint is called any time the logic app run is cancelled. When your API receives a request to this endpoint, it should unregister the callback URL and stop any running processes.

Monitor Logic Apps

When you create a logic app, you can use out-of-the-box tools within Logic Apps to monitor your app and detect any issues it may have, such as failures. You can view runs and trigger history, overall status, and performance.

If you want real-time event monitoring, as well as richer debugging, you can enable diagnostics on your logic app and send events to OMS with Log Analytics, or to other services, such as Azure Storage and Event Hubs.

Select Metrics (Figure 4-73) under Monitoring in the left-hand menu of your logic app to view performance information and the overall state, such as how many actions succeeded or failed, over the specified time period. It will display an interactive chart based on the selected metrics.

Select Alert Rules under Monitoring to create alerts based on metrics (such as any time failures occur over a one-hour period), activity logs (with categories such as security, service health, and autoscale), and near-real-time metrics. Alerts draw on the data captured by your logic app’s metrics, over time periods spanning from one minute to 24 hours. Alerts can be emailed to one or more recipients, routed to a webhook, or used to run a logic app.

The overview blade of your logic app displays both Runs History and Trigger History (Figure 4-74). This view lets you see at a glance how often the app was called, and whether those operations succeeded. Select a run history to see its details, including any data it received.

Skill 4.4: Develop Azure App Service Mobile Apps

Mobile Apps in Azure App Service provides a platform for developing mobile applications, combining back-end Azure-hosted services with device-side development frameworks that streamline integration with those back-end services.

Mobile Apps enables the development of applications across a variety of platforms, targeting native iOS, Android, and Windows apps, as well as cross-platform Xamarin (Android, Forms, and iOS) and Cordova apps. Mobile Apps includes a comprehensive set of open source SDKs for each of these platforms which, together with the services provided in Azure, provide functionality for:

Authentication and authorization Enables integration with identity providers including Azure Active Directory, Facebook, Google, Twitter, and Microsoft Account.

Data access Enables access to tabular data stored in an Azure SQL Database or an on-premises SQL Server (via a hybrid connection) via an automatically provisioned and mobile-friendly OData v3 data source.

Offline sync Enables reads as well as create, update, and delete activity to happen against the supporting tables even when the device is not connected to a network, and coordinates the synchronization of data between local and cloud stores as dictated by the application logic (e.g., network connectivity is detected or the user presses a “Sync” button).

Push notifications Enables the sending of push notifications to app users via Azure Notification Hubs, which in turn supports the sending of notifications across the most popular push notification services for Apple (APNS), Google (GCM), Windows (WNS), Windows Phone (MPNS), Amazon (ADM) and Baidu (Android China) devices.
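To make the data access feature more concrete, the following sketch builds an OData-style query URL against a table endpoint. The host name, the `TodoItem` table, the `complete` column, and the `build_table_query` helper are hypothetical. The `/tables/<name>` route and the `ZUMO-API-VERSION` header reflect how the Mobile Apps backend is commonly addressed over REST, but treat both as assumptions to verify against your own backend; the client SDKs normally handle these details for you.

```python
from typing import Optional
from urllib.parse import quote, urlencode

# Header assumed to be expected on direct REST calls to a Mobile Apps backend.
DEFAULT_HEADERS = {"ZUMO-API-VERSION": "2.0.0", "Accept": "application/json"}


def build_table_query(app_host: str, table: str,
                      filter_expr: Optional[str] = None,
                      top: Optional[int] = None) -> str:
    """Build the URL for querying a table with OData $filter and $top options."""
    params = {}
    if filter_expr:
        params["$filter"] = filter_expr
    if top is not None:
        params["$top"] = str(top)
    url = f"https://{app_host}/tables/{table}"
    if params:
        # Keep "$" literal in the option names and encode spaces as %20.
        url += "?" + urlencode(params, quote_via=quote, safe="$")
    return url


url = build_table_query("myapp.example.invalid", "TodoItem",
                        filter_expr="complete eq false", top=10)
```

The resulting URL asks for at most 10 rows whose `complete` column is false, which is the kind of query a to-do list client would issue when loading its initial view.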

Developing a mobile app with Mobile Apps involves the following steps:

Identify the device platforms you want your app to target.

Prepare your development environment.

Deploy an Azure Mobile App Service instance.

Configure the Azure Mobile App Service.

Configure your client application.

Augment your project with authentication/authorization, offline data sync, or push notification capabilities.

The sections that follow cover each of these steps in greater detail.

Identify the target device platforms

The first decision you make when creating a mobile app is choosing which device platforms to support. You can choose from native Android, Cordova, native iOS (Objective-C or Swift), Windows (C#), Xamarin Android, Xamarin Forms, and Xamarin iOS.

Because each device platform brings with it a set of requirements, getting started can be an almost overwhelming setup experience. One way to approach this is to start with one device platform so that you can complete the end-to-end process, and then layer on additional platforms after you have laid the foundation for the first. Additionally, if you choose Xamarin or Cordova as your starting platform, you gain the advantage that these platforms can themselves target multiple device platforms, allowing you to write portable code libraries once and share them among the projects that are specific to each target device.

Prepare your development environment

The requirements for your development environment vary depending on the device platforms you wish to target. The prerequisites include the supported operating system (e.g., macOS, Windows), the integrated development environment (e.g., Android Studio, Visual Studio for Windows, Visual Studio for Mac, or Xcode), and the devices (e.g., the emulators/simulators or physical devices used for testing your app from the development environment of your choice).

Table 4-4 summarizes key requirements by device platform.

TABLE 4-4 Requirements for each target platform

| Target Platform | Requirements |
|---|---|
| Android | OS: macOS or Windows; IDE: Android Studio; Devices: Android emulator and devices |
| Cordova | OS: macOS and Windows; IDE: Visual Studio for Windows; Devices: Android, iOS*, Windows emulators and devices |
| iOS | OS: macOS; IDE: Xcode; Devices: iOS simulator and devices |
| Windows | OS: Windows; IDE: Visual Studio for Windows; Devices: Windows desktop and phone |
| Xamarin.Android | OS: macOS or Windows; IDE: Visual Studio for Mac or Windows; Devices: Android emulators and devices |
| Xamarin.Forms | OS: macOS and Windows; IDE: Visual Studio for Mac or Windows; Devices: Android, iOS*, Windows emulators and devices |
| Xamarin.iOS | OS: macOS; IDE: Visual Studio for Mac or Windows; Devices: iOS* simulator and devices |

* Running the iOS simulator or connecting to an iOS device requires either a computer running macOS that is reachable across the network from the Windows development computer, or running the indicated IDE on macOS.

Deploy an Azure Mobile App Service

With the aforementioned decisions in place, you are now ready to deploy an Azure Mobile App Service instance to provide the backend services to your app. Follow these steps:

In the Azure Portal, select New, search for Mobile App, and select the Mobile App entry.

Select Create.

Provide a unique name for your Mobile App.

Select an Azure subscription and Resource Group.

Select an existing App Service Plan or create a new one.

Select Create to deploy the mobile app.

Configure the mobile app

Once you have deployed your mobile app, you need to configure where it will store its tabular data and the language (your options are C# or Node.js) in which the backend APIs are implemented (which affects the programming language you use when customizing the backend behavior). The following steps walk you through preparing the quick start solution, which you can use as a starting point for your mobile app. Follow these steps:

In the Azure Portal, navigate to the blade for your mobile app.

From the menu, under the Deployment heading, select Quick Start.

On the General listing, select the platform you wish to target first.

On the Quick Start blade, select the button underneath the header 1 Connect A Database that reads “You Will Need A Database In Order To Complete This Quickstart. Click Here To Create One.”

On the Data Connections blade, select + Add.

On the Add Data Connection blade, leave the Type drop-down at SQL Database.

Select SQL Database - Configure Required Settings.

On the Database blade, select an existing Azure SQL Database, or create a new database (and optionally a new SQL Database Server).

Back on the Add Data Connection blade, select Connection String.

Provide the name to use for referring to this connection string in configuration.

Select OK.

Select OK once more to add the data connection (and create the SQL Database if so configured).

In a few minutes (when creating a new SQL Database), the new entry appears in the Data Connections blade. When it does, close the Data Connections blade.

On the Quick Start blade, underneath the header, Create A Table API, choose Node.js and select the check box I Acknowledge That This Will Overwrite All Site Contents. Then select the Create TodoItem table button that is enabled. If you choose to use C#, note that you will have to download the zip provided, extract it, open it in Visual Studio, compile and then publish the App Service to your Mobile App instance. This is performed in the same way as you deploy Web Apps as described previously.

Leave the Quick Start blade open and continue to the next section.

Configure your client application

Now that you have a basic mobile app backend deployed, you are ready to create the application that will run on your targeted devices. You can create a new application from a generated quick start project or connect an existing application:

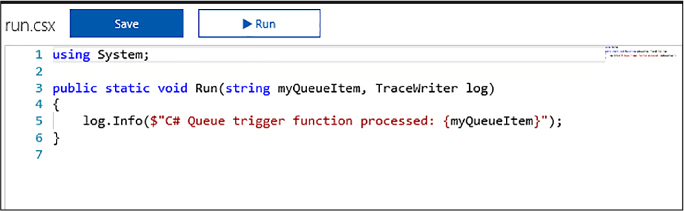

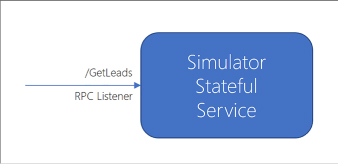

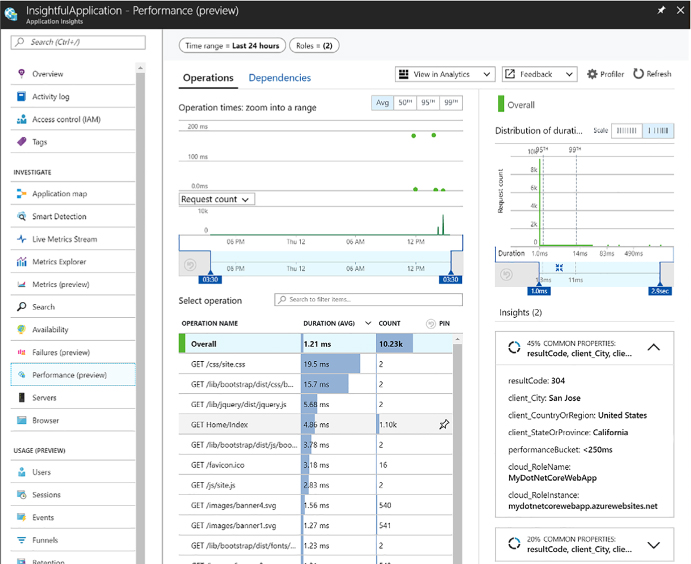

From the Quick Start blade of your mobile app, underneath the header Configure Your Client Application, set the toggle to Create A New App if you want to create a solution, or to Connect An Existing App if you already have a solution built and just need to connect it to the mobile app.