17.2.4. PN Triangles

Given an input triangle mesh with normals at each vertex, the goal of the PN triangle scheme by Vlachos et al. [1819] is to construct a better-looking surface compared to using just triangles.

The letters “PN” are short for “point and normal,” since that is all the data you need to generate the surfaces.

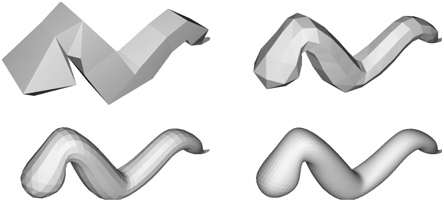

They are also called N-patches. This scheme attempts to improve the triangle mesh’s shading and silhouettes by creating a curved surface to replace each triangle. Tessellation hardware is able to make each surface on the fly because the tessellation is generated from each triangle’s points and normals, with no neighbor information needed. See Figure 17.27 for an example. The algorithm presented here builds upon work by van Overveld and Wyvill [1341].

Figure 17.27. The columns show different levels of detail of the same model. The original triangle data, consisting of 414 triangles, is shown on the left. The middle model has 3,726 triangles, while the right has 20,286 triangles, all generated with the presented algorithm. Note how the silhouette and the shading improve. The bottom row shows the models in wireframe, which reveals that each original triangle generates the same amount of subtriangles. (Model courtesy of id Software. Images from ATI Technologies Inc. demo.)

Figure 17.28. How the Bézier point p210

Assume that we have a triangle with vertices p300

To shorten notation, w=1-u-v

(17.43)

p(u,v)=∑i+j+k=3B3ijk(u,v)pijk=u3p300+v3p030+w3p003+3u2vp210+3u2wp201+3uv2p120+3v2wp021+3vw2p012+3uw2p102+6uvwp111.See Figure 17.22. To ensure C0

(assuming that normals are shared between adjacent triangles).

Say that we want to compute p210

Assuming normalized normals, the point p210

(17.44)

p210=13(2p300+p030-(n200·(p030-p300))n200).The other border control points can be computed similarly, so it only remains to compute the interior control point, p111

and this choice follows a quadratic polynomial [457,458]:

(17.45)

p111=14(p210+p120+p102+p201+p021+p012)-16(p300+p030+p003).Instead of using Equation 17.42 to compute the two tangents on the surface, and subsequently the normal, Vlachos et al. [1819] choose to interpolate the normal using a quadratic scheme, as shown here:

(17.46)

n(u,v)=∑i+j+k=2B2ijk(u,v)nijk=u2n200+v2n020+w2n002+2(uvn110+uwn101+vwn011).This can be thought of as a Bézier triangle of degree two, where the control points are six different normals. In Equation 17.46, the choice of the degree, i.e., quadratic, is quite natural, since the derivatives are of one degree lower than the actual Bézier triangle, and because linear interpolation of the normals cannot describe an inflection. See Figure 17.29.

Figure 17.29. This figure illustrates why quadratic interpolation of normals is needed, and why linear interpolation is not sufficient. The left column shows what happens when linear interpolation of normals is used. This works fine when the normals describe a convex surface (top), but breaks down when the surface has an inflection (bottom). The right column illustrates quadratic interpolation. (Illustration after van Overveld and Wyvill [1342].)

To be able to use Equation 17.46, the normal control points n110

Also, note that each normal should be normalized. Mathematically, the unnormalized version of n110

(17.47)

n′110=n200+n020-2(p030-p300)·(n200+n020)(p030-p300)·(p030-p300)(p030-p300).Originally, van Overveld and Wyvill used a factor of 3 / 2 instead of the 2 in this equation. Which value is best is hard to judge from looking at images, but using 2 gives the nice interpretation of a true reflection in the plane.

Figure 17.30. Construction of n110

At this point, all Bézier points of the cubic Bézier triangle and all the normal vectors for quadratic interpolation have been computed. It only remains to create triangles on the Bézier triangle so that they can be rendered. Advantages of this approach are that the surface gets a better silhouette and shape for relatively lower cost.

One way to specify levels of detail is the following. The original triangle data are considered LOD 0. The LOD number then increases with the number of newly introduced vertices on a triangle edge. So, LOD 1 introduces one new vertex per edge, and so creates four subtriangles on the Bézier triangle, and LOD 2 introduces two new vertices per edge, generating nine subtriangles. In general, LOD n generates (n+1)2

One problem with PN triangles is that creases are hard to control, and often one needs to insert extra triangles near a desired crease. The continuity between Bézier triangles is only C0

17.2.5. Phong Tessellation

Boubekeur and Alexa [182] presented a surface construction called Phong tessellation, which has many similarities with PN triangles, but is faster to evaluate and simpler to implement. Call the vertices of a base triangle p0

(17.48)

p(u,v)=(u,v,1-u-v)·(p0,p1,p2).In Phong shading, the normals are interpolated over the flat triangle, also using the equation above, but with the points replaced by normals. Phong tessellation attempts to create a geometric version of Phong shading normal interpolation using repeated interpolation, which results in a Bézier triangle. For this discussion, we will refer to Figure 17.31.

Figure 17.31. Phong tessellation construction illustrated using curves instead of surfaces, which means that p(u)

The first step is to create a function that projects a point q

(17.49)

ti(q)=q-((q-pi)·ni)ni.Instead of using the triangle vertices to perform linear interpolation (Equation 17.48), linear interpolation is done using the function ti

(17.50)

p∗(u,v)=(u,v,1-u-v)·(t0(u,v),t1(u,v),t2(u,v)).To add some flexibility, a shape factor α

(17.51)

p∗α(u,v)=(1-α)p(u,v)+αp∗(u,v),where α=0.75

Figure 17.32. Phong tessellation applied to the monster frog. From left to right: base mesh with flat shading, base mesh with Phong shading, and finally, Phong tessellation applied to the base mesh. Note the improved silhouettes. In this example, we used α=0.6

17.2.6. B-Spline Surfaces

Section 17.1.6 briefly introduced B-spline curves, and here we will do the same for introducing B-spline surfaces. Equation 17.24 on page 732 can be generalized to B-spline patches as

(17.52)

sn(u,v)=∑k∑lck,lβn(u-k)βn(v-l),which is fairly similar to the Bézier patch formula (Equation 17.32). Note that sn(u,v)

Figure 17.33. The setting for a bicubic B-spline patch, which has 4×4

For a bicubic B-spline patch, the β3(t)

17.3 Implicit Surfaces

To this point, only parametric curves and surfaces have been discussed. Implicit surfaces form another useful class for representing models. Instead of using some parameters, say u and v, to explicitly describe a point on the surface, the following form, called the implicit function, is used:

(17.53)

f(x,y,z)=f(p)=0.This is interpreted as follows: A point p

Some examples of implicit surfaces, located at the origin, are

(17.54)

fs(p,r)=||p||-r,sphere;fxz(p)=py,planeinxz;frb(p,d,r)=||max(|p|-d,0)||-r,roundedbox.These deserve some explanation. The sphere is simply the distance from p

To get a non-rounded box, simply set r=0

Figure 17.34. Left: a non-rounded box, whose signed distance function is ||max(|p|-d,0)||

The normal of an implicit surface is described by the partial derivatives, called the gradient and denoted ∇f

(17.55)

∇f(x,y,z)=(∂f∂x,∂f∂y,∂f∂z).To be able to evaluate it exactly, f in Equation 17.55 must be differentiable, and thus also continuous. In practice, one often use a numerical technique called central differences, which samples using the scene function f [495]:

(17.56)

∇fx≈f(p+ϵex)-f(p-ϵex),and similarly for ∇fy

To build a scene with the primitives in Equation 17.54, the union operator, ∪

Figure 17.35. Left: pairs of spheres blended with different increasing (from left to right) blend radii and with a ground floor composed of repeated rounded boxes. Right: three spheres blended together.

Blending of implicit surfaces is a nice feature that can be used in what is often referred to as blobby modeling [161], soft objects, or metaballs [67,558]. See Figure 17.35 for some examples. The basic idea is to use several simple primitives, such as spheres, ellipsoids, or whatever is available, and blend these smoothly. Each object can be seen as an atom, and after blending the molecule of the atoms is obtained. Blending can be done in many different ways. An often-used method [1189,1450] to blend two distances, d1

(17.57)

h=min(max(0.5+0.5(d2-d1)/rb,0.0),1.0),d=(1-h)d2+hd1+rbh(1-h),where d is the blended distance. While this function only blends the shortest distances to two objects, the function can be use repeatedly to blend more objects (see the right part of Figure 17.35).

Figure 17.36. Ray marching with signed distance fields. The dashed circles indicate the distance to the closest surface from their centers. A position can advance along the ray to the border of the previous position’s circle.

To visualize a set of implicit functions, the usual method used is ray marching [673]. Once you can ray-march through a scene, it is also possible to generate shadows, reflections, ambient occlusion, and other effects. Ray marching within a signed distance field is illustrated in Figure 17.36. At the first point, p

Figure 17.37. Rain forest (left) and a snail (right) created procedurally using signed distance functions and ray marching. The trees were generated using ellipsoids displaced with procedural noise. (Images generated with Shadertoy using programs from I nigo Quilez.)

Every implicit surface can also be turned into a surface consisting of triangles. There are several algorithms available for performing this operation [67,558]. One well-known example is the marching cubes algorithm, described in Section 13.10. Code for performing polygonalization using algorithms by Wyvill and Bloomenthal is available on the web [171], and de Araújo et al. [67] present a survey on recent techniques for polygonalization of implicit surfaces. Tatarchuk and Shopf [1744] describe a technique they call marching tetrahedra, in which the GPU can be used to find isosurfaces in a three-dimensional data set. Figure on page 48 shows an example of isosurface extraction using the geometry shader. Xiao et al. [1936] present a fluid simulation system in which the GPU computes the locations of 100k particles and uses them to display the isosurface, all at interactive rates.

17.4 Subdivision Curves

Subdivision techniques are used to create smooth curves and surfaces. One reason why they are used in modeling is that they bridge the gap between discrete surfaces (triangle meshes) and continuous surfaces (e.g., a collection of Bézier patches), and can therefore be used for level of detail techniques (Section ). Here, we will first describe how subdivision curves work, and then discuss the more popular subdivision surface schemes.

Subdivision curves are best explained by an example that uses corner cutting. See Figure 17.38. The corners of the leftmost polygon are cut off, creating a new polygon with twice as many vertices. Then the corners of this new polygon are cut off, and so on to infinity (or, more practically, until we cannot see any difference). The resulting curve, called the limit curve, is smooth since all corners are cut off. This process can also be thought of as a low-pass filter since all sharp corners (high frequency) are removed. This process is often written as P0→P1→P2⋯→P∞

This subdivision process can be done in many different ways, and each is characterized by a subdivision scheme. The one shown in Figure 17.38 is called Chaikin’s scheme [246] and works as follows. Assume the n vertices of a polygon are P0={p00,⋯,p0n-1}

Figure 17.38. Chaikin’s subdivision scheme in action. The initial control polygon P0

of subdivision. Chaikin’s scheme creates two new vertices between each subsequent pair of vertices, say pki

(17.58)

pk+12i=34pki+14pki+1andpk+12i+1=14pki+34pki+1.As can be seen, the superscript changes from k to k+1

There are two different classes of subdivision schemes, namely approximating and interpolating. Chaikin’s scheme is approximating, as the limit curve, in general, does not lie on the vertices of the initial polygon. This is because the vertices are discarded (or updated, for some schemes). In contrast, an interpolating scheme keeps all the points from the previous subdivision step, and so the limit curve P∞

(17.59)

pk+12i=pki,[3pt]pk+12i+1=(12+w)(pki+pki+1)-w(pki-1+pki+2).

Figure 17.39. The 4-point subdivision scheme in action. This is an interpolating scheme as the curve goes through the initial points, and in general curve Pi+1

The first line in Equation 17.59 simply means that we keep the points from the previous step without changing them (i.e., interpolating), and the second line is for creating a new point in between pki and pki+1 . The weight w is called a tension parameter. When w=0 , linear interpolation is the result, but when w=1/16 , we get the kind of behavior shown in Figure 17.39. It can be shown [402] that the resulting curve is C1 when 0<w<1/8 . For open polylines we run into problems at the endpoints because we need two points on both sides of the new point, and we only have one. This can be solved if the point next to the endpoint is reflected across the endpoint. So, for the start of the polyline, p1 is reflected across p0 to obtain p-1 . This point is then used in the subdivision process. The creation of p-1 is shown in Figure 17.40.

Figure 17.40. The creation of a reflection point, p-1 , for open polylines. The reflection point is computed as: p-1=p0-(p1-p0)=2p0-p1 .

Another approximating scheme uses the following subdivision rules:

(17.60)

pk+12i=34pki+18(pki-1+pki+1),[3pt]pk+12i+1=12(pki+pki+1).The first line updates the existing points, and the second computes the midpoint on the line segment between two neighboring points. This scheme generates a cubic B-spline curve (Section 17.1.6). Consult the SIGGRAPH course on subdivision [1977], the Killer B’s book [111], Warren and Weimer’s subdivision book [1847], or Farin’s CAGD book [458] for more about these curves.

Given a point p and its neighboring points, it is possible to directly “push” that point to the limit curve, i.e., determine what the coordinates of p would be on P∞ . This is also possible for tangents. See, for example, Joy’s online introduction to this topic [843].

Many of the concepts for subdivision curves also apply to subdivision surfaces, which are presented next.

17.5 Subdivision Surfaces

Subdivision surfaces are a powerful paradigm for defining smooth, continuous, crackless surfaces from meshes with arbitrary topology. As with all other surfaces in this chapter, subdivision surfaces also provide infinite level of detail. That is, you can generate as many triangles or polygons as you wish, and the original surface representation is compact. An example of a surface being subdivided is shown in Figure 17.41. Another advantage is that subdivision rules are simple and easily implemented. A disadvantage is that the analysis of surface continuity often is mathematically involved. However, this sort of analysis is often of interest only to those who wish to create new subdivision schemes, and is out of the scope of this book. For such details, consult Warren and Weimer’s book [1847] and the SIGGRAPH course on subdivision [1977].

In general, the subdivision of surfaces (and curves) can be thought of as a two-phase process [915]. Starting with a polygonal mesh, called the control mesh or the control cage, the first phase, called the refinement phase, creates new vertices and reconnects to create new, smaller triangles. The second, called the smoothing phase, typically computes new positions for some or all vertices in the mesh. This is illustrated in Figure 17.42. It is the details of these two phases that characterize a subdivision scheme. In the first phase, a polygon can be split in different ways, and in the second phase, the choice of subdivision rules give different characteristics such as the level of continuity, and whether the surface is approximating or interpolating, which are properties described in Section 17.4.

A subdivision scheme can be characterized by being stationary or non-stationary, by being uniform or nonuniform, and whether it is triangle-based or polygon-based. A stationary scheme uses the same subdivision rules at every subdivision step, while a nonstationary may change the rules depending on which step currently is being processed. The schemes treated below are all stationary. A uniform scheme uses the same rules for every vertex or edge, while a nonuniform scheme may use different rules for different vertices or edges. As an example, a different set of rules is often used for edges that are on the boundaries of a surface. A triangle-based scheme only operates on triangles, and thus only generates triangles, while a polygon-based scheme operates on arbitrary polygons.

Figure 17.41. The top left image shows the control mesh, i.e., that original mesh, which is the only geometrical data that describes the resulting subdivision surface. The following images are subdivided one, two, and three times. As can be seen, more and more polygons are generated and the surface gets smoother and smoother. The scheme used here is the Catmull-Clark scheme, described in Section 17.5.2.

Figure 17.42. Subdivision as refinement and smoothing. The refinement phase creates new vertices and reconnects to create new triangles, and the smoothing phase computes new positions for the vertices.

Figure 17.43. The connectivity of two subdivision steps for schemes such as Loop’s method. Each triangle generates four new triangles.

Several different subdivision schemes are presented next. Following these, two techniques are presented that extend the use of subdivision surfaces, along with methods for subdividing normals, texture coordinates, and colors. Finally, some practical algorithms for subdivision and rendering are presented.

17.5.1. Loop Subdivision

Loop’s method [767,1067] was the first subdivision scheme for triangles. It is like the last scheme in Section 17.4 in that it is approximating, and that it updates each existing vertex and creates a new vertex for each edge. The connectivity for this scheme is shown in Figure 17.43. As can be seen, each triangle is subdivided into four new triangles, so after n subdivision steps, a triangle has been subdivided into 4n triangles.

First, let us focus on an existing vertex pk , where k is the number of subdivision steps. This means that p0 is the vertex of the control mesh.

After one subdivision step, p0 turns into p1 . In general, p0→p1→p2→⋯→p∞ , where p∞ is the limit point. If pk has n neighboring vertices, pki , i∈{0,1,⋯,n-1} , then we say that the valence of pk is n. See Figure 17.44 for the notation described above. Also, a vertex that has valence 6 is called regular or ordinary. Otherwise it is called irregular or extraordinary.

Below, the subdivision rules for Loop’s scheme are given, where the first formula is the rule for updating an existing vertex pk into pk+1 , and the second formula is for creating a new vertex, pk+1i , between pk and each of the pki . Again, n is the valence of pk :

(17.61)

pk+1=(1-nβ)pk+β(pk0+⋯+pkn-1),pk+1i=3pk+3pki+pki-1+pki+18,i=0⋯n-1.

Figure 17.44. The notation used for Loop’s subdivision scheme. The left neighborhood is subdivided into the neighborhood to the right. The center point pk is updated and replaced by pk+1 , and for each edge between pk and pki , a new point is created ( pk+1i , i∈1,⋯,n ).

Note that we assume that the indices are computed modulo n, so that if i=n-1 , then for i+1 , we use index 0, and likewise when i=0 , then for i-1 , we use index n-1 . These subdivision rules can easily be visualized as masks, also called stencils. See Figure 17.45. The major use of these is that they communicate almost an entire subdivision scheme using only a simple illustration. Note that the weights sum to one for both masks. This is a characteristic that is true for all subdivision schemes, and the rationale for this is that a new point should lie in the neighborhood of the weighted points.

In Equation 17.61, the constant β is actually a function of n, and is given by

(17.62)

β(n)=1n(58-(3+2cos(2π/n))264).Loop’s suggestion [1067] for the β function gives a surface of C2 continuity at every regular vertex, and C1 elsewhere [1976], that is, at all irregular vertices. As only regular vertices are created during subdivision, the surface is only C1 at the places where we had irregular vertices in the control mesh. See Figure 17.46 for an example of a mesh subdivided with Loop’s scheme.

Figure 17.45. The masks for Loop’s subdivision scheme (black circles indicate which vertex is updated/generated). A mask shows the weights for each involved vertex. For example, when updating an existing vertex, the weight 1-nβ is used for the existing vertex, and the weight β is used for all the neighboring vertices, called the 1-ring.

Figure 17.46. A worm subdivided three times with Loop’s subdivision scheme.

A variant of Equation 17.62, which avoids trigonometric functions, is given by Warren and Weimer [1847]:

(17.63)

β(n)=3n(n+2).For regular valences, this gives a C2 surface, and C1 elsewhere. The resulting surface is hard to distinguish from a regular Loop surface. For a mesh that is not closed, we cannot use the presented subdivision rules. Instead, special rules have to be used for such boundaries. For Loop’s scheme, the reflection rules of Equation 17.60 can be used. This is also treated in Section 17.5.3.

The surface after infinitely many subdivision steps is called the limit surface. Limit surface points and limit tangents can be computed using closed form expressions. The limit position of a vertex is computed [767,1977] using the formula on the first row in Equation 17.61, by replacing β(n) with

(17.64)

γ(n)=1n+38β(n).Two limit tangents for a vertex pk can be computed by weighting the immediate neighboring vertices, called the 1-ring or 1-neighborhood, as shown below [767,1067]:

(17.65)

tu=∑n-1i=0cos(2πi/n)pki,tv=∑n-1i=0sin(2πi/n)pki.The normal is then n=tu×tv . Note that this often is less expensive [1977] than the methods described in Section 16.3, which need to compute the normals of the neighboring triangles. More importantly, this gives the exact normal at the point.

A major advantage of approximating subdivision schemes is that the resulting surface tends to get fair. Fairness is, loosely speaking, related to how smoothly a curve or surface bends [1239]. A higher degree of fairness implies a smoother curve or surface. Another advantage is that approximating schemes converge faster than interpolating schemes. However, this means that the shapes often shrink. This is most notable for small, convex meshes, such as the tetrahedron shown in Figure 17.47.

Figure 17.47. A tetrahedron is subdivided five times with Loop’s, the √3 , and the modified butterfly (MB) scheme [1975]. Loop’s and the √3 -scheme [915] are both approximating, while MB is interpolating, where the latter means that the initial vertices are located on the final surface. We cover only approximating schemes in this book due to their popularity in games and offline rendering.

One way to decrease this effect is to use more vertices in the control mesh, i.e., care must be taken while modeling it. Maillot and Stam present a framework for combining subdivision schemes so that the shrinking can be controlled [1106]. A characteristic that can be used to great advantage at times is that a Loop surface is contained inside the convex hull of the original control points [1976].

The Loop subdivision scheme generates a generalized three-directional quartic box spline. 1 So, for a mesh consisting only of regular vertices, we could actually describe the surface as a type of spline surface. However, this description is not possible for irregular settings. Being able to generate smooth surfaces from any mesh of vertices is one of the great strengths of subdivision schemes. See also Sections 17.5.3 and 17.5.4 for different extensions to subdivision surfaces that use Loop’s scheme.

17.5.2. Catmull-Clark Subdivision

The two most famous subdivision schemes that can handle polygonal meshes (rather than just triangles) are Catmull-Clark [239] and Doo-Sabin [370]. 2 Here, we will only briefly present the former. Catmull-Clark surfaces have been used in Pixar’s short film Geri’s Game [347], Toy Story 2, and in all subsequent feature films from Pixar. This subdivision scheme is also commonly used for making models for games, and is probably the most popular one. As pointed out by DeRose et al. [347], Catmull-Clark surfaces tend to generate more symmetrical surfaces. For example, an oblong box results in a symmetrical ellipsoid-like surface, which agrees with intuition. In contrast, a triangular-based subdivision scheme would treat each cube face as two triangles, and would hence generate different results depending on how the square is split.

The basic idea for Catmull-Clark surfaces is shown in Figure 17.48, and an actual example of Catmull-Clark subdivision is shown in Figure 17.41 on page 757.

Figure 17.48. The basic idea of Catmull-Clark subdivision. Each polygon generates a new point, and each edge generates a new point. These are then connected as shown to the right. Weighting of the original points is not shown here.

As can be seen, this scheme only generates faces with four vertices. In fact, after the first subdivision step, only vertices of valence 4 are generated, thus such vertices are called ordinary or regular (compared to valence 6 for triangular schemes).

Following the notation from Halstead et al. [655], let us focus on a vertex vk with n surrounding edge points eki , where i=0⋯n-1 . See Figure 17.49. Now, for each face, a new face point fk+1 is computed as the face centroid, i.e., the mean of the points of the face. Given this, the subdivision rules are [239,655,1977]

(17.66)

vk+1=n-2nvk+1n2n-1∑j=0ekj+1n2n-1∑j=0fk+1j,[3pt]ek+1j=vk+ekj+fk+1j-1+fk+1j4.As can be seen, the vertex vk+1 is computed as weighting of the considered vertex, the average of the edge points, and the average of the newly created face points. On the other hand, new edge points are computed by the average of the considered vertex, the edge point, and the two newly created face points that have the edge as a neighbor.

Figure 17.49. Before subdivision, we have the blue vertices and corresponding edges and faces. After one step of Catmull-Clark subdivision, we obtain the red vertices, and all new faces are quadrilaterals. (Illustration after Halstead et al. [655].)

The Catmull-Clark surface describes a generalized bicubic B-spline surface. So, for a mesh consisting only of regular vertices we could actually describe the surface as a bicubic B-spline surface (Section 17.2.7) [1977]. However, this is not possible for irregular mesh settings, and being able to handle these using subdivision surfaces is one of the scheme’s strengths.

Limit positions and tangents are also possible to compute, even at arbitrary parameter values using explicit formulae [1687]. Halstead et al. [655] describe a different approach to computing limit points and normals.

See Section 17.6.3 for a set of efficient techniques that can render Catmull-Clark subdivision surfaces using the GPU.

17.5.3. Piecewise Smooth Subdivision

In a sense, curved surfaces may be considered boring because they lack detail. Two ways to improve such surfaces are to use bump or displacement maps (Section 17.5.4). A third approach, piecewise smooth subdivision, is described here. The basic idea is to change the subdivision rules so that darts, corners, and creases can be used. This increases the range of different surfaces that can be modeled and represented. Hoppe et al. [767] first described this for Loop’s subdivision surfaces. See Figure 17.50 for a comparison of a standard Loop subdivision surface, and one with piecewise smooth subdivision.

Figure 17.50. The top row shows a control mesh, and the limit surface using the standard Loop subdivision scheme. The bottom row shows piecewise smooth subdivision with Loop’s scheme. The lower left image shows the control mesh with tagged edges (sharp) shown in a light gray. The resulting surface is shown to the lower right, with corners, darts, and creases marked. (Image courtesy of Hugues Hoppe.)

To actually be able to use such features on the surface the edges that we want to be sharp are first tagged, so we know where to subdivide differently. The number of sharp edges coming in at a vertex is denoted s. Then the vertices are classified into: smooth ( s=0 ), dart ( s=1 ), crease ( s=2 ), and corner ( s>2 ). Therefore, a crease is a curve on the surface, where the continuity across the curve is C0 . A dart is a non-boundary vertex where a crease ends and smoothly blends into the surface. Finally, a corner is a vertex where three or more creases come together. Boundaries can be defined by marking each boundary edge as sharp.

After classifying the various vertex types, Hoppe et al. use a table to determine which mask to use for the various combinations. They also show how to compute limit surface points and limit tangents. Biermann et al. [142] present several improved subdivision rules. For example, when extraordinary vertices are located on a boundary, the previous rules could result in gaps. This is avoided with the new rules. Also, their rules make it possible to specify a normal at a vertex, and the resulting surface will adapt to get that normal at that point. DeRose et al. [347] present a technique for creating soft creases. They allow an edge to first be subdivided as sharp a number of times (including fractions), and after that, standard subdivision is used.

17.5.4. Displaced Subdivision

Bump mapping (Section ) is one way to add detail to otherwise smooth surfaces. However, this is just an illusionary trick that changes the normal or local occlusion at each pixel. The silhouette of an object looks the same with or without bump mapping. The natural extension of bump mapping is displacement mapping [287], where the surface is displaced. This is usually done along the direction of the normal. So, if the point of the surface is p , and its normalized normal is n , then the point on the displaced surface is

(17.67)

s(u,v)=p(u,v)+d(u,v)n(u,v).The scalar d is the displacement at the point p . The displacement could also be vector-valued [938].

In this section, the displaced subdivision surface [1006] will be presented. The general idea is to describe a displaced surface as a coarse control mesh that is subdivided into a smooth surface that is then displaced along its normals using a scalar field. In the context of displaced subdivision surfaces, p in Equation 17.67 is the limit point on the subdivision surface (of the coarse control mesh), and n is the normalized normal at p , computed as

(17.68)

n=n′||n′||,wheren′=pu×pv,In Equation 17.68, pu and pv are the first-order derivative of the subdivision surface. Thus, they describe two tangents at p . Lee et al. [1006] use a Loop subdivision surface for the coarse control mesh, and its tangents can be computed using Equation 17.65. Note that the notation is slightly different here; we use pu and pv instead of tu and tv . Equation 17.67 describes the displaced position of the resulting surface, but we also need a normal, ns , on the displaced subdivision surface in order to render it correctly. It is computed analytically as shown below [1006]:

(17.69)

ns=su×sv,wheresu=∂s∂u=pu+dun+dnuandsv=∂s∂v=pv+dvn+dnv.To simplify computations, Blinn [160] suggests that the third term can be ignored if the displacements are small. Otherwise, the following expressions can be used to compute nu (and similarly nv ) [1006]:

(17.70)

ˉnu=puu×pv+pu×puv,nu=ˉnu-(ˉnu·n)n||n′||.Note that ˉnu is not any new notation, it is merely a “temporary” variable in the computations. For an ordinary vertex (valence n=6 ), the first and second order derivatives are particularly simple. Their masks are shown in Figure 17.51.

Figure 17.51. The masks for an ordinary vertex in Loop’s subdivision scheme. Note that after using these masks, the resulting sum should be divided as shown. (Illustration after Lee et al. [1006].)

For an extraordinary vertex (valence n≠6 ), the third term in rows one and two in Equation 17.69 is omitted.

An example using displacement mapping with Loop subdivision is shown in Figure 17.52.

When a displaced surface is far away from the viewer, standard bump mapping could be used to give the illusion of this displacement. Doing so saves on geometry processing. Some bump mapping schemes need a tangent-space coordinate system at the vertex, and the following can be used for that: (b,t,n) , where t=pu/||pu|| and b=n×t .

Nießner and Loop [1281] present a similar method as the one presented above by Lee et al., but they use Catmull-Clark surfaces and use direct evaluation of the derivatives on the displacement function, which is faster. They also use the hardware tessellation pipeline (Section ) for rapid tessellation.

Figure 17.52. To the left is a coarse mesh. In the middle, it is subdivided using Loop’s subdivision scheme. The right image shows the displaced subdivision surface. (Image courtesy of Aaron Lee, Henry Moreton, and Hugues Hoppe.)

17.5.5. Normal, Texture, and Color Interpolation

In this section, we will present different strategies for dealing with normals, texture coordinates and color per vertex.

As shown for Loop’s scheme in Section 17.5.1, limit tangents, and thus, limit normals can be computed explicitly. This involves trigonometric functions that may be expensive to evaluate. Loop and Schaefer [1070] present an approximative technique where Catmull-Clark surfaces always are approximated by bicubic Bézier surfaces (Section 17.2.1). For normals, two tangent patches are derived, that is, one in the u-direction and one the v-direction. The normal is then found as the cross product between those vectors. In general, the derivatives of a Bézier patch are computed using Equation 17.35. However, since the derived Bézier patches approximate the Catmull-Clark surface, the tangent patches will not form a continuous normal field. Consult Loop and Schaefer’s paper [1070] on how to overcome these problems. Alexa and Boubekeur [29] argue that it can be more efficient in terms of quality per computation to also subdivide the normals, which gives nicer continuity in the shading. We refer to their paper for the details on how to subdivide the normals. More types of approximations can also be found in Ni et al.’s SIGGRAPH course [1275].

Assume that each vertex in a mesh has a texture coordinate and a color. To be able to use these for subdivision surfaces, we also have to create colors and texture coordinates for each newly generated vertex, too. The most obvious way to do this is to use the same subdivision scheme as we used for subdividing the polygon mesh. For example, you can treat the colors as four-dimensional vectors (RGBA), and subdivide these to create new colors for the new vertices. This is a reasonable way to do it, since the color will have a continuous derivative (assuming the subdivision scheme is at least C1 ), and thus abrupt changes in colors are avoided over the surface The same can certainly be done for texture coordinates [347]. However, care must be taken when there are boundaries in texture space. For example, assume we have two patches sharing an edge but with different texture coordinates along this edge. The geometry should be subdivided with the surface rules as usual, but the texture coordinates should be subdivided using boundary rules in this case.

A sophisticated scheme for texturing subdivision surfaces is given by Piponi and Borshukov [1419].

17.6 Efficient Tessellation

To display a curved surface in a real-time rendering context, we usually need to create a triangle mesh representation of the surface. This process is known as tessellation. The simplest form of tessellation is called uniform tessellation. Assume that we have a parametric Bézier patch, p(u,v) , as described in Equation 17.32. We want to tessellate this patch by computing 11 points per patch side, resulting in 10×10×2=200 triangles. The simplest way to do this is to sample the uv-space uniformly. Thus, we evaluate p(u,v) for all (uk,vl)=(0.1k,0.1l) , where both k and l can be any integer from 0 to 10. This can be done with two nested for-loops. Two triangles can be created for the four surface points p(uk,vl) , p(uk+1,vl) , p(uk+1,vl+1) , and p(uk,vl+1) .

While this certainly is straightforward, there are faster ways to do it. Instead of sending tessellated surfaces, consisting of many triangles, over the bus from the CPU to the GPU, it makes more sense to send the curved surface representation to the GPU and let it handle the data expansion. Recall that the tessellation stage is described in Section 3.6. For a quick refresher, see Figure 17.53.

Figure 17.53. Pipeline with hardware tessellation, where the new stages are shown in the middle three (blue) boxes. We use the naming conventions from DirectX here, with the OpenGL counterparts in parenthesis. The hull shader computes new locations of control points and computes tessellation factors as well, which dictates how many triangles the subsequent step should generate. The tessellator generates points in the uv-space, in this case a unit square, and connects them into triangles. Finally, the domain shader computes the positions at each uv-coordinate using the control points.

The tessellator may use a fractional tessellation technique, which is described in the following section. Then follows a section on adaptive tessellation, and finally, we describe how to render Catmull-Clark surfaces and displacement mapped surfaces with tessellation hardware.

17.6.1. Fractional Tessellation

To obtain smoother level of detail for parametric surfaces, Moreton introduced fractional tessellation factors [1240]. These factors enable a limited form of adaptive tessellation, since different tessellation factors can be used on different sides of the parametric surface. Here, an overview of how these techniques work will be presented.

In Figure 17.54, constant tessellation factors for rows and columns are shown on the left, and independent tessellation factors for all four edges on the right. Note that the tessellation factor of an edge is the number of points generated on that edge, minus one. In the patch on the right, the greater of the top and bottom factors is used in the interior for both of these edges, and similarly the greater of the left and right factors is used in the interior. Thus, the basic tessellation rate is 4×8 . For the sides with smaller factors, triangles are filled in along the edges. Moreton [1240] describes this process in more detail.

Figure 17.54. Left: normal tessellation—one factor is used for the rows, and another for the columns. Right: independent tessellation factors on all four edges. (Illustration after Moreton [1240].)

Figure 17.55. Top: integer tessellation. Middle: fractional tessellation, with the fraction to the right. Bottom: fractional tessellation with the fraction in the middle. This configuration avoids cracks between adjacent patches.

The concept of fractional tessellation factors is shown for an edge in Figure 17.55. For an integer tessellation factor of n, n+1 points are generated at k / n, where k=0,⋯,n . For a fractional tessellation factor, r, ⌈r⌉ points are generated at k / r, where k=0,⋯,⌊r⌋ . Here, ⌈r⌉ computes the ceiling of r, which is the closest integer toward +∞ , and ⌊r⌋ computes the floor, which is the closest integer toward -∞ . Then, the rightmost point is just “snapped” to the rightmost endpoint. As can be seen in the middle illustration in Figure 17.55, this pattern in not symmetric. This leads to problems, since an adjacent patch may generate the points in the other direction, and so give cracks between the surfaces. Moreton solves this by creating a symmetric pattern of points, as shown at the bottom of Figure 17.55. See also Figure 17.56 for an example.

Figure 17.56. A patch with the rectangular domain fractionally tessellated. (Illustration after Moreton [1240].)

Figure 17.57. Fractional tessellation of a triangle, with tessellation factors shown. Note that the tessellation factors may not correspond exactly to those produced by actual tessellation hardware. (Illustration after Tatarchuk [1745].)

So far, we have seen methods for tessellating surfaces with a rectangular domain, e.g., Bézier patches. However, triangles can also be tessellated using fractions [1745], as shown in Figure 17.57. Like the quadrilaterals, it is also possible to specify an independent fractional tessellation rate per triangle edge. As mentioned, this enables adaptive tessellation (Section 17.6.2), as illustrated in Figure 17.58, where displacement mapped terrain is rendered.

Once triangles or quadrilaterals have been created, they can be forwarded to the next step in the pipeline, which is treated in the next subsection.

17.6.2. Adaptive Tessellation

Uniform tessellation gives good results if the sampling rate is high enough. However, in some regions on a surface there may not be as great a need for high tessellation as in other regions. This may be because the surface bends more rapidly in some area and therefore may need higher tessellation there, while other parts of the surface are almost flat or far away, and only a few triangles are needed to approximate them. A solution to the problem of generating unnecessary triangles is adaptive tessellation, which refers to algorithms that adapt the tessellation rate depending on some measure (for example curvature, triangle edge length, or some screen size measure). Figure 17.58 shows an example of adaptive tessellation for terrain.

Figure 17.58. Displaced terrain rendering using adaptive fractional tessellation. As can be seen in the zoomed-in mesh to the right, independent fractional tessellation rates are used for the edges of the red triangles, which gives us adaptive tessellation. (Images courtesy of Game Computing Applications Group, Advanced Micro Devices, Inc.)

Figure 17.59. To the left, a crack is visible between the two regions. This is because the right has a higher tessellation rate than the left. The problem lies in that the right region has evaluated the surface where there is a black circle, and the left region has not. The standard solution is shown to the right.

Care must be taken to avoid cracks that can appear between different tessellated regions. See Figure 17.59. When using fractional tessellation, it is common to base the edge tessellation factors on information that comes from only the edge itself, since the edge data are all that is shared between the two connected patches. This is a good start, but due to floating point inaccuracies, there can still be cracks. Nießner et al. [1279] discuss how to make the computations fully watertight, e.g., by making sure that, for an edge, the exact same point is returned regardless of whether tessellation is done from p0 to p1 , or vice versa.

In this section, we will present some general techniques that can be used to compute fractional tessellation rates, or to decide when to terminate further tessellation and when to split larger patches into a set of smaller ones.

Terminating Adaptive Tessellation

To provide adaptive tessellation, we need to determine when to stop the tessellation, or equivalently how to compute fractional tessellation factors. Either you can use only the information of an edge to determine if tessellation should be terminated, or use information from an entire triangle, or a combination.

It should also be noted that with adaptive tessellation, one can get swimming or popping artifacts from frame to frame if the tessellation factors for a certain edge change too much from one frame to the next. This may be a factor to take into consideration when computing tessellation factors as well. Given an edge, (a,b) , with an associated curve, i.e., a patch edge curve, we can try to estimate how flat the curve is between a and b . See Figure 17.60.

Figure 17.60. The points a and b have already been generated on this surface. The question is: Should a new point, that is c , be generated on the surface?

The midpoint in parametric space between a and b is found, and its three-dimensional counterpart, c , is computed. Finally, the length, l, between c and its projection, d , onto the line between a and b , is computed. This length, l, is used to determine whether the curve segment on that edge if flat enough. If l is small enough, it is considered flat. Note that this method may falsely consider an S-shaped curve segment to be flat. A solution to this is to randomly perturb the parametric sample point [470]. An alternative to using just l is to use the ratio l/||a-b|| , giving a relative measure [404]. Note that this technique can be extended to consider a triangle as well, where you simply compute the surface point in the middle of the triangle and use the distance from that point to the triangle’s plane. To be certain that this type of algorithm terminates, it is common to set some upper limit on how many subdivisions can be made. When that limit is reached, the subdivision ends. For fractional tessellation, the vector from c to d can be projected onto the screen, and its (scaled) length used as a tessellation rate.

So far we have discussed how to determine the tessellation rate from only the shape of the surface. Other factors that typically are used for on-the-fly tessellation include whether the local neighborhood of a vertex is [769,1935]:

- Inside the view frustum.

- Frontfacing.

- Occupying a large area in screen space.

- Close to the silhouette of the object.

These factors will be discussed in turn here. For view frustum culling, one can place a sphere to enclose the edge. This sphere is then tested against the view frustum. If it is outside, we do not subdivide that edge further.

For face culling, the normals at a , b , and possibly c can be computed from the surface description. These normals, together with a , b , and c , define three planes. If all are backfacing, it is likely that no further subdivision is needed for that edge.

There are many different ways to implement screen-space coverage (see also Section ). All methods project some simple object onto the screen and estimate the length or area in screen space. A large area or length implies that tessellation should proceed. A fast estimation of the screen-space projection of a line segment from a to b is shown in Figure 17.61.

Figure 17.61. Estimation of the screen-space projection, s, of the line segment.

First, the line segment is translated so that its midpoint is on the view ray. Then, the line segment is assumed to be parallel to the near plane, n, and the screen-space projection, s, is computed from this line segment. Using the points of the line segment a′ and b′ to the right in the illustration, the screen-space projection is then

(17.71)

s=√(a′-b′)·(a′-b′)v·(a′-e).The numerator is simply the length of the line segment. This is divided by the distance from the eye, e , to the line segment’s midpoint. The computed screen-space projection, s, is then compared to a threshold, t, representing the maximum edge length in screen space. Rewriting the previous equation to avoid computing the square root, if the following condition is true, the tessellation should continue:

(17.72)

s>t⟺(a′-b′)·(a′-b′)>t2(v·(a′-e))2.Note that t2 is a constant and so can be precomputed. For fractional tessellation, s from Equation 17.71 can be used as the tessellation rate, possibly with a scaling factor applied. Another way to measure projected edge length is to place a sphere at the center of the edge, make the radius half the edge length, and then use the projection of the sphere as the edge tessellation factor [1283]. This test is proportional to the area, while the test above is proportional to edge length.

Increasing the tessellation rate for the silhouettes is important, since they play a prime role for the perceived quality of the object. Finding if a triangle is near a silhouette edge can be done by testing whether the dot product between the normal at a and the vector from the eye to a is close to zero. If this is true for any of a , b , or c , further tessellation should be done.

For displaced subdivision, Nießner and Loop [1281] use one of the following factors for each base mesh vertex v , which is connected to n edge vectors ei , i∈{0,1,⋯,n-1} :

(17.73)

f1=k1·||c-v||,f2=k2√∑ei×ei+1,f3=k3max(||e0||,||e1||,⋯,||en-1||),where the loop index, i, goes over all n edges ei connected to v , c is the position of the camera, and ki are user-supplied constants. Here, f1 is simply based on the distance to the vertex from the camera, f2 computes the area of the quads connected to v , and f3 uses the largest edge length. The tessellation factor for a vertex is then computed as the maximum of the edge’s two base vertices’ tessellation factors. The inner tessellation factors are computed as the maximum of the opposite edge’s tessellation factor (in u and v). This method can be used with any of the edge tessellation factor methods presented in this section.

Notably, Nießner et al. [1279] recommend using a single global tessellation factor for characters, which depends on the distance to the character. The number of subdivisions is then ⌈log2f⌉ , where f is the per-character tessellation factor, which can be computed using any of the methods above.

It is hard to say what methods will work in all applications. The best advice is to test several of the presented heuristics, and combinations of them.

Split and Dice Methods

Cook et al. [289] introduced a method called split and dice, with the goal being to tessellate surfaces so that each triangle becomes pixel-sized, to avoid geometrical aliasing. For real-time purposes, this tessellation threshold should be increased to what the GPU can handle. Each patch is first split recursively into a set of subpatches until it is estimated that if uniform tessellation is used for a certain subpatch, the triangles will have the desired size. Hence, this is also a type of adaptive tessellation.

Imagine a single large patch being used for a landscape. In general, there is no way that fractional tessellation can adapt so that the tessellation rate is higher closer to the camera and lower farther away, for example. The core of split and dice may therefore be useful for real-time rendering, even if the target tessellation rate is to have larger triangles than pixel-sized in our case.

Next, we describe the general method for split and dice in a real-time graphics scenario. Assume that a rectangular patch is used. Then start a recursive routine with the entire parametric domain, i.e., the square from (0, 0) to (1, 1). Using the adaptive termination criteria just described, test whether the surface is tessellated enough. If it is, then terminate tessellation. Otherwise, split this domain into four different equally large squares and call the routine recursively for each of the four subsquares. Continue recursively until the surface is tessellated enough or a predefined recursion level is reached. The nature of this algorithm implies that a quadtree is recursively created during tessellation. However, this will give cracks if adjacent subsquares are tessellated to different levels. The standard solution is to ensure that two adjacent subsquares only differ in one level at most. This is called a restricted quadtree. Then the technique shown to the right in Figure 17.59 is used to fill in the cracks. A disadvantage with this method is that the bookkeeping is more involved.

Liktor et al. [1044] present a variant of split and dice for the GPU. The problem is to avoiding swimming artifacts and popping effects when suddenly deciding to split one more time due to, e.g., the camera having moved closer to a surface. To solve that, they use a fractional split method, inspired by fractional tessellation. This is illustrated in Figure 17.62.

Figure 17.62. Fractional splitting is applied to a cubic Bézier curve. The tessellation rate t is shown for each curve. The split point is the large black circle, which is moved in from the right side of the curve toward the center of the curve. To fractionally split the cubic curve, the black point is smoothly moved toward the curve’s center and the original curve is replaced with two cubic Bézier segments that together generate the original curve. To the right, the same concept is illustrated for a patch, which has been split into four smaller subpatches, where 1.0 indicates that the split point is on the center point of the edge, and 0.0 means that it is at the patch corner. (Illustration after Liktor et al. [1044].)

Since the split is smoothly introduced from one side toward the center of the curve, or toward the center of the patch side, swimming and popping artifacts are avoided. When the termination criteria for the adaptive tessellation have been reached, each remaining subpatch is tessellated by the GPU using fractional tessellation as well.

17.6.3. Fast Catmull-Clark Tessellation

Catmull-Clark surfaces (Section 17.5.2) are frequently used in modeling software and in feature film rendering, and hence it is attractive to be able to render these efficiently using graphics hardware as well. Fast tessellation methods for Catmull-Clark surfaces have been an active research field over recent years. Here, we will present a handful of these methods.

Approximating Approaches

Loop and Schaefer [1070] present a technique to convert Catmull-Clark surfaces into a representation that can be evaluated quickly in the domain shader, without the need to know the polygons’ neighbors.

As mentioned in Section 17.5.2, the Catmull-Clark surface can be described as many small B-spline surfaces when all vertices are ordinary. Loop and Schaefer convert a quadrilateral (quad) polygon in the original Catmull-Clark subdivision mesh to a bi-cubic Bézier surface (Section 17.2.1). This is not possible for non-quadrilaterals, and so we assume that there are no such polygons (recall that after the first step of subdivision there are only quadrilateral polygons). When a vertex has a valence different from four, it is not possible to create a bicubic Bézier patch that is identical to the Catmull-Clark surface. Hence, an approximative representation is proposed, which is exact for quads with valence-four vertices, and close to the Catmull-Clark surface elsewhere. To this end, both geometry patches and tangent patches are used, which will be described next.

The geometry patch is simply a bicubic Bézier patch

with 4×4 control points. We will describe how these control points are computed. Once this is done, the patch can be tessellated and the domain shader can evaluate the Bézier patch quickly at any parametric coordinate (u, v). So, assuming we have a mesh consisting of only quads with vertices of valence four, we want to compute the control points of the corresponding Bézier patch for a certain quad in the mesh. To that end, the neighborhood around the quad is needed. The standard way of doing this is illustrated in Figure 17.63, where three different masks are shown. These can be rotated and reflected in order to create all the 16 control points. Note that in an implementation the weights for the masks should sum to one, a process that is omitted here for clarity.

Figure 17.63. Left: part of a quad mesh, where we want to compute a Bézier patch for the gray quad. Note that the gray quad has vertices with only valence four. The blue vertices are neighboring quads’ vertices, and the green circles are the control points of the Bézier patch. The following three illustrations show the different masks used to compute the green control points. For example, to compute one of the inner control points, the middle right mask is used, and the vertices of the quad are weighted with the weight shown in the mask.

Figure 17.64. Left: a Bézier patch for the gray quad of the mesh is to be generated. The lower left vertex in the gray quad is extraordinary, since its valence is n≠4 . The blue vertices are neighboring quads’ vertices, and the green circles are the control points of the Bézier patch. The following three illustrations show the different masks used to compute the green control points.

Figure 17.65. Left: quad structure of a mesh. White quads are ordinary, green have one extraordinary vertex, and blue have more than one. Middle left: geometry patch approximation. Middle right: geometry patches with tangent patches. Note that the obvious (red circle) shading artifacts disappeared. Right: true Catmull-Clark surface. (Image courtesy of Charles Loop and Scott Schaefer, reprinted with permission from Microsoft Corporation.)

The above technique computes a Bézier patch for the ordinary case. When there is at least one extraordinary vertex, we compute an extraordinary patch [1070]. The masks for this are shown in Figure 17.64, where the lower left vertex in the gray quad is an extraordinary vertex.

Note that this results in a patch that approximates the Catmull-Clark subdivision surface, and, it is only C0 along edges with an extraordinary vertex. This often looks distracting when shading is added, and hence a similar trick as used for N-patches (Section 17.2.5) is suggested. However, to reduce the computational complexity, two tangent patches are derived: one in the u-direction, and one the v-direction. The normal is then found as the cross product between those vectors. In general, the derivatives of a Bézier patch are computed using Equation 17.35. However, since the derived Bézier patches approximate the Catmull-Clark surface, the tangent patches will not form a continuous normal field. Consult Loop and Schaefer’s paper [1070] on how to overcome these problems. Figure 17.65 shows an example of the types of artifacts that can occur.

Kovacs et al. [931] describe how the method above can be extended to also handle creases and corners (Section 17.5.3), and implement these extensions in Valve’s Source engine.

Feature Adaptive Subdivision and OpenSubdiv

Pixar presented an open-source system called OpenSubdiv that implements a set of techniques called feature adaptive subdivision (FAS) [1279,1280,1282]. The basic approach is rather different from the previous technique just discussed. The foundation of this work lies in that subdivision is equivalent to bicubic B-spline patches (Section 17.2.7) for regular faces, i.e., quads where each vertex is regular, which means that the vertex has valence four. So, subdivision continues recursively only for non-regular faces, up until some maximum subdivision level is reached.

Figure 17.66. Left: recursive subdivision around an extraordinary vertex, i.e., the middle vertex has three edges. As recursion continues, it leaves a band of regular patches (with four vertices, each with four incoming edges) behind. Right: subdivision around a smooth crease indicated by the bold line in the middle. (Illustration after Nießner et al. [1279].)

This is illustrated on the left in Figure 17.66. FAS can also handle creases and semi-smooth creases [347], and the FAS algorithm also needs to subdivide around such creases, which is illustrated on the right in Figure 17.66. The bicubic B-spline patches can be rendered directly using the tessellation pipeline.

The method starts by creating a table using the CPU. This table encodes indices to vertices that need to be accessed during subdivision up to a specified level. As such, the base mesh can be animated since the indices are independent of the vertex positions. As soon as a bicubic B-spline patch is generated, recursion needs not to be continued, which means that the table usually becomes relatively small. The base mesh and the table with indices and additional valence and crease data are uploaded to the GPU once.

To subdivide the mesh one step, new face points are computed first, followed by new edge points, and finally the vertices are updated, and one compute shader is used for each of these types. For rendering, a distinction between full patches and transition patches is made. A full patch (FP) only shares edges with patches of the same subdivision level, and a regular FP is directly rendered as a bicubic B-spline patch using the GPU tessellation pipeline. Subdivision continues otherwise. The adaptive subdivision process ensures that there is at most a difference of one subdivision level between neighboring patches. A transition patch (TP) has a difference in subdivision level against at least one neighbor. To get crack-free renderings, each TP is split into several subpatches as shown in Figure 17.67. In this way, tessellated vertices match along both sides of each edge.

Each type of subpatch is rendered using different hull and domain shaders that implement interpolation variants. For example, the leftmost case in Figure 17.67 is rendered as three triangular B-spline patches. Around extraordinary vertices, another domain shader is used where limit positions and limit normals are computed using the method of Halstead et al. [655]. An example of Catmull-Clark surface rendering using OpenSubdiv is shown in Figure 17.68.

Figure 17.67. Red squares are transition patches, and each has four immediate neighbors, which are either blue (current subdivision level) or green (next subdivision level). This illustration shows the five configurations that can occur, and how they are stitched together. (Illustration after Nießner et al. [1279].)

Figure 17.68. Left: the control mesh in green and red lines with the gray surface (8k vertices) generated using one subdivision step. Middle: the mesh subdivided an additional two steps (102k vertices). Right: the surface generated using adaptive tessellation (28k vertices). (Images generated using OpenSubdiv’s dxViewer.)

The FAS algorithm handles creases, semi-smooth creases, hierarchical details, and adaptive level of detail. We refer to the FAS paper [1279] and Nießner’s PhD thesis [1282] for more details. Schäfer et al. [1547] presents a variant of FAS, called DFAS, which is even faster.

Adaptive Quadtrees

Brainerd et al. [190] present a method called adaptive quadtrees. It is similar to the approximating scheme by Loop and Schaefer [1070] in that a single tessellated primitive is submitted per quad of the original base mesh. In addition, it precomputes a subdivision plan, which is a quadtree that encodes the hierarchical subdivision (similar to feature adaptive subdivision) from an input face, down to some maximum subdivision level. The subdivision plan also contains a list of stencil masks of the control points needed by the subdivided faces.

During rendering, the quadtree is traversed, making it possible to map (u, v)-coordinates to a patch in the subdivision hierarchy, which can be directly evaluated. A quadtree leaf is a subregion of the domain of the original face, and the surface in this subregion can be directly evaluated using the control points in the stencil. An iterative loop is used to traverse the quadtree in the domain shader, whose input is a parametric (u, v)-coordinate. Traversal needs to continue until a leaf node is reached in which the (u, v)-coordinates are located. Depending on the type of node reached in the quadtree, different actions are taken. For example, when reaching a subregion that can be evaluated directly, the 16 control points for its corresponding bicubic B-spline patch are retrieved, and the shader continues with evaluating that patch.

See Figure 17.1 on page 718 for an example rendered using this technique. This method is the fastest to date that renders Catmull-Clark subdivision surfaces exactly, and handles creases and other topological features. An additional advantage of using adaptive quadtrees over FAS is illustrated in Figure 17.69 and further shown in Figure 17.70.

Figure 17.69. Left: hierarchical subdivision according to feature adaptive subdivision (FAS), where each triangle and quad is rendered as a separate tessellated primitive. Right: hierarchical subdivision using adaptive quadtrees, where the entire quad is rendered as a single tessellated primitive. (Illustration after Brainerd et al. [190].)

Adaptive quadtrees also provide more uniform tessellations since there is a one-to-one mapping between each submitted quad and tessellated primitive.

Figure 17.70. Subdivision patches using adaptive quadtrees. Each patch, corresponding to a base mesh face, is surrounded by black curves on the surface and the subdivision steps are illustrated hierarchically inside each patch. As can be seen, there is one patch with uniform color in the center. This implies that it was rendered as a bicubic B-spline patch, while others (with extraordinary vertices) clearly show their underlying adaptive quadtrees. (Image courtesy of Wade Brainerd.)

Further Reading and Resources

The topic of curves and surfaces is huge, and for more information, it is best to consult the books that focus solely on this topic. Mortenson’s book [1242] serves as a good general introduction to geometric modeling. Books by Farin [458,460], and by Hoschek and Lasser [777] are general and treat many aspects of Computer Aided Geometric Design (CAGD). For implicit surfaces, consult the book by Gomes et al. [558] and the more recent article by de Araújo et al. [67]. For much more information on subdivision surfaces, consult Warren and Heimer’s book [1847] and the SIGGRAPH course notes on “Subdivision for Modeling and Animation” by Zorin et al. [1977]. The course on substitutes for subdivision surfaces by Ni et al. [1275] is a useful resource here as well. The survey by Nießner et al. [1283] and Nießner’s PhD thesis [1282] are great for information on real-time rendering of subdivision surfaces using the GPU.

For spline interpolation, we refer the interested reader to the Killer B’s book [111] in addition to the books above by Farin [458] and Hoschek and Lasser [777]. Many properties of Bernstein polynomials, both for curves and surfaces, are given by Goldman [554]. Almost everything you need to know about triangular Bézier surfaces can be found in Farin’s article [457]. Another class of rational curves and surfaces is the nonuniform rational B-spline (NURBS) [459,1416,1506], often used in CAD.

1 These spline surfaces are out of the scope of this book. Consult Warren’s book [1847], the SIGGRAPH course [1977], or Loop’s thesis [1067].

2 Incidentally, both were presented in the same issue of the same journal.