5

Modernize IT to Secure IT

As a leading CISO in the health care industry points out, “Everybody likes to pretend that security is this extra, expensive thing you need to do. Much of what’s important for good security is just good IT.”

Unfortunately, as companies established cybersecurity as a control function, they layered security on top of existing technology environments along a number of dimensions. They installed not only antivirus software but also a range of security tools on the client device. They surrounded the network with not only firewalls, but also technologies such as intrusion detection and web filtering in order to repel attackers. They even created governance to encourage new applications to be developed in accordance with secure architecture standards.

Much of this activity was utterly necessary and dramatically improved companies’ levels of security. It did not, however, address two critical underlying problems: first, companies were designing their technology environment around everything but security, making them intrinsically insecure, and second, a set of trends was making technology environments themselves less rather than more secure (Figure 5.1).

FIGURE 5.1 Broad Set of Components in Technology Environment Contribute to Vulnerabilities

Just as manufacturers have found that it’s impossible to “inspect quality in,” IT organizations increasingly find it hard to “layer security on top.” This approach does not address gaping vulnerabilities in the environment, and at least some of the bandages applied to compensate for an intrinsically insecure environment have downgraded user experiences and reduced the ability of IT organizations to innovate.

Many high-impact mechanisms for protecting critical information assets involve changes in the broader application, data center, network, or end-user client environments—areas that cybersecurity teams can influence even if they are controlled by application development and infrastructure managers.

SIX WAYS TO EMBED CYBERSECURITY INTO THE IT ENVIRONMENT

The most forward-thinking companies have recognized the challenge of trying to add security in a piecemeal fashion and are starting to act aggressively to put security at the very heart of their technology environments. Specifically, they are accelerating their adoption of private clouds, using public cloud services selectively, building security into applications from day one, virtualizing end-user devices, implementing software-defined networking, and reducing the use of e-mail as a substitute for document management.

Naturally, many of these improvements depend on the success of major initiatives led from outside the security team and implemented for a combination of reasons, including efficiency and flexibility even more than for their security benefits.

1. Accelerate Migration to the Private Cloud

Motivated by lower costs and vastly improved flexibility, most large companies are putting in place standardized, shared, virtualized, and highly automated environments to host their business applications. These are—with all their various permutations—known in practice as the “private cloud.”1 As early as 2011, nearly 85 percent of large companies we interviewed said that cloud computing was one of their top innovation priorities, and 70 percent said that they were either planning or had launched a private cloud program.2 Those that are further along have often found that they could migrate 60 percent of their workload3 to these much more capable and cheaper private cloud environments (Figure 5.2).

FIGURE 5.2 Private Cloud Hosting Will Become Dominant Model by 2019

However, despite the uptake, there remains a debate over the benefits of hosting applications in a private cloud environment. Some cybersecurity professionals argue that the drivers of the improvements in efficiency and flexibility can also make it easier for attackers to exploit the corporate network. Virtualization—the co-locating of multiple images of operating systems on a single physical server—allows malware to spread from workload to workload. Standardization simplifies a data center environment, which makes life easier for IT teams but also creates a security monoculture that is easier for attackers to understand. Similarly, while automation helps system administrators enormously in managing the data center environment, it can be tremendously destructive if an attacker gains access to a system administrator’s login credentials.

Some of the most sophisticated IT organizations have exactly the reverse point of view. They have concluded that traditional data center environments are entirely unsustainable and that well-designed private cloud programs provide the opportunity to improve cost, flexibility, and security simultaneously.

Data Center Complexity Breeds Insecurity

Senior (or at least long-tenured) technology executives will still remember the days when data center environments were pretty simple. As recently as the early 1990s, even a top-tier investment bank might have run all of its business applications on a few mainframes, a few dozen minicomputers, and perhaps a hundred or so Unix servers. The pace and extent of change since then has been dizzying.

Now that same investment bank might still have only a few mainframes (each with orders of magnitude more processing capacity), but it would also have more than 100,000 operating system (OS) images running on tens of thousands of physical servers. More importantly, the bank’s IT team would have to support a dozen or more OS versions (some of which could be years out of date) and thousands of configurations that require heavy manual activity to maintain. This complexity makes it harder and harder for large enterprises to protect their data center environments.

Two issues are especially vexing. First, keeping track of complex, rapidly changing data center environments makes it hard for security teams to identify the anomalies that would indicate a server has been compromised. When a company adds hundreds of server images and performs thousands of configuration changes per month, it is virtually impossible to maintain an up-to-date view of what the data center environment should look like. Without this overview, it is much harder for the security operations team to notice that a server is transmitting data externally when it shouldn’t be, for example.
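To make the point concrete: if a team did hold an up-to-date record of what each server is expected to do, spotting a server that transmits data externally when it shouldn't would reduce to a simple comparison. The following is a minimal sketch under that assumption. The server names, destinations, and the `EXPECTED_OUTBOUND` baseline are all hypothetical and do not come from any company described here.

```python
# Hypothetical baseline: the external destinations each server is expected to contact.
EXPECTED_OUTBOUND = {
    "web-01": {"cdn.example.com"},
    "db-01": set(),  # in this sketch, database servers should never transmit externally
}


def find_unexpected_outbound(observed):
    """Return {server: destinations} seen in traffic but absent from the baseline."""
    anomalies = {}
    for server, destinations in observed.items():
        # A server missing from the baseline has no approved destinations at all.
        allowed = EXPECTED_OUTBOUND.get(server, set())
        unexpected = set(destinations) - allowed
        if unexpected:
            anomalies[server] = unexpected
    return anomalies
```

The comparison itself is trivial. The hard part, as described above, is keeping the baseline accurate when hundreds of images and thousands of configuration changes arrive every month.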

The second issue is that data center environments make it hard to install security patches for commercial software in a timely fashion, which exposes servers to new types of attacks. Developers constantly find new vulnerabilities in the software tools (operating systems, databases, middleware, utilities, and business applications) that run in corporate data centers and issue software updates known as patches that are designed to address these vulnerabilities.

Security expert Bruce Schneier described 2014’s Heartbleed bug as “catastrophic” because it enabled hackers to coax sensitive information such as passwords from OpenSSL, an open source tool designed to enable encrypted communications between users and Internet applications.4 OpenSSL made a patch available at almost exactly the same time that it announced the vulnerability.5 This is normal; vendors usually make patches available for 80 percent of vulnerabilities on the same day they disclose them,6 but there can be a (sometimes lengthy) delay between a patch being released and a company applying it to protect itself. Nor do all patches solve the problem. For example, many initial patches for the Shellshock Bash vulnerability in 2014 proved ineffective, leaving many companies exposed.7

Patching is a constant responsibility for data center teams. For example, Microsoft releases security patches for its products on the second Tuesday of the month, every month, although for more urgent security situations, it releases patches as needed.8 Staying on top of this is incredibly hard. In one organization, there were weekly patching meetings to determine what needed doing next. It was impossible to automate this process, as there was no complete software inventory, which is not unusual. OS patches tend to be applied and distributed very quickly, while those for less business-critical software might be addressed only quarterly. Of course, these are the very applications through which hackers can gain access. Some extremely vulnerable applications had dozens of variant versions sitting on end-users’ devices and servers, either because they were deemed noncritical or simply because the previous version had not been uninstalled after an update.

IT executives tell us that staffing limitations mean teams have neither the time nor resources to test patches to see whether they can be applied safely. Fragile architectures make developers reluctant to install any patches to some applications except in the most extreme circumstances. One bank had issues with the stability of some of its systems, so it started including the servers in a list of “do not touch” assets, which were not to be patched until they could be made stable. Within two years, this do-not-touch list included hundreds of server images, with thousands of patches not applied. An internal audit uncovered this, and a special one-off remediation program had to be instigated to make everything secure.

Distributing patches across the company is both time consuming and labor intensive, and some patches get constantly pushed down the priority list. The number of changes required means there may not be enough maintenance windows (typically, time slots in the early morning hours or on weekends) to install all the patches. Vendors can also stop supporting outdated technologies, meaning security patches may no longer be available for software that companies use. In 2014, Microsoft stopped support for Windows XP (first released in 2001), yet more than 90 percent of ATMs were still running on the outdated operating system.9

Faced with all these challenges, it is easy to see how organizations fall behind when it comes to patching. One insurance company found that more than half of its servers were at least three generations behind in terms of security patches, making it vulnerable to any attacks that capitalized on a more recently discovered vulnerability. It is not alone—the large majority of breaches can be traced to failure to patch a relatively small number of software packages.10
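A measure such as "three generations behind" can only be computed when a complete inventory exists, which is exactly what many organizations lack. As a rough sketch of the calculation, assume each server records how many patch cycles it has applied. The `Server` type, the field names, and the integer "patch level" are illustrative assumptions, not a description of any real inventory tool.

```python
from dataclasses import dataclass


@dataclass
class Server:
    name: str
    installed_patch_level: int  # hypothetical: number of patch cycles applied so far


def servers_behind(servers, current_level, max_lag=3):
    """Return names of servers at least `max_lag` patch generations behind."""
    return [
        s.name
        for s in servers
        if current_level - s.installed_patch_level >= max_lag
    ]


def share_behind(servers, current_level, max_lag=3):
    """Return the fraction of the estate that is at least `max_lag` generations behind."""
    if not servers:
        return 0.0
    return len(servers_behind(servers, current_level, max_lag)) / len(servers)
```

Tracking this share over time gives security and infrastructure teams a single figure to discuss, rather than a list of individual missed patches.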

Private Cloud May Make It Possible to Secure the Data Center

Can private cloud be a mechanism for reducing vulnerabilities and improving security? If companies design and manage their private cloud program appropriately, they can substantially reduce exposures in their data center environment. The highly standardized hardware and software associated with the cloud should drive down the use of outdated technology over time, while patching becomes part of the standard offering and does not require manual effort for every server. Automated provisioning11 reduces the risk of configuration mistakes that create vulnerabilities and also makes it much easier to enforce policies on which applications can run on the same server or on a certain part of the network. The combination of automated provisioning and standardization creates far more transparency into the environment, which makes it easier to spot the anomalies that can signify a breach.
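The idea of enforcing policies on which applications can share a server lends itself to a short illustration. The sketch below assumes a hypothetical policy expressed as pairs of workload tags that must never be co-located. The tag names and the `provision` function are invented for illustration and do not correspond to any particular cloud platform's API.

```python
# Hypothetical policy: pairs of workload tags that may not share a physical host.
FORBIDDEN_PAIRS = {
    frozenset({"payments", "public-web"}),
    frozenset({"hr-data", "dev-sandbox"}),
}


def can_place(new_tag, tags_on_host):
    """True if the new workload conflicts with nothing already on the host."""
    return all(
        frozenset({new_tag, existing}) not in FORBIDDEN_PAIRS
        for existing in tags_on_host
    )


def provision(new_tag, hosts):
    """Place the workload on the first compliant host and return its name, or None."""
    for name, tags in hosts.items():
        if can_place(new_tag, tags):
            tags.append(new_tag)
            return name
    return None
```

Because the check runs on every provisioning request, the policy is applied consistently, which is the advantage over relying on administrators to remember the rule during manual builds.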

Some companies are even using private cloud technologies to facilitate sophisticated security analytics. One health care company is using the virtualization tool12 in its private cloud environment to inspect network traffic in real time and flag workloads when they act in unexpected ways that may be indicative of a malware infection.

However, concerns that moving to private cloud could introduce new types of vulnerabilities are not spurious. Private cloud programs require careful planning to build security in. For example, cutting-edge companies are configuring their environment to minimize the risk of an attacker jumping from one system to another once inside by requiring the virtualization tool to be run from read-only memory and placing limitations on how programs can give instructions to the virtualization tool. They are also separating some operational roles to limit the damage that a single malicious insider could do if he had access to management tools. Not only must the security team help design in security measures from the outset, security considerations must also play a much more important role in the business case for private cloud programs.

Obviously, migrating to a private cloud requires both a sizeable investment and organizational change. As a result, many companies’ private cloud programs stall, and executives are left underwhelmed by the speed and level of migration.

The companies that have succeeded so far have distinguished themselves in four ways.

- They focus on alignment among executives on the business case for private cloud, including the extent to which their company’s security will improve by migrating workloads from outdated data center environments. One investment bank decided to implement the next version of its private cloud environment not because of the potential efficiencies that also came along but because of the additional security.

- They roll out functionality in stages, so they can learn and build capabilities over time; in particular, they focus on creating an increasingly attractive developer experience with each release, so they create demand for the new platform.

- They build and automate new operations and support processes for the cloud platform so that it does not replicate the inefficiencies of the legacy platform and can scale efficiently.

- They create a focused team with top management support to specify, design, roll out, and operate the new platform so that it does not become an afterthought compared to existing environments.

2. Use the Public Cloud Selectively and Intentionally

A few years ago, account executives from a major public cloud provider called on the head of infrastructure at one of the world’s largest banks. They made a compelling presentation about how much they had invested in their cloud platform and the richness of its capabilities. As the pitch concluded, the head of infrastructure complimented the account team but had just one question: “I have data that can’t leave the United States. I have data that can’t enter the United States. I have data that can’t leave the European Union. I have data that can’t leave Taiwan. If I used your service, how would I know all those things would be true?”

One of the account team replied, “That doesn’t make any sense. Why would you want to run your business that way?”

To which the head of infrastructure said, “You seem like nice boys. Why don’t you come back in a few years when you have this figured out.”

It is for just these reasons that large enterprises have been reluctant to turn to public cloud services (especially infrastructure). They remain unconvinced that providers have figured out how to provide enterprise-grade compliance, resiliency, and security. Our survey results back this up. On average, companies are delaying the use of cloud computing by almost 18 months because of security concerns. In many interviews, we heard chief information officers (CIOs) and CISOs express concerns that malware could move laterally from another company’s public cloud-hosted virtual servers to their own because they would both be running on the same underlying infrastructure.

Nevertheless, if companies put in place the mechanisms to channel the right workloads to the appropriate cloud services, then it is perfectly possible to make exciting capabilities available while continuing to protect important information assets.

Therefore, IT organizations cannot take an absolutist position against public cloud services. Given the resources that providers are investing in new capabilities and the attractive costs—especially during the price war that is being waged as we write—there will continue to be strong demand for these services. As a result, IT organizations that try to prevent their use may find themselves in a challenging position. From one direction, business partners will see them as being obstructive—grist to the mill for those who constantly perceive cybersecurity as blocking value creation. From the other direction, end users and even application developers will simply work around or ignore corporate policies given how easy public cloud services are to procure. If your users have credit cards, they have access to the public cloud, but without guidance they may select services with weaker security capabilities and use these services without the additional security controls that enterprises can apply.

An approach that protects an institution’s important information assets while supporting innovation by providing access to public cloud services must take into account four factors:

- The sensitivity of information assets. There are huge variations in the sensitivity of corporate data, as we discussed earlier in the book. Some workloads process incredibly sensitive customer information or intellectual property (IP). Others process important information, but disclosure would not have grave business consequences.13 Ensuring that only those less sensitive workloads are directed to the public cloud would ease the concerns of cybersecurity managers.

- The security capabilities of specific public cloud offerings. There are also huge variations in the security models for public cloud offerings. At one end of the spectrum there are security practices that might be politely described as “consumer grade,” while at the other end we see providers employing robust perimeter protection, encrypting data at rest, and placing strict controls on how and when operators can access their customers’ data. Choosing the right vendor is therefore paramount.

- The security of comparable internal capabilities. When the business asks cybersecurity teams about the security of public cloud offerings, the response has to be “compared to what?” There are many situations where a public cloud offering might be riskier than a service from the company’s strategic data center but far more secure than a comparable service hosted by an internal regional IT organization that has been starved of investment for years.

- The possible enhancements of public cloud offerings. Many start-ups are racing to develop the technology that will allow enterprises to use public cloud offerings securely by encrypting data transmitted to cloud providers or entire cloud sessions and allowing enterprises to retain the keys required for decryption.

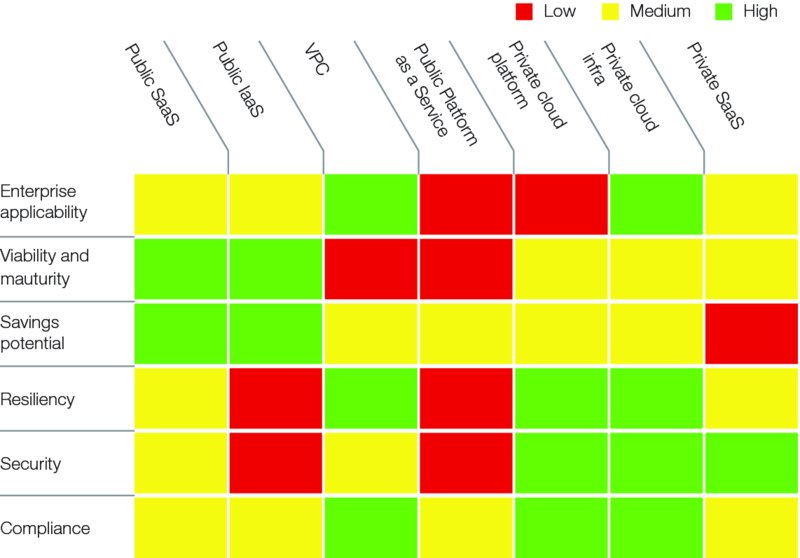

A transportation company seeking to develop a cloud strategy identified all the workloads required to run its business and assessed each one in terms of critical requirements, including the sensitivity of data processed. It was then able to map each workload to a hosting model: traditional hosting, private cloud, Software as a Service (SaaS), or public cloud infrastructure (Figure 5.3). For workloads that could be hosted on SaaS or public cloud infrastructure, the company identified specific vendors that could at least match its own internal capabilities in terms of security and then examined ways to put additional software around these public offerings to enhance security further.

FIGURE 5.3 How to Assess Public Cloud Services versus Other Options
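The workload-by-workload mapping described above can be thought of as a small decision rule. The sketch below is only an illustration of that kind of rule: the inputs and the order of the checks are assumptions made for the example, not the transportation company's actual criteria.

```python
def choose_hosting(sensitivity, can_be_virtualized, saas_exists,
                   vendor_matches_internal_security):
    """Map one workload to a hosting model using illustrative, assumed rules.

    sensitivity: "high", "medium", or "low" (hypothetical scale).
    """
    if not can_be_virtualized:
        # Workloads that cannot move to shared platforms stay where they are.
        return "traditional hosting"
    if sensitivity == "high" or not vendor_matches_internal_security:
        # Keep sensitive data, or data no vendor can protect as well, in-house.
        return "private cloud"
    return "SaaS" if saas_exists else "public cloud infrastructure"
```

Writing the rule down, even in this simplified form, forces the "compared to what?" question to be answered explicitly for each workload.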

3. Build Security into Applications

Application security mattered less when employees accessed corporate applications from desks inside the company’s buildings. Today, companies cannot control such a clear bricks-and-mortar perimeter, and highly functional applications are available to both customers and employees who expect to access such applications anytime, anywhere. The result is that hackers can use application-level attacks, gaining entry through the web browsers that provide a gateway into the application. For example, hackers were able to lift customer data from one bank because developers exposed sensitive information via the browser bar in the company’s online banking applications.

To the layman, or the board member with no IT background, this sounds like a rudimentary error by the developer. The reality is that application developers often do not understand how to write secure code or how to test for it; most computer science courses do not require much information security training, and what does exist tends to be a checkbox set of practices rather than an immersive course that teaches the problem solving required for secure application development. Many development teams, for example, do not follow best practices such as including libraries that scan for known code vulnerabilities or running those scans as part of their nightly build. Instead, security functionality tends to slip down the agenda as understaffed teams rush to deliver business functionality. Even intelligence agencies have asked for rigorous password capabilities to be deprioritized so that time could be spent improving an application’s user interface.

On top of all this, many companies have weak identity and access management (I&AM) capabilities for validating which users can access which application. Applications may use home-brewed or out-of-date I&AM and a wide variety of different capabilities. This makes it even harder to enforce common password policies and requires users to remember a variety of passwords. One industrial company found that senior executives had to use as many as 20 different passwords; no surprise that some of them wrote them down in a notebook or saved them in files stored on their desktop.

The final problem for IT is that legacy applications can predate the development of secure coding practices. An insurance company reviewed its cybersecurity setup and found that it was doing a pretty good job of securing new applications, but the backlog of applications that could not be made secure cost-effectively ran into the thousands.

Although enhancing application-level security can be an even more challenging organizational change than addressing other technology issues such as networks or end-user devices, some companies have found effective practices that can help them improve their position:

- Incorporate instruction on secure coding practice and security problem solving into development training from day one; one financial institution devotes nearly 25 percent of developer training hours to security topics.

- Build a rich set of security tools and application program interfaces (APIs) (for code scanning, security monitoring, and I&AM) into the development environment. This helps minimize the extra time developers need to secure applications.

- Constantly challenge the application portfolio using penetration testing. One bank has a team of 50 security specialists who do nothing but try to break into its applications.

- Use the creation of agile teams as a forcing device for change, and make sure that security requirements are incorporated into agile methodologies.

- Perhaps most importantly, get serious about lean application development. Applying lean operations techniques to application development and maintenance can improve productivity by 20 to 35 percent, but it also reduces security vulnerabilities in application code by bringing all the stakeholders on board early, stabilizing requirements, and putting quality first throughout the development and maintenance processes.14

4. Move to Near Pervasive End-User Virtualization

For all the creativity and resourcefulness of cyber-attackers, one common situation remains the starting point for many attacks. The attacker sends an employee a phishing e-mail and the employee clicks the link, which takes him to a website that installs malware on his device. The attacker is in.

Cybersecurity teams used to rely on antivirus software to protect desktops and laptops from malware, but two developments have reduced the effectiveness of that model. Advances in malware technology have meant more and more of it can slip past antivirus software, and as employees expect to work anywhere, companies have to manage new types of client devices with new types of security vulnerabilities, specifically mobiles and tablets. As a result, sophisticated institutions are finding they have to move to virtual end-user environments, where both traditional and mobile end-user devices simply display information and collect user input but store very little.

For some time, leading CISOs have said they continue to use antivirus software mostly for cosmetic purposes. Antivirus packages used to compare a piece of the software’s executable file against a database of known malware to determine whether the software was safe. Now that cyber-attackers have developed malware that changes form over time, it is no longer effective to compare it against a blacklist. As a result, many CISOs say they continue to pay for antivirus tools mostly to appease regulators or just in case they happen to foil a simplistic attack that would not have been prevented otherwise. The debate over antivirus effectiveness came to an end when executives at Symantec, a leading developer of antivirus tools, announced the death of the antivirus model in the Wall Street Journal.15
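A toy example shows why blacklist matching breaks down once malware changes form. It assumes, as a deliberate simplification, that the blacklist stores a SHA-256 fingerprint of the whole file; real antivirus products use richer signatures, and the payload bytes here are harmless placeholders.

```python
import hashlib


def fingerprint(payload: bytes) -> str:
    """Return a SHA-256 hex digest, standing in for a malware signature."""
    return hashlib.sha256(payload).hexdigest()


# Placeholder bytes standing in for a previously catalogued malicious file.
known_sample = b"placeholder-for-known-malware"
BLACKLIST = {fingerprint(known_sample)}


def is_blacklisted(payload: bytes) -> bool:
    """True only if the payload's fingerprint exactly matches a known one."""
    return fingerprint(payload) in BLACKLIST


# A variant that differs by a single byte yields a completely different fingerprint.
variant = known_sample + b"\x00"
```

In this sketch `is_blacklisted(known_sample)` holds while `is_blacklisted(variant)` does not, even though the two differ by a single byte. Malware that rewrites itself on each infection exploits the same gap.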

Just as antivirus tools became less effective for desktops and laptops, the rise of enterprise mobility presented a whole new challenge. Back in 2009, nobody knew what a tablet was. Today, they are pervasive in executive suites and among mobile professionals. By 2017, enterprises will account for nearly 20 percent of the global tablet market of nearly 400 million units.16

Again and again, CISOs have expressed their nervousness about the immaturity and fragility of the software that connects smartphones and tablets to their corporate networks. Integrating mobile device management, virtual private networks (VPNs), and other software from multiple vendors creates seams and crevices that attackers can exploit. In addition, the complex manual processes required to use mobile devices in an enterprise environment increase the likelihood that security policies will not be implemented correctly on any given device, creating additional footholds for attackers. Bring-your-own-device schemes (in which employees use their own tablets and smartphones for work purposes) and the bewildering proliferation of devices and client OS versions (especially within the Android ecosystem) make secure operations even more difficult.

These challenges exacerbate tensions between embracing mobility and enabling innovation on the one hand and securing the devices and the network on the other. One insurance company CISO had to field calls from board members on successive days, first asking him to promise that a breach couldn’t happen and then protesting that he hadn’t approved the use of tablets for board documents.

Virtualize the Client

In a virtualized model, the user’s device (desktop, laptop, tablet, or smartphone) performs no processing; it just captures input and displays output (e.g., video, sound). The underlying OS is run on a server in a corporate data center, which can be effectively protected and means that the end-user’s device is functioning inside the network perimeter at all times, irrespective of where the user is physically located. It can be protected by web filtering, intrusion detection, and antimalware controls, so sensitive data can remain secure. Even if the device does become compromised with malware, it is easy to flush the OS. Nor is there any need for the device to connect to the enterprise via a VPN, with all the complexity and absence of transparency that VPNs imply. In addition, no data sits on the client device, so there is almost no risk if a device is lost or stolen.

The overwhelming majority of enterprises still use mobile device management to provide basic e-mail, contact, and calendar synchronization for tablets and smartphones. As users demand more sophisticated services on their mobile devices (e.g., access to enterprise applications and shared document repositories), companies may have no choice but to bring virtualization to the smartphone and tablet.

Virtualization does not just apply to mobile devices, however. Increasingly, companies are applying the same principles to desktop clients. This brings additional benefits, not least to the user experience. Getting started on a new device becomes nearly instantaneous. A user’s complete desktop environment (applications, settings, and data) can follow her from location to location, removing the need to travel with a laptop. Power users, such as traders, engineers, and data analysts, also find they can enjoy much faster processing performance as demanding applications run on powerful servers rather than on their own desktop machines.

Financial institutions are ahead of the curve, and many are in the process of moving 70 to 80 percent of their end-user environments to virtual desktops, largely to improve control and reduce risk. In most cases, these banks are starting with high-risk users, such as financial advisers in wealth management (who may be independent agents rather than employees). A relatively small percentage of users who travel frequently will continue to have traditional laptops, though some IT executives believe even this will become less necessary over time as wireless becomes pervasive.

Pushed by their financial services clients, some of the largest law firms have also made aggressive moves to virtualize their desktop infrastructure, again for reasons of risk and control. One large New York law firm has moved entirely to virtual desktops: when a lawyer works from home or a client site, she simply logs into a virtual desktop via a web browser. The law firm shut down its VPN a couple of years ago.

Most infrastructure leaders we spoke to report good experiences with early virtual desktop deployments, and enterprise-grade levels of performance and stability are readily achievable. There is far less consensus, however, around the economics. Some companies believe virtual desktops cost about the same as, or a little more than, traditional ones because of back-end hosting costs. This has led them to limit their rollout to specific segments. Others, however, say they have achieved savings of 30 percent over traditional environments because of lower installation and support costs and therefore plan to migrate much of their user base to virtual desktop services over time. The difference between these two views on costs seems to stem from variations in virtual desktop solutions (i.e., whether users get a “thin” device or a full-fledged laptop, how much storage each user gets allocated) and the efficiency of back-end hosting environments.

Forcing users to access enterprise services via a virtual device would create a very different user experience with a hard separation between their work and personal information, but it would enable enterprises to provide extremely rich mobile services and feel confident in their security.

5. Use Software-Defined Networking to Compartmentalize the Network

In a world where nobody can eliminate breaches, it becomes especially important to contain the attacker’s ability to move from one infected node of a technology network to the next. This “lateral movement,” as it has become known, is getting harder to prevent as the corporate network environment has evolved, leaving IT organizations with a tough choice between preventing attackers from expanding their reach and introducing too much operational complexity.

Historically, as companies consolidated their IT organizations, they stitched enterprise networks together from departmental networks and networks picked up in various acquisitions. Different networks used different architectures, different technology standards, and, in some cases, different protocols—and they were walled off from one another by gateways and firewalls. This created complexity and inefficiency and hurt network performance. Network managers had to make manual configuration changes to install new applications and perform detailed analyses to identify the root causes of slow traffic between sites managed by different business units.

Over the past 10 years, most large enterprises have simplified their networks. One global financial institution went from 25 networks to just 2, saving more than $50 million a year. A pharmaceutical company created a global flat network because it wanted to allow seamless videoconferencing between any two offices globally, from Peru to Madagascar (without in fact assessing how many executives in Peru needed to videoconference with their counterparts in Madagascar). Simplification had a downside, though: it created more entry points into these companies’ networks.

As companies integrated their supply chains with vendors and exposed more of their technology capabilities to customers, they created more direct network connections between themselves and their business partners. This allowed investment banks to give prime brokerage customers the performance they required and allowed pharmaceutical companies to collaborate closely with research partners. However, it also created more opportunities for attackers to jump from one company’s network to another’s and created more network entry points that network or security operations teams had to monitor. Once an attacker gains entry to the environment, he or she can see all the other systems, which makes them easier to analyze and compromise. The high-profile breach of Target occurred partly because one of its vendors, which needed access only to the billing system, also had access, unwittingly, to the point-of-sale system. Once an attacker had breached the vendor’s security systems, that attacker suddenly had access to data inside Target that never should have been visible.
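The effect of a flat network on lateral movement can be illustrated with a small reachability calculation. The sketch below is a toy model, not a description of any real environment: the system names and the two network layouts are hypothetical, chosen only to mirror the vendor scenario described above.

```python
from collections import deque


def reachable(links, start):
    """Return every system reachable from `start` by following allowed links."""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nxt in links.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen - {start}


# Hypothetical internal systems (illustrative names only).
internal = ["billing", "point_of_sale", "hr", "payments"]

# Flat network: the vendor connects to billing, and every internal
# system can talk to every other internal system.
flat = {"vendor": ["billing"]}
for a in internal:
    flat[a] = [b for b in internal if b != a]

# Segmented network: the vendor connection ends at billing.
segmented = {
    "vendor": ["billing"],
    "billing": [],
    "point_of_sale": ["payments"],
    "payments": [],
    "hr": [],
}

flat_exposure = reachable(flat, "vendor")
segmented_exposure = reachable(segmented, "vendor")
```

In the flat layout a compromised vendor connection exposes all four internal systems; in the segmented layout it exposes only the billing system the vendor actually needed.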

A particularly important potential vulnerability is having the security controls themselves sit on the same network they protect. This is one of the easiest things to segment, and it is essential to do so; otherwise, the first thing a sophisticated hacker will do once inside the network is disable the organization’s ability to track where he or she is.

Find a Middle Ground between Security and Simplification

The traditional approach to containing lateral movement is network segmentation, but this can seem like a reverse step, removing some of the hard-won benefits of network simplification. Separating two business units’ servers into two separate network segments does dramatically reduce the risk of an attacker moving from one server to another, but it also means that the security team will have to make firewall changes (with all the time and effort that implies) before those two business applications can share data.

Many companies have sought to strike a middle ground and put in place approaches that provide some degree of segregation for their most important information assets without adding too much operational complexity. Some have focused narrowly on protecting a small class of the most important assets. An industrial company decided to divide its networks into a “higher-security” network for its most sensitive IP and a lower-security network for everything else. Other companies use less onerous techniques that rely on firewalls and gateways to demarcate networks. One bank used network segmentation in a targeted way to provide differential protection to some of its most important information assets. It created a separate network zone just to host systems supporting its payments processes, which it deemed sufficiently sensitive to warrant the extra protection, while its far less sensitive ATMs remained outside the zone.

Ultimately, all these tactics are half measures, neither meeting the objectives of operational simplicity nor sufficiently reducing lateral movement. The answer is for companies to adopt software-defined networking (SDN). SDN separates the decisions about where network traffic is sent from the underlying system that transmits the traffic (i.e., the control plane from the data plane). Practically speaking, SDN allows an organization to set up a network in software rather than in the configuration of the underlying hardware. This means that networks can be managed via a set of APIs, and a library of different network configurations can be stored for reuse.
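The separation of control plane from data plane, and the idea of a reusable library of configurations, can be sketched in a few lines. This is a conceptual illustration only, not a real SDN controller or any vendor's API: the `Switch` and `Controller` classes, the zone names, and the configuration names are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Switch:
    """Data plane: forwards or drops traffic using only the rules it was given."""
    name: str
    allowed: set = field(default_factory=set)  # (source_zone, dest_zone) pairs

    def forwards(self, src_zone, dst_zone):
        return (src_zone, dst_zone) in self.allowed


class Controller:
    """Control plane: decides the rules centrally and pushes them to switches."""

    def __init__(self, switches):
        self.switches = list(switches)
        self.library = {}  # named, reusable network configurations

    def save_config(self, name, allowed_pairs):
        self.library[name] = frozenset(allowed_pairs)

    def apply_config(self, name):
        rules = self.library[name]
        for switch in self.switches:
            switch.allowed = set(rules)


# Hypothetical switches and zones, for illustration only.
switches = [Switch("dc-east"), Switch("dc-west")]
controller = Controller(switches)
controller.save_config(
    "open",
    {("office", "web"), ("office", "payments"), ("web", "payments")},
)
controller.save_config("locked_down", {("office", "web")})

# One call reconfigures every switch; no device is touched by hand.
controller.apply_config("locked_down")
```

The point of the design is that segmentation policy lives in one place as data. Switching from one stored configuration to another is a single call rather than a series of manual changes on each device.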

No one should be under any illusion—implementing SDN is a dramatic change that will require investment in new hardware, new operational processes, and new management capabilities. But it also brings huge efficiencies, saving as much as 60 to 80 percent of data center network costs thanks to vastly improved productivity and the use of commoditized hardware. It also helps enormously in the fight against lateral movement by making it much easier to set up network zones and segments in a rapid, automated way, thereby eliminating the tough choice between efficiency and compartmentalization.

6. Use Dedicated Document Management and Workflow Tools Instead of E-mail

Some CISOs say that they are quietly confident about how their company protects the structured data it stores in databases, but have sleepless nights about the extremely sensitive information that executives pass back and forth to each other via e-mail attachments. Documents make up a growing share of their company’s data, and many of the controls they use to protect structured data just do not apply. A few companies have started to get control of this type of data by creating and mandating the use of sophisticated capabilities for managing sensitive documents. More need to catch up with them.

Stories about the theft of millions of customer records often reach the front pages of the broadsheet press. These are embarrassing for the companies concerned; however, some of a company’s most sensitive and valuable corporate strategic information sits around in documents or just in plain text. Senior executives communicate with each other about acquisition targets, markets to enter, business strategies, layoffs, negotiations, divestitures, and so on, using presentations they create on their desktops and share via e-mail. Managers and analysts collaborate on business plans, valuation models, financial forecasts, and pricing strategies using spreadsheets they, again, create on their desktops and share via e-mail. Often, once all these incredibly sensitive documents have been created, they languish either in e-mail inboxes or in file shares.

Documents like this can be extremely complicated to secure because companies have little control and no visibility into who opens, alters, and transmits them. In many cases, entire departments have access to shared folders that hold sensitive documents. Any executive or manager can access an important strategy document and, perhaps without thinking about how sensitive it is, forward it to dozens of people. The more places a document exists in a company’s environment, the more likely an attacker will be able to find it. The insider threat is even more pronounced—the more people have access to a document, the greater the chance that one of them uses it inappropriately.

The companies that are making progress in addressing this issue have fundamentally changed the way their staff work with documents. Law firms may have a reputation for being technology laggards, but in this area they have made the most progress. Spurred by the demands of their clients, most of the largest law firms now use document management tools extensively. If a lawyer works on a client matter, she has no choice but to create all the relevant documents within the document management platform. That means that only a small number of her fellow lawyers can access any set of documents, reducing the impact if attackers compromise any one lawyer’s credentials. Perhaps more importantly, this rigor in document management provides the firm with visibility into who is accessing, transmitting, and altering client materials, which makes it much harder for insiders to exploit client information undetected. Document management systems can also insert tags about the sensitive information any given document contains into its metadata. This makes it far easier for data loss prevention (DLP) tools to stop users from e-mailing or printing a document that they should not.
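To make the link between metadata tags and DLP concrete, the following minimal sketch shows how a tag stored with a document could drive a block-or-allow decision. It assumes a deliberately simple policy model; the tag names, the action names, and the `dlp_allows` function are hypothetical and do not correspond to any particular product.

```python
from dataclasses import dataclass, field


@dataclass
class Document:
    title: str
    tags: set = field(default_factory=set)  # sensitivity tags stored as metadata


# Hypothetical policy: the actions that each tag forbids.
BLOCKED_ACTIONS = {
    "client-confidential": {"email_external", "print"},
    "internal": {"email_external"},
}


def dlp_allows(doc, action):
    """Allow the action unless any tag on the document forbids it."""
    return all(action not in BLOCKED_ACTIONS.get(tag, set()) for tag in doc.tags)


memo = Document("Merger term sheet", {"client-confidential"})
newsletter = Document("Office newsletter")
```

Because the decision depends only on the tags, the DLP tool does not need to inspect or interpret the document's contents at the moment a user tries to print or send it.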

Other companies go even further in protecting their most sensitive information. An oil and gas company moved discussions about negotiation strategies for extraction rights out of clear text in e-mail and into documents secured with digital rights management (DRM), reducing the risk that an unauthorized party could see the maximum amount it bid for a property. A manufacturing company is implementing DRM for the materials containing technical specifications it sends to its suppliers. This makes it much harder to forward this information inappropriately because DRM prevents users from opening, printing, or copying a file unless they have authorization from the document’s creator.

As with the other security improvements discussed in this chapter, protecting unstructured data requires dramatic changes far beyond the cybersecurity function. That said, it does not have to mean a worse user experience. In a world where everyone complains about e-mail overload, how much frustration results from version upon version of a single document clogging up inboxes? How much time do executives, managers, and other professionals spend trying to find the latest version of a document or extracting comments from complicated e-mail threads? The browsing, tagging, and search features of a well-designed document management capability make it much easier to find the right document, and collaboration tools make it much easier to aggregate and act on feedback. It is a change of mind-set, but one that can prove popular once adopted.

ENGAGE WITH IT LEADERS TO IMPLEMENT REQUIRED CHANGES

For obvious reasons, relatively few novels focus on enterprise IT or have a head of IT infrastructure as the protagonist. The Phoenix Project,17 a business book masquerading as a novel, clearly harks back to 1980s management classic The Goal.18 Just as The Goal describes how a beleaguered operations executive learned how to remove constraints in order to deliver finished goods efficiently and effectively enough to meet customer demands, The Phoenix Project describes how a beleaguered IT executive learned how to remove constraints in order to deploy and scale a critical e-commerce platform quickly enough to save the business. As the story begins, the CISO himself is one of the biggest roadblocks, constantly raising issues just before a release or demanding additional controls that had not been contemplated in the original architecture. His outdated attire serves as a metaphor for his outdated mind-sets. By the end of the book, just as all the other IT executives and their business partners have learned to operate in new ways, the CISO has both upgraded his wardrobe and started to engage with the rest of the team as a valued peer.

The book’s authors are probably still smarting from a few run-ins with security teams over the years, but the CISO’s evolution over the course of the story is a good road map for how cybersecurity teams broadly, and CISOs in particular, should engage with their colleagues on the IT management team. None of the improvements described in this chapter can be sponsored or orchestrated by the CISO; executives responsible for application hosting, application development, enterprise networking, and end-user services will have to be the driving force behind any changes. That said, the security team has both the right and the responsibility to initiate discussions about how structural issues across IT introduce vulnerabilities, to weigh in on the business case for major initiatives, to shape new architectures from the outset to make sure they can be protected, and to serve as a thought partner to fellow executives in discovering ways to make IT faster and more efficient. CISOs also need to encourage more of a resiliency culture across IT by sponsoring technical security training for IT personnel, by integrating security managers into application and infrastructure decision making, and by investing in reporting to track progress in addressing technical vulnerabilities.

● ● ●

Insecure application code, servers with unpatched operating systems, flat network architectures—the typical enterprise technology environment is rife with security vulnerabilities. However, there is an emerging technology model characterized by lean application development processes, cloud-based hosting models, virtual clients, and software-defined networking. It has the potential to improve efficiency, agility, and security compared to what exists in most companies today.

Along with risk management and delivery, cybersecurity also has influencing responsibilities. A company’s overall technology architecture can have a profound impact on its ability to protect itself while continuing to drive technology innovations; therefore, applying these responsibilities to the rest of the IT organization purposefully and ambitiously will be a major factor in achieving digital resilience. Naturally, this will require CISOs and the cybersecurity team to work closely and effectively with the rest of the IT team. It will also require CIOs, Chief Technology Officers, and other senior technology executives to prioritize investments in more robust architectures—sometimes at the expense of tactical business requests—and create a culture of risk management and resilience throughout the IT organization.