Automating Analytics

Transformation without analytics is just digitization. Analytics makes it transformative.

David Sweenor, Alteryx

Your business changes. It is inevitable. Consumer trends shift, supply chains evolve, and technology advances. You rely on sales reports, market intelligence, and performance indicators to track the health of your business, but how do you keep track of it all? Do you have a method for seeing all the data flowing through your organization? Is it easy to use? Is it fast? Does it get to the right people and systems at the right time? How you get information and insights from all that data, and whether you do it quickly enough to make decisions that keep your business moving forward, differentiates you from the competition. It is a constant struggle to keep up with the huge amounts of data flowing through and around your organization. Successful industries and organizations understand the need for prompt, reliable insights, and they realize that these insights are founded on fast, reliable analytics.

Creating insights is only part of the equation. How do you get those insights to the people who need them? Business information isn’t just for executives and leadership. What if your employees could identify bottlenecks in your manufacturing process by understanding insights hidden within your data? Could your marketing team use data to identify personas and trends in customer habits to better align their campaigns? Could your HR team rely on personnel data to identify hot spots for turnover or target new talent? Data is everywhere in your organization, and the insights within it can benefit the company at every level. So, the final question you have to ask yourself is this: “How can I get my employees the access, skills, knowledge, and tools they need to make data-driven decisions that will improve my organization’s business outcomes?”

One solution that many organizations and businesses rely on is analytics software combined with data and analytics experts to transform data into insights. While this may be a common solution, it creates a gap in the analytics workflow. In this scenario, data analysts and experts lack the deep domain knowledge of your business to create the insights your business may need. On the other hand, those who have the knowledge of the business usually lack the tools or analytics expertise to do their own analysis. This gap can result in analytic output that misses key business insights or arrives too late to be actionable, resulting in missed opportunities or revenue being left on the table.

Take a look at your own organization. Does data-driven insight flow smoothly through it? Can anyone on any team access the information necessary to make important decisions related to their job? How long does it take to get from your transactional systems to decision-making insights? How easy is it to update your analytic processes if your business changes? Does your leadership team have access to the transformative insights they need? Understanding the typical flow of data (which is ultimately transformed into actionable insights) through an organization will help illustrate the bottlenecks and pitfalls of legacy data and analytic processes, a topic we’ll dig into in the next section.

A more effective approach is to upskill your workforce so they’re able to pair their business domain expertise with reliable data analysis. The result is an organization whose employees are empowered to use and analyze data to accelerate and improve decision making. Giving nontechnical users access to information systems, along with the power to do analysis and develop insights on their own, can produce unique and meaningful insights that drive the business forward.

But how do you do that? You provide a bridge that gives the individuals running the business the capability to derive their own insights without the need for complex tools, code, or technical expertise. By delivering data and analytics capabilities to your workforce, you’re creating an environment of data and analytics democratization within your organization. This means less time is spent relying on outdated processes and waiting on experts to generate analysis results, and more time is spent finding insights across your business so you can achieve your strategic objectives. With these resources, domain experts have the ability to do data preparation, analysis, predictive analytics, and insight generation on their own.

What does this mean for your business? It means that decisions can be made faster and closer to the point where they can be most impactful. It means that your company saves time, money, and effort by empowering your employees with the tools they need to better understand how your business works and to act upon insights that will make it run more efficiently.

So how do you achieve data democratization and empower your employees? Fortunately, platforms are already available that expand the number of people who benefit from analytics, moving this expertise beyond data scientists so that anyone within the organization can transform data into insights. While no tool will completely eliminate the need for highly skilled data analysts and data scientists, there are several key things that can be done to improve the flow of data from transaction to insight within your business. These tools can also improve the efficiency and capabilities of your business analysts.

To this end, we’ll illuminate how analytics automation can improve or eliminate legacy data-process issues and enable the gains described above. Analytics automation focuses on a human-centered approach to data, analysis, and insights. In this article, we will see how it makes the data flow process easier to understand and work with, enabling data and analytics democratization. We’ll examine how data flow automation reduces labor-intensive tasks, saving time and effort. We’ll also uncover how analytics automation can provide continuous and easily maintained insights to employees and leadership. Through all of these transformational capabilities, we’ll see how analytics automation helps to create an agile organization that can better serve its customers and clients while improving financial success.

The Critical Role of Data in Modern Organizations

We all know that data is important, but why is data so important to your business? Properly analyzed data transforms into information and actionable insights, which can be used for more effective decision making. It can tell you how your business is performing. It can highlight patterns and hidden trends, identify positive and negative influences, give insights on what steps to take in the future, and help you choose the best option among competing alternatives. It is the lifeblood of decision making, and without it, you are simply guessing or relying on intuition. A report in the Harvard Business Review cited an executive survey in which 70% of organizations with developed analytics reported improvements in productivity and financial performance, as well as reduced risk and better decision making.1

But if information is so important, why is it so difficult, expensive, and time-consuming to extract it from data? To understand this, we need to understand how data travels through an organization to be transformed into information and insights, ultimately to be used to make better business decisions.

After all, if your business is not reacting and changing as a result of analytics, what is the point in having them?

The first place to examine is the data collection. It might be simple enough to look at an organization and guess at where its data comes from. Stores generate sales data. Schools generate student data. Hospitals generate patient data. People and equipment generate data all the time with their connected devices. But this is just scratching the surface. Transactional systems within an organization do not and cannot operate alone. Let’s take a closer look at a manufacturing example.

A typical company producing a product or service has two major goals: meet customer demand and decrease costs. Meeting customer demand means producing enough to fill every order without producing more than is needed. This means finding the appropriate number of machines, staff, and working hours to meet the demands of your customers while still keeping revenue higher than costs. But to truly understand the interwoven complexities of your business, you have to examine all the variables. Some costs are tangible, while others are not. This is where analytic insights can help you quickly identify inefficiencies, allowing for data-driven decision making and improving performance within the business.

Let’s dive a bit deeper into this example. As a leader in a manufacturing company, you generate profit by selling your product to consumers or other businesses. You probably have several metrics on whom to sell to, how much to sell, and at what price. You may also have insights into how your product or service is being used. While analytics can always be improved in these areas, let’s look at the other side of the coin: the costs. What is costing your company money? Are you spending too much on materials? Are the materials in or out of spec? Is there a better vendor to purchase from? Do you have the best employees in each position? Do they have the knowledge they need to improve performance in their jobs? There are many places where data can be accessed to improve the business, as noted in Figure 1. Let’s examine this further.

Figure 1. Examining how production data alone is not enough to fully evaluate a manufacturing firm

Imagine what would happen if your organization looked beyond just the manufacturing execution system (MES), enterprise resource planning (ERP), and customer relationship management (CRM) data related to creating your product. There are many places you could use data to reduce costs, optimize processes, and improve efficiency. Is your production line running through the night? How many extra employees and how much additional electricity and water are required to run during these off times? Should the manufacturing environment be adjusted based on weather conditions? Is this extra cost being covered by how much is produced? Is your equipment breaking down unexpectedly? To get these answers, you need to combine your financial data with HR, equipment, facility, and utility costs over time. You need to be able to compare production returns by the hour. Can your business do this now?
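To make "compare production returns by the hour" concrete, here is a minimal sketch that lines up hourly output with hourly labor and utility costs and flags the hours that lose money. All figures, the flat per-unit margin, and the dictionary layout are hypothetical; in practice each dictionary would be fed from a different system (MES, HR/timekeeping, utility metering).

```python
# Hypothetical hourly records keyed by hour of day (24-hour clock).
production = {22: 120, 23: 115, 0: 90, 1: 85}                 # units produced (MES)
labor_cost = {22: 900.0, 23: 900.0, 0: 1100.0, 1: 1100.0}     # includes night premium (HR)
utility_cost = {22: 150.0, 23: 150.0, 0: 170.0, 1: 170.0}     # electricity and water
UNIT_MARGIN = 12.0  # assumed contribution per unit before labor and utilities


def hourly_net(production, labor_cost, utility_cost, unit_margin):
    """Return {hour: net contribution} for hours present in every source."""
    hours = production.keys() & labor_cost.keys() & utility_cost.keys()
    return {
        h: production[h] * unit_margin - labor_cost[h] - utility_cost[h]
        for h in sorted(hours)
    }


net = hourly_net(production, labor_cost, utility_cost, UNIT_MARGIN)
# Hours where running the line costs more than it returns.
unprofitable = [h for h, value in net.items() if value < 0]
```

With these made-up numbers, the hours after midnight (0 and 1) come out negative, which is exactly the kind of question the paragraph above raises about running the line through the night.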

What about your supply chain? How do you keep current on vendor pricing and the value each vendor delivers? How much money can you save by switching vendors? The materials may cost less, but you may pay more for shipping. For sensitive materials, how do the specifications compare between suppliers? You need supplier data, materials data, and shipping information for all of your potential suppliers, and you need to be able to combine this data quickly and regularly to make a comparison. This data is valuable to you as a leader, but imagine what it could enable your front-line employees to do. Could your purchasing director use quick insights from these data sets to make critical, timely, data-driven purchasing decisions? What if they could do that themselves, without a data analyst or advanced analytics knowledge? How much might your company save? What about other parts of your company?
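The vendor comparison described above boils down to combining price, shipping, and specification data into one decision. The sketch below assumes a simple landed-cost model (unit price times quantity plus a flat shipping charge per order) and a single tolerance spec; the vendors and numbers are hypothetical.

```python
# Hypothetical quotes combining pricing, shipping, and specification data.
quotes = [
    {"vendor": "A", "unit_price": 4.10, "shipping": 250.0, "tolerance_mm": 0.05},
    {"vendor": "B", "unit_price": 3.85, "shipping": 900.0, "tolerance_mm": 0.08},
    {"vendor": "C", "unit_price": 3.95, "shipping": 400.0, "tolerance_mm": 0.12},
]


def landed_cost(quote, quantity):
    """Total order cost under a simple model: materials plus flat shipping."""
    return quote["unit_price"] * quantity + quote["shipping"]


def best_vendor(quotes, quantity, max_tolerance_mm):
    """Cheapest landed cost among vendors that meet the specification."""
    eligible = [q for q in quotes if q["tolerance_mm"] <= max_tolerance_mm]
    if not eligible:
        return None
    return min(eligible, key=lambda q: landed_cost(q, quantity))


small_order = best_vendor(quotes, 2000, max_tolerance_mm=0.10)
large_order = best_vendor(quotes, 5000, max_tolerance_mm=0.10)
```

Here vendor B has the lowest unit price but the highest shipping charge, so A wins the 2,000-unit order and B wins the 5,000-unit order, while C is excluded on spec. That is the "cheaper materials, pricier shipping" trade-off the paragraph describes.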

This idea doesn’t just apply to purchasing, of course, but to every functional area of your organization. Employees, finance, utilities, equipment, users, shipping, and much more all generate data, and that data is vital to making the business successful. To understand the business as a whole, the individual data silos cannot stand alone. The data from each functional area must be combined to give an overall picture of the entire business. The ability to tie transactional and unstructured data together, and to combine both with relevant external data, is invaluable. Most importantly, the faster this data translates into decision-making insights, the faster your company can react and improve performance. Let’s examine a typical data-to-insights workflow within an organization to better understand the challenges and their impact on the business.

Challenges with Traditional Methods

So, what steps do you take to gather all of the data your organization creates and needs? How do you pull from transactional systems, record-keeping, external sources, and other places to get a complete and holistic view of your business? Is there data available and beneficial to your company that is not being used? In most organizations, a process called “extract, transform, and load,” or ETL, is used. In general, an ETL system will extract data from production systems on a scheduled basis. It will then transform that data into a form that is easier to read and use. Finally, it will load the data into a central repository, such as a data warehouse, where it can be used by other systems, including reporting and dashboard tools. ETL is an effective way to combine disparate data sources in a centralized area, but what are some of its pitfalls and drawbacks?
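The three ETL stages can be sketched in a few lines. This is an illustration, not any particular ETL product: the CSV text stands in for a scheduled export from a production system, and an in-memory SQLite database stands in for the data warehouse.

```python
import csv
import io
import sqlite3

# Extract source: a stand-in for a scheduled export from a production system.
RAW_EXPORT = """order_id,region,amount
1001, north ,250.00
1002,SOUTH,99.50
1003,north,410.25
"""


def extract(text):
    """Read rows from a CSV export; every value arrives as a string."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Normalize region labels and convert numeric fields."""
    return [
        (int(r["order_id"]), r["region"].strip().lower(), float(r["amount"]))
        for r in rows
    ]


def load(records, conn):
    """Write the cleaned records into a warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, region TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    conn.commit()


warehouse = sqlite3.connect(":memory:")  # in-memory stand-in for a data warehouse
load(transform(extract(RAW_EXPORT)), warehouse)

# Downstream reporting reads from the warehouse, not the source system.
totals = dict(
    warehouse.execute(
        "SELECT region, SUM(amount) FROM orders GROUP BY region ORDER BY region"
    )
)
```

Note that the transform step had to know in advance that region labels were inconsistent (" north " versus "SOUTH"); any data it was not written to handle never reaches the warehouse.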

Businesses collect huge amounts of data from multiple sources, as depicted in the transactional system segments on the left side of Figure 2. Generally speaking, an ETL tool will not be able to collect all of your data. There are simply too many sources in too many places for those maintaining the data to keep up with. So an organization will typically gather the key functional data sets and rely on other methods, such as data prep, analytics, or reporting software, to blend in additional data. This leaves the data warehouse potentially lacking in data that may be vital to the success of the business. This is especially true of data unrelated to the business process but relevant for analytics, such as geospatial, demographic, firmographic, and reference data.

Figure 2. An example of data flowing through a typical organization from transactional systems to reporting and insight generation

It’s not just missing data that can be a potential issue with a traditional process. Existing data and analytics software often requires specialized, trained employees familiar with the programming language involved. The process of pulling and transforming data can be complicated and time-consuming. There are obstacles to overcome, such as writing SQL code to access the data, performing complex calculations to prepare and clean the data, and applying knowledge of statistics and predictive analytics to transform the data into insights. The resulting code is often rigid and hard to change. It is written specifically for the needs at that time, but data can change. As processes evolve or new software or methodology is introduced into a business, the tables, fields, and data will change with them. It takes continuous work from the subject-matter experts, data architects, report writers, and more to keep the analytic pipeline accurate and reliable.
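The rigidity described above is easy to reproduce. In this sketch (SQLite used as a stand-in database; the table, columns, and upgrade are hypothetical), a report query hard-codes a column name, and a routine source-system change that renames the column breaks the report until someone rewrites the SQL.

```python
import sqlite3

# A report query written against the schema as it existed when the report was built.
REPORT_SQL = "SELECT cust_name, SUM(total) FROM sales GROUP BY cust_name"


def run_report(conn):
    """Run the hard-coded query; return (rows, error message)."""
    try:
        return conn.execute(REPORT_SQL).fetchall(), None
    except sqlite3.OperationalError as err:
        return None, str(err)


conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE sales (cust_name TEXT, total REAL);
    INSERT INTO sales VALUES ('Acme', 10.0), ('Acme', 5.0);
""")
before, _ = run_report(conn)

# Hypothetical source-system upgrade: the customer column gets a new name.
conn.executescript("""
    DROP TABLE sales;
    CREATE TABLE sales (customer_name TEXT, total REAL);
    INSERT INTO sales VALUES ('Acme', 10.0), ('Acme', 5.0);
""")
after, error = run_report(conn)  # the same query now fails
```

The data itself is unchanged; only its shape moved, yet every query, report, and downstream step that referenced the old name needs manual maintenance.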

Data standards and governance are also of vital importance. Imagine a company that sells electronics. There is likely data on sales and profit, of course, but there is also data on inventory management, shipping, returns, and more. Now, the software used in each of these areas can be (and most likely is) different. Names of products may differ between the systems. One system, like shipping, may aggregate data at the pallet level while another may track items piece by piece. Getting these systems to talk to one another and use common data definitions is often a huge challenge. The sheer volume and complexity of the data being generated in business today are quickly outpacing the original infrastructure used to analyze it.
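One common way to impose shared data definitions is a crosswalk that maps each system's local product name to a single ID, plus explicit unit conversions. The sketch below assumes hypothetical product labels and a fixed number of units per pallet; it reconciles a sales system that counts items with a shipping system that counts pallets.

```python
# Hypothetical crosswalk: (system, local product label) -> shared product ID.
PRODUCT_CROSSWALK = {
    ("sales", "Ultra HD TV 55in"): "TV-55",
    ("shipping", "TV55-UHD"): "TV-55",
    ("sales", "Soundbar Pro"): "SB-PRO",
    ("shipping", "SNDBR-PRO"): "SB-PRO",
}
UNITS_PER_PALLET = {"TV-55": 20, "SB-PRO": 60}  # assumed packing counts


def units_sold(sales_rows):
    """Sales reports individual items under its own product names."""
    totals = {}
    for name, units in sales_rows:
        pid = PRODUCT_CROSSWALK[("sales", name)]
        totals[pid] = totals.get(pid, 0) + units
    return totals


def units_shipped(shipping_rows):
    """Shipping reports pallets under different names; convert to items."""
    totals = {}
    for name, pallets in shipping_rows:
        pid = PRODUCT_CROSSWALK[("shipping", name)]
        totals[pid] = totals.get(pid, 0) + pallets * UNITS_PER_PALLET[pid]
    return totals


sold = units_sold([("Ultra HD TV 55in", 35), ("Soundbar Pro", 50), ("Ultra HD TV 55in", 5)])
shipped = units_shipped([("TV55-UHD", 2), ("SNDBR-PRO", 1)])
# Once both systems speak the same IDs and units, they can be compared directly.
gap = {pid: shipped.get(pid, 0) - sold.get(pid, 0) for pid in sold.keys() | shipped.keys()}
```

The crosswalk and pallet sizes are exactly the kind of governance artifact that must be maintained as systems change; without them, "Soundbar Pro" and "SNDBR-PRO" never line up.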

We’ve examined the typical methodologies of retrieving data within an organization, highlighting inefficiencies in the process. We need to find a solution that accesses and prepares data while providing the flexibility, speed, and ease of use to connect to other potential data sources, dynamically adjust to ever-changing business requirements, and promote data democratization within the organization. This is where analytics automation comes into play.

How Analytics Automation Improves Business Outcomes

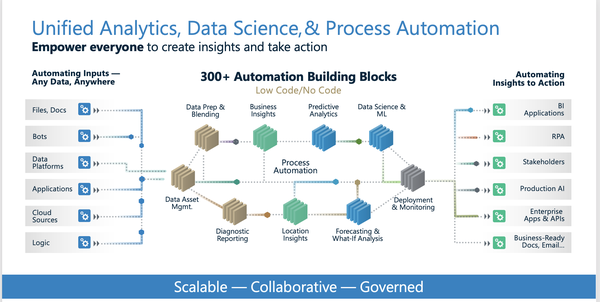

What is analytics automation, and how is it different from what you’re currently using? Just like typical ETL processes, analytics automation software can connect to multiple sources and types of data, then perform blends, joins, filters, sorts, cleansing, aggregations, and more. ETL stops at this level, whereas analytics automation continues to build on this functionality with analysis, reporting, forecasting, predictive analytics, and more. In fact, analytics automation provides a single platform that can connect to, prepare, blend, enrich, analyze, and transform data into insights with data science and machine learning, as shown in Figure 3.

Figure 3. Alteryx-provided model of the steps and capabilities built into an analytics automation platform

Reusable Workflows Unlock the Information Value of Insights

Many data and analytic processes are code-based, but analytics automation doesn’t work this way. In fact, this human-centered approach underlies the benefit of analytics automation. Instead of code, analytics automation relies on analytic workflows, which are a series of steps that transform data into insights. Once a workflow is built, its results can be fed into other workflows. This creates a reusable, repeatable set of analytic processes, allowing organizations to accelerate their analytic initiatives by avoiding time-consuming rebuilds of existing analytic processes.
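The idea of a workflow as a chain of steps whose output can feed another workflow can be illustrated in code, even though analytics automation platforms build this visually rather than in Python. In this sketch the step names, the 8% tax rate, and the `workflow` helper are all hypothetical; the point is that `regional_report` reuses the vetted `clean` workflow instead of rebuilding it.

```python
from functools import reduce


def workflow(*steps):
    """Chain steps so each one receives the previous step's output."""
    def run(rows):
        return reduce(lambda data, step: step(data), steps, rows)
    return run


def drop_missing_amount(rows):
    return [r for r in rows if r.get("amount") is not None]


def add_tax(rows, rate=0.08):  # hypothetical flat tax rate
    return [{**r, "amount_with_tax": round(r["amount"] * (1 + rate), 2)} for r in rows]


def total_by_region(rows):
    totals = {}
    for r in rows:
        totals[r["region"]] = totals.get(r["region"], 0) + r["amount_with_tax"]
    return [{"region": k, "total": round(v, 2)} for k, v in sorted(totals.items())]


# A vetted "clean" workflow that other workflows can reuse.
clean = workflow(drop_missing_amount, add_tax)
# A second workflow built on top of the first rather than rebuilt from scratch.
regional_report = workflow(clean, total_by_region)

orders = [
    {"region": "east", "amount": 100.0},
    {"region": "west", "amount": None},
    {"region": "east", "amount": 50.0},
    {"region": "west", "amount": 20.0},
]
report = regional_report(orders)
```

If the cleaning rules change, they change in one place, and every workflow built on `clean` picks up the fix.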

The primary steps needed to get the correct data together can be performed by business experts, and capabilities such as data prep, analytics, and predictive analytics can be made available so that others can work with the generated results. This ensures that the data being investigated is accurate while allowing flexibility for deeper analysis. Executives can now ask questions, and the employees closest to the data can use or build upon existing workflows to get a quick and accurate answer. Better still, workflows can be shared and made available to everyone throughout the organization once they are vetted. This means that accurate insights can be accessed by anyone on demand with little training, while ensuring that the organization’s best practices are being followed. This shortened path from data to analytic insights improves decision making by increasing data accessibility, specificity, flexibility, and speed.

Data collected by a company lacks intrinsic business value. It is not until the data is translated, organized, and manipulated into actionable information that business decisions can be made. The impact of delays is represented in Figure 4. A delay in insights, however small, could be the difference between making money and losing it.

Figure 4. Information value of insights decays over time

Automation decreases time lost, allowing leaders to make effective decisions sooner after the data is created. This nimbleness enables companies to quickly adjust to sudden fluctuations in processes or performance, as seen in Figure 5. It allows a company to adapt the business, shift resources toward what is working well, and respond to what is failing. It also pivots the company toward predictive insights rather than reactive ones.2

Figure 5. Value captured by reducing business event to action cycle
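Figures 4 and 5 describe decay and captured value qualitatively and do not specify a formula. As an illustration only, assume the value of an insight decays exponentially with a fixed half-life h, so an insight acted on after delay t retains V(t) = V0 · 0.5^(t/h). The value captured by shortening the event-to-action cycle is then the difference between the two remaining values. The half-life and dollar amounts below are hypothetical.

```python
def remaining_value(initial_value, delay_hours, half_life_hours):
    """Value left after a delay, assuming exponential decay with a fixed half-life."""
    return initial_value * 0.5 ** (delay_hours / half_life_hours)


def value_captured_by_speedup(initial_value, old_delay, new_delay, half_life_hours):
    """Extra value retained by shortening the event-to-action cycle."""
    return (remaining_value(initial_value, new_delay, half_life_hours)
            - remaining_value(initial_value, old_delay, half_life_hours))


# Hypothetical: an insight worth 10,000 at the moment of the event, 24-hour half-life.
# Cutting the delay from three days to one day keeps 5,000 instead of 1,250.
gain = value_captured_by_speedup(10_000, old_delay=72, new_delay=24, half_life_hours=24)
```

The exponential shape is a modeling choice; the qualitative conclusion (shorter cycles retain more value) holds for any decay curve that falls over time.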

Speed is twofold in analytics automation platforms. To start with, analytics automation cuts down on the number of tools and the amount of expertise needed to get from source data to insights. A typical data worker will, on average, connect to six different data sources, pull and process forty million rows of data, and produce seven unique outputs when performing one single analytic or data science activity.3 In addition, they will incorporate four to seven different tools to accomplish these tasks. Analytics automation simplifies these actions by providing the ability to connect to multiple sources, the ability to pull and process millions of rows of data in an easily managed and repeatable manner, and the ability to generate multiple types of results. It does all these things in a single end-to-end platform, simplifying the learning curve and reducing the need for additional tool-specific training.

Second, analytics automation automates the work itself. While the initial build of any data or analytic process requires time, analytics automation is designed with reusable building-block capabilities. It allows end users to build on existing validated workflows to generate new insights, and existing workflows can be modified without searching through lines and lines of code. A single tool eliminates the friction between the data workflow steps. Automated processes can be built to search, prepare, and analyze data, saving large amounts of valuable expert time. A 2019 survey by IDC indicated that these types of tasks cost analysts around 16 hours a week.4 That’s 40% of a 40-hour work week that could otherwise be used to provide valuable insights on how to improve your business.

Analytics automation doesn’t need to supplant your existing software; it complements it. It can access data from ETL-generated data warehouses and produce analytic-ready data sets that can be used by reporting and other applications.

Any centralized system will only go so far to address your specific business need. You have a problem to solve now and cannot wait for IT. Analytics automation is often thought of as the last mile of analytics.

When you start using analytics automation in your business, work with it alongside existing technology and methodologies. As time passes, evaluate where you can employ analytics automation to improve existing processes. Integrate it into your work and analytic processes. Where can it speed up insight production? Where can it quickly answer questions that currently take a long time? In time, the value of analytics automation will become apparent through time savings, automation, and ease of use.

Empower Business Users with Code-Free Visual Workflows

Analytics automation translates the steps of the data-to-insight process into a visual workflow with icons on a canvas, with each step further modifying the resulting data set. This graphical user interface is incredibly beneficial to your business. First, it is easier to understand and decipher than code. This means that any leader or employee can interface with data without a background in coding languages. Access to data analysis means that the decision making can move to the users closest to the business, empowering them with the insights to work more efficiently and effectively. This ease of use also makes it simpler to adopt and distribute analytics automation throughout your organization.

Data experts also benefit from analytics automation. They can generate workflows that perform business-specific tasks such as sales and use tax compliance, demand forecasting, fraud detection, capital budgeting, and auditing, and then save them for others to use. This ensures that best practices are built in when future analysis is done. It also enables business domain experts to interact with the data and explore on their own. Your data experts can also easily visualize the data preparation process and make quick changes, something that could take hours or days to do in a traditional code-based system. An example of a graphical user interface is shown in Figure 6.

Figure 6. Example of an analytics automation interface—Alteryx

Once an analytics automation process is built, the pipeline can be run on demand or scheduled to run at a specific date, time, and frequency. The graphical user interface has dozens of building blocks that allow you to:

Access and blend multiple types of data—both structured and unstructured

Prepare data by imputing missing values, removing outliers, and removing redundant variables

Enrich data by combining it with other data sets both internal and external to your organization

Blend your data together regardless of the file type or database structure in which it resides

Structure the data for analytics
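The preparation steps in the list above (imputing missing values, removing outliers, removing redundant variables) correspond to well-known techniques. The sketch below uses one common choice for each, picked for illustration rather than taken from any particular platform: median imputation, Tukey's IQR fences for outliers, and dropping constant or duplicated columns.

```python
import statistics


def impute_median(values):
    """Replace None with the median of the observed values."""
    observed = [v for v in values if v is not None]
    fill = statistics.median(observed)
    return [fill if v is None else v for v in values]


def remove_outliers_iqr(values, k=1.5):
    """Keep values within [Q1 - k*IQR, Q3 + k*IQR] (Tukey's fences)."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if low <= v <= high]


def drop_redundant(columns):
    """Drop constant columns and exact duplicates of an earlier column."""
    kept = {}
    for name, values in columns.items():
        if len(set(values)) <= 1:  # constant: carries no information
            continue
        if any(values == other for other in kept.values()):  # duplicate column
            continue
        kept[name] = values
    return kept


filled = impute_median([10, None, 14, 12])
trimmed = remove_outliers_iqr([10, 11, 12, 13, 14, 100])
table = drop_redundant({
    "price": [1, 2, 3],
    "price_copy": [1, 2, 3],
    "currency": ["USD", "USD", "USD"],
    "qty": [5, 6, 7],
})
```

In an analytics automation tool each of these would typically be a configurable building block; the code simply shows what such a block computes.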

Once the data is ready, you can then:

Apply descriptive analytics to understand what happened

Perform diagnostic analytics to identify trends and patterns and why something happened

Use predictive analytics to look ahead, plan for future changes, and understand what is likely to happen

Apply prescriptive analytics to recommend a specific course of action
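Two of the four levels above can be shown on a toy series: descriptive analytics summarizes what happened, and a simple predictive model extends the trend. This sketch fits an ordinary least-squares line by hand to hypothetical monthly sales; real forecasting would usually account for seasonality and uncertainty, and diagnostic and prescriptive analytics are not shown.

```python
import statistics

monthly_sales = [100.0, 110.0, 120.0, 130.0, 140.0, 150.0]  # hypothetical


def describe(values):
    """Descriptive analytics: summarize what happened."""
    return {"total": sum(values), "mean": statistics.mean(values),
            "min": min(values), "max": max(values)}


def fit_trend(values):
    """Ordinary least-squares line through (period index, value); returns (slope, intercept)."""
    xs = range(len(values))
    x_mean = statistics.mean(xs)
    y_mean = statistics.mean(values)
    slope = (sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, values))
             / sum((x - x_mean) ** 2 for x in xs))
    return slope, y_mean - slope * x_mean


def forecast(values, periods_ahead):
    """Predictive analytics: extend the fitted trend into future periods."""
    slope, intercept = fit_trend(values)
    start = len(values)
    return [intercept + slope * x for x in range(start, start + periods_ahead)]


summary = describe(monthly_sales)
next_two = forecast(monthly_sales, 2)
```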

Analytics automation also provides opportunities to look beyond the functional data of your company to allow for:

Geospatial analysis—building maps, graphs, and statistics on geo-referenced data to make complex spatial relationships understandable

Text analytics—exploring written data to discern themes and sentiment

Data science—applying statistics and algorithms to extract insights from noisy, structured, and unstructured data

Machine learning and AI—using machine algorithms to comb through data to identify patterns that might otherwise be missed

Optimization and simulation—using your own data to improve the business and business processes through examination of “what if” scenarios
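As one small example of the geospatial analysis listed above, the haversine formula gives the great-circle distance between two latitude/longitude points, which is enough to assign each customer to the nearest store. The store names and coordinates are hypothetical, and the formula treats the Earth as a sphere with a mean radius of 6,371 km.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; spherical-Earth approximation


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def nearest_store(customer, stores):
    """Return the name of the store closest to a (lat, lon) customer location."""
    return min(stores, key=lambda name: haversine_km(*customer, *stores[name]))


stores = {"downtown": (40.7128, -74.0060), "airport": (40.6413, -73.7781)}  # hypothetical
closest = nearest_store((40.73, -73.99), stores)
```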

The step-by-step process creation allows users to view the analysis output at each step, enabling them to easily visualize how the data is being transformed and analyzed by the process. The workflow can update, clean, and combine the data, correcting errors and adjusting data types. Take, for example, a CSV data source. A CSV file stores every value as text, so by default the values are read in as strings. You can quickly review the data in an analytics automation building block and change the data types accordingly, making dates into dates, numbers into numbers, and so on. As with many tools, the workflow leaves the underlying data sources unmodified and changes only the resulting output of the analytics pipeline. This means that a process can be automated to run again and again against several data sources, both on premises and in the cloud. If something in the source system changes, you can quickly adjust the workflow to account for the change. This is a significant time-and-effort improvement versus asking IT to update their ETL flow and waiting six to nine months for it to happen!
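The CSV typing step described above can be sketched with Python's standard `csv` module, which, like most CSV readers, returns every field as a string. The column names and the schema dictionary are hypothetical; undeclared columns are left as strings, and the raw text is never modified.

```python
import csv
import io
from datetime import date

RAW = "order_date,quantity,unit_price\n2024-03-01,3,19.99\n2024-03-02,5,4.50\n"

# Declared schema: column name -> converter applied to the raw string.
SCHEMA = {
    "order_date": date.fromisoformat,
    "quantity": int,
    "unit_price": float,
}


def read_typed(text, schema):
    """Parse CSV text into new rows, converting each declared column.

    Columns missing from the schema stay as strings; the source text is untouched.
    """
    rows = []
    for raw_row in csv.DictReader(io.StringIO(text)):
        rows.append({col: schema.get(col, str)(value) for col, value in raw_row.items()})
    return rows


raw_rows = list(csv.DictReader(io.StringIO(RAW)))  # everything is a string here
typed_rows = read_typed(RAW, SCHEMA)                # dates, ints, and floats here
```

Because the conversion produces new rows instead of rewriting the file, the same step can be rerun against a fresh export each day.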

As mentioned, the visual interface eliminates the need to understand code, democratizing access and analysis. This benefits your company in two other ways. First, it decreases the need for employees who specialize in specific programming techniques. While developers will always be needed to maintain data structures within an organization, the ability to analyze data without knowing how to write code gives the power of analytics to everyone throughout the organization. Second, the ease of use of a graphical interface saves time. Developers no longer have to spend hours or days refining context, joins, and filters, nor do they need to know how to create predictive analytic and ML (machine learning) algorithms. Instead, they can focus on using the analytics automation building blocks to piece together a workflow that solves specific business problems, such as predictive maintenance. Analytics automation also borrows techniques found in other common data and analytic tools, so its resources feel familiar and easy to use. For example, users can use common spreadsheet methods for manipulating data.

A central feature of analytics automation is that it gives people without programming knowledge the ability to analyze data. Macromill Inc. took advantage of this usability in its work.5 Prior to implementing an analytics automation platform, analysts were required to load survey data into statistical software. The resulting output was then passed to a reporting tool, where report writers generated the visualizations. This setup relied heavily on the technical expertise of analysts to correctly interpret and process the data through the statistical tools. The complexity of the survey data and the slowness of the statistical software often created delays in the analysis, resulting in slow turnaround times for Macromill’s customers.

The company’s development group manager decided to try implementing an analytics automation process. He hoped that translating the existing data process into an analytics automation workflow would improve performance and reduce employee training needs. The implementation was very successful. Macromill no longer needed separate tools to clean up and analyze the data. Instead, workflows were developed that performed the steps automatically. If a customer changed requirements, the workflow could easily be modified and run again. More importantly, the application the company chose was easy to use and reduced the need for employee training. Now there were more users available to analyze survey data and generate results. The faster turnaround time for analysis led to more time for data visualization, faster results for the customer, and repeatable processes for analysis.

Analyzing Data No Matter Where It’s Stored

Ease of use is only one benefit of analytics automation. The ability to connect to disparate data sources across multiple IT environments in a single interface is also key. Normally, an organization would need separate applications to combine and blend data into new sets. Analytics automation building blocks do this through the visual drag-and-drop interface. They can connect to local files such as Excel, CSV, or Access. They can also connect to hosted data sets such as MySQL, Oracle, PostgreSQL, or Salesforce, as well as cloud sources within Amazon, Microsoft, and Snowflake. An analytics automation application can access and analyze data on premises, in the cloud, and in hybrid environments. The ability to combine different data types opens up possibilities to examine how parts of your company interact and impact each other. This is best illustrated in an example.
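Blending across sources means joining records that live in different systems on a shared key. In this sketch a CSV string stands in for a point-of-sale export and an in-memory SQLite table stands in for a hosted weather database; both data sets are hypothetical. The two are inner-joined on the day, and a Pearson correlation asks whether warmer days coincide with higher sales. A correlation on four days is only a starting point for investigation, not evidence of cause.

```python
import csv
import io
import math
import sqlite3

# Source 1: a point-of-sale export as a CSV file (hypothetical data).
POS_CSV = "day,sales\n2024-06-01,820\n2024-06-02,940\n2024-06-03,610\n2024-06-04,1010\n"

# Source 2: a weather table in a database (SQLite stands in for a hosted DB).
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE weather (day TEXT PRIMARY KEY, high_c REAL)")
db.executemany(
    "INSERT INTO weather VALUES (?, ?)",
    [("2024-06-01", 24.0), ("2024-06-02", 28.0), ("2024-06-03", 18.0),
     ("2024-06-04", 31.0), ("2024-06-05", 22.0)],
)


def blend(pos_text, conn):
    """Inner-join sales and weather on day; returns (sales, high_c) pairs."""
    weather = dict(conn.execute("SELECT day, high_c FROM weather"))
    return [(float(r["sales"]), weather[r["day"]])
            for r in csv.DictReader(io.StringIO(pos_text)) if r["day"] in weather]


def pearson(pairs):
    """Pearson correlation coefficient of a list of (x, y) pairs."""
    n = len(pairs)
    mx = sum(x for x, _ in pairs) / n
    my = sum(y for _, y in pairs) / n
    cov = sum((x - mx) * (y - my) for x, y in pairs)
    sx = math.sqrt(sum((x - mx) ** 2 for x, _ in pairs))
    sy = math.sqrt(sum((y - my) ** 2 for _, y in pairs))
    return cov / (sx * sy)


joined = blend(POS_CSV, db)  # 2024-06-05 has weather but no sales, so it drops out
r = pearson(joined)
```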

A quick-service café franchise well known for its smoothies wanted to dig deeper than just its sales data.6 It wanted to know what affected sales, asking questions such as “Is weather related to sales?”, “Do certain flavors have better success in one region over another?”, and “Which marketing plan is most successful during down times?” These types of questions relied on point-of-sale data, of course, but they also needed weather, location, traffic, calendar dates, and promotion data. The company even brought in information about its menu layouts! It suspected that these external factors played a role in the success of the business, and it wanted a way to analyze and compare this external data to its sales to look for patterns.

Imagine the amount of work and effort that would be required to tie all these data sets together using another platform. Analytics automation, combined with Amazon Web Services and Tableau, made it simple. Analytics automation allowed the company to connect to multiple data sources, identify relationships, and build workflows to answer its critical business questions. The company brought in over three years of historical data and used analytics automation to transform it. The workflows were then automated to run on new data coming in each day. The company was able to establish rules for the six hundred new data sets it receives at the end of each day, tailoring the data flow to run only once 95% of the results are available.
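The "run only once 95% of the results are available" rule is a completeness gate. Here is a minimal sketch, assuming each daily data set has a unique identifier; the feed names are hypothetical, and the comparison uses integer arithmetic so that exactly 570 of 600 passes without floating-point edge cases.

```python
EXPECTED_DAILY_FEEDS = 600  # daily data sets, from the example above
READY_PERCENT = 95          # run once 95% of them have arrived


def ready_to_run(arrived_feed_ids, expected=EXPECTED_DAILY_FEEDS, percent=READY_PERCENT):
    """True when enough distinct feeds have landed for the scheduled workflow to run."""
    arrived = len(set(arrived_feed_ids))  # duplicates of the same feed count once
    return arrived * 100 >= expected * percent


today = [f"feed-{i}" for i in range(570)]  # hypothetical feed IDs
```

A scheduler would call `ready_to_run` periodically after the end of the business day and trigger the workflow the first time it returns True.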

In the end, the company used the results of 15 different workflows together to generate a super workflow. This super workflow generates and updates the data sources needed to populate local self-service reporting tools and company-wide dashboards. It is a central source of truth for all insights related to the company and a complete solution with minimal IT infrastructure. The super workflow also gave the company insights on market trends, identified business inefficiencies, and provided a start toward establishing a data-driven culture.

Another example of combining multiple data sources was demonstrated by Coca-Cola, which used analytics automation building blocks to combine data sets across the organization, making them more accessible.7 In an example from Coca-Cola’s senior business analytics manager, data from multiple, separate bottling data sets was combined and processed in just a few hours. Prior to implementing an analytics automation platform, the analysis was nearly impossible, as the size of the data sets prohibited the use of traditional tools. It is also worth noting that this analysis was done without writing any code and without any experience in the analytics automation platform.

It’s important to know that data doesn’t have to be sourced from files or databases with analytics automation. The building blocks allow you to manually enter data into tables for use in the cleanup process. This may sound like it opens a door for potential errors or data manipulation; however, there are several beneficial uses. First, it can be used to clean up data that has typos or errors (although fixing the issue at the source is a better idea!). Second, it allows you to create bridge tables, small tables of data that can be used to connect or clarify other data sets brought into the workflow. Third, it provides a method to validate a workflow process by allowing you to create a smaller data set to test with as opposed to running a data set of millions of rows.
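
The bridge-table and test-data uses can be sketched in Python as follows. The region codes, order rows, and `apply_bridge` function are all hypothetical; the point is that a handful of hand-entered rows can both connect two data sets and exercise the workflow's edge cases (here, an unmapped code) before it runs on real volumes.

```python
# Hypothetical bridge table, typed in by hand: maps the region codes used by
# one system to the region names used by another.
REGION_BRIDGE = {"N01": "North", "S02": "South"}

# Hypothetical hand-entered test set: three rows instead of millions, including
# one code deliberately missing from the bridge table.
TEST_ORDERS = [
    {"order_id": 1, "region_code": "N01"},
    {"order_id": 2, "region_code": "S02"},
    {"order_id": 3, "region_code": "X99"},
]


def apply_bridge(orders, bridge):
    """Attach a region name to each order; unmapped codes get None."""
    return [{**order, "region": bridge.get(order["region_code"])} for order in orders]


result = apply_bridge(TEST_ORDERS, REGION_BRIDGE)
unmapped = [row["order_id"] for row in result if row["region"] is None]
print(unmapped)  # [3]
```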

The ability to connect to and blend together a plethora of data sources gives analytics automation flexibility that is not available in a conventional data flow. The diversity of data types and formats it can connect to gives your company the ability to reach beyond your standard data sets and explore how other factors influence your success. It brings these data sets together in an easy-to-use interface with a multitude of options to clean, prepare, and dissect them. This is not the limit of analytics automation, however. Once the data is collected, it must be analyzed to derive the insights your company needs to be successful.

Accelerating Insights with Automation

The ability to quickly transform data into insights is a key element within analytics automation and a huge benefit to your company. As with data preparation, analytics moves through analytics automation as part of a visual workflow. The analytic steps can home in on key performance indicators within the organization, allowing your business to identify patterns, trends, and outliers quickly. It can perform analytic processes independently or in cooperation with other analytic tools, making it powerful and flexible at the same time. Most importantly, the insight-generating workflows can be saved and repeated as often as needed to provide data-driven monitoring and insights on demand. It also allows workflows to be shared, reused, and governed by the organization to ensure that best practices are adhered to.

Analytics automation also improves the speed of analysis in other ways. The ability to sample data through multiple techniques enables your organization to quickly examine trends and patterns without processing an entire data set. The building-block structure of the software allows you to stop at any point in a workflow, giving you the ability to assess the functionality of the workflow as you go. As mentioned earlier, one workflow can become the basis of other workflows, ensuring that the analysis is done on accurate, governed, reliable data.
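
Which sampling options a given platform offers varies, but three common ones are taking the first N rows, taking every Nth row, and taking a random sample. A minimal Python sketch of those three, assuming the data fits in a list (the function names are illustrative, not any product's tool names):

```python
import random


def first_n(rows, n):
    """Take the first n rows (fast, but biased toward however the data is ordered)."""
    return rows[:n]


def one_in_n(rows, n):
    """Take every nth row, starting with the first."""
    return rows[::n]


def random_n(rows, n, seed=None):
    """Take n rows at random without replacement; a fixed seed makes it repeatable."""
    return random.Random(seed).sample(rows, n)


data = list(range(1, 1001))  # stand-in for a large data set
print(first_n(data, 3))      # [1, 2, 3]
print(one_in_n(data, 250))   # [1, 251, 501, 751]
print(len(random_n(data, 10, seed=42)))  # 10
```

Taking the first rows is fastest but inherits whatever order the data arrived in; a seeded random sample is more representative and still repeatable from run to run.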

At the same time, analytics automation can help future-proof your workflows. As your business grows or your software changes, an analytic workflow can adapt to include additional columns of information. This means less work rebuilding and reconfiguring your data processes and more time investigating each new field’s impact on your data, which can significantly reduce time spent on development and waiting on coders. This option can usually be enabled or disabled, depending on the analysis and the data set.
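
One way to picture this behavior is a select step that passes through columns it has never seen. In the Python sketch below, the `include_unknown` switch plays the role of the option that can be enabled or disabled; the function, its parameters, and the sample row are hypothetical.

```python
def select_columns(rows, keep, drop=(), include_unknown=True):
    """Select columns the way a configurable select step might.

    Columns listed in `keep` are always output and columns in `drop` never
    are. Any other column, such as a field added by a software upgrade, is
    passed through when `include_unknown` is True and dropped otherwise.
    """
    known = set(keep) | set(drop)
    output = []
    for row in rows:
        new_row = {col: row.get(col) for col in keep}
        if include_unknown:
            new_row.update({col: val for col, val in row.items() if col not in known})
        output.append(new_row)
    return output


# Hypothetical row after an upgrade added a "loyalty_tier" field.
rows = [{"id": 1, "name": "Ana", "internal_notes": "n/a", "loyalty_tier": "gold"}]
print(select_columns(rows, keep=["id", "name"], drop=["internal_notes"]))
# [{'id': 1, 'name': 'Ana', 'loyalty_tier': 'gold'}]
print(select_columns(rows, keep=["id", "name"], drop=["internal_notes"], include_unknown=False))
# [{'id': 1, 'name': 'Ana'}]
```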

All of these processing steps are visible in how the Salvation Army used analytics automation to consolidate, deduplicate, and organize its HR information for Australia.8 Its test lead was tasked with collecting many different data sets, in formats ranging from Excel files to hard copy, and combining them into a single source of truth to load into a new software application. Using the features of the analytics automation platform, he was able to join, deduplicate, and clean up multiple data sets. He used repeatable automated data migrations through the analytics automation platform to combine over ten thousand worker records, saving thousands of hours of manual labor.
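
A join-and-deduplicate step like the one described might look like the Python sketch below. The matching rule (first name, last name, and date of birth compared after trimming and lowercasing) is a hypothetical choice for illustration; the text does not say how the Salvation Army matched records, and the sample rows are invented.

```python
def match_key(record):
    """Hypothetical matching rule: trimmed, lowercased name plus date of birth."""
    return (
        record["first"].strip().lower(),
        record["last"].strip().lower(),
        record["dob"],
    )


def merge_and_dedupe(*sources):
    """Combine several sources, keeping the first record seen for each key.

    Earlier sources win, so list the most trusted source first.
    """
    merged = {}
    for source in sources:
        for record in source:
            merged.setdefault(match_key(record), record)
    return list(merged.values())


payroll = [{"first": "Ann", "last": "Lee", "dob": "1990-01-01"}]
roster = [
    {"first": " ann ", "last": "LEE", "dob": "1990-01-01"},  # same person, messier entry
    {"first": "Bo", "last": "Chan", "dob": "1985-05-05"},
]
workers = merge_and_dedupe(payroll, roster)
print(len(workers))  # 2
```

The messy roster entry for Ann Lee matches the payroll record once whitespace and case are normalized, so two workers remain rather than three.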

It is very likely that the Salvation Army analytics team relied on sorted data to validate its workflows. Even though analytics automation output does not necessarily need to be sorted, sorting arranges the preview results in order, which helps with examining the data, validating results, and testing workflows. Some analytics automation platforms can also ignore special characters when sorting, allowing you to sort by the values in the fields while ignoring spaces, quotation marks, and punctuation.
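
A sort that ignores spaces, quotation marks, and punctuation comes down to comparing on a cleaned-up sort key. In this Python sketch, the `sort_key` function and sample names are hypothetical, and the case-insensitive comparison is an added assumption that the text does not mention.

```python
import string

# Characters to skip when comparing: punctuation (including quotation marks) and whitespace.
IGNORED = set(string.punctuation + string.whitespace)


def sort_key(value):
    """Compare values on their letters and digits only, case-insensitively."""
    return "".join(ch for ch in value if ch not in IGNORED).lower()


names = ['"Smith"', "O'Brien", " adams", "Mc Donald"]
print(sorted(names, key=sort_key))
# [' adams', 'Mc Donald', "O'Brien", '"Smith"']
```

The original values are output unchanged; only the comparison uses the cleaned key.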

After the data has been transformed by the workflow, it needs a place to go. Analytics automation includes easy ways to export data. Typical targets include delimited text files, spreadsheets, databases, bots, RPA (robotic process automation) platforms, and many other systems.

Workflow output is not limited to flat files, either. Analytics automation can be integrated into existing tools and reporting capabilities. By delivering workflow output to other systems, organizations can use automation to power monitoring systems, regularly updated reports, and dynamic, data-driven processes. In simpler terms, workflows can be designed to alert you when thresholds are met or crossed, provide a regular data source for business processes, generate updated reports for decision makers, and perform many other automated tasks. In the end, an analytics automation platform enables you to separate the signal from the noise so your business can focus on the relevant insights in a timely manner.
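
Threshold alerting, at its simplest, compares a workflow's output against limits and emits a message for each breach. The Python sketch below is one hedged way to express that; the metric names and limits are hypothetical, only upward crossings are checked, and delivering the alert (by email, chat, or an RPA bot) is left out.

```python
def check_thresholds(metrics, thresholds):
    """Return an alert message for each metric at or above its threshold.

    Metrics missing from `metrics` are skipped rather than treated as breaches.
    """
    alerts = []
    for name, limit in thresholds.items():
        value = metrics.get(name)
        if value is not None and value >= limit:
            alerts.append(f"{name} = {value} (threshold {limit})")
    return alerts


# Hypothetical daily metrics produced by a workflow.
today = {"return_rate_pct": 7.5, "stockouts": 2}
limits = {"return_rate_pct": 5.0, "stockouts": 3, "late_shipments": 10}
for alert in check_thresholds(today, limits):
    print("ALERT:", alert)  # ALERT: return_rate_pct = 7.5 (threshold 5.0)
```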

Precision Analytics Group relied on this ability to ingest updated data as part of its processes to publish real-time data on COVID-19.9 It was tasked with providing insights on the pandemic and its impact on hunger, health, education, and housing. These insights would prove to be invaluable to aid organizations struggling to identify areas that needed support. While the US Census Bureau provided large amounts of data to analyze, it was usually hosted in separate files, contained disparate information and areas with missing and caveated data, and was changed on a regular basis. Precision Analytics needed a tool to automate the data retrieval without having to manually manipulate it with each request.

The company turned toward analytics automation for help, using the tool’s ability to combine data, clean data, and break it down into usable chunks. Once all the data was collected and organized, the resulting output was fed back into another workflow. This workflow combined the data with that from previous weeks and enabled the organization to present results based on numerous demographics. More importantly, logic was added to the workflows that allowed the team to break down the data provided by the Census Bureau based on the filenames on the hosted site.
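
Logic that keys off filenames typically relies on a pattern match. The Python sketch below assumes a made-up naming scheme rather than the Census Bureau's actual one, which the text does not describe; it groups recognized files by topic, orders them by week, and skips anything that does not fit.

```python
import re

# Hypothetical naming scheme: <topic><table>_week<number>.xlsx,
# for example "housing1b_week12.xlsx". Not the Census Bureau's real pattern.
FILENAME = re.compile(
    r"^(?P<topic>[a-z]+)(?P<table>\d+[a-z]?)_week(?P<week>\d+)\.xlsx$",
    re.IGNORECASE,
)


def group_files(filenames):
    """Group recognized files by topic, ordered by week; skip anything else."""
    groups = {}
    for name in filenames:
        match = FILENAME.match(name)
        if match is None:
            continue  # a real workflow might route these to a review output
        topic = match.group("topic").lower()
        groups.setdefault(topic, []).append((int(match.group("week")), name))
    return {topic: sorted(files) for topic, files in groups.items()}


files = ["housing1b_week13.xlsx", "health2_week12.xlsx", "housing1b_week12.xlsx", "readme.txt"]
print(group_files(files))
```

Because the grouping is driven by the pattern rather than a fixed list of names, a new week's files are picked up automatically as long as they follow the same scheme.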

As illustrated in Figure 7, this is an extreme example of an analytics automation workflow, but it highlights the capabilities of the software. This company took disparate data sources with changing names and used an analytics automation workflow to account for the changes. This example also illustrates how something that would have taken hours or days to do manually can run repeatedly in an extremely short period of time using analytics automation. Repeatability and ease of use combined with complex data handling can take a massively complex task and break it down into a series of simple, easy-to-understand steps. Equally important, the software lets you review the data after each step to quickly visualize the impact of each change.

Figure 7. Example workflow from Precision Analytics Group, detailing its methods for extracting data from diverse, regularly updated Census Bureau data sets

Democratizing Insight Generation

Making something available to everyone is often referred to as democratization, and democratizing data is incredibly valuable to a business. Having data in the hands of your employees empowers them to analyze their specific tasks and performance. It brings the data from a distant report to something much closer to those doing the actual work. It provides flexibility, repeatability, knowledge sharing, and data literacy to your business. Let’s take a look at how analytics automation differs from a typical workflow and examine how it improves the data-to-insight process for a business.

Limited Data Access Equals Limited Results

To understand why democratization is important, we need to first understand what issues arise from our employees being unable to access data and insights about their jobs. It is estimated that 44% of data workers’ time is wasted on searching, preparing, and analyzing data.10 Furthermore, five hundred thousand days of managers’ time every year are wasted on ineffective decision making at a typical Fortune 500 company.11 We need to examine a typical analytic workflow and see where the data comes from, who has access to it, and what constraints might exist with deploying that data broadly. Let’s return to our earlier example of an electronics company.

Let’s assume that the organization uses traditional ETL processing methodology. Data is ingested from the areas most vital to the company, such as sales, shipping, and maybe HR. There are several developers devoted to writing code that accesses this transactional data, modifies it, and stores it in a data warehouse. The company is adept with data best practices and provides data dictionaries, standards, and definitions to apply. It also employs a core team of data analysts to ingest the data and generate reports for the company. So what’s the problem with this?

To start, let’s look at access. While the data analysts and the developers may be excellent at their jobs, their entire focus is on the flow of data. The developers are heads-down buried in code and code changes and likely have limited knowledge about how the company operates. Likewise, the analysts probably have some basic knowledge of business methodologies, but their expertise lies in using report building tools to generate visualizations for the company to digest. They rely on the subject-matter expertise within the business to determine which data tables and fields are important and what needs to be reported on.

Access to the data is likely limited to the report writers and a few key stakeholders in each specific area. The report writers focus on generating reports for the leadership, showing the performance of the company, while the area-specific experts use the small subsection of data available to them to make decisions on how their portion of the company performs. The problem with this limited access is twofold. First, employees who are making the company run—from sales to stock to inventory management—likely do not have access to the data the company generates. Second, many of those who do have access to the data lack the subject-matter expertise or the analytic tool expertise to properly analyze it.

In addition to access, speed is an issue in a traditional data workflow. If leadership has a question about the business, or if the environment around the business is changing, they need to see the impacts as quickly as possible; however, the traditional method is full of delay. First, the question being asked may or may not be answerable with the data available from the transactional systems. If necessary data points are missing, the ETL may need updates and changes, a process that could take several days to complete. Next, the report writers need time to ingest the data, analyze it, and develop visualizations that answer the questions being posed. They also need time to communicate with subject-matter experts to validate and confirm the data. In short, many days could pass between when a question is asked and when it can be answered! Is the answer even valuable anymore once that much time has passed?

The typical data flow process only provides data to small sections of employees:

Those with deep knowledge of the business but limited knowledge of analytic tools

Those with strong skills in analytics and analytic tool usage but only limited knowledge of how the business works

Additionally, the typical data flow is filled with delays and inefficiencies, providing unhelpful and even detrimental insights to leadership at all levels of the organization. How do we improve this access, and what benefits might appear by distributing data beyond just those with the expertise? How do we bridge the gap between subject-matter expertise and data analytic capabilities? The answer to these two questions is what makes analytics automation so powerful.

Upskill Employees to Accelerate Insights

Analytics automation improves access to data, but why would you want to do this? What about the security and privacy of your company’s data? It’s not as scary as it sounds. Empowering your employees to utilize data is a beneficial step in making your business analytically driven. It takes the guesswork out of running the business at the lowest level and provides the subject-matter experts and frontline workers the tools they need to make decisions where and when they need to be made. This simple shift could save your company time and money by eliminating issues before they have a chance to take hold.

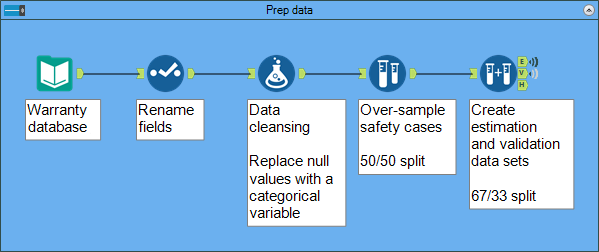

Perhaps the best way to understand how data democratization positively influences a business is to look at an example of where it has been successful. When you think about analytics automation and data in manufacturing, you probably think about reducing supplier costs, increasing efficiency, and reducing expenses. Polaris used it in an entirely different way.12 Instead of focusing on increasing the performance and innovation of its products, Polaris wanted to understand what it could do to improve the safety of its products for its customers.

Polaris, which manufactures snowmobiles and off-road vehicles, wanted to know what types of safety problems its customers were experiencing and how it could improve product quality to avoid these issues. Typically, this sort of troubleshooting falls on a technical services team, but Polaris wanted to use data, specifically warranty claims. The idea was that warranty claims would contain large amounts of quantitative and qualitative data, which could be examined to identify potential trends and problems.

Polaris turned to its data and post-sales surveillance teams for help. Together, the teams utilized analytics automation to analyze the data. They pored through warranty claim data, tying it to predictive models and dealer-updated information. Using analytics automation to process the data and analytic modules to run predictive models, the teams were able to create an algorithm that would flag warranty claims for potential defect or safety issues that needed to be addressed. They then automated the process and tied it to the company’s email system. Now, each warranty claim that comes in is processed through the workflow and analyzed for patterns. If one is found, an email goes to the safety team within the organization for further examination. The end result was an increase in safety-related claims being quickly identified and addressed by the manufacturer.
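
Polaris built predictive models; the Python sketch below swaps in a much simpler keyword rule so the flag-and-notify pattern is easy to see. The term list, the claim fields, and the `safety-team@example.com` address are hypothetical, and the message is only composed with the standard library's `email.message.EmailMessage`, not sent.

```python
from email.message import EmailMessage

# Hypothetical terms; Polaris used predictive models rather than a keyword list.
SAFETY_TERMS = ("brake", "fire", "burn", "steering", "injury")


def is_flagged(claim):
    """Flag a claim whose description mentions any safety-related term."""
    text = claim["description"].lower()
    return any(term in text for term in SAFETY_TERMS)


def build_alert(claim, to_addr="safety-team@example.com"):
    """Compose (but do not send) a notification email for one flagged claim."""
    msg = EmailMessage()
    msg["To"] = to_addr
    msg["Subject"] = f"Review warranty claim {claim['claim_id']}"
    msg.set_content(claim["description"])
    return msg


claims = [
    {"claim_id": "C-101", "description": "Brake lever loose after first ride"},
    {"claim_id": "C-102", "description": "Seat cover torn"},
]
alerts = [build_alert(c) for c in claims if is_flagged(c)]
print([a["Subject"] for a in alerts])  # ['Review warranty claim C-101']
```

Actually sending the message would typically go through the standard library's `smtplib` (for example, `SMTP.send_message`) or the organization's own mail system.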

Take a moment to think about this Polaris example. The company succeeded by tying data together and delivering it to the employees who had the ability to interpret, analyze, and act on the results. In a traditional system, this would likely have been impossible. Its post-sales surveillance team would have needed to manually comb through large quantities of email for patterns and trends. That alone could have taken days or weeks to complete and, all the while, additional claims would continue to pour in. By implementing analytics automation technology, the team can now dynamically ingest these claims and quickly provide preemptive solutions. The company not only improved the safety and reliability of its product, but by giving data to the right employees, it saved hours and hours of manual time and effort.

Let’s look at another example of providing data manipulation skills to employees to solve problems. The Hong Kong Polytechnic University Institutional Research and Planning Office needed a way to analyze all portions of the university without a technical background in coding.13 The university is filled with data covering HR, students, finances, and more. How could it examine all these layers of data without the technical know-how? More importantly, once a method was found, how could the planning office ensure that the processes developed using this method would be transferable to new employees and easily modifiable if university processes changed?

Two of the office’s research analysts took the task head-on. Their goal was to provide data and insights to university leadership around all aspects of the university to aid in decision making. Until recently, they relied on error-prone manual processes to gather, examine, and analyze the data. The data was mostly housed in Excel and Access files that numbered in the hundreds. The method was slow, tedious, and time-consuming. They turned to analytics automation for assistance.

Analytics automation provided several key advantages to the team. First, it was easy to use. They didn’t need a computer science or data science background to extract the necessary data and collect it for analysis. Second, it provided a streamlined method to organize and aggregate the extensive list of files containing student, HR, and financial data. Third, it was repeatable and traceable. The workflows enabled the research analysts to not only break down their analytic process into digestible modules but also provide transparency on the processes they performed. This made it easy to identify and correct issues when they occurred. Analytics automation also provided the ability to create and run predictive analytics on their data, giving them the capability to provide projections and insights on incoming applications, admissions, and matriculations.

The end results were workflows designed to handle the process of providing analytically fueled insights on a regular basis to university leadership covering all aspects of university operations. The jobs could be run easily and quickly and were simple enough to understand and hand off as new employees joined the team. The time saved by switching to automated processes can now be put to use doing additional analysis, and, since the process is repeatable, the team is much more confident of its results.

We’ve seen a couple of examples of how employees at the transactional level can utilize data to improve their companies, but let’s take a generalized look at the possible benefits you might see in your own company. What benefits might a data-driven workforce provide? How can you upskill your employees to utilize data? Let’s return to our computer parts sales organization and see how it might benefit from this aspect of analytics automation.

As mentioned before, the analytics automation platform is a low-code/no-code user interface, where users can easily drag and drop tools from the palette to the canvas. This means that the manipulation of data within the organization is done in a workflow-based set of building blocks. How does this help your employees? First, it takes some of the complex code-based methodology and turns it into an icon. A user with some basic knowledge of the data can easily drag a filter building block into a workflow to limit data in a data set. There is no need to understand SQL code or how to write algorithms (e.g., regressions, decision trees, neural networks) that can extract insight from data. Second, the ability of employees to build their own analysis eliminates dependence on your technical analysts’ time and availability. Subject-matter experts can pull data when and where they need it. Third, it provides the ability to save workflows and create reusable templates, which allows employees to share data manipulation techniques and results with colleagues.
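
To show what a filter building block stands in for, the Python sketch below applies the same filter twice: once as a simple function of the kind a block wraps, and once as the SQL query a user would otherwise write. The table, rows, and `filter_block` name are hypothetical; SQLite is used only because it ships with Python.

```python
import sqlite3

# Hypothetical sales rows: (region, amount).
rows = [("North", 120.0), ("South", 80.0), ("North", 40.0)]


def filter_block(rows, condition):
    """Keep the rows for which `condition` is true, like a filter building block."""
    return [row for row in rows if condition(row)]


# The same filter written by hand in SQL, which the building block spares the user.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
conn.executemany("INSERT INTO sales VALUES (?, ?)", rows)
sql_result = conn.execute(
    "SELECT region, amount FROM sales WHERE region = ? ORDER BY rowid", ("North",)
).fetchall()

block_result = filter_block(rows, lambda row: row[0] == "North")
print(block_result == sql_result)  # True
```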

Analytics automation also opens up the ability to tie into systems that might otherwise not have data exposed. Suppose our imaginary company has a transactional system for tracking visits to the store against purchases made. This system may be store-specific and not have data pulled for company-wide use. With analytics automation, the manager or employees at the store level can tie that visit data into the sales data for the store. It helps them pull information on how many people might be coming in to do something other than make a purchase (such as price comparison or making a return).
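
The store-level calculation amounts to subtracting purchases from visits. This Python sketch assumes hypothetical daily counts from a door counter and a point-of-sale system, and it assumes each purchase corresponds to one visit, which real data may not guarantee.

```python
def non_purchase_visits(visits_by_day, purchases_by_day):
    """Estimate visits that did not end in a purchase, per day.

    Assumes at most one purchase per visit and clamps at zero in case the
    two systems disagree (more purchases recorded than visits).
    """
    return {
        day: max(visits - purchases_by_day.get(day, 0), 0)
        for day, visits in visits_by_day.items()
    }


visits = {"Mon": 120, "Tue": 90}     # hypothetical door-counter data
purchases = {"Mon": 75, "Tue": 95}   # hypothetical point-of-sale data
print(non_purchase_visits(visits, purchases))  # {'Mon': 45, 'Tue': 0}
```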

We mentioned a real-life example of this earlier with the quick-service café franchise well known for its smoothies. That company incorporated regional data such as weather and location information to understand how these factors affected sales. This data isn’t integrated into a company-wide database, but rather is specific to the store or stores in a specific region. This enables data insights and decisions to be made at a local level to improve sales and doesn’t involve waiting on data analysts, ETL code-based processes, or central reporting to complete.

This example as well as many others illustrates the benefits of delivering data to a more granular level of your organization. But delivering data is only part of the process. Data needs to be analyzed and interpreted to become an insight. Let’s next look at data reporting to see how traditional methods and analytics automation compare.

AI and Analytics for Business

Data needs to be transformed into information and insights. How does this happen? How do we turn millions or billions of rows and thousands of columns of data into something that is easy to understand and interpret—something where the insights jump out at you?

Traditional Analytics and Reporting

Report writers have the monumental task of sorting through all of our data—and transforming it into something that is easily understandable. They need to be able to identify the data that is relevant to them, and this is not an easy task. As mentioned before, report writers and analysts lack the in-depth knowledge of the business while the business experts lack the resources and skills to do analysis and report generation. This creates a delay in the production of meaningful insights for the business. Additionally, questions related to the data change based on the time, person, or team asking them. In other words, it’s contextual.

Let’s look at your own business as an example. Your business probably needs to report headcounts. Your HR department wants each person counted only once, so it counts distinct employee IDs, regardless of where they work. Another area of your business (such as IT) may focus on physical resource allocation and realize that some employees work in two different jobs. It splits these individuals evenly between departments, depending on where each employee needs computers and equipment. Your finance department may be interested in the impact each employee has on costs, so it counts employees based on their effort in each department.
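
The three headcount rules can be made concrete with a small worked example in Python. The assignment data and function names are hypothetical; `Fraction` keeps the split counts exact.

```python
from collections import defaultdict
from fractions import Fraction

# Hypothetical assignments: (employee_id, department, share of effort).
# E2 works in two departments, three-quarters in Sales.
ASSIGNMENTS = [
    ("E1", "Sales", Fraction(1)),
    ("E2", "Sales", Fraction(3, 4)),
    ("E2", "Support", Fraction(1, 4)),
    ("E3", "Support", Fraction(1)),
]


def hr_headcount(assignments):
    """HR view: each distinct employee ID counts once, wherever they work."""
    return len({emp for emp, _, _ in assignments})


def it_headcount(assignments):
    """IT view: split each employee evenly across the departments they work in."""
    depts_by_emp = defaultdict(set)
    for emp, dept, _ in assignments:
        depts_by_emp[emp].add(dept)
    counts = defaultdict(Fraction)
    for depts in depts_by_emp.values():
        for dept in depts:
            counts[dept] += Fraction(1, len(depts))
    return dict(counts)


def finance_headcount(assignments):
    """Finance view: count each employee by share of effort in each department."""
    counts = defaultdict(Fraction)
    for _, dept, effort in assignments:
        counts[dept] += effort
    return dict(counts)


print(hr_headcount(ASSIGNMENTS))  # 3
print(it_headcount(ASSIGNMENTS))
print(finance_headcount(ASSIGNMENTS))
```

All three methods account for the same three people, yet Sales shows 1.75 people to finance and 1.5 to IT, while HR reports a single total of 3. None is wrong; each answers a different question.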

All three departments may be correct in how they count employees for their task, but the end result is three different headcount reports. The point is, one report is usually not sufficient, as it is not contextualized to the user who needs the information. How do you take the collected data and make it available and beneficial to the needs of each of these departments? How long would it take each department to develop the reports it needs to accomplish its tasks? Many companies face similar challenges, which can be further complicated if the organization has multiple locations, multiple products or services, or multiple levels of reporting requirements.

This leads us to the next question: how do I find insights if I don’t know where to look? This has long been a struggle for analysts, especially as the volume and variety of data has grown. To fill this void, many organizations turn to data scientists. These data experts apply extensive statistical and computer science knowledge to identify patterns, trends, and outliers in data, then combine these elements with probability to determine what might impact the company in the future. They employ predictive analytic and ML techniques to perform the analysis and generate insights and predictions; however, this process is complicated and time-consuming.

Data scientists use statistical coding languages, such as R, Python, and Julia, to tackle the complexity and depth of their work and enable them to analyze data more quickly and thoroughly; however, the analysis still requires them to understand the data and how the business works to identify the appropriate insights. Stop for a moment and think of your own organization. Imagine how much data you generate in everyday business. Now think of how long it would take the average analyst, with even basic knowledge of your business, to dissect and extract insights from all that data. It’s nearly impossible.

Even with specialized software tools, human input is needed to identify the patterns and insights most relevant to the business. This is especially important when identifying the key performance indicators and patterns relevant to the business. The human aspect involves weeding out information that may be irrelevant or insignificant. While an insight may be valid and identified correctly in the data, the user needs to understand that some outliers and patterns are important, and some are not.

As with many of the tools mentioned above, these types of software have drawbacks. For one, few of them incorporate all the processes involved in analytics, so analytics becomes not just another tool but a collection of software titles that requires funding, technical expertise and support, deployment, and maintenance. It also requires some level of human interaction from people who understand business-related processes and standards. These software programs are also potentially another place where data is stored and must be maintained, which requires attention to data security, accessibility, and integrity. If the data is not maintained in this additional system, the value of the tool begins to wane.

Improving Insights with End-to-End Capability

We’ve established that analytics automation improves workflows by democratizing insights through ease of use and upskilling. The ultimate goal is to get the insights needed from any process and make decisions based on the results. The best person to analyze a process is someone who is directly involved in how that process functions. That is, you want your subject-matter experts analyzing the data, as they are the most familiar with the process. The question is: how do you get that data, and the ability to analyze it, into the hands of a user who is trained in your business process rather than in data analytics?

Simplifying the analytic and insight generation process requires building blocks that are able to recreate the functionality of specialized software without the need for specialized code. Analytics automation needs to be able to take on the process tasks that report writers and analysts perform, but it needs to be simple enough for anyone to use. These are the functions that an analytics automation application needs to be able to perform:

- Artificial intelligence: Utilize iterative processes and intelligent algorithms to dig through large amounts of data and identify patterns or outliers that might otherwise go unnoticed.

- Data access: Access dozens of different types of data sources, allowing users to pull and connect information from multiple places.

- Data preparation: Provide options for managing missing values and formatting issues while also handling special characters, white space, and capitalization irregularities.

- Data exploration: Include options to quickly view output to better understand the data content, quality, and results.

- Data enrichment: Combine data from your company with common public data sets, such as census data and geospatial data, to provide insights beyond your company into things such as demographics, buying habits, and transportation efficiency.

- Data cataloging: Provide a centralized, searchable repository of data sets and analytic workflows so you can quickly find data relevant to your business, track your data assets, and establish the governance and standards that unify your organization.

- Reporting: Provide multiple methods for returning results, both visually and in crosstabs, and the ability to generate dynamic reports at any point within a workflow.

- Data science and machine learning: Allow for simple model building and deep analysis of data so that the predictive models generated by all of your data workers (not just data scientists) are easy to interpret and reliable.

- Other tools: Other abilities, from machine learning to text mining, in an easy-to-use format that allows anyone in your organization to perform their own data analysis.
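
The data preparation capabilities listed above (missing values, white space, special characters, and capitalization) can be sketched as a pair of Python functions. These are illustrative assumptions, not any platform's cleansing tool: missing values become an empty string, whitespace runs collapse to one space, control characters are dropped, and selected fields are title-cased. The record is hypothetical.

```python
import re


def clean_value(value, default=""):
    """Tidy one field: fill missing values, collapse whitespace, drop control characters."""
    if value is None:
        return default
    text = re.sub(r"\s+", " ", str(value))                 # tabs, newlines, runs of spaces -> one space
    text = "".join(ch for ch in text if ch.isprintable())  # remove remaining control characters
    text = text.strip()
    return text if text else default


def clean_record(record, title_case_fields=()):
    """Clean every field, then fix capitalization in the named fields."""
    cleaned = {key: clean_value(val) for key, val in record.items()}
    for field in title_case_fields:
        if field in cleaned:
            cleaned[field] = cleaned[field].title()
    return cleaned


raw = {"name": "  jane\tDOE ", "city": None, "note": "ok\x00"}
print(clean_record(raw, title_case_fields=("name",)))
# {'name': 'Jane Doe', 'city': '', 'note': 'ok'}
```

`str.title` is a blunt instrument (it renders "mcdonald" as "Mcdonald"), so real capitalization rules usually need exceptions.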

Analytics automation simplifies the process for insight generation. By providing an intuitive, easy-to-use, graphical user interface, it provides a method for business users to transform data into insights. It also provides methods for analysts to develop workflows and building blocks that can be used by others to automate business processes. Your employees don’t need to understand the methodology for pulling, cleaning, and joining data in the tool if your analysts tackle that part of the process for them. Instead, your employees can focus on using the tool to ingest data or build insights relevant to their specific needs. To clarify this point, let’s look at an example.

Coca-Cola used analytics automation to handle the complexity of multiple vendors with multiple needs while also developing reports on a company-wide level.14 As with many businesses and organizations, it had been relying on software that was developed several years earlier. This was an older, Excel-based solution that provided individual location data for each of the subgroups underneath the main umbrella organization. Unfortunately, the system was starting to get overburdened and hadn’t been updated in several years. The company needed a way to gather all of the data from multiple retail locations and consolidate the format and content for centralized reporting. It also needed a way to break down this data to provide location-specific reporting to the store owners.

The analytics manager needed to structure the data in such a way that he could use Tableau to create fast-responding visualizations. He turned to analytics automation for help, using the cleansing and consolidating tools to gather both company-based and customer-based data into one workflow. The support team from analytics automation provider Alteryx helped develop and evolve the workflow that generated his data set. The end result was exported into a Tableau Hyper file, from which he could generate easy-to-use, interactive, dynamic reports.

In the end, the use of analytics automation spread from his team to the organization’s finance, operations, and marketing teams. In a presentation on the project, he highlighted several benefits of analytics automation:

It eliminated reliance on third-party vendors and consultants to gather and analyze data.

It reduced reporting lead time by more than a month, making it possible to produce reports quarterly instead of semiannually.

It removed memory-intensive Excel files from the process and replaced them with a fluid, responsive, dynamic dashboard.

It eliminated the need for the manager to upload and print reports; instead, he could refresh a Tableau extract in seconds.

The implementation saved the company an estimated 60% on the reporting process.

This highlights an additional benefit of analytics automation: it does not have to function independently of other data tools. As noted in the discussion of data pulls, analytics automation software can connect to a wide variety of data sources, and it can also work alongside reporting and analytic tools. In the Coca-Cola example, the analytics automation tool ingested raw data and returned it in a reporting-friendly format, Tableau’s Hyper file. Analytics automation can therefore handle much of the data work itself while integrating cleanly with existing reporting platforms.

HCA Healthcare solved a similar problem with analytics automation.15 HCA is an organization of 185 hospitals across 19 states with over 30 million patient encounters each year. Its strategic analytics team wanted to provide operational and financial reporting to leadership, but in an organization that large, with leadership at many levels, delivering reports at the right level of detail was challenging. Top leadership wanted analysis of the entire organization, while functional managers focused on the specific areas they oversaw. The strategic analytics team also wanted to give local analytics teams tools to validate and improve local reporting.

As in many of our previous examples, the biggest challenge was the multitude of platforms and systems providing data. The data lived in several systems, including Oracle, Salesforce, and Teradata, and HCA needed a way to bring it together. Once again, analytics automation addressed the many data sets, the large data volumes, and the multiple levels of reporting the team needed to provide. The team built workflows that joined the data and aggregated it to each reporting level: one workflow for upper management, one for functional managers, and one for local analytics teams.
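Aggregating one consolidated data set to several reporting levels amounts to grouping the same rows by progressively finer keys. The sketch below is a minimal, hypothetical illustration, not HCA’s workflow: the record fields (`hospital`, `department`, `revenue`) and the `rollup` helper are assumptions, and the rows stand in for data already joined from the source systems.

```python
from collections import defaultdict

# Hypothetical consolidated records, as if already joined from several systems
records = [
    {"hospital": "H1", "department": "ER", "revenue": 120.0},
    {"hospital": "H1", "department": "Surgery", "revenue": 300.0},
    {"hospital": "H2", "department": "ER", "revenue": 80.0},
    {"hospital": "H2", "department": "Surgery", "revenue": 250.0},
]

def rollup(rows, keys, measure):
    """Sum `measure` over rows, grouped by the fields named in `keys`.

    An empty `keys` tuple collapses everything into one grand total.
    """
    totals = defaultdict(float)
    for row in rows:
        group = tuple(row[k] for k in keys)
        totals[group] += row[measure]
    return dict(totals)

# One output per audience, each at a different grain
executive = rollup(records, (), "revenue")                        # whole organization
functional = rollup(records, ("department",), "revenue")          # per functional area
local = rollup(records, ("hospital", "department"), "revenue")    # per facility and area

print(executive)   # {(): 750.0}
print(functional)  # {('ER',): 200.0, ('Surgery',): 550.0}
```

Because all three outputs come from the same consolidated rows, the executive total always reconciles with the functional and local figures, which is one reason to aggregate from a single joined source rather than from separate extracts.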

The end result for HCA Healthcare was the ability to automatically deliver data insights to all three levels of the organization. HCA’s associate vice president of analytics identified ease of use and format as critical to reaching all three audiences. Some recipients wanted to see the numbers and nothing more, while others wanted to click into the details. The analytics automation software produced results that met both needs. Through these workflows, the team delivered insights to managers at every level, regardless of their expertise or technical ability.

These examples show how analytics automation can be used in different ways. Both organizations used it to build repeatable processes that turned data into insights, giving them a better understanding of how their organizations ran. Those insights are inspiring other areas within each organization to consider analytics automation as well. This grassroots spread of data usage is common in many analytics automation implementations, and it is an excellent way to test the functionality and capabilities of an automated data process. Analytics automation also helped both organizations quickly identify the information relevant to their business so they could make effective decisions.

Bringing Analytics to Everyone

Imagine a retail store planning for the future. If the store can analyze historical data on its successes and failures to uncover patterns, it can determine the right dates to offer promotions, how to price its products, and whom to market to. Predictive analytics gives leaders and employees the knowledge they need to make the best decisions based on known data.

Analytics automation takes the complicated processes of analysis and makes them accessible. Its built-in capabilities allow a business user not only to quickly grab data but also to analyze it by connecting building blocks through a drag-and-drop interface. Because the interface is visual rather than code-based, users no longer need extensive knowledge of specialized software or coding to run an analysis.
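Conceptually, a drag-and-drop workflow is a chain of building blocks, where each block takes a data set and passes its output to the next. The Python sketch below models that idea only; it is not how any particular product is implemented, and the block names (`drop_incomplete`, `add_margin`) and the `workflow` helper are hypothetical.

```python
from functools import reduce

# Hypothetical building blocks an analyst might prepare once for others to reuse
def drop_incomplete(rows):
    """Cleaning block: discard rows that contain any missing value."""
    return [r for r in rows if all(v is not None for v in r.values())]

def add_margin(rows):
    """Enrichment block: add a profit margin column to each row."""
    return [{**r, "margin": r["profit"] / r["sales"]} for r in rows]

def workflow(*blocks):
    """Connect blocks left to right, like tools wired together on a canvas."""
    return lambda rows: reduce(lambda data, block: block(data), blocks, rows)

# Hypothetical store data with one incomplete record
stores = [
    {"store": "A", "sales": 100.0, "profit": 30.0},
    {"store": "B", "sales": 200.0, "profit": None},
    {"store": "C", "sales": 50.0, "profit": 5.0},
]

# The analyst's reusable preparation step...
prepare = workflow(drop_incomplete, add_margin)
# ...which a business user extends with a single filter of their own
high_margin = workflow(prepare, lambda rows: [r for r in rows if r["margin"] > 0.2])

print([r["store"] for r in high_margin(stores)])  # ['A']
```

The business user never touches the cleaning or enrichment logic; they reuse `prepare` as a single block, which mirrors the division of labor between analysts and business users described earlier.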

Historical data and analysis can reveal additional dimensions to analyze, key performance indicators to track, and ways to improve business success. Analytics automation moves this analytic process from data analysts to any end user. Combined with machine learning algorithms, it can focus insights on key indicators and build on them.

Take agriculture, for example. If machine learning software recognizes a correlation between the fertilizers used and the crops planted, it can remember that relationship and offer it as a potential insight when others query the data later. It can also compare that correlation against other dimensions of the data, such as crop, weather, location, and temperature, to search for additional relationships. Through this automated investigation, farmers gain insights on when to plant, what to plant, and where to plant it to get the best yields. The same insights can also guide equipment control and fertilizer spread to optimize plant growth and health.16 The farmer does not need to understand statistics or know how to write code; analytics automation provides an easier path to these insights.
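The automated search for relationships described above can be approximated by correlating each available dimension against an outcome and surfacing the strong ones. This is a simplified stand-in for what machine learning software might do, using plain Pearson correlation; the field records, units, the choice of yield as the outcome, and the 0.7 threshold are all hypothetical.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)

# Hypothetical field records: fertilizer (kg/ha), temperature (°C), rainfall (mm), yield (t/ha)
fields = [
    {"fertilizer": 50, "temperature": 22, "rainfall": 420, "yield": 3.1},
    {"fertilizer": 80, "temperature": 19, "rainfall": 380, "yield": 3.9},
    {"fertilizer": 110, "temperature": 24, "rainfall": 450, "yield": 4.6},
    {"fertilizer": 140, "temperature": 20, "rainfall": 400, "yield": 5.2},
    {"fertilizer": 170, "temperature": 23, "rainfall": 430, "yield": 5.8},
]

def screen_dimensions(rows, target, threshold=0.7):
    """Correlate every other column with `target` and keep the strong ones.

    A strong correlation is only a lead to investigate, not proof of cause.
    """
    ys = [row[target] for row in rows]
    findings = []
    for dim in rows[0]:
        if dim == target:
            continue
        r = pearson([row[dim] for row in rows], ys)
        if abs(r) >= threshold:
            findings.append((dim, round(r, 3)))
    return findings

print(screen_dimensions(fields, "yield"))
```

With this made-up data, only fertilizer clears the threshold; temperature and rainfall show weak correlations and are filtered out. A production tool would use far more data and more robust methods, but the screening idea is the same.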

Analytics automation also encompasses text analytics and natural language processing (NLP). As mentioned above, the data coming out of transactional systems and into reports is process specific. NLP translates that data into something that sounds more like regular conversation than a query. Instead of asking for the sum of profit divided by the sum of sales, the end user can simply ask, “What is my profit ratio?” Most of these tools can both generate natural language and respond to natural language queries. To do so, they can draw on additional data sources to generate synonyms for keywords and catalog variations of words. They can then break written questions down into the key components needed to produce an answer.
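The profit ratio example can be made concrete: the ratio is the sum of profit divided by the sum of sales, and a question-answering layer needs some way to map phrasings like “profit ratio” or “margin” onto that calculation. The sketch below uses naive keyword matching against a hypothetical synonym catalog; real NLP tools are far more sophisticated, and the data, the `SYNONYMS` table, and the `answer` helper are assumptions for illustration only.

```python
import re

# Hypothetical transactional rows; field names are illustrative only
sales_rows = [
    {"region": "East", "sales": 1000.0, "profit": 150.0},
    {"region": "West", "sales": 500.0, "profit": 100.0},
]

def profit_ratio(rows):
    # Sum of profit / sum of sales, not the average of per-row ratios
    total_sales = sum(r["sales"] for r in rows)
    total_profit = sum(r["profit"] for r in rows)
    return total_profit / total_sales

# Hypothetical synonym catalog mapping phrasings to one canonical metric
SYNONYMS = {
    "profit ratio": "profit_ratio",
    "profit margin": "profit_ratio",
    "margin": "profit_ratio",
    "total sales": "total_sales",
    "revenue": "total_sales",
}

METRICS = {
    "profit_ratio": profit_ratio,
    "total_sales": lambda rows: sum(r["sales"] for r in rows),
}

def answer(question, rows):
    """Find the longest known phrase in the question and compute its metric."""
    text = re.sub(r"[^a-z ]", " ", question.lower())
    for phrase in sorted(SYNONYMS, key=len, reverse=True):
        if phrase in text:
            return METRICS[SYNONYMS[phrase]](rows)
    raise ValueError(f"No known metric in question: {question!r}")

print(answer("What is my profit ratio?", sales_rows))
print(answer("How much revenue did we make?", sales_rows))  # 1500.0
```

Here the profit ratio is 250 / 1500, or about 0.167. Averaging the per-region ratios (0.15 and 0.20) would instead give 0.175, which is why the aggregate definition matters. Matching the longest phrase first keeps “profit margin” from being shadowed by the shorter “margin”.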

Stratasys, a manufacturer of three-dimensional printers and production systems, used analytics automation to communicate with its corporate partners.17 In recent years, demand for three-dimensionally printed products has grown exponentially, and so has the number of providers. Stratasys needed a way to dig through volumes of data from various sources and platforms to gain insights on the performance of the company as a whole. Before adopting analytics automation, its analysts had to pull pre- and post-sales transactional data from Salesforce, Excel files, and Oracle. They combined the data manually and analyzed it in a final Excel file or PowerPoint presentation. The process took days to run and required additional time to resolve issues with regional and partner data.

The self-service and multiple-source capabilities of analytics automation caught the attention of Stratasys analysts. The tool could not only combine the many data sets but also account for regional vendors, address data errors, and process results automatically. The team used these capabilities to combine data from pre- and post-sales, corporate partners, and other sources into a cohesive source of information for building reports. They fed the results into Tableau, which they used to generate information and insights for leadership. As a result, a report that used to take 5 hours to run now takes only 30 minutes.

The benefits of analytics automation didn’t end with simplifying the analytics team’s data workflow. Since implementing it, Stratasys has gained efficiency and improved communications by generating granular reports and insights for its corporate partners. The company has also used analytics automation to provide data to marketing and resellers to improve campaign success. Process mapping and documentation have improved too, because the process is now point-and-click instead of complex, multilevel Excel file maintenance.

Analytics automation is also having an impact on health care. The importance of data and insights in the medical world cannot be overstated: the correct use of data can mean the difference between life and death. Because much of the health care system has been around a long time, its data and analytics have aged with it, and many organizations still rely on antiquated systems and methodologies. Let’s see how one organization used analytics automation to bring data, analytics, and reporting to the next level.

Two large health care systems came together under an umbrella organization called SCL Health. With an influx of new clinics, patients, physicians, and staff, new challenges arose, involving not only people but also the inventory and vendor management needed to keep valuable medical supplies on the shelf. The following year, a team was created with the sole purpose of answering important questions about supplying the new organization, with a focus on inventory management and vendor relationships.18

Initially, the organization relied heavily on Excel spreadsheets. It compiled large data sets and simple visualizations into a single sheet, which was then emailed to supply team leadership. This approach was both ineffective and inefficient: the insights were not useful or interesting, and the reports took a prohibitive amount of time to generate. SCL needed another solution, a way to bring the data to the users who needed it and let them dig down to the granular level required for their specific unit. In short, it needed to bring the data to the people.

The team was introduced to analytics automation at its first meeting and went to work developing workflows to meet the organization’s needs. The first step was to consolidate the data sources, combining inventory, purchasing, contracts, and more. From there, the team developed additional workflows to correct errors and update the data with dynamic pricing for the items it used. Once the data was stable, the team used the output to build Tableau dashboards with multiple levels of interactivity, enabling end users to dig into the data and compare invoices to purchase orders.
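Comparing invoices to purchase orders is essentially a keyed match followed by field-by-field checks. The following is a minimal sketch of that reconciliation, not SCL Health’s workflow: the PO numbers, items, prices, the `find_discrepancies` helper, and the half-cent price tolerance are all hypothetical.

```python
# Hypothetical purchase orders and invoices, both keyed by PO number
purchase_orders = {
    "PO-1": {"item": "gloves", "qty": 100, "unit_price": 0.50},
    "PO-2": {"item": "syringes", "qty": 200, "unit_price": 0.25},
    "PO-3": {"item": "gauze", "qty": 50, "unit_price": 1.00},
}
invoices = {
    "PO-1": {"qty": 100, "unit_price": 0.50},
    "PO-2": {"qty": 200, "unit_price": 0.30},  # billed above the agreed price
    "PO-4": {"qty": 10, "unit_price": 5.00},   # no matching purchase order
}

def find_discrepancies(pos, invs, tolerance=0.005):
    """Return (po_id, issue) pairs for every mismatch between the two sets."""
    issues = []
    for po_id, inv in sorted(invs.items()):
        po = pos.get(po_id)
        if po is None:
            issues.append((po_id, "invoice without purchase order"))
            continue
        if inv["qty"] != po["qty"]:
            issues.append((po_id, "quantity mismatch"))
        # Compare prices with a tolerance to avoid floating-point noise
        if abs(inv["unit_price"] - po["unit_price"]) > tolerance:
            issues.append((po_id, "price mismatch"))
    # Orders that have not been billed at all
    for po_id in sorted(pos.keys() - invs.keys()):
        issues.append((po_id, "purchase order not yet invoiced"))
    return issues

for po_id, issue in find_discrepancies(purchase_orders, invoices):
    print(po_id, issue)
```

For this sample, the check flags the overpriced PO-2 invoice, the orphaned PO-4 invoice, and the uninvoiced PO-3 order. In a dashboard, rows like these are what an end user would drill into.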

The end results speak for themselves. The organization eliminated unnecessary software subscriptions, reduced use of incorrect vendors, and reduced discrepancies. In total, it has cut hundreds of thousands of dollars in waste annually. By bringing data to the people, analytics automation has given the organization a resource for identifying cost savings. The software’s success has also expanded its scope: the organization now uses it for prime medical and surgical distribution conversion, backorder management, and product recall management.

Faster Analytics and Better Decisions

As we’ve seen, analytics automation is being used to overcome the technical challenges of wide-ranging, high-volume data sets. It simplifies analysis, reporting, and insight delivery through automation, saving time, effort, and money. More importantly, its ease of use and automation make it possible for many of these companies to let more people within the organization transform data into insights.

The analytic building blocks also offer deep functionality, including data parsing, grouping, modeling, and forecasting. Because they can connect to multiple data types and incorporate integrated data resources, users can also examine the business spatially and tie it to common data sets such as census data. Let’s look at how some organizations are using these capabilities.

A management consulting firm specializing in the life sciences industry used analytics automation to examine health care distribution.19 The firm wanted to understand health care distribution across US regions in order to optimize productivity based on workload and potential sales. In short, it wanted to tie health care and drug utilization data to geography to determine how best to allocate staff. This example is unusual because the underlying data was not generated by the firm itself; instead, it is a combination of spatial data and drug utilization data provided by the government. It consists of data from about 12 billion health care claims tied together with geographic and demographic population data.

So what did the firm do, and how did it use this data to generate insights? The analytics automation user combined two workflows. The first combined the data described above and was run a few times a year to stay current. The second took Excel-based input from a user, containing information on drug types, health care services, and workforce and workload targets, and processed it with the statistical language R to produce a regional workload optimization (see Figure 8). The complete analysis ran in a day rather than months. It also saved the organization money by eliminating the need for a third-party vendor to complete the analysis.
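The firm’s R model is not described in detail, so the following Python sketch swaps in a deliberately simple technique to illustrate what a regional workload allocation might look like: split a fixed headcount across regions in proportion to workload, using the largest-remainder method so the counts add up exactly. The region names, workload figures, and the `allocate_staff` helper are hypothetical, and a real optimization would weigh many more constraints.

```python
import math

def allocate_staff(workload_by_region, total_staff):
    """Split `total_staff` across regions in proportion to workload.

    Uses the largest-remainder method so whole-person counts sum exactly.
    """
    total_work = sum(workload_by_region.values())
    # Exact (fractional) share of staff each region would get
    quotas = {r: total_staff * w / total_work for r, w in workload_by_region.items()}
    alloc = {r: math.floor(q) for r, q in quotas.items()}
    leftover = total_staff - sum(alloc.values())
    # Hand the remaining staff to regions with the largest fractional parts
    by_remainder = sorted(quotas, key=lambda r: quotas[r] - alloc[r], reverse=True)
    for r in by_remainder[:leftover]:
        alloc[r] += 1
    return alloc

# Hypothetical annual claim volumes used as a proxy for workload
workload = {"Northeast": 4_200_000, "South": 6_100_000, "Midwest": 3_300_000, "West": 4_400_000}

print(allocate_staff(workload, 25))
# {'Northeast': 6, 'South': 8, 'Midwest': 5, 'West': 6}
```

Rounding each region’s share independently could produce 24 or 26 people from a 25-person budget; the largest-remainder step is what guarantees the allocation matches the headcount the user supplied.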