NETWORKS HAVE BECOME INCREASINGLY DISTRIBUTED yet remain interconnected. The number and types of threats to computer systems have grown. Meanwhile, the number of forensic tools to prevent unauthorized access and thwart illegal activity has also grown.

Computers store more information today than in the past. The price of storage media has decreased while the capacity has increased. Many people carry flash drives, smartphones, and iPods that contain gigabytes of information. Forensic specialists must sift through a wealth of devices and data as they search for evidence.

As threats have grown and changed, so have the tools for conducting forensic investigations. At one time, forensic inspectors were limited to conducting research only after an unauthorized or illegal activity had taken place. They are now more likely to capture evidence in real time—both on disk and in a computer's memory. In the past, many forensic examiners shut down a system before going to work on it. Many examinations now focus on malware exploitation. Examiners must often collect data from the memory of a running machine that would be lost if the machine were to lose power. Engineers have developed tools to capture data from live, up-and-running systems.

The preceding chapters discuss data collection using an offline approach, such as removing a suspect hard drive and making a write-protected image of the contents. Forensic investigators can also collect information in real time—that is, the actual time during which a process takes place. For example, they can collect data from random access memory (RAM). This chapter discusses live system forensics, which involves uncovering illicit activity and recovering lost data by searching memory in real time.

Forensic investigators can take advantage of a wide variety of system forensics tools. Some are open source and some are commercial tools. These tools let you safely make copies of digital evidence and perform routine investigations. Many existing tools provide intuitive user interfaces. In addition, forensic tools prevent you from having to deal with details, such as physical disk organization, or the specific structure of complicated file types, such as the Windows Registry.

Unfortunately, the current generation of system forensics tools falls short in several ways. These tools can't handle the recent massive increases in storage capacity. Storage capacity and bandwidth available to consumers are growing extremely rapidly. At the same time, unit prices are dropping dramatically. In addition, consumers want to have everything online—including music collections, movies, and photographs. These trends are resulting in even consumer-grade computers and portable devices having huge amounts of storage. From a forensics perspective, this translates into rapid growth in the number and size of potential investigative targets. To deal with all these targets, you'll need to scale up both your machine and human resources.

The traditional investigation methodology was to use a single workstation to examine a single evidence source, such as a hard drive. However, this approach doesn't work with storage capacities of hundreds of gigabytes or terabytes. In some cases, you can speed up traditional investigative steps, such as keyword searches or image thumbnail generation, to meet the challenge of huge data sets. Even so, the forensic world needs much more sophisticated investigative techniques. For example, manually poring over a set of thousands or tens of thousands of thumbnails to discover target images may be difficult but possible. But what will an investigator do when faced with hundreds of thousands—or even millions—of images? The next generation of digital forensics tools must employ high-performance computing and sophisticated data analysis techniques.

An interesting trend in next-generation digital forensics is live system forensics—analysis of machines that may remain in operation as you examine them. You first read about live system forensics in Chapter 1. The idea is appealing particularly for investigation of mission-critical machines. These machines would suffer from the substantial downtime required to do typical dead system analysis by first shutting down the machines. Also, some data exists only in RAM.

A prominent argument among system forensics specialists is whether to conduct analysis on a dead system or a live system. Regardless of the mode you use, keep in mind the three A's:

Acquire evidence without altering or damaging the original.

Authenticate that the recovered evidence is the same as the originally seized data.

Analyze the data without modifying it.
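The "authenticate" step is commonly carried out by comparing cryptographic hashes of the original and the copy. The following is a minimal sketch of that idea, not a prescribed procedure. The function names, file names, and the choice of SHA-256 are assumptions made for illustration, and the demo uses small stand-in files rather than real seized media.

```python
import hashlib
import os
import tempfile


def sha256_of_file(path, chunk_size=1024 * 1024):
    """Return the SHA-256 hex digest of a file, read in fixed-size chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def images_match(original_path, copy_path):
    """True when both files hash to the same SHA-256 value."""
    return sha256_of_file(original_path) == sha256_of_file(copy_path)


def demo():
    # Stand-in files; a real case would hash the seized media and its image.
    with tempfile.TemporaryDirectory() as workdir:
        original = os.path.join(workdir, "seized.bin")
        good_copy = os.path.join(workdir, "image_ok.bin")
        bad_copy = os.path.join(workdir, "image_altered.bin")
        payload = b"example evidence bytes" * 1000
        for path, data in ((original, payload), (good_copy, payload),
                           (bad_copy, payload + b"\x00")):
            with open(path, "wb") as handle:
                handle.write(data)
        return images_match(original, good_copy), images_match(original, bad_copy)


if __name__ == "__main__":
    print(demo())
```

Reading in chunks keeps memory use flat even for very large images. A single changed byte in the copy produces a different digest, which is what makes the comparison useful as proof of integrity.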

Tip

Whether using traditional or live system forensics, avoid contaminating or corrupting evidence. If you contaminate a crime scene, you probably can't use the evidence gathered.

Unplugging a machine before acquiring an image of the hard drive has several serious drawbacks:

It leads to data corruption and system downtime. It therefore causes revenue loss.

The development of new criminal techniques leaves law enforcement techniques outdated. You can't always use dead digital forensics to gather sufficient evidence to lead to a conviction.

In an attempt to defeat dead system analysis, criminals have resorted to the widespread use of cryptography. While you can create a complete bit-for-bit hard drive image of a suspect system, you can't access its data if the perpetrator has encrypted the system. You'll need the drive's unique password to decrypt it. Investigators often have trouble cracking the password or getting the suspect's cooperation.

The need for acquiring network-related data, such as currently open ports, has grown dramatically. When a computer powers down, it loses volatile information. Therefore, use live system forensics analysis techniques on volatile data.

Traditional forensic rules require you to gather and examine every piece of available data for evidence. As mentioned earlier in this chapter, modern computers hold gigabytes, and even terabytes, of data. It is increasingly difficult to use modern tools to locate vital evidence within such massive volumes of data. Log files also tend to increase in size and dimension, complicating a forensic investigation even further.

Note

Criminals aren't the only ones who use whole-disk encryption. It's now a default feature of government and corporate system configurations.

Although dead forensic acquisition techniques can produce a substantial amount of information, they can't recover everything. Much potential evidence is lost when you remove the power. To avoid losing any valuable evidence when you turn off a device, forensic techniques have expanded to include evidence acquisition from a live system. Instead of just capturing an image of a persistent storage device, live forensic acquisition captures an image of a running system. A properly acquired live image includes volatile memory images and images of selected persistent storage devices, such as disk drives and universal serial bus (USB) devices. Live forensic acquisition images have the advantage of capturing state information of a running system instead of only producing historical information of a dead system.

Using live forensic acquisition techniques has advantages. It also involves some barriers. For example, proving that a live image is forensically sound and unaltered is more complex than doing so for a dead system image. Running systems write to memory many times each second. Each program routinely accesses different areas of memory in its normal operation. The overall procedures in live forensics are similar to dead system image acquisition. However, additional controls are necessary to ensure that all images acquired from a live system are forensically sound.

Live forensic acquisition methods are very similar to the methods commonly used on dead systems. In both cases, you collect, examine, analyze, and report on evidence. Whether you take evidence from a live or dead system, provide proof that you've protected the evidence's integrity. You'll also have to prove that you've taken proactive steps to ensure that the evidence didn't change during the acquisition process.

One of the first steps when acquiring data in an investigation is to determine whether you will use live or dead forensic acquisition techniques. If the computer or device has already been turned off, the best choice is to use dead forensic acquisition techniques. In this case, follow the procedures covered in Chapter 7, "Collecting, Seizing, and Protecting Evidence." Any data that was in volatile memory was lost when power was removed. Dead forensic acquisition techniques provide evidence from only the remaining persistent data.

If the computer or device hasn't been turned off since the decision was made to acquire evidence, you have the option of using live forensic acquisition techniques to collect evidence. Using live forensic techniques can provide more information about the state of the suspect computer or device than similar dead acquisition procedures. If you choose to acquire evidence using a live acquisition process, follow these steps:

Choose between a local or remote connection to acquire evidence. Local connections generally provide a higher transfer rate and make it harder for others to intercept the transfer. Any time interception of evidence collection is a possibility, so is tampering with the evidence. If local connections are possible, choose them rather than remote network connections.

Plan acquisition tasks based on whether the process will be overt or covert. Obviously, covert acquisition should not alert any user that you are collecting evidence.

Acquire all evidence through a write blocker. The courts will require you to demonstrate that you maintained the state of the evidence and didn't modify it in any way. Using a write blocker can provide the assurance that the acquisition process didn't taint the evidence. Depending on the methods you use to acquire evidence, you can employ either hardware or software write blockers.

Even if you have chosen to pursue a live forensic investigation, you have no guarantees that you will get more valuable evidence than you'd get by using dead forensic acquisition techniques. A live system in which a user is logged on may allow you to acquire additional evidence. However, you'll be limited by the access restrictions of the logged-in user. A user account that has administrative or superuser privileges will allow you to access more resources and carry out more tasks than a user account with lesser privileges. However, many user accounts lack this additional level of capability. For instance, many organizations limit what files standard users can access and don't allow them to install new software. You'll have an easier time acquiring evidence when using a user account with elevated privileges.

Another common situation you may encounter during a live forensic investigation is the use of virtual environments. A virtual machine is a software program that appears to be a physical computer and executes programs as if it were a physical computer. You commonly use a virtual machine when you need to run one operating system on a computer that is already running another. For example, you can run one or more Linux virtual machines on a computer running a Microsoft Windows operating system. Virtual machines are popular in organizations that want to save IT costs by running several virtual machines on a single physical computer. Two of the most popular software packages that implement virtual machines are VMware and VirtualBox.

Whether the suspect computer is a physical machine or virtual machine, you follow basically the same methods. However, if you find that your suspect computer is a virtual machine, you must follow a few additional steps. Because a virtual machine runs as a program on a physical machine, conduct forensic acquisition on the physical computer as well. Many physical computers that host virtual machines tend to host multiple virtual machines. Part of your evidence collection activities should include collecting images of all virtual machines on the same physical computer as the suspect computer. Other virtual machines may contain evidence related to the suspect computer as well.

Today's virtual machine software does a good job of emulating physical computers. It can be difficult to determine whether an environment is operating in the virtual or physical space. You may not immediately know whether a suspect computer is a virtual or physical computer. You can look for a number of clues to determine whether you're examining a virtual machine. Check in system files for notes or vendor identification strings. Common virtual machine software installs easily recognizable device drivers, network interfaces, virtual machine BIOS, and specific helper tools. Look for the existence of any of these telltale indications that you're examining a virtual environment. Another common technique is to use hardware fingerprinting to detect hardware that's always present in a virtual machine. For example, if you're examining a Mac environment but hardware fingerprinting shows that Microsoft Corporation made your motherboard, you can be sure you're looking at a virtual machine. You can also find software that detects the presence of virtual machines. These tools can be handy but may affect the soundness of the evidence you collect. Always investigate tools you plan to use to ensure that they protect the integrity of your evidence. The following are examples of tools that can help detect virtual machines:

ScoopyNG (see http://www.trapkit.de/research/vmm/scoopyng/index.html)

DetectVM (see http://ctxadmtools.musumeci.com.ar)

Virt-what (see http://people.redhat.com/~rjones/virt-what/)

Imvirt (see http://sourceforge.net/projects/imvirt/)
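The vendor-string check described above can be sketched in a few lines. This is an illustration only and does not reproduce how any of the listed tools work. The marker list, the field names, and the sample values are assumptions, and a real examination would read such strings from the system with a tool already vetted for forensic soundness.

```python
# Illustrative marker substrings; not an exhaustive or authoritative list.
VM_MARKERS = ("vmware", "virtualbox", "innotek", "qemu", "xen", "virtual machine")


def find_vm_indicators(identity_strings):
    """Return (field, value) pairs whose value contains a known VM marker."""
    hits = []
    for field, value in identity_strings.items():
        lowered = value.lower()
        if any(marker in lowered for marker in VM_MARKERS):
            hits.append((field, value))
    return hits


# Hypothetical identification strings gathered from two suspect systems.
sample_vm = {
    "bios_vendor": "innotek GmbH",
    "system_product": "VirtualBox",
    "nic_driver": "Intel PRO/1000",
}
sample_physical = {
    "bios_vendor": "Dell Inc.",
    "system_product": "Latitude E6400",
}

if __name__ == "__main__":
    print(find_vm_indicators(sample_vm))
    print(find_vm_indicators(sample_physical))
```

A match is only a clue, not proof. Strings can be altered, so treat the result as one indicator among the several described above.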

Live acquisition gives you the ability to collect volatile data that can contain valuable information, such as network and system configuration settings. This volatile data can provide evidence to help prove accusations or claims in court. Examples of volatile data include a list of current network connections and currently running programs. This information would not be available from dead forensic acquisition.

The process of collecting useful volatile data limits your actions more than when you're conducting dead forensic acquisition activities. Live forensic acquisition yields the most valuable data when you collect that data before it changes. As a computer continues to run, it changes more and more of its volatile memory. The sooner you collect evidence, the more pertinent it will be to your investigation. Because volatile memory changes continually, the passing of time decreases how much useful information you can expect to collect. Also, just the presence of another program you're using to collect volatile data may change existing volatile data. It's important that you prioritize what data you need for your investigation and collect it as soon as possible. Focus on the most volatile data first and then collect data that is less sensitive to the passage of time. In other words, collect the data you most need and that is most likely to change in the near term. You can collect data that is not as likely to change later.
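One way to make this prioritization explicit is to rank each collection task by how quickly its data is expected to change, then work through the list from most to least volatile. The sketch below assumes a simple numeric ranking. The task names and the scores are hypothetical and would need to be set for each case.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CollectionTask:
    name: str
    volatility: int  # higher means the data changes or disappears sooner


def plan_collection(tasks):
    """Order tasks so the most volatile data is collected first."""
    return sorted(tasks, key=lambda task: task.volatility, reverse=True)


# Hypothetical rankings for illustration; adjust them to the case at hand.
TASKS = [
    CollectionTask("disk image", 1),
    CollectionTask("running processes", 4),
    CollectionTask("network connections", 4),
    CollectionTask("memory dump", 5),
    CollectionTask("logged-on users", 3),
]

if __name__ == "__main__":
    for task in plan_collection(TASKS):
        print(task.volatility, task.name)
```

Writing the plan down before touching the system also gives you a record of why you collected evidence in the order you did.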

Although live acquisition can provide much more useful information than dead forensic acquisition, it poses unique challenges. The richness of today's software provides many options for users to customize their own environments. A forensic specialist must possess a wide breadth of knowledge of common computer hardware, operating systems, and software. Users have many different ways to configure each computer component, and knowing how these components operate is crucial to successfully collecting solid information. For example, one question is whether to terminate a program. Many Web browsers make it easy to delete history and temporary files upon exit from the program. In these cases, you may not want to terminate the program before collecting evidence. Other programs may automatically save data periodically and overwrite previous evidence you may need. In such a case, collect evidence before the program overwrites it. It is also helpful to understand the general reasons users may choose some configuration options. If you find that a user has configured a Web browser to automatically delete all history, you may be investigating someone who has something to hide. While this is not always the case, it may be productive to look for other signs that someone is hiding or cleansing evidence.

Evidence that you can use in court must be in pristine condition. It must be in the same state it was in when you collected it. Any action that changes evidence will likely make the evidence inadmissible and useless in court. Evidence that was changed in the process of acquisition or analysis is forensically unsound. Live forensic acquisition poses more dangers to the soundness of evidence than dead forensic acquisition. Any action, such as examining the central processing unit (CPU) registers, can actually cause the CPU registers, RAM, or even hard drive contents to change values. Any change that is a result of acquisition activities violates the soundness of the evidence. Because today's operating systems use virtual memory, a portion of any program is likely to be swapped, or paged, out to the disk drive at some point during its lifetime. Even the use of write blockers will not protect your disk drive from modifications due to swapping and paging. This common operating system behavior can ruin evidence. It is important that you understand how the live forensic acquisition process works and how to protect evidence from accidental changes.

Slurred images are another common problem with live analysis. A slurred image is similar to a photograph of a moving object. In the context of live forensic acquisition, a slurred image is the result of acquiring a file as it is being updated. Even a small file modification can cause a problem because the operating system reads the metadata section of the hard disk before accessing any file. If a file or its metadata in the file system changes after the operating system has read the metadata but before it acquires the data, the metadata and data sectors may not totally agree.
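The following toy simulation shows how such a mismatch arises. It is a sketch under simplifying assumptions: the `ToyFile` class, the recorded size standing in for file system metadata, and the `writer` callback standing in for activity on the live system are all invented for illustration.

```python
class ToyFile:
    """A toy file: metadata (recorded size) stored apart from the data."""

    def __init__(self, data):
        self.data = bytearray(data)
        self.size = len(self.data)

    def append(self, more):
        self.data.extend(more)
        self.size = len(self.data)


def acquire(toy_file, writer=None):
    """Read metadata first, then data; an optional writer runs in between."""
    recorded_size = toy_file.size      # step 1: metadata is read
    if writer is not None:
        writer(toy_file)               # the live system updates the file
    captured = bytes(toy_file.data)    # step 2: data is read
    return recorded_size, captured


def is_slurred(recorded_size, captured):
    """The image is slurred when metadata and data disagree."""
    return recorded_size != len(captured)


if __name__ == "__main__":
    quiet = acquire(ToyFile(b"log line 1\n"))
    busy = acquire(ToyFile(b"log line 1\n"),
                   writer=lambda f: f.append(b"log line 2\n"))
    print(is_slurred(*quiet), is_slurred(*busy))
```

In the second acquisition the recorded size describes the file as it was before the update, while the captured bytes describe it afterward.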

Note

Because computers update volatile memory constantly, it is difficult to acquire a true snapshot of memory. The memory changes during the acquisition activity, and the evidence collected represents a span of time. Write blockers generally don't protect volatile memory from write operations, and the changing nature of memory makes it difficult, if not impossible, to create checksums to validate the data's integrity.

Anytime you use live forensic acquisition, recognize that external influences may affect your investigation. Part of your collection process should be to ensure the authenticity and reliability of evidence, especially when acquiring evidence remotely using a network connection. Advances in technology have made anti-forensic devices and software available to a determined person. Suspects can use a number of tools to interfere with or even totally invalidate your evidence collection efforts. One of the simplest methods they use is to monitor a computer for forensic activity and then destroy all evidence before you can collect it. Other programs make it easy to clean up evidence before being detected or just hide it from prying eyes. The following are some programs that remove or hide evidence of activity:

Evidence Eliminator (see http://www.evidence-eliminator.com)

Track Eraser Pro (see http://www.acesoft.net)

TrueCrypt (see http://www.truecrypt.org)

Invisible Secrets (see http://www.invisiblesecrets.com)

CryptoMite (see http://www.baxbex.com)

As discussed in earlier chapters, volatile data is vital to digital investigation. In traditional computer forensics, where you carry out an investigation on a dead system such as a hard disk, data integrity is the first and foremost issue for the validity of digital evidence. In the context of live system forensics, you acquire volatile data from a running system. Due to the ever-changing nature of volatile data, it is impossible to verify this data's integrity. In addition, data consistency is an especially critical problem with data collected on a live system. This section presents a UNIX-based model to describe data inconsistencies related to live system data collection.

Traditional computer forensics focuses on examining permanent nonvolatile data. This permanent data exists in a specific location and in a file system-defined format. This data is static and persistent. Therefore, you can verify its integrity in the course of legal proceedings from the point of acquisition to its appearance in court.

On a live system, some digital evidence exists in the form of volatile data. The operating system dynamically manages this volatile data. For example, system memory contains information about processes, network connections, and temporary data used at a particular point in time. Unlike nonvolatile data, memory data vanishes and leaves behind no trail after the machine is powered off. You have no way to obtain the original content. Therefore, you have no way to verify the digital evidence obtained from the live system or the dump. The dynamic nature of volatile data makes verifying its integrity extremely difficult.

To produce digital data from a live system as evidence in court, it is essential to justify the validity of the acquired memory data. One common approach is to acquire volatile memory data in a dump file for offline examination. A dump is a complete copy of every bit of memory or cache recorded in permanent storage or printed on paper. You can then analyze the dump electronically or manually in its static state.

Programmers have developed a number of toolkits to collect volatile memory data. These automated programs run on live systems and collect transient memory data. These tools suffer from one critical drawback: such a tool relies heavily on the underlying operating system, so running it on a compromised system could affect the reliability of the collected data. Some response tools may even substantially alter the digital environment of the original system and adversely affect the dumped memory data. As a result, you may have to study those changes to determine whether the alterations have affected the acquired data. In addition, data in memory is not consistently maintained during system operation. This issue poses a challenge for computer forensics.

Data consistency is a problem in live system forensics because data is not acquired at a single moment. While a system is running, it is impossible to freeze the machine state during data acquisition. Even the most efficient method introduces a time difference between the moment you acquire the first bit and the moment you acquire the last bit. For example, the program may execute function A at the beginning of the memory dump and execute function B at the end.

The data in the dump may correspond to different execution steps somewhere between function A and function B. Because you didn't acquire the data at a single moment, data inconsistency is inevitable in the memory dump.
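A small simulation makes the effect concrete. The sketch below assumes a toy model in which memory is a list of pages, each holding a "generation" number, and the running process advances every page by one generation each time the dump copies a page. None of this models a real operating system. It only shows why the first and last pages of a dump describe different moments.

```python
def dump_while_running(page_count):
    """Copy one page per tick while a toy process rewrites every page each tick."""
    memory = [0] * page_count          # every page starts at generation 0
    dump = []
    for index in range(page_count):
        dump.append(memory[index])     # acquire one page
        # The process keeps running: every page moves to the next generation.
        memory = [generation + 1 for generation in memory]
    return dump


def is_consistent(dump):
    """A consistent dump holds pages from a single moment (one generation)."""
    return len(set(dump)) <= 1


if __name__ == "__main__":
    dump = dump_while_running(4)
    print(dump, is_consistent(dump))
```

With four pages, each copied page belongs to a later generation than the one before it, so no single point in time matches the whole dump.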

Tip

Although the acquired memory is inconsistent, a considerable portion of it may be useful digital evidence. You can reduce disputes over this evidence by distinguishing usable data from inconsistent data in a memory dump.

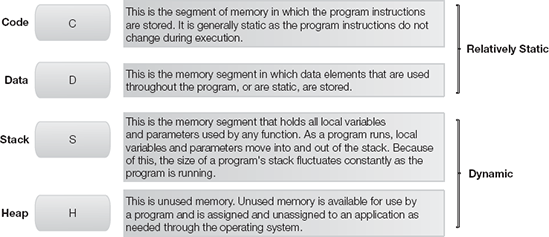

To study the consistency problem, you need to understand the basic structure of memory. When a program is loaded into memory, it is basically divided into segments. The structure may not be the same for all hardware and operating systems, but the concept of memory division is consistent. Windows, for example, has four segments (see Figure 12-1):

Code (C): The code segment contains the compiled code and all functions of a program. The program's executable instructions reside in this segment. Normally, the data stored in the code segment is static and should not be affected while the program is running. The other three segments contain data that the program uses and manipulates as it is running.

Data (D): The data segment is used for global variables and static variables. The data in the data segment is quite stable. It exists for the duration of the process.

Stack (S): Memory in the stack segment is allocated to local variables and parameters within each function. This memory is allocated based on the last-in, first-out (LIFO) principle. When the program is running, program variables use the memory allocated to the stack area again and again. This segment is the most dynamic area of process memory. The data within this segment changes constantly as the program makes its various function calls.

Heap (H): Dynamic memory for a program comes from the heap segment. A process may use a memory allocator such as malloc to request dynamic memory. When this happens, the address space of the process expands. The data in the heap area can exist between function calls. The memory allocator may reuse memory that has been released by the process. Therefore, heap data is less stable than the data in the data segment.

When a program is running, the code, data, and heap segments are usually placed in a single contiguous area. The stack segment is separate from the other segments and expands within its allocated space. System memory holds many processes, belonging either to the operating system or to the applications it supports, so you can view memory as a large array that contains many segments for different processes. In a live system, process memory grows and shrinks, depending on system usage and user activities. This growth and shrinking is related either to the growth of heap data or to the expansion and release of stack data. Data in the growing heap and stack segments causes inconsistency in the data contained in memory as a whole, and the stack data has a greater effect than the heap data. The data in the code segment, by contrast, is static and remains intact at all times for a particular program or program segment. Such consistent data is usually dormant and not affected when a process is running in memory. When you obtain a process dump of a running program, data in the code segment remains unchanged because that segment contains only the code and functions of the program.

The global, static, heap, and stack data can be partitioned within a running process. For example, Di, Hi, and Si (where i = 1, 2, 3, ...) can exist in different segments. In an ideal case, when a snapshot is taken of a process at time t, the memory dump is consistent with respect to that point in time. In reality, however, it is impossible to obtain an instantaneous memory snapshot. Memory dumping takes time, so the dumping process spans a time interval.

For a very fast memory dump, the data in C should be consistent, and data in D may be consistent, depending on the program usage. The data in H is likely to be inconsistent because the segment may grow as the program requests more dynamic memory. The data in S is highly likely to be changing due to its dynamic data structure. Variables for the process functions are continually used and reused in this segment. This causes inconsistency in part of the dumped data collected.

The data in C is relatively static and remains consistent in a memory dump. The global and static data in D are less volatile and may remain consistent. Due to the special functionality of heap and stack, the data stored within those segments are more dynamic and may be inconsistent.

Each process has its own allocated space in system memory. This space may include both physical and virtual addresses. Locating the heap and stack segments within a logical process dump helps you separate the more volatile regions from the stable ones and so achieve maximum data validity.

To locate the heap segment, use the system call sbrk to reveal the address space of a process. When you invoke sbrk with 0 as the parameter, it returns a pointer to the current end of the heap segment. The system command pmap displays information about the address space of a process, as well as the size and address of the heap and/or stack segment.
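As an illustration of locating these segments, the sketch below parses an address-space listing and reports the heap and stack ranges. It assumes the Linux `/proc/<pid>/maps` text layout, in which the heap and the main stack carry the labels `[heap]` and `[stack]`. Other UNIX systems present this information differently. The sample listing is hypothetical, and `find_segments` is a name invented for this example.

```python
def find_segments(maps_text, names=("[heap]", "[stack]")):
    """Return {name: (start, end, size_bytes)} for the named mappings."""
    segments = {}
    for line in maps_text.splitlines():
        fields = line.split()
        # Labeled mappings have six fields; the label is the last one.
        if len(fields) < 6 or fields[5] not in names:
            continue
        start_text, end_text = fields[0].split("-")
        start, end = int(start_text, 16), int(end_text, 16)
        segments[fields[5]] = (start, end, end - start)
    return segments


# Hypothetical excerpt in the Linux /proc/<pid>/maps layout.
SAMPLE_MAPS = """\
00400000-0040b000 r-xp 00000000 08:01 1048602 /bin/cat
0060a000-0060b000 rw-p 0000a000 08:01 1048602 /bin/cat
01c72000-01c93000 rw-p 00000000 00:00 0 [heap]
7ffd1a2b4000-7ffd1a2d5000 rw-p 00000000 00:00 0 [stack]
"""

if __name__ == "__main__":
    for name, (start, end, size) in find_segments(SAMPLE_MAPS).items():
        print(f"{name}: {start:#x}-{end:#x} ({size} bytes)")
```

On a Linux system you could pass the contents of `/proc/self/maps` to `find_segments` to inspect the current process. Remember that running any such code on a suspect machine changes its memory.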

When gathering digital evidence, you can't always just pull the plug and take the machine back to the lab. As technology continues to change, you must adopt new methods and tools to keep up. You must know how to capture an image of the running memory and perform volatile memory analysis.

Standard RAM size is now between 2 GB and 8 GB, malware migrates into memory, and suspects commonly use encryption. Therefore, it is no longer possible to ignore computer memory during data acquisition and analysis. Traditionally, the only useful approach to investigating memory was live response. This involved querying the system using application programming interface (API)-style tools familiar to most network administrators. A first responder would look for rogue connections or mysterious running processes. It was also possible to capture an image of the running memory. Until recently, short of using a string search, it was difficult to gather useful data from a memory dump.

The past few years have seen rapid development in tools focused exclusively on memory analysis. These tools, as described in the following sections, include PsList, ListDLLs, Handle, Netstat, FPort, Userdump, Strings, and PSLoggedOn. The following sections show how you use these tools with the following virtual environment scenario:

A Windows XP, Service Pack 2, virtual machine has an IP address of 192.168.203.132.

Use Netcat to establish a telnet connection on port 4444, process ID (PID): 3572, with a second machine at 192.168.203.133.

Install and run MACSpoof, PID: 3008.

This machine is compromised. The FUTo rootkit and a ProRat server listening on port 5110 are installed on the machine. The Netcat and MACSpoof processes are then hidden using the FUTo rootkit.

The following sections discuss two possible ways to approach the compromised system. The first approach is a live response process using a variety of tools. The second is a volatile memory analysis of a static memory dump, using open source memory analysis tools.

One technique for approaching a compromised system is live response. In live response, you survey the crime scene, collect evidence, and at the same time probe for suspicious activity. In a live response, you first establish a trusted command shell. In addition, you establish a method for transmitting and storing the information on a data collection system. One option is to redirect the output of the commands on the compromised system to the data collection system. A popular tool for this is Netcat, a network debugging and investigation tool that transmits data across network connections. Another approach is to insert a USB drive and write all query results to that external drive. Finally, attempt to bolster the credibility of the tool output in court.

During a live interrogation of a system, the state of the running machine is not static. You could run the same query multiple times and produce different results, based on when you run it. Therefore, hashing the memory is not effective. Instead, compute a cryptographic checksum of the tool outputs and make a note of the hash value in the log. This would help dispel any notion that the results were altered after the fact. To establish the trusted command shell, you can use a live response tool such as HELIX, which runs from a Linux bootable CD.
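The checksum-and-log step can be sketched as follows. This is a minimal illustration, assuming SHA-256 as the checksum and a plain Python list as the log. The function names and the record fields are invented for this example, and the sample output is not real tool output.

```python
import hashlib
from datetime import datetime, timezone


def log_tool_output(tool_name, output_bytes, log):
    """Hash a tool's captured output and append a record to the log list."""
    record = {
        "tool": tool_name,
        "sha256": hashlib.sha256(output_bytes).hexdigest(),
        "bytes": len(output_bytes),
        "recorded_utc": datetime.now(timezone.utc).isoformat(),
    }
    log.append(record)
    return record


def output_unaltered(record, output_bytes):
    """Re-hash the stored output and compare it with the logged value."""
    return hashlib.sha256(output_bytes).hexdigest() == record["sha256"]


if __name__ == "__main__":
    case_log = []
    captured = b"placeholder for captured tool output\n"
    entry = log_tool_output("pslist", captured, case_log)
    print(entry["sha256"], output_unaltered(entry, captured))
```

The hash does not prove that the output reflects the true state of the machine. It only shows that the stored output is the same as what was captured at collection time.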

When the data collection setup is complete, begin to collect evidence from the compromised system. The tools used in this exercise are PsList, ListDLLs, Handle, Netstat, FPort, Userdump, Strings, and PSLoggedOn. This is not meant to be an exhaustive list. Rather, these are representative of the types of tools available. The common thread for the tools used here is that each relies on native API calls to some degree. Thus the results are filtered through the operating system.

Note

PsList, ListDLLs, Handle, Strings, and PsLoggedOn are all Microsoft Sysinternals Troubleshooting Utilities. They are available as a single suite of tools at http://technet.microsoft.com/en-us/sysinternals/bb896649.aspx.

Use PsList to view process and thread statistics on a system. Running PsList lists all running processes on the system. However, it does not reveal the presence of the rootkit or the other processes that the rootkit has hidden—in this case, Netcat and MACSpoof.

ListDLLs allows you to view the currently loaded dynamic link libraries (DLLs) for a process. Running ListDLLs lists the DLLs loaded by all running processes. However, ListDLLs cannot show the DLLs loaded for hidden processes. Thus, it misses critical evidence that could reveal the presence of the rootkit. The problem is that an attacker may have compromised the Windows API on which your toolkit depends. To a degree, this is the case in the current scenario. As a result, these tools can't easily detect rootkit manipulation. You need a more sophisticated and nonintrusive approach to find what could be critical evidence.

With Handle, you can view open handles for any process. It lists the open files for all the running processes, including the path to each file. In this case, one of the command shells is running from a directory labeled ...FUToEXE. This is a strong hint of the presence of the FUTo rootkit. Similarly, there is another instance of cmd.exe running from C:\tools\nc11nt. The nc11nt folder is a default for the Windows distribution of Netcat.

Tip

It is useful to show the implications of the tool results. However, remember that simply renaming directories or running cmd.exe from a different directory would prevent these disclosures.

Netstat is a command-line tool that displays both incoming and outgoing network connections. It also displays routing tables and a number of network interface statistics. It is available on UNIX, UNIX-like, and Windows-based operating systems.

Use the Netstat utility to view the network connections of a running machine. Running Netstat with the -an option reveals nothing immediately suspicious in this case.
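When Netstat output has been saved to the collection system, a short script can make it easier to review. The following is a minimal sketch that assumes the Windows column layout of netstat -an (protocol, local address, foreign address, state). The function names are hypothetical.

```python
def parse_netstat(text):
    """Parse Windows-style `netstat -an` output into a list of dicts."""
    rows = []
    for line in text.splitlines():
        parts = line.split()
        # Data rows start with the protocol; headers and blank lines do not.
        if len(parts) < 3 or parts[0].upper() not in ("TCP", "UDP"):
            continue
        rows.append({
            "proto": parts[0].upper(),
            "local": parts[1],
            "remote": parts[2],
            # UDP rows have no state column.
            "state": parts[3] if len(parts) > 3 else "",
        })
    return rows


def established(rows):
    """Return only the rows that describe established connections."""
    return [row for row in rows if row["state"] == "ESTABLISHED"]
```

Such a parser only reorganizes what Netstat reported. If a rootkit hides a connection from Netstat, the connection is missing from the parsed results as well.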

FPort is a free tool from Foundstone (http://www.foundstone.com/us/resources/proddesc/fport.htm). FPort allows you to view all open Transmission Control Protocol/Internet Protocol (TCP/IP) and User Datagram Protocol (UDP) ports. This is the same port information you would see using the netstat -an command, but FPort also maps each port to the running process that owns it, showing the PID, process name, and path.

In this scenario, FPort does not reveal the presence of the connections hidden by the rootkit.

Userdump is a command-line tool for Windows-based systems. With Userdump, you can extract the memory dumps of running processes for offline analysis. These dump files have a specific metadata format (see http://support.microsoft.com/kb/315271). Therefore, you can use dumpchk.exe to verify that a usable process memory dump was produced. The Strings utility extracts ASCII and Unicode characters from binary files. In this case, you would apply it to the process dumps to see what evidence you can uncover.
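The kind of extraction that Strings performs can be illustrated with a short Python sketch. This is not the Sysinternals implementation. It assumes that "Unicode" means UTF-16 little-endian text limited to printable ASCII characters, and it uses a minimum length of four characters as an arbitrary default.

```python
import re


def extract_strings(data: bytes, min_len: int = 4):
    """Return printable ASCII strings, then UTF-16LE strings, found in data."""
    # Runs of at least min_len printable ASCII bytes.
    ascii_re = re.compile(rb"[\x20-\x7e]{%d,}" % min_len)
    # Runs of printable ASCII characters, each followed by a zero byte.
    utf16_re = re.compile(rb"(?:[\x20-\x7e]\x00){%d,}" % min_len)

    found = [m.group().decode("ascii") for m in ascii_re.finditer(data)]
    found += [m.group().decode("utf-16-le") for m in utf16_re.finditer(data)]
    return found
```

Applied to a process dump read with open(path, "rb").read(), the function returns candidate file names, commands, and addresses that you can then search for items of interest.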

Volatile memory analysis is a live system forensic technique in which you collect a memory dump and perform analysis in an isolated environment. Volatile memory analysis is similar to live response in that you must first establish a trusted command shell. Next, you establish a data collection system and a method for transmitting the data. However, you acquire only a physical memory dump of the compromised system and transmit it to the data collection system for analysis. In this case, VMware allows you to simply suspend the virtual machine and use the .vmem file as a memory image. As in other forensic investigations, you compute a hash after you complete the memory capture. Unlike with traditional hard drive forensics, you don't need to calculate a hash before data acquisition. Because running memory is volatile, the imaging process takes a snapshot of a "moving target," so there is no stable original to hash beforehand.
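Because a memory image can be several gigabytes in size, the post-capture hash is usually computed in chunks rather than by loading the whole file at once. A minimal sketch follows. The default algorithm and chunk size are assumptions, and hash_image is a hypothetical name.

```python
import hashlib


def hash_image(path, algorithm="sha256", chunk_size=1024 * 1024):
    """Compute the hash of a memory image by reading it in fixed-size chunks."""
    hasher = hashlib.new(algorithm)
    with open(path, "rb") as image:
        # iter() with a sentinel stops when read() returns an empty bytes object.
        for chunk in iter(lambda: image.read(chunk_size), b""):
            hasher.update(chunk)
    return hasher.hexdigest()
```

For the scenario in this chapter, you would call hash_image("WinXP_victim.vmem") once the capture completes and record the result in the case log.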

The primary difference between this approach and live response is that you don't need any additional evidence from the compromised system. Therefore, you can analyze the evidence on the collection system.

The following sections discuss the capabilities of two memory analysis tools applied to the memory image example we've been discussing. These tools, the Volatility Framework and PTFinder, are relatively recent additions to the excellent array of open source resources available to digital investigators.

The Volatility Framework from Volatile Systems (see https://www.volatilesystems.com/default/volatility) provides evidence about the attacker's IP address and the connections to the system. In addition, it can help detect rootkits and hidden processes.

The Volatility Framework is a collection of command-line Python scripts that analyze memory images. Use it to interrogate an image in a style similar to that used during a live response. Volatility is distributed under a GNU General Public License. It runs on any platform that supports Python, including Windows and Linux.

This exercise uses version 1.1.2 of Volatility. Commands available in this version include ident, datetime, pslist, psscan, thrdscan, dlllist, modules, sockets, sockscan, connections, connscan, vadinfo, vaddump, and vadwalk. This section explains several of these commands.

You use the ident and datetime commands to gather information about the image itself—in this case with the image file WinXP_victim.vmem:

python volatility ident -f WinXP_victim.vmem

and

python volatility datetime -f WinXP_victim.vmem

The ident command provides the operating system type, virtual address translation mechanism, and a starting directory table base (DTB). The datetime command reports the date and time the image was captured. This provides valuable information and assists with documentation in a forensic investigation. Furthermore, it is useful for creating a timeline of events with other pieces of evidence in the investigation.
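If you plan to run several Volatility commands against the same image, a small wrapper can build the command lines and collect the output. The sketch below follows the command form shown above (python volatility COMMAND -f IMAGE). The function names, and the assumption that the volatility script sits in the current directory, are illustrative only.

```python
import subprocess


def volatility_command(plugin, image, script="volatility", python="python"):
    """Build the argument list for one Volatility command."""
    return [python, script, plugin, "-f", image]


def run_plugins(plugins, image, runner=subprocess.run):
    """Run each command against the image and return {command: stdout text}.

    The runner parameter exists so that the wrapper can be exercised
    without Volatility installed.
    """
    results = {}
    for plugin in plugins:
        proc = runner(volatility_command(plugin, image),
                      capture_output=True, text=True)
        results[plugin] = proc.stdout
    return results
```

A call such as run_plugins(["ident", "datetime"], "WinXP_victim.vmem") would return the text of both reports, ready to be saved and hashed for the case file.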

Using the pslist command produces results similar to those of the Sysinternals PsList tool used during live response. Similarly, the dlllist command shows the size and path of all the DLLs that each running process uses.

However, when you use the psscan command, you see something new. With a PID of 0, MACSpoof.exe shows up in the list. This command scans physical memory for EPROCESS objects and returns the physical address of each one it finds.

In the live response scenario, Netstat fails to provide any sign of the Netcat activity. However, using connscan shows the connection with 192.168.203.133 on port 4444. The results also indicate a PID of 3572 associated with this connection. The fact that this PID is missing from the other queries could indicate the presence of a rootkit.
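The reasoning in this comparison is a cross-view check: any PID that turns up in a scan of raw memory but not in the ordinary process list deserves attention. A minimal sketch of that set comparison follows. The function name is hypothetical, and it assumes you have already extracted the PIDs from the pslist, psscan, and connscan output.

```python
def hidden_pids(listed, scanned, connection_pids=()):
    """Return PIDs seen by memory scans or connections but absent
    from the ordinary process listing, in ascending order."""
    listed = set(listed)
    suspects = (set(scanned) | set(connection_pids)) - listed
    return sorted(suspects)
```

For example, if connscan reports PID 3572 and no process with that PID appears in the pslist output, the function returns 3572 as a suspect. A mismatch is a lead to investigate rather than proof of a rootkit, because a process may also have exited before the image was captured.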

With the Volatility Framework, you can also list all the kernel modules loaded at the time the memory image was captured. The path of the last entry from the modules command certainly attracts attention. A less obvious entry shows msdirectx.sys, a module that is associated with rootkits.

PTFinder, by Andreas Schuster, is a Perl script memory analysis tool (see http://computer.forensikblog.de/en/2007/11/ptfinder_0_3_05.html). It supports analysis of Windows operating system versions.

PTFinder enumerates processes and threads in a memory dump. It uses a brute-force approach to enumerating the processes and uses various rules to determine whether the information is either a legitimate process or just bytes. Although this tool does not reveal anything new in terms of malware, it does enable repeatability of the results, which is an important benefit in volatile memory analysis.

The "no threads" option on PTFinder provides a list of processes found in a memory dump. PTFinder can also output results in the dot(1) format. This is an open source graphics language that provides a visual representation of the relationships between threads and processes.
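To show what a dot description of process relationships looks like, the following sketch builds one from a list of (PID, parent PID, name) tuples. It does not reproduce PTFinder's actual output layout. The node naming and labels are assumptions made for illustration.

```python
def processes_to_dot(processes):
    """Build a DOT digraph from (pid, parent_pid, name) tuples."""
    lines = ["digraph processes {"]
    known = {pid for pid, _ppid, _name in processes}

    # One node per process, labeled with its name and PID.
    for pid, _ppid, name in processes:
        label = name.replace('"', '\\"')
        lines.append(f'    p{pid} [label="{label} ({pid})"];')

    # One edge from each parent to its child, when the parent is known.
    for pid, ppid, _name in processes:
        if ppid in known and ppid != pid:
            lines.append(f"    p{ppid} -> p{pid};")

    lines.append("}")
    return "\n".join(lines)
```

Saving the returned text to a file and rendering it with the Graphviz dot program produces a diagram in which a process with no visible parent stands out from the rest of the tree.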

The preceding sections discuss two different incident response approaches. The first approach is the well-known live response, in which you survey the crime scene and simultaneously collect evidence and probe for suspicious activity. The second approach is the relatively new field of volatile memory analysis, in which you collect a memory dump and perform analysis in an isolated environment. Each of these approaches gives you different insight into the environment you're investigating.

Several issues with live response hinder effective analysis of a digital crime scene. The purpose of live response is to collect relevant evidence from the system to confirm whether an incident occurred. This process has some significant drawbacks, including the following:

Some tools may rely on the Windows API—If an attacker compromises the system and changes system files but you don't know it, you could collect a large amount of evidence based on compromised sources. This would damage the credibility of your analysis in a court of law.

Live response is not repeatable—The information in memory is volatile, and with every passing second, bytes are overwritten. The tools may produce the correct output and in themselves can be verified by a third-party expert. However, the input data supplied to the tools can never be reproduced. Therefore, it is difficult to prove the correctness of your analysis of the evidence. This puts the evidence collected at risk in a court of law.

You can't ask new questions later—The live response process does not support examination of the evidence in a new way. This is mainly because you can't reproduce the same inputs to the tools from the collection phase. As a result, you can't ask new questions later on in the analysis phase of an investigation. By the analysis phase, it becomes impossible to learn anything new about a compromise. In addition, if you miss critical evidence during collection, you can never recover it. This damages your case against the attacker.

Volatile memory analysis shows promise in that the only source of evidence is the physical memory dump. Moreover, investigators now collect physical memory more commonly. An investigator can build a case by analyzing a memory dump in an isolated environment that is unobtrusive to the evidence. Thus, volatile memory analysis addresses the drawbacks facing live response as follows:

It limits the impact on the compromised system—Unlike live response, memory analysis uses a simplified approach to investigating a crime scene. It involves merely extracting the memory dump and minimizes the fingerprint left on the compromised system. As a result, you get the added benefit of analyzing the memory dump with confidence that the impact to the data is minimal.

Analysis is repeatable—Because memory dumps are analyzed directly and in isolated environments, multiple sources can validate and repeat the analysis. The scenario described earlier shows this. Two tools identified the hidden malware processes. In addition, thanks to repeatability, third-party experts can verify your conclusions. Essentially, repeatability improves the credibility of an analysis in a court of law.

You can ask new questions later—With memory analysis, you can ask new questions later on in an investigation. The scenario described earlier shows this. The initial analysis of the memory dump with Volatility raised suspicion of a rootkit being present on the system. A live response may have missed this important evidence.

One of the greatest drawbacks with volatile memory analysis is that the support for these tools has not matured. With every release of a new operating system, the physical memory structure changes. Development of memory analysis tools has been gaining velocity recently, but kinks remain. Memory analysis tools must be available in parallel with an operating system for maximum utility.

The development of live forensic acquisition in general presents a remedy for some of the problems introduced by traditional forensic acquisition. With current technologies, however, the most effective analytic approach is a hybrid based on situational awareness and triage techniques. Instead of being used to gather exhaustive amounts of data, live response should move to a triage approach, collecting enough information to determine the next appropriate step. In some situations, greater understanding of the running state of the machine is critical to resolving a case. In such cases, use full memory analysis—and the requisite memory acquisition—to augment and supplement traditional digital forensic examination.

Tip

Live system investigation may be necessary to determine the presence of mounted encrypted containers or full-disk encryption. If you detect either, switch to capturing a memory image for offline analysis as well as capturing the data in an unencrypted state.

You have access to a wide variety of tools that preserve and analyze digital evidence. Unfortunately, most current system forensics tools are unable to cope with the ever-increasing storage capacity and expanding number of target devices. As storage capacities creep into hundreds of gigabytes or terabytes, the traditional approach of utilizing a single workstation to perform a digital forensics investigation against a single evidence source is no longer viable. Further, huge data volumes necessitate more sophisticated analysis techniques, such as automated categorization of images and statistical inference.

In the past, many forensic examiners would shut down a system and collect data using a static collection approach. Today, with many examinations focusing more on malware exploitation, examiners need to collect memory data that would be lost if the machine were to lose power. Programmers have developed tools to capture data from live, up-and-running systems. This chapter examines the next generation of live digital forensics tools that search memory in real time.

Computer Forensics Tool Testing (CFTT)

Data consistency

Dead system analysis

Dump

Hardware fingerprinting

Live response

Real time

Slurred image

Virtual machine

Volatile memory analysis

Write blocker

________ is analysis of machines that remain in operation as you examine them.

________ is analysis of machines that have been shut down.

It is not as important to avoid contaminating evidence in live system forensics as it is in dead system forensics.

True

False

Which of the following are drawbacks of dead system forensics? (Select three.)

It leads to corruption of evidence.

It leads to corruption of the original data.

It leads to system downtime.

It leads criminals to use cryptography.

It leads to data consistency problems.

You can use live system forensics to acquire one type of data that dead system forensics can't acquire. What type of data is this?

Binary

Virtual

Volatile

Nonvolatile

Which of the following is a software implementation of a computer that executes programs as if it were a physical computer?

VMware

Write blocker

Hardware fingerprint

Virtual machine

Which of the following are drawbacks of live system forensics? (Select three.)

It leads to system downtime.

Slurred images can result.

Data can be modified.

It leads to data consistency problems.

It leads criminals to use cryptography.

As a result of not acquiring data at a unified moment, live system forensics presents a problem with ________.

What are two possible techniques for approaching a compromised system using live system forensics? (Select two.)

Live response

Hot swapping

Volatile memory analysis

Hardware fingerprinting

The following are some of the benefits of _________: It limits the impact on the compromised system, analysis is repeatable, and you can ask new questions after the analysis.