Global Mirror overview and architecture

This chapter provides a general overview of the DS8000 Global Mirror function, its architecture, and details of the replication process.

This chapter includes the following sections:

1.1 Global Mirror overview

DS8000 Global Mirror is a two-site, unlimited distance data replication solution for both z Systems and Open Systems data.

When you replicate data over long distances, usually beyond 300 km, asynchronous data replication is the preferred approach. With asynchronous replication, the host at the local site receives acknowledgment of a successful write from the local storage system immediately. The local storage system sends the data to the remote storage system later, independently of the host write, which is why this type of replication is called asynchronous.

In an asynchronous data replication environment, an application write I/O has the following steps:

1. Write application data to the primary storage system cache.

2. Acknowledge a successful I/O to the application so that the next I/O can be immediately scheduled.

3. Replicate the data from the primary storage system cache to the auxiliary storage system.

4. Acknowledge to the primary storage system that data has successfully arrived at the auxiliary storage system.

With an asynchronous technique, data transmission and I/O completion acknowledgment are independent processes, which results in virtually no impact on application I/O.
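The four steps can be illustrated with a minimal Python sketch. The class `AsyncPrimary` and its methods are hypothetical. They model the primary cache, the replication backlog, and the auxiliary system as plain dictionaries and a queue. The sketch shows only that the host acknowledgment (step 2) does not wait for replication (steps 3 and 4); it is not a description of DS8000 microcode.

```python
from collections import deque


class AsyncPrimary:
    """Hypothetical toy model of an asynchronously replicated primary system."""

    def __init__(self):
        self.cache = {}          # primary storage system cache: track -> data
        self.backlog = deque()   # tracks written but not yet replicated
        self.auxiliary = {}      # contents of the auxiliary storage system

    def host_write(self, track, data):
        self.cache[track] = data      # step 1: write to the primary cache
        self.backlog.append(track)
        return "ack"                  # step 2: acknowledge at once, no remote wait

    def replicate_one(self):
        """Runs independently of host writes; returns False when nothing is left."""
        if not self.backlog:
            return False
        track = self.backlog.popleft()
        self.auxiliary[track] = self.cache[track]   # step 3: send current data
        return True                                  # step 4: auxiliary acknowledges


primary = AsyncPrimary()
print(primary.host_write(7, b"v1"))   # ack
print(primary.auxiliary)              # {} (the host already has its acknowledgment)
while primary.replicate_one():
    pass
print(primary.auxiliary)              # {7: b'v1'}
```

Because the acknowledgment is returned before `replicate_one` runs, the host never waits for the remote site. This is also why the remote data can lag behind the local data.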

When application data is spread across several volumes, and possibly across multiple storage systems, asynchronous replication means that the data at the remote site does not necessarily reflect the same order of writes as on the local storage. Therefore, asynchronous data replication techniques require special mechanisms to ensure data consistency for dependent writes at the secondary location.

To ensure consistency, Global Mirror uses the concept of consistency groups. With DS8000 Global Mirror, data consistency is provided periodically by the following sequence:

1. The host I/Os to the primary volumes are suspended periodically for a short time.

2. This frozen data, which represents a consistency group, is transmitted to the remote site.

3. Consistent data is saved at the remote site.

The tradeoff of providing consistency in an asynchronous replication is that not all the most recent data can be saved in a consistency group. The reason is that data consistency can only be provided in distinct periods of time. When an incident occurs, only the data from the previous point of consistency creation can be restored. The measurement of the amount of data that is lost in such a case is called the recovery point objective (RPO), which is expressed in units of time, usually seconds or minutes.
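As a small illustration of this definition, the following Python sketch computes how many seconds of updates would be lost for an incident at a given moment. Its input is the list of times at which consistency groups were saved at the remote site. The function name and input data are hypothetical. The function measures only the age of the last saved consistency group and does not model how Global Mirror schedules consistency groups.

```python
import bisect


def data_loss_window(saved_cg_times, incident_time):
    """Seconds of updates lost if an incident occurs at incident_time.

    saved_cg_times: sorted times (s) at which consistency groups were
    successfully saved at the remote site (hypothetical input data).
    """
    i = bisect.bisect_right(saved_cg_times, incident_time)
    if i == 0:
        raise ValueError("no consistency group was saved before the incident")
    # Recovery restores the most recent consistency group at or before the incident.
    return incident_time - saved_cg_times[i - 1]


saved = [0.0, 3.1, 6.0, 9.2]
print(round(data_loss_window(saved, 11.0), 1))   # 1.8
print(round(data_loss_window(saved, 6.0), 1))    # 0.0
```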

The RPO is not a fixed number. It depends on the available bandwidth, on the quality of the physical data link, and on the current workload at the local site. How to scale Global Mirror and how to manage the RPO are described later.

Global Mirror is based on an efficient combination of Global Copy and IBM FlashCopy® functions. It is the storage system microcode that provides, from the user perspective, a transparent and autonomic mechanism to intelligently use Global Copy with certain FlashCopy operations to attain consistent data at the secondary site.

To accomplish the necessary activities with a minimal impact on the application write I/O, Global Mirror introduces a smart bitmap approach in the primary storage system. Global Mirror uses two different types of bitmaps:

• The Out-of-Sync (OOS) bitmaps that are used by the Global Copy function

• The Change Recording (CR) bitmap that is allocated during consistency group formation

Figure 1-1 identifies the following essential components of the DS8000 Global Mirror architecture:

• Global Copy, which is used to transmit the data asynchronously from the primary (local) volumes H1 to the secondary (remote) volumes H2.

• A FlashCopy relation from the Global Copy secondary volumes H2 to the FlashCopy target volumes Jx.

• A Change Recording (CR) bitmap that is maintained by the Global Mirror process running on the primary storage system while the consistency group is created at the primary site.

When the Global Mirror process at the primary site creates a consistency group, the primary volumes H1 are frozen for the time it takes to allocate a new CR bitmap in the primary storage system memory. From then on, each new host write is recorded by setting the bit for the corresponding track¹ in the CR bitmap.

The newly formed consistency group is represented by the Global Copy OOS bitmaps. When Global Copy sends the consistency group to the remote site, it uses the OOS bitmap to determine which tracks still need to be transmitted.

Figure 1-1 shows the architecture of DS8000 Global Mirror.

Figure 1-1 General architecture of DS8000 Global Mirror

When the whole consistency group has been transmitted to the remote site, it must be saved to the Jx volumes by using the FlashCopy function. Doing so ensures that there is always a consistent image of the primary data at the secondary location.
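The interplay of the two bitmaps can be sketched in Python by modeling each bitmap as a set of track numbers. This is a simplified, hypothetical model of one H1 volume, and the class and method names are not a DS8000 interface. During normal Global Copy operation, host writes go to the OOS bitmap. While a consistency group is being formed, they go to the CR bitmap. After the FlashCopy to Jx, the CR bitmap is merged back into the OOS bitmap. The sets `h2` and `jx` record only which tracks were sent or saved; track contents are not modeled.

```python
class H1Volume:
    """Hypothetical model of one Global Copy primary volume and its bitmaps."""

    def __init__(self):
        self.oos = set()   # Out-of-Sync bitmap: tracks Global Copy must still send
        self.cr = None     # Change Recording bitmap: exists only during CG formation

    def host_write(self, track):
        if self.cr is not None:
            self.cr.add(track)    # belongs to the next consistency group
        else:
            self.oos.add(track)   # normal Global Copy operation

    def start_consistency_group(self):
        self.cr = set()           # allocated while host writes are briefly frozen

    def drain(self, h2):
        """Send every track in the OOS bitmap to the secondary volume H2."""
        h2.update(self.oos)
        self.oos.clear()

    def finish_consistency_group(self):
        """After the FlashCopy H2 -> Jx, CR changes become Global Copy work again."""
        self.oos |= self.cr
        self.cr = None


vol = H1Volume()
h2 = set()
vol.host_write(1)
vol.host_write(2)
vol.start_consistency_group()
vol.host_write(3)            # arrives after the freeze
vol.drain(h2)
jx = set(h2)                 # FlashCopy saves the consistency group
vol.finish_consistency_group()
print(sorted(jx), sorted(vol.oos))   # [1, 2] [3]
```

Track 3 was written after the freeze, so it is not part of the saved image in Jx. It waits in the OOS bitmap for the next consistency group.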

1.1.1 Communication between primary disk systems

A Global Mirror session is a collection of volumes that are managed together when you create consistent copies of data volumes. This set of volumes can be in one or more logical storage subsystems (LSSs) and one or more storage disk systems at the primary site. Open Systems volumes and z/OS volumes can both be members of the same session.

Tip: Global Mirror allows you to create multiple sessions on a primary disk system, with different devices associated with each session. Defining separate sessions for different application hosts allows you not only to specify a different RPO for each application based on its business criticality, but also to leave the host volumes in one session unaffected when another Global Mirror session must be failed over.

Figure 1-2 shows such a Global Mirror structure. A master coordinates all efforts within a Global Mirror environment. After the master is started and manages a Global Mirror environment, the master issues all related commands over Peer-to-Peer Remote Copy (PPRC) links to its attached subordinates at the primary site. The subordinates use inband communication to communicate with their related auxiliary storage systems at the remote site. The master also receives all acknowledgements from the subordinates, and coordinates and serializes all the activities in the Global Mirror session.

Figure 1-2 Global Mirror as a distributed application

When two or more storage systems at the primary site participate in a Global Mirror session, the subordinates are external and require separate attention when you create and manage a Global Mirror session or environment.

To form consistency groups across multiple disk systems, the different disk systems must be able to communicate. This communication path must be resilient and provide high performance so that it has minimal effect on the production applications. Fibre Channel links that use the FCP protocol provide this path, either as direct connections or, more typically, through a SAN.

Communication links: Although only one path between the master LSS and one LSS in each subordinate is required, two redundant paths are preferred for resiliency.
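The master/subordinate pattern can be sketched as follows. The `Master` and `Subordinate` classes are hypothetical stand-ins. The master sends each command to every subordinate in turn and treats the step as successful only if all subordinates acknowledge. Real Global Mirror communication runs over PPRC links inside the storage microcode and is not exposed as a programming interface.

```python
from dataclasses import dataclass, field


@dataclass
class Subordinate:
    """Hypothetical stand-in for a subordinate primary storage system."""
    name: str
    reachable: bool = True
    received: list = field(default_factory=list)

    def handle(self, command):
        if not self.reachable:
            return False               # no acknowledgment reaches the master
        self.received.append(command)  # act on the command (details omitted)
        return True                    # acknowledgment to the master


@dataclass
class Master:
    """Hypothetical master that serializes commands to its subordinates."""
    subordinates: list

    def broadcast(self, command):
        # Send to every subordinate one after another, then check all acknowledgments.
        acks = [sub.handle(command) for sub in self.subordinates]
        return all(acks)


master = Master([Subordinate("DS8K-2"), Subordinate("DS8K-3")])
print(master.broadcast("start consistency group"))   # True
master.subordinates[1].reachable = False
print(master.broadcast("start consistency group"))   # False
```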

1.2 Setting up a Global Mirror session

To show how Global Mirror works, this section explains step by step how a Global Mirror environment (a Global Mirror session) is created and started. This approach helps you understand the operational aspects of Global Mirror.

1.2.1 A simple configuration

To understand each step and to show the principles, start with a simple application environment where a host makes write I/Os to a single application volume (A) as shown in Figure 1-3.

Figure 1-3 Start with a simple application environment

1.2.2 Establishing connectivity to a secondary site (PPRC paths)

Now, add a distant secondary site that has a storage system (B), and interconnect both sites (see Figure 1-4).

Figure 1-4 Establish Global Copy connectivity between both sites

Figure 1-4 shows how to establish Global Copy paths. Global Copy paths are logical connections that are defined over the physical links that interconnect both sites.

1.2.3 Creating a Global Copy relationship

Next, create a Global Copy relationship (establishing Global Copy pairs) between the primary volume and the secondary volume (see Figure 1-5).

Figure 1-5 Establish a Global Copy volume pair

Creating the Global Copy relationship changes the state of both volumes from simplex (no relationship) to Copy Pending: the primary volume becomes primary Copy Pending and the secondary volume becomes secondary Copy Pending.

Data is copied from the primary volume to the secondary volume. An OOS bitmap is created for the primary volume that tracks changed data as it arrives from the applications to the primary disk system. After a first complete pass through the entire A volume, Global Copy scans constantly through the OOS bitmap and replicates the data from the A volume to the B volume based on this out-of-sync bitmap.

Global Copy does not immediately copy the data as it arrives on the A volume. Instead, this process is an asynchronous one. When a track is changed by an application write I/O, it is reflected in the out-of-sync bitmap with all the other changed tracks. Several concurrent replication processes can work through this bitmap, which maximizes the usage of the high-bandwidth Fibre Channel links.

This replication process keeps running until the Global Copy volume pair A-B is explicitly or implicitly suspended or terminated.

Data consistency does not yet exist at the secondary site.
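The Global Copy behavior described in this section can be sketched in Python. It consists of a first full pass, followed by repeated scans of the OOS bitmap by several concurrent senders. `GlobalCopyPair` is a hypothetical class. The OOS bitmap is modeled as a set of track numbers, and a thread pool stands in for the concurrent replication processes. The usage shows that B can lag behind A at any time, which is why B alone is not consistent.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class GlobalCopyPair:
    """Hypothetical model of a Global Copy A -> B pair driven by an OOS bitmap."""

    def __init__(self, a_tracks):
        self.a = dict(a_tracks)      # primary volume A: track -> data
        self.b = {}                  # secondary volume B
        self.oos = set(self.a)       # first pass: every track starts out of sync
        self.lock = threading.Lock()

    def host_write(self, track, data):
        with self.lock:
            self.a[track] = data
            self.oos.add(track)      # only mark the track; nothing is sent now

    def _send(self, track):
        with self.lock:
            data = self.a[track]     # send whatever A holds at transmission time
        self.b[track] = data

    def scan_once(self, workers=4):
        """One pass through the OOS bitmap with several concurrent senders."""
        with self.lock:
            batch, self.oos = self.oos, set()
        with ThreadPoolExecutor(max_workers=workers) as pool:
            list(pool.map(self._send, batch))


pair = GlobalCopyPair({0: "a0", 1: "a1"})
pair.scan_once()                 # completes the first pass
pair.host_write(1, "a1v2")
print(pair.b[1])                 # a1 (B lags behind A)
pair.scan_once()
print(pair.b[1])                 # a1v2
```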

1.2.4 Introducing FlashCopy

FlashCopy is a part of the Global Mirror solution. Establishing FlashCopy pairs is the next step in establishing a Global Mirror session (see Figure 1-6).

Figure 1-6 Introduce FlashCopy into the Global Mirror solution

Figure 1-6 shows a FlashCopy relationship with a Global Copy secondary volume as the FlashCopy source volume. Volume B is now both a Global Copy secondary volume and a FlashCopy source volume. The corresponding FlashCopy target volume (C) resides in the same storage system.

This FlashCopy relationship has certain attributes that are required when you create a Global Mirror session:

• Inhibit target write: Protect the FlashCopy target volume from being modified by anything other than Global Mirror-related actions.

• Start change recording: Apply to the target volume only those changes that occur on the source volume between FlashCopy establish operations, except for the first time that FlashCopy is established.

• Persist: Keep the FlashCopy relationship until it is explicitly or implicitly terminated. This parameter is set automatically because of the change recording property.

• Nocopy: Do not start a background copy from source to target, but keep the set of FlashCopy bitmaps (source bitmap and target bitmap) that are required to track the source and target volumes. These bitmaps are established when a FlashCopy relationship is created. Before a track on source volume B is modified between consistency group creations, the track is copied to target volume C to preserve the previous point-in-time copy. This copy includes updates to the corresponding bitmaps to reflect the new location of the track that belongs to the point-in-time copy. The first Global Copy write to a secondary volume track within the window between two adjacent consistency groups causes FlashCopy to perform a copy-on-write operation.
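The effect of the Nocopy and change recording attributes can be sketched with a copy-on-write model in Python. `NocopyFlash` is a hypothetical class. The dictionary `saved` stands in for both the FlashCopy bitmaps and the tracks that are physically copied to C. The `flash()` method represents a new FlashCopy establish at consistency group time. Only the first write to a B track after each flash copies the old data.

```python
class NocopyFlash:
    """Hypothetical B -> C FlashCopy relation with Nocopy and copy-on-write."""

    def __init__(self, b):
        self.b = b          # source volume B (Global Copy secondary): track -> data
        self.saved = {}     # old B tracks physically copied to C since the last flash

    def flash(self):
        """New point in time: logically, C now equals the current B."""
        self.saved.clear()

    def write_b(self, track, data):
        """A Global Copy write arriving at B."""
        if track not in self.saved:
            # First write to this track since the last flash: copy on write.
            self.saved[track] = self.b.get(track)
        self.b[track] = data

    def read_c(self, track):
        """C's point-in-time image: the copied track if any, otherwise B itself."""
        if track in self.saved:
            return self.saved[track]
        return self.b.get(track)


fc = NocopyFlash({0: "cg1-t0", 1: "cg1-t1"})
fc.flash()
fc.write_b(1, "new-t1")
fc.write_b(1, "newer-t1")      # second write: no additional copy
print(fc.read_c(1), fc.read_c(0), len(fc.saved))   # cg1-t1 cg1-t0 1
```

No background copy ever runs. C physically holds only the tracks that changed on B since the last flash, and reads of unchanged tracks are served from B.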

Some interfaces, such as Copy Services Manager, IBM Geographically Dispersed Parallel Sysplex™ (GDPS), and TSO, have these FlashCopy parameters embedded in their copy-services-related commands.

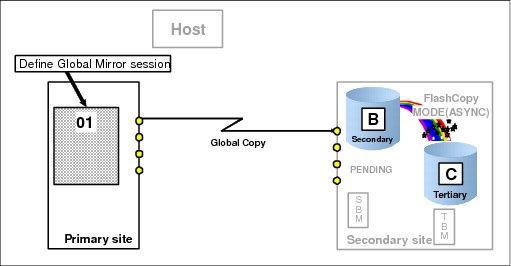

1.2.5 Defining a Global Mirror session

Creating a Global Mirror session does not involve any volume within the primary or secondary sites. The focus is on the primary site (see Figure 1-7).

Figure 1-7 Define a Global Mirror session

Defining a Global Mirror session creates a token, which is a number between 1 and 255. This number represents the Global Mirror session.

This session number is defined at the LSS level: each LSS that has volumes in the session needs a corresponding session defined. Up to 32 Global Mirror hardware sessions are supported within the same primary DS8000. Here, a session means a hardware/firmware-based session that the DS8000 manages autonomically.
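The session rules stated above can be expressed as a small validation sketch: a session number between 1 and 255, defined on every LSS that holds session volumes, and at most 32 hardware sessions per primary DS8000. `PrimaryDS8000` and `define_session` are hypothetical names used for illustration. They are not DSCLI commands.

```python
MAX_SESSIONS = 32   # Global Mirror hardware sessions per primary DS8000


class PrimaryDS8000:
    """Hypothetical bookkeeping of Global Mirror session numbers on one primary system."""

    def __init__(self):
        self.sessions = {}   # session number -> set of LSS IDs that define it

    def define_session(self, lss, number):
        if not 1 <= number <= 255:
            raise ValueError("session number must be between 1 and 255")
        if number not in self.sessions and len(self.sessions) >= MAX_SESSIONS:
            raise RuntimeError("no more than 32 Global Mirror sessions per primary DS8000")
        # The same session number is defined on each LSS that has session volumes.
        self.sessions.setdefault(number, set()).add(lss)


ds = PrimaryDS8000()
ds.define_session("10", 1)
ds.define_session("11", 1)
print(sorted(ds.sessions[1]))   # ['10', '11']
```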

1.2.6 Populating a Global Mirror session with volumes

The next step is the definition of volumes in the Global Mirror session. The focus is still on the primary site (see Figure 1-8). Only Global Copy primary volumes are meaningful candidates to become members of a Global Mirror session.

This process adds primary volumes to the list of volumes in the Global Mirror session. However, no consistency groups are formed yet because the Global Mirror session has not been started. Volumes that are added to the session are initially placed in a Join Pending state until they have completed their initial copy. After the initial copy has completed, they join the session when the next consistency group is formed.

Adding a disk system to a Global Mirror session follows the same process, except that the consistency group formation process must be briefly paused and resumed. This pause and resume can take a few seconds and is needed to define the new disk system and its control paths to the master process. It has minimal impact on the environment and only results in a small increase in the RPO for a brief period.

Figure 1-8 Add a Global Copy primary volume to a Global Mirror session
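The Join Pending behavior can be sketched as a small state model in Python. `SessionMembers` and its states are hypothetical names. The sketch captures only the rule described above: a newly added volume stays Join Pending until its initial copy has finished, and then it joins the session at the next consistency group.

```python
from enum import Enum


class MemberState(Enum):
    JOIN_PENDING = "Join Pending"
    IN_SESSION = "In session"


class SessionMembers:
    """Hypothetical model of volumes being added to a Global Mirror session."""

    def __init__(self):
        self.state = {}                  # volume ID -> MemberState
        self.initial_copy_done = set()

    def add_volume(self, volume):
        self.state[volume] = MemberState.JOIN_PENDING

    def initial_copy_complete(self, volume):
        self.initial_copy_done.add(volume)

    def form_consistency_group(self):
        """Pending volumes whose initial copy has finished join at this CG."""
        for vol, st in self.state.items():
            if st is MemberState.JOIN_PENDING and vol in self.initial_copy_done:
                self.state[vol] = MemberState.IN_SESSION
        return sorted(v for v, st in self.state.items() if st is MemberState.IN_SESSION)


members = SessionMembers()
members.add_volume("1000")
members.add_volume("1001")
members.initial_copy_complete("1000")
print(members.form_consistency_group())   # ['1000']
members.initial_copy_complete("1001")
print(members.form_consistency_group())   # ['1000', '1001']
```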

1.2.7 Starting a Global Mirror session

Global Mirror forms consistency groups at the secondary site. As Figure 1-9 indicates, the focus here is on the primary site, with the start command issued to an LSS in the primary storage system. With this start command, you set the master storage system and the master LSS. From now on, session-related commands must go through this master LSS.

Figure 1-9 Start Global Mirror

This start command triggers events that involve all the volumes within the session. These events include fast bitmap management on the primary storage system, issuing inband FlashCopy commands from the primary site to the secondary site, and verifying that the corresponding FlashCopy operations finished successfully. This process happens at the microcode level of the related storage systems that are part of the session, and is fully transparent and autonomic from the user’s perspective.

All B and C volumes that belong to the Global Mirror session comprise the consistency group.

1.3 Forming consistency groups

As described in 1.1, “Global Mirror overview”, the process for forming consistency groups can be broken down into these steps:

1. Create consistency group on primary disk system

2. Send consistency group to secondary disk system

3. Save consistency group on secondary disk system

1.3.1 The different phases of consistency formation

The numbers in Figure 1-10 illustrate the sequence of the events that are involved in the creation of a consistency group. This illustration provides only a high-level view that is sufficient to understand how this process works.

Figure 1-10 Formation of a consistent set of volumes at the secondary site

Before step 1 and after step 3, Global Copy constantly scans through the OOS bitmaps and replicates data from A volumes to B volumes, as described in 1.2.3, “Creating a Global Copy relationship”.

Consistency group formation

When the creation of a consistency group is triggered by the Global Mirror master, the following steps occur:

1. Coordination phase

All Global Copy primary volumes are serialized. This serialization imposes a brief hold (freeze) on all incoming write I/Os to all involved Global Copy primary volumes. After all primary volumes are serialized across all involved primary DS8000 systems, the freeze on incoming write I/Os is released. All further write I/Os are now recorded in the CR bitmap of each volume. They are not replicated until step 3 (Perform FlashCopy) is completed, but application write I/Os can continue immediately.

Maximum coordination time: The maximum coordination time can be modified when the Global Mirror is established. The default value is 50 milliseconds. The maximum value is 65535 milliseconds. In some situations with a master/subordinate configuration, you might need to modify the default value.

The default maximum coordination time of 50 ms is small compared to other I/O timeout values, such as the missing interrupt handler for devices in a mainframe environment or SCSI I/O timeouts on distributed platforms. Therefore, even in error situations in which this timeout is triggered, Global Mirror protects production performance rather than affecting the primary site by trying to form consistency groups while error recovery is in progress or other problems are occurring.
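The effect of the maximum coordination time can be sketched as follows. This is a hypothetical model, not DS8000 logic. Each primary system reports the time, in milliseconds after the start of the freeze, at which all of its volumes are serialized. If the slowest system exceeds the limit, the attempt is abandoned. The assumption, marked in the code, is that host writes are then held no longer than the maximum coordination time.

```python
DEFAULT_MAX_COORDINATION_MS = 50
MAX_COORDINATION_LIMIT_MS = 65535


def coordination_phase(serialized_at_ms, max_coordination_ms=DEFAULT_MAX_COORDINATION_MS):
    """Return (proceed, hold_ms) for one consistency group attempt.

    serialized_at_ms: hypothetical mapping of primary system -> time (ms after the
    freeze started) at which all its Global Copy primary volumes were serialized.
    """
    if max_coordination_ms > MAX_COORDINATION_LIMIT_MS:
        raise ValueError("maximum coordination time must be at most 65535 ms")
    slowest = max(serialized_at_ms.values())
    proceed = slowest <= max_coordination_ms
    # Assumption: on timeout the freeze is released at the limit, so writes are
    # held at most max_coordination_ms and this consistency group is skipped.
    hold_ms = min(slowest, max_coordination_ms)
    return proceed, hold_ms


print(coordination_phase({"master": 1.2, "subordinate": 2.5}))    # (True, 2.5)
print(coordination_phase({"master": 1.2, "subordinate": 80.0}))   # (False, 50)
```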

2. Draining phase

In the draining phase, all tracks that are marked in the OOS bitmaps are transmitted to the remote site by using the Global Copy function. After all out-of-sync bitmaps have been processed, the master storage system microcode at the primary site triggers step 3, Perform FlashCopy.

Maximum drain time: The maximum drain time is the maximum amount of time that Global Mirror will spend draining all data still at the primary site and belonging to a consistency group before failing that consistency group formation.

The maximum drain time can also be modified when Global Mirror is established. The default value is 30 seconds for microcode releases before 8.1. Starting with microcode release 8.1, the default value is 240 seconds. The default value works well for most implementations, so leave this parameter at its default.

If the maximum drain time is exceeded, then Global Mirror changes to Global Copy mode for a period to catch up in the most efficient manner. While in Global Copy mode, the overhead is lower than continually trying and failing to create consistency groups.

The previous consistency group remains available on the C devices, so the only effect of this process is that the RPO increases for a short period. The primary disk system evaluates when it can resume forming consistency groups and then restarts consistency group formation.

The default for the maximum drain time allows a reasonable time to send a consistency group while ensuring that if some non-fatal network or communications issue occurs, then the system does not wait too long before evaluating the situation and potentially dropping into Global Copy mode until the situation is resolved. In this way, production performance is protected rather than attempting (and possibly failing) to form consistency groups at a time when this process might not be appropriate.

In situations with reduced bandwidth, it might be advisable to adjust this value.

If the system is unable to form consistency groups for 8 hours, by default Global Mirror forms a consistency group without regard to the maximum drain time. This 8-hour limit is a pokeable value that can be changed if the default is not suitable for your environment. Pokeable values can be displayed by using the DS GUI or Copy Services Manager, but only IBM technical support can change them.
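The drain-phase decisions described above can be summarized in a hypothetical Python function. The defaults come from the text: 240 seconds from microcode release 8.1, 30 seconds before that release, and the 8-hour limit. The function itself only illustrates the order of the decisions and is not the microcode algorithm.

```python
MAX_DRAIN_S_FROM_R81 = 240       # default maximum drain time, release 8.1 and later
MAX_DRAIN_S_BEFORE_R81 = 30      # default before release 8.1
FORCE_CG_AFTER_S = 8 * 60 * 60   # default pokeable limit: 8 hours without a CG


def drain_outcome(drain_needed_s, since_last_cg_s, max_drain_s=MAX_DRAIN_S_FROM_R81):
    """Classify one drain attempt (hypothetical decision order, for illustration)."""
    if since_last_cg_s >= FORCE_CG_AFTER_S:
        # No consistency group for 8 hours: the maximum drain time is ignored.
        return "drain completely, then perform FlashCopy"
    if drain_needed_s <= max_drain_s:
        return "perform FlashCopy"
    # Draining takes too long: catch up in Global Copy mode; C keeps the last CG.
    return "fall back to Global Copy mode"


print(drain_outcome(12, 60))                                        # perform FlashCopy
print(drain_outcome(120, 60, max_drain_s=MAX_DRAIN_S_BEFORE_R81))   # fall back to Global Copy mode
print(drain_outcome(300, 9 * 60 * 60))                              # drain completely, then perform FlashCopy
```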

3. Perform FlashCopy

Now the B volumes contain all data as a quasi point-in-time copy and are consistent. A FlashCopy is triggered by the primary system’s microcode as an inband FlashCopy command. This FlashCopy is a two-phase process:

a. FlashCopy operation

First, the FlashCopy command is issued to all involved FlashCopy pairs in the Global Mirror session. A FlashCopy is done for each pair relation, individually grouped per LSS with each B volume, as FlashCopy source, and each C volume as a FlashCopy target volume. A consistency group is considered to be successfully created when all FlashCopy operations are successfully completed.

Before the copy operation starts, an internal attribute called the revertible bit is set for each FlashCopy source volume. This bit protects the FlashCopy operation against updates from the local site. In this phase, the data is transferred from the Global Copy target volumes to the FlashCopy target volumes. When the transfer is completed, the FlashCopy sequence number of the pair is increased. The sequence number of each pair can be displayed with the DSCLI command lsflash -l.

b. FlashCopy termination

In this phase, the FlashCopy operation is finalized by terminating the copy process and increasing the FlashCopy sequence number. When all FlashCopy operations have completed, the revertible bits of the FlashCopy source volumes are reset and the whole FlashCopy operation is committed. Finally, the revertible bit of the FlashCopy pair relation is reset.
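The two-phase FlashCopy can be sketched in Python as an all-or-nothing commit across pairs. `FlashPair`, `flash_consistency_group`, and the `fail_on` parameter (which simulates a failing pair) are hypothetical. The sketch simplifies the behavior described above in two ways. The revertible flag means only "new image not yet committed", and the sequence number is increased once per committed consistency group.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class FlashPair:
    """Hypothetical B -> C FlashCopy pair inside a Global Mirror session."""
    b: dict
    c: dict
    sequence: int = 0
    revertible: bool = False
    pending: Optional[dict] = None   # new image of B, not yet committed to C


def flash_consistency_group(pairs, fail_on=()):
    """Flash all pairs; commit only if every pair succeeds, otherwise revert all."""
    # Phase a: FlashCopy operation, with every result still revertible.
    for i, p in enumerate(pairs):
        p.revertible = True
        if i in fail_on:                      # simulated failure of pair i
            for q in pairs:                   # revert: C keeps the previous CG
                q.pending, q.revertible = None, False
            return False
        p.pending = dict(p.b)
    # Phase b: termination and commit, then reset the revertible bits.
    for p in pairs:
        p.c, p.pending = p.pending, None
        p.sequence += 1
        p.revertible = False
    return True


pairs = [FlashPair({0: "x"}, {0: "old"}), FlashPair({0: "y"}, {0: "old"})]
print(flash_consistency_group(pairs, fail_on={1}), pairs[0].c)        # False {0: 'old'}
print(flash_consistency_group(pairs), pairs[1].c, pairs[1].sequence)  # True {0: 'y'} 1
```

A failure in any pair leaves every C volume at the previous consistency group. This is the property that lets Global Mirror always keep one consistent image at the secondary site.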

When the FlashCopy is complete, a consistent set of volumes is created at the secondary site. This set of volumes, the B and C volumes, represents the consistency group.

For this brief moment only, the B volumes and the C volumes have identical content. Immediately after the FlashCopy process is logically complete, the primary system's microcode is notified to continue with the Global Copy process from A to B. To replicate the changes to the A volumes that occurred during the window from step 1 to step 3, the change recording bitmap is merged into the now empty out-of-sync bitmap. From now on, all arriving write I/Os are again recorded in the out-of-sync bitmap. The conventional Global Copy process, as outlined in 1.2.3, “Creating a Global Copy relationship”, continues until the next consistency group formation starts.

Consistency group interval time

The time between consistency group formations is called the consistency group interval time. You can specify a period during which data transmission continues in normal Global Copy mode without forming consistency groups. By default, the consistency group interval time is zero, which means that Global Mirror forms the next consistency group immediately. Valid values are whole numbers from 0 to 65,535 seconds.
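The three tunables discussed in this section can be collected in a small configuration sketch. The class and field names are hypothetical and do not correspond to a DSCLI or Copy Services Manager interface. The defaults and upper limits are the ones stated in the text. The lower bounds of 0, and the reading of the interval as measured from the end of the previous consistency group, are assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GlobalMirrorTuning:
    """Hypothetical container for the Global Mirror timing parameters."""
    max_coordination_ms: int = 50   # maximum 65535 ms
    max_drain_s: int = 240          # default from microcode release 8.1 (30 s before)
    cg_interval_s: int = 0          # 0 = form the next consistency group immediately

    def __post_init__(self):
        # Upper limits are from the text; lower bounds of 0 are an assumption.
        if not 0 <= self.max_coordination_ms <= 65535:
            raise ValueError("max_coordination_ms out of range")
        if not isinstance(self.cg_interval_s, int) or not 0 <= self.cg_interval_s <= 65535:
            raise ValueError("cg_interval_s must be a whole number from 0 to 65535")

    def next_cg_start(self, previous_cg_done_s):
        """Earliest start of the next consistency group (assumed: after the previous one ends)."""
        return previous_cg_done_s + self.cg_interval_s


print(GlobalMirrorTuning().next_cg_start(100.0))                    # 100.0
print(GlobalMirrorTuning(cg_interval_s=60).next_cg_start(100.0))    # 160.0
```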

Figure 1-11 shows a more detailed breakdown of the consistency group formation process.

Figure 1-11 The consistency formation process

Important: Although the coordination time, the maximum drain time, and the consistency group interval time are tunable parameters, do not modify the default values before performing a careful analysis of the current behavior of the Global Mirror.

1.3.2 Impact of the consistency formation process

One of the key design objectives for Global Mirror is to avoid affecting production applications. The consistency group formation process involves holding production write activity to create dependent-write consistency across multiple devices and multiple disk systems.

This process must be fast enough that an impact is negligible and is not perceived by applications. With Global Mirror, the process of forming a consistency group is designed to take 1 - 3 milliseconds. If you form consistency groups every 1 - 3 seconds, then the percentage of production writes impacted and the degree of impact is very small.

The following example shows the type of impact that might be seen from consistency group formation in a Global Mirror environment. Assume the following key data for a high-performing storage system:

$IO = 100{,}000$ IO/s (a high load value in many environments)

R/W ratio = 3:1 (a typical read/write ratio)

$IO_{write} = 25{,}000$ IO/s (the resulting write operations)

$RT_{min} = 0.2$ ms (assumed to be the DS8880 response time)

$CG_{time,min} = 1$ ms (the minimum time to form a consistency group at the Global Mirror primary site)

$CG_{form,min} = 3$ s (the shortest interval at which Global Mirror can form consistency groups, assuming unlimited bandwidth to the remote site)

$CG_{interval} = 0$ s (consistency groups are formed as fast as possible)

First, calculate the number of write operations that might be affected during the consistency group coordination time:

$$N_{writes} = IO_{write} \times (RT_{min} + CG_{time,min})$$

Note: In reality, not all writes experience a delay during the freeze. To account for the worst possible case, the storage system response time is added.

With the specific data, the number of impacted writes is:

$$N_{writes} = 25{,}000\ \text{IO/s} \times (0.0002\ \text{s} + 0.001\ \text{s}) = 30\ \text{writes}$$

These 30 write operations, which can occur during the freeze of the primary volumes, are delayed by the time it takes to create the consistency group, which is $CG_{time,min} = 1$ ms. In the worst case, consistency group formation happens every $CG_{form,min} = 3$ s, so the average delay per write is:

$$CG_{delay} = \frac{N_{writes}}{IO_{write}} \times \frac{CG_{time,min}}{CG_{form,min}}$$

which is:

$$CG_{delay} = \frac{30}{25{,}000\ \text{IO/s}} \times \frac{0.001\ \text{s}}{3\ \text{s}} = 0.0000004\ \text{s} = 0.0004\ \text{ms}$$

So, with a host response time of $RT_{min} = 0.2$ ms, the average delay of 0.0004 ms caused by consistency group formation increases the response time by 0.2%.

In conclusion, the impact is too small to be noticeable with normal performance monitoring tools.
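The arithmetic of this example can be reproduced with a few lines of Python, which makes it easy to substitute your own workload figures. The variable names mirror the symbols above. The input values are the assumptions of the example, not measurements.

```python
# Input values from the example above (assumptions, not measurements).
io_total = 100_000        # IO/s
io_write = io_total / 4   # R/W ratio 3:1 -> 25,000 writes/s
rt_min = 0.0002           # s, assumed response time
cg_time_min = 0.001       # s, time to form a consistency group
cg_form_min = 3.0         # s, shortest interval between consistency groups

# Writes that can fall into one freeze window (worst case includes rt_min).
n_writes = io_write * (rt_min + cg_time_min)

# Average extra delay per write, in seconds.
cg_delay = n_writes / io_write * cg_time_min / cg_form_min

# Relative increase of the response time.
increase = cg_delay / rt_min

print(round(n_writes), f"{cg_delay * 1000:.4f} ms", f"{increase:.1%}")  # 30 0.0004 ms 0.2%
```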

1.3.3 Collisions

A collision occurs when a host write targets a track that belongs to the current consistency group, which has not yet been completely sent to the remote site. To resolve this situation, the old version of the track, which might still be in flight, is stored in an internal sidefile of the DS8000 storage system. This feature is referred to as collision avoidance. After the old track is saved in the sidefile, the new data can be written as normal.

For the transmission of the old track, the source of this track points to the sidefile. This ensures that the current consistency group is completed consistently. The new track is transmitted with the next consistency group.
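Collision avoidance can be sketched in Python with a dictionary acting as the sidefile. `CollisionAvoidance` and its methods are hypothetical. The sketch shows only that the in-flight consistency group is sent with the old track version, while the host write completes immediately and is carried by the next consistency group.

```python
class CollisionAvoidance:
    """Hypothetical model of collision avoidance with a sidefile on the primary."""

    def __init__(self, tracks):
        self.tracks = dict(tracks)   # current primary data: track -> data
        self.cg_unsent = set()       # tracks of the current CG not yet transmitted
        self.sidefile = {}           # old track versions kept for the current CG
        self.cr = set()              # tracks changed after the CG was formed

    def form_cg(self, tracks):
        self.cg_unsent = set(tracks)

    def host_write(self, track, data):
        if track in self.cg_unsent and track not in self.sidefile:
            # Collision: keep the old version for the in-flight consistency group.
            self.sidefile[track] = self.tracks[track]
        self.tracks[track] = data    # the new write completes as normal
        self.cr.add(track)           # and is sent with the next consistency group

    def send_cg(self):
        """Transmit the current CG, taking collided tracks from the sidefile."""
        sent = {t: self.sidefile.get(t, self.tracks[t]) for t in sorted(self.cg_unsent)}
        self.cg_unsent.clear()
        self.sidefile.clear()
        return sent


ca = CollisionAvoidance({5: "old", 6: "six"})
ca.form_cg([5, 6])
ca.host_write(5, "new")      # collides with unsent track 5
print(ca.send_cg())          # {5: 'old', 6: 'six'}
print(ca.tracks[5], ca.cr)   # new {5}
```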

¹ A track is 64 KB in Open Systems and approximately 57 KB in a mainframe architecture.
