Chapter 2

Modern IT—the coming inflection point

In this chapter, we discuss what is ultimately the crux of the book and introduce the initial topics you need to begin understanding the journey of modernizing IT.

Modern IT is a new approach to information technology that enables companies to maximize their competitiveness by building the things that differentiate them from their competitors while minimizing the effort and cost spent on everything else. The term digital transformation is popular these days. It concisely conveys how every business and endeavor (airlines, retail, manufacturing, and so on) is being transformed by putting the customer at the center of everything and using the power of software to compete. Companies that want to succeed at digital transformation need to adopt modern IT.

Modern IT consists of new

Systems

Roles

Processes

In this chapter, we explore the traditional approach to IT and evaluate its strengths and shortcomings. Then we articulate modern IT’s new approaches and suggest a path to get from traditional IT to modern IT. Finally, we share a set of value propositions for modern IT target audiences.

Traditional approach to IT

The current model for IT was largely established during the 1990s, during what has become known as the “disintegration of the computer industry.” During this period, the computer industry shifted from vertical integration to horizontal integration. In the vertically integrated world, big companies like IBM, Burroughs, and Sperry would build and sell systems from the ground up. They would build chips, boards, operating systems, applications, and management software and sell them as a package to customers.

In the 1990s, the industry shifted to a horizontal integration model in which companies specialized in, and optimized, a single layer: for example, Intel on chips, Microsoft on operating systems, Lotus on applications, and Tivoli on management. Horizontal integration allowed competition and choice at every layer of the computing stack but required someone to assemble the components into a workable system. This is the task of systems integration, and it has been the central mission of traditional IT.

The traditional IT model is powerful and has served organizations around the world well. It includes

Hardware vendors delivering components and systems: disks, NICs, motherboards, servers, and so on

OS vendors delivering software platforms that provide functions and services that facilitate writing applications and allow multiple users to share common resources

Application vendors delivering a domain-specific set of capabilities to accomplish tasks such as accounting or manufacturing support

Management vendors delivering functions to manage the life cycle of the hardware and software components, including provisioning, configuration, monitoring, diagnostics, and so on

IT departments evaluating and selecting the vendors for each of these horizontal layers, and IT professionals integrating, operating, and maintaining all the components to serve the needs of the business

Because different markets and customers have unique requirements, vendors produce components that are as general as possible so that they can be customized to meet the needs of most customers. IT departments must then plan carefully to integrate all the components to meet their specific business purposes.

The traditional IT model’s conceptual center is an individual server box whose parts are selected by one group of IT pros. Often, another group of IT pros provisions and manages that server to deliver a function. That function is then used by yet another group of IT pros. Distributed systems are created using a bottom-up approach.

Judged by the systems it created, the traditional IT model has worked incredibly well. Judged by the business results it delivers, the model has severe problems that are felt every day. In the past, everyone had to deal with the same set of issues, so the model’s problems were generally hidden from view.

In recent years, technology changes such as high-bandwidth, low-latency networks, software-defined infrastructure, automation, cloud architectures, containers, and microservices have allowed companies to take a different approach and achieve extraordinary results. These results highlight both the problems of traditional IT and the need to adopt new approaches and architectures in a responsible and thoughtful manner.

Modern IT approach

For those working at Microsoft, a key concern in every role is making sure that we are building the right things for the future. Microsoft has more than 100,000 employees and a vast range of products, and it invests more than $10 billion per year in research and development. Imagine the complexity of the task and the importance of getting it right. Take physics, for example: a physicist always strives to look past surface-level facts and assumptions to understand the underlying principles or laws at play. Once you deduce those, you can apply them to other circumstances to work out the right answer.

When we consider all the possibilities opened up by the recent technology changes, it doesn’t take long to become overwhelmed or feel the need to retreat by hyper-focusing on one area. Instead, we need to step back, find the core principles, and then use them as the foundation of our new approach. You’ll notice that most of the following principles are not about technology but rather about business.

The purpose of a business is to satisfy the need of a consumer, thereby creating a customer. As Peter Drucker said, “Because the purpose of business is to create a customer, the business enterprise has two–and only these two–basic functions: marketing and innovation. Marketing and innovation produce results; all the rest are costs.” Modern IT focuses on delivering customer value through innovation. It optimizes everything in that process and reduces the customer’s involvement and the costs of everything else.

Just because it’s hard doesn’t mean it’s valuable. IT operations is hard. It can be incredibly hard. However, the difficulty of something doesn’t automatically mean that it’s delivering innovation or value to the company. Consider this: Email is critical to a modern company, and running it well is very hard. But does any business beat its competition because it runs its email servers better than the competition?

You must be effective; it’s good to be efficient. The most important factor in all IT decisions is whether something effectively delivers innovation that makes the company competitive. Once you are effective, it’s good to be efficient to maximize profitability. If you’re efficient but not effective, the company will die.

Customers’ businesses depend upon trust. Trust depends upon security. Modern IT prioritizes and delivers security. It takes a structured life cycle approach. It extends the traditional “hardening” model to embrace detection and impact isolation. The approach also addresses the modern attack surface: admin identity.

The magic of software allows Platinum Service Level Agreements (SLAs) using affordable, high-volume hardware. Customer value requires systems with five-nines (99.999 percent) SLAs. Modern IT recognizes that availability can be delivered more effectively and more efficiently by using modern software architecture instead of expensive hardware.
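To make “five nines” concrete, the arithmetic below converts an availability target into a yearly downtime budget. It is a minimal sketch that assumes a 365-day year; a real SLA defines its own measurement window and exclusions.

```python
# Minutes in a 365-day year (leap years are ignored for simplicity).
MINUTES_PER_YEAR = 365 * 24 * 60


def allowed_downtime_minutes(availability: float) -> float:
    """Return the yearly downtime budget, in minutes, for an availability fraction."""
    if not 0.0 <= availability <= 1.0:
        raise ValueError("availability must be between 0 and 1")
    return (1.0 - availability) * MINUTES_PER_YEAR


if __name__ == "__main__":
    for label, availability in [("three nines", 0.999),
                                ("four nines", 0.9999),
                                ("five nines", 0.99999)]:
        print(f"{label}: {allowed_downtime_minutes(availability):.2f} minutes of downtime per year")
```

Five nines leaves a budget of roughly 5.26 minutes of downtime per year, compared with about 8.8 hours for three nines, which is why the target is so hard to reach with any single piece of hardware.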

Building systems with a modern IT mindset

In the traditional IT approach to building systems, IT is responsible for understanding and forecasting the specific needs of the user community. IT then sizes, builds, and maintains systems to meet those needs in accordance with a budget. It specifies, procures, provisions, configures, and operates the following things:

The components of servers and sets of servers

The operating system

The applications

The management systems (monitoring, backup, security, configuration, desired state, patching, and so on)

The traditional IT approach to building systems expends much energy and effort addressing a set of issues that don’t deliver customer value or move a company’s business forward. After working closely to understand the user’s needs, IT often creates “snowflake” servers that are finely tuned to address those needs but are fragile in the face of change. For IT, screwing up can lead to business failure, but getting things right doesn’t lead to business success. Because reliability is critical, traditional IT often buys very expensive hardware to deliver reliability and avoids changes like patching or upgrading software. IT tightly controls systems to deliver reliability, which often requires users to go through lengthy change review processes.

Modern IT takes a different approach to building systems:

Robustness and reliability are delivered via software rather than by expensive hardware. The failure of components and systems is a core assumption, and software systems are designed accordingly. Customers achieve greater robustness and reliability by adding more components. This is affordable because systems are built using high-volume, low-cost components.

Snowflake servers are shunned in favor of standardization. Individual components can be readily replaced without drama.

IT shifts from a mindset of control to a mindset of empowerment. IT delivers pools of capability and empowers teams to move quickly through self-service mechanisms.
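The claim that adding more low-cost components increases reliability follows from simple probability. The sketch below is an illustration, not a sizing tool: it assumes that replicas fail independently and that one healthy replica is enough to serve requests. Real systems only approximate the first assumption, because shared racks, power, or software bugs cause correlated failures.

```python
def parallel_availability(single: float, replicas: int) -> float:
    """Availability of a service that stays up while at least one replica is up.

    Assumes independent failures: the service is down only if every replica is down.
    """
    return 1.0 - (1.0 - single) ** replicas


def replicas_needed(single: float, target: float) -> int:
    """Smallest number of replicas whose combined availability meets the target."""
    if not 0.0 < single <= 1.0:
        raise ValueError("single-replica availability must be in (0, 1]")
    if not 0.0 < target < 1.0:
        raise ValueError("target availability must be in (0, 1)")
    n = 1
    while parallel_availability(single, n) < target:
        n += 1
    return n
```

With hypothetical commodity servers that are each available 99 percent of the time, `replicas_needed(0.99, 0.99999)` returns 3: under the independence assumption, three such servers together exceed five nines.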

A new approach to IT roles

The traditional approach to IT roles centered on creating a predictable and stable environment that ran applications, controlled costs, and fixed things when they broke. Developers were responsible for translating business requirements into code. They created business applications and “tossed them over the wall to IT” to be deployed and operated; their focus was on completing feature work on time and with quality. Traditional IT was responsible for deploying and supporting those applications in production, with a focus on faults, uptime, compliance, and financial management.

Modern IT takes a different approach to IT roles. Roles are refactored to maximize innovation.

DevOps teams are cross-functional teams composed of developers and operations people. They have shared responsibility for creating, deploying, and operating customer-focused applications. These are engineering teams using engineering processes. Their work is grounded in code. Some of these engineers produce C# or JavaScript code while others produce PowerShell scripts and configuration documents (for example, ARM templates or DSC docs). All code is under source control so that changes are tracked, results can be reproduced, and mistakes can be reverted. DevOps teams work in frequent small batches and move fast and safely by designing quality controls into their processes.

Cloud architects are trusted consultants who help teams understand their choices and produce designs that can scale out or in, are secure, operate reliably in the face of failures, are optimized for cost, and provide agility. Cloud architects are cognizant of the benefits and drawbacks of using public clouds and on-premises computing, and they guide teams in deciding which path to use for which workloads.

Infrastructure administrators create and maintain pools of compute, storage, and networking resources to be used by cloud architects and DevOps teams. Some of these pools are local, on-premises resources, and others are based in public clouds.
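Configuration documents such as ARM templates and DSC configurations describe a desired state that tooling then converges toward. The sketch below illustrates only that core idea: compare a declared state with the observed one and plan the actions that close the gap. The resource model and resource names are hypothetical, and this is not how ARM or DSC are implemented.

```python
from typing import Dict, List, Tuple

# Hypothetical, simplified resource model: resource name -> configuration settings.
State = Dict[str, Dict[str, str]]


def plan_changes(desired: State, actual: State) -> List[Tuple[str, str]]:
    """Return (verb, resource) actions that make `actual` match `desired`.

    Verbs are "create", "update", or "delete". Running the plan twice is harmless:
    once the states match, the plan is empty (the reconciliation is idempotent).
    """
    actions: List[Tuple[str, str]] = []
    for name in sorted(desired):
        if name not in actual:
            actions.append(("create", name))
        elif actual[name] != desired[name]:
            actions.append(("update", name))      # configuration drift detected
    for name in sorted(actual):
        if name not in desired:
            actions.append(("delete", name))      # present but no longer declared
    return actions
```

Because the declared state lives in source control, the same document can rebuild an environment or revert a bad change. Whether undeclared resources should be deleted is a policy decision; some tools only create and update by default.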

Figure 2-1 shows the life cycle into which all teams are integrated in modern IT.

Throughout the rest of this book, the diagram in Figure 2-1 will serve as the basis for our text, and we will discuss and relate all our topics to this life cycle.

New operations approach

The traditional IT approach to processes is grounded in failure avoidance. Because change is the root cause of most failures, traditional IT is wary of changes and focuses on controlling them. A typical strategy is to avoid change—to go for long periods between changes and combine lots of changes into a big change that can be thoroughly tested before it’s deployed into production. Another strategy is to manage change through a formal change review process in which any change is fully documented and reviewed by a board of people who look for things that could go wrong.

Modern IT is grounded in change. Delivering innovation is all about listening to customers, gaining insight into what needs to change, and then delivering that change before the competition does—and then repeating that process over and over. Rapid change is the heart of modern IT. This brings about a new approach to processes:

Modern IT accepts that failures will occur. It builds systems and processes to minimize their impact. It has a well-defined deployment pipeline and quality controls to “move failures to the left” and catch them early in the process.

Automated testing allows modern IT to go fast with confidence. The goal is to “push on green,” which means that when a set of tests succeeds, a change is automatically pushed to production. If something goes wrong in production, it’s treated as a failure of the tests rather than of the change. Tests are continually improved, because increases in speed translate into increases in the ability to compete.

Modern IT makes lots of small changes quickly. When a failure occurs, the fact that the change was small means that the failure will be easy to identify and fix.

Modern IT automates as much as possible. Automation allows reproducibility, recoverability, accountability, and speed, and it provides the basis for constant improvement.
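“Push on green” amounts to an automated gate in the deployment pipeline. The following is a minimal sketch with hypothetical test and deploy callables; real pipelines add multiple stages and environments around this decision.

```python
from typing import Callable, Iterable, List

CheckFn = Callable[[], bool]   # a hypothetical automated test: returns True on success


def push_on_green(change_id: str, checks: Iterable[CheckFn],
                  deploy: Callable[[str], None]) -> bool:
    """Deploy `change_id` automatically if, and only if, every check passes."""
    # Run every check (not just until the first failure) so the report lists all problems.
    failures: List[str] = [check.__name__ for check in checks if not check()]
    if failures:
        print(f"{change_id}: not deployed, failing checks: {', '.join(failures)}")
        return False
    deploy(change_id)          # green: push to production with no manual review step
    return True
```

Because each change is small, a red result points at a narrow set of modifications, and a production failure that slipped through becomes a prompt to add the missing test.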

Modern IT datacenters

Modern IT datacenters embrace and implement the principles of the cloud model of computing. Hyperstandardization on inexpensive components is combined with software-defined storage, networking, and compute to create a robust, scalable, and agile platform. This platform is then used to create pools of resources that are made available to teams. Sometimes a self-service portal is provided so that teams can get the resources they need when they need them without the intervention of IT.

Figure 2-2 displays the two approaches. The left side shows the old approach, with sometimes-siloed infrastructure for specific tasks, and the right side shows the resource-pool concept of modern IT.

You have several different ways to use a datacenter built on the cloud model of computing. You can use a public cloud such as Azure, you can buy an on-premises cloud appliance running on integrated systems such as Azure Stack, or you can build your own components using operating systems that incorporate cloud technologies such as Windows Server 2016.

Modern IT security

Modern IT security starts with the understanding that the bad guys have gotten very capable very quickly and that action is required. Modern IT security addresses modern threats using modern mechanisms. In the past, security was focused on controlling access to resources through system hardening: setting Access Control Lists (ACLs), firewall rules, minimal services, and so on. Today, the dominant attack vector is identity. A bad actor will use sophisticated phishing emails to steal the credentials of an IT admin and then exploit the enterprise using those admin privileges. Modern IT security also focuses on security awareness across the organization, ensuring that employees recognize potential security threats. At the same time, it allows the IT organization to secure any device anywhere, greatly reducing attack surfaces and mitigating the risks of modern device strategies such as Bring Your Own Device (BYOD).

Protecting identity

Protecting identities and securing privileged access are core activities of modern IT. Simple things like account separation (giving an admin one account for privileged activities such as server management and a separate account for nonprivileged user activities such as web browsing and email), just-in-time administration, and Just-Enough Administration (JEA) with role-based access controls (RBAC) build the foundation of modern IT security.

However, today that alone is not enough; we need additional layers to protect our identities. Two-factor authentication (2FA) is one of the most common ways to help protect identities and enforce access control. 2FA requires a user to know something (such as a password) and to have something (such as a registered phone). If a hacker steals an admin’s password, he can’t use it to access systems because he doesn’t also have the admin’s phone.
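A common way to implement the “have something” factor is a time-based one-time password (TOTP, defined in RFC 6238), generated by an authenticator app on the registered phone from a secret shared at enrollment. The sketch below uses only the Python standard library to show the mechanism. It is illustrative; production systems should rely on a vetted identity provider rather than hand-rolled code.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F                                   # dynamic truncation offset
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, unix_time: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over the current time step."""
    now = time.time() if unix_time is None else unix_time
    return hotp(secret, int(now // step), digits)


def verify_totp(secret: bytes, submitted: str, unix_time: Optional[float] = None,
                step: int = 30, digits: int = 6, drift_steps: int = 1) -> bool:
    """Accept a code from the current step or up to `drift_steps` neighbors (clock skew)."""
    now = time.time() if unix_time is None else unix_time
    counter = int(now // step)
    return any(
        hmac.compare_digest(hotp(secret, c, digits), submitted)   # constant-time compare
        for c in range(counter - drift_steps, counter + drift_steps + 1)
        if c >= 0
    )
```

With the RFC test secret `b"12345678901234567890"`, `totp(secret, 59, digits=8)` returns `"94287082"`, matching the RFC’s published test vector. A stolen password alone is useless, because the attacker cannot compute the current code without the secret held on the phone.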

Central auditing and log collection with Big Data analytics to identify irregular patterns across entire IT estates further secure our identities and mitigate attacks. Automation connected to these systems can respond to potential breaches, further protecting a modern IT infrastructure. Figure 2-3 shows an example of using Azure Security Center to collect logs from multiple sources and apply the necessary analytics to identify threats to an organization. The benefit of a cloud-based product such as Azure Security Center is that it combines a large set of customer signals, massive amounts of cloud computing, and machine learning to identify novel attack vectors. An attack on one is quickly transformed into a defense for all.

In an attempt to protect against admins’ passwords being stolen, people sometimes use privileged service accounts that only a few people know about, but whose details are written in a notebook and whose passwords are set never to expire! This is a risky practice. To move away from exposures of this sort, we need to examine concepts like just-in-time administration (or access), in which a person obtains the privilege required to perform an operation for only a limited amount of time. Because no standing privileged accounts are left exposed, systems stay better protected.
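The essence of just-in-time access is that privilege is granted for a bounded time and then disappears on its own. The broker below is a hypothetical, in-memory sketch of that idea; the class name and the one-hour ceiling are assumptions for illustration. Real implementations, such as Microsoft Privileged Access Management, add approval workflows, auditing, and enforcement in the directory itself.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone
from typing import Dict, Optional, Tuple


@dataclass
class JitAccessBroker:
    """Hypothetical broker that issues time-bound privileges instead of standing admin rights."""
    max_duration: timedelta = timedelta(hours=1)
    # (user, role) -> moment the grant expires
    _grants: Dict[Tuple[str, str], datetime] = field(default_factory=dict, init=False, repr=False)

    def request(self, user: str, role: str, duration: timedelta,
                now: Optional[datetime] = None) -> datetime:
        """Grant `role` to `user`, capped at the policy's maximum duration; return the expiry."""
        now = datetime.now(timezone.utc) if now is None else now
        expiry = now + min(duration, self.max_duration)
        self._grants[(user, role)] = expiry
        return expiry

    def is_authorized(self, user: str, role: str, now: Optional[datetime] = None) -> bool:
        """Check a grant; expired grants are deleted so privilege never lingers."""
        now = datetime.now(timezone.utc) if now is None else now
        expiry = self._grants.get((user, role))
        if expiry is None or now >= expiry:
            self._grants.pop((user, role), None)
            return False
        return True
```

An admin who asks for eight hours of server-admin rights receives only one; once that hour passes, the same check that let her in now refuses her, with no password to rotate and no account to forget.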

Furthermore, with Windows 10, Privileged Access Workstations (PAWs), also called Secure Access Workstations (SAWs), and/or conditional access reduce the entry points to privileged systems and restrict the types of machines that can access them. PAWs are pre-hardened machines with specific control software installed to ensure that no unauthorized software will run, and they require additional layers of authentication. Privileged systems can implement additional access control lists to prevent non-PAW machines from connecting. Figure 2-4 shows an example of a user trying to access a privileged system from a generic laptop versus from a PAW.

When starting to architect a modern IT system, enterprises should also consider creating a new privileged identity deployment. This hardened implementation would be built to be secure by default, and its identities would be used only for privileged access (for example, no web browsing or email). This ensures that no legacy service principals exist that could expose holes in an otherwise secure environment. Microsoft Privileged Access Management is an example of this approach: we set up a bastion forest and create time-bound shadow principals when users need to perform privileged tasks.

Protecting infrastructure

Designing and implementing controls to ensure that information is protected from unauthorized access or data loss is paramount in modern IT. Controls include disk encryption, transit encryption, and in-memory/process encryption. Modern IT also takes a more mature approach to security. Recognizing that something always can go wrong, modern IT adopts an “assume breach” mindset. In the past, a breach of any component often led to the breach of most components. With an assume breach mindset, IT redesigns systems to minimize the ramifications of a breached component. This is analogous to the design of a submarine where the breach of a compartment does not sink the ship. Table 2-1 highlights the differences in the traditional approach of preventing a breach versus assuming breach.

| PREVENT BREACH | ASSUME BREACH |
|---|---|
| Threat model systems | Threat model components and war games |
| Code review | Centralized security monitor |
| Security testing | Live site penetration testing |
| Security development life cycle | |

As the table highlights, implementing a prevent-breach model provides many layers of security for an organization and keeps developers and IT pros in a security-conscious mindset. Modern IT goes one step further and adopts an active, assume-breach security model.

Beyond traditional security models, especially when we consider protecting infrastructure (physical disks, servers, network switches, and so on), we now must think about public clouds, where physical access to the datacenters isn’t possible. This means that processes for managing tasks such as disk destruction also need to evolve with the choices made about who stores data, where, and how. We must spend time evaluating what we’re going to store, where it’s going to be stored, and what technology exists to put adequate controls in place.

Even when an environment uses an appliance like Azure Stack or a standardized private cloud solution, the IT organization needs a clear understanding of what the vendor provides versus what falls within its own domain of responsibility. Organizations that deploy Microsoft Hyper-V Server 2016 can use features like Guarded Fabric Hosts to ensure that a deployed fabric can run only authorized virtual machines and to protect those VMs even from compromised fabric admins.

Simply put, protecting infrastructure means that IT pros should implement protections as a fundamental step when designing and implementing infrastructure services. For example, when implementing Storage Spaces Direct, we must enable encryption on the volumes to protect data at rest. If we’re connecting to services in the public cloud, we must ensure that all traffic is encapsulated and carried over a secure transport such as TLS, with strong authentication (for example, multifactor or certificate-based) between endpoints.
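As an illustration of “secure transport using something like TLS,” the Python standard library’s `ssl` module shows what a strict client-side configuration looks like. This is a sketch: the certificate and key paths are placeholders you would supply, and they are needed only for the optional certificate-based (mutual TLS) authentication.

```python
import ssl
from typing import Optional


def make_client_tls_context(client_cert: Optional[str] = None,
                            client_key: Optional[str] = None) -> ssl.SSLContext:
    """Client-side TLS context that authenticates the server and rejects legacy protocol versions."""
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH)   # loads the system's trusted CAs
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2                # refuse SSL 3.0, TLS 1.0 and 1.1
    # create_default_context already sets these; setting them explicitly documents the intent.
    ctx.check_hostname = True
    ctx.verify_mode = ssl.CERT_REQUIRED
    if client_cert:
        # Optional: present a client certificate for certificate-based (mutual TLS) authentication.
        ctx.load_cert_chain(certfile=client_cert, keyfile=client_key)
    return ctx
```

To use it, wrap a connected socket with `ctx.wrap_socket(sock, server_hostname=host)`; the handshake fails if the server’s certificate does not chain to a trusted CA or does not match `host`.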

Protecting the OS

We’ve talked about some infrastructure controls that we can put in place to ensure data leakage is kept to a minimum, but we also must consider the operating system. Modern IT doesn’t just mean implementing new processes and assuming breach; it means stepping back and examining legacy systems and understanding that operating systems need to be updated to achieve this vision.

Take a public cloud, for example: it enforces a policy of running supported operating systems. In Azure, support essentially means that an operating system has not reached end of life, the point after which it receives no further active development. Even operating systems covered by paid custom support agreements are not accepted.

This introduces an obvious challenge for any enterprise, because you now need to keep your operating systems up to date. Line of business (LOB) applications, both third-party and homegrown, have kept many organizations on legacy operating systems and made upgrading to a modern operating system more difficult.

Windows Server 2016 took some big leaps toward safeguarding against the leakage of secrets: features like shielded virtual machines, Device Guard, Control Flow Guard, and Credential Guard provide ways to mitigate leakage from the host hypervisor up.

Shielded virtual machines protect against a scenario in which a corrupt administrator copies your virtual machines to external storage, bypasses the encryption methods, and runs them at home at his leisure to extract the information he wants. Shielded virtual machines essentially won’t boot outside of the hosts you have assigned. Figure 2-5 shows a sample shielded virtual machine infrastructure and highlights that shielded virtual machines will not boot on nonguarded fabric hosts.

Device Guard in Windows provides a way to understand what software is deployed in your IT organization and essentially produce a trusted application list with signatures. Figure 2-6 shows that the feature allows you to monitor your environment, understand what applications are running, and then compare them to what you think the environment should be like. You can disable applications that don’t seem right by using a policy, which blocks them from executing on any system.
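Device Guard code integrity policies can trust applications by signer or by file hash. The sketch below models only the simpler hash-based idea, in two phases that mirror the learn-then-enforce workflow described above. It is a conceptual illustration with hypothetical function names; Windows enforces these policies in the operating system itself, not in a user-mode script.

```python
import hashlib
from pathlib import Path
from typing import Iterable, Set


def digest(content: bytes) -> str:
    """SHA-256 fingerprint of a binary's contents."""
    return hashlib.sha256(content).hexdigest()


def digest_file(path: Path) -> str:
    """Hash a file on disk in chunks so large binaries don't have to fit in memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            h.update(chunk)
    return h.hexdigest()


def build_allow_list(reference_binaries: Iterable[bytes]) -> Set[str]:
    """Audit phase: record fingerprints of software observed on a known-good reference system."""
    return {digest(b) for b in reference_binaries}


def may_execute(binary: bytes, allow_list: Set[str]) -> bool:
    """Enforce phase: only binaries whose fingerprint is on the allow list may run."""
    return digest(binary) in allow_list
```

Any modification to an approved binary, even a single byte, changes its hash, so a tampered copy is blocked. The trade-off is that every legitimate update also needs a new allow-list entry, which is one reason signer-based rules exist.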

Control Flow Guard (CFG) is an operating system security feature that developers can use to protect programs from Return-Oriented Programming (ROP) attacks. A ROP attack is a sophisticated technique that causes the program to call into malicious code that was dynamically constructed in the program’s memory. These types of attacks have been difficult to protect against and extremely difficult to detect when they have been used. CFG is enabled with a compiler switch that modifies code generation to enable runtime validation of indirect call targets. The operating system supports CFG protection by building a fixed list of a program’s valid call targets (think subroutine names); then, while the program is running, CFG checks that every indirect call goes to a valid target and terminates the program if an attack is detected.
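The runtime check that CFG performs can be modeled in a few lines. The following Python sketch is purely conceptual: addresses are plain integers, the “program” is a dictionary of functions, and the names are hypothetical. Actual CFG is implemented by the compiler (the `/guard:cf` switch in Microsoft C++) and the operating system, not by application logic.

```python
from typing import Callable, Dict


class ControlFlowViolation(Exception):
    """Raised when an indirect call targets an address outside the valid-target set."""


def make_guarded_dispatch(functions: Dict[int, Callable[[], str]]) -> Callable[[int], str]:
    """Return an 'indirect call' helper that validates its target before jumping to it."""
    valid_targets = frozenset(functions)          # fixed when the program "loads"

    def indirect_call(target_address: int) -> str:
        if target_address not in valid_targets:
            # Real CFG terminates the process at this point; the model raises instead.
            raise ControlFlowViolation(f"invalid call target {target_address:#x}")
        return functions[target_address]()

    return indirect_call
```

If an attacker corrupts a function pointer so that it holds an address such as `0x4141`, the guarded call refuses to jump there instead of transferring control into attacker-controlled memory.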

Credential Guard protects credentials (user accounts and passwords) by isolating them with virtualization-based security, so that only specific privileged system software can access them. This protects against one of the most damaging techniques in the malware toolkit: credential theft and lateral movement. After gaining access to one system, an intruder uses a number of exploits and techniques (for example, mimikatz[1]) to harvest any credentials that were ever used on that system. The intruder then uses these credentials to attack other systems and repeats the process (“spidering their way through the network”) until he reaches the systems and resources he’s after. Credential Guard thwarts this technique by blocking the malware from harvesting the credentials on a system.

[1] I had heard about mimikatz for quite some time before I actually downloaded it and tried it out. Hearing about the tool is not the same as the kick-in-the-stomach feeling you get when you actually run it and see your passwords in plain text. If you or one of your coworkers is not clear on the importance of this, download and run mimikatz for yourself.

Figure 2-7 illustrates that, although users can still access the traditional Local Security Authority Subsystem Service (LSASS), the password hashes are no longer stored there; instead, they are stored in an isolated part of the system backed by hypervisor technology, which provides a secure, guarded area that users can’t access.

Windows Defender is enabled in Windows by default, protecting you from malware from the moment the operating system is installed. Starting with Windows Server 2016, Windows Defender is also available and optimized for servers.

Detecting

Earlier in this section, we mentioned the need to assume breach. Assuming breach involves proactively probing your IT environment to understand where you have potential exposure points. Modern IT requires centrally collecting environmental logs during these probes as well as during normal operations. All this data forms a robust data set to which machine learning algorithms or event correlation techniques can be applied to detect anomalous events within a modern IT infrastructure.
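Production analytics are far more sophisticated, but the underlying idea of comparing current behavior against a learned baseline can be shown with a simple statistical test. The sketch below flags hosts whose daily count of a security event (for example, failed logons) sits far above that host’s own history. The function names are hypothetical, and the three-standard-deviation threshold is an illustrative assumption, not a recommended setting.

```python
from statistics import mean, stdev
from typing import Dict, List


def is_anomalous(history: List[int], current: int, threshold: float = 3.0) -> bool:
    """True if `current` exceeds the historical mean by more than `threshold` standard deviations."""
    if len(history) < 2:
        return False                          # not enough data to form a baseline
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return current > mu                   # perfectly flat baseline: any increase stands out
    return (current - mu) / sigma > threshold


def anomalous_hosts(baselines: Dict[str, List[int]], today: Dict[str, int],
                    threshold: float = 3.0) -> List[str]:
    """Hosts whose count today is anomalous relative to that host's own history."""
    return sorted(host for host, count in today.items()
                  if is_anomalous(baselines.get(host, []), count, threshold))
```

Baselining each host against itself matters in a mixed estate: a busy database server and a quiet web server have very different “normal” event volumes, so a single global threshold would either miss one or drown in alerts from the other.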

Step back and consider an environment with a few hundred servers in a single datacenter: how do we collect information about all these servers? It would be rare for such an environment to be totally Windows or totally Linux; usually it’s a mixed environment. With traditional approaches, you’d have to deploy a mix of tools to monitor and detect events in this type of environment.

Figure 2-8 illustrates the isolation traditionally found in these environments.

Modern IT requires evolution. Mixed environments are the norm and have been for quite some time; the evolution happens in the management toolset you use to understand how these environments are operating. Where tooling was previously siloed, modern IT dictates that central, simplified monitoring and management is essential. If we add public cloud environments and appliance environments to the technology mix, we produce additional challenges that legacy toolsets may not be able to adapt to.

Although many toolsets have multi–operating system management capability and provide a seemingly central console for management and operations, in reality traditional IT environments still use one mix of tools to obtain the information they want, a separate set of tools to correlate events, and yet another set of tools to automate remediation. These tools don’t always integrate natively and often provide only “loose” hooks between them.

Another aspect of management tools is the cadence at which they move. A simple example is System Center, which uses management pack technology to provide discovery and monitoring logic. Although the monitored technology is patched, and may gain new features, on a monthly basis, the management packs don’t necessarily receive an update to adapt to these new features.

This holds true for many management tools available today, and it hinders progress if your focus is adopting a modern IT strategy.

Given that threats grow more complex on a daily, monthly, and yearly basis, it’s imperative that any toolset in a modern IT environment moves at a speed similar to the rate at which threats evolve. The tools should be capable of detecting anomalies in your environment across any cloud or datacenter you have deployed into.

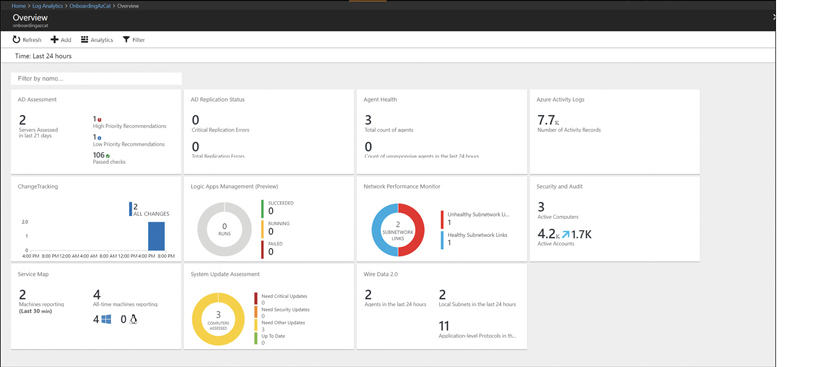

Log Analytics is one of the tools that enable central log collection from multiple environments. It’s an agent-based collection mechanism that uses intelligence packs to collect information from host machines and ingest it into a central log store. Data also can be ingested from multiple non-agent-based sources, and data can be retrieved from native management tools already in place, such as System Center Operations Manager or Nagios.

Once the data is submitted to Log Analytics, you can take a few different approaches. You can use built-in solution packs that are updated at cloud cadence. These solution packs can correlate data that comes from different sources but is stored centrally in Log Analytics. The solution packs can help you identify latency problems in your networks, find ports communicating with unauthorized sources, or detect failed logons from public sources. Figure 2-9 shows a sample dashboard that uses the solution packs available in Azure.
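As an illustration of the kind of correlation a solution pack performs, the sketch below scans normalized log records for failed logons (Windows Security event ID 4625) that originate from public IP addresses. The record schema and the threshold of five attempts are assumptions made for the example; this is not the logic Log Analytics actually uses.

```python
import ipaddress
from collections import Counter
from typing import Dict, Iterable, List

FAILED_LOGON_EVENT_ID = 4625   # Windows Security log: "An account failed to log on"


def failed_public_logons(records: Iterable[Dict[str, object]], min_attempts: int = 5) -> List[str]:
    """Return accounts with at least `min_attempts` failed logons from public IP addresses.

    Each record is assumed to be a dict with "event_id", "account", and "source_ip" keys;
    this schema is hypothetical and stands in for whatever the central log store provides.
    """
    counts = Counter()
    for record in records:
        if record.get("event_id") != FAILED_LOGON_EVENT_ID:
            continue
        try:
            ip = ipaddress.ip_address(str(record.get("source_ip", "")))
        except ValueError:
            continue                                  # skip malformed or missing addresses
        if ip.is_global:                              # publicly routable source
            counts[str(record.get("account", "<unknown>"))] += 1
    return sorted(account for account, n in counts.items() if n >= min_attempts)
```

Because the records come from one central store, the same function works whether the logons were recorded on premises or in a public cloud, which is the point of collecting the data centrally.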

Figure 2-10 shows a more detailed view of the Security and Audit Dashboard. It highlights threats, failed logon attempts, and a variety of other information you can use to identify potential security breaches in your IT environment.

Microsoft uses its extensive experience running hyperscale cloud environments, and the data it collects from them, to build comprehensive rules that detect potential threats in your environment. Of course, you can build your own intelligence, but this is one of the many factors you need to be aware of when choosing the correct toolset for a modern IT environment.

Microsoft Azure Security Center is another tool in the arsenal; it builds on top of Log Analytics data. It helps IT pros identify potential threats in their environment and perform remediation activities. It also can be integrated with vulnerability assessment tools to quickly identify wider issues in your environment. Its information and alerts can be wired natively into automation tools to help you automatically mitigate potential incidents and exposures.

Figure 2-11 shows a sample Azure Security Center events dashboard. Azure Security Center scans your IT environment, on premises or in the cloud, and provides recommendations based on data that Microsoft has collected and analyzed from operating a hyperscale cloud. It also draws on analysis of support cases, best-practice recommendations from thousands of customer deployments, and shared feedback to keep the tool up to date and its alerts in line with industry trends.

This dashboard allows IT pros to take rapid action to secure their environment and monitor it for changes or potential threats. For example, if a new administrator deploys a virtual machine to Azure ad hoc and forgets to secure it correctly, Azure can monitor the machine automatically and trigger an alert on this dashboard that highlights a potential exposure point.
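The kind of rule that could flag such a machine can be sketched in a few lines. This minimal example, under stated assumptions, checks for one common exposure: a remote-management port open to any source address. The `VirtualMachine` and `InboundRule` types and the VM names are hypothetical. Azure Security Center's actual rules and data model are not described in this chapter and are not reproduced here.

```python
from dataclasses import dataclass, field

# Ports commonly used for remote management: SSH (22) and RDP (3389).
MANAGEMENT_PORTS = {22, 3389}
# Values treated as "any source" in this hypothetical rule format.
ANY_SOURCE = {"*", "0.0.0.0/0"}


@dataclass
class InboundRule:
    port: int
    source: str  # a CIDR range, or a value meaning "any source"


@dataclass
class VirtualMachine:
    name: str
    inbound_rules: list = field(default_factory=list)


def exposure_alerts(vms):
    """Flag VMs whose inbound rules open a management port to any source."""
    alerts = []
    for vm in vms:
        for rule in vm.inbound_rules:
            if rule.port in MANAGEMENT_PORTS and rule.source in ANY_SOURCE:
                alerts.append(f"{vm.name}: port {rule.port} open to any source")
    return alerts


adhoc = VirtualMachine("adhoc-vm", [InboundRule(3389, "*")])
web = VirtualMachine("web01", [InboundRule(443, "*"), InboundRule(3389, "10.0.0.0/8")])
for alert in exposure_alerts([adhoc, web]):
    print(alert)
```

Because the check runs against configuration data rather than relying on someone remembering to look, an ad hoc deployment is caught as soon as its configuration is collected.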

Figure 2-12 shows more data about virtual machines and the recommendations and issues that have been detected. The alerts it raises are driven by the central log collection and by the rules that Microsoft has built from its experience. This information greatly reduces the time it takes to identify systems that could expose data or cause a security breach within an enterprise.

Figure 2-13 shows more information regarding the OS vulnerabilities detected. Microsoft standardizes the information and links the vulnerabilities detected to industry-standard databases, as indicated by the Common Configuration Enumeration ID (CCEID) numbers.

Interestingly, with the rise of PowerShell as a modern management tool for rapidly administering large estates and driving automated tasks across them, the kind of information shown on the OS Vulnerabilities dashboard has also become a hacker’s tool! Malicious users can create scripts that probe IT systems for these vulnerabilities. It is often a good idea to create systems in your environment that “look” exposed but have the necessary agents to collect data on malicious users attempting to breach them. Using tools like Log Analytics and Azure Security Center can help you achieve this honeypot mecca!

Modern IT support for business innovation

The evolution to modern IT involves more than just infrastructure. It also requires a business to look at its applications and the processes surrounding them.

Look at the traditional LOB app illustrated in Figure 2-14; it has a three-tier architecture. There’s a highly available web tier, app tier, and database tier. The database tier is deployed with shared storage as an active-passive cluster, the web tier is based on IIS, and the app tier is custom processing logic built for the application.

When the application needs to be upgraded, it requires an outage no matter which tier we upgrade: web, app, or database. This is an inherent flaw in the application, because it cannot tolerate version mismatches across its deployment. The upgrade process can be time consuming because it requires a complete uninstallation of the existing application followed by redeployment of the new code provided by the development team. The development team packages the code as a zip file, which requires several steps after it has been unzipped to register specific DLLs and update registry keys. The only part of the system that doesn’t require code redeployment is the database itself; however, a service on the database tier needs to be updated, and then the cluster must be failed over so that the update can be repeated on the passive node.

Upgrades to the operating system are delayed as much as possible, because they have caused significant problems in the past due to incompatibilities between the application and newer operating systems.

Modern IT demands a different approach. Modern IT should allow the product to be upgraded with new features while requiring little to no downtime, unless the database itself must be upgraded. Modern IT also demands that applications be broken down into the smallest reusable parts, which can then be deployed and managed independently. Eventually, this approach is integrated into an application development life cycle. It allows the technology that supports each part of the app to evolve independently, and it makes the LOB application deployable across IT environments regardless of location.

Containers

When approaching application modernization to drive business innovation, one of the first items we look at is containers. Containers allow the rapid deployment of applications across different IT estates and provide the simplest method to begin the modernization journey.

Take our three-tier app. Traditionally, each tier would be deployed as two virtual machines, each with two virtual CPUs, between 4 and 8 GB of RAM, and a fixed 60 GB operating system disk. For now, let’s set the database tier aside; we discuss it later. Figure 2-15 shows the difference between a virtual machine and a container in terms of its relative footprint on a host system.

Given that the web tier is an IIS web app, we can deploy it into a container with relative ease. We obtain a base image of either Nano Server or Windows Server Core, deploy our web application into the container, and store the resulting image in a registry.

The registry enables us to quickly download the image into different environments, which allows us to move rapidly from the on-premises datacenter to Azure. We can choose to use a managed service in Azure or build our own container host solution using technologies like Docker or Kubernetes.

When you examine the footprint of a container, you will notice it’s considerably smaller than that of a virtual machine, with a significantly shorter startup time. In our web tier example, we could run each container on one virtual CPU and a fraction of the RAM, around 150 MB per container. A container orchestrator manages the container life cycle for us. For example, if a container stops responding, the orchestrator destroys it and instantiates a new container to replace it.
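The replace-don't-repair behavior described above can be sketched as a single pass of a control loop. This is a simplified illustration, not how any particular orchestrator is implemented. `start_container` is a hypothetical stand-in for a call to a container host, and the image name `stock-web:1.0` is made up.

```python
import itertools
from dataclasses import dataclass

_next_id = itertools.count(1)


@dataclass
class Container:
    id: int
    image: str
    responding: bool = True


def start_container(image):
    """Hypothetical stand-in for asking a container host to start a container."""
    return Container(next(_next_id), image)


def reconcile(containers, image, desired_count):
    """One pass of an orchestrator-style control loop.

    Containers that stopped responding are dropped (destroyed) rather than
    repaired, and new ones are started until the desired count is met.
    """
    healthy = [c for c in containers if c.responding]
    while len(healthy) < desired_count:
        healthy.append(start_container(image))
    return healthy


pool = [start_container("stock-web:1.0") for _ in range(3)]
pool[1].responding = False  # simulate a hung container
pool = reconcile(pool, "stock-web:1.0", desired_count=3)
print([(c.id, c.responding) for c in pool])
```

A real orchestrator runs this comparison of desired state against actual state continuously, based on health probes. It also scales down when there are too many instances, which this sketch omits.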

If the application needs to be patched, you can build a new container image, start containers from it, and switch the traffic path to the new containers with no downtime. This is considerably easier than building new virtual machines. Later in this chapter, when we discuss DevOps and Continuous Integration/Continuous Delivery, we dive into this a little deeper.
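This pattern is commonly called a blue/green deployment. The patched containers come up alongside the running ones, and traffic moves over only after they are healthy. The sketch below models just the routing decision. `TrafficRouter`, the pool names, and the image tags are hypothetical, and no real load balancer API is implied.

```python
from dataclasses import dataclass, field


@dataclass
class TrafficRouter:
    """Hypothetical front end that sends all traffic to one named container pool."""
    pools: dict = field(default_factory=dict)
    active: str = ""

    def deploy(self, name, image, healthy=True):
        # The new pool starts alongside the current one; traffic is untouched.
        self.pools[name] = {"image": image, "healthy": healthy}

    def switch_to(self, name):
        # Cut over only if the new pool passed its health checks;
        # otherwise keep serving from the current pool.
        if not self.pools[name]["healthy"]:
            raise RuntimeError(f"pool {name!r} unhealthy; still serving {self.active!r}")
        previous, self.active = self.active, name
        return previous


router = TrafficRouter()
router.deploy("blue", "stock-web:1.0")
router.switch_to("blue")
router.deploy("green", "stock-web:1.1")  # patched image
retired = router.switch_to("green")      # the "blue" pool can now be removed
print(router.active, retired)
```

Because the old pool stays up until the switch, rolling back is just another call to `switch_to` with the old pool's name.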

The application tier can be treated in the same manner with minor exceptions, depending on different aspects of the application. The details are beyond the scope of this book, but a headless application and externalized state are key markers of an application that can be containerized.

Microservices

When moving toward modern IT, especially with modern applications, we begin to decouple the application from a single server into reusable components. Microservices allow you to break down an application into micro applications (or, as the name suggests, microservices) that have dedicated functions. Each microservice has an interface so that other microservices can interact with it and obtain the information it is designed to provide.

Traditionally, the web application for our LOB application might have several sub-websites. If this LOB application is our stock entry system, we can clearly identify web apps for inventory, orders, and customers. Each web app contains several discrete functions; for example, inventory has three specific functions: get stock item, assign stock item, and delete stock item. Each of these could become a microservice, essentially a completely independent application.

Figure 2-16 illustrates this concept further. We can see that the inventory and customer web apps are broken down into further components. For example, say we want to allocate stock to a customer. The process would first call the Get Stock microservice, which collects the required stock information. Next, it would obtain the customer information by calling the Get Customer microservice. After the customer information is returned, the process would call the Update Stock microservice. This in turn would call into the ordering system; that’s not illustrated in Figure 2-16, but it would be the Create Order microservice.
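The call sequence for allocating stock can be sketched as a few small functions. Each function stands in for one microservice. In a real deployment each would be a separately deployed service reached over its own interface (for example, HTTP), not an in-process call. The function names, SKU, and customer ID are hypothetical.

```python
# Hypothetical in-memory data; each function below stands in for a separately
# deployed microservice that would normally be called over the network.
STOCK = {"SKU-1": {"name": "House blend", "quantity": 10}}
CUSTOMERS = {"C-42": {"name": "Example customer"}}
ORDERS = []


def get_stock(sku):  # Get Stock microservice
    return dict(STOCK[sku])


def get_customer(customer_id):  # Get Customer microservice
    return dict(CUSTOMERS[customer_id])


def create_order(customer_id, sku, quantity):  # Create Order microservice
    order = {"id": len(ORDERS) + 1, "customer": customer_id,
             "sku": sku, "quantity": quantity}
    ORDERS.append(order)
    return order


def update_stock(sku, quantity, customer_id):  # Update Stock microservice
    STOCK[sku]["quantity"] -= quantity
    # Update Stock in turn calls into the ordering system.
    return create_order(customer_id, sku, quantity)


def allocate_stock(sku, customer_id, quantity):
    """Compose the calls in the order described for Figure 2-16."""
    stock = get_stock(sku)
    get_customer(customer_id)  # validates the customer; raises KeyError if unknown
    if stock["quantity"] < quantity:
        raise ValueError(f"only {stock['quantity']} of {sku} in stock")
    return update_stock(sku, quantity, customer_id)


order = allocate_stock("SKU-1", "C-42", 3)
print(order, STOCK["SKU-1"]["quantity"])
```

Because each step sits behind its own function boundary, any one of them (for example, `create_order`) could be rewritten or redeployed without touching the others. That independence is the point the next paragraph makes.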

If we want to innovate on or modify a single microservice, for example, to make the Orders microservice provide more data or operate more efficiently, we can upgrade it independently of the entire application. In the traditional IT approach, however, we needed to remove and reinstall the entire application.

This approach can be combined with containers, with each microservice running in its own container, or the code can be deployed to a platform like Service Fabric. Both options manage the life cycle of the microservices, scale them up or down as required, monitor their health, and recover from potential problems.

Microservices also can be written in the best language for the job, so each one can be built to achieve its goals efficiently rather than being constrained by what a single language allows you to do.

DevOps

Rarely do people walk away from discussions about DevOps satisfied. We like to joke that at the heart of DevOps there are really just two concepts:

Do work in frequent small batches.2

Stop being jerks to one another.

2 One of the milestones of the DevOps movement was John Allspaw’s 2009 talk at the Velocity conference, “10 Deploys per day, Dev and Ops Cooperation at Flickr.” The slides are available at https://www.slideshare.net/jallspaw/10-deploys-per-day-dev-and-ops-cooperation-at-flickr.

While not entirely accurate, that joke is apropos, and it’s easy to remember. When you put the customer at the center of everything, one thing becomes super clear: Development and operations need to work together much better. These traditionally siloed teams need to understand what is important to each other in order to succeed in a modern IT environment.

Consider the differences in what is important between IT pros and developers in the rollout of an LOB application. IT pros want the application to be stable; they need to ensure the business can operate and process the transactions required. IT pros also want to ensure they can recover quickly if a failure does occur. Development teams want to ensure the code they write meets the business requirements. We can see that the needs of the two groups are different. Modern IT allows us to start to blur the boundaries through a set of practices and culture that has become known as DevOps. The DevOps community likes to spend a lot of time discussing what is and is not DevOps. We encourage you to take a look at some of those discussions, but don’t fall down that rabbit hole.

DevOps also pushes for shorter development cycles with increased deployment frequency while targeting more stable releases that align to the business objectives.

There are multiple phases to DevOps that can also serve as a foundation for blurring the boundaries and establishing trust between these silos, so that stable software can be developed and released rapidly.

Plan

Planning is different in DevOps; it is collaborative and continuous. Product owners start by defining what customer scenarios need to be enabled during the next product cycle (typically three- to six-month cycles). The best practice is to define these in terms of a set of customer-focused “I can” statements and avoid any discussions of technology or implementation. This grounds planning in customer value, and it gives the development and operations teams maximal flexibility and freedom in achieving those objectives. Whenever possible those “I can” statements are tied to specific measurable items known as key performance indicators (KPIs). Development and operations teams work together to determine what is feasible in this cycle, and everyone agrees upon a common set of prioritized objectives. Because the customer experience is the highest priority, a very large percentage of time is allocated to work on “Livesite” (addressing issues with the production service) and fundamentals (bug fixing, performance, security, and so on).

Perhaps the most important difference between this and traditional planning is that the software is released on a different cadence than the planning. In the DevOps model, software is released in small batches on a continuous basis. Some teams release dozens or hundreds of changes a day, whereas other teams release once a day or once a week. As new code is released, new insights are gained, and plans are adjusted.

Develop

As in the planning phase, both teams collaborate to ensure that the right code for the business is being written in the most efficient style. Furthermore, if the organization adopts something like containers, the development team builds the container image and fully tests the software in a development environment before deploying it into production. The microservice approach allows the development team to adopt an agile development framework and rapidly meet the ever-changing needs of their organization.

Test

Testing is paramount to ensuring stable builds, and building an automated test framework is even more so, because it frees people up and ensures that you cover all aspects of the software being built. Testing itself evolves from the monitoring and planning phases: every bug identified in the monitoring phase should get an automated test that detects that scenario. Testing is an evolving process. Once the tests complete successfully, you can move to packaging.
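Turning a monitoring finding into an automated regression test can be as small as the sketch below, which uses Python's standard `unittest` module. The function `order_total` and the incident it guards against (negative quantities producing negative totals) are hypothetical illustrations, not a scenario from this chapter.

```python
import unittest


def order_total(unit_price_cents, quantity):
    """Hypothetical function under test.

    Illustrative scenario: monitoring showed negative quantities slipping
    through and producing negative totals. The guard below is the fix.
    """
    if quantity < 0:
        raise ValueError("quantity must be non-negative")
    return unit_price_cents * quantity


class OrderTotalTests(unittest.TestCase):
    def test_regular_order(self):
        self.assertEqual(order_total(350, 2), 700)

    def test_negative_quantity_is_rejected(self):
        # Regression test for the scenario seen in monitoring: it fails
        # if the bug is ever reintroduced.
        with self.assertRaises(ValueError):
            order_total(350, -1)
```

A pipeline's test stage would run tests like these on every check-in, for example with `python -m unittest` and the module name. Each incident found in production then adds permanently to the safety net.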

Package

Packaging of the software involves putting the software in a state and place where it’s possible for other automated tools to pick it up and deploy it. This package contains all the dependencies that are required to install the software. The package should also be in the format necessary for the ecosystems it will be deployed into. Standardization is core here because it will ultimately lead to simplified deployments from this package.

Release

In the release stage, the deployment items have been built and tested by the development team and the automated process has packaged up the release that’s ready for deployment. IT operations have been involved at every stage to ensure a smooth transition from development environments to the production environments. Microservices and containers greatly contribute to this ease of transition.

Configure

When the package gets released, there may be minor configuration items to apply (such as app settings, connection strings, and so on). These, too, should be set automatically based on the environment the package is being deployed into.
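One simple way to automate environment-specific configuration is to keep the same package everywhere and select settings by environment at deployment time. This is a minimal sketch under assumptions. The environment names, the setting keys, and the `APP_ENV` variable are all hypothetical, and real secrets would come from a secrets store rather than code.

```python
import os

# Hypothetical per-environment settings; names and values are illustrative.
# Secrets such as passwords would normally come from a secrets store, not code.
SETTINGS = {
    "dev": {"db_connection": "Server=dev-sql;Database=stock", "log_level": "DEBUG"},
    "prod": {"db_connection": "Server=prod-sql;Database=stock", "log_level": "WARNING"},
}


def load_config(environment=None, overrides=None):
    """Return settings for the target environment.

    APP_ENV is an assumed variable name that the deployment tooling would set;
    explicit overrides (for example, from the release pipeline) are applied last.
    """
    environment = environment or os.environ.get("APP_ENV", "dev")
    if environment not in SETTINGS:
        raise KeyError(f"unknown environment: {environment}")
    config = dict(SETTINGS[environment])  # copy so the defaults stay unchanged
    config.update(overrides or {})
    return config


print(load_config("prod")["log_level"])
```

Because the package itself never changes between environments, what was tested is exactly what gets released. Only the settings applied around it differ.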

Monitor

Finally, in the monitoring stage, IT operations plays the bigger role by ensuring the software is performing as expected. Where there are issues, the correct feedback is submitted to the development team, with all relevant information and correlated data to help them understand the problem, isolate it, and rectify it.

DevOps is a culture change for an IT organization to adopt. It brings teams together, and building a complete end-to-end process takes time and requires cooperation on every level.

Agile adoption

One component of modern IT and a DevOps culture is the push to Agile development. Agile development has many different techniques, but when an organization decides to adopt a modern IT culture, Agile development will help it achieve the new culture at a rapid pace. Agile development combined with microservices allows innovation to happen in software in six-week sprints, for example. The development is focused on individual microservices, so multiple streams of work can proceed in parallel. This leads to faster development and rapid innovation to meet the demands of both the business and the customer.

Agile is mainly a development culture concept, but IT pros could adopt it too. Doing that would require building automated processes, similar to those in a DevOps strategy, to manage large parts of their environment. Release cycles for newer innovations in IT infrastructure would then shrink from months to weeks to days in most cases. IT pros must also think in terms of the public cloud; although on-premises IT infrastructures will be around for quite some time, public cloud adoption is happening at an accelerated pace. IT pros must have automated tooling and monitoring systems in place to take advantage of the rapid cadence of technology change in public cloud systems.

One example involves virtual machine sizes. Public clouds offer virtual machines in fixed configurations at a per-month cost. In month 4 of using a public cloud service, the vendor may release a new virtual machine size at a lower cost than the size originally chosen. The IT pro should have invested in tooling that makes this change automatically, or at least carries out the steps automatically once someone triggers the change manually, so that the organization can take advantage of the cost saving.
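The decision part of that tooling can be sketched as follows: given the current size and the vendor's catalog, find a cheaper size that still meets the current CPU and memory. The size names and monthly prices are hypothetical placeholders, not real Azure sizes or rates. The actual resize step, which would call the cloud vendor's management API, is deliberately left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VmSize:
    name: str
    vcpus: int
    memory_gb: int
    monthly_cost: float  # hypothetical prices, not real Azure rates


def cheaper_equivalent(current, catalog):
    """Return the cheapest size that has at least the current vCPUs and memory
    and costs less than the current size, or None if there isn't one."""
    candidates = [s for s in catalog
                  if s.vcpus >= current.vcpus
                  and s.memory_gb >= current.memory_gb
                  and s.monthly_cost < current.monthly_cost]
    return min(candidates, key=lambda s: s.monthly_cost, default=None)


current = VmSize("size-a", vcpus=2, memory_gb=8, monthly_cost=140.0)
catalog = [current, VmSize("size-b", 4, 16, 280.0)]
print(cheaper_equivalent(current, catalog))  # None: nothing cheaper yet

catalog.append(VmSize("size-c", 2, 8, 120.0))  # new size released in month 4
print(cheaper_equivalent(current, catalog))
```

Run on a schedule against the vendor's published catalog, a check like this turns "someone might notice the new size" into a recommendation that can either trigger the resize or be approved manually.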

CI/CD pipeline

DevOps aims to reduce the time to innovation, and it aims to empower an IT organization and business to meet the needs of customers rapidly while maintaining a stable production environment. A key part of doing this is building a Continuous Integration/Continuous Delivery (CI/CD) pipeline. This pipeline ensures that when new features are released, the release happens automatically in a consistent and efficient manner.

The process revolves around the development team writing new code. The code is checked into a source control system, where it undergoes an automated testing process. After testing, the code is packaged and published to an artifact store, ready for deployment. The software is then deployed into the production environment, where it takes over the load from the old code. CI/CD requires modern IT, a culture change, and trust.
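The essential property of that flow is that each stage gates the next. The minimal sketch below starts from a commit that is assumed to be already checked in to source control. All stage functions, the artifact naming scheme, and the in-memory artifact store are hypothetical stand-ins for real build, test, and release tools.

```python
ARTIFACT_STORE = []  # hypothetical stand-in for an artifact store or container registry


def run_tests(commit):
    return commit.get("tests_pass", False)


def package(commit):
    commit["artifact"] = f"stock-app:{commit['sha'][:7]}"
    return True


def publish(commit):
    ARTIFACT_STORE.append(commit["artifact"])
    return True


def deploy(commit):
    # Deploy only what was actually published to the artifact store.
    return commit["artifact"] in ARTIFACT_STORE


STAGES = [("test", run_tests), ("package", package), ("publish", publish), ("deploy", deploy)]


def run_pipeline(commit, stages=STAGES):
    """Run stages in order and stop at the first failure, so code that fails
    its tests is never packaged or deployed."""
    completed = []
    for name, stage in stages:
        if not stage(commit):
            return {"status": "failed", "failed_stage": name, "completed": completed}
        completed.append(name)
    return {"status": "deployed", "failed_stage": None, "completed": completed}


good = run_pipeline({"sha": "a1b2c3d4e5f6", "tests_pass": True})
bad = run_pipeline({"sha": "0f9e8d7c6b5a", "tests_pass": False})
print(good["status"], bad["status"], bad["failed_stage"])
```

Because the pipeline is code, every release follows exactly the same steps. That consistency is what lets development and operations trust frequent releases.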

Another interesting aspect of CI/CD is that when IT organizations are first learning about the concepts, they assume that building a CI/CD pipeline will require an expensive investment in many tools. Figure 2-17 shows a sample of tools used in a CI/CD pipeline. We can see the process and tools defined as follows:

We begin coding in Visual Studio Code.

We use GitHub as a source control system to store our code.

We use Visual Studio Team Services to perform the testing of the code.

We also use Visual Studio Team Services to perform the automated build or packaging of our software.

We can also use Visual Studio Team Services to deploy to our container registry as an artifact store, or use the release manager, which could generate executables or deploy directly to a container service.

IT organizations likely already own the tools but aren’t using them correctly today. There are hundreds of tools, both open source and full-blown commercial, to meet your organization’s needs. The important thing is to standardize the tooling across the entire organization and not allow different branches of development or operations to use different tools. When different branches use different tools, it essentially defeats DevOps, Agile, and CI/CD pipeline structures.

DevOps is an achievable goal for any IT organization. Those who choose to undertake this journey will benefit from implementing it and, in almost every scenario, they’ll be successful. Why? Because they can meet their customers’ needs rapidly.

However, don’t mistake the transition for an easy or smooth journey. There are several hurdles that span people, process, and technology. All of them can be overcome with the right approach and buy-in from all involved teams. Feedback is core, so it’s important to build robust feedback processes so that the correct information reaches the right teams and helps them focus on the right improvement to make or the right feature to implement.

Remember—this is a process, and sometimes it’s a lengthy one!

Eating the elephant, one bite at a time

Over the last few sections we talked about many approaches and items related to evolving to a modern IT environment. Even as we write this book, we can hear the tension building and the points you’d like to make: “We don’t have the time,” “Holy cow; the learning involved in achieving that will take years,” or “There is no way we will get buy-in for this.”

Let us stop all these thoughts by stating that anyone who has gone through the journey to a modern IT environment has had the same ideas and has felt like they had come to the dining table with a small plate, a small knife and fork, and a small appetite to eat a huge elephant. Figure 2-18 shows us an elephant with a small piece—one bite, in fact—missing.

One bite at a time: this is how we approach modern IT. What does this look like in practice? Let’s use a simple example: How much time in a day do you or your team spend doing repetitive tasks? Could you script those tasks and share the scripts with your team so the tasks happen more quickly and in a standardized way?

Many years ago, we automated a task of syncing information between two systems. Three people a day performed this job, and each usually took between 10 and 15 minutes per item they needed to duplicate. With 20 items per person per day, that is at least 10 labor-hours a day spent duplicating information. Figure 2-19 shows the structure: In IT Silo A, the team managed their records; however, in IT Silo B, the users had to consume the records.
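The labor figure can be checked directly from the numbers given. Three people each handling 20 items a day gives the following totals at the low and high ends of the 10 to 15 minute range:

```latex
\begin{aligned}
3 \times 20 \times 10\ \text{min} &= 600\ \text{min} = 10\ \text{labor-hours per day}\\
3 \times 20 \times 15\ \text{min} &= 900\ \text{min} = 15\ \text{labor-hours per day}
\end{aligned}
```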

The users in IT Silo B didn’t have access to the ticket system in IT Silo A (the ticket system was proprietary to IT Silo A). The ticket system in IT Silo B was more generic and used by various teams. The ultimate goal was to either merge the ticket systems or replace them with a central ticket system; however, this would take some time and a large investment.

We implemented a solution with automation using PowerShell. We were able to reduce the time required to scan for new entries, duplicate them, and inject them from ticket system A into ticket system B to roughly 2 seconds per item, with the script running every 5 minutes. Furthermore, we added functionality so that if a duplicated ticket was modified in ticket system B, the script would update the original ticket in ticket system A, giving us bidirectional integration that had not been in place before.
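The original solution was written in PowerShell against the two ticket systems. The sketch below shows the same sync logic in Python, using hypothetical in-memory ticket stores. The `Ticket` type, the version counter used to detect edits, and the `B-<id>` naming of duplicates are illustrative assumptions, not details of the real systems.

```python
from dataclasses import dataclass


@dataclass
class Ticket:
    id: str
    summary: str
    version: int  # increases every time the ticket is edited


def sync_pass(system_a, system_b, links):
    """One polling pass of a bidirectional sync (run on a schedule, e.g. every 5 minutes).

    system_a, system_b: dicts of ticket id -> Ticket, hypothetical stand-ins for
    the two ticket systems. links: dict mapping an A ticket id to its B duplicate.
    """
    # 1. Duplicate tickets that are new in A into B.
    for a_id, ticket in system_a.items():
        if a_id not in links:
            b_id = f"B-{a_id}"
            system_b[b_id] = Ticket(b_id, ticket.summary, ticket.version)
            links[a_id] = b_id
    # 2. Copy edits made to the duplicates in B back to the originals in A.
    for a_id, b_id in links.items():
        original, duplicate = system_a[a_id], system_b[b_id]
        if duplicate.version > original.version:
            original.summary, original.version = duplicate.summary, duplicate.version
```

This sketch does not propagate later edits made in A, and it does not resolve conflicting edits made in both systems. A production version would need an explicit rule for each case.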

What did this mean for this company? Ultimately, it gave 10 labor-hours a day back to people, who used that time to take the next time-consuming task and automate it. A few years later, they had time to replace the IT systems with more centrally focused IT, and today every task undergoes an automation process review instead of someone building a manual process first.

Eating the elephant is an ever-evolving journey. With each iteration, each task we automate, and each system we monitor, we take another small bite of the elephant, and we keep repeating until it’s all gone!

Value propositions

So, what is in it for you? Understanding the value of evolving to modern IT is extremely important: Without it, any modern IT effort is already a failed project. Getting teams to buy into modern IT, the changes it requires, the culture shock that can come as part of it, and the adoption of a more open organization is difficult. Detailing the value proposition for them is important. It gives teams a chance to understand the goals and the benefits of buying in to modern IT, and it reduces their resistance to change.

If resistance continues, you need to reflect on the value proposition. Are you articulating the message correctly? Do the teams see the advantages of adopting modern IT? Or are they simply afraid of losing their jobs during this evolution? You must consider all of this so that everyone in the organization feels comfortable about moving to modern IT.

To help you make the case for your organization to begin the journey toward modern IT, this section details some of the value propositions:

For businesses, modern IT maximizes the resources focused on delivering innovation.

Unlike traditional IT, modern IT aggressively outsources everything that doesn’t drive competition. It uses Software as a Service (SaaS) for as many elements as it can.

Unlike Information Technology Infrastructure Library (ITIL), modern IT has a model of shared responsibility and ownership between developers and operators for creating, deploying, and operating business applications.

For businesses, modern IT provides a cost-effective agile platform to grow your business. Unlike traditional IT, modern IT uses the magic of software to deliver great performance and reliability using inexpensive unreliable components.

For infrastructure admins, modern IT minimizes stress and drama. Unlike traditional IT, modern IT allows infrastructure admins.

For developers, modern IT provides an environment where you can do your best work with the lowest amount of friction and the maximal amount of impact.

Unlike traditional IT, modern IT maximizes the speed of changes from customer input to production systems.

Unlike traditional IT, modern IT leverages automation and testing to minimize the risk and impact of failure.

Although this is not a definitive list and, in most cases, you will need to adapt the individual message to your organization, these ideas are a basis for starting a conversation.

The Fourth Coffee case study

Throughout this book, we want to focus on a set of challenges and opportunities that we consider typical of today’s datacenter environments and IT organizations. We lay out our case study here, and throughout the book we refer to our fictitious company and its journey in adopting modern IT.

Consider the case of the mythical Fourth Coffee Corporation and their journey toward modern IT. Fourth Coffee is a trusted coffee company that has a strong and competent IT organization. They have a modest footprint with a few hundred stores scattered throughout the mainland United States and pop-up locations in several other countries. However, the company aspires to be bigger! Unfortunately, it has recently seen its customer demand decline after its competitors rolled out a new set of loyalty reward programs and customer agility programs (such as mobile ordering).

Until now, Fourth Coffee ran quite a traditional IT model that was focused on operations. They have core datacenters running large virtualization clusters, and each coffee shop connects back to them via a VPN or leased circuit. The company has a duplicate datacenter for disaster recovery, but it’s aging and becoming very costly to maintain. Figure 2-20 shows how the coffee shops connect back to the main application in Fourth Coffee’s datacenters.

Fourth Coffee management feels that the company is being left behind by its competitors. Although the company has been focused on “doing more with less” (which often turned into “doing less with less”), its competitors focused on increasing customer satisfaction through innovation. For example, most of its competitors now have mobile apps that deliver great functionality and are stealing customers. The mobile applications allow the competitors to understand their customers’ buying behaviors, which enables those companies to offer incentives tied to timing and product that make customers more inclined to buy at the competitors’ stores.

Fourth Coffee needs to switch gears and get into the modern world quickly. They have been focusing on efficiency while their competitors have been focusing on effectiveness. The CEO of Fourth Coffee understands that the company is facing an existential crisis. A change is needed; Fourth Coffee needs to put maximizing customer value at the center of everything it does and have the courage to refactor everything to achieve it. The company needs to digitally transform. The existing CIO is close to retirement and doesn’t want to rock the boat. He has kept running costs low and kept the IT infrastructure as stable as an underinvested infrastructure can be.

With the retirement of the CIO, the CEO knows that his new CIO has to drive digital transformation and the adoption of modern IT so that Fourth Coffee can recover its position and start to grow again. He takes a risk and hires Charlotte. A few years ago, Charlotte was a first-level IT manager of an old-school brick-and-mortar retailer with a lackluster web presence. Charlotte led a grassroots effort within that company to adopt DevOps. She started small, but as her track record of success grew, so did her responsibilities and organization, and she became a well-known name in the DevOps community. The CEO knows that this will be a stretch job for Charlotte, but he’s impressed by her initiative, courage, and grit and knows that these will be far more critical for Fourth Coffee’s success than domain knowledge or a long track record of doing things the old way.

Charlotte is thrilled at the opportunity to try out her new ideas with a larger scope, but there’s a snag. She has no new budget to do the transformation. In fact, given Fourth Coffee’s recent decline in share, she has to reduce the existing budget. She hadn’t planned on this.

To pursue digital transformation, Charlotte realizes that she needs to create bandwidth from what the company has been doing. She needs to find additional money within the existing, failing system. First, she does a value inventory of the IT budget. She examines everything that money is spent on. She wants to know how each item contributes to overall customer value and how it helps Fourth Coffee compete and become a global business.

Charlotte also begins brainstorming with her teams about what digital transformation and modern IT mean for Fourth Coffee. Her teams are not familiar with this line of thinking and immediately want to jump to technical issues, like moving to public cloud, replatforming the application, implementing an Internet of Things (IoT) solution, and retrieving telemetry data.

She likes those ideas but insists that they focus every conversation around delighting the customers. She tells her teams to think of it this way:

Let’s start with the cash register ringing at Fourth Coffee. What caused the customer to make that choice versus buying from our competitors? Let’s figure that out and then work our way backward by asking “Why did that happen?” For every step in that chain, let’s put together a plan to do it, and for everything that we are doing today that’s not in that chain, let’s figure out how to stop doing it or minimize our investment in it.

In her brainstorming sessions, Charlotte also discovers some culture and morale problems she needs to address. The recent hires have bought into the goals, are active in the discussion, and are eager to get started. They are hungry for change and innovation and for bringing their environments up to modern IT standards and new technology. However, that enthusiasm isn’t evident among the senior IT staff. They’re comfortable with the status quo. They’re the experts at doing things the way they have been done. Many of them got their senior jobs because they invented the things that Charlotte seems to say aren’t mission critical to the company anymore. In one of the brainstorming sessions, Eddie, the Exchange expert, opines, “A customer may not buy our coffee because of our Exchange system, but you just try to get anything done around here without it running 24-7.” While the new hires want to automate everything with PowerShell and use public cloud to drive innovation, the tenured staff are more interested in refining the systems that they have with enhancements like checking the backup every morning manually and creating administrative checklists.

Charlotte has seen this before. In her previous job, people initially were reluctant to automate tasks because they didn’t have the time to learn how to script and because they were concerned that they would automate themselves out of a job. She knows that she will have to make time for people to learn how to use PowerShell; after they learn, they will realize that scripting automates tasks, not people, and they’ll enjoy their jobs more. Charlotte also knows that she will get higher quality IT operations and that it will free up her people so that they can work on digital transformation and become critical to the company’s business.

During the inventory, the CIO identifies a large number of issues that impact customer value and/or are legacy and require large amounts of capital to replace, including the following:

Eighty percent of the IT budget was spent on keeping existing systems running.

A combination of old hardware whose failures led to service outages and gold-plated, purpose-built servers that were reliable but consumed a large portion of the hardware budget.

Multiple legacy hardware and software management tools.

Unreliable and drama-filled operations. Ad hoc operations performed through graphical user interfaces (GUIs) meant that changes to the environment were unreviewed, unaudited, and unrecorded, so no one could determine who did what and when they did it. A “hero” culture valued heroic firefighting without any attention being paid to the fact that the people fighting those fires were also the people who started them.

Infrastructure teams that were unresponsive to the needs and requests of the developers.

Outdated procurement processes leading to excessively long ordering times.

Manual, error-prone provisioning processes for hardware and virtual machines.

No clean hand-over from deployment teams to applications teams.

Too many tightly coupled systems that required exceptionally low latency between them.

Virtualization spending that had tripled because the sizes of requested virtual machines kept growing.

Waterfall-based development processes with long cycles and a poor track record of actually being deployed into production.

Siloed IT – The Wall: Developers were responsible for creating the code. Operators were responsible for running the code. Developers tossed code over the wall, and operators deployed it and/or threw it back over the wall.

Large-capacity datacenter connectivity required to serve all remote shops.

Performance issues at the branches farthest from the datacenter.

Legacy firewalls that are costly to maintain.

Disaster recovery had never been fully proven.

One interesting scenario discovered during the value inventory highlights the problems listed above, and, like the opening of this case study, it centers on Exchange. Eddie was an Exchange MVP (Microsoft Most Valuable Professional). Fourth Coffee was well known in the Exchange community as being particularly well run because of Eddie’s skill and expertise and his willingness to share with the community.

During the value inventory, the Exchange team argued that although email wasn’t in the “money path” of the business, it was absolutely mission critical, and they had one of the world’s best Exchange admins in Eddie. The Exchange team’s position was this:

“This place would grind to a halt if we couldn’t do email.” Charlotte acknowledged the team’s argument but challenged their thinking by stating, “Although email is mission critical, and it has to work, Microsoft offers this and the other Office products as a very reliable service. So let me ask some questions:

How much time do we spend running Exchange?

Are we up to date on version and patching?

How do we rate on securing our Exchange systems, and how frequently do we perform penetration tests?

How does having a much better email system than our competitors get us more customers or make our customers more loyal?”

The last question is the one that makes everyone understand that they need to change, but everyone is concerned about Eddie. He’s a valued member of the team and a mentor to many, and they fear that using Office 365 instead of running their own Exchange servers might mean losing Eddie.

However, Eddie is ready for the change. He understands that reducing the physical infrastructure he has to maintain means freeing up time for him to focus on broadening his skills and building more integrated experiences from his messaging background.

In this case, the change is a win, but in many other scenarios the challenges are larger and require complex plans to resolve them and move forward.

Before we move forward, we’re going to describe the team in a bit more detail to give context to any further conversations and help shape the issues you may come across on your journey to modern IT.

The Fourth Coffee IT team is probably one you are familiar with. They’re best of breed, fully certified, and love their jobs! There are workhorses who spend hours managing and maintaining the system and superstars who can fix any problem they’re given. One such superstar is Eddie, who’s an MVP on Exchange and literally one of the best administrators and troubleshooters in the world with regard to Exchange. As we previously mentioned, he’s considered an asset to the team. There are other superstars on the team as well, but Eddie’s MVP status helps him stand out.

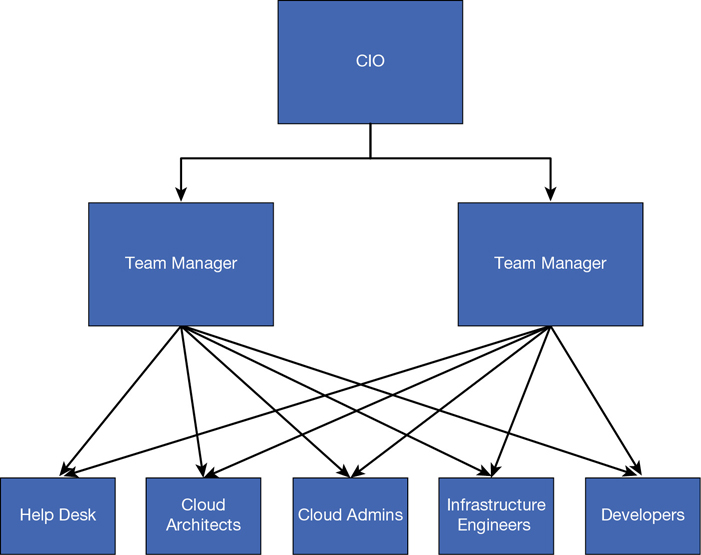

There are separate teams responsible for development and IT pro/operations. Little to no interaction happens between the teams except in the CIO meetings between the team managers.

Figure 2-21 shows the current IT structure for Fourth Coffee. Help desk roles, system administrator roles, infrastructure engineers, and architects are on the IT ops side, and software architects and application-specific development teams are on the development side. The application development teams tend to focus on one major project in a year, release it, and move on to the next one.

Updating or patching previous applications happens, but it can take nearly two years before the resources are assigned to do work on the patches, which slows down how quickly Fourth Coffee can innovate with its customers.

The IT operations have similar problems. When new resources are needed, it takes time to get them. Team members have official titles, but many of the roles overlap and have unclear responsibilities. However, in a crisis the team pulls together and keeps the systems and the business running.

While this is good, and it seems like the IT environment is stable and robust, this strong team has put in a lot of unnecessary effort to keep it running; in many respects, the IT system is being held together with tape. This leads to a lot of dangerous practices, like uncontrolled and unrecorded changes in the environment, to keep a system running. These practices mask true problems, which become difficult to identify and remediate.

Charlotte has made an active choice to evolve this structure into a more modern, DevOps-focused team while implementing a strong IT Service Management (ITSM) practice. In a modern IT environment and team, we introduce new roles that retain some of the responsibilities of the previous roles and add responsibility for working with cloud technologies.

For example, the traditional model includes systems administrators; the modern IT model might map this role to a cloud administrator role. The role still requires system administrator tasks, but the breadth of the role expands to include something like Linux or a public cloud environment, whereas it might have originally focused on just Windows or VMware.

The role changes are made to drive a culture change in the organization. These changes encourage teams to work together closely and strive for the DevOps culture of modern IT while giving the business the agility required to innovate rapidly to meet the customers’ needs.

Figure 2-22 shows how the IT organization will evolve to meet the needs of Fourth Coffee and allow the company to innovate at a rapid pace to keep up with its competitors.

In Figure 2-22, you can see we still have our help desk roles, which can manage end-user IT problems. The cloud admins replace the system administrators. The software and infrastructure architects collapse into cloud architects. The infrastructure engineers take on a wider breadth of responsibilities, with a central focus on automation and self-service. Finally, our developers are pooled together; we will assign them to smaller pods with shorter cycles based on needs so they can innovate at cloud cadence.

Regarding the cloud architect role, we chose to merge two previously separate roles to focus on the journey that organizations take when moving to modern IT. Initially the journey will begin with Infrastructure as a Service (IaaS), but eventually it will move toward Platform as a Service (PaaS) or SaaS depending on the software. If an infrastructure architect or a software architect cannot view the journey in terms of the agility of cloud, then they will not allow the organization to meet the goal of modern IT.

Evolving to this type of structure is not easy for the CIO, who sometimes has to make difficult decisions about the people in a role. However, this change often gives stagnant employees a new lease on life and an incredible opportunity to grow.

Sample architecture of their point-of-sale (POS) application

Figure 2-23 shows a sample IT system architecture from Fourth Coffee to provide context for our discussion in terms of what we can do with people, process, and technology to evolve to modern IT.

This architecture is a typical two-tier, highly available monolithic architecture. The application tier performs multiple functions, including hosting a file share that clients connect to in order to run the POS application. The database tier is a Windows Server 2008 R2 cluster with shared Fibre Channel storage, running Microsoft SQL Server 2008 R2. The application tier and database tier are tightly coupled and require less than 2 milliseconds of latency between each other. The database system also requires direct database access into other systems like the Inventory System or the Accounts Payable application.

Credit card processing is done centrally via a dedicated Windows NT 4.0 credit card processing server. This server has never been upgraded because it must be available around the clock for stores that are open 24-7. The reporting system is a single server and is very slow. During month-end reports, it can take up to an hour to run a simple batch of reports to understand the coffee sales in a single state. The application makes calls into the inventory system and accounts payable to help ensure stock is replenished into the stores; however, orders often are wrong, and amounts charged to the stores vary greatly. This leads to a lot of manual verification and modifications.

The application itself is written in .NET 2.0 and has evolved over time to require a significant footprint to meet its ever-increasing demand for resources. The system currently requires the application tier servers to have 64 GB of random-access memory (RAM) and 8 central processing units (CPUs), whereas the database tier requires 128 GB of RAM and 12 CPUs.

These requirements are expected to grow further as new stores are added. All stores connect into the main datacenter via a dedicated line (Multiprotocol Label Switching [MPLS]) or via a VPN tunnel. The firewalls terminating the tunnels have not been upgraded in four years and currently peak at 75 percent of their throughput capacity.

The application itself was not designed to be active-active; the architecture is active-passive. Connections from POS systems always have to be directed to the active node. The POS systems themselves are locked-down versions of Windows XP. They’re generally stable, but Fourth Coffee has realized they’re becoming a major security risk. As newer retail technologies and innovations are released, Fourth Coffee is unable to adopt them because the newer technologies can’t be plugged into these POS platforms.

The system has no data warehouse for long-term storage, which means that all historical data remains on the Fibre Channel storage area network (SAN) that stores the database. The SAN is reaching capacity and, because of its age, requires replacement. This leads to regular performance problems on the database, to which additional memory and CPU have been added to “fix” the problem.

Backup and disaster recovery exist, but the IT team is reluctant to test; the team members keep their fingers crossed that a true disaster never happens. They are fairly sure they cannot meet their stated recovery point objective (RPO) and recovery time objective (RTO), but they haven’t been able to address it because the previous CIO felt that they could rebuild from scratch very quickly, which would be sufficient because the company can always make coffee and take cash in their stores.

As previously mentioned, the development teams work on a waterfall development model, which tends to lead to one new software release per year, with features and functionality that are often outdated by the time they are rolled out.

Fourth Coffee has a lot of problems, which many IT organizations would consider to be “normal problems.” But if Fourth Coffee, or any other organization, wants to evolve to a modern IT organization and keep pace with its competitors, many things will need to change.

Various chapters within this book deal with the how of evolving to modern IT. We’ve talked through the why in this chapter. The what of modernizing begins here, but we touch on it throughout the entire book as we approach different subjects and work from real-world examples.

For now, let’s first examine how Fourth Coffee might evolve into a modern IT organization and then how that evolution can lead to greater innovation in the company’s technology, providing a valuable customer experience that in turn will help Fourth Coffee better tailor its business to the customers’ needs.

How can Fourth Coffee evolve to modern IT?

Charlotte has recognized that Fourth Coffee has a willing and capable staff but antiquated processes and technology. The company has multiple challenges to overcome to get to its goal. One of the first things she will introduce is an ITSM process with a focus on achieving a robust change-control system. The primary reason for this is to begin to understand the changes that happen in the environment and to give everyone (from the executives all the way down to the IT admins) the ability to question each change and ask why: what value does this change bring to our customers?
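To make the change-control idea more concrete, a change record can be pictured as something that captures who requested a change, what it does, and why it matters to customers, and that refuses to move forward without a stated customer value or an independent reviewer. The Python sketch below is hypothetical: the class, field names, and approval rules are assumptions for illustration, not the data model of any particular ITSM product.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum
from typing import List


class Status(Enum):
    PROPOSED = "proposed"
    APPROVED = "approved"
    IMPLEMENTED = "implemented"


@dataclass
class ChangeRequest:
    """Hypothetical change record: who, what, when, and why (customer value)."""
    change_id: str
    requested_by: str
    description: str
    customer_value: str  # answers "what value does this change bring to our customers?"
    status: Status = Status.PROPOSED
    history: List[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        self._log(self.requested_by, "requested")

    def _log(self, actor: str, action: str) -> None:
        # Every step is timestamped and attributed, so "who did what and when" is recorded.
        stamp = datetime.now(timezone.utc).isoformat()
        self.history.append(f"{stamp} {actor} {action} {self.change_id}")

    def approve(self, reviewer: str) -> None:
        if self.status is not Status.PROPOSED:
            raise ValueError("Only proposed changes can be approved")
        if not self.customer_value.strip():
            raise ValueError("State the customer value before approval")
        if reviewer == self.requested_by:
            raise ValueError("A change needs an independent reviewer")
        self.status = Status.APPROVED
        self._log(reviewer, "approved")

    def implement(self, implementer: str) -> None:
        if self.status is not Status.APPROVED:
            raise ValueError("Only approved changes can be implemented")
        self.status = Status.IMPLEMENTED
        self._log(implementer, "implemented")
```

The design choice worth noting is that the “why” is a required field checked at approval time, so the question of customer value is asked for every change rather than only in meetings, and the history list replaces unrecorded GUI changes with an audit trail.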