Chapter 6. Security Assessment and Testing

This chapter covers the following topics:

Assessment and Testing Strategies: Explains the use of assessment and testing strategies.

Security Control Testing: Concepts discussed include the security control testing process, including vulnerability assessments, penetration tests, log reviews, synthetic transactions, code review and testing, misuse case testing, test coverage analysis, and interface testing.

Collect Security Process Data: Concepts discussed include NIST SP 800-137, account management, management review, key performance and risk indicators, backup verification data, training and awareness, and disaster recovery and business continuity.

Analyze and Report Test Outputs: Explains the importance of analyzing and reporting test outputs, including automatic and manual reports.

Internal and Third-Party Audit: Describes the auditing process and the three types of SOC reports.

Security Assessment and Testing covers designing, performing, and analyzing security testing. Security professionals must understand these processes to protect their assets from attacks.

Security assessment and testing requires a number of testing methods to determine an organization’s vulnerabilities and risks. It assists an organization in managing the risks in planning, deploying, operating, and maintaining systems and processes. Its goal is to identify any technical, operational, and system deficiencies early in the process, before those deficiencies are deployed. The earlier you can discover those deficiencies, the cheaper it is to fix them.

This chapter discusses assessment and testing strategies, security control testing, collection of security process data, analysis and reporting of test outputs, and internal and third-party audits.

Foundation Topics

Assessment and Testing Strategies

Security professionals must ensure that their organization plans, designs, executes, and validates appropriate assessment and test strategies so that risks are mitigated. Security professionals must take a lead role in helping the organization implement the appropriate security assessment and testing strategies. The organization should rely on industry best practices, national and international standards, and vendor-recommended practices and guidelines to ensure that the strategies are planned and implemented appropriately.

Organizations will most likely establish a team that is responsible for executing any assessment and testing strategies. The team should consist of individuals who understand security assessment and testing, but it should also include representatives from other areas of the organization. Verifying and validating security is an ongoing activity that never really stops. Security professionals should nevertheless guide the organization on when each type of assessment or test is best performed.

Security Control Testing

Organizations must manage the security control testing that occurs to ensure that all security controls are tested thoroughly by authorized individuals. The facets of security control testing that organizations must include are vulnerability assessments, penetration tests, log reviews, synthetic transactions, code review and testing, misuse case testing, test coverage analysis, and interface testing.

Vulnerability Assessment

A vulnerability assessment helps to identify the areas of weakness in a network. It can also help to determine asset prioritization within an organization. A comprehensive vulnerability assessment is part of the risk management process. When assessing access control, however, security professionals should use vulnerability assessments that specifically target the access control mechanisms.

Vulnerability assessments usually fall into one of three categories:

Personnel testing: Reviews standard practices and procedures that users follow.

Physical testing: Reviews facility and perimeter protections.

System and network testing: Reviews systems, devices, and network topology.

The security analyst who will be performing a vulnerability assessment must understand the systems and devices that are on the network and the jobs they perform. The analyst needs this information to be able to assess the vulnerabilities of the systems and devices based on the known and potential threats to the systems and devices.

After gaining knowledge regarding the systems and devices, the security analyst should examine existing controls in place and identify any threats against these controls. The security analyst can then use all the information gathered to determine which automated tools to use to search for vulnerabilities. After the vulnerability analysis is complete, the security analyst should verify the results to ensure that they are accurate and then report the findings to management, with suggestions for remedial action. With this information in hand, the analyst should carry out threat modeling to identify the threats that could negatively affect systems and devices and the attack methods that could be used.

Vulnerability assessment applications include Nessus, Open Vulnerability Assessment System (OpenVAS), Core Impact, Nexpose, GFI LanGuard, QualysGuard, and Microsoft Baseline Security Analyzer (MBSA). Of these applications, OpenVAS and MBSA are free.

When selecting a vulnerability assessment tool, you should research the following metrics: accuracy, reliability, scalability, and reporting. Accuracy is the most important metric. A false positive generally results in time spent researching an issue that does not exist. A false negative is more serious, as it means the scanner failed to identify an issue that poses a serious security risk.
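One way to measure a scanner's accuracy is to compare its findings against a set of findings that an analyst has manually verified. The following Python sketch does this with set arithmetic. It assumes that each finding can be reduced to a comparable identifier. The function name and the finding IDs are hypothetical.

```python
def scanner_accuracy(reported: set, confirmed: set) -> dict:
    """Compare scanner findings with manually verified findings.

    reported:  finding IDs the scanner flagged (hypothetical IDs)
    confirmed: finding IDs an analyst verified as real issues
    """
    true_positives = reported & confirmed
    # Flagged but not real: costs research time on a non-issue
    false_positives = reported - confirmed
    # Real but never flagged: the more serious error, an unseen risk
    false_negatives = confirmed - reported
    return {
        "true_positives": len(true_positives),
        "false_positives": len(false_positives),
        "false_negatives": len(false_negatives),
    }


if __name__ == "__main__":
    scanner = {"F1", "F2", "F3"}
    verified = {"F1", "F3", "F4"}
    print(scanner_accuracy(scanner, verified))
```

Running the same comparison against several candidate tools on the same test environment gives a like-for-like view of their false positive and false negative counts.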

Penetration Testing

The goal of penetration testing, also known as ethical hacking, is to simulate an attack to identify any threats that can stem from internal or external resources planning to exploit the vulnerabilities of a system or device.

The steps in performing a penetration test are as follows:

1. Document information about the target system or device.

2. Gather information about attack methods against the target system or device. This includes performing port scans.

3. Identify the known vulnerabilities of the target system or device.

4. Execute attacks against the target system or device to gain user and privileged access.

5. Document the results of the penetration test and report the findings to management, with suggestions for remedial action.
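Step 2 mentions port scans. The following Python sketch shows the simplest form, a TCP connect scan. A port is reported as open when the full TCP handshake to it completes. The function name and default timeout are illustrative assumptions, and dedicated tools do far more than this. Run any scan only against systems you are explicitly authorized to test.

```python
import socket


def tcp_connect_scan(host: str, ports: list, timeout: float = 0.5) -> list:
    """Return the ports on host that accept a full TCP connection.

    Only scan systems you have written authorization to test.
    """
    open_ports = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
            s.settimeout(timeout)
            try:
                # connect_ex returns 0 when the three-way handshake completes
                result = s.connect_ex((host, port))
            except OSError:
                continue  # treat unexpected socket errors as "not open"
            if result == 0:
                open_ports.append(port)
    return open_ports


if __name__ == "__main__":
    print(tcp_connect_scan("127.0.0.1", [22, 80, 443]))
```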

Both internal and external tests should be performed. Internal tests occur from within the network, whereas external tests originate outside the network and target the servers and devices that are publicly visible.

Strategies for penetration testing are based on the testing objectives defined by the organization. The strategies that you should be familiar with include the following:

Blind test: The testing team is provided with limited knowledge of the network systems and devices, drawn from publicly available information. The organization’s security team knows that an attack is coming. This test requires more effort by the testing team, and the team must simulate an actual attack.

Double-blind test: This test is like a blind test except the organization’s security team does not know that an attack is coming. Only a few individuals in the organization know about the attack, and they do not share this information with the security team. This test usually requires equal effort for both the testing team and the organization’s security team.

Target test: Both the testing team and the organization’s security team are given maximum information about the network and the type of attack that will occur. This is the easiest test to complete but does not provide a full picture of the organization’s security.

Penetration testing is also divided into categories based on the amount of information to be provided. The main categories that you should be familiar with include the following:

Zero-knowledge test: The testing team is provided with no knowledge regarding the organization’s network. The testing team can use any means available to obtain information about the organization’s network. This is also referred to as closed, or black-box, testing.

Partial-knowledge test: The testing team is provided with public knowledge regarding the organization’s network. Boundaries might be set for this type of test.

Full-knowledge test: The testing team is provided with all available knowledge regarding the organization’s network. This test is focused more on what attacks can be carried out.

Penetration testing applications include Metasploit, Wireshark, Core Impact, Nessus, BackTrack, Cain & Abel, Kali Linux, and John the Ripper. When selecting a penetration testing tool, you should first determine which systems you want to test. Then research the different tools to discover which can perform the tests that you want to perform for those systems and research the tools’ methodologies for testing. In addition, the organization needs to select the correct individual to carry out the test. Remember that penetration tests should include manual methods as well as automated methods because relying on only one of these two will not yield a thorough result.

Table 6-1 compares vulnerability assessments and penetration tests.

Log Reviews

A log is a recording of events that occur on an organizational asset, including systems, networks, devices, and facilities. Each entry in a log covers a single event that occurs on the asset. In most cases, there are separate logs for different event types, including security logs, operating system logs, and application logs. Because so many logs are generated on a single device, many organizations have trouble ensuring that the logs are reviewed in a timely manner. Log review, however, is probably one of the most important steps an organization can take to ensure that issues are detected before they become major problems.

Computer security logs are particularly important because they can help an organization identify security incidents, policy violations, and fraud. Log management ensures that computer security logs are stored in sufficient detail for an appropriate period of time so that auditing, forensic analysis, investigations, baselines, trends, and long-term problems can be identified.

The National Institute of Standards and Technology (NIST) has provided two special publications that relate to log management: NIST SP 800-92, “Guide to Computer Security Log Management,” and NIST SP 800-137, “Information Security Continuous Monitoring (ISCM) for Federal Information Systems and Organizations.” While both of these special publications are primarily used by federal government agencies and organizations, other organizations may want to use them as well because of the wealth of information they provide. The following section covers NIST SP 800-92, and NIST SP 800-137 is discussed later in this chapter.

NIST SP 800-92

NIST SP 800-92 makes the following recommendations for more efficient and effective log management:

Organizations should establish policies and procedures for log management. As part of the planning process, an organization should:

Define its logging requirements and goals.

Develop policies that clearly define mandatory requirements and suggested recommendations for log management activities.

Ensure that related policies and procedures incorporate and support the log management requirements and recommendations.

Management should provide the necessary support for the efforts involving log management planning, policy, and procedures development.

Organizations should prioritize log management appropriately throughout the organization.

Organizations should create and maintain a log management infrastructure.

Organizations should provide proper support for all staff with log management responsibilities.

Organizations should establish standard log management operational processes. This includes ensuring that administrators:

Monitor the logging status of all log sources.

Monitor log rotation and archival processes.

Check for upgrades and patches to logging software and acquire, test, and deploy them.

Ensure that each logging host’s clock is synchronized to a common time source.

Reconfigure logging as needed based on policy changes, technology changes, and other factors.

Document and report anomalies in log settings, configurations, and processes.

According to this publication, common log management infrastructure components include general functions (log parsing, event filtering, and event aggregation), storage (log rotation, log archival, log reduction, log conversion, log normalization, and log file integrity checking), log analysis (event correlation, log viewing, and log reporting), and log disposal (log clearing).
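Log file integrity checking is commonly done with message digests. The following Python sketch builds a SHA-256 hash chain over log entries. Each digest covers the previous digest plus the current entry, so altering, removing, or reordering any entry changes every digest after it. This is one illustrative technique, not a procedure prescribed by NIST SP 800-92. The function names are hypothetical, and the sketch assumes the stored digests are kept somewhere attackers cannot modify.

```python
import hashlib


def chain_digests(entries: list) -> list:
    """Compute a running SHA-256 hash chain over log entries (strings)."""
    digests = []
    previous = b""
    for entry in entries:
        # Each link hashes the prior digest together with the current entry
        h = hashlib.sha256(previous + entry.encode("utf-8"))
        previous = h.digest()
        digests.append(h.hexdigest())
    return digests


def verify_chain(entries: list, stored_digests: list) -> bool:
    """Return True only if the entries still reproduce the stored digests."""
    return chain_digests(entries) == stored_digests
```

A verifier recomputes the chain from the archived log and compares it with the digests recorded when the log was written. Any mismatch points to tampering or corruption.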

Syslog provides a simple framework for log entry generation, storage, and transfer that any operating system, security software, or application could use if designed to do so. Many log sources either use syslog as their native logging format or offer features that allow their log formats to be converted to syslog format. Each syslog message has only three parts. The first part specifies the facility and severity as numerical values. The second part of the message contains a timestamp and the hostname or IP address of the source of the log. The third part is the actual log message content.
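The three parts of a syslog message can be pulled apart with a short parser. The following Python sketch assumes the traditional BSD-style layout described in RFC 3164: `<PRI>TIMESTAMP HOSTNAME MESSAGE`. In that layout the numeric PRI value encodes facility × 8 + severity. Newer RFC 5424 messages use a different header and would need a different pattern. The function name is hypothetical.

```python
import re

# BSD-style (RFC 3164) layout: <PRI>TIMESTAMP HOSTNAME MESSAGE
SYSLOG_RE = re.compile(
    r"^<(?P<pri>\d{1,3})>"
    r"(?P<timestamp>[A-Z][a-z]{2} [ \d]\d \d{2}:\d{2}:\d{2}) "
    r"(?P<host>\S+) "
    r"(?P<message>.*)$"
)


def parse_syslog(line: str) -> dict:
    """Split a BSD-style syslog line into its three parts."""
    match = SYSLOG_RE.match(line)
    if match is None:
        raise ValueError(f"not a BSD-style syslog line: {line!r}")
    pri = int(match["pri"])
    return {
        # Part 1: PRI encodes facility * 8 + severity
        "facility": pri // 8,
        "severity": pri % 8,
        # Part 2: timestamp and source host
        "timestamp": match["timestamp"],
        "host": match["host"],
        # Part 3: free-form content with no standard fields
        "message": match["message"],
    }
```

For example, the line `<34>Oct 11 22:14:15 mymachine su: 'su root' failed for lonvick on /dev/pts/8` parses to facility 4 and severity 2, with `mymachine` as the host.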

No standard fields are defined within the message content; it is intended to be human-readable and not easily machine-parsable. This provides very high flexibility for log generators, which can place whatever information they deem important within the content field, but it makes automated analysis of the log data very challenging. A single source may use many different formats for its log message content, so an analysis program would need to be familiar with each format and be able to extract the meaning of the data within the fields of each format. This problem becomes much more challenging when log messages are generated by many sources. It might not be feasible to understand the meaning of all log messages, so analysis might be limited to keyword and pattern searches. Some organizations design their syslog infrastructures so that similar types of messages are grouped together or assigned similar codes, which can make log analysis automation easier to perform.
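Because message content has no standard fields, analysis often falls back to keyword and pattern searches, with one pattern per source format. The following Python sketch counts failed logins per user across two formats. The first pattern is modeled on OpenSSH-style "Failed password for" wording. The second format and both sample sets of values are hypothetical.

```python
import re
from collections import Counter

# One pattern per known message format; unknown formats simply do not match.
PATTERNS = [
    # Modeled on OpenSSH-style wording, e.g. "Failed password for root from ..."
    re.compile(r"Failed password for (?:invalid user )?(?P<user>\S+)"),
    # Hypothetical application format, e.g. "login failure: user=alice"
    re.compile(r"login failure: user=(?P<user>\S+)"),
]


def count_failed_logins(messages: list) -> Counter:
    """Count failed-login messages per user across several formats."""
    counts = Counter()
    for msg in messages:
        for pattern in PATTERNS:
            m = pattern.search(msg)
            if m:
                counts[m["user"]] += 1
                break  # stop at the first format that matches
    return counts
```

Every new log source requires another pattern. This illustrates why grouping similar message types, or assigning them consistent codes, makes automated analysis easier.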

As log security has become a greater concern, several implementations of syslog have been created that place greater emphasis on security. Most have been based on IETF’s RFC 3195, which was designed specifically to improve the security of syslog. Implementations based on this standard can support log confidentiality, integrity, and availability through several features, including reliable log delivery, transmission confidentiality protection, and transmission integrity protection and authentication.

Security information and event management (SIEM) products allow administrators to consolidate all security information logs. This consolidation ensures that administrators can perform analysis on all logs from a single resource rather than having to analyze each log on its separate resource. Most SIEM products support two ways of collecting logs from log generators:

Agentless: The SIEM server receives data from the individual hosts without needing to have any special software installed on those hosts. Some servers pull logs from the hosts, which is usually done by having the server authenticate to each host and retrieve its logs regularly. In other cases, the hosts push their logs to the server, which usually involves each host authenticating to the server and transferring its logs regularly. Regardless of whether the logs are pushed or pulled, the server then performs event filtering and aggregation and log normalization and analysis on the collected logs.

Agent-based: An agent program is installed on the host to perform event filtering and aggregation and log normalization for a particular type of log. The host then transmits the normalized log data to the SIEM server, usually on a real-time or near-real-time basis for analysis and storage. Multiple agents may need to be installed if a host has multiple types of logs of interest. Some SIEM products also offer agents for generic formats such as syslog and Simple Network Management Protocol (SNMP). A generic agent is used primarily to get log data from a source for which a format-specific agent and an agentless method are not available. Some products also allow administrators to create custom agents to handle unsupported log sources.

There are advantages and disadvantages to each method. The primary advantage of the agentless approach is that agents do not need to be installed, configured, and maintained on each logging host. The primary disadvantage is the lack of filtering and aggregation at the individual host level, which can cause significantly larger amounts of data to be transferred over networks and increase the amount of time it takes to filter and analyze the logs. Another potential disadvantage of the agentless method is that the SIEM server may need credentials for authenticating to each logging host. In some cases, only one of the two methods is feasible; for example, there might be no way to remotely collect logs from a particular host without installing an agent onto it.
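The bandwidth argument above comes down to host-level filtering and aggregation. The following Python sketch shows that step as an agent might perform it: drop low-priority events, then collapse identical events into a single counted record. It assumes syslog-style severities, where lower numbers are more severe (0 = emergency, 7 = debug). The function name and event fields are hypothetical.

```python
from collections import Counter


def filter_and_aggregate(events: list, threshold: int) -> list:
    """Keep events at least as severe as threshold and merge duplicates.

    events: dicts with "host", "severity", and "message" keys.
    Severity follows syslog numbering: lower means more severe.
    """
    # Filtering: discard events less severe than the threshold
    kept = (e for e in events if e["severity"] <= threshold)
    # Aggregation: identical (host, severity, message) tuples become one record
    counts = Counter((e["host"], e["severity"], e["message"]) for e in kept)
    return [
        {"host": h, "severity": s, "message": m, "count": n}
        for (h, s, m), n in counts.items()
    ]
```

With an agent, only the reduced output crosses the network. In the agentless approach, every raw event is transferred first and then reduced on the server.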

SIEM products usually include support for several dozen types of log sources, such as OSs, security software, application servers (e.g., web servers, email servers), and even physical security control devices such as badge readers. For each supported log source type, except for generic formats such as syslog, the SIEM products typically know how to categorize the most important logged fields. This significantly improves the normalization, analysis, and correlation of log data over that performed by software with a less granular understanding of specific log sources and formats. Also, the SIEM software can perform event reduction by disregarding data fields that are not significant to computer security, potentially reducing the SIEM software’s network bandwidth and data storage usage.
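Source-specific normalization can be pictured as a table of parsers keyed by source type. Each parser maps its native format onto a common schema and drops fields that are not security-relevant, which is a simple form of event reduction. The following Python sketch is illustrative only. Both input formats, the schema field names, and the sample values are hypothetical and do not reflect any SIEM product's actual parsers.

```python
from typing import Callable, Dict


def parse_badge_reader(raw: str) -> dict:
    # Hypothetical CSV: timestamp,door,badge_id,result,reader_firmware
    timestamp, door, badge_id, result, _firmware = raw.split(",")
    return {"time": timestamp, "subject": badge_id, "object": door,
            "outcome": "success" if result == "GRANTED" else "failure"}


def parse_web_auth(raw: str) -> dict:
    # Hypothetical key=value line: ts=... user=... path=... status=... agent=...
    fields = dict(pair.split("=", 1) for pair in raw.split())
    return {"time": fields["ts"], "subject": fields["user"],
            "object": fields["path"],
            "outcome": "success" if fields["status"] == "200" else "failure"}


# One parser per supported source type; firmware and agent fields are dropped.
PARSERS: Dict[str, Callable[[str], dict]] = {
    "badge_reader": parse_badge_reader,
    "web_auth": parse_web_auth,
}


def normalize(source_type: str, raw: str) -> dict:
    """Convert a raw record into the common schema, tagged with its source."""
    record = PARSERS[source_type](raw)
    record["source_type"] = source_type
    return record
```

Once records share one schema, correlation becomes a simple query. One example is matching a denied badge event with a web login by the same subject shortly afterward.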

Typically, system, network, and security administrators are responsible for managing logging on their systems, performing regular analysis of their log data, documenting and reporting the results of their log management activities, and ensuring that log data is provided to the log management infrastructure in accordance with the organization’s policies. In addition, some of the organization’s security administrators act as log management infrastructure administrators, with responsibilities such as the following:

Contact system-level administrators to get additional information regarding an event or to request that they investigate a particular event.

Identify changes needed to system logging configurations (e.g., which entries and data fields are sent to the centralized log servers, what log format should be used) and inform system-level administrators of the necessary changes.

Initiate responses to events, including incident handling and operational problems (e.g., a failure of a log management infrastructure component).

Ensure that old log data is archived to removable media and disposed of properly once it is no longer needed.

Cooperate with requests from legal counsel, auditors, and others.

Monitor the status of the log management infrastructure (e.g., failures in logging software or log archival media, failures of local systems to transfer their log data) and initiate appropriate responses when problems occur.

Test and implement upgrades and updates to the log management infrastructure’s components.

Maintain the security of the log management infrastructure.

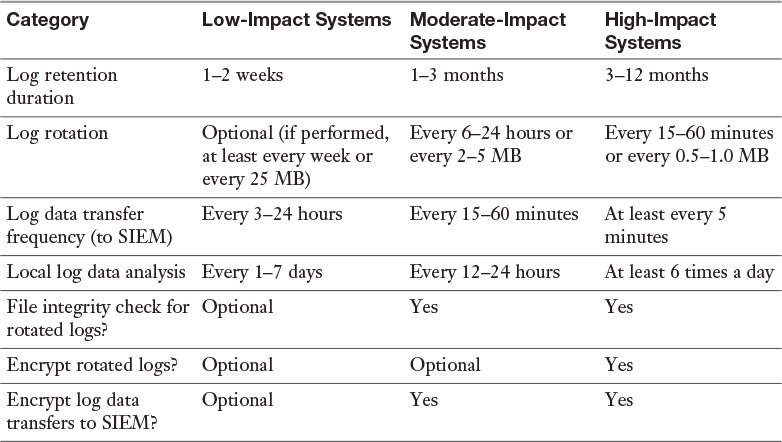

Organizations should develop policies that clearly define mandatory requirements and suggested recommendations for several aspects of log management, including log generation, log transmission, log storage and disposal, and log analysis. Table 6-2 gives examples of logging configuration settings that an organization can use. The types of values defined in Table 6-2 should only be applied to the hosts and host components previously specified by the organization as ones that must or should log security-related events.

Synthetic Transactions

Synthetic transaction monitoring, which is a type of proactive monitoring, is often preferred for websites and applications. It provides insight into the availability and performance of an application and warns of any potential issue before users experience any degradation in application behavior. It uses external agents to run scripted transactions against an application. For example, Microsoft’s System Center Operations Manager uses synthetic transactions to monitor databases, websites, and TCP port usage.

In contrast, real user monitoring (RUM), which is a type of passive monitoring, captures and analyzes every transaction of every application or website user. Unlike synthetic monitoring, which attempts to gain performance insights by regularly testing synthetic interactions, RUM cuts through the guesswork by seeing exactly how users are interacting with the application.
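A synthetic transaction is a scripted request whose expected result is known in advance. The following Python sketch, using only the standard library, performs one such check. It requests a page, confirms the status code and an expected piece of content, and times the response. The function name, the expected-text check, and the latency budget are illustrative assumptions. Products such as System Center Operations Manager script much richer multistep transactions.

```python
import time
import urllib.request


def synthetic_check(url: str, expected_text: str, max_seconds: float = 2.0) -> dict:
    """Run one scripted request and report availability and latency."""
    start = time.monotonic()
    try:
        with urllib.request.urlopen(url, timeout=max_seconds) as resp:
            status = resp.status
            body = resp.read().decode("utf-8", errors="replace")
    except OSError as exc:  # URLError, HTTPError, and timeouts are all OSErrors
        return {"ok": False, "error": str(exc), "seconds": time.monotonic() - start}
    elapsed = time.monotonic() - start
    # Healthy only if the page loaded, contains the expected content, and was fast
    ok = status == 200 and expected_text in body and elapsed <= max_seconds
    return {"ok": ok, "status": status, "seconds": elapsed}
```

In practice, a scheduler would run checks like this at regular intervals from several external locations and raise an alert when `ok` becomes `False`. This is how problems can be detected before real users notice them.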

Code Review and Testing

Code review and testing must occur throughout the entire system or application development life cycle. The goal of code review and testing is to identify bad programming patterns, security misconfigurations, functional bugs, and logic flaws.

In the planning and design phase, code review and testing includes architecture security reviews and threat modeling. In the development phase, code review and testing includes static source code analysis, manual code review, static binary code analysis, and manual binary review. Once an application is deployed, code review and testing involves penetration testing, vulnerability scanning, and fuzz testing.

Formal code review involves a careful and detailed process with multiple participants and multiple phases. In this type of code review, software developers attend meetings where each line of code is reviewed, usually using printed copies. Lightweight code review typically requires less overhead than formal code inspection, though it can be equally effective when done properly. Lightweight code review methods include the following:

Over-the-shoulder: One developer looks over the author’s shoulder as the author walks through the code.

Email pass-around: Source code is emailed to reviewers automatically after the code is checked in.

Pair programming: Two authors develop code together at the same workstation.

Tool-assisted code review: Authors and reviewers use tools designed for peer code review.

Black-box testing occurs when no internal details of the system are known. White-box testing occurs when the source code is known. Other types of testing include dynamic versus static testing and manual versus automatic testing.

Misuse Case Testing

Misuse case testing, also referred to as negative testing, tests an application to ensure that it can handle invalid input or unexpected behavior. This testing confirms that the application will not crash, and it improves the application’s quality by identifying its weak points. When misuse case testing is performed, organizations should expect to find issues. Misuse case testing should verify the following:

Required fields must be populated.

Fields with a defined data type can only accept data that is the required data type.

Fields with character limits allow only the configured number of characters.

Fields with a defined data range accept only data within that range.

Fields accept only valid data.
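The checks above map directly onto negative test cases. The following Python sketch pairs a hypothetical validator for a required "age" form field with a list of misuse inputs. The field, its 3-character limit, and its 0–120 range are all assumptions chosen for illustration. Each misuse input must be rejected with a `ValueError`. Any other exception propagates and exposes a crash.

```python
from typing import Optional


def validate_age(raw: Optional[str]) -> int:
    """Hypothetical validator for a required 'age' form field."""
    if raw is None or raw.strip() == "":
        raise ValueError("age is required")              # required field
    raw = raw.strip()
    if len(raw) > 3:
        raise ValueError("age exceeds 3 characters")     # character limit
    if not raw.isdigit():
        raise ValueError("age must be a whole number")   # data type
    value = int(raw)
    if not 0 <= value <= 120:
        raise ValueError("age out of range 0-120")       # data range
    return value


# Misuse cases: every one of these should be rejected cleanly.
MISUSE_INPUTS = [None, "", "   ", "abc", "12a", "-5", "4.5",
                 "999", "1000", "'; DROP TABLE users;--"]


def run_misuse_tests() -> list:
    """Return the misuse inputs that were wrongly accepted."""
    accepted = []
    for raw in MISUSE_INPUTS:
        try:
            validate_age(raw)
        except ValueError:
            continue  # expected: invalid input rejected
        accepted.append(repr(raw))
    return accepted
```

An empty result means every misuse case was rejected. A non-empty result lists exactly which invalid inputs slipped through.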

Test Coverage Analysis

Test coverage analysis uses test cases that are written against the application requirements specifications. Individuals involved in this analysis do not need to see the code to write the test cases. Once a document describing all the test cases is written, test groups report the percentage of test cases that were run, that passed, that failed, and so on. The application developer usually performs test coverage analysis as part of unit testing. Quality assurance groups use overall test coverage analysis to report test metrics and coverage according to the test plan.

Test coverage analysis creates additional test cases to increase coverage. It helps developers find areas of an application not exercised by a set of test cases. It helps in determining a quantitative measure of code coverage, which indirectly measures the quality of the application or product.

One disadvantage of code coverage measurement is that it can measure only the code that exists. It cannot reveal functionality that is missing or has not yet been written. In other words, this analysis examines structures and functions that already exist, not those that should exist but do not.
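This limitation is easy to demonstrate. The following sketch uses Python's standard `sys.settrace` hook as a deliberately simplified line-coverage tracer (real projects would normally use a dedicated coverage tool). The `transfer` function is hypothetical: two tests execute every one of its lines, yet the missing check for a negative amount is never reported, because coverage measurement can count only lines that were written.

```python
import sys
from types import FrameType
from typing import Any, Callable, Optional


def transfer(balance: int, amount: int) -> int:
    # Hypothetical function: rejects overdrafts but never checks for negative amounts.
    if amount > balance:
        raise ValueError("insufficient funds")
    return balance - amount


def executed_lines(func: Callable[..., Any], *args: Any) -> set[int]:
    """Run func(*args) under a trace hook and return the line numbers executed in func."""
    lines: set[int] = set()
    target = func.__code__

    def tracer(frame: FrameType, event: str, arg: Any) -> Optional[Callable]:
        if frame.f_code is not target:
            return None            # do not trace any other function
        if event == "line":
            lines.add(frame.f_lineno)
        return tracer              # keep receiving line events for func

    sys.settrace(tracer)
    try:
        func(*args)
    except ValueError:
        pass                       # an expected rejection is still executed code
    finally:
        sys.settrace(None)
    return lines


# Executable body lines of transfer: the if, raise, and return statements.
BODY_LINES = {transfer.__code__.co_firstlineno + offset for offset in (2, 3, 4)}

covered = executed_lines(transfer, 100, 30) | executed_lines(transfer, 100, 500)
print(f"line coverage: {100 * len(covered & BODY_LINES) // len(BODY_LINES)}%")
print(transfer(100, -50))  # the missing negative-amount check goes unreported
```

The script prints `line coverage: 100%` followed by `150`: a transfer of a negative amount silently increases the balance, and no coverage figure can reveal that the check was never written.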

Interface Testing

Interface testing evaluates whether an application’s systems or components correctly pass data and control to one another. It verifies whether module interactions are working properly and errors are handled correctly. Interfaces that should be tested include client interfaces, server interfaces, remote interfaces, graphical user interfaces (GUIs), application programming interfaces (APIs), external and internal interfaces, and physical interfaces.

GUI testing uses test cases to verify that a product’s GUI meets its specifications. API testing exercises APIs directly, both in isolation and as part of the end-to-end transactions performed during integration testing, to determine whether the APIs return the correct responses.
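An interface test checks the contract between two components: that the caller passes the data the callee expects and that errors crossing the boundary are handled. The following minimal sketch assumes two hypothetical components, an `OrderService` that depends on an inventory interface, and tests their interaction in isolation by substituting a stub for the real inventory component. All class and method names are illustrative.

```python
from typing import Protocol


class Inventory(Protocol):
    """Interface the order component expects from any inventory component."""
    def reserve(self, sku: str, quantity: int) -> bool: ...


class InventoryUnavailable(Exception):
    """Raised by an inventory component when it cannot be reached."""


class OrderService:
    def __init__(self, inventory: Inventory) -> None:
        self.inventory = inventory

    def place_order(self, sku: str, quantity: int) -> str:
        if quantity <= 0:
            return "rejected: invalid quantity"      # never pass bad data across the interface
        try:
            reserved = self.inventory.reserve(sku, quantity)
        except InventoryUnavailable:
            return "error: inventory unavailable"    # interface failure handled, not propagated
        return "confirmed" if reserved else "rejected: out of stock"


class StubInventory:
    """Test double that records every call and can simulate stock-outs or outages."""
    def __init__(self, in_stock: bool = True, fail: bool = False) -> None:
        self.in_stock = in_stock
        self.fail = fail
        self.calls: list[tuple[str, int]] = []

    def reserve(self, sku: str, quantity: int) -> bool:
        self.calls.append((sku, quantity))
        if self.fail:
            raise InventoryUnavailable()
        return self.in_stock


stub = StubInventory()
print(OrderService(stub).place_order("SKU-1", 2), stub.calls)
```

Recording the calls lets the test confirm exactly what data crossed the interface, while the `fail` and `in_stock` switches exercise the error paths that are hard to trigger against a live component.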

Collect Security Process Data

After security controls are tested, organizations must ensure that they collect the appropriate security process data. NIST SP 800-137 provides guidelines for developing an information security continuous monitoring (ISCM) program. Security professionals should ensure that security process data that is collected includes account management, management review, key performance and risk indicators, backup verification data, training and awareness, and disaster recovery and business continuity.

NIST SP 800-137

According to NIST SP 800-137, ISCM is defined as maintaining ongoing awareness of information security, vulnerabilities, and threats to support organizational risk management decisions. Organizations should take the following steps to establish, implement, and maintain ISCM:

1. Define an ISCM strategy based on risk tolerance that maintains clear visibility into assets, awareness of vulnerabilities, up-to-date threat information, and mission/business impacts.

2. Establish an ISCM program that includes metrics, status monitoring frequencies, control assessment frequencies, and an ISCM technical architecture.

3. Implement an ISCM program and collect the security-related information required for metrics, assessments, and reporting. Automate collection, analysis, and reporting of data where possible.

4. Analyze the data collected, report findings, and determine the appropriate responses. It may be necessary to collect additional information to clarify or supplement existing monitoring data.

5. Respond to findings with technical, management, and operational mitigating activities or acceptance, transference/sharing, or avoidance/rejection.

6. Review and update the monitoring program, adjusting the ISCM strategy and maturing measurement capabilities to increase visibility into assets and awareness of vulnerabilities, further enable data-driven control of the security of an organization’s information infrastructure, and increase organizational resilience.
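Because step 6 feeds its adjustments back into the ISCM strategy, the six steps form a continuous cycle rather than a one-time project. The following sketch models that cycle; the `IscmStep` member names and the `next_step` helper are illustrative assumptions, not code or terminology defined by NIST SP 800-137.

```python
from enum import IntEnum


class IscmStep(IntEnum):
    """The six ISCM steps, numbered in the order listed above."""
    DEFINE_STRATEGY = 1
    ESTABLISH_PROGRAM = 2
    IMPLEMENT_PROGRAM = 3
    ANALYZE_AND_REPORT = 4
    RESPOND = 5
    REVIEW_AND_UPDATE = 6


def next_step(step: IscmStep) -> IscmStep:
    """Return the step that follows; reviewing the program loops back to the strategy."""
    return IscmStep(step % len(IscmStep) + 1)


step = IscmStep.DEFINE_STRATEGY
for _ in range(7):                 # one full cycle plus the return to step 1
    print(step.value, step.name)
    step = next_step(step)
```

The modulo wrap-around is the design point: there is no terminal state, which reflects the "continuous" in continuous monitoring.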

Account Management

Account management is important because it involves the addition and deletion of accounts that are granted access to systems or networks. But account management also involves changing the permissions or privileges granted to those accounts. If account management is not monitored and recorded properly, organizations may discover that accounts have been created for the sole purpose of carrying out fraudulent or malicious activities. Two-person controls should be used with account management, often involving one administrator who creates accounts and another who assigns those accounts the appropriate permissions or privileges.
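A two-person control can be enforced in software by refusing to let the same administrator both create an account and assign its privileges, and by recording every action, including refused ones. The following is a minimal sketch of a hypothetical `AccountManager`; the class, its methods, and the in-memory audit log are assumptions for illustration, not the interface of any particular identity management product.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


class TwoPersonViolation(Exception):
    """Raised when one administrator tries to perform both halves of a two-person task."""


@dataclass
class Account:
    username: str
    created_by: str
    privileges: set[str] = field(default_factory=set)


class AccountManager:
    def __init__(self) -> None:
        self.accounts: dict[str, Account] = {}
        # Each entry: (UTC timestamp, administrator, action, target account)
        self.audit_log: list[tuple[str, str, str, str]] = []

    def _record(self, admin: str, action: str, target: str) -> None:
        self.audit_log.append((datetime.now(timezone.utc).isoformat(), admin, action, target))

    def create_account(self, admin: str, username: str) -> None:
        self.accounts[username] = Account(username, created_by=admin)
        self._record(admin, "create", username)

    def grant(self, admin: str, username: str, privilege: str) -> None:
        account = self.accounts[username]
        if admin == account.created_by:
            # Log the refused attempt before rejecting it so reviewers can see it.
            self._record(admin, "DENIED grant " + privilege, username)
            raise TwoPersonViolation(f"{admin} created {username} and cannot also grant privileges")
        account.privileges.add(privilege)
        self._record(admin, "grant " + privilege, username)


manager = AccountManager()
manager.create_account("admin_a", "jdoe")
manager.grant("admin_b", "jdoe", "payroll_read")   # a second administrator assigns privileges
```

Logging denied attempts as well as successful ones supports the monitoring and recording that the text calls for: an administrator who repeatedly tries to grant privileges to accounts they created is itself a signal worth reviewing.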

Escalation and revocation are two terms that are important to security professionals. Account escalation occurs when a user account is granted additional permissions because of new job duties or a complete job change. Security professionals should fully analyze a user’s needs before changing the current permissions or privileges, granting only the permissions or privileges that are needed for the new role and removing those that are no longer needed. Without such analysis, users may retain permissions they no longer need, which can create security issues because separation of duties is no longer maintained. For example, suppose a user is hired in the accounts payable department to print all vendor checks. Later this user is promoted to approve payments for the same accounts. If this user’s old permission to print checks is not removed, this single user would be able to both approve the checks and print them, which is a direct violation of separation of duties.
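The accounts payable example can be expressed as a separation-of-duties check that runs whenever a user’s role changes. The following sketch assumes hypothetical role names and permission strings. The key design choice is that a role change replaces the old permission set instead of adding to it, and any permission carried over must be listed explicitly, so the conflict check still catches a bad carry-over.

```python
from typing import Optional

# Hypothetical role-to-permission mapping for the accounts payable example
ROLE_PERMISSIONS: dict[str, frozenset[str]] = {
    "ap_clerk": frozenset({"print_checks", "view_vendors"}),
    "ap_approver": frozenset({"approve_payments", "view_vendors"}),
}

# Permission pairs that separation of duties says one person must never hold together
SOD_CONFLICTS: list[frozenset[str]] = [frozenset({"print_checks", "approve_payments"})]


def change_role(current: set[str], new_role: str,
                carry_over: Optional[set[str]] = None) -> set[str]:
    """Return permissions after a role change; the new role's set replaces the old one.

    Only permissions explicitly approved in carry_over (after review) are retained.
    """
    permissions = set(ROLE_PERMISSIONS[new_role])
    if carry_over:
        permissions |= carry_over & current
    return permissions


def sod_violations(permissions: set[str]) -> list[frozenset[str]]:
    """Return every conflicting pair that the permission set fully contains."""
    return [pair for pair in SOD_CONFLICTS if pair <= permissions]


clerk = set(ROLE_PERMISSIONS["ap_clerk"])
naive = clerk | ROLE_PERMISSIONS["ap_approver"]           # permissions only ever added
print(sod_violations(naive))                               # flags the check-printing conflict
print(sod_violations(change_role(clerk, "ap_approver")))   # no conflict after replacement
```

The naive approach of simply adding the approver’s permissions reproduces the violation described above, while the replacing approach leaves the promoted user with only `approve_payments` and `view_vendors`.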

Account revocation occurs when a user account is revoked because the user is no longer with the organization. Security professionals must keep in mind that there will be objects, such as files, that belong to this user. If the user account is simply deleted, access to the objects owned by the user may be lost, so it may be better to disable the account for a defined period before deleting it. Account revocation policies should also distinguish between revoking an account for a user who resigns from an organization and revoking an account for a user who is terminated.
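The disable-first approach can be sketched as a small planning function. The 90-day retention period, the rule that a terminated user is disabled immediately while a resigning user keeps access through the last working day, and the `plan_revocation` function itself are hypothetical policy choices for illustration; each organization sets its own values.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from enum import Enum


class Departure(Enum):
    RESIGNED = "resigned"
    TERMINATED = "terminated"


RETENTION = timedelta(days=90)      # hypothetical: keep disabled accounts for 90 days


@dataclass
class RevocationPlan:
    disable_at: datetime
    delete_after: datetime
    transfer_objects_to: str        # who takes ownership of the user's files and other objects


def plan_revocation(departure: Departure, notice_time: datetime,
                    last_day_end: datetime, manager: str) -> RevocationPlan:
    """Disable first and delete later so objects owned by the user are not lost."""
    if departure is Departure.TERMINATED:
        disable_at = notice_time    # terminated: remove access immediately
    else:
        disable_at = last_day_end   # resigned: access ends after the last working day
    return RevocationPlan(disable_at, disable_at + RETENTION, manager)


plan = plan_revocation(Departure.TERMINATED, datetime(2024, 3, 1, 9, 0),
                       datetime(2024, 3, 15, 17, 0), "mgr_smith")
print(plan)
```

Separating the disable date from the deletion date gives the organization a window to transfer ownership of the user’s objects before anything is permanently removed.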

Management Review

Management review of security process data should be mandatory. No matter how much data an organization collects on its security processes, the data is useless if no one ever reviews it. Guidelines and procedures should be established to ensure that management review occurs in a timely manner. Without regular review, even a minor security issue can quickly turn into a major security breach.

Key Performance and Risk Indicators

By using key performance and risk indicators derived from security process data, organizations can better identify when security risks are likely to occur. Key performance indicators (KPIs) allow organizations to determine whether levels of performance are below or above established norms. Key risk indicators (KRIs) allow organizations to identify whether certain risks are more or less likely to occur.
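A key performance indicator compares measured performance against an established norm, while a key risk indicator flags when a risk is becoming more likely. The following sketch assumes two hypothetical indicators: a patch-compliance KPI with a norm of 95 percent and a KRI that counts failed logins per day against a tolerance of 50. The indicator names and thresholds are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Indicator:
    name: str
    kind: str                # "KPI" or "KRI"
    threshold: float         # the established norm (KPI) or risk tolerance (KRI)
    higher_is_better: bool


def evaluate(indicator: Indicator, value: float) -> str:
    """Classify a measured value against the indicator's norm or tolerance."""
    if indicator.higher_is_better:
        ok = value >= indicator.threshold
    else:
        ok = value <= indicator.threshold
    if indicator.kind == "KPI":
        return "meets norm" if ok else "below norm"
    return "within tolerance" if ok else "risk elevated"


PATCH_COMPLIANCE = Indicator("patch compliance %", "KPI", 95.0, higher_is_better=True)
FAILED_LOGINS = Indicator("failed logins per day", "KRI", 50.0, higher_is_better=False)

print(evaluate(PATCH_COMPLIANCE, 88.0))   # performance has fallen below the norm
print(evaluate(FAILED_LOGINS, 140))       # a possible brute-force attempt is more likely
```

The `higher_is_better` flag lets one function handle both kinds of indicator, since a high patch-compliance rate is good but a high failed-login count is not.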

NIST has released the Framework for Improving Critical Infrastructure Cybersecurity, which focuses on using business drivers to guide cybersecurity activities and considering cybersecurity risks as part of the organization’s risk management processes. The framework consists of three parts: the Framework Core, the Framework Profiles, and the Framework Implementation Tiers.

The Framework Core is a set of cybersecurity activities, outcomes, and informative references that are common across critical infrastructure sectors, providing the detailed guidance for developing individual organizational profiles. The Framework Core consists of five concurrent and continuous functions: identify, protect, detect, respond, and recover.

Each function is further divided into categories and subcategories that describe specific cybersecurity outcomes. The Framework Profiles are developed by aligning these categories and subcategories with the organization’s business needs. Through use of the Framework Profiles, the framework helps an organization align its cybersecurity activities with its business requirements, risk tolerances, and resources.

The Framework Implementation Tiers provide a mechanism for organizations to view and understand the characteristics of their approach to managing cybersecurity risk. The following tiers are used: Tier 1, partial; Tier 2, risk informed; Tier 3, repeatable; and Tier 4, adaptive.

Organizations will continue to have unique risks—different threats, different vulnerabilities, and different risk tolerances—and how they implement the practices in the framework will vary. Ultimately, the framework is aimed at reducing and better managing cybersecurity risks and is not a one-size-fits-all approach to managing cybersecurity.
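One common way to use the Framework Profiles described above is a gap analysis that compares a Current Profile (the outcomes achieved today) with a Target Profile (the outcomes the business requires). The following sketch groups gaps by the five Core functions named in the text. The subcategory identifiers and the 0-to-2 achievement scale are hypothetical placeholders, not entries from the framework’s actual subcategory list.

```python
CORE_FUNCTIONS = ("identify", "protect", "detect", "respond", "recover")

# Hypothetical profiles: subcategory -> achievement level (0 = none, 2 = fully achieved)
CURRENT_PROFILE = {
    "identify.asset-inventory": 2, "protect.access-control": 1,
    "detect.monitoring": 0, "respond.planning": 1, "recover.planning": 1,
}
TARGET_PROFILE = {
    "identify.asset-inventory": 2, "protect.access-control": 2,
    "detect.monitoring": 2, "respond.planning": 1, "recover.planning": 2,
}


def profile_gaps(current: dict[str, int], target: dict[str, int]) -> dict[str, list[str]]:
    """Group subcategories whose current level is below target by Core function."""
    gaps: dict[str, list[str]] = {fn: [] for fn in CORE_FUNCTIONS}
    for subcategory, wanted in target.items():
        if current.get(subcategory, 0) < wanted:      # missing entries count as level 0
            gaps[subcategory.split(".")[0]].append(subcategory)
    return {fn: subs for fn, subs in gaps.items() if subs}


for function, subcategories in profile_gaps(CURRENT_PROFILE, TARGET_PROFILE).items():
    print(function, subcategories)
```

Because each organization’s target differs, the same function yields different gaps for different organizations, which mirrors the point that the framework is not a one-size-fits-all approach.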

Backup Verification Data

Any security process data that is collected should also be backed up. Security professionals should ensure that their organization has the appropriate backup and restore guidelines in place for all security process data. If data is not backed up properly, a failure can result in vital data being lost forever. In addition, personnel should test the restore process on a regular basis to make sure it works as it should. If an organization is unable to restore a backup properly, the organization might as well not have the backup.
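Testing a restore means confirming that the restored data matches the original, not just that a backup file exists. The following sketch uses only the Python standard library to copy a file to a backup location, restore it to a separate scratch location, and compare SHA-256 digests. The directory layout and function names are assumptions for illustration; a real backup product would verify its own backup format.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large files do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def backup(source: Path, backup_dir: Path) -> Path:
    """Copy source into backup_dir, preserving metadata, and return the backup path."""
    backup_dir.mkdir(parents=True, exist_ok=True)
    return Path(shutil.copy2(source, backup_dir / source.name))


def verify_restore(backup_file: Path, original_digest: str) -> bool:
    """Restore to a scratch directory and confirm the contents are unchanged."""
    with tempfile.TemporaryDirectory() as scratch:
        restored = Path(shutil.copy2(backup_file, Path(scratch) / backup_file.name))
        return sha256_of(restored) == original_digest


with tempfile.TemporaryDirectory() as work:
    log = Path(work) / "security_events.log"
    log.write_text("2024-03-01 login failure user=jdoe\n")
    digest = sha256_of(log)                 # record the digest at backup time
    copy = backup(log, Path(work) / "backups")
    print("restore verified:", verify_restore(copy, digest))
```

Recording the digest when the backup is taken is what makes the later restore test meaningful; if the backup copy is corrupted or altered, `verify_restore` returns `False`.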

Training and Awareness

All personnel must understand the security assessment and testing strategies that an organization employs. Technical personnel may need detailed training on security assessment and testing, including security control testing and collecting security process data. Other personnel, however, need only awareness training on this subject. Security professionals should help personnel understand what type of assessment and testing occurs, what is captured by this process, and why this is important to the organization. Management must fully support the security assessment and testing strategy and must communicate to all personnel and stakeholders the importance of this program.

Disaster Recovery and Business Continuity

Any disaster recovery and business continuity plans that an organization develops must consider security assessment and testing, security control testing, and security process data collection. Often when an organization goes into disaster recovery mode, personnel do not think about these processes. As a matter of fact, ordinary security controls often fall by the wayside at such times. A security professional is responsible for ensuring that this does not happen. Security professionals involved in developing the disaster recovery and business continuity plans must cover all these areas.

Analyze and Report Test Outputs

Personnel should understand the automated and manual reporting that can be done as part of security assessment and testing. Output must be reported in a timely manner to management in order to ensure that they understand the value of this process. It may be necessary to provide different reports depending on the level of audience understanding. For example, high-level management may need only a summary of findings. But technical personnel should be given details of the findings to ensure that they can implement the appropriate controls to mitigate or prevent any risks found during security assessment and testing.
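The same set of findings can feed reports for different audiences. The following sketch assumes a hypothetical list of findings, each with a title, severity, affected host, and recommended fix. It produces a one-line count by severity for management and a severity-ordered detail listing for technical staff; the severity scale and field names are illustrative.

```python
from collections import Counter
from dataclasses import dataclass

SEVERITY_ORDER = ("critical", "high", "medium", "low")


@dataclass(frozen=True)
class Finding:
    title: str
    severity: str      # one of SEVERITY_ORDER
    host: str
    remediation: str


def management_summary(findings: list[Finding]) -> str:
    """High-level summary: totals only, most severe first."""
    counts = Counter(f.severity for f in findings)
    parts = [f"{counts[s]} {s}" for s in SEVERITY_ORDER if counts[s]]
    return f"{len(findings)} findings: " + ", ".join(parts)


def technical_detail(findings: list[Finding]) -> list[str]:
    """Detailed listing so technical staff can implement the fixes, most severe first."""
    ranked = sorted(findings, key=lambda f: SEVERITY_ORDER.index(f.severity))
    return [f"[{f.severity.upper()}] {f.host}: {f.title} -> {f.remediation}" for f in ranked]


FINDINGS = [
    Finding("Outdated TLS configuration", "medium", "web01", "Disable TLS 1.0 and 1.1"),
    Finding("Default admin password", "critical", "db01", "Set a unique password"),
    Finding("Missing OS patches", "high", "web01", "Apply pending updates"),
]

print(management_summary(FINDINGS))
for line in technical_detail(FINDINGS):
    print(line)
```

Generating both reports from a single source of findings keeps the management summary and the technical detail consistent with each other.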

Personnel may need special training on how to run manual reports and how to analyze the report outputs.

Internal and Third-Party Audits

Organizations should conduct internal and third-party audits as part of any security assessment and testing strategy. These audits should test all security controls that are currently in place. The following are some guidelines to consider as part of a good security audit plan:

At minimum, perform annual audits to establish a security baseline.

Determine your organization’s objectives for the audit and share them with the auditors.

Set the ground rules for the audit, including the dates/times of the audit, before the audit starts.

Choose auditors who have security experience.

Involve business unit managers early in the process.

Ensure that auditors rely on experience, not just checklists.

Ensure that the auditor’s report reflects risks that the organization has identified.

Ensure that the audit is conducted properly.

Ensure that the audit covers all systems and all policies and procedures.

Examine the report when the audit is complete.

Many regulations today require that audits occur. Organizations used to rely on Statement on Auditing Standards (SAS) 70, which provided auditors information and verification about data center controls and processes related to data center users and their financial reporting. A SAS 70 audit verified that the controls and processes set in place by a data center are actually followed. The Statement on Standards for Attestation Engagements (SSAE) 16 is a newer standard that verifies the controls and processes and also requires a written assertion regarding the design and operating effectiveness of the controls being reviewed.

An SSAE 16 audit results in a Service Organization Control (SOC) 1 report. This report focuses on internal controls over financial reporting. There are two types of SOC 1 reports:

SOC 1, Type 1 report: Focuses on the auditors’ opinion of the accuracy and completeness of the data center management’s design of controls, system, and/or service.

SOC 1, Type 2 report: Includes the Type 1 report as well as an audit of the effectiveness of controls over a certain time period, normally between six months and a year.

Two other report types are also available: SOC 2 and SOC 3. Both of these audits provide benchmarks for controls related to the security, availability, processing integrity, confidentiality, or privacy of a system and its information. A SOC 2 report includes service auditor testing and results, and a SOC 3 report provides only the system description and auditor opinion. A SOC 3 report is for general use and provides a level of certification for data center operators that assures data center users of facility security, high availability, and process integrity. Table 6-3 briefly compares the three types of SOC reports.

Exam Preparation Tasks

Review All Key Topics

Review the most important topics in this chapter, noted with the Key Topics icon in the outer margin of the page. Table 6-4 lists a reference of these key topics and the page numbers on which each is found.

Table 6-4 Key Topics for Chapter 6

Define Key Terms

Define the following key terms from this chapter and check your answers in the glossary:

information security continuous monitoring (ISCM)

Review Questions

1. For which of the following penetration tests does the testing team know an attack is coming but have limited knowledge of the network systems and devices and only publicly available information?

a. target test

b. physical test

c. blind test

d. double-blind test

2. Which of the following is NOT a guideline according to NIST SP 800-92?

a. Organizations should establish policies and procedures for log management.

b. Organizations should create and maintain a log management infrastructure.

c. Organizations should prioritize log management appropriately throughout the organization.

d. Choose auditors with security experience.

3. According to NIST SP 800-92, which of the following are facets of log management infrastructure? (Choose all that apply.)

a. general functions (log parsing, event filtering, and event aggregation)

b. storage (log rotation, log archival, log reduction, log conversion, log normalization, log file integrity checking)

c. log analysis (event correlation, log viewing, log reporting)

d. log disposal (log clearing)

4. What are the two ways of collecting logs using security information and event management (SIEM) products, according to NIST SP 800-92?

a. passive and active

b. agentless and agent-based

c. push and pull

d. throughput and rate

5. Which monitoring method captures and analyzes every transaction of every application or website user?

a. RUM

b. synthetic transaction monitoring

c. code review and testing

d. misuse case testing

6. Which type of testing is also known as negative testing?

a. RUM

b. synthetic transaction monitoring

c. code review and testing

d. misuse case testing

7. What is the first step of the information security continuous monitoring (ISCM) plan, according to NIST SP 800-137?

a. Establish an ISCM program.

b. Define the ISCM strategy.

c. Implement an ISCM program.

d. Analyze the data collected.

8. What is the second step of the information security continuous monitoring (ISCM) plan, according to NIST SP 800-137?

a. Establish an ISCM program.

b. Define the ISCM strategy.

c. Implement an ISCM program.

d. Analyze the data collected.

9. Which of the following is NOT a guideline for internal and third-party audits?

a. Choose auditors with security experience.

b. Involve business unit managers early in the process.

c. At minimum, perform bi-annual audits to establish a security baseline.

d. Ensure that the audit covers all systems and all policies and procedures.

10. Which SOC report should be shared with the general public?

a. SOC 1, Type 1

b. SOC 1, Type 2

c. SOC 2

d. SOC 3

Answers and Explanations

1. c. With a blind test, the testing team knows an attack is coming but has limited knowledge of the network systems and devices and only publicly available information. A target test occurs when the testing team and the organization’s security team are given maximum information about the network and the type of attack that will occur. A physical test is not a type of penetration test. It is a type of vulnerability assessment. A double-blind test is like a blind test except that the organization’s security team does not know an attack is coming.

2. d. NIST SP 800-92 does not include any information regarding auditors. So the “Choose auditors with security experience” option is NOT a guideline according to NIST SP 800-92.

3. a, b, c, d. According to NIST SP 800-92, log management functions should include general functions (log parsing, event filtering, and event aggregation), storage (log rotation, log archival, log reduction, log conversion, log normalization, log file integrity checking), log analysis (event correlation, log viewing, log reporting), and log disposal (log clearing).

4. b. The two ways of collecting logs using security information and event management (SIEM) products, according to NIST SP 800-92, are agentless and agent-based.

5. a. Real user monitoring (RUM) captures and analyzes every transaction of every application or website user.

6. d. Misuse case testing is also known as negative testing.

7. b. The steps in an ISCM program, according to NIST SP 800-137, are:

1. Define an ISCM strategy.

2. Establish an ISCM program.

3. Implement an ISCM program.

4. Analyze the data collected, and report findings.

5. Respond to findings.

6. Review and update the monitoring program.

8. a. The steps in an ISCM program, according to NIST SP 800-137, are:

1. Define an ISCM strategy.

2. Establish an ISCM program.

3. Implement an ISCM program.

4. Analyze the data collected, and report findings.

5. Respond to findings.

6. Review and update the monitoring program.

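9. c. The guideline calls for audits at least annually, so performing bi-annual audits is NOT a guideline. The following are guidelines for internal and third-party audits: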

At minimum, perform annual audits to establish a security baseline.

Determine your organization’s objectives for the audit and share them with the auditors.

Set the ground rules for the audit, including the dates/times of the audit, before the audit starts.

Choose auditors who have security experience.

Involve business unit managers early in the process.

Ensure that auditors rely on experience, not just checklists.

Ensure that the auditor’s report reflects risks that the organization has identified.

Ensure that the audit is conducted properly.

Ensure that the audit covers all systems and all policies and procedures.

Examine the report when the audit is complete.

10. d. SOC 3 is the only SOC report that should be shared with the general public.