CHAPTER 12

Chaos, Noise, and Epistemology in the Digital Age

In 1997, the most formidable chess player in the world was defeated by a machine in a six‐game match. The world watched with bated breath as Garry Kasparov, who had held the title of world champion for 12 years, represented humankind against the machine. For those packed into the sold‐out seats in the television studio and the millions of viewers who tuned in to the games at home,1 this had little to do with computation, simulation, or pattern recognition. Because chess is considered both an art and a science, blending left‐ and right‐brain thinking, the match stood in for a contest of human intelligence versus machine intelligence.

Thirteen years after The Terminator was released, an emblem of human genius had been conquered by machines.

Chaos, Noise, and the Three Logical Fallacies

That story and the form of its telling are familiar to many. The tempting takeaway is that machines have reached another critical milestone in catching up to human intelligence, but further examination reveals three fallacies commonly at play in this assumption.

First, the slippery slope fallacy: an argument in which a party asserts or assumes that a small first step leads to a chain of related events, culminating in some significant (usually negative) effect. There are those who raise this argument with each step technology takes in any direction.

But human consciousness is irreducibly complex. The path to recreating consciousness is not an accumulation of use cases or systems that can then be assembled into a human‐like intelligence. The machine that beat Garry Kasparov (IBM's Deep Blue) took 12 years to develop. It started in 1985 as a dissertation project by two researchers at Carnegie Mellon University, which landed them both positions at IBM Research in 1989. There the team grew to six researchers, who ultimately developed a machine with 32 processors that could analyze 200 million possible chess positions per second.2 From a computer science perspective, this was a phenomenal breakthrough. This machine cannot, however, be combined with the machine that beat the world Go champion (DeepMind's AlphaGo) to simulate consciousness, nor was it designed with that end in mind.

Second, a surprising number of people fall prey to the non sequitur fallacy when it comes to machines: an argument in which a conclusion does not follow logically from what precedes it.

When an article boasts of a machine's ability to analyze 200 million positions per second, or puts it in terms of how many years it would take a human to analyze the same number of positions, the non sequitur argument, boiled down to its simplest form, is: machines analyze chess positions faster than humans do; therefore machines can learn poetry and become conscious. An error remains even in the construct of this statement (beyond the obvious logical fallacy)—did you catch it? The use of the word “analyze” personifies the machine. Put more precisely and simply: machines apply math or logic programmed by humans faster than humans do; therefore machines can learn poetry and become conscious.

The ability to perform mathematical calculations at lightning speed, weighing probable outcomes based on a predetermined list of objectives and rules set by human experts, is impressive and useful, but it does not mean that the machine understands chess, nor can it venture beyond chess to any other pool of knowledge, any more than a fast calculator can understand the underlying concept of the oranges divided among friends in a math problem, let alone the concepts of friends or the classroom.
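To make the point concrete, here is a minimal sketch of what "weighing outcomes against human-written rules and objectives" can look like in code. It is not Deep Blue's program. It uses a deliberately tiny, hypothetical stand-in game (players alternately remove one to three stones from a pile, and whoever takes the last stone wins), and the names `legal_moves`, `minimax`, and `best_move` are illustrative assumptions. The search technique shown is plain minimax.

```python
from functools import lru_cache

# Hypothetical toy game, chosen only for illustration: players alternately
# remove 1 to 3 stones from a pile; whoever takes the last stone wins.
MAX_TAKE = 3


def legal_moves(stones: int) -> list[int]:
    """Human-supplied rule: which moves are allowed from this position."""
    return [take for take in range(1, MAX_TAKE + 1) if take <= stones]


@lru_cache(maxsize=None)
def minimax(stones: int, maximizing: bool) -> int:
    """Return +1 if the maximizing player wins with best play, else -1."""
    if stones == 0:
        # Human-supplied objective: the player who just moved took the last
        # stone and won, so the player now to move has lost.
        return -1 if maximizing else 1
    scores = [minimax(stones - take, not maximizing) for take in legal_moves(stones)]
    # The maximizer picks the best score for itself; the minimizer the worst.
    return max(scores) if maximizing else min(scores)


def best_move(stones: int) -> int:
    """Pick the move whose resulting position scores highest for the mover."""
    return max(legal_moves(stones), key=lambda take: minimax(stones - take, False))
```

Calling `best_move(7)` returns `3`, because leaving four stones guarantees a win in this toy game. Nothing in the program knows what a stone, a pile, or winning means. It enumerates positions and compares numbers according to rules and a scoring objective that a person wrote down, which is the distinction the paragraph above draws.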

Third, the appeal to authority fallacy: the argument that if a credible source believes something, then it must be true.

This is a tricky topic because some of the voices that have spoken publicly about their concerns with regard to the development of artificial intelligence are regarded as heroes, and have deep credibility in their respective fields. The fog of confusion that often surrounds press about statements from these industry or academic leaders can be lifted with two questions.

First, what did they actually say?

Second, what is their field of expertise?

On December 2, 2014, the British Broadcasting Corporation (BBC) released an article titled: “Stephen Hawking Warns Artificial Intelligence Could End Mankind.” The online post included a five‐minute snippet of an interview with Professor Stephen Hawking, one of Britain's preeminent scientists, transcribed as follows:

Interviewer (Rory Cellan‐Jones):

Stephen Hawking:

Within hours, dozens of articles were released by reputable journalists from established media outlets. Here are some of their titles and first lines:

- “Does Rampant AI Threaten Humanity?,” BBC News

“Pity the poor meat bags.”4

- “‘Artificial Intelligence Could Spell End of Human Race’ – Stephen Hawking,” The Guardian

“Technology will eventually become self‐aware and supersede humanity, says astrophysicist.”5

- “5 Very Smart People Who Think Artificial Intelligence Could Bring the Apocalypse,” Time

“On the list of doomsday scenarios that could wipe out the human race, super‐smart killer robots rate pretty high in the public consciousness.”6

- “Beware the Robots, Says Hawking,” Forbes

“British physicist Stephen Hawking has warned of the apocalyptic threat artificial intelligence (AI) poses to people[…]”7

- “Sure, Artificial Intelligence May End Our World, But That Is Not the Main Problem,” Wired

“The robots will rise, we're told. The machines will assume control. For decades we have heard these warnings and fears about artificial intelligence taking over and ending humankind.”8

Those are some heavy titles, which undoubtedly received a significant degree of attention. The goal of sharing these examples is not to address or change the media, but to equip individuals to find the signal in the noise, which requires returning to what Professor Hawking actually said: “I think the development of full artificial intelligence could spell the end of the human race. Once humans develop artificial intelligence, it would take off on its own […].” There are three important qualifiers in his statement: “full,” “could,” and “would.” Full artificial intelligence is used interchangeably with artificial general intelligence, defined as a machine capable of understanding the world as well as any human, and with the same capacity to learn how to carry out any range of tasks without any additional programming. As of this writing, this kind of system does not exist, nor would the number of researchers and technological breakthroughs required to precede it be economically viable. Professor Hawking is speaking of a theoretical situation, as indicated by the use of “could” and “would,” in which the development of full artificial intelligence could spell the end of the human race, as the artificial intelligence would take off on its own.

Having answered the question “What did they actually say?,” the second question is “What is their field of expertise?” If the foremost leader in chemical engineering made a prediction about artificial intelligence, it would be easy, though misguided, to generalize credibility from their field of expertise to the field of computer science, and vice versa. This is not to say that the prediction would inherently be incorrect, but that more digging is required and the prediction should not be taken at face value, particularly when it comes to an existential topic.

Epistemology in the Digital Age

Michelangelo Buonarroti's quotation, “I saw the angel in the marble and carved until I set him free,” is surprisingly applicable to the discussion of humans and machines. If a person's mental picture of a given topic, such as artificial intelligence, begins as a block of marble, an article title alone can be enough to chisel into the stone. A conversation with a colleague, a movie or television show, another article, a rumor about a project at work, a post on social media—as these accumulate, a rough shape begins to emerge. This becomes dangerous when decisions are made based on these rough sketches, especially if each attempt to chisel into the marble is not closely examined.

There are two methods by which to examine inbound information. The first is through zooming out to the whole picture. Returning to the story of Garry Kasparov and Deep Blue, there is another side of the picture that can be illuminated in asking why IBM would invest in an eight‐year journey with six researchers to beat a human at chess. The answer? To build and demonstrate computing capability. Put another way: to create more products, improve existing products, gain visibility, and deepen credibility.

IBM did this again with Jeopardy! in 2011,9 and this approach has become an established marketing tactic for technology companies, as it is an engaging way to demonstrate meaningful technological breakthroughs. In 2013, DeepMind built and demonstrated its breakthroughs in reinforcement learning by creating a model that surpassed human expert abilities on Atari games, and in 2016 its computer program AlphaGo beat a Go world champion.10 The important takeaway is that these are not a part of a cohesive, coordinated advancement of artificial intelligence toward the goal of the singularity, as is often imagined or misinterpreted. Rather, corporations, academics, and research institutes are economically and reputationally incentivized to demonstrate the power of their platforms and research breakthroughs in memorable ways.

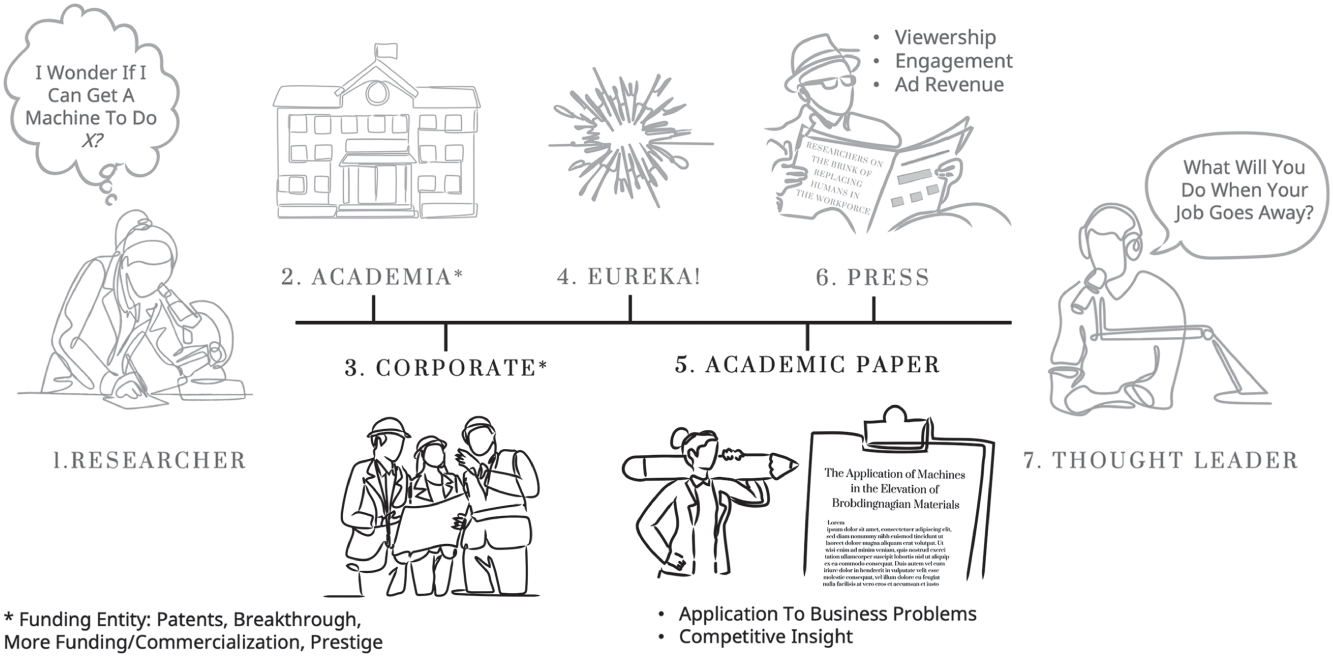

A second application of zooming out to the whole picture can be found in the current state of the life cycle of research, as visualized in Figure 12.1. Research almost always begins with a question of capability. Can a machine consistently beat a human at chess? Can a machine be used to detect cancer? Can a system be created that can accurately detect a spill in a manufacturing plant?

Once the scope of the research has been defined, the researchers then need to secure funding, whether through grants from governments, nonprofits, business partners, or, in the case of corporate researchers, from a central funding entity and/or business units who hope to apply the research to their business.

The eureka moment can arrive within months, after 12 years as in the case of Deep Blue, or it may never come. Leadership in research requires the agility to scrap and learn from research when it becomes clear that it is not feasible or viable to continue pursuing it, as well as the inverse: the resilience to continue funding research against uncertainty.

After the eureka moment, with documented certainty that the breakthrough is repeatable and demonstrable, an academic paper is written and submitted to a peer‐reviewed publication: in this case, “The Application of Machines in the Elevation of Brobdingnagian Materials,” or “Using Machines to Lift Heavy Things.” For the sake of this example, it would be fair to assume that the researchers, as is commonly observed, speak to potential applications of their research in industry, such as “This type of technology could contribute to human safety in manufacturing and warehousing environments by reducing the need for humans to lift objects greater than 50 pounds.”

Figure 12.1 What Researchers See

From here, the research can take one or more of five paths, without consistent correlation to the quality of the research:

- Path 1: Business leaders endeavor to apply the research to real‐world applications and succeed.

- Path 2: Business leaders endeavor to apply the research to real‐world applications and fail.

- Path 3: Journalists write compelling, accurate accounts of the research.

- Path 4: Journalists write compelling, inaccurate accounts of the research.

- Path 5: The academic paper lives on in archives, but is neither discussed nor applied more broadly.

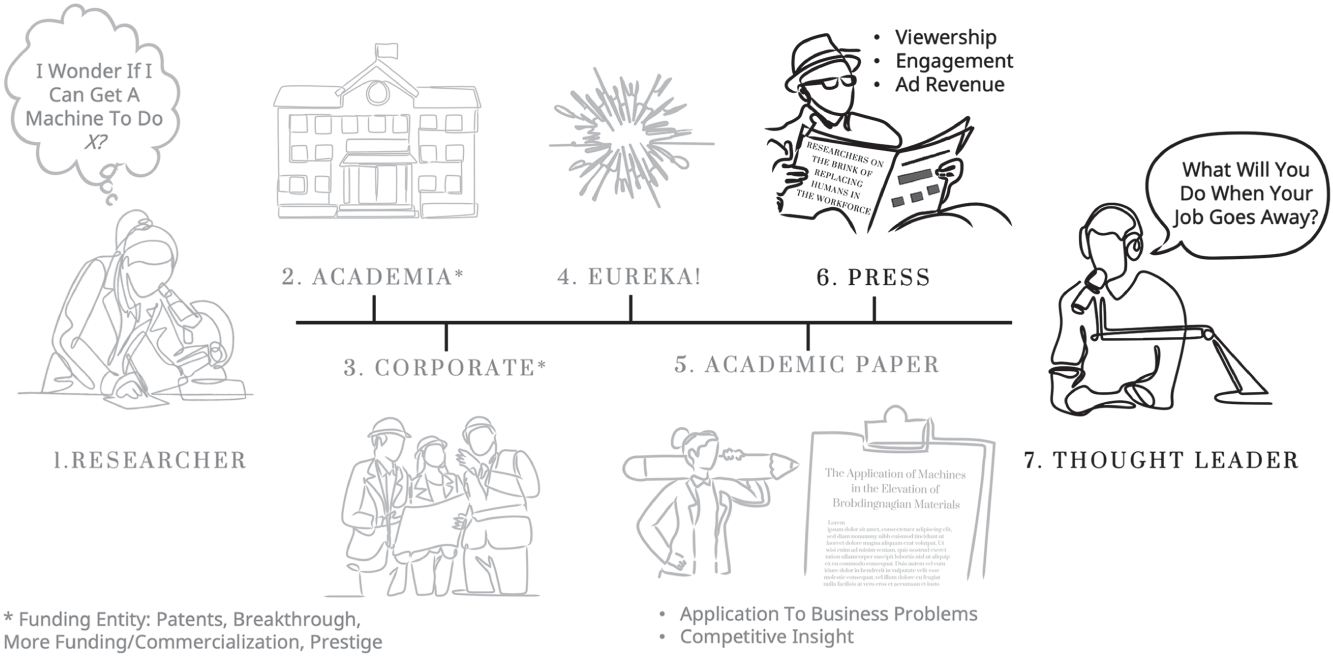

Some research travels concurrently down Paths 1–4. Others, such as in Figure 12.2, jump immediately to Path 4. Thought leaders subscribing to those media channels to gather content for potential posts may or may not see the original academic paper. It is therefore up to readers to dig deeper before allowing an attention‐grabbing post or article to influence their perception of a topic.

The second method by which to examine inbound information is through the lens of economic incentive. In the next chapter, we discuss testing economic incentives in business and advisory relationships, but for the purpose of navigating noise and chaos as they pertain to announcements, press releases, news articles, and posts online, the evaluation of economic incentive is critical to ascertaining the validity of the information.

Figure 12.2 What Business Leaders See

Consider, for example, if a professor at a prestigious institution were to write a paper titled “Why We Need to Stop Investing in Artificial Intelligence Research Immediately.”

Now consider if a vice president at a traditional automation company had written the paper instead.

How about an independent thought leader and keynote speaker?

When it is laid out as above, it becomes fairly clear where economic incentives intertwine with the message, regardless of its validity.

The vice president, for example, may be writing the paper in an effort to thwart research in a technology that is undermining the traditional automation business.

The thought leader may have chosen that title for its stickiness, and may pivot from the dogmatic title to a more general discussion about investments in artificial intelligence research, review trends, and end with a rhetorical question or call to action.

The professor is compelling because, depending on their position at the institution, they would either have no incentive to write such a paper, or there may even be a disincentive, which increases the likelihood that the professor truly believes in the premise of the paper.

Zooming out to see the whole picture in combination with the lens of economic incentive is the first step in gaining clarity amidst the sea of fog that has enveloped organizations and society in the digital age.

Figure 12.3 What the Average Professional Sees

These concepts and frameworks apply to the required evolution of epistemology in the digital era. Mastering the art of epistemology will be a key factor for effective leadership, as well as on the personal level, for ensuring that one is considering the correct existential questions in developing and maintaining a sense of self in the context of the human–machine paradigm. Epistemology, as defined by Merriam‐Webster's dictionary, is “the study or a theory of the nature and grounds of knowledge especially with reference to its limits and validity.”11 Or, as philosophers tend to prefer (and as is much easier to remember), epistemology can be summarized as “How do you know that you know what you know?”

Reviewing each new piece of information or perspective, be it an article, a conversation, a book, or a meeting, and determining whether the three logical fallacies are at play, zooming out to the whole picture, and examining the economic incentive of the source of the information and of the subjects (both individuals and organizations) will increase confidence and clarity in what you know and whom to trust.

Notes

- 1 IBM, “Deep Blue,” IBM's 100 Icons of Progress, https://www.ibm.com/ibm/history/ibm100/us/en/icons/deepblue (accessed November 17, 2022).

- 2 Ibid.

- 3 R. Cellan‐Jones, “Stephen Hawking Warns Artificial Intelligence Could End Mankind,” BBC News, December 2, 2014, https://www.bbc.com/news/technology-30290540 (accessed September 12, 2022).

- 4 W. Ward, “Does Rampant AI Threaten Humanity?,” BBC News, December 2, 2014, https://www.bbc.com/news/technology-30293863 (accessed September 12, 2022).

- 5 S. Clark, “Artificial Intelligence Could Spell End of Human Race: Stephen Hawking,” The Guardian, December 2, 2014, https://www.theguardian.com/science/2014/dec/02/stephen-hawking-intel-communication-system-astrophysicist-software-predictive-text-type (accessed September 12, 2022).

- 6 V. Luckerson, “5 Very Smart People Who Think Artificial Intelligence Could Bring the Apocalypse,” Time, December 2, 2014, https://time.com/3614349/artificial-intelligence-singularity-stephen-hawking-elon-musk/ (accessed September 12, 2022).

- 7 P. Rodgers, “Beware the Robots, Says Hawking,” Forbes, December 2, 2014, https://www.forbes.com/sites/paulrodgers/2014/12/03/computers-will-destroy-humanity-warns-stephen-hawking/ (accessed September 12, 2022).

- 8 M. Coeckelbergh, “Sure, Artificial Intelligence May End Our World, But That Is Not the Main Problem,” Wired, December 4, 2014, https://www.wired.com/2014/12/armageddon-is-not-the-ai-problem/ (accessed September 12, 2022).

- 9 IBM, “Deep Blue.”

- 10 DeepMind, AlphaGo, https://www.deepmind.com/research/highlighted-research/alphago (accessed November 17, 2022).

- 11 “Epistemology,” Merriam‐Webster dictionary, https://www.merriam-webster.com/dictionary/epistemology.