Practical agile integration

In this chapter we progressively build up a scenario that demonstrates use of the integration capabilities to solve some common modern application design challenges using agile integration techniques.

We begin by exposing data from a traditional data source over RESTful APIs. Then, we gradually build up to more sophisticated ways of making that same data, and data from other applications, available via modern microservice-style components.

We have arranged each section to be completely independent of the others, so there is no need to work through the sections in order. Each section is self-sufficient, and provides everything needed to build it out.

Note: The solution in each section is complete for a specific scenario. However, none of these solutions forms a global, end-to-end solution for agile integration.

This chapter has the following sections. See 6.1, “Introduction” on page 170 for a detailed description of each section:

•Introduction

•Application Integration to front a data store with a basic API

•Expose an API using API Management

•Messaging for reliable asynchronous data update commands

•Consolidate the new IBM MQ based command pattern into the API

•Advanced API security

•Create event stream from messaging

•Perform event-driven SaaS integration

•Implementing a simple hybrid API

•Implement event sourced APIs

•REST and GraphQL based APIs

•API testing

•Large file movement using the claim check pattern

6.1 Introduction

The scenarios for this chapter are based on a common theme we see with customers. We often begin with a requirement from the business to provide access to a back-end system by fronting it with an API. However, this apparently simple requirement grows in complexity over time, typically to cater to increasingly challenging non-functional requirements such as performance and availability.

Our scenario begins with the basic requirement to make the data from a table in a traditional database available as an API. In our scenario this is a simple single table that holds information about "Products." But in a real scenario it could be multiple tables that are joined in various ways to provide product catalog information. We achieve this integration in its most basic form in section 6.2, “Application Integration to front a data store with a basic API” on page 173. Deliberately, we do this using the fine-grained cloud native style deployment that is fundamental to agile integration as discussed earlier in this book. This ensures that integrations are isolated from one another so they can be changed and scaled independently. This improves agility, resilience, and optimization of the underlying resources.

As shown in Figure 6-1, we then look at improving the exposure of the API, using API management to make the API more discoverable, enable consumers to self-subscribe to use APIs, and enable us to track and control usage of the APIs. This is the topic of 6.3, “Expose an API using API Management” on page 189.

Figure 6-1 Improving the exposure of the API using API management

As a result, we now have a self-contained business component that provides API-based access to product data, that can easily be reused to bring that data into new solutions.

Next come the non-functional requirements. Although our integrations have been designed in a cloud native way such that they can scale, and indeed scale independently, the back-end database is still a bottleneck. There are times when the number of updates being made to the Product table is affecting the performance of the reads on that table. Indeed, the writes themselves start to take more time. The effect on the user experience of applications based on this API is becoming noticeable, reducing customer satisfaction. With our single-table scenario, clearly these performance issues would be unlikely to occur. But we can imagine a real multi-table scenario (with searches performing multi-table joins, and updates locking multiple tables in order to perform transactional updates with integrity) where performance issues of this type soon arise. We decide to tackle this problem on two fronts.

•Change the interaction pattern for updates to be asynchronous:

We change the way updates are performed such that they are done asynchronously after the request has been acknowledged. Consumers of the API then get an immediate response to assure them that the updates will occur, enabling a much more responsive user interface. Furthermore, it means we can now throttle the rate at which we apply those updates to the database such that they have less effect on the performance of reads. We enable this in 6.4, “Messaging for reliable asynchronous data update commands” on page 207. We provide a route to performing updates asynchronously in a "fire-and-forget" pattern by placing them in a "command store" (in our case, IBM MQ), then responding immediately back to the caller with an acknowledgment. We then make this accessible to a broader audience in 6.5, “Consolidate the new IBM MQ based command pattern into the API” on page 258 by bringing the asynchronous update back into the HTTP based API. Effectively we hide the use of IBM MQ behind the scenes. It should be noted that we have now introduced eventual consistency (rather than immediate consistency). Updates don't occur at the time of the update request. The applications using the API need to take this into account in their design, but as a result they can enjoy the performance improvements.

•Provide a read-optimized datastore:

Now that Product data is so easily available through our API, it is being used in many new and innovative ways. Unfortunately, the way that the data is stored in the current database is poorly suited to the types of queries now being performed. These new queries perform slowly even with our move to asynchronous updates as the issues are more related to how data is aggregated. This may simply be because the new consumers want a very different representation of the Product data. Or it could be because it needs to be combined with other data such as Price before it is useful. To solve this, we decide to implement a new datastore, that is specifically optimized for these new queries. We of course need to keep this new datastore in sync with the original master Product database. To do this, in 6.7, “Create event stream from messaging” on page 321 we show how we can keep a note of all the changes that happen to the Product database and place them in an event store, which in our case is provided by IBM Event Streams. These updates can then be asynchronously applied to the read-optimized datastore as discussed in 6.10, “Implement event sourced APIs” on page 366. Note this further exacerbates the eventual consistency between the updates to the main database, and reads from the read-optimized datastore. However, since users of our API have already had to learn to code for this behavior when we separated out the commands, it should have minimal effect on their applications.
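The idea behind the read-optimized datastore can be sketched in a few lines: change events are applied, in order, to a separate view that is shaped for queries. This is only an illustrative sketch. The event shape (`type`, `part_number`, `fields`), the `ReadModel` class, and the in-memory dictionary are assumptions that stand in for IBM Event Streams and a real datastore.

```python
from dataclasses import dataclass, field


@dataclass
class ReadModel:
    """Hypothetical read-optimized view, keyed by part number."""
    products: dict = field(default_factory=dict)

    def apply(self, event: dict) -> None:
        """Apply a single change event to the view."""
        key = event["part_number"]
        if event["type"] in ("created", "updated"):
            # Merge the changed fields into the existing (or new) entry.
            self.products.setdefault(key, {}).update(event["fields"])
        elif event["type"] == "deleted":
            self.products.pop(key, None)
        else:
            raise ValueError(f"unknown event type: {event['type']}")


def replay(events: list) -> ReadModel:
    """Rebuild the view from scratch by replaying the event history in order."""
    model = ReadModel()
    for event in events:
        model.apply(event)
    return model


# Assumed event history; in the scenario it would be read from the event store.
history = [
    {"type": "created", "part_number": 12,
     "fields": {"product_name": "duck", "quantity": 100}},
    {"type": "updated", "part_number": 12, "fields": {"quantity": 95}},
    {"type": "created", "part_number": 13,
     "fields": {"product_name": "goose", "quantity": 5}},
    {"type": "deleted", "part_number": 13, "fields": {}},
]
view = replay(history)
```

Because the view is derived entirely from the events, it can be discarded and rebuilt in a different shape when new kinds of query appear, without touching the master Product database.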

Figure 6-2 on page 172 shows the enhanced integration pattern with asynchronous updates and optimized reads.

Figure 6-2 Performance and availability improvements through eventual consistency patterns

From an integration standpoint, the patterns we've introduced are many decades old. Yet, modern application developers might see this set of patterns implemented together and recognize that it is an implementation of CQRS. CQRS stands for Command Query Responsibility Segregation. It essentially means providing independent paths for commands (create, update, delete) and queries (reads, searches, and so on), just as we have done in our scenario. Because this concept is familiar to many developers, we have tried to use the associated terms in our scenario where appropriate.

From the outside, our logical Product component looks much the same as it did in our first iteration, enabling access to Product data via an API. However, using agile integration techniques:

•The API is now more easily discovered, used, and controlled.

•The implementation is more performant and scalable.

•The patterns give us flexibility to rapidly implement new requirements without destabilizing what we have.

So, we have had a detailed look at how integration capabilities can be involved in the implementation of a reusable business component, from a simple API exposure through to a full CQRS-based implementation. Next, we look at how these business components might interact with one another.

In 6.9, “Implementing a simple hybrid API” on page 344, we look at our new API from the consumer's point of view. We consider how much easier exposing something as an API makes the creation of new solutions. In this case we enable a non-integration specialist to build a new API based on existing APIs in order to create a further unique capability.

Going back to the events that we were using internally within the component, let’s consider how they might also be valuable outside the component. The events might become a reusable capability in the same way that our API is. Exposing events beyond the component would enable, for example, a separate application to use the same event-sourced programming models in their own implementations, maintaining their own read-optimized data stores. In 6.8, “Perform event-driven SaaS integration” on page 327, we extend this thought. We consider an example of how non-integration specialists could use events from our component as triggers on new integrations with modern Software-as-a-Service applications.

We then return to our exposed API and consider how we might want to improve that exposure as we make it available to broader audiences. We explore these issues:

•How to implement the OAuth security model to enable authentication to be handled by a separate provider (6.6, “Advanced API security” on page 275).

•How to introduce alternative API exposure styles such as GraphQL to give consumers more flexibility in how they consume the data (6.11, “REST and GraphQL based APIs” on page 380).

•How to perform effective, repeatable API testing to ensure that the API behavior remains consistent as we add enhancements (6.12, “API testing” on page 397).

Finally, we consider what to do when the data we need to move between applications is not appropriate for APIs or events. A common example in modern applications is files such as video media. Because these files are often larger than a gigabyte, it makes no sense for them to travel over an API or as events. In these circumstances a more logical approach is the claim check pattern explored in 6.13, “Large file movement using the claim check pattern” on page 409. In that example, we store the object in a place that the cloud can reference, then pass only the reference. Of course, the file's content must move to its destination eventually. So, we also discuss the benefits of FASP (the Fast and Secure Protocol) for getting large data across significant network distances.
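To make the claim check pattern concrete, the following minimal sketch stores a large payload in a shared location and passes only a small reference in the message. Everything here is assumed for illustration: the `ClaimCheckStore` class, the message fields, and the use of a local temporary directory in place of cloud object storage. It does not represent the implementation in section 6.13.

```python
import hashlib
import tempfile
from pathlib import Path


class ClaimCheckStore:
    """Hypothetical shared store; a local directory stands in for object storage."""

    def __init__(self, root: Path) -> None:
        self.root = root

    def check_in(self, payload: bytes) -> str:
        """Store the payload and return a small reference (the claim check)."""
        claim = hashlib.sha256(payload).hexdigest()
        (self.root / claim).write_bytes(payload)
        return claim

    def redeem(self, claim: str) -> bytes:
        """Fetch the payload that a claim check refers to."""
        return (self.root / claim).read_bytes()


def make_message(store: ClaimCheckStore, payload: bytes, filename: str) -> dict:
    """Build the lightweight message that travels over the API or event stream."""
    return {
        "filename": filename,
        "claim": store.check_in(payload),
        "size": len(payload),
    }


store = ClaimCheckStore(Path(tempfile.mkdtemp()))
video = b"\x00" * 1_000_000          # placeholder for a large media file
message = make_message(store, video, "launch.mp4")

# The receiver uses the reference to fetch the content when it needs it.
received = store.redeem(message["claim"])
```

The message stays a few hundred bytes regardless of the file size, so the API or event infrastructure never carries the bulk data itself.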

Note: For some of the following exercises, we use IBM Cloud Pak for Integration. If you do not currently have access to an environment, see section 5.1.4, “Getting access to IBM Cloud Pak for Integration for the exercises” on page 145.

6.2 Application Integration to front a data store with a basic API

In this section, we demonstrate how to use IBM App Connect to expose a datastore as a RESTful API. The key points implemented here are as follows:

•Fine grained, container-based deployment of integrations, enabling independent maintenance, elastic scaling, and isolated resilience.

•Code free data mapping from a REST data model to database table definition.

•Configuration-based protocol conversion from HTTP to JDBC.

The objective here is not to show in detail how to create the integrations themselves since there is plenty of existing material on building integrations. The key thing to note is that no code is required for these simple integrations, just a simple integration flow that contains a map.

Instead, we want to focus on what it looks like to deploy these to a cloud native style. As such, we begin the exercise with a blank cloud environment, and we deploy the integrations directly to it. There is no preparatory stage of building a shared infrastructure as we would have traditionally. Instead, each integration that is deployed provides its own discrete integration runtime, and the rest (such as HA and scaling) is provided by the container orchestration platform.

Figure 6-3 Deployment of the API across two separate containers

We deliberately demonstrate deployment of this API across two separate containers as shown in Figure 6-3. That way, you see that they could be changed and scaled separately and could have different resilience models.

We create two REST applications in the IBM App Connect Toolkit. One is for Create, Update, and Delete (commands) to a table on IBM Db2 called Products. The other deals exclusively with Read (queries). The flows for the two REST applications are already built and available at this GitHub site:

https://github.com/IBMRedbooks/SG248452-Accelerating-Modernization-with-Agile-Integration/tree/master/chapter6/6.1


The applications can be opened in the Toolkit by importing the following files:

Alternatively, you can build them yourself as described here:

https://www.ibm.com/support/knowledgecenter/en/SSTTDS_11.0.0/com.ibm.gdm.doc/cm28851_.htm

Each REST application includes:

•JDBC Connection to a Db2 database that uses a policy

•A Db2 database called PRODUCTS, with a schema called PRDCTS and a table called Products as defined in the Products data model in https://github.com/IBMRedbooks/SG248452-Accelerating-Modernization-with-Agile-Integration/blob/master/chapter6/products_data_model.json

•Swagger that describes the data model for each path

•Unique sub flows for each relevant operation (Create, Read, Update, Delete)

Each subflow has an input, a mapping node and an output. Figure 6-4 shows the REST API operation subflow.

Figure 6-4 REST API Operation Subflow
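In IBM App Connect the mapping is configured graphically and needs no code. Purely to illustrate what such a map does conceptually, the following sketch converts a REST request body into a row for the PRODUCTS table and builds a parameterized insert statement. The camelCase JSON field names are assumptions (the real data model is in the repository referenced above); the column names match the table created in 6.2.2.

```python
import json

# Assumed REST field names mapped to the PRODUCTS table columns.
FIELD_TO_COLUMN = {
    "lastUpdated": "last_updated",
    "partNumber": "part_number",
    "productName": "product_name",
    "quantity": "quantity",
    "description": "description",
}


def map_request_to_row(body: str) -> dict:
    """Map a JSON request body to a dictionary keyed by column name."""
    payload = json.loads(body)
    return {column: payload.get(name) for name, column in FIELD_TO_COLUMN.items()}


def build_insert(row: dict) -> str:
    """Build a parameterized INSERT; values are bound separately by the driver."""
    columns = ", ".join(row)
    markers = ", ".join("?" for _ in row)
    return f"INSERT INTO PRODUCTS ({columns}) VALUES ({markers})"


request_body = json.dumps({
    "lastUpdated": "2019-08-01T09:57:34.265Z",
    "partNumber": 12,
    "productName": "duck",
    "quantity": 100,
    "description": "a waterbird",
})
row = map_request_to_row(request_body)
statement = build_insert(row)
```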

Figure 6-5 shows the Queries flow.

Figure 6-5 Get Product Operation Mapping Node

Figure 6-6 on page 175 through Figure 6-8 on page 176 show the Command flows.

Figure 6-6 Delete Product Operation Mapping Node

Figure 6-7 Post Product Operation Mapping Node

Figure 6-8 Put Product Operation Mapping Node

6.2.1 Db2 setup

For the application in this section to function correctly, a Db2 database needs to be set up for the IBM App Connect flow to operate against.

Create a Db2 instance by using the following instructions: https://github.com/IBM/Db2/tree/develop/deployment

1. Configure the instance with the following parameters:

a. Choose a release name.

b. Select a namespace.

Note: This value does not need to be the same namespace where the IBM App Connect container will be deployed.


c. Set the Database Name to PRODUCTS.

d. Set the Db2 instance name to db2inst1.

e. Provide a password for the Db2 instance, for example passw0rd.

f. Persistence is optional.

g. Do not select the Db2 HADR mode.

2. After the deployment is complete, observe in the OpenShift console that ports 50000 and 55000 are both exposed via two types of Kubernetes services, NodePort and ClusterIP.

Note: For a distinction between NodePort and ClusterIP service types, refer to section 4.4.2, “Container orchestration” on page 107.

3. You can use a Db2-compatible database client to connect to the Db2 instance via the NodePort service, and to verify that the database called PRODUCTS has been successfully created. In the next section, we use the IBM App Connect Toolkit in the Database Development view.

In the remainder of the chapter, we connect to the Db2 instance by using a policy definition in IBM App Connect. The definition leverages the ClusterIP service, as described in 6.4.6, “Policy definitions” on page 249.

6.2.2 Db2 table setup

To set up a Db2 table for the samples in this chapter, we use the IBM App Connect Toolkit in the Database Development view to connect to the Db2 instance that we spun up in 6.2.1, “Db2 setup” on page 176.

The following tasks are performed on the IBM App Connect Toolkit version 11.0.0.5.

1. Open the toolkit and navigate to the Database Development View. See Figure 6-9 on page 178.

Figure 6-9 Switch to Database Development View

Figure 6-10 New Database Connection

3. In the connection details, select the DB2 for Linux, UNIX, and Windows option in the menu and put the database details from the previous section. Remember to use the NodePort service exposing the container port 50000 for the Db2 instance.

The service is typically exposed on the Proxy Node IP address or Fully Qualified Domain Name. See Figure 6-11 on page 179.

Figure 6-11 Connection details

4. The Products database connection now exists in the Database Connections sidebar. Right click Products and select New SQL Script. See Figure 6-12 on page 180.

Figure 6-12 New SQL Script

5. To create a PRODUCTS table in the PRODUCTS database, type the following SQL command shown in Example 6-1 into the newly opened SQL script.

Example 6-1 SQL script

CREATE TABLE PRODUCTS
(
    last_updated varchar(255) NOT NULL,
    part_number int NOT NULL,
    product_name varchar(255),
    quantity int,
    description varchar(255),
    PRIMARY KEY (part_number)
);

Figure 6-13 Run SQL

This should result in a success report as shown in Figure 6-14 on page 182.

Figure 6-14 SQL success report

7. You can now insert a new row into the table by using the following SQL command and again selecting the Run SQL command as before.

INSERT INTO PRODUCTS (last_updated, part_number, product_name, quantity, description)
VALUES ('2019-08-01T09:57:34.265Z', 12, 'duck', 100, 'a waterbird with a broad blunt bill, short legs, webbed feet, and a waddling gait');

8. We can check that the entry has been successfully inserted by using the SELECT SQL command.

SELECT * FROM PRODUCTS

Figure 6-15 shows the SELECT SQL query result.

Figure 6-15 SELECT SQL query result
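If no Db2 instance is at hand, the same three statements can be tried locally as a quick sanity check. The following sketch runs them against an in-memory SQLite database by using Python's standard `sqlite3` module. SQLite is only a stand-in assumed for convenience: it accepts this DDL, but its type handling differs from Db2, so this does not replace the steps above.

```python
import sqlite3

CREATE_TABLE = """
CREATE TABLE PRODUCTS
(
    last_updated varchar(255) NOT NULL,
    part_number int NOT NULL,
    product_name varchar(255),
    quantity int,
    description varchar(255),
    PRIMARY KEY (part_number)
)
"""

INSERT_ROW = """
INSERT INTO PRODUCTS (last_updated, part_number, product_name, quantity, description)
VALUES (?, ?, ?, ?, ?)
"""

duck = (
    "2019-08-01T09:57:34.265Z", 12, "duck", 100,
    "a waterbird with a broad blunt bill, short legs, webbed feet, and a waddling gait",
)

conn = sqlite3.connect(":memory:")   # throwaway database, nothing is persisted
conn.execute(CREATE_TABLE)
conn.execute(INSERT_ROW, duck)
conn.commit()

rows = conn.execute("SELECT * FROM PRODUCTS").fetchall()

# The primary key on part_number rejects a second row with the same key.
try:
    conn.execute(INSERT_ROW, duck)
    duplicate_rejected = False
except sqlite3.IntegrityError:
    duplicate_rejected = True
```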

6.2.3 Swagger definitions

In this section, we package the project files into a BAR file for each application as shown in the following web pages:

Then we create a new server for each. In this chapter we want to use the user interface to achieve this. Later, we see how this can be automated by using pipeline deployment in section 7.5, “Continuous Integration and Continuous Delivery Pipeline using IBM App Connect V11 architecture” on page 465.

1. Log in to the IBM Cloud Pak for Integration instance and the IBM App Connect dashboard. In this view we click Add server as shown in Figure 6-16.

Figure 6-16 Add server in the IBM App Connect dashboard

Figure 6-17 Add a BAR file

3. Next, select the BAR file from the local directory where it is saved and select Choose. See Figure 6-18 on page 184.

Figure 6-18 Choose the relevant BAR file

4. The BAR file is displayed in the user interface, and you can confirm that the correct file has been uploaded, then select Continue. See Figure 6-19.

Figure 6-19 BAR file shown in the user interface

5. In the next screen we copy the Content URL and select Configure release. See Figure 6-20 on page 185.

Figure 6-20 Get Content URL (ConfigureReleaseCopyContentURL)

6. The new page describes the Helm chart that is used to deploy the new server. Confirm the Cloud Pak version and select Configure. See Figure 6-21 on page 185.

Figure 6-21 IBM App Connect Helm Chart

7. Type a Helm release name such as redbook-read for the database query BAR and redbook-commands for the database commands BAR. In the Target namespace field, select icp4i. Then read and accept the license agreement by selecting the I have read and agreed to the license agreement check box. See Figure 6-22 on page 186.

Figure 6-22 Helm release name, target namespace and accepted license agreement

8. Paste the previously copied Content URL into the Content Server URL box. See Figure 6-23 on page 186.

Figure 6-23 Pasted Content URL

9. Type the IP address for the Master or Proxy node of the IBM Cloud Pak for Integration instance into the NodePort IP box. See Figure 6-24 on page 186.

Figure 6-24 Deployment IP

10. For this example we need only a single replica. So, set the Replica count to ‘1’ and ensure that the Local default Queue Manager is deselected as shown in Figure 6-25 on page 187.

Figure 6-25 Replica Count 1 and Queue Manager deselected

11. After double checking the configuration, select Install.

12. A message confirming that the installation has started is displayed. You can track the installation in the Helm release by clicking the View Helm Release button. See Figure 6-26 on page 187.

Figure 6-26 Helm Deployment successful start

13. The new services for the Helm release are displayed. To see further details, including the exposed ports for the server, select the link redbook-read-ibm-ace-server-icip-prod or redbook-commands-ibm-ace-server-icip-prod depending on the BAR file that is being deployed. See Figure 6-27 on page 187.

Figure 6-27 Two services for the deployed IBM App Connect server

14. In Figure 6-28 on page 188 we can see the HTTP server exposed port (31016).

Figure 6-28 Exposed ports for the service

15. We can then use the port to create a test URL and make a GET request to the redbook-read server. In API Connect Test and Monitor, select a GET operation on http://<ibm_cloud_ip>:<http_port>/database_query/v1/products, which returns the records from the PRODUCTS table in the database. See Figure 6-29 on page 188.

Figure 6-29 Returned output from the PRODUCTS table
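The same check can be scripted instead of using a graphical test tool. The following sketch builds the test URL from the pattern in step 15 and issues a GET with Python's standard library. The IP address is a documentation placeholder, the port is the example value from Figure 6-28, and the assumption that the response body is JSON is ours; substitute the values from your own deployment.

```python
import json
from urllib.request import urlopen


def build_products_url(host: str, http_port: int) -> str:
    """Build the query URL by following the pattern shown in step 15."""
    return f"http://{host}:{http_port}/database_query/v1/products"


def get_products(host: str, http_port: int, timeout: float = 10.0):
    """Call the deployed query server and decode the (assumed JSON) response."""
    with urlopen(build_products_url(host, http_port), timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


# Placeholder host; 31016 is the example NodePort value from this section.
url = build_products_url("192.0.2.10", 31016)
```

Calling `get_products("192.0.2.10", 31016)` against a reachable deployment would then return the decoded records.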

Note that the policy used in both BAR files contains the reference to the datastore, so the policy must be updated if the port of the database changes. It is also possible to deploy the BAR file directly to an existing empty server through the Toolkit. As the system gains maturity, deployments can instead be automated through builds that are triggered by pushes to a code repository.

We now have two BAR files deployed onto the cloud that enable us to read, add, delete, and update rows in the database. These BAR files can be independently maintained and scaled, and can have separately defined availability models.

6.3 Expose an API using API Management

This section shows you how to expose an API using API Management, which brings the following benefits. (Not all of these issues are explored in detail in the example.)

•Discovery: Enable consumers to find the APIs they need, understand their specifications, learn how to use them, and experiment with them before committing to use them.

•Self-subscription: Allow consumers to self-subscribe to use the API using a revocable key.

•Routing: Hide the exact location of the API implementation, and enable version based routing.

•Traffic management: Provide throttling of inbound requests to the API on a per-consumer basis.

•Analytics: Provide both consumers and providers with information regarding their API usage, response times and more.
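As an illustration of per-consumer traffic management, the following sketch applies a fixed-window limit that is counted separately for each client ID. It is a conceptual example only and is not how IBM API Connect implements rate limits; the class name, the limit of two calls per 60 seconds, and the client IDs are all assumed for the demonstration.

```python
class PerConsumerLimiter:
    """Fixed-window rate limit, tracked separately for each consumer."""

    def __init__(self, limit: int, window_seconds: float) -> None:
        self.limit = limit
        self.window_seconds = window_seconds
        self._windows = {}   # client_id -> (window_start, calls_in_window)

    def allow(self, client_id: str, now: float) -> bool:
        """Return True if this consumer may make a call at time `now`."""
        start, count = self._windows.get(client_id, (now, 0))
        if now - start >= self.window_seconds:
            # The previous window has expired; start a new one.
            start, count = now, 0
        allowed = count < self.limit
        if allowed:
            count += 1
        self._windows[client_id] = (start, count)
        return allowed


limiter = PerConsumerLimiter(limit=2, window_seconds=60)
decisions = [
    limiter.allow("app-a", 0.0),    # first call for app-a
    limiter.allow("app-a", 1.0),    # second call, still within the limit
    limiter.allow("app-a", 2.0),    # third call in the same window is rejected
    limiter.allow("app-b", 2.0),    # app-b has its own independent allowance
    limiter.allow("app-a", 61.0),   # a new window has started for app-a
]
```

Passing the time in as a parameter keeps the sketch deterministic; a real gateway would use its own clock and would typically return an HTTP 429 response for rejected calls.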

In the first exercise we created the two basic implementations of the REST APIs. We now want to bring those together into a single consolidated API to simplify usage for the consumer. Furthermore, we want to provide API management capabilities as shown in Figure 6-30.

Figure 6-30 Providing API management capabilities

Having created the implementation of REST APIs in the previous section we now want to expose them through an API Management system. This gives the ability to manage how the APIs are consumed, how traffic can be limited and how exposure to external parties is properly handled.

6.3.1 Importing the API definition

There are two methods for exporting an IBM App Connect REST API to IBM API Connect.

Pushing from IBM App Connect to IBM API Connect

The first option is to make use of the Push REST APIs to API Connect... functionality which is available from the App Connect dashboard. This is documented in the IBM Knowledge Center: https://www.ibm.com/support/knowledgecenter/SSTTDS_11.0.0/com.ibm.etools.mft.doc/bn28905_.htm

Note: This functionality is currently available from stand-alone instances of IBM App Connect to any given API Connect instance. Be aware that, at the time of writing, a limitation in IBM Cloud Pak for Integration deployments meant that the callback ‘POST’ to the IBM App Connect Server was not available.

Importing the API definition file

The second option is to import the API definition file manually.

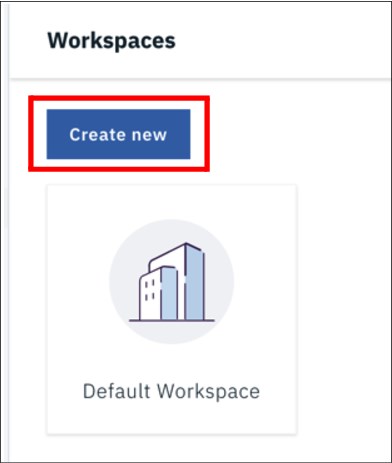

Perform the following steps to import API to the Developer Workspace:

1. First, you must create the API. To do that, click on Develop APIs and Products. See Figure 6-31 on page 190.

Figure 6-31 API Manager main page

Figure 6-32 Import API to the developer workspace 1

Figure 6-33 Import API to the developer workspace 2

Figure 6-34 Import API to the developer workspace 3

Note: Download the swagger definition from https://github.com/IBMRedbooks/SG248452-Accelerating-Modernization-with-Agile-Integration/blob/master/chapter6/database_operations_swagger.json.

5. Click Browse and choose the Swagger definition that you have downloaded from GitHub. See Figure 6-35.

Figure 6-35 Import API to the developer workspace 4

Figure 6-36 Import API to the developer workspace 5

Figure 6-37 Import API to the developer workspace 6

Figure 6-38 Import API to the developer workspace 7

Descriptions:

Secure using Client ID - Select this option to require an Application to provide a Client ID (API Key). This causes the X-IBM-Client-Id parameter to be included in the request header for the API. When this option is selected, you can then select whether to limit the API calls on a per-key (per Client ID) basis.

CORS - Select this option to enable cross-origin resource sharing (CORS) support for your API. This allows your API to be accessed from another domain.
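From the consumer's side, securing the API with a Client ID means that each request carries the X-IBM-Client-Id header. The following sketch shows one way a client could build such a request with Python's standard library. The gateway URL and the client ID value are hypothetical placeholders, not values from this exercise.

```python
from urllib.request import Request


def build_request(url: str, client_id: str) -> Request:
    """Build a GET request that identifies the calling application."""
    return Request(
        url,
        headers={
            "X-IBM-Client-Id": client_id,    # API key issued on subscription
            "Accept": "application/json",
        },
        method="GET",
    )


# Hypothetical gateway URL and client ID, for illustration only.
request = build_request(
    "https://gateway.example.com/org/catalog/database_operations/products",
    "my-client-id",
)
```

The request can then be sent with `urllib.request.urlopen(request)`. Header names are case-insensitive in HTTP, so the gateway accepts the header however the client library capitalizes it.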

Figure 6-39 Import API to the developer workspace 8

6.3.2 Configuring the API

In this section we edit the API to include two different APIs from two microservices for the same business function. From a consumer point of view, it looks like a single API but it is actually connected to two different microservices at the back end.

6.3.3 Merging two application flows into a single API

We have now deployed the Commands swagger into the API Management service of API Connect, either through the App Connect push functionality or through a direct import of the API definition into API Connect.

This API and Product now displays the command operations for the database (Add, Update, Delete), but not the query (Read). We add this query manually by navigating to the API in API Connect.

1. Navigate to the API Manager API Definition in API Connect for database_operations and select Paths (Figure 6-40 on page 195) and the /products (Figure 6-41 on page 195) path name.

Figure 6-40 API Definition in API Connect

Figure 6-41 API definition in API Connect in Path

2. In the operations section, we select the Add button to include a get operation by toggling ON the get option and selecting Add. See Figure 6-42 on page 196.

Figure 6-42 Add the GET operation

3. Select the Get operation and give an Operation Id like getProducts and a Description like getProducts. See Figure 6-43 on page 197.

Figure 6-43 Set OperationID and Description

4. On the same page, toggle ON the following options: Override API Produce Types, application/json, Override API Consume Types, application/json. See Figure 6-44 on page 198.

Figure 6-44 Set the Produces and Consumes types

5. On the same page, go to Response and select Add. Then set Status Code to 200, Schema to object, and Description to “The operation was successful”. See Figure 6-45.

Figure 6-45 Create a Response

6. Remember to Save the API before navigating to the Assemble view of the designer.

7. In the assemble view, we need to ensure that there is a Switch object to point to each of the four operations. If not, drag and drop the Switch object from the side panel into the assembly flow. See Figure 6-46 on page 199.

Figure 6-46 Add a Switch statement

8. We add a case condition for each of the path parameters. Click on the Switch in the assembly, and click the + Case button 3 times to give four conditions. Select each to Add the operation from a list of available operations, one to each case. See Figure 6-47.

Figure 6-47 Add Cases for each operation

9. We include a proxy for each of the POST, PUT, and DELETE operations and an invoke for the GET operation. The differences are described here:

https://chrisphillips-cminion.github.io/apiconnect/2017/07/17/Proxy-and-Invoke-What-is-the-difference-in-API-Connect.html

Also see Figure 6-48.

Figure 6-48 Add Proxy and Invokes for each assembly path

10. In the POST, PUT, and DELETE proxies we include the ACE Commands Endpoint and the GET invoke includes the ACE Query Endpoint. See Figure 6-49 on page 201.

Figure 6-49 Define the required endpoints

11. Click Save.
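The effect of the assembly built in these steps can be summarized as a routing table: the three command operations go to one back end and the query operation goes to the other, while the consumer sees a single API. The following sketch expresses that routing in code. The endpoint host names and port are invented placeholders, and the function is a conceptual model of the Switch, not gateway configuration.

```python
# Hypothetical back-end addresses for the two deployed integration servers.
COMMANDS_ENDPOINT = "http://commands.example.internal:7800"
QUERY_ENDPOINT = "http://query.example.internal:7800"

# One case per operation, mirroring the four cases of the Switch.
ROUTES = {
    "POST": COMMANDS_ENDPOINT,
    "PUT": COMMANDS_ENDPOINT,
    "DELETE": COMMANDS_ENDPOINT,
    "GET": QUERY_ENDPOINT,
}


def route(method: str, path: str) -> str:
    """Return the back-end URL that a consumer request is forwarded to."""
    try:
        base = ROUTES[method.upper()]
    except KeyError:
        raise ValueError(f"unsupported operation: {method}") from None
    return f"{base}{path}"


read_target = route("GET", "/products")
write_target = route("post", "/products")
```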

Now that the API is ready to use, we can look at becoming the consumer of this API.

6.3.4 Add simple security to the API

In this section, we show you how to secure the API.

Configure API key security

In this section we will:

1. Define the API with simple security like API key and API secret

2. Publish the product

3. Test the API

Define simple security

Perform the following steps:

Figure 6-50 Defining the security 1

2. Under Name, type secret. Choose APIKey for the Type and Header for Located In. Then click Save. See Figure 6-51.

Figure 6-51 Defining the security 2

Your definitions should look like Figure 6-52 on page 203.

Figure 6-52 Defining the security 3

Publish the product

Perform the following steps to publish the product:

1. Click Develop from the left side menu, then click the ellipsis (…) beside the API that you want to publish and click Publish. See Figure 6-53.

Figure 6-53 Publishing the product 1

Figure 6-54 Publishing the product 2

Figure 6-55 Publishing the product 3

Test the product

1. Now you can test the API. Go to Assemble and click the highlighted box in Figure 6-56 on page 205.

Figure 6-56 Testing the API 1

Figure 6-57 Testing the API 2

3. Choose the operation to test. Here, we try the get operation. See Figure 6-58.

Figure 6-58 Testing the API 3

4. Click Invoke. You receive the response from the back end with 200 OK. See Figure 6-59 on page 207.

Figure 6-59 Testing the API (Response)

This example used only simple security, based on an API key.

We have shown a basic invocation of the API using the internal testing mechanism. Of course, real consumers would first need to discover the API through the developer portal, then subscribe to use it. We cover this more formal discovery and subscription in “Subscribing to products” on page 295.

6.4 Messaging for reliable asynchronous data update commands

In previous sections, we explored how to move existing centralized ESB based integrations into an Agile Integration paradigm. To do this, we broke the different integrations apart and exposed them through API management. This is a good start, but as part of your overall application modernization strategy, new integration patterns will also emerge.

A good example is event-based programming models for updates. For reasons such as performance or availability of a data source, you might decide to move toward these models and away from traditional synchronous data updates.

In the first section, we deliberately split the traditional CRUD (create, read, update, delete) operations into two separate models: commands, which make changes to data (create, update, delete), and queries, which search or read data. This split enables them to be changed and scaled independently. However, they were still synchronous in nature, dependent on the datastore's performance and availability.

We can make use of this separation and independently refactor the change operations to be asynchronous, without touching the query path. In our case, we do this by introducing IBM MQ instead of HTTP as the mechanism for the update. Just to be clear what we mean by this, we are not talking about changing only the transport from HTTP to IBM MQ. We are also changing the interaction pattern from request/response to fire and forget. This way, after a request has been made to change data, we can respond immediately to the calling system that the request has been received. We do not have to wait for it to be completed. So, we are no longer dependent on the back-end system's availability or performance. IBM MQ's assured delivery means that we can be confident that the update will eventually happen. Furthermore, we can throttle and control when the updates are applied, so that in busy periods they do not affect the performance of queries.

Clearly this model introduces challenges. We don't know exactly when the update will occur. And there might be other updates from other consumers, too. So, we can never be entirely sure of the status of the data in the back-end system. Nowadays we use the term eventual consistency to describe this situation. Clearly it is better suited to some business scenarios than others. In our example, we decided that the improved availability and response time on updates to our "product" data, and the potentially more consistent performance on queries, are more important than knowing that the data is 100% consistent all the time.
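The following minimal sketch illustrates the fire-and-forget interaction and the resulting eventual consistency. It is not IBM MQ code: an in-memory Python queue and a dictionary stand in for the IBM MQ queue and the database, and all names (submit_update, query, apply_commands, product_table) are assumptions made for illustration.

```python
import queue
import threading

# In-memory stand-ins (assumptions for illustration only): a real deployment
# would use an IBM MQ queue and a database, not these Python objects.
command_queue = queue.Queue()
product_table = {12: {"productName": "Widget", "quantity": 5}}


def submit_update(part_number, changes):
    """Accept an update command and return at once, without waiting for the datastore."""
    command_queue.put({"partNumber": part_number, "changes": changes})
    return "accepted"


def query(part_number):
    """Read path: unaffected by how or when updates are applied."""
    return dict(product_table[part_number])


def apply_commands():
    """Back-end worker: drains the queue and applies each command in order."""
    while True:
        command = command_queue.get()
        if command is None:  # sentinel used to stop the worker
            break
        product_table[command["partNumber"]].update(command["changes"])


status = submit_update(12, {"quantity": 7})
before = query(12)["quantity"]   # worker not started yet, so still the old value

worker = threading.Thread(target=apply_commands)
worker.start()
command_queue.put(None)
worker.join()
after = query(12)["quantity"]    # the update has now been applied

print(status, before, after)
```

The caller receives "accepted" before the data changes, and a query issued in between still returns the old quantity. That window is the eventual consistency trade-off described above.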

The CQRS (Command Query Responsibility Segregation) pattern has become popular in recent years. Data changes (commands) and reads (queries) are treated as separate implementations, to improve reliability and performance. The integrations for these two halves were already separate, but they were both acting synchronously on the same data source. What we are doing in this section can be described as implementing the "command" part of this pattern. In other words, we change the synchronous data changes into a series of asynchronous commands. In later sections, we look at creating even more separation on the "query" side.

Figure 6-60 on page 209 illustrates this pattern.

Figure 6-60 Command and Query pattern

In this section, we explore how you can use IBM MQ and IBM App Connect to implement the Command side of this pattern.

6.4.1 Enable create, update, delete via commands

One of the first choices you must make when implementing this side of the pattern is which protocol mechanism to use for sending the commands to the corresponding component.

Because Commands represent a specific action that must occur, we use a one-way “PUT” to an IBM MQ queue. This approach allows us to decouple the requester from the implementation. At the same time, it provides a reliable messaging platform that allows event collaboration among the different services. IBM MQ's assured "exactly once" delivery of messages is ideal here. And its ability to participate in a transaction with a database offers even more options as we discuss later.

For a clean design, you need three queues, one for each of the commands. In addition, we recommend two extra queues: one to store potential errors and a second one to log the activity. The second queue can be replaced by any other logging framework that is available in the platform, but we use it here for illustration purposes.

In the next section we show you how to create an IBM MQ queue manager, and the necessary queues for this part of the solution. However, you might already have an existing IBM MQ queue manager (or already have the skills to create one). Example 6-2 on page 210 shows the list of IBM MQ commands that you need to create the corresponding queues and the needed authorization records:

Example 6-2 List of IBM MQ commands

DEF QLOCAL(DB.LOG)
DEF QLOCAL(DB.ERROR)
DEF QLOCAL(DB.CREATE) BOTHRESH(1) BOQNAME(DB.ERROR)
DEF QLOCAL(DB.UPDATE) BOTHRESH(1) BOQNAME(DB.ERROR)
DEF QLOCAL(DB.DELETE) BOTHRESH(1) BOQNAME(DB.ERROR)
SET AUTHREC PROFILE('DB.LOG') OBJTYPE(QUEUE) PRINCIPAL('user11') AUTHADD(ALL)
SET AUTHREC PROFILE('DB.ERROR') OBJTYPE(QUEUE) PRINCIPAL('user11') AUTHADD(ALL)
SET AUTHREC PROFILE('DB.CREATE') OBJTYPE(QUEUE) PRINCIPAL('user11') AUTHADD(ALL)
SET AUTHREC PROFILE('DB.UPDATE') OBJTYPE(QUEUE) PRINCIPAL('user11') AUTHADD(ALL)
SET AUTHREC PROFILE('DB.DELETE') OBJTYPE(QUEUE) PRINCIPAL('user11') AUTHADD(ALL)

Notice that we are taking advantage of the backout feature in IBM MQ to handle potential poison messages. Poison messages are ones that cannot be processed by the receiving system for some reason, but that we do not want to lose until we have had the opportunity to review them. This is also useful for transactional requirements that are discussed later in the section.
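To make the backout behavior concrete, here is a small simulation of how a consumer can use a backout threshold to divert a poison message. It is a sketch only: plain Python lists stand in for the DB.CREATE and DB.ERROR queues, the process function is a hypothetical handler, and the loop imitates the consuming application's logic rather than calling any IBM MQ API.

```python
BACKOUT_THRESHOLD = 1  # mirrors BOTHRESH(1) in Example 6-2

# Plain lists stand in for the DB.CREATE and DB.ERROR queues (illustration only).
db_create = [
    {"body": '{"partNumber": 1}', "backout_count": 0},
    {"body": "not-json", "backout_count": 0},
]
db_error = []
processed = []


def process(body):
    """Hypothetical handler: rejects anything that is not a JSON object."""
    if not body.startswith("{"):
        raise ValueError("cannot parse message")
    processed.append(body)


while db_create:
    message = db_create.pop(0)
    if message["backout_count"] >= BACKOUT_THRESHOLD:
        # Threshold reached: park the message for review instead of retrying.
        db_error.append(message)
        continue
    try:
        process(message["body"])
    except ValueError:
        # Simulate a rolled-back get: the message returns to the queue
        # with its backout count incremented.
        message["backout_count"] += 1
        db_create.append(message)

print(len(processed), len(db_error))
```

With a threshold of 1, the malformed message is attempted once, backed out, and then moved to the error list on its next delivery, so it is neither lost nor retried indefinitely.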

6.4.2 Deploy and configure Queue Manager

Now that you have decided to use an asynchronous messaging model, we can leverage the messaging capabilities that are provided by the Cloud Pak for Integration via IBM MQ.

1. Start by creating a new instance. Go to the Platform Navigator page and select Add new instance from the MQ tile, as shown in Figure 6-61.

Figure 6-61 Creating a new IBM MQ instance - 1

2. A pop-up window is displayed (Figure 6-62 on page 211) that briefly explains some prerequisites for deploying IBM MQ. These prerequisites are usually configured at installation time, but you can check with your administrator, as suggested, to validate. After you confirm that your Cloud Pak for Integration is properly configured, click Continue.

Figure 6-62 Creating a new IBM MQ instance - 2

3. This launches the helm chart that guides you through the deployment process. In the first section of the form, you are required to enter the name of the Helm release, and the namespace and cluster where the queue manager will be deployed. For this scenario, we used the following values:

•Helm release name: mqicp4i

•Target namespace: mq

•Target cluster: local-cluster

You need to check the license box where you confirm that you have read and agreed to the licensing agreement. Figure 6-63 on page 212 shows the information:

Figure 6-63 Creating a new IBM MQ instance - 3

4. You can scroll down to access the next set of fields starting with Pod Security. In this section you need to provide only the FQN of the proxy that gives access to your cluster. See Figure 6-64.

Figure 6-64 Creating a new IBM MQ instance - 4

Then you scroll down and expand the All Parameters section to review and modify the rest of the parameters. You can clear the Production Usage field, because this deployment is for test purposes. You can accept the default values for “Image repository” and “Image tag”, unless you want to use your own image. We discuss this scenario later on. Enter the value of the secret with the credentials to access your registry in order to be able to pull the images. In our test environment it is called entitled-registry. And we recommend that you select Always for the image pull policy, so that you are sure to always get the most recent image in the registry. But you can use the other options if needed. Figure 6-65 on page 213 shows the screen with the values:

Figure 6-65 Creating a new IBM MQ instance - 5

5. You can keep the IBM Cloud Pak for Integration section with the default values as shown in Figure 6-66.

Figure 6-66 Creating a new IBM MQ instance - 6

6. In the next section, select the Generate Certificate checkbox to get a new certificate for the queue manager. The cluster hostname field is prepopulated with the value that you entered in the first part of the form. Figure 6-67 on page 214 shows the form:

Figure 6-67 Creating a new IBM MQ instance - 7

7. The next section in the form is of particular relevance for IBM MQ. By definition, the storage in a container is ephemeral. In other words, if for some reason the pod where the container is running dies, the storage that is reserved for the container is also destroyed. That behavior doesn’t fit well with a resource manager like IBM MQ, where persistent messages must be preserved in case of a server or queue manager failure.

The good news is that specialized elements allow you to externalize the storage that is assigned to a pod (container). Therefore, you preserve the information (queues, messages, and so on) that is created by the queue manager.

The specific field to configure this is called “Enabled persistence.” For the test scenario we have cleared this field, but for a production environment you will most likely need to enable it. If you enable this option, you can dynamically allocate the required storage by selecting the Use dynamic provisioning box. In our case, we can clear the box, because we didn’t enable persistent storage.

If you do not want to use dynamic provisioning, you can still enable persistence, but you must create the persistent volume claim (PVC) beforehand and provide the corresponding name. If you opt for dynamic provisioning, you must provide the proper storage class name. Figure 6-68 on page 215 shows the four fields, reflecting our assumption that no persistence is required.

Figure 6-68 Creating a new IBM MQ instance - 8

8. The next section gives you the option to separate the Logs and Queue Manager configuration settings into different persistent volume claims. This approach is similar to what you would do with a regular queue manager and its associated file system. But in this case everything is parameterized. In our case, we left the boxes cleared because we decided not to enable persistent storage. See Figure 6-69 on page 215.

Figure 6-69 Creating a new IBM MQ instance - 9

9. The next section allows you to assign the resources (CPU and memory) for the queue manager. For testing purposes we use the default values, but for a production environment you should do a sizing exercise to determine the values that fit your needs. For the security section you can use the default values as shown in Figure 6-70 on page 216.

Figure 6-70 Creating a new IBM MQ instance - 10

10. Make sure the box for the last parameter around security named Initialize volume as root is checked to avoid any issue concerning access to the file system that is assigned to the container. See Figure 6-71.

Figure 6-71 Creating a new IBM MQ instance - 11

11. You can leave the rest of the form with the default values as shown in Figure 6-72 and Figure 6-73 on page 217.

Figure 6-72 Creating a new IBM MQ instance - 12

Figure 6-73 Creating a new IBM MQ instance - 13

12. After you review all the parameters, click on Install to start the deployment. See Figure 6-74 on page 218.

Figure 6-74 Creating a new IBM MQ instance - 14

13. After a few moments you receive the following message indicating that the deployment has started. See Figure 6-75.

Figure 6-75 Creating a new IBM MQ instance - 15

14. Next we will monitor the progress of the deployment to confirm that there are no errors and start working with the queue manager. For that, you click on the View Helm Release button from the previous pop up window.

This action takes you to the Cloud Pak Foundation view for Helm Releases. After the new window is open, look for the name used for your deployment. If you are using the names suggested in the book it will be “mqicp4i”, as shown in Figure 6-76 on page 219.

Figure 6-76 Creating a new IBM MQ instance - 16

15. After you find the deployment, click on the name to get the detail. In the new screen, scroll down to check the different objects that are part of the deployment. The one that we will explore in more detail is the StatefulSet, which includes the Pod with the actual queue manager process. But before moving to the next screen, write down the commands provided in the Notes section so that you can get the connection information to the queue manager. We will need this information when we work on the integration project.

See Figure 6-77 through Figure 6-80 on page 221.

Figure 6-77 Creating a new IBM MQ instance - 17

Figure 6-78 Creating a new IBM MQ instance - 18

Figure 6-79 Creating a new IBM MQ instance - 19

Figure 6-80 Creating a new IBM MQ instance - 20

16. After you click on the StatefulSet link you see the following screen (Figure 6-81 on page 221) with the details. From there you can drill down in the pod to review the events it has produced.

Figure 6-81 Creating a new IBM MQ instance - 21

17. In the Pod screen, check the Status to confirm that the queue manager is running. You could have seen the status from previous screens, but we want to show how you can navigate to the pod for potential troubleshooting situations to review the events that were produced during startup. See Figure 6-82 on page 222.

Figure 6-82 Creating a new IBM MQ instance - 22

18. When you have confirmed the queue manager is up and running, go back to the window where you initiated the deployment and click Done to close the pop-up window.

Figure 6-83 Creating a new IBM MQ instance - 23

19. After you close the pop-up window, you see that the new queue manager is displayed in the MQ tile.

Figure 6-84 Creating a new IBM MQ instance - 24

6.4.3 Queue manager configuration

Now that the queue manager is up and running, you configure it with the objects that are required by the integration project. The objects were listed in the previous section as MQSC commands. In order to show several alternatives, we use the new MQ Web UI to create the same objects.

1. Click the queue manager name, which is displayed in the MQ tile along with all the other queue managers you have available in the Cloud Pak for Integration. You are taken to the initial queue manager web UI. As you can see, only the Local Queue Manager widget is available. To configure the required objects, you need to add some other widgets. Click the Widget button as shown in Figure 6-85 on page 224.

Figure 6-85 Queue manager configuration -1

2. The Add a new widget dialog is displayed where you can select the different IBM MQ objects that you want to administer. Click the Queues item as shown in Figure 6-86.

Figure 6-86 Queue manager configuration -2

3. The corresponding widget is added to the administration console as shown in Figure 6-87.

Figure 6-87 Queue manager configuration -3

4. Repeat the same process to add the Listener and Channel widgets. The web UI will look like Figure 6-88 after you have added the widgets.

Figure 6-88 Queue manager configuration -4

5. We won’t create any additional Listener, but we have added the widget to validate the default listener was properly configured when we deployed the queue manager. To do this you hover over the gear icon in the Listener widget and click on it to configure it, as shown in Figure 6-89 on page 226.

Figure 6-89 Queue manager configuration -5

6. The Listeners configuration dialog is displayed, where you select Show System objects, and then click Save as shown in the next figure.

Figure 6-90 Queue manager configuration -6

7. The system objects are displayed, and you can see that the default listener on the well-known port 1414 is already running. See Figure 6-91 on page 227.

Figure 6-91 Queue manager configuration -7

Now that we know the listener is up and running, we can proceed to create the required server-connection channel. This channel allows our integration flow running in IBM App Connect to connect to the queue manager that we just deployed. To do this, click the Create button in the Channels widget as shown in Figure 6-92 on page 228.

Figure 6-92 Queue manager configuration -8

8. The Create a Channel dialog box is displayed. In the window, select the type of channel to create to adjust the fields that we need to provide. In this case, we choose the Server-connection. Then, enter the name of the channel, in this case ACE.TO.MQ. But you can use another name. Just be sure to write it down, because you will need this value when you configure the IBM MQ policy in your integration flow. Finally, click Create. Figure 6-93 on page 229 illustrates the process.

Figure 6-93 Queue manager configuration -9

9. After a moment the widget is updated to show the newly created channel. Notice that the channel is in an Inactive status. This status is normal, because we haven’t yet deployed the integration flow that will use the channel. (You have the option to come back after you deploy the integration flow to confirm that the status has changed to Active.) See Figure 6-94 on page 230.

Figure 6-94 Queue manager configuration -10

Now we can define the queues to use in our integration project. Similar to the channel, move to the Queues widget and click on the Create button as shown in Figure 6-95 on page 231.

Figure 6-95 Queue manager configuration -11

10. The Create a Queue dialog is displayed where you provide the name and queue type for the definition of the required objects. Using the first queue in the list, we enter DB.LOG as the Queue name and Local as the Queue type. Then click the Create button as shown in Figure 6-96.

Figure 6-96 Queue manager configuration -12

11. After a moment the Queues widget is updated to include the newly created queue. See Figure 6-97.

Figure 6-97 Queue manager configuration -13

12. Repeat the same process to create the rest of the queues. As mentioned before, the queue names are: DB.ERROR, DB.CREATE, DB.UPDATE, and DB.DELETE. All of them are local queues. At the end, you see something like Figure 6-98 on page 233.

Figure 6-98 Queue manager configuration -14

13. To complete the configuration, we need to update the definition for the three queues that will process the commands to handle errors and cope with potential poison messages. In the Queues widget, select the DB.CREATE queue, hover over the Properties icon, and click on it as shown in Figure 6-99 on page 234.

Figure 6-99 Queue manager configuration -15

14. This opens the Properties window for this particular queue. You can explore all the parameters, but for our scenario, we move to the Storage section and update the fields Backout requeue queue and Backout threshold. Specifically, we use the DB.ERROR queue that we created in the previous step and assign a value of 1 to the threshold. Depending on your situation, you could use a different value for the threshold, but for the sample scenario we treat a message as an error after the first failed attempt. After you enter the values, click Save to update the queue as shown in Figure 6-100 on page 235.

Figure 6-100 Queue manager configuration -16

15. The warning message that you have seen regarding unsaved changes now changes to a new message stating that the properties have been saved. Now you can click Close to return to the Queues widget and proceed to update the other two queues that we are missing.

Figure 6-101 Queue manager configuration -17

To complete the configuration, repeat the process for queues DB.DELETE and DB.UPDATE.

16. After you update the queues you have all the objects that are required for the scenario. However, due to the security changes that were recently introduced by IBM MQ, you also need to create the corresponding Authority Records. These records allow a user to interact with the queues.

Since this is a demonstration scenario, instead of working with Authority Records we show how to disable channel authentication (CHLAUTH) records and client connection authentication at the queue manager level. Keep in mind, this is only for testing purposes. Disabling security is not recommended in a production environment.

|

Note: You can check 9.4, “Automation of IBM MQ provisioning using a DevOps pipeline” on page 592. That section describes how to create a queue manager using DevOps, along with the MQSC commands that are listed in the previous section, to include the required security as part of your configuration.

|

17. To disable connection security checking, you need to modify the queue manager configuration. To do that, select the queue manager name from the Local Queue Managers widget and hover over the Properties button, clicking it as shown in Figure 6-102.

Figure 6-102 Queue manager configuration -18

18. This click opens the queue manager properties window. Navigate to the Communication section and change the CHLAUTH records field to the Disabled value. Then click Save to update the property as shown in Figure 6-103 on page 237.

Figure 6-103 Queue manager configuration -19

19. This action removes the warning message and confirms that the changes were applied. Now you can click Close as shown in Figure 6-104.

Figure 6-104 Queue manager configuration -20

20. To avoid potential issues using an administrator user we also disable Client Connection checking. Don’t forget that we do this for simplicity reasons, but this is not recommended in a production environment.

First, we add the Authentication Information widget and configure it to show system objects, as we explained before. This adds the tile to the web UI that is shown in Figure 6-105 on page 238.

Figure 6-105 Queue manager configuration -21

21. After the system objects are displayed in the widget, select SYSTEM.DEFAULT.AUTHINFO.IDPWOS and hover over the Properties menu and click on it as shown in Figure 6-106 on page 239.

Figure 6-106 Queue manager configuration -22

22. In the Properties window, navigate to the User ID + password section, and modify the value of the Client connections field to None. Then, click Save as shown in Figure 6-107.

Figure 6-107 Queue manager configuration -23

23. This removes the warning message about unsaved changes and confirms that the properties have been saved. Now you can click Close as shown in Figure 6-108 on page 240.

Figure 6-108 Queue manager configuration -24

24. This takes you to the main administration page and now you can proceed to the final step before you continue with the integration flow development. We need to refresh security to ensure that the changes we made take effect. Scroll as needed to make the Local Queue Manager widget visible. Hover over the ellipsis (...) in the upper menu bar to display the other menu and select the Refresh security option as shown in Figure 6-109.

Figure 6-109 Queue manager configuration -25

25. In the new Refresh security dialog click the Connection authentication link as shown in Figure 6-110.

Figure 6-110 Queue manager configuration -26

26. A message in the upper part of the Web UI indicates that queue manager security was refreshed successfully. Click the X to the right side of the message to dismiss it, as shown in Figure 6-111.

Figure 6-111 Queue manager configuration -27

We are now ready to start the implementation of the integration flow.
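For readers who prefer scripting to the web UI, the following sketch assembles MQSC commands that approximate the configuration performed in this section. The queue and channel names come from the steps above. Treating these particular commands as the equivalent of the web UI actions is our assumption, so review them against your IBM MQ version before use. As in the walkthrough, the security relaxations are for test environments only.

```python
COMMAND_QUEUES = ["DB.CREATE", "DB.UPDATE", "DB.DELETE"]
ERROR_QUEUE = "DB.ERROR"
LOG_QUEUE = "DB.LOG"
CHANNEL = "ACE.TO.MQ"


def build_mqsc():
    """Return MQSC lines approximating the web UI steps in this section."""
    lines = [
        f"DEFINE CHANNEL({CHANNEL}) CHLTYPE(SVRCONN)",
        f"DEFINE QLOCAL({LOG_QUEUE})",
        f"DEFINE QLOCAL({ERROR_QUEUE})",
    ]
    for name in COMMAND_QUEUES:
        # Each command queue backs out to the error queue after one failed attempt.
        lines.append(f"DEFINE QLOCAL({name}) BOTHRESH(1) BOQNAME({ERROR_QUEUE})")
    # Test-only security relaxations, followed by the security refresh.
    lines += [
        "ALTER QMGR CHLAUTH(DISABLED)",
        "ALTER AUTHINFO(SYSTEM.DEFAULT.AUTHINFO.IDPWOS) AUTHTYPE(IDPWOS) CHCKCLNT(NONE)",
        "REFRESH SECURITY TYPE(CONNAUTH)",
    ]
    return lines


script = "\n".join(build_mqsc())
print(script)
```

Generating the commands from a single list of queue names keeps the backout settings consistent across the three command queues, which is easy to get wrong when repeating the steps by hand.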

6.4.4 DB commands implementation

In the initial design we leverage the fact that IBM App Connect no longer requires a local queue manager. Instead, we connect to a central queue manager that acts as the messaging backbone for the whole environment and that has been configured with the corresponding persistent volumes to handle high availability. Later on, we will explore the need to support two-phase commit (2PC) and what changes are required to address this requirement. For now, this is the logical representation of the solution.

Figure 6-112 shows the IBM Cloud Pak for Integration Cluster.

Figure 6-112 IBM Cloud Pak for Integration Cluster

The advantage of using IBM App Connect and IBM MQ to build what is effectively a microservice component is that you can leverage the existing skills in your integration community. As you will see, the design of the integration flow in IBM App Connect uses the same core concepts that you have used in the past to interact with IBM MQ and a database.

You can use the steps outlined in 6.2.2, “Db2 table setup” on page 177 to create the resources needed in the Toolkit to interact with a database. As a reference, in the sample implementation we are presenting here, you need a database connection, a database project, and the corresponding database definition as shown in Figure 6-113 and Figure 6-114 on page 243.

Figure 6-113 Database connection -1

Figure 6-114 Database connection -2

Figure 6-115 shows the structure of the application.

Figure 6-115 Structure of the application

We have three integration flows, one for each command. The interaction with the database will leverage the database capabilities in the graphical map node. Therefore we have a map for each one of the commands as well.

We will also take advantage of the new capabilities in IBM App Connect to include the JDBC driver as part of the BAR file. So we have included it in the application to minimize external dependencies.

We need to include the reference to the database project so we can use the database definitions in the graphical maps.

In this case we are going to use JSON as the data format to receive the data that will be processed by the commands. So we need to create and include the corresponding JSON schema to simplify the mapping in the map nodes as well.

Example 6-3 shows the JSON schema that is used in the product.json file, which maps the database data model used in this scenario.

Example 6-3 JSON schema used in the product.json file

{
  "$id": "https://example.com/person.schema.json",
  "$schema": "http://json-schema.org/draft-07/schema#",
  "title": "Product",
  "type": "object",
  "properties": {
    "lastUpdate": {
      "type": "string"
    },
    "partNumber": {
      "type": "integer"
    },
    "productName": {
      "type": "string"
    },
    "quantity": {
      "type": "integer"
    },
    "description": {
      "type": "string"
    }
  }
}

|

Note: This is the bare-minimum information that is required to create the JSON schema. In a real-life scenario you might need to extend it.

|
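As an illustration of what the schema in Example 6-3 implies for incoming command messages, the following sketch checks the property types of a JSON message by using only the Python standard library. It is a deliberately simplified check, not a full JSON Schema validator. The check_product function and the sample messages are assumptions made for this example.

```python
import json

# Property types copied from Example 6-3.
SCHEMA_TYPES = {
    "lastUpdate": "string",
    "partNumber": "integer",
    "productName": "string",
    "quantity": "integer",
    "description": "string",
}

PYTHON_TYPES = {"string": str, "integer": int}


def check_product(message_text):
    """Return a list of problems found in a JSON product message (empty if none)."""
    data = json.loads(message_text)
    problems = []
    for field, value in data.items():
        expected = SCHEMA_TYPES.get(field)
        if expected is None:
            continue  # the schema does not forbid additional properties
        # bool is a subclass of int in Python, so exclude it explicitly.
        if isinstance(value, bool) or not isinstance(value, PYTHON_TYPES[expected]):
            problems.append(f"{field}: expected {expected}")
    return problems


good = '{"partNumber": 12, "productName": "Widget", "quantity": 5}'
bad = '{"partNumber": "12", "quantity": 5}'
print(check_product(good))
print(check_product(bad))
```

Because the schema declares no required properties, a message that carries only some of the fields still passes, which suits commands that supply only part of a product record.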

The three flows follow the same basic model as the one depicted in Figure 6-116 on page 244. The differences will be in the queue name that is used in the MQ Input Node called DB Command, and of course in the logic inside the Graphical Map Node called Process Action.

Figure 6-116 The flows

|

Note: The purpose of the scenario is to show the core principles to implement the commands. No error handling is included beyond the backout configuration of the queues.

|
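The common shape of the flows (read a command from a queue, run one database action, log the result) can be sketched as follows. This is not App Connect code: an in-memory SQLite database stands in for Db2, a list stands in for the DB.LOG queue, and the table name, column names, and function names are assumptions. Only the create and delete actions are shown to keep the sketch short.

```python
import json
import sqlite3

# In-memory SQLite stands in for the Db2 table; table and column names are assumptions.
db = sqlite3.connect(":memory:")
db.execute(
    "CREATE TABLE products (part_number INTEGER PRIMARY KEY, product_name TEXT, quantity INTEGER)"
)
log_queue = []  # stands in for DB.LOG


def create(product):
    """Insert one row and return the number of rows affected."""
    cur = db.execute(
        "INSERT INTO products (part_number, product_name, quantity) VALUES (?, ?, ?)",
        (product["partNumber"], product.get("productName"), product.get("quantity")),
    )
    return cur.rowcount


def delete(product):
    """Delete the row that matches the primary key and return the rows affected."""
    cur = db.execute("DELETE FROM products WHERE part_number = ?", (product["partNumber"],))
    return cur.rowcount


# One handler per input queue, matching the one-flow-per-command layout.
HANDLERS = {"DB.CREATE": create, "DB.DELETE": delete}


def on_message(queue_name, body):
    """Parse the JSON command, run the matching action, and log the row count."""
    rows = HANDLERS[queue_name](json.loads(body))
    db.commit()
    log_queue.append({"queue": queue_name, "rows": rows})
    return rows


on_message("DB.CREATE", '{"partNumber": 12, "productName": "Widget", "quantity": 5}')
on_message("DB.DELETE", '{"partNumber": 12}')
print(log_queue)
```

Keeping one handler per queue means each command can be changed or scaled without touching the others, which is the same reasoning behind using a separate flow for each command.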

To enable the connectivity to the queue manager and the database we will use the new policies introduced in IBM App Connect that will be included with the BAR file as well. In this way, we minimize external dependencies and fit better in the containerized world of agile integration. We will discuss the policies in the next section.

6.4.5 Graphical data maps implementation

IBM App Connect provides several ways to interact with databases, including ESQL, Java, and graphical data maps (GDM) among others. For this scenario, we decided to use GDMs to avoid writing any code. But in a real-life scenario you can rely on any of the other options, depending on your particular needs.

Create command

Figure 6-117 shows what the map for the Create command looks like.

Figure 6-117 Map for the Create command

As shown in Figure 6-117, we are using the Insert a row into a database table function. It is important to mention that we were able to get the data structure for the input message since we included the JSON schema file as part of the project. Additionally we are mapping the return result from the insert to a single field that will be logged, but here you could add any other information you need.

The actual mapping is simple and straightforward. If you use similar names in your data models, you can take advantage of the “auto map” feature available in GDM. This option is highlighted in Figure 6-118.

Figure 6-118 Auto-map feature

Update command

Figure 6-119 shows the map for the Update command.

Figure 6-119 Map for the Update command

In this case, we are using the Update a row into a database table function. Instead of using straight moves, we check whether each field is present before doing the actual move. This way, we avoid undesired consequences, such as overwriting a column when the corresponding field was not supplied. The map is shown in Figure 6-120 on page 247.

Figure 6-120 Update a row into a database table function

The other important difference versus the Insert map is that for the update we have included a “where” clause. This clause is based on the partNumber field that is provided in the input message, which corresponds to the primary key in the table. The definition looks like Figure 6-121 on page 248.

Figure 6-121 Modify Database Table Update
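The update logic described above (move a field only when it is present, and select the row by partNumber) can be sketched outside the graphical map as follows. This is an illustration under stated assumptions, not the generated map code: SQLite stands in for Db2, and the table name, column names, and the build_update function are hypothetical.

```python
import sqlite3

# Mapping from JSON field names to column names (the column names are assumptions).
COLUMNS = {
    "productName": "product_name",
    "quantity": "quantity",
    "description": "description",
    "lastUpdate": "last_update",
}


def build_update(message):
    """Build a parameterized UPDATE that touches only the fields present in the message."""
    present = [field for field in COLUMNS if field in message]
    if not present:
        raise ValueError("nothing to update")
    assignments = ", ".join(f"{COLUMNS[field]} = ?" for field in present)
    sql = f"UPDATE products SET {assignments} WHERE part_number = ?"
    params = [message[field] for field in present] + [message["partNumber"]]
    return sql, params


db = sqlite3.connect(":memory:")
db.execute(
    "CREATE TABLE products (part_number INTEGER PRIMARY KEY, product_name TEXT,"
    " quantity INTEGER, description TEXT, last_update TEXT)"
)
db.execute("INSERT INTO products VALUES (12, 'Widget', 5, 'A small widget', '2019-01-01')")

sql, params = build_update({"partNumber": 12, "quantity": 7})
db.execute(sql, params)
row = db.execute("SELECT product_name, quantity FROM products WHERE part_number = 12").fetchone()
print(sql)
print(row)
```

Only the quantity column appears in the generated statement, so the product name and description keep their existing values.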

Delete command

Figure 6-122 shows the map for the Delete command.

Figure 6-122 Map for the Delete command

In this case, no data mapping is needed. We just need to set the “where” clause properly to the corresponding input field, so that we delete the right record. Figure 6-123 shows how the configuration would look.

Figure 6-123 Modify Database Table Delete

6.4.6 Policy definitions

After the Integration Flow is ready, the other important elements are the policies associated with the two external resources that are required. Figure 6-124 on page 250 shows the definitions for both policies in the corresponding Policy Project.

Figure 6-124 Policy definitions

In Figure 6-124, callout 1 corresponds to the IBM MQ Endpoint policy and callout 2 corresponds to the JDBC Provider policy.

The properties for the IBM MQ Endpoint are shown in Figure 6-125.

Figure 6-125 MQ Endpoint policy properties

Some relevant points about the policy are:

1. This corresponds to a client connection since we are connecting to a remote queue manager as mentioned above.

2. The queue manager hostname corresponds to the internal DNS value of the Cloud Pak for Integration Cluster since the Integration Server is running in the same cluster. If the queue manager were running outside the cluster, you would use the corresponding value here.

3. The same applies to the listener port. Inside the cluster the queue manager is listening on the default port 1414. But if you wanted to access the same queue manager from a different location you would need to use the corresponding Node Port value.

4. The security identity corresponds to the secret that was defined in 6.5.3, “Securing the API” on page 264.

The properties for the JDBC Provider are shown in Figure 6-126.

Figure 6-126 JDBC Provider policy properties

In this case, the highlights are as follows:

•The name of the policy must match the name of the database, as required by the GDM.

•The server name used is the one used inside the cluster, similar to what we did with the queue manager. If you wanted to access the server from outside the cluster, then the cluster IP address or equivalent DNS must be used.

•The same applies to the port value. Internally, Db2 is listening on the default port, but if your integration server were running outside the cluster, you would need to use the Node Port value instead.

•For the initial scenario we have not enabled global transaction coordination. In the next section we will discuss when you might want to enable this feature.

•As mentioned before, we have included the JDBC JAR file as part of the project, because it is good cloud native practice to avoid external dependencies. To use the driver, we need to enable this property to make sure that the Integration Server uses the embedded driver.

6.4.7 BAR file creation

After you have developed the integration application and configured the associated policies, you can proceed to prepare the corresponding BAR file.

1. Start by selecting the application, as shown in Figure 6-127.

Figure 6-127 BAR file creation -1

2. Include the Policies project. Remember, this is one of the changes introduced with IBM App Connect. Policies replaced Configurable Services to provide a stateless configuration model that also allows you to include the policy with your BAR file. We take advantage of this feature now, to minimize external dependencies and fit better in the agile integration paradigm. See Figure 6-128 on page 253.

Figure 6-128 BAR file creation -2

3. After you have included both resources, the BAR file content will look like Figure 6-129 on page 254 and you can proceed to build the BAR file.

Figure 6-129 BAR file creation -3

4. At the end, you have the BAR file that you can deploy into the IBM Cloud Pak for Integration using the Application Integration dashboard. See 6.2, “Application Integration to front a data store with a basic API” on page 173 for details of the steps needed to complete the deployment.

6.4.8 Override policies for environment specific values

IBM App Connect gives you the option to embed the policies in which you have configured your endpoints in a single BAR file. However, there will always be circumstances where you want the flexibility to override some values. In our example, for instance, we need to override the queue manager hostname and the database server name. That way, we can use the same integration solution in multiple environments, such as production and quality assurance, without having to create multiple BAR files. We want to be able to treat the BAR file as the unchanging source code and just override the environment-specific values each time.

To handle this situation, IBM Cloud Pak for Integration gives you the option to provide a set of properties in the form of secret keys. You can use the keys at deployment time to override values in the policies. The only consideration is that you need to create the secret before you perform the deployment since you will use the name of the secret when you configure the deployment.

1. As part of the deployment process you are asked to provide the BAR file and then you get the following pop-up window (Figure 6-130 on page 255). There, you have the opportunity to download a configuration package that includes the instructions for creating the secret for the deployment.

Figure 6-130 Download configuration package

2. The file you download is called config.tar.gz, which provides empty files for all the things that you can pass within a secret to Kubernetes for IBM App Connect to pick up on start-up. It also provides a script to generate the secret that we will use later. The content of the config.tar.gz is shown in Figure 6-131.

Figure 6-131 config.tar.gz file contents

As you can see, there are multiple files within config.tar.gz you can configure but for this particular scenario the relevant elements are policyDescriptor.xml and policy.xml.

3. We now need to copy the policy information across from our IBM App Connect Toolkit workspace into the files in the folder where we extracted the config.tar.gz file. In this sample, we focus on the JDBC policy.

a. Copy the content of the JDBC policy file (PRODUCTS.policyxml) from your IBM App Connect Toolkit Workspace, and paste it into the policy.xml file.

b. Copy the content of the policy descriptor file (policy.descriptor) from your IBM App Connect Toolkit workspace, and paste it into the policyDescriptor.xml file.

4. You can now proceed to generate the secret using the following command, which is also included with the package:

./generateSecrets.sh <config-secret>

Note that to execute the command successfully you need to be logged in to your OCP cluster and using the right project. By default, the project should be ace.

5. Finally, when you are asked to configure the deployment, use the secret that you created. The field that must include the secret is highlighted in Figure 6-132. For more details about the full deployment process for a BAR file, see 6.2, “Application Integration to front a data store with a basic API” on page 173.

Figure 6-132 secret file
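The override in step 3 amounts to changing a few environment-specific elements of the policy before it goes into policy.xml. The following is a minimal sketch of that idea, not the product's own tooling. The policy content, its element names (serverName, portNumber), and the host names are assumptions made for illustration; check them against your own PRODUCTS.policyxml file.

```python
import xml.etree.ElementTree as ET

# Hypothetical policy content. Element names and host names are
# assumptions for illustration, not taken from the scenario.
BASE_POLICY = """<policies>
  <policy policyType="JDBCProviders" policyName="PRODUCTS">
    <databaseName>PRODUCTS</databaseName>
    <serverName>db2-dev.example.internal</serverName>
    <portNumber>50000</portNumber>
  </policy>
</policies>"""


def override_policy(policy_xml: str, overrides: dict[str, str]) -> str:
    """Return a copy of policy_xml with the named child elements replaced."""
    root = ET.fromstring(policy_xml)
    for policy in root.iter("policy"):
        for name, value in overrides.items():
            element = policy.find(name)
            if element is None:
                # Fail loudly rather than silently ignoring a typo.
                raise KeyError(f"policy has no <{name}> element")
            element.text = value
    return ET.tostring(root, encoding="unicode")


# Produce the QA variant; the BAR file itself stays unchanged.
qa_policy = override_policy(
    BASE_POLICY, {"serverName": "db2-qa.example.internal"}
)
```

The resulting text is what you would paste into policy.xml before running the secret generation script, so each environment gets its own secret while sharing one BAR file.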

6.4.9 Global transaction coordination considerations

The design used above achieves the desired goal of implementing the commands to Create/Update/Delete a data source using the Command Query Responsibility Segregation (CQRS) paradigm. However, there is an aspect that needs to be considered if the implementation needs to assure consistency among the resource managers that are involved. In this case Db2 and MQ are involved.

As discussed previously, the scenario involves receiving a message with the command instructions and the data to modify the database. Then we are just sending a result message to another queue for logging purposes. Now imagine there is a business requirement to guarantee that the logging messages are consistent with any change to the database in case there is a failure in either of the two resource managers.

In other words, we need to treat the whole flow as a single unit of work. If we cannot successfully put the log message in the queue, then we need to roll back the change that we made to the database in the previous step. When we want to make consistent changes across separate resources such as a database and a queue, this is known as Global Transaction Coordination or Two-Phase Commit (2PC). The good news is that this is something that IBM App Connect has supported for many years, even in its earlier incarnations (such as IBM Integration Bus and WebSphere Message Broker). However, you need to take into account some considerations in the container world whenever you need to address such a requirement.
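To make the idea of a single unit of work concrete, the following toy sketch shows the shape of a two-phase commit. It is an in-memory illustration only: the Participant class and run_unit_of_work function are hypothetical names, and a real deployment relies on the XA support of the resource managers rather than on code like this.

```python
class Participant:
    """In-memory stand-in for a resource manager (a database or a queue)."""

    def __init__(self, name, fail_on_prepare=False):
        self.name = name
        self.fail_on_prepare = fail_on_prepare
        self.pending = None   # work staged but not yet permanent
        self.committed = []   # work made permanent

    def prepare(self, work):
        """Phase 1: stage the work and vote on whether it can be committed."""
        if self.fail_on_prepare:
            return False
        self.pending = work
        return True

    def commit(self):
        """Phase 2 (success): make the staged work permanent."""
        self.committed.append(self.pending)
        self.pending = None

    def rollback(self):
        """Phase 2 (failure): discard the staged work."""
        self.pending = None


def run_unit_of_work(work_by_participant):
    """Commit every participant, or none of them."""
    prepared = []
    for participant, work in work_by_participant:
        if participant.prepare(work):
            prepared.append(participant)
        else:
            # One "no" vote undoes everything staged so far.
            for p in prepared:
                p.rollback()
            return False
    for p in prepared:
        p.commit()
    return True


# The log queue cannot accept the message, so the database change
# must not become permanent either.
database = Participant("Db2")
log_queue = Participant("MQ", fail_on_prepare=True)
ok = run_unit_of_work([(database, "UPDATE product"), (log_queue, "log message")])
```

In this run the queue votes against the transaction, so the database update is rolled back and nothing is committed on either side.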

IBM App Connect relies on IBM MQ to act as the global transaction coordinator, as explained in the IBM Knowledge Center article titled “Message flow transactions”:

https://www.ibm.com/support/knowledgecenter/en/SSTTDS_11.0.0/com.ibm.etools.mft.doc/ac00645_.htm

If you need to have 2PC, instead of using a remote queue manager, you must create your container using an image that includes both IBM App Connect and a local IBM MQ server.

Refer to the section “When IBM App Connect needs a local queue manager” for additional details. A high-level diagram for this scenario is shown in Figure 6-133.

Figure 6-133 High-level diagram for the scenario

In this case, you would need to change the JDBC Provider policy to enable “Support for XA coordinated transactions” and make sure that the driver you are using is a JDBC Type 4 driver. Additionally, two extra configuration changes are required to the associated local queue manager:

1. Modify the qm.ini file associated with the queue manager to include a stanza entry for the database with the following format (Example 6-4 on page 257):

Example 6-4 qm.ini file

XAResourceManager:
  Name=<Database_Name>
  SwitchFile=JDBCSwitch
  XAOpenString=<JDBC_DataSource>
  ThreadOfControl=THREAD

In our case it would look like this:

XAResourceManager:
  Name=SAMPLES
  SwitchFile=JDBCSwitch
  XAOpenString=SAMPLES
  ThreadOfControl=THREAD

2. Set up queue manager access to the switch file by creating a symbolic link to the switch files that are supplied in the IBM App Connect installation directory. The command would be something like the following:

ln -s /opt/ibm/ace-11/server/lib/libJDBCSwitch.so /var/mqm/exits64/JDBCSwitch
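Because these changes currently have to be made by hand inside the pod, it can help to generate the stanza from step 1 rather than type it. The following is a small sketch under that assumption. The function names are hypothetical, and the text it produces simply mirrors the stanza format shown above.

```python
def xa_stanza(database_name: str, data_source: str) -> str:
    """Render the XAResourceManager stanza for one database."""
    lines = [
        "XAResourceManager:",
        f"  Name={database_name}",
        "  SwitchFile=JDBCSwitch",
        f"  XAOpenString={data_source}",
        "  ThreadOfControl=THREAD",
    ]
    return "\n".join(lines) + "\n"


def add_stanza(qm_ini_text: str, stanza: str) -> str:
    """Append the stanza unless the same text is already present."""
    if stanza in qm_ini_text:
        return qm_ini_text
    separator = "" if qm_ini_text.endswith("\n") or not qm_ini_text else "\n"
    return qm_ini_text + separator + stanza


# Values from the scenario: database and data source are both SAMPLES.
stanza = xa_stanza("SAMPLES", "SAMPLES")
```

Checking for the stanza before appending makes the edit safe to repeat, which matters if the same change is reapplied after a pod restart.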

For additional details you can check the IBM App Connect Knowledge Center article titled “Configuring a JDBC type 4 connection for globally coordinated transactions”:

https://www.ibm.com/support/knowledgecenter/SSTTDS_11.0.0/com.ibm.etools.mft.doc/ah61330_.htm

With this in mind, note that at the time of writing there is no way to inject those changes when the IBM App Connect and IBM MQ container is deployed. Therefore, if you need to implement two-phase commit for your integration flows, you must use kubectl to access the pod you have deployed and make the changes directly there. IBM’s labs are exploring better options to handle this scenario in a more natural way in the future. We will provide an update in the form of a technote after a different approach is defined.

6.4.10 Conclusion

In this section we have demonstrated how you can use IBM App Connect and IBM MQ, as part of the IBM Cloud Pak for Integration, to implement the “command” side of the Command Query Responsibility Segregation (CQRS) pattern and interact with IBM Db2 using one-phase commit. This approach leverages your existing skills in IBM App Connect and IBM MQ. We also highlighted the new functionality introduced in IBM App Connect to fit better in the agile integration paradigm. Finally, we discussed the considerations for implementing a two-phase commit.

6.5 Consolidate the new IBM MQ based command pattern into the API

In 6.4, “Messaging for reliable asynchronous data update commands” on page 207 we discussed how to use IBM App Connect and IBM MQ to implement the Command side of the Command Query Responsibility Segregation (CQRS) pattern. Using a fire-and-forget pattern over a messaging transport such as IBM MQ was a good option to address the performance issues related to slow response times, and provide availability that is not tied to that of the database.

However, there is an issue with this approach. It requires that the consumer of this service must have the ability to talk to IBM MQ. This requires IBM MQ specific client-side libraries, and indeed the knowledge to use them. We could reduce the knowledge burden on the developer by using the standards-based JMS library to talk to IBM MQ. That way, they would not need to know IBM MQ. But JMS itself is still a reasonably complex interface to learn if you have not used it before.

A good alternative is to make the act of putting the message on an IBM MQ queue available via a RESTful API. This provides perhaps the lowest barrier to implementation for most developers, regardless of the programming language they are using.

Notice that we don't have to choose between the API and IBM MQ. We can have both. We can keep the direct IBM MQ-based interface for consumers of the service that would prefer the improved reliability that the IBM MQ Client can provide compared to an HTTP-based API.

In this section we explore how to expose the IBM MQ based "Command" implementation as a RESTful API façade. We keep the messaging layer in place beneath the API to continue to decouple the interaction with the data source and retain the benefits of the command pattern. Figure 6-134 on page 259 shows the extended model.

Figure 6-134 Command pattern exposed as an API
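The essence of the façade is that an HTTP request is turned into a command message and placed on a queue, and the caller is released without waiting for the database. The following sketch illustrates that flow with an in-memory queue standing in for IBM MQ. The handle_request function, the message layout, the PUT and DELETE mappings, and the 202 status code are assumptions made for illustration; they are not taken from the APIC configuration in this section.

```python
import json
import queue

# Assumed verb-to-command mapping. This section confirms only
# POST for the create command.
COMMANDS = {"POST": "create", "PUT": "update", "DELETE": "delete"}

# In-memory stand-in for the IBM MQ command queue.
command_queue = queue.Queue()


def handle_request(method: str, body: str) -> tuple[int, str]:
    """Translate one HTTP request into a command message on the queue."""
    command = COMMANDS.get(method)
    if command is None:
        return 405, "method not allowed"
    try:
        payload = json.loads(body)
    except json.JSONDecodeError:
        return 400, "body is not valid JSON"
    # Fire and forget: enqueue the command and return immediately,
    # without waiting for the database update to happen.
    command_queue.put(json.dumps({"command": command, "data": payload}))
    return 202, "accepted"


status, text = handle_request("POST", '{"name": "example product"}')
```

Because the handler only enqueues the message, the API stays responsive and available even when the data source behind the queue is slow or temporarily down.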

As in previous sections, we will use IBM API Connect (APIC) to expose the API providing discovery, access control, traffic management, governance, and security as discussed in 6.3, “Expose an API using API Management” on page 189.

APIC uses the OpenAPI specification, formerly known as Swagger, to model the API.

6.5.1 Defining the API data model

The first thing we need to include is the data models (known as “Definitions” in the API world) that will be supported by the API. We will use this information when we work in other areas of the API. This is equivalent to the product.json file we used in IBM App Connect. However, in this case you create the definition as part of the API, because we want the API specification to be self-explanatory and to serve as documentation as well, as shown in Figure 6-135 on page 260.

Figure 6-135 Product definitions

The details for the product definition are shown in Figure 6-136.

Figure 6-136 Product definitions- edit

6.5.2 Paths

Now that we have the definitions, we can move to the paths that represent the locations where the API can be invoked. To keep a similar approach to the integration flows created in IBM App Connect, we are using three different paths, one for each command. But we could just as easily have one path, because we are using a different HTTP verb for each command anyway. Figure 6-137 on page 260 shows the corresponding configuration.

Figure 6-137 Product configuration

Each path will have one operation. In the case of the create command, the convention is to use HTTP POST as shown in Figure 6-138.

Figure 6-138 POST operation

Some of the key aspects of the POST operation are highlighted in Figure 6-139 on page 261.

Figure 6-139 Key aspects of the POST operation