Having better prediction raises the value of judgment. After all, it doesn’t help to know the likelihood of rain if you don’t know how much you like staying dry or how much you hate carrying an umbrella.

Prediction machines don’t provide judgment. Only humans do, because only humans can express the relative rewards from taking different actions. As AI takes over prediction, humans will do less of the combined prediction-judgment routine of decision-making and focus more on the judgment role alone. This will enable an interactive interface between machine prediction and human judgment, much the same way that you run alternative queries when interacting with a spreadsheet or database.

With better prediction come more opportunities to consider the rewards of various actions—in other words, more opportunities for judgment. And that means that better, faster, and cheaper prediction will give us more decisions to make.

Judging Fraud

Credit card networks such as Mastercard, Visa, and American Express predict and judge all the time. They have to predict whether card applicants meet their standards for creditworthiness. If the individual doesn’t, then the company will deny them credit. You might think that’s pure prediction, but a significant element of judgment is involved as well. Being creditworthy is a sliding scale, and the credit card company has to decide how much risk it’s willing to take on at different interest and default rates. Those decisions lead to significantly different business models—the difference between American Express’s high-end platinum card and an entry-level card aimed at college students.

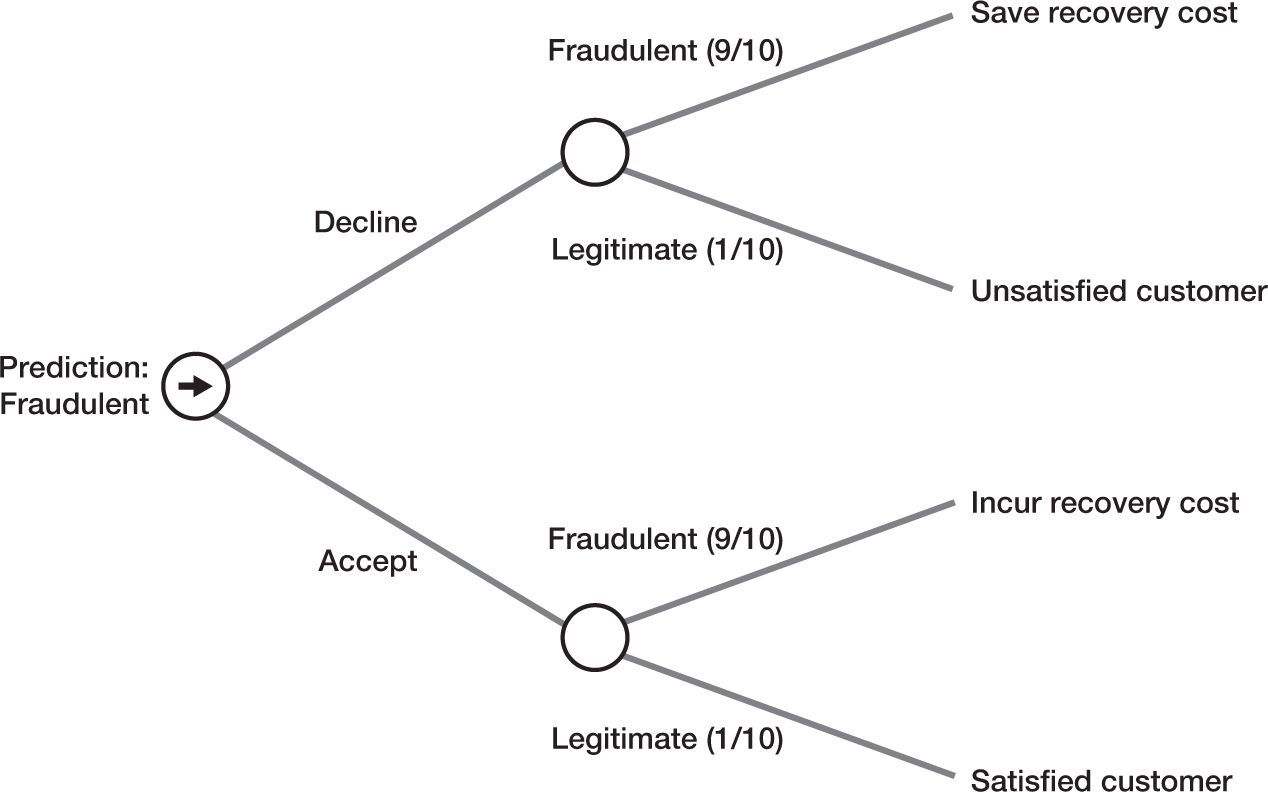

The company also needs to predict whether any given transaction is legitimate. As with your decision to carry an umbrella or not, the company must weigh four distinct outcomes (see figure 9-1). It has to predict whether the charge is fraudulent or legitimate, decide whether to authorize or decline the transaction, and then evaluate each outcome (denying a fraudulent charge is good; angering a customer by denying a legitimate transaction is bad). If the credit card companies were perfect at predicting fraud, all would be well. But they’re not.

FIGURE 9-1

Four outcomes for credit card companies

For instance, Joshua (one of the authors) has had his credit card company routinely deny transactions when he is shopping for running shoes, something he does about once a year, usually at an outlet mall when he is on vacation. For many years, he had to call the credit card company to lift a restriction.

Credit card theft often happens at malls, and the first few fraudulent purchases might be things like shoes and clothing (easy to convert into cash as returns at a different branch of the same chain). And since Joshua is not in the habit of routinely buying clothes and shoes and rarely goes to a mall, the credit card company predicts that the card has likely been stolen. It’s a fair guess.

Some factors that influence the prediction about whether a card has been stolen are generic (the type of transaction, such as purchasing running shoes), while others are specific to individuals (in this case, age and frequency). That combination of factors means that the eventual algorithm that flags transactions will be complex.

The promise of AI is that it can make prediction much more precise, especially in situations with a mix of generic and personalized information. For instance, given data on Joshua’s years of transactions, a prediction machine could learn the pattern of those transactions, including the fact that he buys shoes around the same time each year. Rather than classifying such a purchase as an unusual event, it could classify it as a usual event for this particular person. A prediction machine may notice other correlations, such as how long it typically takes someone to shop, which helps it work out whether transactions at two different shops are too close together in time. As the prediction machine becomes more precise in flagging transactions, the card network can become more confident in deciding whether to impose a restriction and even whether to contact a consumer. This is already happening. Joshua’s last outlet mall purchase of running shoes went smoothly.

But until prediction machines become perfect at predicting fraud, credit card companies will have to figure out the costs of errors, which requires judgment. Suppose that the machine predicts a transaction is fraudulent but that this prediction has a 10 percent chance of being incorrect. Then, if the company declines the transaction, it will do the right thing 90 percent of the time and save the network the cost of recovering the payment associated with the unauthorized transaction. But 10 percent of the time it will decline a legitimate transaction, leaving the network with a dissatisfied customer. To work out the right course of action, the company needs to balance the cost of recovering fraudulent payments against the cost of customer dissatisfaction. Credit card companies don’t automatically know the right answer to this trade-off. They need to figure it out. Judgment is the process of doing that.

It’s the umbrella case all over again, but instead of burdened/unburdened and wet/dry, the trade-off is between fraud costs and customer satisfaction. In this case, because the transaction is nine times likelier to be fraudulent than legitimate, the company will deny the charge unless the cost of customer dissatisfaction is more than nine times the possible loss.
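The rule can be spelled out by comparing the expected cost of each action. This is a sketch that assumes correct decisions cost nothing. The symbols are introduced here only for illustration: $p$ is the predicted probability of fraud, $R$ is the cost of recovering a fraudulent payment, and $D$ is the cost of a dissatisfied customer. Declining is the better choice when its expected cost is lower:

```latex
\[
\underbrace{(1-p)\,D}_{\text{expected cost of declining}}
\;<\;
\underbrace{p\,R}_{\text{expected cost of authorizing}}
\quad\Longleftrightarrow\quad
D \;<\; \frac{p}{1-p}\,R,
\qquad
p = 0.9 \;\Longrightarrow\; D < 9R .
\]
```

With $p = 0.9$, the odds $p/(1-p)$ equal 9, which gives the nine-to-one rule.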

For credit card fraud, many of these payoffs may be easy to judge. It is highly likely that the cost of recovery has a distinct monetary value that a network can identify. Suppose that for a $100 transaction, the recovery cost is $20. If the customer dissatisfaction cost is less than $180, it makes sense to decline the transaction (10 percent of $180 is $18, the same as 90 percent of $20). For many customers, being declined for a single transaction does not lead to the equivalent of $180 in dissatisfaction.
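The $100 example can be checked with a few lines of arithmetic. In this minimal sketch, the helper names `should_decline` and `breakeven_dissatisfaction` are hypothetical. It also assumes, as the example implicitly does, that correct decisions carry no cost.

```python
def should_decline(p_fraud: float, recovery_cost: float, dissatisfaction_cost: float) -> bool:
    """Return True if declining has the lower expected cost.

    Simplifying assumption: correct decisions cost nothing, so only the
    two kinds of mistake carry a cost.
    """
    expected_cost_decline = (1 - p_fraud) * dissatisfaction_cost  # declining a legitimate charge
    expected_cost_authorize = p_fraud * recovery_cost              # authorizing a fraudulent charge
    return expected_cost_decline < expected_cost_authorize


def breakeven_dissatisfaction(p_fraud: float, recovery_cost: float) -> float:
    """Dissatisfaction cost at which declining and authorizing cost the same."""
    return p_fraud / (1 - p_fraud) * recovery_cost


# The text's numbers: 90 percent chance of fraud, $20 recovery cost.
print(should_decline(0.9, 20, 100))          # True: $100 of dissatisfaction is below the threshold
print(should_decline(0.9, 20, 250))          # False: $250 is above it
print(breakeven_dissatisfaction(0.9, 20))    # about 180 (floating-point rounding aside)
```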

A credit card network also must assess whether that is likely to be the case for a particular customer. For example, a high-net-worth platinum cardholder may have other credit card options and might stop using that particular card if declined. And that person may be on an expensive vacation, so the card network could lose all of the expenditures associated with that trip.

Credit card fraud is a well-defined decision process, which is one reason we keep coming back to it, yet it’s still complicated. By contrast, for many other decisions, not only are the potential actions more complex (not just a simple accept or decline), but the potential situations (or states) also vary. Judgment requires an understanding of the reward for each pair of actions and situations. Our credit card example had just four outcomes (or eight if you distinguish between high-net-worth customers and everyone else). But if you had, say, ten actions and twenty possible situations, then you’re judging two hundred outcomes. As things get even more complicated, the number of rewards can become overwhelming.
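The growth in outcomes is simply the product of the number of actions and the number of situations. This short sketch enumerates the pairs. The action and situation labels are placeholders chosen for illustration.

```python
from itertools import product

def outcome_grid(actions, situations):
    """Every (action, situation) pair whose reward someone must judge."""
    return list(product(actions, situations))

card_actions = ["authorize", "decline"]
card_situations = ["fraudulent", "legitimate"]
print(len(outcome_grid(card_actions, card_situations)))  # 4

# Distinguishing high-net-worth customers doubles the situations.
segmented = list(product(card_situations, ["high-net-worth", "everyone else"]))
print(len(outcome_grid(card_actions, segmented)))  # 8

actions = [f"action_{i}" for i in range(10)]
situations = [f"situation_{j}" for j in range(20)]
print(len(outcome_grid(actions, situations)))  # 200
```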

The Cognitive Costs of Judgment

People who have studied decisions in the past have generally taken rewards as givens—they simply exist. You may like chocolate ice cream, while your friend may like mango gelato. How you two came to your different views is of little consequence. Similarly, we assume most businesses are maximizing profit or shareholder value. Economists looking at why firms choose certain prices for their products have found it useful to take those objectives on faith.

In practice, payoffs are rarely obvious, and the process of understanding them can be time-consuming and costly. Yet the rise of prediction machines increases the returns to understanding the logic and motivation behind payoff values.

In economic terms, the cost of figuring out the payoffs will mostly be time. Consider one particular pathway by which you might determine payoffs: deliberation and thought. Thinking through what you really want to achieve, or what the costs of customer dissatisfaction might be, takes time to reflect and perhaps to ask others for advice. Or it may take time spent on research to better understand payoffs.

For credit card fraud detection, thinking through the payoffs of satisfied and unsatisfied customers and the cost of allowing a fraudulent transaction to proceed are necessary first steps. Providing different payoffs for high-net-worth customers requires more thought. Assessing whether those payoffs change when those customers are on vacation requires even more consideration. And what about regular customers when they are on vacation? Are the payoffs in that situation different? And is it worth separating work travel from vacation? Or trips to Rome from trips to the Grand Canyon?

In each case, judging the payoffs requires time and effort: more outcomes mean more judgment, which means more time and effort. Humans experience the cognitive costs of judgment as a slower decision-making process. We all have to weigh the benefit of pinning down the payoffs against the cost of delaying a decision. Some will choose not to investigate payoffs for scenarios that seem remote or unlikely. The credit card network might find it worthwhile to separate work trips from vacations but not to separate vacations to Rome from vacations to the Grand Canyon.

In such unlikely situations, the card network may guess at the right decision, group things together, or just choose a safer default. But for more frequent decisions (such as travel in general) or ones that appear more important (such as high-net-worth customers), many will take the time to deliberate and identify the payoffs more carefully. The trade-off is that the longer it takes to work out the payoffs, the longer it will be before your decision-making performs as well as it could.
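One way to picture this rationing of judgment is a payoff lookup. It keeps detailed entries only for the situations judged worth the effort. Otherwise it falls back to a group-level value or a single default. In this sketch, the segments, trip types, and dollar figures are all hypothetical, and `dissatisfaction_cost` is an illustrative helper rather than any card network's system.

```python
# Hypothetical judged costs of declining a legitimate charge, in dollars.
# Only combinations considered worth the deliberation get their own entry.
DETAILED_COSTS = {
    ("high-net-worth", "vacation"): 500.0,
    ("high-net-worth", "work"): 300.0,
    ("regular", "vacation"): 120.0,
}
# Coarser judgments used when no detailed entry exists.
GROUP_COSTS = {"high-net-worth": 300.0, "regular": 60.0}
FALLBACK_COST = 100.0  # default for segments nobody has judged at all


def dissatisfaction_cost(segment, trip_type, destination=None):
    """Look up the judged cost, falling back from detailed to group to default.

    `destination` is accepted but deliberately ignored: in this sketch the
    network judged it not worth separating Rome from the Grand Canyon.
    """
    if (segment, trip_type) in DETAILED_COSTS:
        return DETAILED_COSTS[(segment, trip_type)]
    return GROUP_COSTS.get(segment, FALLBACK_COST)


print(dissatisfaction_cost("regular", "vacation", "Rome"))  # 120.0
print(dissatisfaction_cost("regular", "work"))              # 60.0 (group value)
print(dissatisfaction_cost("student", "vacation"))          # 100.0 (default)
```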

Figuring out payoffs might also be more like tasting new foods: try something and see what happens. Or, rather, in the vernacular of modern business: experiment. Individuals might take different actions in the same circumstances and learn what the reward actually is. They learn the payoffs instead of cogitating on them beforehand. Of course, because experimentation necessarily means making what you will later regard as mistakes, experiments also have costs. You will try foods you don’t like. If you keep trying new foods in the hope of finding some ideal, you are missing out on a lot of good meals. Judgment, whether by deliberation or experimentation, is costly.
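The food analogy can be made concrete with a standard formalization of learning payoffs by trial, the epsilon-greedy multi-armed bandit. This is our illustrative choice, not a method from the text. Most of the time the diner orders the dish with the best estimated payoff. With a small probability it experiments with a random dish. The enjoyment probabilities, the 10 percent exploration rate, and the function name are all hypothetical.

```python
import random

def learn_by_tasting(true_enjoyment, rounds=5000, explore=0.1, seed=0):
    """Epsilon-greedy learning of payoffs through experimentation.

    true_enjoyment[i] is the probability (unknown to the diner) that dish i
    is enjoyed; each meal reveals only 1 (enjoyed) or 0 (not enjoyed).
    """
    rng = random.Random(seed)
    n = len(true_enjoyment)
    counts = [0] * n
    estimates = [0.0] * n
    for _ in range(rounds):
        if rng.random() < explore:
            dish = rng.randrange(n)                            # experiment
        else:
            dish = max(range(n), key=lambda i: estimates[i])   # stick with the best so far
        reward = 1.0 if rng.random() < true_enjoyment[dish] else 0.0
        counts[dish] += 1
        estimates[dish] += (reward - estimates[dish]) / counts[dish]  # running average
    return counts, estimates


counts, estimates = learn_by_tasting([0.2, 0.5, 0.8])
missed_meals = sum(counts) - counts[2]  # meals spent on dishes other than the best one
```

The count `missed_meals` is the cost of experimentation the paragraph describes. Raising `explore` learns about the other dishes faster but sacrifices more good meals.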

Knowing Why You Are Doing Something

Prediction is at the heart of a task central to both self-driving cars and platforms such as Uber and Lyft: choosing a route between origin and destination. Navigation devices have been around for a few decades, either built into cars or sold as stand-alone units. But the proliferation of internet-connected mobile devices has changed the data that providers of navigation software receive. For instance, before Google acquired it, the Israeli startup Waze generated accurate traffic maps by tracking the routes drivers chose. It then used that information to find the quickest path between two points, taking into account the information it had from drivers as well as continual monitoring of traffic. It could also forecast how traffic conditions might evolve if you were traveling farther and could offer new, more efficient paths en route if conditions changed.
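Finding the quickest path over predicted travel times is a classic shortest-path problem. Here is a minimal sketch using Dijkstra's algorithm. It is not Waze's actual method, which the text does not describe. The road network and minute values are hypothetical, and the second call shows a reroute after a traffic prediction changes.

```python
import heapq

def quickest_path(graph, start, goal):
    """Dijkstra's algorithm over predicted travel times (minutes).

    graph maps each node to {neighbor: predicted_minutes}.
    Returns (total_minutes, path), or (inf, []) if the goal is unreachable.
    """
    queue = [(0.0, start, [start])]
    best = {start: 0.0}
    while queue:
        time, node, path = heapq.heappop(queue)
        if node == goal:
            return time, path
        if time > best.get(node, float("inf")):
            continue  # stale entry: a faster way to this node was already found
        for neighbor, minutes in graph.get(node, {}).items():
            new_time = time + minutes
            if new_time < best.get(neighbor, float("inf")):
                best[neighbor] = new_time
                heapq.heappush(queue, (new_time, neighbor, path + [neighbor]))
    return float("inf"), []


roads = {
    "home": {"highway": 10, "back_road": 4},
    "highway": {"office": 8},
    "back_road": {"office": 12},
}
print(quickest_path(roads, "home", "office"))    # (16.0, ['home', 'back_road', 'office'])

# A new traffic prediction slows the back road; the best route changes.
updated = {**roads, "back_road": {"office": 20}}
print(quickest_path(updated, "home", "office"))  # (18.0, ['home', 'highway', 'office'])
```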

Users of apps like Waze don’t always follow the directions. They don’t disagree with the prediction per se, but their ultimate objective might include more elements than just speed. For instance, the app doesn’t know if someone is running out of fuel and needs a gas station. But human drivers, knowing that they need gas, can overrule the app’s suggestion and take another route.

Of course, apps like Waze can and will get better. For instance, in Tesla cars, which run on electricity, navigation takes into account the need to recharge and the location of charging stations. An app could simply ask you whether you are likely to need fuel or, in the future, even get that data directly from your car. This seems like a solvable problem, just as you can tweak the settings on navigation apps to avoid toll roads.

Other aspects of your preferences are harder to program. For instance, on a long drive, you might want to make sure you pass certain good areas to stop and eat. Or the fastest route might tax the driver by suggesting back roads that only save a minute or two but require a lot of effort. Or you may not enjoy taking winding roads. Again, apps might learn those behaviors, but at any given time, some factors will necessarily be left out of the codified prediction used to automate an action. There are fundamental limits to how well a machine can learn to predict your preferences.

The broader point for decisions is that objectives rarely have only a single dimension. Humans have, explicitly and implicitly, their own knowledge of why they are doing something, which lets them weigh the different dimensions in ways that are both idiosyncratic and subjective.
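A multidimensional objective can be written as a weighted cost, where the weights are the human's judgment. In this sketch the routes, their attributes (minutes, an effort score, the number of good food stops), and the weights are all hypothetical. The same predictions lead different drivers to different choices.

```python
# Hypothetical candidate routes scored on several dimensions.
routes = {
    "back roads": {"minutes": 58, "effort": 8, "good_food_stops": 0},
    "highway":    {"minutes": 60, "effort": 3, "good_food_stops": 1},
    "scenic":     {"minutes": 75, "effort": 4, "good_food_stops": 3},
}

def route_cost(attrs, weights):
    """Weighted cost (lower is better); food stops count as a benefit."""
    return (weights["minutes"] * attrs["minutes"]
            + weights["effort"] * attrs["effort"]
            - weights["good_food_stops"] * attrs["good_food_stops"])

def choose_route(routes, weights):
    return min(routes, key=lambda name: route_cost(routes[name], weights))


speed_only = {"minutes": 1, "effort": 0, "good_food_stops": 0}
avoid_effort = {"minutes": 1, "effort": 2, "good_food_stops": 0}
wants_food = {"minutes": 1, "effort": 1, "good_food_stops": 10}

print(choose_route(routes, speed_only))    # back roads
print(choose_route(routes, avoid_effort))  # highway
print(choose_route(routes, wants_food))    # scenic
```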

While a machine predicts what is likely to happen, humans will still decide what action to take based on their understanding of the objective. In many situations, as with Waze, the machine will give the human a prediction that implies a certain outcome for one dimension (like speed); the human will then decide whether to overrule the suggested action. Depending on the sophistication of the prediction machine, the human may ask it for another prediction based on a new constraint (“Waze, take me past a gas station”).
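A request such as “take me past a gas station” can be treated as a constraint the human adds on top of the machine's time predictions. In this sketch, the candidate routes, their attributes, and the `fastest` helper are hypothetical. It is not Waze's interface.

```python
# Hypothetical candidate routes with predicted travel times.
candidate_routes = [
    {"name": "expressway", "minutes": 42, "passes_gas_station": False},
    {"name": "main street", "minutes": 47, "passes_gas_station": True},
    {"name": "ring road", "minutes": 51, "passes_gas_station": True},
]

def fastest(routes, must=None):
    """Fastest route, optionally restricted by a human-supplied constraint.

    Returns the route name, or None if no route satisfies the constraint.
    """
    allowed = [r for r in routes if must is None or must(r)]
    if not allowed:
        return None
    return min(allowed, key=lambda r: r["minutes"])["name"]


print(fastest(candidate_routes))                                         # expressway
print(fastest(candidate_routes, must=lambda r: r["passes_gas_station"]))  # main street
```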

Hard-Coding Judgment

Ada Support, a startup, is using AI prediction to separate easy technical support questions from difficult ones. The AI answers the easy questions and sends the difficult ones to a human. For a typical mobile phone service provider, the vast majority of the questions consumers ask when they call for support have already been asked by other people. Typing the answer is easy. The challenges are in predicting what the consumer wants and judging which answer to provide.

Rather than directing people to a “frequently asked questions” area of a web page, Ada identifies and answers these frequent questions right away. It can match a consumer’s individual characteristics (such as their known level of technical competence, the type of phone they are calling from, or past calls) to improve its assessment of the question. In the process, it can reduce frustration, but more importantly, it can handle more interactions quickly without the need to spend money on costlier human call-center operators. The humans specialize in the unusual and more difficult questions, while the machine handles the easy ones.

As machine prediction improves, it will be increasingly worthwhile to prespecify judgment in many situations. Just as we explain our thinking to other people, we can explain our thinking to machines, in the form of software code. When we anticipate receiving a precise prediction, we can hard-code the judgment before the machine predicts. Ada does this for easy questions. For hard questions, there are too many possible situations to specify in advance what to do in each one, so Ada calls in humans for their judgment.
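The split between hard-coded and human judgment can be sketched as a triage rule. An answer is given automatically only when the predicted question type has a prewritten answer and the prediction is confident enough. This is not Ada's actual system. The question labels, the 0.9 confidence threshold, and the placeholder answers are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Prediction:
    question_type: str   # what the model thinks the customer is asking
    confidence: float    # model's probability for that label

# Judgment written down in advance: which answer to give for each easy question.
# (Placeholder text; a real deployment would store vetted answers.)
CANNED_ANSWERS = {
    "check_data_balance": "<stored answer about checking remaining data>",
    "reset_voicemail_pin": "<stored answer about resetting a voicemail PIN>",
}

def handle(prediction, min_confidence=0.9):
    """Answer automatically only when the prediction is precise enough and a
    hard-coded answer exists; otherwise escalate for human judgment."""
    if (prediction.confidence >= min_confidence
            and prediction.question_type in CANNED_ANSWERS):
        return ("bot", CANNED_ANSWERS[prediction.question_type])
    return ("human", None)


print(handle(Prediction("check_data_balance", 0.97))[0])  # bot
print(handle(Prediction("check_data_balance", 0.60))[0])  # human: prediction too uncertain
print(handle(Prediction("billing_dispute", 0.99))[0])     # human: no prewritten judgment
```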

Experience can sometimes make judgment codifiable. But much experience is intangible and so cannot be written down or expressed easily. As Andrew McAfee and Erik Brynjolfsson wrote: “[S]ubstitution (of computers for people) is bounded because there are many tasks that people understand tacitly and accomplish effortlessly but for which neither computer programmers nor anyone else can enunciate the explicit ‘rules’ or procedures.”1 That, however, is not true of all tasks. For some decisions, you can articulate the requisite judgment and express it as code. In effect, codifiable judgment allows you to fill in the part after “then” in “if-then” statements. When this happens, judgment can be enshrined and programmed.

The challenge is that, even when you can program judgment to take over from a human, the prediction the machine receives must be fairly precise. When there are too many possible situations, it is too time-consuming to specify what to do in each situation in advance. You can easily program a machine to take a certain action when it is clear what is likely to be true; however, when there is still uncertainty, telling the machine what to do requires a more careful weighing of the costs of mistakes. Uncertainty means you need to judge the payoffs for when the prediction turns out to be wrong, not just for when it is right. In other words, uncertainty increases the cost of judging the payoffs for a given decision.

Credit card networks have embraced new machine-learning techniques for fraud detection. Prediction machines enable them to be more confident in codifying the decision about whether to block a card transaction. As the predictions on fraud become more precise, the likelihood of mislabeling legitimate transactions as fraudulent is reduced. If the credit card companies are not afraid of making a mistake on the prediction, then they can codify the machine’s decision, with no need to judge how costly it might be to offend particular customers by declining their transaction. Making the decision is easier: if fraud, then reject; otherwise, accept the transaction.
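The contrast between deciding under uncertainty and acting on a near-certain prediction can be seen side by side. In this sketch, the cost figures and function names are hypothetical, and correct decisions are assumed to cost nothing. When the fraud probability is close to 0 or 1, the expected-cost rule gives the same answer as the hard-coded if-then rule. At intermediate probabilities, the answer depends on the judged costs.

```python
def decide_with_judgment(p_fraud, recovery_cost, dissatisfaction_cost):
    """Under uncertainty: weigh the expected cost of both kinds of mistake."""
    if p_fraud * recovery_cost > (1 - p_fraud) * dissatisfaction_cost:
        return "decline"
    return "authorize"

def decide_hard_coded(is_fraud):
    """With a near-certain prediction: if fraud, then reject; otherwise accept."""
    return "decline" if is_fraud else "authorize"


# Precise predictions: both rules agree, so the costs barely matter.
print(decide_with_judgment(0.999, 20, 180), decide_hard_coded(True))   # decline decline
print(decide_with_judgment(0.001, 20, 180), decide_hard_coded(False))  # authorize authorize

# An uncertain prediction: the answer flips with the judged cost of an upset customer.
print(decide_with_judgment(0.6, 20, 180))  # authorize
print(decide_with_judgment(0.6, 20, 10))   # decline
```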

Reward Function Engineering

As prediction machines provide better and cheaper predictions, we need to work out how to best use those predictions. Whether or not we can specify judgment in advance, someone needs to determine the judgment. Enter reward function engineering, the job of determining the rewards to various actions, given the predictions that the AI makes. Doing this job well requires an understanding of the organization’s needs and the machine’s capabilities.
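In its simplest form, reward function engineering can be pictured as two separate inputs. One is a table of judged rewards for each action-situation pair. The other is the machine's predicted probabilities for each situation. The chosen action maximizes expected reward. This sketch uses the umbrella decision. The reward values are hypothetical stand-ins for the burdened/unburdened and wet/dry payoffs.

```python
def best_action(rewards, state_probs):
    """Pick the action with the highest expected reward.

    rewards[(action, state)] is the judged payoff (the reward function);
    state_probs[state] is the prediction machine's probability for each state.
    """
    actions = {action for action, _ in rewards}

    def expected(action):
        return sum(p * rewards[(action, state)] for state, p in state_probs.items())

    return max(sorted(actions), key=expected)  # sorted() keeps tie-breaking deterministic


# Hypothetical judged rewards: carrying an umbrella is a burden; getting wet is worse.
umbrella_rewards = {
    ("take umbrella", "rain"): -1, ("take umbrella", "dry"): -1,
    ("leave it", "rain"): -10,     ("leave it", "dry"): 0,
}
print(best_action(umbrella_rewards, {"rain": 0.3, "dry": 0.7}))    # take umbrella
print(best_action(umbrella_rewards, {"rain": 0.05, "dry": 0.95}))  # leave it
```

Keeping the reward table separate from the predictions means that a better forecast can be plugged in without rejudging the payoffs.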

Sometimes reward function engineering involves hard-coding judgment: programming the rewards in advance of the predictions in order to automate actions. Self-driving vehicles are an example of such hard-coded rewards. Once the prediction is made, the action is instant. But getting the reward right isn’t trivial. Reward function engineering has to consider the possibility that the AI will over-optimize on one metric of success and, in doing so, act inconsistently with the organization’s broader goals. Entire committees are working on this for self-driving cars, and similar analysis will be required for a variety of new decisions.

In other cases, the number of possible predictions may make it too costly for anyone to judge all the possible payoffs in advance. Instead, a human needs to wait for the prediction to arrive and then assess the payoff, which is close to how most decision-making currently works, whether or not it includes machine-generated predictions. As we will see in the following chapter, machines are encroaching on this as well. A prediction machine can, in some circumstances, learn to predict human judgment by observing past decisions.

Putting It All Together

Most of us already do some reward function engineering, but for humans, not machines. Parents teach their children values. Mentors teach new workers how the system operates. Managers give objectives to their staff and then tweak them to get better performance. Every day, we make decisions and judge the rewards. But when we do this for humans, we group prediction and judgment together, and the role of reward function engineering is not distinct. As machines get better at prediction, the role of reward function engineering will become increasingly important.

To illustrate reward function engineering in practice, consider pricing decisions at ZipRecruiter, an online job board. Companies pay ZipRecruiter to find qualified candidates for job openings they wish to fill. The core product of ZipRecruiter is a matching algorithm that does this efficiently and at scale, a version of the traditional headhunter that matches job seekers to companies.2

ZipRecruiter wasn’t sure what it should charge companies for its service. Charge too little, and it leaves money on the table. Charge too much, and customers switch to the company’s competitors. To figure out its pricing, ZipRecruiter brought in two experts, J. P. Dubé and Sanjog Misra, economists from the University of Chicago’s Booth School of Business, who designed experiments to determine the best prices. They randomly assigned different prices to different customer leads and measured how likely each group was to purchase. This allowed them to determine how different customers responded to different price points.
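The logic of such an experiment can be sketched in two steps. First, each lead is randomly assigned a price, so the groups are comparable. Second, the conversion rate and revenue per lead are computed for each price. The prices and purchase counts here are invented for illustration. They are not ZipRecruiter's data, and `assign_prices` and `conversion_and_revenue` are hypothetical helpers.

```python
import random

def assign_prices(lead_ids, prices, seed=0):
    """Randomly assign one test price to each lead."""
    rng = random.Random(seed)
    return {lead: rng.choice(prices) for lead in lead_ids}

# Hypothetical results: price -> (leads shown that price, leads who purchased)
experiment = {
    99:  (1000, 300),
    149: (1000, 240),
    249: (1000, 150),
    399: (1000, 60),
}

def conversion_and_revenue(results):
    """Map each price to (purchase rate, expected revenue per lead)."""
    summary = {}
    for price, (leads, purchases) in results.items():
        rate = purchases / leads
        summary[price] = (rate, price * rate)
    return summary


summary = conversion_and_revenue(experiment)
best_short_run = max(summary, key=lambda price: summary[price][1])
print(best_short_run)  # 249 with these made-up numbers
```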

The challenge was to figure out what “best” meant. Should the company just maximize short-term revenue? To do so, it might choose a high price. But a high price means fewer customers (even though each customer is more profitable). That would also mean less word of mouth. In addition, if it has fewer job postings, the number of people who use ZipRecruiter to find jobs might fall. Finally, the customers facing high prices might start looking for alternatives. While they might pay the high price in the short run, they might switch to a competitor in the long run. How should ZipRecruiter weigh these various considerations? What payoff should it maximize?
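How the payoff is defined can change which price looks best. In this sketch, short-run revenue per lead is compared with revenue over twelve months. The longer view assumes a constant monthly retention rate that falls as price rises. All conversion and retention figures are hypothetical. The sketch also leaves out word of mouth and the job-seeker side of the market.

```python
# Hypothetical inputs: purchase rate per lead and monthly retention at each price.
options = {
    99:  {"conversion": 0.30, "retention": 0.92},
    149: {"conversion": 0.24, "retention": 0.90},
    249: {"conversion": 0.15, "retention": 0.70},
}

def short_run_payoff(price, o):
    """First month's revenue per lead."""
    return price * o["conversion"]

def long_run_payoff(price, o, months=12):
    """Revenue per lead over `months`, assuming constant monthly retention."""
    return price * o["conversion"] * sum(o["retention"] ** t for t in range(months))

def best_price(options, payoff):
    return max(options, key=lambda p: payoff(p, options[p]))


print(best_price(options, short_run_payoff))  # 249
print(best_price(options, long_run_payoff))   # 149
```

The experiment supplies the predictions, and the choice of which payoff function to maximize is the judgment.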

It was relatively easy to measure the short-run consequences of a price increase. The experts found that increasing prices for some types of new customers would increase profits on a day-to-day basis by over 50 percent. However, ZipRecruiter didn’t act right away. It recognized the longer-term risk and waited to see if the higher-paying customers would leave. After four months, it found that the price increase was still highly profitable. It didn’t want to forgo the higher profits any longer and judged four months to be long enough to implement the price changes.

Figuring out the rewards from these various actions—the key piece of judgment—is reward function engineering, a fundamental part of what humans do in the decision-making process. Prediction machines are a tool for humans. So long as humans are needed to weigh outcomes and impose judgment, they have a key role to play as prediction machines improve.

KEY POINTS

- Prediction machines increase the returns to judgment because, by lowering the cost of prediction, they increase the value of understanding the rewards associated with actions. However, judgment is costly. Figuring out the relative payoffs for different actions in different situations takes time, effort, and experimentation.

- Many decisions occur under conditions of uncertainty. We decide to bring an umbrella because we think it might rain, but we could be wrong. We decide to authorize a transaction because we think it is legitimate, but we could be wrong. Under conditions of uncertainty, we need to determine the payoff for acting on wrong predictions, not just right ones. So, uncertainty increases the cost of judging the payoffs for a given decision.

- If there are a manageable number of action-situation combinations associated with a decision, then we can transfer the judgment from ourselves to the prediction machine (this is “reward function engineering”) so that the machine can make the decision itself once it generates the prediction. This enables automating the decision. Often, however, there are too many action-situation combinations, such that it is too costly to code up in advance all the payoffs associated with each combination, especially the very rare ones. In these cases, it is more efficient for a human to apply judgment after the prediction machine predicts.