Chapter 4. Transports

This chapter covers

- OIO—blocking transport

- NIO—asynchronous transport

- Local transport—asynchronous communications within a JVM

- Embedded transport—testing your ChannelHandlers

The data that flows through a network always has the same type: bytes. How these bytes are moved around depends mostly on what we refer to as the network transport, a concept that helps us to abstract away the underlying mechanics of data transfer. Users don’t care about the details; they just want to be certain that their bytes are reliably sent and received.

If you have experience with network programming in Java, you may have discovered at some point that you needed to support a great many more concurrent connections than expected. If you then tried to switch from a blocking to a non-blocking transport, you might have encountered problems because the two network APIs are quite different.

Netty, however, layers a common API over all its transport implementations, making such a conversion far simpler than you can achieve using the JDK directly. The resulting code will be uncontaminated by implementation details, and you won’t need to perform extensive refactoring of your entire code base. In short, you can spend your time doing something productive.

In this chapter, we’ll study this common API, contrasting it with the JDK to demonstrate its far greater ease of use. We’ll explain the transport implementations that come bundled with Netty and the use cases appropriate to each. With this information in hand, you should find it straightforward to choose the best option for your application.

The only prerequisite for this chapter is knowledge of the Java programming language. Experience with network frameworks or network programming is a plus, but not a requirement.

We’ll start by seeing how transports work in a real-world situation.

4.1. Case study: transport migration

We’ll begin our study of transports with an application that simply accepts a connection, writes “Hi!” to the client, and closes the connection.

4.1.1. Using OIO and NIO without Netty

We’ll present blocking (OIO) and asynchronous (NIO) versions of the application that use only the JDK APIs. The next listing shows the blocking implementation. If you’ve ever experienced the joy of network programming with the JDK, this code will evoke pleasant memories.

Listing 4.1. Blocking networking without Netty
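
A minimal sketch of such a blocking server, using only java.net, might look like the following. The class name PlainOioServer, the helper fetchGreeting(), and the thread-per-connection layout are assumptions made for illustration.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;
import java.nio.charset.StandardCharsets;

// Hypothetical sketch: blocking java.net server, one thread per connection.
class PlainOioServer {
    private final ServerSocket serverSocket;

    PlainOioServer(int port) {
        try {
            serverSocket = new ServerSocket(port); // port 0 lets the OS pick a free port
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    int port() {
        return serverSocket.getLocalPort();
    }

    // Runs the accept loop on a background daemon thread.
    void start() {
        Thread acceptor = new Thread(() -> {
            while (!serverSocket.isClosed()) {
                try {
                    Socket client = serverSocket.accept();   // blocks until a client connects
                    new Thread(() -> greet(client)).start(); // a new thread handles each client
                } catch (IOException e) {
                    return; // the server socket was closed, so leave the loop
                }
            }
        });
        acceptor.setDaemon(true);
        acceptor.start();
    }

    // Writes the greeting and closes the connection.
    private static void greet(Socket client) {
        try (Socket c = client; OutputStream out = c.getOutputStream()) {
            out.write("Hi!".getBytes(StandardCharsets.UTF_8));
            out.flush();
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    void stop() {
        try {
            serverSocket.close();
        } catch (IOException ignored) {
            // nothing useful to do while shutting down
        }
    }

    // Test helper (assumption): connects once and returns everything the server sent.
    static String fetchGreeting(int port) {
        try (Socket s = new Socket(InetAddress.getLoopbackAddress(), port)) {
            return new String(s.getInputStream().readAllBytes(), StandardCharsets.UTF_8);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        PlainOioServer server = new PlainOioServer(0);
        server.start();
        System.out.println(fetchGreeting(server.port()));
        server.stop();
    }
}
```

Every accepted connection occupies its own thread for as long as it lives, which is the property that limits scalability.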

This code handles a moderate number of simultaneous clients adequately. But as the application becomes popular, you notice that it isn’t scaling very well to tens of thousands of concurrent incoming connections. You decide to convert to asynchronous networking, but soon discover that the asynchronous API is completely different, so now you have to rewrite your application.

The non-blocking version is shown in the following listing.

Listing 4.2. Asynchronous networking without Netty
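
A rough sketch of the same behavior on top of java.nio could look like this. The class name PlainNioServer and the helper fetchGreeting() are hypothetical, and error handling is kept to a minimum.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.nio.ByteBuffer;
import java.nio.channels.ClosedSelectorException;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;
import java.nio.charset.StandardCharsets;
import java.util.Iterator;

// Hypothetical sketch: a single thread serves all connections through a Selector.
class PlainNioServer {
    private final Selector selector;
    private final ServerSocketChannel serverChannel;

    PlainNioServer(int port) {
        try {
            selector = Selector.open();
            serverChannel = ServerSocketChannel.open();
            serverChannel.configureBlocking(false);
            serverChannel.bind(new InetSocketAddress(port)); // port 0 picks a free port
            serverChannel.register(selector, SelectionKey.OP_ACCEPT);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    int port() {
        return serverChannel.socket().getLocalPort();
    }

    void start() {
        Thread loop = new Thread(this::run);
        loop.setDaemon(true);
        loop.start();
    }

    private void run() {
        try {
            while (selector.isOpen()) {
                selector.select(); // blocks until at least one registered event fires
                if (!selector.isOpen()) {
                    break;
                }
                Iterator<SelectionKey> keys = selector.selectedKeys().iterator();
                while (keys.hasNext()) {
                    SelectionKey key = keys.next();
                    keys.remove(); // mark the event as handled
                    handle(key);
                }
            }
        } catch (IOException | ClosedSelectorException e) {
            // selector closed or failed: end the loop
        }
    }

    private void handle(SelectionKey key) {
        try {
            if (key.isAcceptable()) {
                SocketChannel client = serverChannel.accept();
                if (client == null) {
                    return;
                }
                client.configureBlocking(false);
                // Attach the pending message; it is written once the channel is writable.
                client.register(selector, SelectionKey.OP_WRITE,
                        ByteBuffer.wrap("Hi!".getBytes(StandardCharsets.UTF_8)));
            } else if (key.isWritable()) {
                SocketChannel client = (SocketChannel) key.channel();
                ByteBuffer buffer = (ByteBuffer) key.attachment();
                client.write(buffer);
                if (!buffer.hasRemaining()) {
                    client.close(); // closing the channel also cancels its key
                }
            }
        } catch (IOException e) {
            key.cancel();
            try {
                key.channel().close();
            } catch (IOException ignored) {
                // already failing; nothing more to do
            }
        }
    }

    void stop() {
        try {
            selector.close();
            serverChannel.close();
        } catch (IOException ignored) {
            // nothing useful to do while shutting down
        }
    }

    // Test helper (assumption): a plain blocking client that reads the full reply.
    static String fetchGreeting(int port) {
        try (Socket s = new Socket(InetAddress.getLoopbackAddress(), port)) {
            return new String(s.getInputStream().readAllBytes(), StandardCharsets.UTF_8);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        PlainNioServer server = new PlainNioServer(0);
        server.start();
        System.out.println(fetchGreeting(server.port()));
        server.stop();
    }
}
```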

As you can see, although this code does the very same thing as the preceding version, it is quite different. If reimplementing this simple application for non-blocking I/O requires a complete rewrite, consider the level of effort that would be required to port something truly complex.

With this in mind, let’s see how the application looks when implemented using Netty.

4.1.2. Using OIO and NIO with Netty

We’ll start by writing another blocking version of the application, this time using the Netty framework, as shown in the following listing.

Listing 4.3. Blocking networking with Netty

Next we’ll implement the same logic with non-blocking I/O using Netty.

4.1.3. Non-blocking Netty version

The next listing is virtually identical to listing 4.3 except for the two highlighted lines. This is all that’s required to switch from blocking (OIO) to non-blocking (NIO) transport.

Listing 4.4. Asynchronous networking with Netty

Because Netty exposes the same API for every transport implementation, whichever you choose, your code remains virtually unaffected. In all cases the implementation is defined in terms of the interfaces Channel, ChannelPipeline, and ChannelHandler.

Having seen some of the benefits of using Netty-based transports, let’s take a closer look at the transport API itself.

4.2. Transport API

At the heart of the transport API is interface Channel, which is used for all I/O operations. The Channel class hierarchy is shown in figure 4.1.

Figure 4.1. Channel interface hierarchy

The figure shows that a Channel has a ChannelPipeline and a ChannelConfig assigned to it. The ChannelConfig holds all of the configuration settings for the Channel and supports hot changes. Because a specific transport may have unique settings, it may implement a subtype of ChannelConfig. (Please refer to the Javadocs for the ChannelConfig implementations.)

Since Channels are unique, declaring Channel as a subinterface of java.lang.Comparable is intended to guarantee ordering. Thus, the implementation of compareTo() in AbstractChannel throws an Error if two distinct Channel instances return the same hash code.

The ChannelPipeline holds all of the ChannelHandler instances that will be applied to inbound and outbound data and events. These ChannelHandlers implement the application’s logic for handling state changes and for data processing.

Typical uses for ChannelHandlers include:

- Transforming data from one format to another

- Providing notification of exceptions

- Providing notification of a Channel becoming active or inactive

- Providing notification when a Channel is registered with or deregistered from an EventLoop

- Providing notification about user-defined events

Intercepting filter

The ChannelPipeline implements a common design pattern, Intercepting Filter. UNIX pipes are another familiar example: commands are chained together, with the output of one command connecting to the input of the next in line.
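
To make the pattern concrete, here is a minimal plain-Java sketch of a filter chain. It is not Netty's API: the class FilterChain and its methods are hypothetical and only show how each stage receives the output of the previous one.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.UnaryOperator;

// Hypothetical illustration of Intercepting Filter; unrelated to Netty's real classes.
class FilterChain {
    private final List<UnaryOperator<String>> filters = new ArrayList<>();

    // Appends a filter to the end of the chain and returns the chain for fluent use.
    FilterChain addLast(UnaryOperator<String> filter) {
        filters.add(filter);
        return this;
    }

    // Passes the input through every filter in order.
    String process(String input) {
        String value = input;
        for (UnaryOperator<String> filter : filters) {
            value = filter.apply(value);
        }
        return value;
    }

    public static void main(String[] args) {
        FilterChain chain = new FilterChain()
                .addLast(String::trim)
                .addLast(String::toUpperCase);
        System.out.println(chain.process("  hi! "));
    }
}
```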

You can also modify a ChannelPipeline on the fly by adding or removing ChannelHandler instances as needed. This capability of Netty can be exploited to build highly flexible applications. For example, you could support the STARTTLS[1] protocol on demand simply by adding an appropriate ChannelHandler (SslHandler) to the ChannelPipeline whenever the protocol is requested.

[1] See STARTTLS, http://en.wikipedia.org/wiki/STARTTLS.

In addition to accessing the assigned ChannelPipeline and ChannelConfig, you can make use of Channel methods, the most important of which are listed in table 4.1.

Table 4.1. Channel methods

| Method name | Description |
|---|---|
| eventLoop | Returns the EventLoop that is assigned to the Channel. |
| pipeline | Returns the ChannelPipeline that is assigned to the Channel. |
| isActive | Returns true if the Channel is active. The meaning of active may depend on the underlying transport. For example, a Socket transport is active once connected to the remote peer, whereas a Datagram transport would be active once it’s open. |
| localAddress | Returns the local SocketAddress. |
| remoteAddress | Returns the remote SocketAddress. |
| write | Writes data to the remote peer. This data is passed to the ChannelPipeline and queued until it’s flushed. |
| flush | Flushes the previously written data to the underlying transport, such as a Socket. |
| writeAndFlush | A convenience method for calling write() followed by flush(). |

Later on we’ll discuss the uses of all these features in detail. For now, keep in mind that the broad range of functionality offered by Netty relies on a small number of interfaces. This means that you can make significant modifications to application logic without wholesale refactoring of your code base.

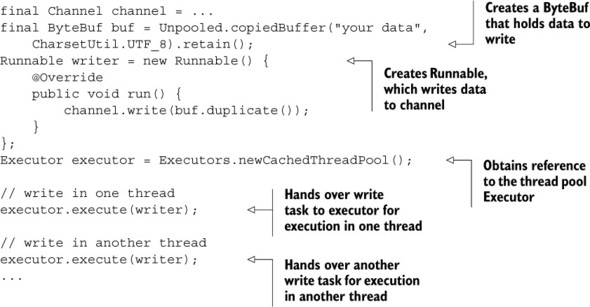

Consider the common task of writing data and flushing it to the remote peer. The following listing illustrates the use of Channel.writeAndFlush() for this purpose.

Listing 4.5. Writing to a Channel

Netty’s Channel implementations are thread-safe, so you can store a reference to a Channel and use it whenever you need to write something to the remote peer, even when many threads are in use. The following listing shows a simple example of writing with multiple threads. Note that the messages are guaranteed to be sent in order.

Listing 4.6. Using a Channel from many threads

4.3. Included transports

Netty comes bundled with several transports that are ready for use. Because not all of them support every protocol, you have to select a transport that is compatible with the protocols employed by your application. In this section we’ll discuss these relationships.

Table 4.2 lists all of the transports provided by Netty.

Table 4.2. Netty-provided transports

| Name | Package | Description |
|---|---|---|
| NIO | io.netty.channel.socket.nio | Uses the java.nio.channels package as a foundation (a selector-based approach). |
| Epoll | io.netty.channel.epoll | Uses JNI for epoll() and non-blocking IO. This transport supports features available only on Linux, such as SO_REUSEPORT, and is faster than the NIO transport as well as fully non-blocking. |
| OIO | io.netty.channel.socket.oio | Uses the java.net package as a foundation (blocking streams). |
| Local | io.netty.channel.local | A local transport that can be used to communicate in the VM via pipes. |
| Embedded | io.netty.channel.embedded | An embedded transport, which allows using ChannelHandlers without a true network-based transport. This can be quite useful for testing your ChannelHandler implementations. |

We’ll discuss these transports in greater detail in the next sections.

4.3.1. NIO—non-blocking I/O

NIO provides a fully asynchronous implementation of all I/O operations. It makes use of the selector-based API that has been available since the NIO subsystem was introduced in JDK 1.4.

The basic concept behind the selector is to serve as a registry where you request to be notified when the state of a Channel changes. The possible state changes are

- A new Channel was accepted and is ready.

- A Channel connection was completed.

- A Channel has data that is ready for reading.

- A Channel is available for writing data.

After the application reacts to the change of state, the selector is reset and the process repeats, running on a thread that checks for changes and responds to them accordingly.

The constants shown in table 4.3 represent the bit patterns defined by class java.nio.channels.SelectionKey. These patterns are combined to specify the set of state changes about which the application is requesting notification.

Table 4.3. Selection operation bit-set

| Name | Description |
|---|---|
| OP_ACCEPT | Requests notification when a new connection is accepted, and a Channel is created. |
| OP_CONNECT | Requests notification when a connection is established. |
| OP_READ | Requests notification when data is ready to be read from the Channel. |
| OP_WRITE | Requests notification when it is possible to write more data to the Channel. This handles cases when the socket buffer is completely filled, which usually happens when data is transmitted more rapidly than the remote peer can handle. |
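
Because each constant occupies its own bit, an interest set is built and inspected with ordinary bitwise operators. The sketch below uses the real SelectionKey constants; the helper class InterestOps and its method names are assumptions for illustration.

```java
import java.nio.channels.SelectionKey;

// Hypothetical helpers for working with SelectionKey interest sets.
class InterestOps {
    // Adds an operation to the set with bitwise OR.
    static int add(int ops, int op) {
        return ops | op;
    }

    // Removes an operation by clearing its bit.
    static int remove(int ops, int op) {
        return ops & ~op;
    }

    // Checks whether the set contains the operation.
    static boolean contains(int ops, int op) {
        return (ops & op) != 0;
    }

    public static void main(String[] args) {
        int ops = add(SelectionKey.OP_READ, SelectionKey.OP_WRITE);
        System.out.println(contains(ops, SelectionKey.OP_WRITE));
        ops = remove(ops, SelectionKey.OP_WRITE); // stop asking for write readiness
        System.out.println(contains(ops, SelectionKey.OP_WRITE));
    }
}
```

A value built this way is what SelectionKey.interestOps(int) or the register() call on a selectable channel expects.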

These internal details of NIO are hidden by the user-level API common to all of Netty’s transport implementations. Figure 4.2 shows the process flow.

Figure 4.2. Selecting and processing state changes

Zero-copy is a feature currently available only with the NIO and Epoll transports. It allows you to quickly and efficiently move data from a file system to the network without copying from kernel space to user space, which can significantly improve performance in protocols such as FTP or HTTP. This feature is not supported by all OSes. Specifically, it is not usable with file systems that implement data encryption or compression; only the raw content of a file can be transferred. Conversely, transferring files that have already been encrypted isn’t a problem.
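
At the JDK level, this kind of transfer is exposed through FileChannel.transferTo(). The following is a minimal sketch under stated assumptions: the class ZeroCopyTransfer and its methods are hypothetical, the target is a blocking channel, and a second file stands in for the network socket so the example runs on its own.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.channels.FileChannel;
import java.nio.channels.WritableByteChannel;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

// Hypothetical sketch of a file-to-channel transfer with FileChannel.transferTo().
class ZeroCopyTransfer {
    // Sends the whole file to a blocking target channel; returns the bytes transferred.
    static long send(Path file, WritableByteChannel target) throws IOException {
        try (FileChannel in = FileChannel.open(file, StandardOpenOption.READ)) {
            long position = 0;
            long size = in.size();
            while (position < size) {
                // transferTo() may move fewer bytes than requested, so keep going.
                position += in.transferTo(position, size - position, target);
            }
            return position;
        }
    }

    // Demo helper (assumption): writes content to a temp file, transfers it, reads it back.
    static String roundTrip(String content) {
        try {
            Path source = Files.createTempFile("zero-copy-src", ".txt");
            Path sink = Files.createTempFile("zero-copy-dst", ".txt");
            try {
                Files.writeString(source, content);
                try (FileChannel out = FileChannel.open(sink, StandardOpenOption.WRITE)) {
                    send(source, out);
                }
                return Files.readString(sink);
            } finally {
                Files.deleteIfExists(source);
                Files.deleteIfExists(sink);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(roundTrip("hello zero-copy"));
    }
}
```

In a server the target would typically be a SocketChannel. Whether the copy through user space is actually avoided depends on the operating system and the file system.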

4.3.2. Epoll—native non-blocking transport for Linux

As we explained earlier, Netty’s NIO transport is based on the common abstraction for asynchronous/non-blocking networking provided by Java. Although this ensures that Netty’s non-blocking API will be usable on any platform, it also entails limitations, because the JDK has to make compromises in order to deliver the same capabilities on all systems.

The growing importance of Linux as a platform for high-performance networking has led to the development of a number of advanced features, including epoll, a highly scalable I/O event-notification feature. This API, available since version 2.5.44 (2002) of the Linux kernel, provides better performance than the older POSIX select and poll system calls[2] and is now the de facto standard for non-blocking networking on Linux. The Linux JDK NIO API uses these epoll calls.

[2] See epoll(4) in the Linux manual pages, http://linux.die.net/man/4/epoll.

Netty provides an NIO API for Linux that uses epoll in a way that’s more consistent with its own design and less costly in the way it uses interrupts.[3] Consider utilizing this version if your applications are intended for Linux; you’ll find that performance under heavy load is superior to that of the JDK’s NIO implementation.

[3] The JDK implementation is level-triggered, whereas Netty’s is edge-triggered. See the explanation on the epoll Wikipedia page for details, http://en.wikipedia.org/wiki/Epoll-Triggering_modes.

The semantics of this transport are identical to those shown in figure 4.2, and its use is straightforward. For an example, refer to listing 4.4. To substitute epoll for NIO in that listing, replace NioEventLoopGroup with EpollEventLoopGroup and NioServerSocketChannel.class with EpollServerSocketChannel.class.

4.3.3. OIO—old blocking I/O

Netty’s OIO transport implementation represents a compromise: it is accessed via the common transport API, but because it’s built on the blocking implementation of java.net, it’s not asynchronous. Yet it’s very well-suited to certain uses.

For example, you might need to port legacy code that uses libraries that make blocking calls (such as JDBC[4]) and it may not be practical to convert the logic to non-blocking. Instead, you could use Netty’s OIO transport in the short term, and port your code later to one of the pure asynchronous transports. Let’s see how it works.

[4] JDBC documentation is available at www.oracle.com/technetwork/java/javase/jdbc/index.html.

In the java.net API, you usually have one thread that accepts new connections arriving at the listening ServerSocket. A new socket is created for the interaction with the peer, and a new thread is allocated to handle the traffic. This is required because any I/O operation on a specific socket can block at any time. Handling multiple sockets with a single thread can easily lead to a blocking operation on one socket tying up all the others as well.

Given this, you may wonder how Netty can support OIO with the same API used for asynchronous transports. The answer is that Netty makes use of the SO_TIMEOUT Socket flag, which specifies the maximum number of milliseconds to wait for an I/O operation to complete. If the operation fails to complete within the specified interval, a SocketTimeoutException is thrown. Netty catches this exception and continues the processing loop. On the next EventLoop run, it will try again. This is the only way an asynchronous framework like Netty can support OIO.[5] Figure 4.3 illustrates this logic.

[5] One problem with this approach is the time required to fill in a stack trace when a SocketTimeoutException is thrown, which is costly in terms of performance.

Figure 4.3. OIO processing logic
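
The timeout-and-retry idea can be sketched with plain java.net sockets. This is not Netty's implementation; the class TimeoutPollingDemo, its fields, and the latch that delays the peer are assumptions chosen so that at least one timeout is observed.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;
import java.net.SocketTimeoutException;
import java.nio.charset.StandardCharsets;
import java.util.concurrent.CountDownLatch;

// Hypothetical sketch: a blocking read bounded by SO_TIMEOUT inside a retry loop.
class TimeoutPollingDemo {
    final String data;   // everything received from the peer
    final int timeouts;  // how many reads gave up before data arrived

    private TimeoutPollingDemo(String data, int timeouts) {
        this.data = data;
        this.timeouts = timeouts;
    }

    static TimeoutPollingDemo run(int timeoutMillis) {
        CountDownLatch firstTimeout = new CountDownLatch(1);
        try (ServerSocket server = new ServerSocket(0, 1, InetAddress.getLoopbackAddress())) {
            // The peer stays silent until the reader has timed out at least once.
            Thread peer = new Thread(() -> {
                try (Socket s = server.accept()) {
                    firstTimeout.await();
                    s.getOutputStream().write("Hi!".getBytes(StandardCharsets.UTF_8));
                } catch (IOException | InterruptedException e) {
                    e.printStackTrace();
                }
            });
            peer.setDaemon(true);
            peer.start();

            try (Socket socket = new Socket(server.getInetAddress(), server.getLocalPort())) {
                socket.setSoTimeout(timeoutMillis); // bound every blocking read
                InputStream in = socket.getInputStream();
                ByteArrayOutputStream received = new ByteArrayOutputStream();
                byte[] buffer = new byte[16];
                int timeouts = 0;
                while (true) {
                    try {
                        int n = in.read(buffer); // blocks for at most timeoutMillis
                        if (n < 0) {
                            break; // peer closed the connection
                        }
                        received.write(buffer, 0, n);
                    } catch (SocketTimeoutException e) {
                        // Nothing arrived in time; the socket is still usable.
                        // An event loop would run other tasks here before retrying.
                        timeouts++;
                        firstTimeout.countDown();
                    }
                }
                return new TimeoutPollingDemo(
                        new String(received.toByteArray(), StandardCharsets.UTF_8), timeouts);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        TimeoutPollingDemo result = run(50);
        System.out.println(result.data + " after " + result.timeouts + " timeout(s)");
    }
}
```

Each timeout costs an exception, which is the overhead the footnote above refers to.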

4.3.4. Local transport for communication within a JVM

Netty provides a local transport for asynchronous communication between clients and servers running in the same JVM. Again, this transport supports the API common to all Netty transport implementations.

In this transport, the SocketAddress associated with a server Channel isn’t bound to a physical network address; rather, it’s stored in a registry for as long as the server is running and is deregistered when the Channel is closed. Because the transport doesn’t accept real network traffic, it can’t interoperate with other transport implementations. Therefore, a client wishing to connect to a server (in the same JVM) that uses this transport must also use it. Apart from this limitation, its use is identical to that of other transports.

4.3.5. Embedded transport

Netty provides an additional transport that allows you to embed ChannelHandlers as helper classes inside other ChannelHandlers. In this fashion, you can extend the functionality of a ChannelHandler without modifying its internal code.

The key to this embedded transport is a concrete Channel implementation called, not surprisingly, EmbeddedChannel. In chapter 9 we’ll discuss in detail how to use this class to create unit test cases for ChannelHandler implementations.

4.4. Transport use cases

Now that we’ve looked at all the transports in detail, let’s consider the factors that go into choosing a transport for a specific use. As mentioned previously, not all transports support all core protocols, which may limit your choices. Table 4.4 shows the matrix of transports and protocols supported at the time of publication.

Table 4.4. Transport support by network protocol

| Transport | TCP | UDP | SCTP[*] | UDT |
|---|---|---|---|---|
| NIO | X | X | X | X |
| Epoll (Linux only) | X | X | - | - |
| OIO | X | X | X | X |

[*] See the explanation of the Stream Control Transmission Protocol (SCTP) in RFC 2960 at www.ietf.org/rfc/rfc2960.txt.

SCTP requires kernel support as well as installation of the user libraries.

For example, for Ubuntu you would use the following command:

# sudo apt-get install libsctp1

For Fedora, you’d use yum:

# sudo yum install kernel-modules-extra.x86_64 lksctp-tools.x86_64

Please refer to the documentation of your Linux distribution for more information about how to enable SCTP.

Although only SCTP has these specific requirements, other transports may have their own configuration options to consider. Furthermore, a server platform will probably need to be configured differently from a client, if only to support a higher number of concurrent connections.

Here are the use cases that you’re likely to encounter.

- Non-blocking code base —If you don’t have blocking calls in your code base—or you can limit them—it’s always a good idea to use NIO or epoll when on Linux. While NIO/epoll is intended to handle many concurrent connections, it also works quite well with a smaller number, especially given the way it shares threads among connections.

- Blocking code base —As we’ve already remarked, if your code base relies heavily on blocking I/O and your applications have a corresponding design, you’re likely to encounter problems with blocking operations if you try to convert directly to Netty’s NIO transport. Rather than rewriting your code to accomplish this, consider a phased migration: start with OIO and move to NIO (or epoll if you’re on Linux) once you have revised your code.

- Communications within the same JVM —Communications within the same JVM with no need to expose a service over the network present the perfect use case for local transport. This will eliminate all the overhead of real network operations while still employing your Netty code base. If the need arises to expose the service over the network, you’ll simply replace the transport with NIO or OIO.

- Testing your ChannelHandler implementations —If you want to write unit tests for your ChannelHandler implementations, consider using the embedded transport. This will make it easy to test your code without having to create many mock objects. Your classes will still conform to the common API event flow, guaranteeing that the ChannelHandler will work correctly with live transports. You’ll find more information about testing ChannelHandlers in chapter 9.

Table 4.5 summarizes the use cases we’ve examined.

Table 4.5. Optimal transport for an application

| Application needs | Recommended transport |
|---|---|
| Non-blocking code base or general starting point | NIO (or epoll on Linux) |
| Blocking code base | OIO |
| Communication within the same JVM | Local |
| Testing ChannelHandler implementations | Embedded |

4.5. Summary

In this chapter we studied transports, their implementation and use, and how Netty presents them to the developer.

We went through the transports that ship with Netty and explained their behavior. We also looked at their minimum requirements, because not all transports work with the same Java version and some may be usable only on specific OSes. Finally, we discussed how you can match transports to the requirements of specific use cases.

In the next chapter, we’ll focus on ByteBuf and ByteBufHolder, Netty’s data containers. We’ll show how to use them and how to get the best performance from them.