It is useful to run scripts in the background when they are totally automated. Then your terminal is not tied up and you can work on other things. In this chapter, I will describe some of the subtle points of background processing.

As an example, imagine a script that is supposedly automated but prompts for a password anyway or demands interactive attention for some other reason. This type of problem can be handled with Expect. In this chapter, I will discuss several techniques for getting information to and from background processes in a convenient manner.

I will also describe how to build a telnet daemon in Expect that can be run from inetd. While rewriting a telnet daemon is of little value for its own sake, with a few customizations such a daemon can be used to solve a wide variety of problems.

You can have Expect run in the background in several ways. You can explicitly start it asynchronously by appending & to the command line. You can start Expect and then press ^Z and enter bg. Or you can run Expect from cron or at. Some systems have a third interface called batch. Expect can also put itself into the background using the fork and disconnect commands.

The term background is not precisely defined. However, a background process usually means one that cannot read from the terminal. The word terminal is a historic term referring to the keyboard on which you are typing and the screen showing your output.

Expect normally reads from the terminal by using expect_user, "gets stdin", etc. Writing to the terminal is analogous.

If Expect has been started asynchronously (with an & appended) or suspended and continued in the background (via bg) from a job control shell, expect_user will not be able to read anything. Instead, what the user types will be read by the shell. Only one process can read from the terminal at a time. Inversely, the terminal is said to be controlling one process. The terminal is known as the controlling terminal for all processes that have been started from it.

If Expect is brought into the foreground (by fg), expect_user will then be able to read from the terminal.

It is also possible to run Expect in the background but without a controlling terminal. For example, cron does this. Without redirection, expect_user cannot be used at all when Expect lacks a controlling terminal.

Depending upon how you have put Expect into the background, a controlling terminal may or may not be present. The simplest way to test if a controlling terminal is present is to use the stty command. If stty succeeds, a controlling terminal exists.

if [catch stty] {
    # controlling terminal does not exist
} else {
    # controlling terminal exists
}

If a controlling terminal existed at one time, the global variable tty_spawn_id refers to it. How can a controlling terminal exist at one time and then no longer exist? Imagine starting Expect in the background (using "&"). At this point, Expect has a controlling terminal. If you log out, the controlling terminal is lost.

For the remainder of this chapter, the term background will also imply that the process lacks a controlling terminal.

When Expect has no controlling terminal, you must avoid using tty_spawn_id. And if the standard input, standard output, and standard error have not been redirected, expect_user, send_user, and send_error will not work. Lastly, a stty command without redirection will always fail.

If the process has been started from cron, there are yet more caveats. By default, cron does not use your environment, so you may need to force cron to use it or perhaps explicitly initialize parts of your environment. For example, the default path supplied by cron usually includes only /bin and /usr/bin. This is almost always insufficient.

Be prepared for all sorts of strange things to happen in the default cron environment. For example, many programs (e.g., rn, telnet) crash or hang if the TERM environment variable is not set. This is a problem under cron which does not define TERM. Thus, you must set it explicitly—to what type is usually irrelevant. It just has to be set to something!

Environment variables can be filled in by appropriate assignments to the global array env. Here is an assignment of TERM to a terminal type that should be understood at any site.

set env(TERM) vt100
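As a minimal sketch of initializing the environment explicitly, the following procedure fills in TERM and extends the path only where needed. The procedure name init_cron_env and the extra directories are hypothetical, chosen for illustration; substitute the directories your site actually uses.

```tcl
# Hypothetical helper: give a cron-started script a usable environment.
# The default directory list is an assumption; adjust it for your site.
proc init_cron_env {{extra_dirs {/usr/local/bin /usr/sbin}}} {
    global env

    # cron does not define TERM; any value will do
    if {![info exists env(TERM)]} {
        set env(TERM) vt100
    }

    # start from a minimal path if PATH is missing entirely
    if {![info exists env(PATH)]} {
        set env(PATH) /bin:/usr/bin
    }

    # append each extra directory unless it is already present
    set dirs [split $env(PATH) :]
    foreach d $extra_dirs {
        if {[lsearch -exact $dirs $d] < 0} {
            lappend dirs $d
        }
    }
    set env(PATH) [join $dirs :]
}

init_cron_env
```

Calling the procedure once near the top of the script, before any spawn or exec, is enough because child processes inherit the env array.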

Later, I will describe how to debug problems that arise due to the unusual environment in cron.

It is possible to start a script in the foreground but later have it move itself into the background and disconnect itself from the controlling terminal. This is useful when there is some point in the script after which no further interaction is required.

For example, suppose you want to automate a command that requires you to type in a password but it is inconvenient for you to enter the password when it is needed (e.g., you plan to be asleep later).

You could embed the password in the script or pass the password as an argument to it, but those are pretty risky ideas. A more secure way is to have the script interactively prompt for the password and remember it until it is needed later. The password will not be available in any public place—just in the memory of the Expect process.

In order to read the password in the first place, Expect needs a controlling terminal. After the password is read, Expect will still tie up the terminal—until you log out. To avoid the inconvenience of tying up the controlling terminal (or making you log out and back in again), Expect provides the fork and disconnect commands.

The fork command creates a new process. The new process is an exact copy of the current Expect process except for one difference. In the new (child) process, the fork command returns 0. In the original Expect process, fork returns the process id of the child process.

if [fork] {
    # code to be executed by parent
} else {
    # code to be executed by child
}

If you save the results of the fork command in, say, the variable child_pid, you will have two processes, identical except for the variable child_pid. In the parent process, child_pid will contain the process id of the child. In the child process, child_pid will contain 0. If the child wants its own process id, it can use the pid command. (If the child needs the parent’s process id, the child can call the pid command before the fork and save the result in a variable. The variable will be accessible after the fork.)

If your system is low on memory, swap space, etc., the fork command can fail and no child process will be created. You can use catch to prevent the failure from propagating. For example, the following fragment causes the fork to be retried every minute until it succeeds:

while {1} {
    if {[catch fork child_pid] == 0} break
    sleep 60
}

Forked processes exit via the exit command, just like the original process. Forked processes are allowed to write to the log files. However, if you do not disable debugging or logging in most of the processes, the results can be confusing.
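The fork retry loop shown earlier never gives up. If a bounded number of attempts is preferable, one possible sketch is a small general-purpose helper. The name retry and its arguments are hypothetical, and the pause uses plain Tcl's after command rather than Expect's sleep so that the helper does not depend on Expect.

```tcl
# Hypothetical helper: run cmd up to max_tries times, pausing delay
# seconds between failed attempts. Returns the command's result on
# success and raises an error if every attempt fails.
proc retry {cmd max_tries delay} {
    set result ""
    for {set i 1} {$i <= $max_tries} {incr i} {
        if {[catch {uplevel 1 $cmd} result] == 0} {
            return $result
        }
        if {$i < $max_tries} {
            after [expr {$delay * 1000}]   ;# after takes milliseconds
        }
    }
    error "gave up after $max_tries attempts: $result"
}
```

In an Expect script, "set child_pid [retry fork 10 60]" would attempt the fork up to ten times, a minute apart. Both parent and child return from the helper, just as they both return from fork itself.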

Certain side-effects of fork may be non-intuitive. For example, a parent that forks while a file is open will share the read-write pointer with the child. If the child moves it, the parent will be affected. This behavior is not governed by Expect, but is a consequence of UNIX and POSIX. Read your local documentation on fork for more information.

The disconnect command disconnects the Expect process from its controlling terminal. To prevent the terminal from ending up not talking to anything, you must fork before calling disconnect. After forking, the terminal is shared by both the original Expect process and the new child process. After disconnecting, the child process can go on its merry way in the background. Meanwhile, the original Expect process can exit, gracefully returning the terminal to the invoking shell.

This seemingly artificial and arcane dance is the UNIX way of doing things. Thankfully, it is much easier to write using Expect than to describe using English. Here is how it looks when rendered in an Expect script:

if {[fork] != 0} exit
disconnect
# remainder of script is executed in background

A few technical notes are in order. The disconnected process is given its own process group (if possible). Any unredirected standard I/O descriptors (e.g., standard input, standard output, standard error) are redirected to /dev/null. The variable tty_spawn_id is unset.

The ability to disconnect is extremely useful. If a script will need a password later, the script can prompt for the password immediately and then wait in the background. This avoids tying up the terminal, and also avoids storing the password in a script or passing it as an argument. Here is how this idea is implemented:

stty -echo
send_user "password? "
expect_user -re "(.*)\n"
send_user "\n"
set password $expect_out(1,string)

# got the password, now go into the background
if {[fork] != 0} exit
disconnect

# now in background, sleep (or wait for event, etc)
sleep 3600

# now do something requiring the password
spawn rlogin $host
expect "password:"
send "$password\r"

This technique works well with security systems such as MIT’s Kerberos. In order to run a process authenticated by Kerberos, all that is necessary is to spawn kinit to get a ticket, and similarly kdestroy when the ticket is no longer needed.

Scripts can reuse passwords multiple times. For example, the following script reads a password and then runs a program every hour that demands a password each time it is run. The script supplies the password to the program so that you only have to type it once.

stty -echo
send_user "password? "
expect_user -re "(.*)\n"
set password $expect_out(1,string)
send_user "\n"

while 1 {
    if {[fork] != 0} {
        sleep 3600
        continue
    }
    disconnect
    spawn priv_prog
    expect "password:"
    send "$password\r"
    . . .
    exit
}

This script does the forking and disconnecting quite differently than the previous one. Notice that the parent process sleeps in the foreground. That is to say, the parent remains connected to the controlling terminal, forking child processes as necessary. The child processes disconnect, but the parent continues running. This is the kind of processing that occurs with some mail programs; they fork a child process to dispatch outgoing mail while you remain in the foreground and continue to create new mail.

It might be necessary to ask the user for several passwords in advance before disconnecting. Just ask and be specific. It may be helpful to explain why the password is needed, or that it is needed for later.

Consider the following prompts:

send_user "password for $user1 on $host1: "
send_user "password for $user2 on $host2: "
send_user "password for root on hobbes: "
send_user "encryption key for $user3: "
send_user "sendmail wizard password: "

It is a good idea to force the user to enter the password twice. It may not be possible to authenticate it immediately (for example, the machine it is for may not be up at the moment), but at least the user can lower the probability of the script failing later due to a mistyped password.

stty -echo
send_user "root password: "
expect_user -re "(.*)\n"
send_user "\n"
set passwd $expect_out(1,string)

send_user "Again:"
expect_user -re "(.*)\n"
send_user "\n"

if {0 != [string compare $passwd $expect_out(1,string)]} {
    send_user "mistyped password?"
    exit
}

You can even offer to display the password just typed. This is not a security risk as long as the user can decline the offer or can display the password in privacy. Remember that the alternative of passing it as an argument allows anyone to see it if they run ps at the right moment.

Another advantage to using the disconnect command over the shell asynchronous process feature (&) is that Expect can save the terminal parameters prior to disconnection. When started in the foreground, Expect automatically saves the terminal parameters of the controlling terminal. These are used later by spawn when creating new processes and their controlling terminals.

On the other hand, when started asynchronously (using &), Expect does not have a chance to read the terminal’s parameters since the terminal is already disconnected by the time Expect receives control. In this case, the terminal is initialized purely by setting it with "stty sane". But this loses information such as the number of rows and columns. While rows and columns may not be particularly valuable to disconnected programs, some programs may want a value (any value, as long as it is nonzero).

Debugging disconnected processes can be challenging. Expect’s debugger does not work in a disconnected program because the debugger reads from the standard input which is closed in a disconnected process. For simple problems, it may suffice to direct the log or diagnostic messages to a file or another window on your screen. Then you can use send_log to tell you what the child is doing. Some systems support programs such as syslog or logger. These programs provide a more controllable way of having disconnected processes report what they are doing. See your system’s documentation for more information.
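In Expect itself, log_file names the file and send_log writes to it. As a plain-Tcl stand-in that illustrates the same one-way idea, the following sketch appends timestamped lines to a file that you can watch from another window (for instance with tail -f). The procedure name dlog and the default file name are hypothetical.

```tcl
# Hypothetical helper: append a timestamped diagnostic line to a file.
# The default file name is an assumption chosen for illustration.
proc dlog {msg {file /tmp/disconnected.log}} {
    set stamp [clock format [clock seconds] -format {%Y-%m-%d %H:%M:%S}]
    set fp [open $file a]
    puts $fp "$stamp [pid]: $msg"
    close $fp
}
```

Opening and closing the file on every call is slower than keeping it open, but each line reaches the disk immediately, and a forked child can call the procedure without sharing a file pointer with its parent.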

These are all one-way solutions providing no way to get information back to the process. I will describe a more general two-way solution in the next section.

Unfortunately, there is no general way to reconnect a controlling terminal to a disconnected process. But with a little work, it is possible to emulate this behavior. This is useful for all sorts of reasons. For example, a script may discover that it needs (yet more) passwords. Or a backup program may need to get someone’s attention to change a tape. Or you may want to interactively debug a script.

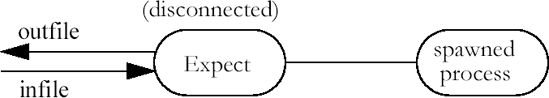

To restate: You have two processes (your shell and a disconnected Expect process) that need to communicate. However, they are unrelated. The simplest way to have unrelated processes communicate is through fifos. It is necessary to use a pair since communication must flow in both directions. Both processes thus have to open the fifos.

The disconnected script executes the following code to open the fifos. infifo contains the name of the fifo that will contain the user’s input (i.e., keystrokes). outfifo contains the name of the fifo that will be used to send data back to the user. This form of open is described further in Chapter 13 (p. 287).

spawn -open [open "|cat $catflags < $infifo" "r"]
set userin $spawn_id
spawn -open [open $outfifo w]
set userout $spawn_id

The two opens hang until the other ends are opened (by the user process). Once both fifos are opened, an interact command passes all input to the spawned process and the output is returned.

interact -u $spawn_id -input $userin -output $userout

The -u flag declares the spawned process as one side of the interact and the fifos become the other side, thanks to the -input and -output flags.

What has been accomplished so far looks like this:

The real user must now read and write from the same two fifos. This is accomplished with another Expect script. The script reads very similarly to that used by the disconnected process—however the fifos are reversed. The one read by the process is written by the user. Similarly, the one written by the process is read by the user.

spawn -open [open $infifo w]
set out $spawn_id
spawn -open [open "|cat $catflags < $outfifo" "r"]
set in $spawn_id

The code is reversed from that used by the disconnected process. However, the fifos are actually opened in the same order. Otherwise, both processes will block waiting to open a different fifo.

The user’s interact is also similar:

interact -output $out -input $in -output $user_spawn_id

The resulting processes look like this:

In this example, the Expect processes are transparent. The user is effectively joined to the spawned process.

More sophisticated uses are possible. For example, the user may want to communicate with a set of spawned processes. The disconnected Expect may serve as a process manager, negotiating access to the different processes. One of the queries that the disconnected Expect can support is disconnect. In this case, disconnect means breaking the fifo connection. The manager closes the fifos and proceeds to its next command. If the manager just wants to wait for the user, it immediately tries to reopen the fifos and waits until the user returns. (Complete code for such a manager is shown beginning on page 379.)

The manager may also wish to prompt for a password or even encrypt the fifo traffic to prevent other processes from connecting to it.

One of the complications that I have ignored is that the user and manager must know ahead of time (before reconnection) what the fifo names are. The manager cannot simply wait until it needs the information and then ask the user because doing that requires that the manager already be in communication with the user!

A simple solution is to store the fifo names in a file, say, in the home directory or /tmp. The manager can create the names when it starts and store the names in the file. The user-side Expect script can then retrieve the information from there. This is not sophisticated enough to allow the user to run multiple managers, but that seems an unlikely requirement. In any case, more sophisticated means can be used.
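A minimal sketch of that file-based handoff follows. The procedure names and the one-line file format (a Tcl list of the two names) are assumptions made for illustration, not part of any script in this chapter.

```tcl
# Hypothetical helpers for publishing fifo names through a small file.
# The one-line format, a Tcl list {infifo outfifo}, is an assumption.

# Manager side: record the two fifo names.
proc save_fifo_names {file infifo outfifo} {
    set fp [open $file w]
    puts $fp [list $infifo $outfifo]
    close $fp
}

# User side: return a two-element list {infifo outfifo}.
proc load_fifo_names {file} {
    set fp [open $file r]
    gets $fp line
    close $fp
    if {[catch {llength $line} n] || $n != 2} {
        error "malformed fifo name file: $file"
    }
    return $line
}
```

The manager would call save_fifo_names once at startup. The user-side script would call load_fifo_names on the same file and pick the two names out of the result with lindex before opening the fifos.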

In the previous chapter, I described kibitz, a script that allows two users to control a common process. kibitz has a -noproc flag, which skips starting a common process and instead connects together the inputs and outputs of both users. The second user will receive what the first user sends and vice versa.

By using -noproc, a disconnected script can use kibitz to communicate with a user. This may seem peculiar, but it is quite useful and works well. The disconnected script plays the part of one of the users and requires only a few lines of code to do the work.

Imagine that a disconnected script has reached a point where it needs to contact the user. You can envision requests such as "I need a password to continue" or "The 3rd backup tape is bad, replace it and tell me when I can go on".

To accomplish this, the script simply spawns a kibitz process to the appropriate user. kibitz does all the work of establishing the connection.

spawn kibitz -noproc $user

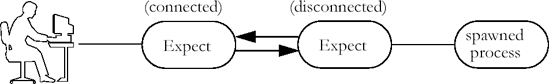

Once connected, the user can interact with the Expect process or can take direct control of one of the spawned processes. The following Expect fragment, run from cron, implements the latter possibility. The variable proc is initialized with the spawn id of the errant process while kibitz is the currently spawned process. The tilde is used to return control to the script.

spawn some-process; set proc $spawn_id
. . .
. . .
# script now has question or problem so it contacts user
spawn kibitz -noproc some-user
interact -u $proc -o ~ {
    close
    wait
    return
}

If proc refers to a shell, then you can use it to run any UNIX command. You can examine and set the environment variables interactively. You can run your process inside the debugger or while tracing system calls (i.e., under trace or truss). And this will all be under cron. This is an ideal way of debugging programs that work in the normal environment but fail under cron. The figure below shows the process relationship created by this bit of scripting.

Those half-dozen lines (above) are a complete, albeit simple, solution. A more professional touch might describe to the user what is going on. For example, after connecting, the script could do this:

send "The Frisbee ZQ30 being controlled by process $pid\r
refuses to reset and I don't know what else to do.\r
Should I let you interact (i), kill me (k), or\r
execute the dangerous gorp command (g)? "

The script describes the problem and offers the user a choice of possibilities. The script even offers to execute a command (gorp) it knows about but will not do by itself.

Responding to these is just like interacting with a user except that the user is in raw mode, so all lines should be terminated with a carriage-return linefeed. (Sending these with "send -h" can lend a rather amusing touch.)

Here is how the response might be handled:

expect {
    g {
        send "\r\nok, I'll do a gorp\r\n"
        gorp
        send "Hmm. A gorp didn't seem to fix anything.\r\nNow what (kgi)? "
        exp_continue
    }
    k {
        send "\r\nok, I'll kill myself...thanks\r\n"
        exit
    }
    i {
        send "\r\npress X to give up control, A to abort everything\r\n"
        interact -u $proc -o X return A exit
        send "\r\nok, thanks for helping...I'm on my own now\r\n"
        close
        wait
    }
}

Numerous strategies can be used for initially contacting the user. For example, the script can give up waiting for the user after some time and try someone else. Or it could try contacting multiple users at the same time much like the rlogin script in Chapter 11 (p. 249).

The following fragment tries to make contact with a single user. If the user is not logged in, kibitz returns immediately and the script waits for 10 minutes (the sleep 600 in the code). If the user is logged in but does not respond, the script waits an hour. It then retries the kibitz, just in case the user missed the earlier messages. After maxtries attempts, it gives up and calls giveup. This could be a procedure that takes some last resort action, such as sending mail about the problem and then exiting.

set maxtries 30
set count 0
set timeout 3600    ;# wait an hour for user to respond

while 1 {
    spawn kibitz -noproc $env(USER)
    set kib $spawn_id
    expect eof {
        sleep 600
        incr count
        if {$count > $maxtries} giveup
        continue
    } -re "Escape sequence is.*" {
        break
    } timeout {
        close; wait
    }
}

If the user does respond to the kibitz, both sides will see the message from kibitz describing the escape sequence. When the script sees this, it breaks out of the loop and begins communicating with the user.

In the previous section, I suggested using mail as a last ditch attempt to contact the user or perhaps just a way of letting someone know what happened. Most versions of cron automatically mail logs back to users by default, so it seems appropriate to cover mail here. Sending mail from a background process is not the most flexible way of communicating with a user, but it is clean, easy, and convenient.

People usually send mail interactively, but most mail programs do not need to be run with spawn. For example, /bin/mail can be run using exec with a string containing the message.

The lines of the message should be separated by newlines. The variable to names the recipient in this example:

exec /bin/mail $to << "this is a message\nof two lines\n"

There are no mandatory headers, but a few basic ones are customary. Using variables and physical newlines makes the next example easier to read:

exec /bin/mail $to << "From: $from
To: $to
Subject: $subject
$body"

To send a file instead of a string, use the < redirection.

You can also create a mail process and write to it:

set mailfile [open "|/bin/mail $to" w]
puts $mailfile "To: $to"
puts $mailfile "From: $from"
puts $mailfile "Subject: $subject"

This approach is useful when you are generating lines one at a time, such as in a loop:

while {....} {

... ;# compute line

puts $mailfile "computed another line: $line"

}

To send the message, just close the file.

close $mailfile
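The pieces above can be gathered into a pair of small procedures. This is only a sketch: the names format_mail and send_mail are hypothetical, and the blank line between headers and body follows the usual mail message convention rather than anything /bin/mail is guaranteed to require or interpret on your system.

```tcl
# Hypothetical helper: assemble a message with the customary headers.
# A blank line separates the headers from the body.
proc format_mail {from to subject body} {
    set lines [list "From: $from" "To: $to" "Subject: $subject" "" $body]
    return [join $lines "\n"]
}

# Hypothetical wrapper: hand the message to /bin/mail on standard input.
proc send_mail {from to subject body} {
    exec /bin/mail $to << [format_mail $from $to $subject $body]
}
```

Keeping the formatting separate from the delivery makes it possible to inspect or log the message text without actually sending anything.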

Earlier in the chapter, I suggested that a disconnected background process could be used as a process manager. This section presents a script which is a variation on that earlier idea. Called dislocate, this script lets you start a process, interact with it for a while, and then disconnect from it. Multiple disconnected processes live in the background waiting for you to reconnect. This could be useful in a number of situations. For example, a telnet connection to a remote site might be impossible to start during the day. Instead you could start it in the evening the day before, disconnect, and reconnect to it later the next morning.

A process is started by prefacing any UNIX command with dislocate, as in:

% dislocate rlogin io.jupiter.cosmos

Escape sequence is '^]'.

io.jupiter.cosmos:~1%

Once the process is running, the escape sequence is used to disconnect. To reconnect, dislocate is run with no arguments. Later, I will describe this further, including what happens when multiple processes are disconnected.

The script starts similarly to xkibitz in Chapter 16 (p. 357)—by defining the escape character and several other global variables. The file ~/.dislocate is used to keep track of all of the disconnected processes of a single user. "disc" provides an application-specific prefix to files that are created in /tmp.

#!/usr/local/bin/expect --

set escape \035             ;# control-right-bracket
set escape_printable "^]"

set pidfile "~/.dislocate"
set prefix "disc"
set timeout -1

while {$argc} {
    set flag [lindex $argv 0]
    switch -- $flag \
    "-escape" {
        set escape [lindex $argv 1]
        set escape_printable $escape
        set argv [lrange $argv 2 end]
        incr argc -2
    } default {
        break
    }
}

Next come several definitions and procedures for fifo manipulation. The mkfifo procedure creates a single fifo. Creating a fifo on a non-POSIX system is surprisingly non-portable: mknod can be found in so many places!

proc mkfifo {f} {
    if [file exists $f] {
        # fifo already exists?
        return
    }

    if 0==[catch {exec mkfifo $f}] return           ;# POSIX
    if 0==[catch {exec mknod $f p}] return
    if 0==[catch {exec /etc/mknod $f p}] return     ;# AIX,Cray
    if 0==[catch {exec /usr/etc/mknod $f p}] return ;# Sun

    puts "failed to make a fifo - where is mknod?"
    exit
}

Suffixes are declared to distinguish between input and output fifos. Here, they are descriptive from the parent’s point of view. In the child, they will be reset so that they appear backwards, allowing the following two routines to be used by both parent and child.

set infifosuffix ".i"
set outfifosuffix ".o"

proc infifoname {pid} {
    global prefix infifosuffix
    return "/tmp/$prefix$pid$infifosuffix"
}

proc outfifoname {pid} {
    global prefix outfifosuffix
    return "/tmp/$prefix$pid$outfifosuffix"
}

Embedded in each fifo name is the process id corresponding to its disconnected process. For example, process id 1005 communicates through the fifos named /tmp/disc1005.i and /tmp/disc1005.o. Since the fifo names can be derived given a process id, only the process id has to be known to initiate a connection.

While in memory, the relationship between the process ids and process names (and arguments) is maintained in the array proc. It is indexed by the process id. For example, the variable proc(1005) could hold the string "telnet io.jupiter.cosmos". A similar array maintains the date each process was started. These are initialized with the following code which also includes a utility procedure for removing process ids.

# allow element lookups on empty arrays
set date(dummy) dummy; unset date(dummy)
set proc(dummy) dummy; unset proc(dummy)

proc pid_remove {pid} {
    global date proc
    unset date($pid)
    unset proc($pid)
}

When a process is disconnected, the information on this and any other disconnected process is written to the .dislocate file mentioned earlier. Each line of the file describes a disconnected process. The format for a line is:

pid#date-started#argv

Writing the file is straightforward:

proc pidfile_write {} {
    global pidfile date proc

    set fp [open $pidfile w]
    foreach pid [array names date] {
        puts $fp "$pid#$date($pid)#$proc($pid)"
    }
    close $fp
}

The procedure to read the file is a little more complex because it verifies that the process and fifos still exist.

proc pidfile_read {} {
    global date proc pidfile

    if [catch {open $pidfile} fp] return

    set line 0
    while {[gets $fp buf] != -1} {
        # while pid and date can't have # in it, proc can
        if [regexp "(\[^#]*)#(\[^#]*)#(.*)" $buf junk pid xdate xproc] {
            set date($pid) $xdate
            set proc($pid) $xproc
        } else {
            puts "warning: error in $pidfile line $line"
        }
        incr line
    }
    close $fp

    # see if pids are still around
    foreach pid [array names date] {
        if [catch {exec /bin/kill -0 $pid}] {
            # pid no longer exists, removing
            pid_remove $pid
            continue
        }
        # pid still there, see if fifos are
        if {![file exists [infifoname $pid]] ||
            ![file exists [outfifoname $pid]]} {
            # $pid fifos no longer exists, removing
            pid_remove $pid
            continue
        }
    }
}
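The line format can be exercised on its own. The following sketch, with hypothetical procedure names, formats and parses a single pid#date-started#argv line. It writes the pattern in braces, which is one way to keep Tcl from treating the bracketed character class as a command substitution.

```tcl
# Hypothetical helpers mirroring the pid#date-started#argv line format.

proc pidline_format {pid date argv} {
    return "$pid#$date#$argv"
}

# Returns a three-element list {pid date argv}, or an empty list if the
# line does not match. Only the first two #s act as separators, so the
# argv field may itself contain # characters.
proc pidline_parse {line} {
    if {[regexp {^([^#]*)#([^#]*)#(.*)$} $line junk pid date argv]} {
        return [list $pid $date $argv]
    }
    return {}
}
```

Because the first two fields cannot contain a #, a command line such as "telnet host#2" survives a round trip through the file unchanged.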

The following two procedures create and destroy the fifo pairs, updating the .dislocate file appropriately.

proc fifo_pair_remove {pid} {
    global date proc prefix

    pidfile_read
    pid_remove $pid
    pidfile_write

    catch {exec rm -f [infifoname $pid] [outfifoname $pid]}
}

proc fifo_pair_create {pid argdate argv} {
    global prefix date proc

    pidfile_read
    set date($pid) $argdate
    set proc($pid) $argv
    pidfile_write

    mkfifo [infifoname $pid]
    mkfifo [outfifoname $pid]
}

Things get very interesting at this point. If the user supplied any arguments, they are in turn used as arguments to spawn. A child process is created to handle the spawned process.

A fifo pair is created before the fork. The child will begin listening to the fifos for a connection. The original process (still connected to the controlling terminal) will immediately connect to the fifos. This sounds baroque but is simpler to code because the child behaves precisely this way when the user reconnects later.

Notice that initially the fifo creation occurs before the fork. If the creation was done after, then either the child or the parent might have to spin, inefficiently retrying the fifo open. Since it is impossible to know the process id ahead of time, the script goes ahead and just uses 0. This will be set to the real process id when the child does its initial disconnect. There is no collision problem because the fifos are deleted immediately anyway.

if {$argc} {
    set datearg [exec date]
    fifo_pair_create 0 $datearg $argv

    set pid [fork]
    if $pid==0 {
        child $datearg $argv
    }
    # parent thinks of child as having pid 0 for
    # reason given earlier
    set pid 0
}

If dislocate has been started with no arguments, it will look for disconnected processes to connect to. If multiple processes exist, the user is prompted to choose one. The interaction looks like this:

% dislocate
connectable processes:
 #    pid  date started         process
 1   5888  Mon Feb 14 23:11:49  rlogin io.jupiter.cosmos
 2   5957  Tue Feb 15 01:23:13  telnet ganymede
 3   1975  Tue Jan 11 07:20:26  rogue
enter # or pid: 1
Escape sequence is ^]

Here is the code to prompt the user. The procedure to read a response from the user is shown later.

if ![info exists pid] {
    global fifos date proc

    # pid does not exist
    pidfile_read

    set count 0
    foreach pid [array names date] {
        incr count
    }

    if $count==0 {
        puts "no connectable processes"
        exit
    } elseif $count==1 {
        puts "one connectable process: $proc($pid)"
        puts "pid $pid, started $date($pid)"
        send_user "connect? \[y] "
        expect_user -re "(.*)\n" {
            set buf $expect_out(1,string)
        }
        if {$buf!="y" && $buf!=""} exit
    } else {
        puts "connectable processes:"
        set count 1
        puts " # pid date started process"
        foreach pid [array names date] {
            puts [format "%2d %6d %.19s %s" \
                $count $pid $date($pid) $proc($pid)]
            set index($count) $pid
            incr count
        }
        set pid [choose]
    }
}

Once the user has chosen a process, the fifos are opened, the user is told the escape sequence, the prompt is customized, and the interaction begins.

# opening [outfifoname $pid] for write
spawn -noecho -open [open [outfifoname $pid] w]
set out $spawn_id

# opening [infifoname $pid] for read
spawn -noecho -open [open [infifoname $pid] r]
set in $spawn_id

puts "Escape sequence is $escape_printable"

proc prompt1 {} {
    global argv0
    return "$argv0[history nextid]> "
}

interact {
    -reset $escape escape
    -output $out
    -input $in
}

The escape procedure drops the user into the interpreter where they can enter Tcl or Expect commands. They can also press ^Z to suspend dislocate or they can enter exit to disconnect from the process entirely.

proc escape {} {
    puts "\nto disconnect, enter: exit (or ^D)"
    puts "to suspend, enter appropriate job control chars"
    puts "to return to process, enter: return"
    interpreter
    puts "returning ..."
}

The choose procedure is a utility to interactively query the user to choose a process. It returns a process id. There is no specific handler to abort the dialogue because ^C will do it without harm.

proc choose {} {
    global index date

    while 1 {
        send_user "enter # or pid: "
        expect_user -re "(.*)\n" {
            set buf $expect_out(1,string)
        }
        if [info exists index($buf)] {
            set pid $index($buf)
        } elseif [info exists date($buf)] {
            set pid $buf
        } else {
            puts "no such # or pid"
            continue
        }
        return $pid
    }
}
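The lookup rule inside choose can be tried without a terminal. The following is a minimal sketch in plain Tcl, assuming a hypothetical helper named resolve_choice (it is not part of dislocate) that is given the names of the two arrays and returns the chosen pid, or an empty string when the answer is neither a menu number nor a pid. The sample data is modeled on the listing shown earlier.

```tcl
# resolve_choice is a hypothetical helper, not part of dislocate.
# indexName maps menu numbers to pids; dateName is keyed by pid.
proc resolve_choice {buf indexName dateName} {
    upvar 1 $indexName index $dateName date
    if {[info exists index($buf)]} {
        return $index($buf)     ;# answer was a menu number
    } elseif {[info exists date($buf)]} {
        return $buf             ;# answer was a pid
    }
    return ""                   ;# neither: the caller should ask again
}

# Sample data modeled on the listing shown earlier.
array set index {1 5888 2 5957 3 1975}
array set date {
    5888 "Mon Feb 14 23:11:49"
    5957 "Tue Feb 15 01:23:13"
    1975 "Tue Jan 11 07:20:26"
}

puts [resolve_choice 2 index date]      ;# a menu number
puts [resolve_choice 1975 index date]   ;# a pid entered directly
```

As in choose, menu numbers are checked first, so an answer that is both a valid menu number and a valid pid is treated as a menu number.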

All that is left to show is the child process. It immediately disconnects and spawns the actual process. The child then waits for the other end of each fifo to be opened. Once opened, the fifos are removed so that no one else can connect, and then the interaction is started. When the user-level process exits, the child process gets an eof, returns from the interact, and recreates the fifos. The child then goes back to waiting for the fifos to be opened again. If the actual process exits, the child exits. Nothing more need be done. The fifos do not exist nor does the entry in the .dislocate file. They were both removed prior to the interact by fifo_pair_remove.

proc child {argdate argv} {
    global infifosuffix outfifosuffix

    disconnect

    # these are backwards from the child's point of view
    # so that we can make everything else look "right"
    set infifosuffix ".o"
    set outfifosuffix ".i"
    set pid 0

    eval spawn $argv
    set proc_spawn_id $spawn_id

    while {1} {
        spawn -open [open [infifoname $pid] r]
        set in $spawn_id

        spawn -open [open [outfifoname $pid] w]
        set out $spawn_id

        fifo_pair_remove $pid

        interact {
            -u $proc_spawn_id eof exit
            -output $out
            -input $in
        }

        catch {close -i $in}
        catch {close -i $out}

        set pid [pid]
        fifo_pair_create $pid $argdate $argv
    }
}

When you log in to a host, you have to provide a username and (usually) a password. It is possible to make use of certain services without this identification. finger and ftp are two examples of services that can be obtained anonymously, without logging in. The programs that provide such services are called daemons. Traditionally, a daemon is a background process that is started or woken when it receives a request for service.

UNIX systems often use a program called inetd to start these daemons as necessary. For example, when you ftp to a host, inetd on that host sees the request for ftp service and starts a program called in.ftpd (“Internet ftp daemon”) to provide you with service. There are many other daemons such as in.telnetd (“Internet telnet daemon”) and in.fingerd (“Internet finger daemon”).

You can write Expect scripts that behave just like these daemons. Then users will be able to run your Expect scripts without logging in. As the large number of daemons on any host suggests, there are many uses for offering services in this manner. And Expect makes it particularly easy to build a daemon—to offer remote access to partially or completely automated interactive services. In the next section, I will discuss how to use Expect to enable Gopher and Mosaic to automate connections that would otherwise require human interaction.

Simple Expect scripts require no change to run as a daemon. For example, the following Expect script prints out the contents of /etc/motd whether run from the shell or as a daemon.

puts [exec cat /etc/motd]

Such a trivial script does not offer any benefit for being written in Expect. It might as well be written in /bin/sh. Where Expect is useful is when dealing with interactive programs. For example, your daemon might need to execute ftp, telnet, or some other interactive program to do its job.

The telnet program (the “client”) is normally used to speak to a telnet daemon (the “server”), but telnet can be used to communicate with many other daemons as well. In Chapter 6 (p. 129), I showed how to use telnet to connect to the SMTP port and communicate with the mail daemon.

If you telnet to an interactive program invoked by a daemon written as a shell script, you will notice some interesting properties. Input lines are buffered and echoed locally. Carriage-returns are received by the daemon as carriage-return linefeed sequences. This peculiar character handling has nothing to do with cooked or raw mode. In fact, there is no terminal interface between telnet and telnetd.

This translation is a by-product of telnet itself. telnet uses a special protocol to talk to its daemon. If the daemon does nothing special, telnet assumes these peculiar characteristics. Unfortunately, they are inappropriate for most interactive applications. For example, the following Expect script works perfectly when started in the foreground from a terminal. However, the script does not work correctly as a daemon because it does not provide support for the telnet protocol.

spawn /bin/sh
interact

Fortunately, a telnet daemon can modify the behavior of telnet. A telnet client and daemon communicate using an interactive asynchronous protocol. An implementation of a telnet daemon in Expect is short and efficient.

The implementation starts by defining several values important to the protocol. IAC means Interpret As Command. All commands begin with an IAC byte. Anything else is data, such as from a user keystroke. Most commands are three bytes and consist of the IAC byte followed by a command byte from the second group of definitions followed by an option byte from the third group. For example, the sequence $IAC$WILL$ECHO means that the sender is willing to echo characters.

The IAC byte always has the value "\xff". This and other important values are fixed and defined as follows:

set IAC   "\xff"
set DONT  "\xfe"
set DO    "\xfd"
set WONT  "\xfc"
set WILL  "\xfb"
set SB    "\xfa"    ;# subnegotiation begin
set SE    "\xf0"    ;# subnegotiation end
set TTYPE "\x18"
set SGA   "\x03"
set ECHO  "\x01"
set SEND  "\x01"

The response to WILL is either DO or DONT. If the receiver agrees, it responds with DO; otherwise it responds with DONT. Services supplied remotely can also be requested using DO, in which case the answer must be WILL or WONT. To avoid infinite protocol loops, only the first WILL or DO for a particular option is acknowledged. Other details of the protocol and many extensions to it can be found in more than a dozen RFCs beginning with RFC 854.[56]
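To make the three-byte layout concrete, here is a minimal sketch in plain Tcl that builds a command and decodes it back into names. The byte values repeat those given above; the cmdname table and the describe procedure are illustrative assumptions and are not part of the daemon.

```tcl
# Byte values from the telnet protocol (RFC 854 and RFC 857).
set IAC  "\xff"
set DONT "\xfe"
set DO   "\xfd"
set WONT "\xfc"
set WILL "\xfb"
set ECHO "\x01"

# Illustrative lookup table from command byte value to name.
array set cmdname {254 DONT 253 DO 252 WONT 251 WILL}

# describe is a hypothetical helper that decodes one three-byte command.
proc describe {cmd} {
    global cmdname
    if {[string length $cmd] != 3} {
        error "expected a three-byte command"
    }
    # scan %c stores the numeric value of each character
    scan $cmd %c%c%c iac verb option
    if {$iac != 255} {
        error "command does not begin with IAC"
    }
    if {![info exists cmdname($verb)]} {
        error "not a WILL, WONT, DO, or DONT command"
    }
    return "IAC $cmdname($verb) option $option"
}

puts [describe "$IAC$WILL$ECHO"]   ;# the server offers to echo
puts [describe "$IAC$DO$ECHO"]     ;# the client agrees
```

The sketch handles only the four negotiation commands. Subnegotiations (SB through SE) are longer than three bytes and would need separate treatment.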

The server begins by sending out three commands. The first command says that the server will echo characters. Next, line buffering is disabled. Finally, the server offers to handle the terminal type.

send "$IAC$WILL$ECHO"
send "$IAC$WILL$SGA"
send "$IAC$DO$TTYPE"

For reasons that will only become evident later, it is important both to the user and to the protocol to support nulls, so null removal is disabled at this point.

remove_nulls 0

Next, several patterns are declared to match commands returning from the client. While it is not required, I have declared each one as a regular expression so that the code is a little more consistent.

The first pattern matches $IAC$DO$ECHO and is a response to the $IAC$WILL$ECHO. Thus, it can be discarded. Because of the clever design of the protocol, the acknowledgment can be received before the command without harm. Each of the commands above has an acknowledgment pattern below that is handled similarly.

Any unknown requests are refused. For example, the $IAC$DO\(.) pattern matches any unexpected requests and refuses them. (Notice the parenthesis has a preceding backslash to avoid having "$DO" be interpreted as an array reference!)

expect_before {
    -re "^$IAC$DO$ECHO" {
        # treat as acknowledgment and ignore
        exp_continue
    }
    -re "^$IAC$DO$SGA" {
        # treat as acknowledgment and ignore
        exp_continue
    }
    -re "^$IAC$DO\(.)" {
        # refuse anything else
        send_user "$IAC$WONT$expect_out(1,string)"
        exp_continue
    }
    -re "^$IAC$WILL$TTYPE" {
        # respond to acknowledgment
        send_user "$IAC$SB$TTYPE$SEND$IAC$SE"
        exp_continue
    }
    -re "^$IAC$WILL$SGA" {
        # acknowledge request
        send_user "$IAC$DO$SGA"
        exp_continue
    }
    -re "^$IAC$WILL\(.)" {
        # refuse anything else
        send_user "$IAC$DONT$expect_out(1,string)"
        exp_continue
    }
    -re "^$IAC$SB$TTYPE" {
        expect_user null
        expect_user -re "(.*)$IAC$SE"
        set env(TERM) [string tolower $expect_out(1,string)]
        # now drop out of protocol handling loop
    }
    -re "^$IAC$WONT$TTYPE" {
        # treat as acknowledgment and ignore
        set env(TERM) vt100
        # now drop out of protocol handling loop
    }
}

The terminal type is handled specially. If the client agrees to provide its terminal type, the server must send another command that means “ok, go ahead and tell me”. The response has an embedded null, so it is broken up into separate expect commands. The terminal type is provided in uppercase, so it is translated with "string tolower". If the client refuses to provide a terminal type, the server arbitrarily uses vt100.
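The shape of the terminal type reply can be seen by parsing one in plain Tcl. This is only a sketch: parse_ttype is a hypothetical helper, and the sample reply is built by hand rather than captured from a real client. The embedded null is the IS byte that RFC 1091 places before the terminal name.

```tcl
set IAC   "\xff"
set SB    "\xfa"
set SE    "\xf0"
set TTYPE "\x18"
set IS    "\x00"    ;# the embedded null in the client's reply

# parse_ttype is a hypothetical helper. It returns the lowercased
# terminal name from a reply of the form IAC SB TTYPE IS name IAC SE,
# or vt100 if the reply does not have that form.
proc parse_ttype {reply} {
    global IAC SB SE TTYPE IS
    set head "$IAC$SB$TTYPE$IS"
    set tail "$IAC$SE"
    set hlen [string length $head]
    set end  [string first $tail $reply $hlen]
    if {[string range $reply 0 [expr {$hlen - 1}]] ne $head || $end < 0} {
        return vt100
    }
    return [string tolower [string range $reply $hlen [expr {$end - 1}]]]
}

puts [parse_ttype "$IAC$SB$TTYPE${IS}XTERM$IAC$SE"]
```

Unlike the daemon, which consumes the reply piece by piece as it arrives, this sketch assumes the whole reply is already in one string. The vt100 fallback mirrors the arbitrary default chosen in the daemon.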

All of the protocol and user activity occurs with the standard input and output. These two streams are automatically established if the daemon is configured with inetd.

Next, the timeout is disabled and a bare expect command is given. This allows the protocol interaction to take place according to the expect_before above. Most of the protocol actions end with exp_continue, allowing the expect to continue looking for more commands.

set timeout -1
expect          ;# do negotiations up to terminal type

When the client returns (or refuses to return) the terminal type, the expect command ends and the protocol negotiations are temporarily suspended. At this point, the terminal type is stored in the environment, and programs can be spawned which will inherit the terminal type automatically.

spawn interactive-program

Now imagine that the script performs a series of expect and send commands, perhaps to negotiate secret information or to navigate through a difficult or simply repetitive dialogue.

expect . . .
send . . .
expect . . .

Additional protocol commands may have continued to arrive while a process is being spawned, and the protocol commands are handled during these expect commands. Additional protocol commands can be exchanged at any time; however, in practice, none of the earlier ones will ever reoccur. Thus, they can be removed. The protocol negotiation typically takes place very quickly, so the patterns can usually be deleted after the first expect command that waits for real user data.

# remove protocol negotiation patterns
expect_before -i $user_spawn_id

One data transformation that cannot be disabled is that the telnet client appends either a null or linefeed character to every return character sent by the user. This can be handled in a number of ways. The following command does it within an interact command which is what the script might end with.

interact "\r" {
    send "\r"
    expect_user \n {} null
}

Additional patterns can be added to look for commands or real user data, but this suffices in the common case where the user ends up talking directly to the process on the remote host.
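The same translation can be written as a plain string transformation, which is convenient for checking the rule away from a live connection. This sketch assumes a hypothetical helper, strip_return_padding; it is not how the interact command handles the data.

```tcl
# strip_return_padding is a hypothetical helper. It removes the null
# or linefeed that a telnet client appends to each return character.
proc strip_return_padding {data} {
    return [string map [list "\r\x00" "\r" "\r\n" "\r"] $data]
}

set raw "ls\r\x00pwd\r\n"
set clean [strip_return_padding $raw]
puts [string length $raw]     ;# 9 characters as received
puts [string length $clean]   ;# 7 characters after stripping
```

The sketch only works on data that is already complete. If the return arrives at the end of one read and its padding at the start of the next, the pair is missed, which is why the interact version waits for the following character explicitly.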

Ultimately, the connection established by the Expect daemon looks like this:

Gopher is an information system that follows links of information that may lead from one machine to another.[57] The Gopher daemon does not support the ability to run interactive programs. For instance, suppose you offer public access to a service on your system, such as a library catalog. Since this is an interactive service, it is accessed by logging in, usually with a well-known account name such as "info".

Put another way, for someone to use the service, they must telnet to your host and enter "info" when prompted for an account. You could automate this with an Expect script. Not so, under Gopher. The Gopher daemon is incapable of running interactive processes itself. Instead, the daemon passes the telnet information to the Gopher client. Then it is up to the Gopher client to run telnet and log in.

This means that the client system has to do something with the account information. By default, the Gopher client displays the information on the screen and asks the user to type it back in. This is rather silly. After all, the client knows the information yet insists on having the user enter it manually. It is not a matter of permission. The client literally tells the user what to type! Here is an example (with XXX to protect the guilty) from an actual Gopher session.

Even Mosaic, with its slick user interface, does not do any better. It looks pretty, but the user is still stuck typing in the same information by hand.

Unfortunately, Mosaic and Gopher clients cannot perform interaction automation. One reason is that there is no standard way of doing it. For instance, there is no reason to expect that any arbitrary host has Expect. Many hosts (e.g., PCs running DOS) do not even support Expect, even though they can run telnet.

And even if all hosts could perform interaction automation, you might not want to give out the information needed to control the interaction. Your service might, for example, offer access to other private, internal, or pay-for-use data as well as public data. Automating the account name, as I showed in the example, is only the tip of the iceberg. There may be many other parts of the interaction that need to be automated. If you give out the account, password, and instructions to access the public data, users could potentially take this information, bypass the Gopher or Mosaic client and interact by hand, doing things you may not want.

The solution is to use the technique I described in the previous section and partially automate the service through an Expect daemon accessible from a telnet port. By doing the interaction in the daemon, there is no need to depend on or trust the client. A user could still telnet to the host, but would get only what the server allowed.

By controlling the interaction from the server rather than from the client, passwords and other sensitive pieces of information do not have a chance of being exposed. There is no way for the user to get information from the server if the server does not supply it. Another advantage is that the server can do much more sophisticated processing. The server can shape the conversation using all the power of Expect.

In practice, elements of the earlier script beginning on page 388 can be stored in another file that is sourced as needed. For instance, all of the commands starting with the telnet protocol definitions down to the bare expect command could be stored in a file (say, expectd.proto) and sourced by a number of similar servers.

source /usr/etc/expectd.proto

As an example, suppose you want to let people log into another host (such as a commercial service for which you pay real money) and run a single program there, but without their knowing which host it is or what your account and password are. Then, the server would spawn a telnet (or tip or whatever) to the other host.

log_user 0          ;# turn output off
spawn telnet secrethost
expect "Username:"
send "8234,34234\r"
expect "Password"
send "jellyroll\r"
expect "% "
send "ncic\r"
expect -re "ncic\r\n(.*)"
log_user 1          ;# turn output on

# send anything that appeared just
# after command was echoed
send_user "$expect_out(1,string)"

Finally, the script ends as before, removing the protocol patterns and dropping the user into an interact. As before, this could also be stored in an auxiliary file.

expect_before -i $user_spawn_id
interact "\r" {
    send "\r"
    expect_user \n {} null
}

In this example, the script is logging in to another host, and the password appears literally in the script. This can be very secure if users cannot log in to the host on which the script is stored. Put scripts like these on a separate machine to which users cannot log in or physically approach. As long as the user can only access the machine over the network through these Expect daemons, you can offer services securely.

Providing the kind of daemon service I have just described requires very few resources. It is not CPU intensive nor is it particularly demanding of I/O. Thus, it is a fine way to make use of older workstations that most sites have sitting around idle and gathering dust.

Daemons like the one I described in the previous section are typically started using inetd. Several freely available programs can assist network daemons by providing access control, identification, logging, and other enhancements. For instance, tcp_wrapper does this with a small wrapper around the individual daemons, whereas xinetd is a total replacement for inetd. tcp_wrapper is available from cert.sei.cmu.edu as pub/network_tools. xinetd is available from the comp.sources.unix archive.

Since anyone using xinetd and tcp_wrapper is likely to know how to configure a server already, I will provide an explanation based on inetd. The details may differ from one system to another.

Most versions of inetd read configuration data from the file /etc/inetd.conf. A single line describes each server. (The order of the lines is irrelevant.) For example, to add a service called secret, add the following line:

secret stream tcp nowait root /usr/etc/secretd secretd

The first field is the name of the port on which the service will be offered. The next three parameters must always be stream, tcp, and nowait for the kind of server I have shown here. The next parameter is the user id with which the server will be started. root is common but not necessary. The next argument is the file to execute. In this example, /usr/etc/secretd is the Expect script. This follows the tradition of storing servers in /usr/etc and ending their names with a "d". The script must be marked executable. If the script contains passwords, the script should be readable, writable, and executable only by the owner. The script should also start with the usual #! line. Alternatively, you can list Expect as the server and pass the name of the script as an argument. The next argument in the line is the name of the script (again). Inside the script, this name is merely assigned to argv0. The remaining arguments are passed uninterpreted as the arguments to the script.

Once inetd is configured, you must send it a HUP signal. This causes inetd to reread its configuration file.

The file /etc/services (or the NIS services map) contains the mapping of service names to port numbers. Add a line for your new service and its port number. Your number must end with /tcp. "#" starts a comment which runs up to the end of the line. For example:

secret 9753/tcp # Don's secret server

You must choose a port number that is not already in use. Looking in the /etc/services file is not good enough since ports can be used without being listed there. There is no guaranteed way of avoiding future conflicts, but you can at least find a free port at the time by running a command such as "netstat -na | grep tcp" or lsof. lsof (which stands for “list open files”) and similar programs are available free from many Internet source archives.
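A quick complement to netstat is to ask the system directly by trying to listen on the port. The following is a minimal sketch in plain Tcl; port_is_free and ignore_connection are hypothetical helpers, and 9753 is simply the example number used above. A successful test shows only that the port was free at that moment.

```tcl
# ignore_connection is a placeholder callback. It is never invoked
# here because the script does not enter the event loop.
proc ignore_connection {chan addr port} {
    close $chan
}

# port_is_free is a hypothetical helper. It returns 1 if a TCP server
# socket can be opened on the port right now, 0 otherwise.
proc port_is_free {port} {
    if {[catch {socket -server ignore_connection $port} sock]} {
        return 0    ;# could not listen: in use, or not permitted
    }
    close $sock
    return 1
}

if {[port_is_free 9753]} {
    puts "port 9753 is currently free"
} else {
    puts "port 9753 is in use"
}
```

A result of 0 does not always mean the port is taken. On most UNIX systems, ports below 1024 can be opened only by root, so an unprivileged test reports them as unavailable either way.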

Write a script that retrieves an FAQ. First the script should attempt to get the FAQ locally by using your local news reading software. If the FAQ has expired or is not present for some other reason, the script should anonymously ftp it from rtfm.mit.edu. (For instance, the first part of the Tcl FAQ lives in the file /pub/usenet/news.answers/tcl-faq/part1.) If the ftp fails because the site is too loaded or is down, the script should go into the background and retry later, or it should send email to [email protected] in the form:

send usenet/news.answers/tcl-faq/part1

Write a script called netpipe that acts as a network pipe. Shell scripts on two different machines should be able to invoke netpipe to communicate with each other as easily as if they were using named pipes (i.e., fifos) on a single machine.

Several of your colleagues are interested in listening to Internet Talk Radio and Internet Town Hall.[58] Unfortunately, each hour of listening pleasure is 30Mb. Rather than tying up your expensive communications link each time the same file is requested, make a cron entry that downloads the new programs once each day. (For information about the service, send mail to [email protected].)

The dialback scripts in Chapter 1 (p. 4) and Chapter 16 (p. 347) dial back immediately. Use at to delay the start by one minute so that there is time to log out and accept the incoming call on the original modem. Then rewrite the script using fork and disconnect. Is there any functional difference?

Take the telnet daemon presented in this chapter and enhance it so that it understands when the client changes the window size. This option is defined by RFC 1073 and is called Negotiate About Window Size (NAWS) and uses option byte \x1f. NAWS is similar to the terminal type subnegotiation except that 1) it can occur at any time, and 2) the client sends back strings of the form:

$IAC$SB$NAWS$COLHI$COLLO$ROWHI$ROWLO$IAC$SE

where COLHI is the high-order byte of the number of columns and COLLO is the low-order byte of the number of columns. ROWHI and ROWLO are defined similarly for the number of rows. (RFC 1073 sends the width before the height.)

You notice someone is logging in to your computer and using an account that should not be in use. Replace the real telnet daemon with an Expect script that transparently records any sessions for that particular user. Do the same for the rlogin daemon.

[55] cron, at, and batch all provide different twists on the same idea; however, Expect works equally well with each so I am going to say “cron” whenever I mean any of cron, at, or batch. You can read about these in your own man pages.

[56] TCP/IP Illustrated, Volume 1 by W. Richard Stevens (Addison-Wesley, 1994) contains a particularly lucid explanation of the protocol, while Internet System Handbook by Daniel Lynch and Marshall Rose (Addison-Wesley, 1993) describes some of the common mistakes. If you are trying for maximum interoperability, you must go beyond correctness and be prepared to handle other implementations' bugs!

[57] While the WWW (e.g., Mosaic) interface is different than Gopher, both have the same restrictions on handling interactive processes and both can take advantage of the approach I describe here.

[58] The book review from April 7, 1993 is particularly worthwhile!