
Using Apache Hadoop

Apache Hadoop is the de facto framework for processing large data sets. It is a distributed software application that runs across several (up to hundreds or thousands of) nodes in a cluster. Apache Hadoop comprises two main components: the Hadoop Distributed File System (HDFS) and MapReduce. HDFS stores large data sets, and MapReduce processes them. Hadoop scales linearly without degradation in performance and runs on commodity hardware rather than specialized hardware. Hadoop is designed to be fault tolerant and exploits data locality by moving the computation to the data rather than the data to the computation. The MapReduce framework has two versions: MapReduce1 (MR1) and MapReduce2 (MR2), also called YARN. MR1 is the default MapReduce framework in earlier versions of Hadoop (Hadoop 1.x), and YARN is the default in later versions (Hadoop 2.x).

- Setting the Environment

- Starting Hadoop

- Starting the Interactive Shell

- Creating Input Files for a MapReduce Word Count Application

- Running a MapReduce Word Count Application

- Stopping the Hadoop Docker Container

- Using a CDH Docker Image

Setting the Environment

The following software is used in this chapter.

- Docker (version 1.8)

- Apache Hadoop Docker Image

- Cloudera Hadoop (CDH) Docker Image

As in other chapters, we have used an Amazon EC2 instance based on Red Hat Enterprise Linux 7.1 (HVM), SSD Volume Type - ami-12663b7a for installing the software. Log in to the Amazon EC2 instance over SSH.

ssh -i "docker.pem" [email protected]

Install Docker as discussed in Chapter 1. Start the Docker service.

sudo service docker start

An OK message indicates that the Docker service has been started as shown in Figure 8-1.

Figure 8-1. Starting the Docker Service

Add a group called “hadoop” and a user called “hadoop”.

groupadd hadoop

useradd -g hadoop hadoop

Several Docker images are available for Apache Hadoop. We have used the sequenceiq/hadoop-docker Docker image available from the Docker Hub. Download the Docker image with the tag 2.7.0, or with the latest tag if it is different.

sudo docker pull sequenceiq/hadoop-docker:2.7.0

The docker pull command is shown in Figure 8-2.

Figure 8-2. Running the docker pull Command

The Docker image sequenceiq/hadoop-docker gets downloaded as shown in Figure 8-3.

Figure 8-3. Downloading Docker Image sequenceiq/hadoop-docker
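The pull step can be made repeatable so that the image is downloaded only when it is not already present locally. The following is a minimal sketch rather than part of the chapter's procedure; the function name pull_if_missing and the DOCKER_CMD override variable are assumptions introduced for illustration.

```shell
#!/bin/sh
# Sketch: pull an image only if "docker images -q" reports no local copy.
# DOCKER_CMD is a hypothetical override; it defaults to "sudo docker" as used in this chapter.

pull_if_missing() {
    # $1 = image reference, for example sequenceiq/hadoop-docker:2.7.0
    if [ -z "$(${DOCKER_CMD:-sudo docker} images -q "$1")" ]; then
        ${DOCKER_CMD:-sudo docker} pull "$1"
    fi
}
```

Calling pull_if_missing sequenceiq/hadoop-docker:2.7.0 a second time should return without contacting the registry.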

Starting Hadoop

Next, start the Hadoop components HDFS and MapReduce. The Docker image sequenceiq/hadoop-docker is configured by default to start the YARN or MR2 framework. Run the following docker run command, which starts a Docker container in detached mode, to start the HDFS (NameNode and DataNode) and YARN (ResourceManager and NodeManager).

sudo docker run -d --name hadoop sequenceiq/hadoop-docker:2.7.0

Subsequently, list the running Docker containers.

sudo docker ps

The output from the preceding two commands is shown in Figure 8-4 including the running Docker container for Apache Hadoop based on the sequenceiq/hadoop-docker image. The Docker container name is “hadoop” and container id is “27436aa7c645”.

Figure 8-4. Running Docker Container for Apache Hadoop
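When the container is started from a script instead of by hand, the check made with docker ps can be replaced by polling the container state. The following sketch assumes the container name hadoop used above; the function names and the DOCKER_CMD override are hypothetical. A running container only means the bootstrap process is alive, and the Hadoop daemons inside may need additional time before they accept requests.

```shell
#!/bin/sh
# Sketch: poll until a named container reports State.Running=true.
# DOCKER_CMD is a hypothetical override; it defaults to "sudo docker" as used in this chapter.

container_running() {
    # $1 = container name or id
    state=$(${DOCKER_CMD:-sudo docker} inspect -f '{{.State.Running}}' "$1" 2>/dev/null)
    [ "$state" = "true" ]
}

wait_for_container() {
    # $1 = container name, $2 = maximum attempts (default 10), one second apart
    name=$1
    attempts=${2:-10}
    i=0
    while [ "$i" -lt "$attempts" ]; do
        if container_running "$name"; then
            return 0
        fi
        i=$((i + 1))
        sleep 1
    done
    return 1
}
```

For example, wait_for_container hadoop 30 returns success as soon as the container is reported as running and fails after roughly 30 seconds otherwise.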

Starting the Interactive Shell

Start the interactive shell or terminal (tty) with the following command.

sudo docker exec -it hadoop bash

The interactive terminal prompt gets displayed as shown in Figure 8-5.


Figure 8-5. Starting Interactive Terminal

The interactive shell may also be started using the container id instead of the container name.

sudo docker exec -it 27436aa7c645 bash

If the -d parameter is omitted from the docker run command and the -it parameters (-i and -t supplied together) are supplied instead, as in the following command, the Docker container starts in foreground mode.

sudo docker run -it --name hadoop sequenceiq/hadoop-docker:2.7.0 /etc/bootstrap.sh -bash

The Hadoop components start, and a console is attached to the stdin, stdout, and stderr streams, as shown in Figure 8-6. A message is output to the console for each Hadoop component started. The -it parameters start an interactive terminal (tty).

Figure 8-6. Starting Docker Container in Foreground
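A single command can also be run in the detached container without opening an interactive shell first. The following sketch wraps docker exec for that purpose. It assumes that the HADOOP_PREFIX variable is set in the environment that docker exec provides, as it is in the interactive shell used in this chapter; the function name hadoop_exec and the DOCKER_CMD override are hypothetical.

```shell
#!/bin/sh
# Sketch: run one command inside the container from the $HADOOP_PREFIX directory.
# DOCKER_CMD is a hypothetical override; it defaults to "sudo docker" as used in this chapter.

hadoop_exec() {
    # $1 = container name or id; remaining arguments = command to run in the container
    name=$1
    shift
    # The single-quoted script is expanded by bash inside the container, not on the host.
    ${DOCKER_CMD:-sudo docker} exec "$name" bash -c 'cd "$HADOOP_PREFIX" && "$@"' _ "$@"
}
```

For example, hadoop_exec hadoop bin/hdfs dfs -ls / would list the HDFS root directory from the host prompt.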

Creating Input Files for a MapReduce Word Count Application

In this section we shall create input files for a MapReduce Word Count application, which is included in the examples packaged with the Hadoop distribution. To create the input files, change the directory (cd) to the $HADOOP_PREFIX directory.

bash-4.1# cd $HADOOP_PREFIX

The preceding command is to be run from the interactive terminal (tty) as shown in Figure 8-7.


Figure 8-7. Setting Current Directory to $HADOOP_PREFIX Directory

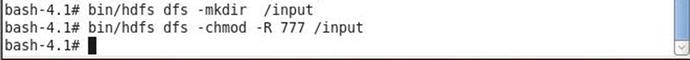

Create a directory called /input in the HDFS for the input files. Subsequently, open the directory permissions to all users (777).

bash-4.1# bin/hdfs dfs -mkdir /input

bash-4.1# bin/hdfs dfs -chmod -R 777 /input

The preceding commands are also run from the interactive terminal as shown in Figure 8-8.

Figure 8-8. Creating Input Directory

Add two text files (input1.txt and input2.txt) with some sample text to the /input directory. To create a text file input1.txt run the following vi editor command in the tty.

vi input1.txt

Add the following two lines of text in the input1.txt.

Hello World Application for Apache Hadoop

Hello World and Hello Apache Hadoop

Save the input1.txt file with the :wq command as shown in Figure 8-9.

Figure 8-9. The input1.txt File

Put the input1.txt file in the HDFS directory /input with the following command, also shown in Figure 8-10.

bin/hdfs dfs -put input1.txt /input

Figure 8-10. Putting the input1.txt in the HDFS

The input1.txt file gets added to the /input directory in the HDFS.

Similarly, open another new text file input2.txt with the following vi command.

vi input2.txt

Add the following two lines of text in the input2.txt file.

Hello World

Hello Apache Hadoop

Save the input2.txt file with the :wq command as shown in Figure 8-11.

Figure 8-11. The input2.txt File

Put the input2.txt file in the HDFS directory /input.

bin/hdfs dfs -put input2.txt /input

Subsequently, run the following command to list the files in the /input directory.

bin/hdfs dfs -ls /input

The two files added to the HDFS get listed as shown in Figure 8-12.

Figure 8-12. Listing the Input Files in the HDFS
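The two input files can also be created without the vi editor, which is convenient when the steps in this section are scripted. The following is a minimal sketch; the function name make_inputs is an assumption introduced for illustration, and the text written is the sample text used above.

```shell
#!/bin/sh
# Sketch: write the two sample input files without an editor.

make_inputs() {
    # $1 = directory to write into (defaults to the current directory)
    dir=${1:-.}
    printf '%s\n' \
        'Hello World Application for Apache Hadoop' \
        'Hello World and Hello Apache Hadoop' > "$dir/input1.txt"
    printf '%s\n' \
        'Hello World' \
        'Hello Apache Hadoop' > "$dir/input2.txt"
}

# Inside the container, from the $HADOOP_PREFIX directory, the files could then be uploaded:
#   make_inputs .
#   bin/hdfs dfs -put input1.txt input2.txt /input
#   bin/hdfs dfs -ls /input
```

The -put command accepts several local source files followed by one destination directory, so both files can be uploaded in a single call.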

Running a MapReduce Word Count Application

In this section we shall run a MapReduce application for word count; the application is packaged in the hadoop-mapreduce-examples-2.7.0.jar file and may be invoked with the argument “wordcount”. The wordcount application requires the input and output directories to be supplied. The input directory is the /input directory in the HDFS we created earlier, and the output directory is /output, which must not exist before running the hadoop command. Run the following hadoop command from the interactive shell.

bin/hadoop jar $HADOOP_PREFIX/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.0.jar wordcount /input /output

A MapReduce job gets started using the YARN framework as shown in Figure 8-13.

Figure 8-13. Starting MapReduce Application with YARN Framework

The YARN job completes as shown in Figure 8-14, and the output of the word count application is written to the /output directory in the HDFS.

Figure 8-14. Output from the MapReduce Application
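Because the output directory must not exist when the job is submitted, running the same command a second time fails until /output is removed. The following sketch removes a stale output directory before resubmitting. The function names and the HDFS_CMD and HADOOP_CMD override variables are hypothetical; the defaults assume that the current directory is $HADOOP_PREFIX, as in the preceding commands.

```shell
#!/bin/sh
# Sketch: remove a stale HDFS output directory, then submit the wordcount job.
# HDFS_CMD and HADOOP_CMD are hypothetical overrides for the binaries used in this chapter.

clean_output_dir() {
    # $1 = HDFS output directory, for example /output
    if ${HDFS_CMD:-bin/hdfs} dfs -test -d "$1"; then
        ${HDFS_CMD:-bin/hdfs} dfs -rm -r "$1"
    fi
}

run_wordcount() {
    # $1 = HDFS input directory, $2 = HDFS output directory
    clean_output_dir "$2" &&
    ${HADOOP_CMD:-bin/hadoop} jar \
        "$HADOOP_PREFIX/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.0.jar" \
        wordcount "$1" "$2"
}
```

For example, run_wordcount /input /output may be called repeatedly; each call discards the previous results, so the sketch is suitable only when the old output is no longer needed.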

The complete output from the hadoop command is as follows.

<mapreduce/hadoop-mapreduce-examples-2.7.0.jar wordcount /input /output

15/10/18 15:46:17 INFO client.RMProxy: Connecting to ResourceManager at /0.0.0.0:8032

15/10/18 15:46:19 INFO input.FileInputFormat: Total input paths to process : 2

15/10/18 15:46:19 INFO mapreduce.JobSubmitter: number of splits:2

15/10/18 15:46:20 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1445197241840_0001

15/10/18 15:46:21 INFO impl.YarnClientImpl: Submitted application application_1445197241840_0001

15/10/18 15:46:21 INFO mapreduce.Job: The url to track the job: http://fb25c4cabc55:8088/proxy/application_1445197241840_0001/

15/10/18 15:46:21 INFO mapreduce.Job: Running job: job_1445197241840_0001

15/10/18 15:46:40 INFO mapreduce.Job: Job job_1445197241840_0001 running in uber mode : false

15/10/18 15:46:40 INFO mapreduce.Job: map 0% reduce 0%

15/10/18 15:47:03 INFO mapreduce.Job: map 100% reduce 0%

15/10/18 15:47:17 INFO mapreduce.Job: map 100% reduce 100%

15/10/18 15:47:18 INFO mapreduce.Job: Job job_1445197241840_0001 completed successfully

15/10/18 15:47:18 INFO mapreduce.Job: Counters: 49

File System Counters

FILE: Number of bytes read=144

FILE: Number of bytes written=345668

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=324

HDFS: Number of bytes written=60

HDFS: Number of read operations=9

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=2

Launched reduce tasks=1

Data-local map tasks=2

Total time spent by all maps in occupied slots (ms)=41338

Total time spent by all reduces in occupied slots (ms)=11578

Total time spent by all map tasks (ms)=41338

Total time spent by all reduce tasks (ms)=11578

Total vcore-seconds taken by all map tasks=41338

Total vcore-seconds taken by all reduce tasks=11578

Total megabyte-seconds taken by all map tasks=42330112

Total megabyte-seconds taken by all reduce tasks=11855872

Map-Reduce Framework

Map input records=6

Map output records=17

Map output bytes=178

Map output materialized bytes=150

Input split bytes=212

Combine input records=17

Combine output records=11

Reduce input groups=7

Reduce shuffle bytes=150

Reduce input records=11

Reduce output records=7

Spilled Records=22

Shuffled Maps =2

Failed Shuffles=0

Merged Map outputs=2

GC time elapsed (ms)=834

CPU time spent (ms)=2760

Physical memory (bytes) snapshot=540696576

Virtual memory (bytes) snapshot=2084392960

Total committed heap usage (bytes)=372310016

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=112

File Output Format Counters

Bytes Written=60

bash-4.1#
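Some of the counters in the preceding output can be checked against the input files: Map output records=17 is the total number of words in the sample text, and Reduce output records=7 is the number of distinct words. If the job output has been saved to a file, a counter can be extracted with a short awk filter. The following is a sketch; the function name counter_value and the file name job.log in the usage note are assumptions.

```shell
#!/bin/sh
# Sketch: print the value of one counter from a saved MapReduce job log read on stdin.

counter_value() {
    # $1 = counter name exactly as printed in the log, for example "Map output records"
    awk -F= -v name="$1" '
        {
            key = $1
            gsub(/^[ \t]+|[ \t]+$/, "", key)   # trim indentation and trailing blanks
        }
        key == name { print $2; exit }
    '
}
```

For example, counter_value "Reduce output records" < job.log would print 7 for the job shown above.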

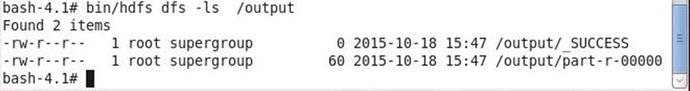

List the output files in the /output directory in HDFS with the following command.

bin/hdfs dfs -ls /output

Two files get listed: _SUCCESS, which indicates that the YARN job completed successfully, and part-r-00000, which is the output from the wordcount application as shown in Figure 8-15.

Figure 8-15. Files Output by the YARN Application

Display the output from the wordcount application using the following command.

bin/hdfs dfs -cat /output/part-r-00000

The word count for each distinct word in the input files input1.txt and input2.txt gets output as shown in Figure 8-16.

Figure 8-16. Listing the Word Count
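For input as small as the two sample files, the result can be cross-checked with standard shell tools outside Hadoop. The following sketch is not how MapReduce computes the counts; it is a local pipeline that assumes words are separated by whitespace and that the output is sorted in byte order. The function name local_wordcount is an assumption.

```shell
#!/bin/sh
# Sketch: count words in local files and print "word<TAB>count" lines in byte order.

local_wordcount() {
    # "$@" = one or more local text files
    cat "$@" |
        tr -s '[:space:]' '\n' |     # one word per line
        grep -v '^$' |               # drop empty lines
        LC_ALL=C sort |              # byte order: uppercase before lowercase
        uniq -c |                    # prefix each distinct word with its count
        awk '{ printf "%s\t%d\n", $2, $1 }'
}
```

Run against local copies of input1.txt and input2.txt containing the sample text, local_wordcount input1.txt input2.txt prints seven lines: Apache 3, Application 1, Hadoop 3, Hello 5, World 3, and 1, for 1. Seven lines agrees with the Reduce output records=7 counter, and the 60 bytes of that output agree with the Bytes Written=60 counter reported by the job.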

Stopping the Hadoop Docker Container

The Docker container running the Hadoop processes may be stopped with the docker stop command.

sudo docker stop hadoop

Subsequently, run the docker ps command; no container is listed as running, as shown in Figure 8-17.

Figure 8-17. Listing Running Docker Containers after stopping Apache Hadoop Container
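A stopped container is not deleted; it keeps its name and is still listed by docker ps -a, so a later docker run with --name hadoop fails with a name conflict until the container is removed. The following sketch stops and then removes a container in one step. The function name stop_and_remove and the DOCKER_CMD override are hypothetical.

```shell
#!/bin/sh
# Sketch: stop a container and remove it so that its name can be reused.
# DOCKER_CMD is a hypothetical override; it defaults to "sudo docker" as used in this chapter.

stop_and_remove() {
    # $1 = container name or id
    ${DOCKER_CMD:-sudo docker} stop "$1" &&
    ${DOCKER_CMD:-sudo docker} rm "$1"
}
```

For example, stop_and_remove hadoop frees the name hadoop for a new docker run command. Any data stored inside the removed container, including the HDFS files created in this chapter, is discarded with it.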

Using a CDH Docker Image

As mentioned before, several Docker images are available for Apache Hadoop. Another is the svds/cdh Docker image, which is based on the Apache Hadoop ecosystem as packaged by the Cloudera Hadoop distribution (CDH) and which we shall also use in subsequent chapters. The svds/cdh image includes not just Apache Hadoop but several frameworks in the Apache Hadoop ecosystem, some of which are discussed in later chapters. Download the svds/cdh image with the following command.

sudo docker pull svds/cdh

Start a Docker container running the CDH frameworks.

sudo docker run -d --name cdh svds/cdh

Start an interactive terminal to run commands for the CDH frameworks.

sudo docker exec -it cdh bash

In the tty, the Hadoop framework applications may be run without further configuration. For example, run the HDFS commands with “hdfs” on the command line. Running hdfs without arguments lists the command usage.

hdfs

The HDFS commands usage gets output as shown in Figure 8-18.

Figure 8-18. hdfs Command Usage

The configuration files are available through the /etc/hadoop/conf symlink as shown in Figure 8-19.

Figure 8-19. Listing the Symlink for the Configuration Directory

The configuration files in the /etc/alternatives/hadoop-conf directory, to which the conf symlink points, are listed in Figure 8-20.

Figure 8-20. Listing the Configuration Files
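The symlink can also be resolved and its target listed from a script. The following sketch assumes a readlink that supports the -f option (as GNU coreutils does); the function names are hypothetical. Because readlink -f follows every link in the chain, the directory it prints may lie beyond /etc/alternatives/hadoop-conf if that path is itself a symlink.

```shell
#!/bin/sh
# Sketch: resolve the Hadoop configuration symlink and list the files behind it.

resolve_conf_dir() {
    # $1 = path to the configuration symlink (defaults to /etc/hadoop/conf)
    readlink -f "${1:-/etc/hadoop/conf}"
}

list_conf_files() {
    # $1 = path to the configuration symlink (defaults to /etc/hadoop/conf)
    ls -1 "$(resolve_conf_dir "$1")"
}
```

In the cdh container, calling list_conf_files without arguments would list the configuration files reached through /etc/hadoop/conf.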

The cdh container may be stopped with the docker stop command.

sudo docker stop cdh

Summary

In this chapter we ran Apache Hadoop components in a Docker container. We created some files and put the files in the HDFS. Subsequently, we ran a MapReduce wordcount application packaged with the examples in the Hadoop distribution. We also introduced a Cloudera Hadoop distribution (CDH) based Docker image, which we shall also use in some of the subsequent chapters based on frameworks in the Apache Hadoop ecosystem.