IBM PowerVM overview

This chapter introduces PowerVM and provides a high-level overview of the IBM Power servers and PowerVM capabilities.

This chapter covers the following topics:

1.1 PowerVM introduction

PowerVM is an enterprise-class virtualization solution that provides secure, flexible, and scalable virtualization for Power servers. PowerVM enables logical partitions (LPARs) and server consolidation. Clients can run AIX, IBM i, and Linux operating systems on Power servers with world-class reliability, high availability (HA), and serviceability capabilities, together with the leading performance of the Power platform.

This solution provides workload consolidation that helps clients control costs and improve overall performance, availability, flexibility, and energy efficiency. Power servers, combined with PowerVM technology, help consolidate and simplify your IT environment. Key capabilities include the following ones:

•Improve server utilization and I/O resource-sharing to reduce total cost of ownership and better use IT assets.

•Improve business responsiveness and operational speed by dynamically reallocating resources to applications as needed to better match changing business needs or handle unexpected changes in demand.

•Simplify IT infrastructure management by making workloads independent of hardware resources so that you can use business-driven policies to deliver resources based on time, cost, and service-level requirements.

PowerVM is a combination of hardware enablement and value-added software. PowerVM consists of these major components:

•IBM PowerVM hypervisor (PHYP)

•Virtual I/O Server (VIOS)

•Service processor, enterprise Baseboard Management Controller (eBMC), or Flexible Service Processor (FSP)

•Hardware Management Console (HMC)

•PowerVM NovaLink

•IBM Power Virtualization Center (PowerVC)

Figure 1-1 on page 3 provides an overview of a Power server with multiple virtual machines (VMs) that securely access resources through the PHYP.

Figure 1-1 PowerVM overview

PowerVM enables virtualization of the hardware, including processor, memory, storage I/O, and network I/O resources. It also enables platform-level capabilities such as Live Partition Mobility (LPM) and Simplified Remote Restart (SRR).

Processor virtualization

The Power server family gives you the freedom to use either the scale-up or the scale-out processing model to run the broadest selection of enterprise applications without the costs and complexity that are often associated with managing multiple physical servers. PowerVM can help eliminate underutilized servers because it is designed to pool resources and optimize their usage across multiple application environments and operating systems. Through advanced VM capabilities, a single VM can act as a separate AIX, IBM i, or Linux operating environment that uses dedicated or shared system resources. With shared resources, PowerVM can automatically adjust pooled processor or storage resources across multiple operating systems, borrowing capacity from idle VMs to handle high resource demands from other workloads.

With PowerVM on Power servers, you have the power and flexibility to address multiple system requirements in a single machine. IBM Micro-Partitioning® supports multiple VMs per processor core. Depending on the Power server model, you can run up to 1000 VMs on a single server, each with its own processor, memory, and I/O resources. Processor resources can be assigned at a granularity of 0.01 of a core. Consolidating systems with PowerVM can help cut operational costs, improve availability, ease management, improve service levels, and speed up application deployment.
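The 0.01-core granularity can be illustrated with a small validation sketch. It is not an IBM tool: the function name, the VM names, and the rule that total entitlement must fit in the pool's physical cores are assumptions made for illustration, and real PowerVM additionally enforces a minimum entitlement per virtual processor, which is not modeled here. `Decimal` is used so that values such as 0.35 are checked exactly rather than as binary floats.

```python
from decimal import Decimal

# Entitlement step stated in the text: 0.01 of a processor core.
GRANULARITY = Decimal("0.01")


def free_pool_capacity(pool_cores: int, entitlements: dict) -> Decimal:
    """Validate per-VM processor entitlements against a shared pool.

    Each entitlement (given as a string such as "0.35") must be a positive
    multiple of 0.01 core, and the sum must not exceed the physical cores
    in the pool (an assumption of this sketch). Returns the capacity that
    is still unassigned.
    """
    total = Decimal("0")
    for vm, value in entitlements.items():
        ent = Decimal(value)
        if ent <= 0 or ent % GRANULARITY != 0:
            raise ValueError(f"{vm}: {ent} is not a positive multiple of {GRANULARITY}")
        total += ent
    if total > pool_cores:
        raise ValueError(f"total entitlement {total} exceeds {pool_cores} cores")
    return Decimal(pool_cores) - total


# Three hypothetical VMs sharing four physical cores.
print(free_pool_capacity(4, {"vm1": "0.35", "vm2": "1.15", "vm3": "2.00"}))
```

A request such as 0.005 core is rejected because it is finer than the stated granularity.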

Multiple shared processor pools (MSPP) allow for the automatic nondisruptive balancing of processing power between VMs that are assigned to shared pools, resulting in increased throughput. MSPP also can cap the processor core resources that are used by a group of VMs to potentially reduce processor-based software licensing costs.
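To show what capping a group of VMs means, here is a deliberately simplified model of a capped shared processor pool. It is not the PHYP scheduling algorithm: the proportional scale-down rule and all names are assumptions. The real hypervisor also takes entitlements and uncapped weights into account.

```python
def allocate_capped_pool(max_pool_cores: float, demand: dict) -> dict:
    """Simplified model of a capped shared processor pool.

    If the VMs in the pool together ask for more than the pool maximum,
    every VM's share is scaled down proportionally so that the group
    never consumes more than max_pool_cores. Entitlements and uncapped
    weights, which the real hypervisor uses, are omitted.
    """
    total = sum(demand.values())
    if total <= max_pool_cores:
        return dict(demand)  # the cap is not reached: every demand is met
    scale = max_pool_cores / total
    return {vm: d * scale for vm, d in demand.items()}


# Two hypothetical database VMs in a pool that is capped at 4 cores.
shares = allocate_capped_pool(4.0, {"db1": 3.0, "db2": 3.0})
print(shares, sum(shares.values()))
```

Because the group as a whole never exceeds the cap, the cap bounds the cores that the group can use. This is the property that can make a pool useful for processor-based licensing, subject to each software vendor's terms.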

Shared Dedicated Capacity allows for the “donation” of spare CPU cycles from dedicated processor VMs to a shared processor pool (SPP). Because a dedicated VM maintains absolute priority for CPU cycles, enabling this feature can increase system utilization without compromising the computing power for critical workloads.
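The donation rule can be sketched as simple arithmetic. The model below is hypothetical and uses instantaneous demand figures. It captures the property that the text emphasizes: a dedicated VM's own demand is always served first, so only its idle capacity flows to the SPP.

```python
def donated_capacity(dedicated_cores: int, dedicated_demand: float) -> float:
    """Idle capacity that a dedicated-processor VM could donate to the SPP.

    The dedicated VM keeps absolute priority, so only the cycles it is not
    using right now are donated; its own demand is always served first.
    """
    used = min(dedicated_demand, dedicated_cores)
    return dedicated_cores - used


def effective_pool_capacity(pool_cores: float, dedicated_vms: list) -> float:
    """Physical shared-pool cores plus donations from (cores, demand) pairs."""
    return pool_cores + sum(donated_capacity(c, d) for c, d in dedicated_vms)


# A hypothetical 8-core shared pool and two dedicated VMs (cores, demand).
print(effective_pool_capacity(8, [(4, 1.5), (2, 2.0)]))
```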

Because its core technology is built into the system firmware, PowerVM offers a highly secure virtualization platform that received the Common Criteria Evaluation and Validation Scheme (CCEVS) EAL4+ certification for its security capabilities.

Memory virtualization

The PHYP automatically virtualizes the memory by dividing it into standard logical memory blocks (LMBs) and manages memory assignments to partitions by using hardware page tables (HPTs). This technique enables translations from effective addresses to physical real addresses and running multiple operating systems simultaneously in their own secured logical address space. The PHYP uses some of the activated memory in a Power server to manage memory that is assigned to individual partitions, manage I/O requests, and support virtualization requests.
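Because memory is assigned to partitions in whole logical memory blocks, a memory request maps to an integer number of LMBs. The sketch below rounds a request up to the next LMB boundary; rounding up rather than rejecting the request is an assumption of this sketch, and the 256 MB default LMB size is only an example value. The memory that the PHYP itself uses is not modeled.

```python
def lmb_allocation(request_mb: int, lmb_size_mb: int = 256) -> tuple:
    """Return (LMB count, assigned MB) for a memory request.

    Memory is handed to partitions in whole logical memory blocks, so this
    sketch rounds the request up to the next LMB boundary. The 256 MB
    default is an example; the LMB size is a system setting.
    """
    if request_mb <= 0 or lmb_size_mb <= 0:
        raise ValueError("sizes must be positive")
    count = -(-request_mb // lmb_size_mb)  # ceiling division on integers
    return count, count * lmb_size_mb


print(lmb_allocation(1000))       # hypothetical 1000 MB request
print(lmb_allocation(4096, 128))  # hypothetical 4 GB request with 128 MB LMBs
```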

PowerVM also features IBM Active Memory Expansion (AME), a technology that allows the effective maximum memory capacity to be much larger than the true physical memory for AIX partitions. AME uses memory compression technology to transparently compress in-memory data, which allows more data to be placed into memory and expands the memory capacity of configured systems. The in-memory data compression is managed by the operating system, and this compression is transparent to applications and users. AME is configurable on a per-LPAR basis, so it can be selectively enabled for one or more LPARs on a system.
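AME sizing is commonly expressed through a per-LPAR expansion factor: the memory size that the partition sees is its true memory multiplied by the factor, and compression must recover the difference. The sketch below does that arithmetic. The 1.0 to 10.0 validation bounds are assumed limits for this illustration, and the function name is hypothetical.

```python
from decimal import Decimal


def ame_sizing(true_memory_gb, expansion_factor):
    """Return (effective memory, extra capacity from compression) in GB.

    The partition's memory size appears as true memory multiplied by the
    expansion factor; the difference has to come from compressing
    in-memory data. The 1.0 to 10.0 bounds are assumed limits used only
    for validation in this sketch.
    """
    true_gb = Decimal(str(true_memory_gb))
    factor = Decimal(str(expansion_factor))
    if not Decimal("1.0") <= factor <= Decimal("10.0"):
        raise ValueError(f"expansion factor {factor} out of range")
    effective = true_gb * factor
    return effective, effective - true_gb


# A hypothetical AIX LPAR with 8 GB of true memory and a factor of 1.5.
print(ame_sizing(8, 1.5))
```

With a factor of 1.5, the operating system must fit 12 GB of data into 8 GB of true memory. Whether that is achievable depends on how compressible the workload's data is.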

I/O virtualization

The VIOS is a special-purpose VM that can be used to virtualize I/O resources for AIX, IBM i, and Linux VMs. VIOS owns the resources that are shared by VMs. A physical adapter that is assigned to the VIOS can be shared by many VMs, which reduces the cost by eliminating the need for dedicated I/O adapters. PowerVM provides virtual SCSI (vSCSI) and N_Port ID Virtualization (NPIV) technologies to enable direct or indirect access to storage area networks (SANs) from multiple VMs. VIOS also hosts the Shared Ethernet Adapter (SEA), a component that bridges a physical Ethernet adapter and one or more Virtual Ethernet Adapters (VEAs).

PowerVM supports single-root I/O virtualization (SR-IOV) technology, which allows a single I/O adapter to be shared concurrently among multiple LPARs. This capability provides hardware-level speeds with no additional CPU usage because the virtualization is performed by the adapter itself at the hardware level.
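When one physical SR-IOV port is shared by several LPARs, each LPAR receives a logical port. The sketch below assumes that each logical port is configured with a capacity, meaning a minimum share expressed as a percentage of its physical port, and that the capacities on one physical port cannot add up to more than 100%. The function name and values are hypothetical.

```python
def add_logical_port(assigned_pct: list, capacity_pct: int) -> list:
    """Add an SR-IOV logical port to a physical port (sketch).

    Assumes each logical port is given a capacity as a percentage of its
    physical port and that the total on one physical port cannot exceed
    100%. Returns the new list of assigned capacities.
    """
    if not 0 < capacity_pct <= 100:
        raise ValueError("capacity must be between 1 and 100 percent")
    if sum(assigned_pct) + capacity_pct > 100:
        raise ValueError("physical port would be oversubscribed")
    return assigned_pct + [capacity_pct]


ports = add_logical_port([], 20)     # logical port for hypothetical LPAR A
ports = add_logical_port(ports, 30)  # logical port for hypothetical LPAR B
print(ports, 100 - sum(ports))       # remaining share of the physical port
```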

The SR-IOV implementation on Power servers has an extra feature that is called virtual Network Interface Controllers (vNICs). A vNIC is backed by an SR-IOV logical port (LP) on the VIOS, which supports LPM of VMs that use SR-IOV.

PowerVM also offers a capability that is called Hybrid Network Virtualization (HNV). HNV allows a partition to leverage the efficiency and performance benefits of SR-IOV LPs and participate in mobility operations. HNV leverages existing technologies such as AIX Network Interface Backup (NIB), IBM i Virtual IP Address (VIPA), and Linux active-backup bonding as its foundation, and introduces new automation for configuration and mobility operations.
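The active-backup idea that HNV builds on can be sketched as a toy state model. The sequence below is a simplified reading of the text: an SR-IOV logical port is the primary, a migratable virtual adapter is the backup, and during a mobility operation traffic falls back to the backup until an SR-IOV port on the destination takes over again. All interface names are hypothetical, and the real configuration steps are automated by HNV.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ActiveBackupBond:
    """Toy model of an active-backup pair: an SR-IOV logical port as the
    primary and a migratable virtual adapter as the backup."""
    primary: Optional[str]
    backup: str

    def active(self) -> str:
        # Traffic uses the primary whenever it is present.
        return self.primary if self.primary is not None else self.backup


def simulate_mobility(bond: ActiveBackupBond, destination_port: str) -> list:
    """Return the active interface at each stage of a mobility operation."""
    trace = [bond.active()]          # before: the SR-IOV port carries traffic
    bond.primary = None              # SR-IOV port removed on the source
    trace.append(bond.active())      # during: the backup carries traffic
    bond.primary = destination_port  # new SR-IOV port added on the destination
    trace.append(bond.active())      # after: SR-IOV performance restored
    return trace


print(simulate_mobility(ActiveBackupBond("sriov-src", "veth0"), "sriov-dst"))
```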

When we talk about PowerVM, we are referring to the components, features, and technologies that are listed in Table 1-1 and Table 1-2.

Table 1-1 Major components of PowerVM

| Components | Function provided by |
|---|---|
| PHYP | Hardware platform |
| VIOS | Hypervisor or VIOS |
| HMC | HMC |
| PowerVM NovaLink | Hypervisor or NovaLink |
| Service Processor | eBMC or FSP |
| Virtualization Management Interface (VMI) | Hypervisor |

Table 1-2 PowerVM features and technologies

| Category | Features and technologies | Function provided by |
|---|---|---|
| Server | LPAR | Hypervisor |
| Server | Dynamic logical partitioning (DLPAR) | Hypervisor |
| Server | Capacity on Demand (CoD) | Hypervisor or HMC |
| Server | LPM | Hypervisor, VIOS, or HMC |
| Server | SRR | Hypervisor or HMC |
| Server | AIX Workload Partitions (WPARs) | AIX |
| Server | IBM System Planning Tool (SPT) | SPT |
| Processor Virtualization | Micro-Partitioning | Hypervisor |
| Processor Virtualization | Processor compatibility mode | Hypervisor |
| Processor Virtualization | Simultaneous multithreading (SMT) | Hardware |
| Processor Virtualization | SPPs | Hypervisor |
| Memory Virtualization | Active Memory Mirroring (AMM) | Hardware |
| Memory Virtualization | AME | Hardware or AIX |
| Storage Virtualization | Shared storage pools (SSPs) | Hypervisor or VIOS |
| Storage Virtualization | vSCSI | Hypervisor or VIOS |
| Storage Virtualization | Virtual Fibre Channel (NPIV) | Hypervisor or VIOS |
| Storage Virtualization | Virtual Optical Device and Tape | Hypervisor or VIOS |
| Network Virtualization | VEA | Hypervisor or HMC |
| Network Virtualization | SEA | Hypervisor, VIOS, or HMC |
| Network Virtualization | Single-root I/O virtualization (SR-IOV) | Hypervisor or adapter |
| Network Virtualization | SR-IOV with vNIC | Hypervisor, adapter, or VIOS |
| Network Virtualization | HNV | Hypervisor, adapter, VIOS, or HMC |

Table 1-3 lists the deprecated PowerVM features and technologies.

Table 1-3 Deprecated PowerVM features

| Features and technologies | Function provided by |
|---|---|
| Integrated Virtualization Manager (IVM) | Hypervisor, VIOS, or IVM |
| Active Memory Sharing | Hypervisor or VIOS |
| Active Memory Deduplication | Hypervisor |
| Partition Suspend/Resume | Hypervisor or VIOS |
| Versioned WPARs | AIX |
| Host Ethernet Adapter (HEA) | Hypervisor |
| PowerVP | Hypervisor |

1.2 IBM Power

The Power name is closely aligned with the physical processors that implement the Power architecture. The family of servers that are based on IBM POWER® processors is collectively referred to as IBM Power.

Power servers are the core element of the PowerVM ecosystem. Power servers are widely known for their reliability, scalability, and performance characteristics for the most demanding workloads. They provide enterprise class virtualization for resource management and flexibility. Over decades, in every generation, Power servers were continuously enhanced in every aspect (performance, reliability, scalability, and flexibility), providing a wide range of options and solutions to customers.

Figure 1-2 on page 7 shows the sustained and consistent IBM roadmap of technology advancements in Power servers.

Figure 1-2 IBM POWER processor roadmap

The IBM Power family of scale-out and scale-up servers includes workload consolidation platforms that help clients control costs and improve overall performance, availability, and energy efficiency.

At the time of writing, Power10 is the latest available processor technology in the portfolio, with offerings that range from enterprise-class systems to scale-out systems. The list of available Power10 processor-based systems is as follows:

•Enterprise scale-up

– IBM Power E1080 (9080-HEX)

– IBM Power E1050 (9043-MRX)

•Scale-out

– IBM Power S1024 (9105-42A)

– IBM Power S1022 (9105-22A)

– IBM Power S1022s (9105-22B)

– IBM Power S1014 (9105-41B)

– IBM Power L1014 (9786-42H)

– IBM Power L1022 (9786-22H)

– IBM Power L1024 (9786-42H)

For more information about Power servers, see IBM Power servers, found at:

All Power servers are equipped with the PHYP, which is embedded in the system firmware. When a machine is powered on, the PHYP is automatically loaded together with the system firmware. It enables virtualization capabilities at the heart of the hardware and minimizes the virtualization overhead as much as possible.

Some Power servers can be used as stand-alone servers without an HMC. However, the most common approach is to use an HMC to extend the capabilities of PowerVM, including LPAR and virtualization.

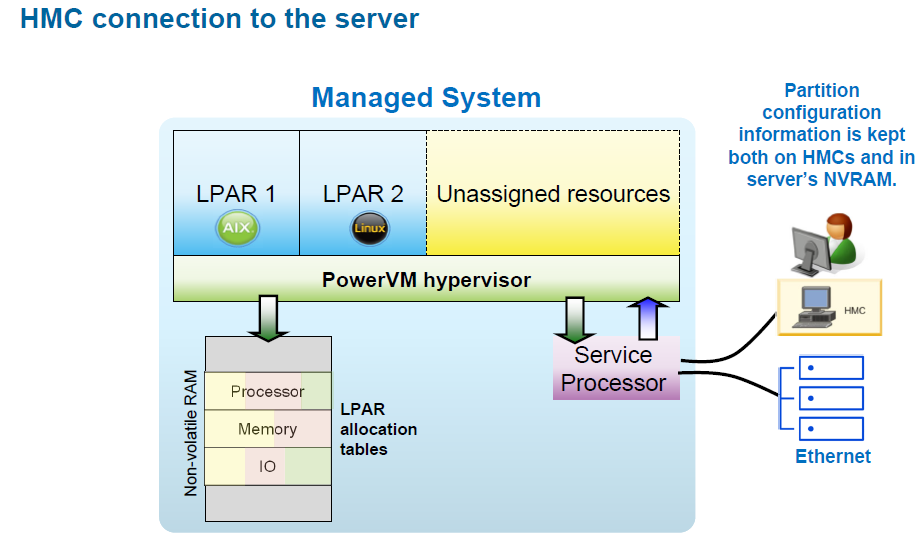

Figure 1-3 provides a high-level overview of a Power server that is managed by an HMC.

Figure 1-3 Power server that is managed by a Hardware Management Console

1.2.1 OpenPOWER

In August 2013, IBM worked closely with Google, Mellanox, Nvidia, and Tyan to establish the OpenPOWER Foundation. The goal of this collaboration is to establish an open ecosystem that is based on the IBM Power architecture.

In August 2019, IBM announced that the OpenPOWER Foundation would become part of the Linux Foundation. At the same time, IBM further opened the architecture to the world by allowing anybody to implement and manufacture their own processors capable of running software that is built for the Power Instruction Set Architecture (ISA) at no additional charge. IBM continues to base its POWER processors (including Power10) on the Power ISA, which is managed by the foundation. Since its inception, membership in the OpenPOWER Foundation has grown to over 150 organizations and academic partners.

1.2.2 Operating systems support on IBM Power servers

IBM Power architecture is bi-endian, which allows running operating systems that are designed for either Big Endian or Little Endian platforms. As a result, Power servers support running IBM AIX, IBM i, and Linux workloads simultaneously.

For more information, see the following resources:

•IBM AIX Standard Edition, found at:

•IBM i operating system, found at:

•Enterprise Linux servers, found at:

1.3 PowerVM facts and features

PowerVM is built on the Power platform and virtualization technologies that are enabled by the PHYP and the VIOS.

Note: Historically, three editions of PowerVM, which were suited for various purposes, were available. These editions were PowerVM Express Edition, PowerVM Standard Edition, and PowerVM Enterprise Edition. Today, PowerVM Enterprise Edition is the only option, and it is automatically included in all Power servers.

PowerVM Enterprise Edition consists of the following components and features:

•PHYP

•VIOS

•HMC

•PowerVM NovaLink

•Service processor

•VMI

•LPAR

•DLPAR

•CoD

•IBM Power Enterprise Pools (PEP)

•LPM

•SRR

•AIX WPAR

•SPT

•Micro-Partitioning technology

•POWER processor compatibility modes

•SMT

•SPP

•PowerVM AMM

•PowerVM AME

•SSP

•Thin provisioning

•vSCSI

•Virtual Fibre Channel (NPIV)

•Virtual optical device and tape

•VEA

•SEA

•SR-IOV

•SR-IOV with vNIC adapters

•HNV

The remainder of this chapter briefly describes these PowerVM features.

1.3.1 PowerVM hypervisor

The PHYP is the foundation of PowerVM. The PHYP divides physical system resources into isolated LPARs. Each LPAR operates like an independent server that runs its own operating system, such as AIX, IBM i, Linux, or VIOS. The PHYP can assign dedicated processors, memory, and I/O resources to each LPAR, and these resources can be dynamically reconfigured as needed. The PHYP also can assign shared processors to each LPAR by using its Micro-Partitioning feature. The PHYP creates an SPP from which it allocates virtual processors to the LPARs as needed. In other words, the PHYP creates virtual processors so that LPARs can share the physical processors while running independent operating environments.
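A shared-processor LPAR's entitlement can be read as a guaranteed slice of physical core time in each hypervisor dispatch cycle. The sketch below assumes a 10 ms dispatch window, the value that is commonly cited for PowerVM. It also relies on the rule that a virtual processor can use at most one physical core at a time. Uncapped partitions can consume more than their entitlement when idle capacity exists, which this sketch does not model.

```python
DISPATCH_WINDOW_MS = 10.0  # assumed dispatch window length for this sketch


def guaranteed_time(entitled_cores: float, virtual_processors: int) -> tuple:
    """Return (total ms, ms per virtual processor) of physical core time
    that a shared-processor LPAR is entitled to in each dispatch window.

    A virtual processor can use at most one physical core at a time, so the
    entitlement may not exceed the number of virtual processors.
    """
    if virtual_processors < 1:
        raise ValueError("need at least one virtual processor")
    if entitled_cores > virtual_processors:
        raise ValueError("entitlement exceeds one core per virtual processor")
    total_ms = entitled_cores * DISPATCH_WINDOW_MS
    return total_ms, total_ms / virtual_processors


# Hypothetical LPAR: 1.5 processing units spread over 2 virtual processors.
print(guaranteed_time(1.5, 2))
```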

Combined with features of the IBM POWER processors, the PHYP delivers functions that enable capabilities such as dedicated processor partitions, Micro-Partitioning, virtual processors, IEEE virtual local area network (VLAN) compatible virtual switches, VEAs, vSCSI adapters, virtual Fibre Channel (VFC) adapters, and virtual consoles.

The PHYP is a firmware layer between the hosted operating systems and the server hardware, as shown in Figure 1-4.

Figure 1-4 The PowerVM hypervisor abstracts physical server hardware

The PHYP is always installed and activated regardless of system configuration. The PHYP has no specific or dedicated processor resources that are assigned to it.

The PHYP performs the following tasks:

•Enforces partition integrity by providing a security layer between LPARs.

•Provides an abstraction layer between the physical hardware resources and the LPARs that use them. It controls the dispatch of virtual processors to physical processors, and saves and restores all processor state information during a virtual processor context switch.

•Controls hardware I/O interrupts and management facilities for partitions.

The PHYP firmware and the hosted operating systems communicate with each other through PHYP calls (hcalls).

1.3.2 Virtual I/O Server

As part of PowerVM, the VIOS is a software appliance with which you can associate physical resources and share these resources among multiple client LPARs.

The VIOS can provide both virtualized storage and virtualized network adapters to the virtual I/O clients. For storage, this is achieved by exporting the physical device on the VIOS through vSCSI. Alternatively, virtual I/O clients can access independent physical storage through the same or different physical Fibre Channel (FC) adapters by using NPIV technology. Virtual Ethernet enables IP-based communication between LPARs on the same system by using a VLAN-capable virtual switch that is provided by the hypervisor.

For storage virtualization that uses vSCSI, these backing devices can be used:

•Direct-attached entire disks from the VIOS.

•SAN disks that are attached to the VIOS.

•Logical volumes that are defined on either of the previously mentioned disks.

•File-backed storage, with files that are on either of the previously mentioned disks.

•Logical units (LUs) from SSPs.

•Optical storage devices.

•Tape storage devices.

For storage that uses NPIV, the VIOS facilitates VFC-to-FC mapping, which enables these backing devices:

•SAN disks that are directly presented to the VM, passing through VIOS.

•Tape storage devices that are directly presented to the VM, passing through VIOS.

For virtual Ethernet, you can define SEAs on the VIOS, bridging network traffic between the server internal virtual Ethernet networks and external physical Ethernet networks.

The VIOS technology facilitates the consolidation of LAN and disk I/O resources and minimizes the number of physical adapters that are required while meeting the nonfunctional requirements of the server.

The VIOS can run in either a dedicated processor partition or a shared processor partition (Micro-Partition).

Figure 1-5 shows a basic VIOS configuration. This diagram shows only a small subset of the capabilities to illustrate the basic concept of how the VIOS works. The physical resources such as the physical Ethernet adapter and the physical disk adapter are accessed by the client partition by using virtual I/O devices.

Figure 1-5 Simple Virtual I/O Server configuration

1.3.3 Hardware Management Console

The HMC is a dedicated Linux-based appliance that you use to configure and manage IBM Power servers. The HMC provides access to LPAR functions, service functions, and various system management functions through both a browser-based interface and a command-line interface (CLI). Because it is an external component, the HMC does not consume any resources from the systems that it manages, and you can maintain it without affecting system activity. The HMC can be configured for remote access by using either a Secure Shell (SSH) client or a web browser. The user can access only the HMC management application and is not allowed to install other applications.

A second HMC can be connected to a single Power server for redundancy purposes, which is called a dual HMC configuration. An HMC can manage up to 48 Power servers and a maximum of 2000 VMs.

The HMC enhances PowerVM with the following capabilities:

•Virtual console access for VMs

•VM configuration and operation management

•CoD management

•Service tools

•LPM

•SRR

At the time of writing, two types of HMCs are available:

•A rack-mounted physical HMC appliance

•A virtual HMC (vHMC) appliance

An HMC virtual appliance can be configured on a VM within a Power server. A Power server-based vHMC is not allowed to manage its own host Power server.

An HMC virtual appliance in an x86 environment can be configured by using one of the following hypervisors:

•KVM hypervisor

•Xen hypervisor

•VMware ESXi
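The HMC sizing rules in this section can be collected into a planning check. The model below is hypothetical and is not an HMC interface. It encodes only the limits that are stated here at the time of writing: up to 48 Power servers and 2000 VMs per HMC, and no Power server-based vHMC managing its own host.

```python
from dataclasses import dataclass, field

MAX_SERVERS = 48  # per-HMC limit stated in the text
MAX_VMS = 2000    # per-HMC limit stated in the text


@dataclass
class HmcPlan:
    """Planned HMC assignment (hypothetical planning model)."""
    vm_counts: dict = field(default_factory=dict)  # server name -> VM count
    host_server: str = ""  # set only when the HMC is a vHMC on a Power server


def check_hmc_plan(plan: HmcPlan) -> list:
    """Return a list of rule violations; an empty list means the plan fits."""
    problems = []
    if len(plan.vm_counts) > MAX_SERVERS:
        problems.append(f"{len(plan.vm_counts)} servers > {MAX_SERVERS}")
    total_vms = sum(plan.vm_counts.values())
    if total_vms > MAX_VMS:
        problems.append(f"{total_vms} VMs > {MAX_VMS}")
    if plan.host_server and plan.host_server in plan.vm_counts:
        problems.append(f"vHMC cannot manage its own host {plan.host_server}")
    return problems


print(check_hmc_plan(HmcPlan({"p10a": 900, "p10b": 1200}, host_server="p10a")))
```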

1.3.4 PowerVM NovaLink

PowerVM NovaLink is a software interface that is used for virtualization management. You can install PowerVM NovaLink on a Power server. PowerVM NovaLink enables highly scalable modern cloud management and deployment of critical enterprise workloads. You can use PowerVM NovaLink to provision many VMs on Power servers quickly and at a reduced cost.

PowerVM NovaLink runs on a Linux LPAR on IBM POWER8®, IBM POWER9™, or Power10 processor-based systems that are virtualized by PowerVM. You can manage the server through a Representational State Transfer application programming interface (REST API) or through a CLI. You can also manage the server by using PowerVC or other OpenStack solutions. PowerVM NovaLink is available at no additional charge for servers that are virtualized by PowerVM.

PowerVM NovaLink provides the following benefits:

•Rapidly provisions many VMs on Power servers.

•Simplifies the deployment of new systems. The PowerVM NovaLink installer creates a PowerVM NovaLink partition and VIOS partitions on the server and installs operating systems and the PowerVM NovaLink software. The PowerVM NovaLink installer reduces the installation time and facilitates repeatable deployments.

•Reduces the complexity and increases the security of your server management infrastructure. PowerVM NovaLink provides a server management interface on the server. The server management network between PowerVM NovaLink and its VMs is secure by design and is configured with minimal user intervention.

•Operates with PowerVC or other OpenStack solutions to manage your servers.

For more information, see PowerVM NovaLink, found at:

1.3.5 Service processor

The service processor is a separate, independent processor that provides hardware initialization during system load, monitoring of environmental and error events, and maintenance support. The HMC is connected to the managed system through an Ethernet connection to the service processor. The service processor performs many vital reliability, availability, and serviceability (RAS) functions. It provides the means to diagnose, check the status of, and sense operational conditions of a remote system, even when the main processor is inoperable. The service processor enables firmware and operating system surveillance; several remote power controls; environmental monitoring; reset and boot features; remote maintenance; and diagnostic activities.

Power servers up to and including the Power E1080 model are equipped with FSPs. Starting with the Power10 scale-out and midrange (Power E1050) models, servers are configured with the eBMC service processor instead of the FSP.

1.3.6 Virtualization Management Interface

eBMC-managed Power servers use a new model for communication between the HMC and the PHYP, in addition to the HMC connection to the Baseboard Management Controller (BMC). This interface is called the VMI. Administrators must configure the VMI IP address after an eBMC-based system is connected to the HMC. As a best practice, configure the VMI IP address before powering on the system. The HMC communicates with two endpoints to manage the system: the BMC itself and the VMI.

Figure 1-6 shows the difference between an FSP-based system and an eBMC-based system. The VMI interface shares the physical connection of the eBMC port but has its own IP address.

Figure 1-6 Comparing an FSP-based system with an eBMC-based system
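The two-endpoint arrangement of an eBMC-based system can be expressed as a simple pre-connection check. This is a hypothetical helper, not an HMC function. It verifies the two points that the text makes: the VMI needs its own IP address even though it shares the eBMC port, and the VMI address should be configured before the system is powered on. The addresses come from the 192.0.2.0/24 documentation range.

```python
import ipaddress


def check_ebmc_endpoints(bmc_ip: str, vmi_ip: str, powered_on: bool) -> list:
    """Sanity-check the two HMC endpoints of an eBMC-based server.

    The VMI shares the eBMC physical port but needs its own address, and
    the recommended practice is to configure it before power-on.
    powered_on describes the system state when the VMI is being configured.
    """
    issues = []
    bmc = ipaddress.ip_address(bmc_ip)  # raises ValueError if malformed
    vmi = ipaddress.ip_address(vmi_ip)
    if bmc == vmi:
        issues.append("VMI must have its own IP address, distinct from the eBMC")
    if powered_on:
        issues.append("best practice: configure the VMI IP before powering on")
    return issues


print(check_ebmc_endpoints("192.0.2.10", "192.0.2.11", powered_on=False))
```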

1.3.7 Logical partitioning

Logical partitioning is the ability to make a server run as though it were two or more independent servers. When you logically partition a server, you divide the resources on the server into subsets called LPARs. You can install software on an LPAR, and the LPAR runs as an independent logical server with the resources that you allocated to the LPAR.

You can assign processors, memory, and input/output devices to LPARs. You can run AIX, IBM i, Linux, and the VIOS in LPARs. The VIOS provides virtual I/O resources to other LPARs with general-purpose operating systems.

Note: The terms LPAR and VM have the same meaning and are used interchangeably in this publication.

LPARs share a few system attributes, such as the system serial number, system model, and processor feature code. All other system attributes can vary from one LPAR to another one.

You can create a maximum of 1000 LPARs on a server. You must use tools to create LPARs on your servers. The tool that you use to create LPARs on each server depends on the server model and the operating systems and features that you want to use on the server.

Each LPAR has its own instance of each of the following components:

•Operating system

•Licensed Internal Code (LIC) and open firmware

•Console

•Resources

•Other items that are expected in a stand-alone operating system environment, such as:

– Problem logs

– Data (libraries, objects, and file systems)

– Performance characteristics

– Network identity

– Date and time

Each LPAR can be:

•Dynamically modified

•Relocated (LPM)

Benefits of using partitions

Some benefits that are associated with the usage of partitions are as follows:

•Capacity management: flexibility in allocating system resources.

•Consolidation:

– Consolidate multiple workloads that run on different hardware and under different software licenses, and reduce floor space, support contracts, and in-house support and operations.

– Efficient use of resources: dynamically reallocate resources and use LPM to support performance and availability.

•Application isolation on a single frame:

– Separate workloads.

– Guaranteed resources.

– Data integrity.

•Merge production and test environments: test on the same hardware on which you deploy the production environment.

For more information about LPARs, see Logical partition overview, found at:

1.3.8 Dynamic logical partitioning

DLPAR refers to the ability to move resources between partitions without shutting down the partitions. This goal can be accomplished from the HMC application or by using the HMC CLI. These resources include the following ones:

•Processors, memory, and I/O slots.

•Virtual devices, which can be added and removed.

DLPAR operations do not weaken the security or isolation between LPARs. A partition sees only resources that are explicitly allocated to the partition along with any potential connectors for more virtual resources that might be configured. Resources are reset when moved from one partition to another one. Processors are reinitialized; memory regions are cleared; and adapter slots are reset.
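A DLPAR processor move can be pictured as a bounded transfer between two running partitions. The sketch below assumes that each partition has configured minimum and maximum values, as PowerVM partition profiles do, and rejects any move that would take either partition outside that range. The partition names are hypothetical. Resetting the moved resources and the RMC dependency are not modeled.

```python
from dataclasses import dataclass


@dataclass
class Partition:
    """Dedicated-processor settings of a partition (hypothetical model)."""
    name: str
    minimum: int
    current: int
    maximum: int


def move_processors(src: Partition, dst: Partition, count: int) -> None:
    """Move dedicated processors between running partitions, DLPAR style.

    The move is rejected if either partition would leave its configured
    minimum..maximum range, so both stay within their profile limits.
    """
    if count <= 0:
        raise ValueError("count must be positive")
    if src.current - count < src.minimum:
        raise ValueError(f"{src.name} would drop below its minimum")
    if dst.current + count > dst.maximum:
        raise ValueError(f"{dst.name} would exceed its maximum")
    src.current -= count
    dst.current += count


dev = Partition("dev", minimum=1, current=4, maximum=8)
prod = Partition("prod", minimum=2, current=4, maximum=6)
move_processors(dev, prod, 2)  # shift capacity to production during a peak
print(dev.current, prod.current)
```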

DLPAR operations depend on Resource Monitoring and Control (RMC) communication between the HMC and the LPAR.

DLPAR is described in more detail in 2.5, “Dynamic logical partitioning” on page 52.

For more information about DLPAR, see Dynamic logical partitioning, found at:

1.3.9 Capacity on Demand

With CoD offerings, you can dynamically activate one or more resources on your server as your business peaks dictate. You can activate inactive processor cores or memory units that are installed on your server on a temporary or permanent basis. CoD offerings are available on selected IBM servers.
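The CoD accounting can be sketched as a small state model for processor cores. The class below is hypothetical and is not an IBM licensing interface. It captures the constraint that only installed but inactive cores can be activated, and the distinction between temporary activations, which expire, and permanent ones.

```python
from dataclasses import dataclass


@dataclass
class CodCores:
    """Processor-core activation state of one server (hypothetical model)."""
    installed: int
    permanent: int
    temporary: int = 0

    def active(self) -> int:
        return self.permanent + self.temporary

    def _check(self, cores: int) -> None:
        # Only cores that are physically installed but inactive can be used.
        if cores <= 0 or self.active() + cores > self.installed:
            raise ValueError("not enough inactive installed cores")

    def activate_temporary(self, cores: int) -> None:
        self._check(cores)
        self.temporary += cores

    def activate_permanent(self, cores: int) -> None:
        self._check(cores)
        self.permanent += cores

    def end_temporary(self) -> None:
        # A temporary activation ends; permanent activations remain.
        self.temporary = 0


server = CodCores(installed=32, permanent=20)
server.activate_temporary(8)  # business peak
print(server.active())
server.end_temporary()
print(server.active())
```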

CoD is described in more detail in 2.9, “Capacity on Demand” on page 66.

For more information about CoD, see Capacity on Demand, found at:

1.3.10 Power Enterprise Pools

PEP are infrastructure licensing models that allow resource flexibility and sharing among Power servers, which enables cost efficiency. PEP are built on the CoD capability of Power servers, which allows pool member machines to consume processor and memory resources beyond their initial configuration. Two PEP offerings are available:

•PEP 1.0

•PEP 2.0 (Power Systems Private Cloud with Shared Utility Capacity)

IBM PEP 1.0 is built on mobile capacity for processor and memory resources. You can move mobile resource activations among the systems in a pool with HMC commands. These operations provide flexibility when you manage large workloads in a pool of systems and help to rebalance resources in response to business needs. This feature is useful for providing continuous application availability during maintenance. Workloads, processor activations, and memory activations can be moved to other systems. Disaster recovery (DR) planning also is more manageable and cost-efficient because of the ability to move activations where and when they are required.

IBM PEP 2.0, also known as Power Systems Private Cloud with Shared Utility Capacity, provides enhanced multisystem resource sharing and by-the-minute consumption of on-premises compute resources to clients who deploy and manage a private cloud infrastructure. All installed processors and memory on servers in a Power Enterprise Pool 2.0 are activated and made available for immediate use when a pool is started. Processor and memory usage on each server are tracked by the minute and aggregated across the pool by IBM Cloud® Management Console (IBM CMC). Base Processor Activation features and Base Memory Activation features and the corresponding software license entitlements are purchased for each server in a Power Enterprise Pool 2.0.

The base resources are aggregated and shared across the pool without having to move them from server to server. The unpurchased capacity in the pool can be used on a pay-as-you-go basis. Resource usage that exceeds the pool's aggregated base resources is charged as metered capacity by the minute and debited against purchased capacity credits on a real-time basis. Capacity credits can be purchased from IBM, an authorized IBM Business Partner, or online through the IBM Entitled Systems Support (IBM ESS) website, where available.
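The metering rule in PEP 2.0 can be illustrated with per-minute arithmetic for processors. The function below is a hypothetical sketch, not the IBM CMC algorithm. It only compares the pool's total usage in each minute with the pool's aggregated base activations. Memory metering works on the same principle but is omitted, and the conversion of metered minutes into capacity credits depends on IBM rates that are not modeled here.

```python
def metered_core_minutes(samples: list, base_cores: dict) -> float:
    """Core-minutes above the pool's aggregated base activations.

    samples holds one dict per minute (server -> cores used). Base
    activations are pooled rather than per server, so a server may run
    above its own base without metering as long as the pool total stays
    within the aggregated base.
    """
    pool_base = sum(base_cores.values())
    metered = 0.0
    for minute in samples:
        metered += max(0.0, sum(minute.values()) - pool_base)
    return metered


base = {"s1": 10, "s2": 6}  # hypothetical base activations per server
usage = [
    {"s1": 12, "s2": 3},    # pool uses 15 of 16 cores: nothing metered
    {"s1": 14, "s2": 5},    # pool uses 19 cores: 3 core-minutes metered
]
print(metered_core_minutes(usage, base))
```

In the first minute, server s1 runs above its own base of 10 cores, but nothing is metered because s2 leaves enough unused base capacity in the pool.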

Shared Utility Capacity simplifies system management, so clients can focus on optimizing their business results instead of moving resources and applications around within their data center. Resources are tracked and monitored by IBM CMC, which automatically tracks usage by the minute and debits against Capacity Credits, which are based on actual usage. With Shared Utility Capacity, you no longer need to worry about over-provisioning capacity to support growth because all resources are activated on all systems in a pool. Purchased Base Activations can be seamlessly shared between systems in a pool, and all unpurchased capacity can be used on a pay-per-use basis.

PEP are described in more detail in 2.10, “Power Enterprise Pools” on page 70.

IBM Cloud Management Console

IBM CMC for IBM Power servers provides a consolidated view of the Power servers in your enterprise. It runs as a service that is hosted in IBM Cloud, and you can access it securely anytime and anywhere to monitor and gain insights about your Power servers. IBM CMC can be deployed to different IBM Cloud regions based on client input. Dynamic views of performance, inventory, and logging for a complete Power enterprise, whether on-premises or off-premises, simplify and unify information in a single location. This information allows clients to easily make more informed decisions. As private and hybrid cloud deployments grow, enterprises need new insight into these environments. Tools that provide consolidated information and analytics can be key enablers to smooth operation of infrastructure.

The following applications are available on IBM CMC:

•Inventory

•Logging

•Patch Planning

•Capacity Monitoring

•Enterprise Pools 2.0

1.3.11 Live Partition Mobility

By using partition mobility, a component of the PowerVM Enterprise Edition hardware feature, you can migrate AIX, IBM i, and Linux LPARs from one system to another one. The mobility process transfers the system environment, which includes the processor state, memory, attached virtual devices, and connected users.

By using active partition migration (LPM), you can migrate AIX, IBM i, and Linux LPARs that are running, including the operating system and applications, from one system to another one. The LPAR and the applications that are running on that migrated LPAR do not need to be shut down.

By using inactive partition migration, you can migrate a powered-off AIX, IBM i, or Linux LPAR from one system to another one.

You can use the HMC to migrate an active or inactive LPAR from one server to another one.

LPM is described in more detail in 2.6, “Partition mobility” on page 54.

For more information about LPM, see Partition mobility, found at:

1.3.12 Simplified Remote Restart

SRR is an HA option for LPARs. When an error causes a server outage, a partition that is configured with the SRR capability can be restarted on a different physical server. Restarting the failed server can sometimes take a long time. In that case, SRR provides faster reprovisioning of the partition, because restarting the partition on another server completes sooner than restarting the failed server and then restarting the partition on it.

The SRR feature is supported on IBM Power8 or later processor-based systems.

Here are the characteristics of the SRR feature:

•During the SRR operation, the LPAR is shut down and then restarted on a different system.

•The SRR feature preserves the resource configuration of the partition. If processors, memory, or I/O are added or removed while the partition is running, the SRR operation activates the partition with the most recent configuration.

The SRR feature is not supported from the HMC for LPARs that are co-managed by the HMC and PowerVM NovaLink. However, you can run SRR operations by using PowerVC with PowerVM NovaLink.

SRR is described in more detail in 2.7, “Simplified Remote Restart” on page 59.

For more information, see Simplified Remote Restart, found at:

1.3.13 AIX Workload Partitions

WPARs are virtualized operating system environments within a single instance of the AIX operating system. WPARs secure and isolate the environment for the processes and signals that are used by enterprise applications.

For more information about WPARs, see IBM Workload Partitions for AIX, found at:

1.3.14 IBM System Planning Tool

The SPT is a browser-based application that helps you design system configurations. It is useful for designing LPARs. You can use SPT to plan a system that is based on existing performance data or based on new workloads. System plans that are generated by the SPT can be deployed on the system by the HMC. The SPT is available to help the user with system planning, design, and validation, and to provide a system validation report that reflects the user's system requirements while not exceeding system recommendations.

For more information about SPT, see IBM System Planning Tool for POWER processor-based systems, found at:

1.3.15 Micro-Partitioning

Micro-Partitioning technology enables multiple partitions to share system processing power. All processors that are not dedicated to specific partitions are placed in the SPP, which is managed by the hypervisor. Partitions that are configured to use shared processors can use the SPP. A shared processor partition can be assigned as little as 0.05 processing units, which is approximately one-twentieth of the processing capacity of a single processor. Its processing units can be specified with a granularity of one-hundredth (0.01) of a processing unit. This ability to assign fractions of processing units to partitions, and to let partitions share processing units, is called Micro-Partitioning technology.
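The two entitlement rules in this paragraph can be expressed as a small validation routine: a minimum of 0.05 processing units, and a granularity of 0.01. This Python sketch checks only those two rules. The function names are hypothetical. Real HMC validation applies further constraints, such as constraints that involve the partition's virtual processors and its minimum and maximum settings, and this sketch does not model them.

```python
from decimal import Decimal

MIN_UNITS = Decimal("0.05")  # smallest entitlement for a shared processor partition
STEP = Decimal("0.01")       # granularity: one-hundredth of a processing unit

def is_valid_entitlement(units: str) -> bool:
    """Return True if `units` meets the minimum and the 0.01 granularity."""
    value = Decimal(units)
    return value >= MIN_UNITS and value % STEP == 0

def partitions_per_core() -> int:
    """How many minimum-size micro-partitions fit into one processor's capacity."""
    return int(Decimal("1.00") / MIN_UNITS)
```

For example, `is_valid_entitlement("0.37")` is accepted, but `"0.04"` (below the minimum) and `"0.375"` (finer than 0.01) are rejected. The result of `partitions_per_core()`, 1 / 0.05 = 20, matches the 20 partitions per processor that are cited in 1.5, "PowerVM scalability".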

For more information about Micro-Partitioning, see Micro-Partitioning technology, found at:

1.3.16 POWER processor compatibility modes

Processor compatibility modes enable you to migrate LPARs between servers that have different processor types without upgrading the operating environments that are installed in the LPARs.

You can run several versions of the AIX, IBM i, Linux, and VIOS operating environments in LPARs. Sometimes, earlier versions of these operating environments do not support the capabilities that are available with new processors, which limits your flexibility to migrate LPARs between servers that have different processor types.

A processor compatibility mode is a value that is assigned to an LPAR by the hypervisor that specifies the processor environment in which the LPAR can successfully operate. When you migrate an LPAR to a destination server that has a different processor type from the source server, the processor compatibility mode enables that LPAR to run in a processor environment on the destination server in which it can successfully operate. In other words, the processor compatibility mode enables the destination server to provide the LPAR with a subset of processor capabilities that are supported by the operating environment that is installed in the LPAR.
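The behavior described above can be pictured as choosing the newest processor mode that both the operating environment in the LPAR and the destination server support. The following Python sketch models that idea with an ordered list of mode names. The mode list, its ordering, the function names, and the selection rule are simplifying assumptions for illustration, not the hypervisor's actual algorithm. For example, the HMC distinguishes between preferred and current modes, which this sketch does not model.

```python
# Hypothetical, simplified ordering of processor compatibility modes (oldest first).
MODES = ["POWER8", "POWER9", "Power10"]

def effective_mode(os_max_mode: str, server_max_mode: str) -> str:
    """Pick the newest mode that exceeds neither the OS support nor the server hardware."""
    return MODES[min(MODES.index(os_max_mode), MODES.index(server_max_mode))]

def can_migrate(current_mode: str, destination_max_mode: str) -> bool:
    """Assume a running LPAR can move only if the destination supports its current mode."""
    return MODES.index(current_mode) <= MODES.index(destination_max_mode)
```

In this model, an LPAR whose operating system supports only POWER9 capabilities runs in POWER9 mode even on a Power10 destination. The destination exposes only the subset of processor capabilities that the operating environment can use.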

For more information about processor compatibility modes, see Processor compatibility modes, found at:

1.3.17 Simultaneous multithreading

SMT is a processor technology that allows multiple instruction streams (threads) to run concurrently on the same physical processor, which improves overall throughput. Because instructions from more than one thread can run at the same time, the processor can continue performing useful work even if one thread must wait for data to be loaded. The operating system treats each hardware thread as an independent logical processor. Single-threaded (ST) execution mode is also supported. Power8 and later processors support up to eight SMT threads per core. Different VMs on the same server can be configured with different SMT modes, which can be changed at run time.

Figure 1-7 shows the relationship between physical, virtual, and logical processors.

Figure 1-7 Physical, virtual, and logical processors
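The relationship in Figure 1-7 reduces to a multiplication. Each virtual processor, or each dedicated core, presents one logical processor to the operating system for each active SMT thread. The following Python sketch assumes the ST (1), SMT2, SMT4, and SMT8 modes. The function name is hypothetical.

```python
VALID_SMT_MODES = (1, 2, 4, 8)  # ST, SMT2, SMT4, SMT8

def logical_processors(virtual_processors: int, smt_threads: int) -> int:
    """Number of logical processors that the OS sees for a partition."""
    if smt_threads not in VALID_SMT_MODES:
        raise ValueError(f"unsupported SMT mode: {smt_threads}")
    if virtual_processors < 1:
        raise ValueError("a partition needs at least one virtual processor")
    return virtual_processors * smt_threads
```

For example, a partition with four virtual processors presents 32 logical processors in SMT8 mode and 16 logical processors in SMT4 mode.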

1.3.18 Shared processor pools

An SPP is a hypervisor-assisted capability that allows a specific group of Micro-Partitions (and their associated virtual processors) to share physical processing resources.

Shared processors, SPP, and MSPP are described in detail in 2.1.3, “Shared processors” on page 34 and 2.1.5, “Multiple shared processor pools” on page 36.

1.3.19 Active Memory Mirroring

AMM for the hypervisor is a RAS feature that is designed to ensure that system operation continues even in the unlikely event of an uncorrectable error in the main memory that is used by the system hypervisor. When this feature is activated, two identical copies of the system hypervisor are maintained in memory, and both copies are updated simultaneously with any changes. This feature is also sometimes referred to as system firmware mirroring.


Note: AMM does not mirror partition data. It mirrors only the hypervisor code and its components, allowing this data to be protected against a DIMM failure.


If a memory failure occurs on the primary copy, the second copy is used automatically, which eliminates platform outages due to uncorrectable errors in system hypervisor memory. The logical memory blocks (LMBs) that hold hypervisor code are mirrored on distinct DDIMMs, which leaves more memory usable than mirroring entire DDIMMs would. Because no specific DDIMM hosts the hypervisor memory blocks, the mirroring is done at the LMB level, not at the DDIMM level. To enable the AMM feature, the server must have enough free memory to accommodate the mirrored memory blocks. The SPT can help to estimate the amount of memory that is required.

Figure 1-8 A simple view of Active Memory Mirroring
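Mirroring is done per LMB, so the extra memory that AMM needs can be roughly estimated as the number of hypervisor LMBs multiplied by the LMB size. The following Python sketch performs that free-memory check. The LMB count, the LMB size, and the function names are hypothetical inputs. In practice, use the SPT to produce the estimate.

```python
def amm_extra_memory_mb(hypervisor_lmbs: int, lmb_size_mb: int) -> int:
    """Extra memory that the second copy of the hypervisor LMBs consumes."""
    return hypervisor_lmbs * lmb_size_mb

def can_enable_amm(free_memory_mb: int, hypervisor_lmbs: int, lmb_size_mb: int) -> bool:
    """AMM needs enough free memory to hold one mirrored copy of each hypervisor LMB."""
    return free_memory_mb >= amm_extra_memory_mb(hypervisor_lmbs, lmb_size_mb)
```

With the hypothetical values of 40 hypervisor LMBs of 256 MB each, the mirror needs 10240 MB. A server with 16384 MB free passes the check, and a server with 8192 MB free does not.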

1.3.20 Active Memory Expansion

AME is an AIX operating system feature that compresses and decompresses memory in flight by using hardware accelerators. The objective is to achieve better memory utilization and efficiency by trading extra processing capacity for more usable memory for the applications.
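The following sketch puts the size side of this tradeoff in numbers. AME is configured for each partition with a memory expansion factor. The operating system presents the true (physical) memory multiplied by that factor, and compressing and decompressing pages to sustain that size costs processor capacity. This Python sketch computes only the memory sizes. The function names and example values are assumptions, and the processor cost is not modeled.

```python
from decimal import Decimal

def expanded_memory_gb(true_memory_gb: str, expansion_factor: str) -> Decimal:
    """Memory size that the OS presents: true memory times the expansion factor."""
    factor = Decimal(expansion_factor)
    if factor < 1:
        raise ValueError("expansion factor must be at least 1.0")
    return Decimal(true_memory_gb) * factor

def memory_gained_gb(true_memory_gb: str, expansion_factor: str) -> Decimal:
    """Additional usable memory that is gained by spending CPU cycles on compression."""
    return expanded_memory_gb(true_memory_gb, expansion_factor) - Decimal(true_memory_gb)
```

For example, a partition with 32 GB of true memory and an expansion factor of 1.5 presents 48 GB to applications. The extra 16 GB is paid for in processor cycles.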

AME is described in more detail in 2.2.2, “Active Memory Expansion” on page 39.

1.3.21 Shared storage pools

An SSP is a clustered, server-based storage virtualization that extends the existing storage virtualization on the VIOS. In this feature, SAN-based LUNs are presented to a cluster of VIOSs and pooled together so that VIOS administrators can create logical units (LUs) from the shared pool.
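The pooling idea can be sketched as a data structure. LUNs from the SAN are added to one shared capacity, and LUs are carved out of that capacity rather than out of any single LUN. The following Python class and its method names are hypothetical. The sketch models only thick (fully allocated) LUs and ignores clustering, thin provisioning, and redundancy.

```python
from dataclasses import dataclass, field

@dataclass
class SharedStoragePool:
    """Toy model of a pool that is built from SAN LUNs (hypothetical, thick LUs only)."""
    lun_sizes_gb: list[int] = field(default_factory=list)
    lus: dict[str, int] = field(default_factory=dict)

    def add_lun(self, size_gb: int) -> None:
        """Contribute a SAN LUN's capacity to the pool."""
        self.lun_sizes_gb.append(size_gb)

    @property
    def capacity_gb(self) -> int:
        return sum(self.lun_sizes_gb)

    @property
    def free_gb(self) -> int:
        return self.capacity_gb - sum(self.lus.values())

    def create_lu(self, name: str, size_gb: int) -> None:
        """Carve a logical unit out of the pooled capacity, not out of one LUN."""
        if name in self.lus:
            raise ValueError(f"LU {name!r} already exists")
        if size_gb > self.free_gb:
            raise ValueError("not enough free space in the pool")
        self.lus[name] = size_gb
```

For example, after two 100 GB LUNs are added, a 150 GB LU can be created even though neither LUN alone is large enough, because the LU draws on the pooled capacity.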

SSP is described in more detail in 2.3.3, “Shared storage pools” on page 44.

1.3.22 Virtual SCSI

vSCSI refers to a virtualized implementation of the SCSI protocol and is based on a client/server relationship. The VIOS owns the physical resources and acts as the server, or in SCSI terms, the target device. The client LPARs act as clients (SCSI initiators) and access the vSCSI backing storage devices that the VIOS provides.

vSCSI is described in more detail in 2.3.1, “Virtual SCSI” on page 42.

1.3.23 Virtual Fibre Channel

NPIV is an industry-standard technology that allows an NPIV-capable FC adapter to be configured with multiple virtual worldwide port names (WWPNs). This technology also is called VFC. Similar to the vSCSI function, VFC is another way of securely sharing a physical FC adapter among multiple VIOS client partitions.

NPIV is described in more detail in 2.3.2, “Virtual Fibre Channel” on page 43.

1.3.24 Virtual optical device and tape

A CD or DVD device or a tape device that is attached to a VIOS can be virtualized and assigned to virtual I/O clients.

1.3.25 Virtual Ethernet Adapters

Virtual Ethernet allows the administrator to define in-memory connections between partitions that are handled at the system level (PHYP and operating systems interaction). These connections are represented as VEAs and exhibit characteristics like physical high-bandwidth Ethernet adapters. They support the industry standard protocols, such as IPv4, IPv6, ICMP, or Address Resolution Protocol (ARP).

VEAs are described in more detail in 2.4.1, “Virtual Ethernet Adapter” on page 46.

1.3.26 Shared Ethernet Adapter

A SEA is a VIOS component that bridges a real Ethernet adapter and one or more VEAs. A SEA allows many client partitions to effectively share physical network resources and communicate with networks outside of the server.

SEA is described in more detail in 2.4.2, “Shared Ethernet Adapter” on page 47.

1.3.27 Single-root I/O virtualization

SR-IOV is an extension to the Peripheral Component Interconnect Express (PCIe) specification that allows a single I/O adapter to be shared concurrently among multiple LPARs. It provides hardware-level speeds with no additional CPU usage because the adapter itself performs the virtualization at the hardware level. However, this performance comes at a cost: partitions that use SR-IOV directly lose the LPM and SRR capabilities.

SR-IOV with vNIC

SR-IOV with vNIC is the virtualized version of the SR-IOV technology. It enables LPM between servers and allows up to six backing devices for hypervisor-assisted automated failover. Because vNIC virtualization is provided through the VIOSs, it uses more CPU on the VIOS.
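Automated failover across backing devices can be pictured as choosing the healthy backing device with the best priority. The following Python sketch assumes that each backing device has a numeric failover priority and that a lower number wins. It also enforces the limit of six backing devices. The class, the function, and the device names are hypothetical, and the sketch does not reflect how the hypervisor implements failover.

```python
from dataclasses import dataclass

MAX_BACKING_DEVICES = 6

@dataclass(frozen=True)
class BackingDevice:
    name: str
    priority: int        # assumption: a lower value is preferred
    healthy: bool = True

def active_backing_device(devices: list[BackingDevice]) -> BackingDevice:
    """Return the healthy backing device with the best (lowest) priority value."""
    if not 1 <= len(devices) <= MAX_BACKING_DEVICES:
        raise ValueError("a vNIC needs between one and six backing devices")
    healthy = [d for d in devices if d.healthy]
    if not healthy:
        raise RuntimeError("no healthy backing device available")
    return min(healthy, key=lambda d: d.priority)
```

If the preferred device on the first VIOS is marked unhealthy, the same call selects the next-best device, which could be one on the second VIOS. Spreading backing devices across VIOSs therefore also protects against a VIOS outage.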

SR-IOV is described in more detail in 2.4.3, “Single-root I/O virtualization” on page 47.

1.3.28 Hybrid Network Virtualization

HNV allows AIX, IBM i, and Linux partitions to leverage the efficiency and performance benefits of SR-IOV LPs and participate in mobility operations such as LPM and SRR. HNV is enabled by selecting a new Migratable option when an SR-IOV LP is configured. HNV uses active-backup bonding to allow LPM for the partitions that are configured with an SR-IOV LP.

HNV is described in more detail in 2.4.5, “Hybrid Network Virtualization” on page 51.

1.4 PowerVM resiliency and availability

Power servers with PowerVM come with industry-leading resiliency features that are embedded in every layer of the stack, plus software solutions that strengthen the availability and robustness of the entire ecosystem.

Starting with the POWER processor and server architecture, Power servers are equipped with redundant components and techniques that are used to provide outstanding resiliency and availability.

PowerVM resiliency starts in the hardware layer. Examples include redundant power and cooling components, service processors, and system clocks.

In the hypervisor layer, PowerVM offers the AMM capability, which keeps two copies of the hypervisor memory.

In the management layer, PowerVM supports dual HMCs, and it also supports managing the same server concurrently with an HMC and PowerVM NovaLink.

In the virtualization layer, PowerVM supports multiple pairs of VIOSs. Redundant VIOSs (commonly referred to as dual VIOS) are recommended for each group of workloads. Dual VIOS enables higher throughput and availability, and provides resiliency through failover between the active VIOSs for storage and network virtualization.

At the architectural level, PowerVM is complemented by software solutions that offer higher levels of availability by applying industry best practices. These software solutions include IBM PowerHA SystemMirror®, IBM VM Recovery Manager (VMRM) HA, and IBM VM Recovery Manager for DR.

VMRM is described in more detail in 2.8, “VM Recovery Manager” on page 62.

To learn more about Power server RAS features, see Introduction to IBM Power Reliability, Availability, and Serviceability for IBM POWER9 processor-based systems by using IBM PowerVM, found at:

1.5 PowerVM scalability

PowerVM supports up to 16 VIOSs, up to 20 partitions per processor core, and up to 1000 LPARs per server. Without I/O virtualization, each partition requires a minimum of one I/O slot for disk attachment and a second I/O slot for Ethernet attachment. Therefore, at least 2000 I/O slots are required to support 1000 LPARs when dedicated physical adapters are used, and that is before any resilience or adapter redundancy is considered.
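The following Python sketch writes out the slot arithmetic in this paragraph. It assumes that each partition owns one storage adapter and one network adapter when dedicated physical adapters are used. An optional multiplier models duplicate adapters for redundancy. The function name and the redundancy parameter are illustrative only.

```python
def dedicated_slots_needed(lpars: int, slots_per_lpar: int = 2, redundancy: int = 1) -> int:
    """Physical I/O slots that are needed if every LPAR owns its own adapters.

    slots_per_lpar: one slot for disk plus one slot for Ethernet (the minimum in the text).
    redundancy: set to 2 to model a duplicate adapter for each function (illustrative).
    """
    return lpars * slots_per_lpar * redundancy
```

For 1000 LPARs, `dedicated_slots_needed(1000)` gives 2000 slots. With `redundancy=2`, it gives 4000 slots, which shows why sharing adapters through the VIOS becomes necessary.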

The high-end IBM Power servers can provide many physical I/O slots by attaching expansion drawers, but the total is still far below the number of physical I/O slots mentioned earlier. Midrange servers also have a lower maximum number of I/O ports than high-end servers. To overcome these physical limits, I/O resources are typically shared. vSCSI and VFC technologies, facilitated by the VIOS, provide the means to share I/O resources for storage. Virtual Ethernet, SEA, and SR-IOV based technologies provide the means for network resource sharing.

IBM Power servers can scale up to four system nodes, four processor sockets, and 16 TB of memory per system node, totaling a maximum of 64 TB memory, 16 processor sockets, and 240 cores in Power10 processor-based systems.
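The maximums in this paragraph follow from multiplying the per-node figures by the number of nodes. The cores-per-socket value in the following Python sketch is not stated in the text. It is derived here by dividing the 240-core total by the 16 sockets.

```python
NODES = 4
SOCKETS_PER_NODE = 4
MEMORY_TB_PER_NODE = 16
TOTAL_CORES = 240  # stated maximum for Power10 processor-based systems

total_sockets = NODES * SOCKETS_PER_NODE            # 16 sockets
total_memory_tb = NODES * MEMORY_TB_PER_NODE        # 64 TB
cores_per_socket = TOTAL_CORES // total_sockets     # derived: 15 cores per socket

print(f"{total_sockets} sockets, {total_memory_tb} TB, {cores_per_socket} cores per socket")
```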

1.6 PowerVM security

Security is one of the prime concerns for any digital organization today. Persistent end-to-end security is needed to reduce the exposure to potential security threats. PowerVM offers a platform with industry-leading security features for your workloads by closely coupling with built-in hardware-enabled security capabilities of Power servers.

The key PowerVM security features are as follows:

•Workload isolation

PowerVM provides data isolation between the deployed partitions.

•Secure Boot

A secure initial program load (IPL) process or the Secure Boot feature allows only correctly signed firmware components to run on the system processors. Each component of the firmware stack, including hostboot, the PHYP, and partition firmware, is signed by the platform manufacturer and verified as part of the IPL process.

•OS Secure Boot (Linux and AIX)

The OS Secure Boot feature extends the chain of trust to the LPAR by digitally verifying the OS boot loader, kernel, runtime environment, device drivers, kernel extensions, applications, and libraries.

•Trusted Platform Module (TPM)

A framework to support remote attestation of the system firmware stack through a hardware TPM.

•Virtual Trusted Platform Module (vTPM)

You can enable a vTPM on an LPAR by using the HMC after the LPAR is created.

•Platform KeyStore (PKS)

An AES-256 GCM encrypted non-volatile store to provide LPARs with more capabilities to protect sensitive information. PowerVM provides an isolated PKS storage allocation for each partition with individually managed access controls. Some of the possible use cases of this feature include the following ones:

– Boot device encryption.

– Self-encrypting drives.

– Unlocking encrypted logical volumes without requiring a passphrase.

– Public key and certificate protection.

– Lockable flash storage that is accessible early in the partition IPL and is then locked down against further access.

•Transparent Memory Encryption (TME)

The Power10 family of servers introduces a new layer of defense with end-to-end memory encryption. All data in memory remains encrypted while in transit between memory and processor. Because this capability is enabled at the silicon level, it requires no extra management setup and has no performance impact.

•Fully Homomorphic Encryption (FHE)

Power10 processor-based servers include four times more crypto engines in every core than Power9 processor-based servers to accelerate encryption performance across the stack. These innovations, along with new in-core defense against return-oriented programming attacks and support for post-quantum encryption and FHE, make one of the most secure server platforms even better.
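The Secure Boot and TPM items in the list above describe two complementary mechanisms. First, each firmware component is verified before it runs. Second, each component can be measured into a TPM platform configuration register (PCR) so that a remote party can later attest to what was loaded. The following Python sketch illustrates both ideas with SHA-256 digests from the standard library. It is an illustration only: a SHA-256 allowlist stands in for the digital signature verification that the real firmware performs, and the component names and images are hypothetical.

```python
import hashlib

def digest(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()

def extend(pcr: bytes, measurement: bytes) -> bytes:
    """TPM-style PCR extend: new value = SHA-256(old value || measurement)."""
    return hashlib.sha256(pcr + measurement).digest()

def boot(components, trusted_digests):
    """Verify, then measure, each component in load order.

    components: list of (name, image bytes) in boot order.
    trusted_digests: name -> expected SHA-256 digest (stand-in for a signature check).
    Returns the final PCR value; raises RuntimeError if any component fails verification.
    """
    pcr = bytes(32)  # a SHA-256 PCR starts as 32 zero bytes
    for name, image in components:
        measurement = digest(image)
        if trusted_digests.get(name) != measurement:
            raise RuntimeError(f"secure boot: {name} failed verification")
        pcr = extend(pcr, measurement)
    return pcr
```

Because each extend operation hashes the previous PCR value, the final value depends on every component and on the order in which the components were loaded. As a result, a verifier that knows the expected stack (for example, hypothetical images for hostboot, the PHYP, and partition firmware) can detect any substitution.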

For more information about Secure Boot in PowerVM, see Secure Boot in PowerVM, found at:

For more information about Secure Boot in AIX, see Secure boot, found at:

For more information about vTPM, see Creating a logical partition with Virtual Trusted Platform capability, found at:

For more information about how to secure the PowerVM environment, see Security, found at:

For more information about Platform KeyStore, see Enabling the platform keystore capability on a logical partition, found at:

For more information about TME, see 2.1.9, “Pervasive memory encryption”, in IBM Power E1080 Technical Overview and Introduction, REDP-5649.

For more information about FHE, see Homomorphic Encryption Services, found at:

Power servers benefit from the integrated security management capabilities that are offered by IBM PowerSC for managing security and compliance on Power servers (AIX, IBM i, and Linux on Power). PowerSC introduces extra features to help customers manage security end-to-end for virtualized environments that are hosted on Power servers. For more information, see IBM PowerSC, found at:

Figure 1-9 depicts the security features and capabilities that the Power servers stack provides for applications and workloads that are deployed on Power.

Figure 1-9 PowerVM Security

1.7 PowerVM and the cloud

This section describes IBM PowerVM in a cloud environment.

IBM PowerVC provides simplified virtualization management and cloud deployments for AIX, IBM i, and Linux VMs running on IBM Power servers. PowerVC is designed to build private cloud capabilities on Power servers and improve administrator productivity. It can further integrate with cloud environments through higher-level cloud orchestrators.

IBM Power Systems Virtual Server (PowerVS) is an IBM Power offering in IBM Cloud. Power Systems Virtual Servers are located in IBM data centers but are distinct from the IBM Cloud servers, with separate networks and direct-attached storage. The environment runs in its own pod, and its internal networks are fenced but offer connectivity options to meet customer requirements.

This infrastructure design enables PowerVS to maintain key enterprise software certification and support because the PowerVS architecture is identical to the certified on-premises infrastructure. The virtual servers, also known as LPARs, run on IBM Power server hardware with the PHYP.

Either in a Power Private Cloud with IBM PowerVC or in a public cloud like IBM PowerVS, PowerVM plays a key role when you implement Power servers in the cloud environment.

For more information, see the following resources:

•IBM PowerVM, found at:

•Advanced virtualization and cloud management, found at:

•What is a Power Virtual Server?, found at:

•IBM Cloud Pak® System Software documentation, found at:

IBM offers cloud suites that help optimize business environments for reliability and better performance. IBM Cloud options are available to meet your expectations and needs based on the running infrastructure.

Figure 1-10 shows the different IBM Cloud options.

Figure 1-10 IBM Cloud options

For a private cloud, IBM brings the speed, agility, and pricing flexibility of public cloud solutions to on-premises. With the Power Private Cloud with Shared Utility Capacity offering, IBM enables by-the-minute resource sharing across systems and metering, which leads to unmatched flexibility, simplicity, and economic efficiency. The IBM Power Private Cloud Rack solution offers an open hybrid cloud offering based on IBM and Red Hat portfolios.

Figure 1-11 shows the IBM Power Private Cloud Rack solution.

Figure 1-11 IBM Power Private Cloud Rack solution

For infrastructure as a service (IaaS) and private cloud management on Power servers, IBM offers IBM PowerVC as a fully automated platform.

Figure 1-12 shows IBM PowerVC for Power Private Cloud.

Figure 1-12 IBM PowerVC

If you seek an extensive and mixed cloud environment, hybrid cloud solutions can integrate private cloud services, on-premises, and public cloud services and infrastructure. They provide orchestration, management, and application portability across all layers. The result is a single, unified, and flexible distributed computing environment where an organization can run and scale its traditional or cloud-native workloads on the most appropriate computing model.

Figure 1-13 shows a high-level picture of hybrid cloud integration.

Figure 1-13 IBM hybrid cloud

1.8 PowerVC enhanced benefits

PowerVC is an advanced enterprise virtualization management offering for Power servers that is based on OpenStack technology. PowerVC is available to every PowerVM client. PowerVC provides simplified virtualization management and cloud deployments for AIX, IBM i, and Linux VMs that run on Power servers. Whereas PowerVM provides the virtualization for Power processor-based systems, PowerVC provides advanced virtualization and cloud management capabilities.

PowerVC is designed to build private cloud capabilities on Power servers and improve administrator productivity. PowerVC provides fast VM image capture, deployment, resizing, and management. Its integrated management of storage, network, and compute resources simplifies administration. Together, PowerVM and PowerVC improve resource usage and reduce complexity.

PowerVC integrates with widely accepted cloud and automation management tools in the industry like Ansible, Terraform, and Red Hat OpenShift. It can be integrated into orchestration tools like IBM Cloud Automation Manager (CAM), VMware vRealize, or SAP Landscape Management (LaMa). One of the great benefits is the easy transition of VM images between private and public cloud, as shown in Figure 1-12 on page 28.

Several different options exist to set up communication between managed hosts and PowerVC, depending on whether the HMC or PowerVM NovaLink is used to communicate with hosts.


Attention: If PowerVM NovaLink is installed in the host system, the system can still be added to HMC management by the normal procedure. However, if PowerVM NovaLink is installed in the host system and the host is also HMC-connected, the management type that PowerVC uses for this host must always be PowerVM NovaLink. The HMC can still be used to manage the hardware and firmware on the host system.


PowerVC is widely used by Power customers. PowerVC can manage up to 10,000 VMs, includes multifactor authentication (MFA) support, and supports persistent memory, creation of volume clones for backup, dynamic resource optimization, and single-click server evacuation.

For more information, see IBM PowerVC, found at:
