Solution overview

This chapter provides an introduction to the IBM Db2 Mirror for i solution for continuous availability, including its architecture, concepts, and interaction with high availability (HA) and disaster recovery (DR) (collectively known as HA/DR) solutions.

1.1 Introduction to Db2 Mirror for i

With the IBM i 7.4 release, IBM offers a new licensed program for the IBM i portfolio: IBM Db2 Mirror for i. This new offering enables near-continuous availability through an IBM i exclusive Db2 active-active 2-system configuration.

Db2 Mirror for i synchronously mirrors database updates between two separate nodes by using a Remote Direct Memory Access (RDMA) over Converged Ethernet (RoCE) network. Applications can be deployed in active-active mode or in active-passive mode (updates on one node and read access on the secondary). Db2 Mirror supports applications that use either traditional record-level access or SQL-based database access. It also supports both Java Database Connectivity (JDBC)-attached application servers and the traditional 5250 approach. The applications and databases can be in either the System Auxiliary Storage Pool (SYSBAS) or an Independent Auxiliary Storage Pool (IASP).

Db2 Mirror for i provides the following benefits:

•Continuous application availability: Provides a recovery time objective (RTO) of zero.

•Supports multiple technologies: Flexibility to choose between active-active and active-passive configurations for the application layer.

•Easy monitoring and management: Intuitive GUI for easy monitoring and management of Db2 Mirror for i.

•Supports rolling upgrades.

•No journaling is required for replication.

Figure 1-1 shows a general overview of the architecture of a Db2 Mirror environment that uses RoCE as a low-latency communication protocol.

Figure 1-1 Db2 Mirror for i architecture overview

1.2 Positioning

Db2 Mirror for i is designed to address business needs that require continuous application availability. It provides an RTO and a recovery point objective (RPO) of zero.

Figure 1-2 shows a spectrum of three different types of HA classes. The illustrations represent 2-node shared-storage configurations for conceptual simplicity. There are many other topologies and data resiliency combinations.

Figure 1-2 High availability classification

The positioning of IBM Db2 Mirror for i within this HA spectrum, including the level of provided outage protection, RPO/RTO, and licensing considerations, is shown in Table 1-1.

Table 1-1 Db2 Mirror for i positioning

| Technology | Active-active clustering | Active-passive clustering | Active-inactive |
|---|---|---|---|
| Definition | Application-level clustering: Applications in the cluster have simultaneous access to the production data. Therefore, there is no application restart after an application node outage. Certain types enable read-only access from secondary nodes. | Operating system (OS)-level clustering: One OS in the cluster has access to the production data and multiple OS instances on all nodes in the cluster. The application is restarted on a secondary node after an outage of a production node. | Virtual machine (VM)-level clustering: One VM in a cluster pair has access to the data, one logical OS, and one or two physical copies. The OS and applications must be restarted on a secondary node after a primary node outage event. Live Partition Mobility (LPM) enables the VM to be moved non-disruptively for a planned outage event. |
| Outage types | Software, hardware, HA, planned, unplanned, RTO 0, and limited distance. | Software, hardware, HA/DR, planned, unplanned, RTO > 0, and multi-site. | Hardware, HA/DR, planned, unplanned, RTO > 0, and multi-site. |
| OS integration | Inside the OS. | Inside the OS. | OS-neutral. |
| RPO | Synchronous mode only. | Synchronous and asynchronous. | Synchronous and asynchronous. |
| RTO | 0. | Fast (minutes). | Fast enough (VM restart). |
| Licensing ¹ | N+N licensing. | N+1 licensing. | N+0 licensing. |
| Industry examples | Oracle RAC, Db2 Mirror, and IBM pureScale®. | IBM PowerHA®, Red Hat HA, and Linux HA. | VMware, VM Recovery Manager (VMR) HA, and LPM. |

¹ N = number of licensed processor cores on each system in the cluster.

Db2 Mirror advantages

Db2 Mirror is easy to deploy and adopt. Users and applications are largely unaware that synchronous replication is keeping data between the two nodes identical. The data on both nodes is available for production use.

Db2 Mirror can run in two different environment modes:

•Active-passive mode: One node runs your applications while the other node is in standby mode. You can run queries or reports on your database.

•Active-active mode: Both nodes run your applications. Updates to the replicated data are synchronous, and users on both nodes see the same data.

These two modes are not fixed, and you can choose to adapt your environment to meet your needs.

Db2 Mirror can reduce, and in some cases eliminate, production outages that are caused by planned initial program loads (IPLs) and updates. With the ability to suspend replication, one node can continue processing transactions while the second node is restarted or maintenance is applied. After the IPL is complete and communication is reestablished between the nodes, the changes that were tracked by the active node are resynchronized with the secondary node to make the database files identical again. Then, the process can be reversed by suspending replication again and restarting or performing maintenance on the first node. For more information, see 5.1, “Planned outages” on page 70.

This process of using Db2 Mirror to avoid downtime for the business can also be used to upgrade your server or storage hardware.
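The suspend, maintain, resynchronize, and reverse sequence can be pictured with a small simulation. The following Python sketch is only a toy model under stated assumptions: the `Node`, `MirrorPair`, and `rolling_maintenance` names are hypothetical, dictionaries stand in for replicated objects, and none of this corresponds to an actual Db2 Mirror interface.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    state: str = "ACTIVE"                         # ACTIVE, TRACKING, or BLOCKED
    data: dict = field(default_factory=dict)      # stands in for replicated objects
    tracked: dict = field(default_factory=dict)   # changes made while TRACKING


class MirrorPair:
    """Toy model of two nodes; not the Db2 Mirror implementation."""

    def __init__(self, first, second):
        self.first, self.second = first, second

    def other(self, node):
        return self.second if node is self.first else self.first

    def write(self, node, key, value):
        if node.state == "BLOCKED":
            raise RuntimeError(f"{node.name} cannot change replicated objects")
        node.data[key] = value
        if node.state == "ACTIVE":
            self.other(node).data[key] = value    # synchronous replication
        else:
            node.tracked[key] = value             # remembered for resynchronization

    def suspend(self, keep_running):
        keep_running.state = "TRACKING"
        self.other(keep_running).state = "BLOCKED"

    def resume(self):
        # Send the tracked changes to the other node, then return to ACTIVE.
        for node in (self.first, self.second):
            if node.state == "TRACKING":
                self.other(node).data.update(node.tracked)
                node.tracked.clear()
        self.first.state = self.second.state = "ACTIVE"


def rolling_maintenance(pair, writes_during):
    """Maintain the second node, then the first, without stopping writes."""
    for survivor in (pair.first, pair.second):
        pair.suspend(keep_running=survivor)
        # The other node would be restarted or patched at this point.
        for key, value in writes_during.get(survivor.name, {}).items():
            pair.write(survivor, key, value)
        pair.resume()
```

In this model, calling `rolling_maintenance` with writes for each surviving node leaves both dictionaries identical at the end, which mirrors the goal of the resynchronization step that is described above.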

1.3 Architecture

This section provides an overview of the Db2 Mirror architecture and explains some Db2 Mirror concepts.

1.3.1 Technology overview

A Db2 Mirror configuration consists of two IBM Power Systems servers in close proximity that are connected through a RoCE network. The application workload updates to the Db2 for i database are replicated synchronously in real time between the two systems, either bidirectionally for active-active application deployments or unidirectionally for active-passive application deployments. The active-passive configuration is ideal for query, Business Intelligence (BI), or artificial intelligence / machine learning (AI/ML) workloads that use real-time accurate data on the secondary node.

IBM Db2 Mirror is a licensed program, 5770-DBM, that requires IBM i 7.4 on Power Systems servers that are based on IBM POWER8®, IBM POWER9™, and higher technology.

Figure 1-3 shows two IBM i logical partitions (LPARs), which are called nodes in Db2 Mirror, each with its own separate database. The Db2 Mirror product enables the OS to keep native and SQL database objects synchronized between the two nodes. As data change requests occur on either node, Db2 Mirror automatically uses synchronous replication to keep the two instances identical. When one of the nodes is not available, Db2 Mirror tracks every change to replicated objects on the active node. After the mirrored pair is reconnected, all changes are synchronized between the nodes so that both databases are identical again. Db2 Mirror supports libraries and objects that are in either SYSBAS or an IASP. Simultaneous access to the IBM i Integrated File System (IFS) from both nodes is also supported in an IASP environment.

Figure 1-3 shows a Db2 Mirror environment architecture with applications running on IBM i and a replication layer that uses RoCE technology.

Figure 1-3 Db2 Mirror for i architecture

The configuration of a Db2 Mirror environment begins with one IBM i node that can be an existing production environment. During the setup process, the second node is initialized as an identical copy of that source node.

Db2 Mirror for i can be combined with existing HA/DR solutions like PowerHA or independent software vendor (ISV) logical replication solutions for HA/DR. Some ISVs developed complementary solutions to Db2 Mirror for i. For more information, see 1.4, “High availability and disaster recovery solutions” on page 9.

1.3.2 Remote Direct Memory Access over Converged Ethernet

The Db2 Mirror for i solution requires a high-speed network connection with low latency to perform synchronous data operations. RoCE, as an RDMA-based protocol, meets these requirements. RoCE adapters are required on both nodes for Db2 Mirror for i. The ports of the RoCE adapters can be cabled directly together or connected through a switch. A pair of IP addresses, one from each node, is used to identify each physical RDMA link between the two nodes.

For more information about the hardware requirements, see 2.2.1, “IBM Power System hardware requirements” on page 21.

Note: Db2 Mirror does not use remote journals to accomplish synchronous replication. Db2 Mirror synchronous replication is built into the IBM i OS.

1.3.3 Network redundancy group

A network redundancy group (NRG) is a logical group of physical ports. It hides the complexity that is associated with automated physical network redundancy, failover, and load balancing of connections between the two nodes.

An NRG consists of one or more (up to 16) physical RDMA-enabled links between nodes. It is a single logical link to the target node. The RDMA network stack transparently handles all processing that is related to which physical link is used at any time based on the NRG configuration.

By default, five NRGs are defined:

•Db2 Mirror Environment Manager

•Database replication

•System object replication

•IFS replication

•Resynchronization

Priority and load balancing can be modified for the different NRGs.
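To make the NRG idea concrete, the following Python sketch models one NRG as a single logical link on top of several physical links. It is a minimal illustration only: the `NetworkRedundancyGroup` class, its method names, and the round-robin balancing policy are assumptions for this example, and the IP addresses are placeholders. The text does not describe how the RDMA network stack actually selects a link.

```python
from itertools import count


class NetworkRedundancyGroup:
    """Hypothetical model of one NRG: one logical link, many physical links."""

    MAX_LINKS = 16  # upper bound stated in the text

    def __init__(self, name, links):
        if not 1 <= len(links) <= self.MAX_LINKS:
            raise ValueError("an NRG needs between 1 and 16 physical links")
        self.name = name
        self.links = list(links)                  # (local_ip, remote_ip) pairs
        self.up = {link: True for link in self.links}
        self._turn = count()

    def mark_down(self, link):
        self.up[link] = False

    def mark_up(self, link):
        self.up[link] = True

    def pick_link(self):
        """Return a healthy link; round-robin is an assumed policy."""
        healthy = [link for link in self.links if self.up[link]]
        if not healthy:
            raise ConnectionError(f"no healthy link left in NRG {self.name}")
        return healthy[next(self._turn) % len(healthy)]


# Usage: two links, each identified by a pair of IP addresses.
link_a = ("10.0.0.1", "10.0.0.2")
link_b = ("10.0.1.1", "10.0.1.2")
nrg = NetworkRedundancyGroup("database-replication", [link_a, link_b])
first, second = nrg.pick_link(), nrg.pick_link()   # traffic spreads over both
nrg.mark_down(link_a)                              # simulated link failure
after_failure = nrg.pick_link()                    # only link_b remains
```

The caller only ever asks the group for a link, which is the point of the abstraction: redundancy and failover stay hidden behind one logical connection.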

1.3.4 Replication states

Replication states must be understood and monitored. Replication is always in one of the following states:

•Active replication state

Normal state of Db2 Mirror. Replication is active, both nodes are in the active status, and they accept any creates or updates on replicated objects.

•Tracking / Blocked replication state

When a node is not available or if communication is interrupted between the nodes, active replication is suspended, which causes Db2 Mirror to go into the TRACKING/BLOCKED replication state to prevent conflicting changes to objects. One node may continue operations that affect replicated objects and its replication state is set to TRACKING. The other node is blocked from changing replicated objects and its replication state is set to BLOCKED. The node that is BLOCKED still may change non-replicated objects and query replicated objects.

Replication role

The determination of which node is set to TRACKING and which node becomes BLOCKED is made by primary and secondary node designation. During the initial setup of Db2 Mirror, the setup source node is the primary node and the setup copy node becomes the secondary node. So, the primary node goes to the TRACKING state and the secondary node goes to the BLOCKED state. Primary and secondary node roles can be changed by the administrator.

Database IASPs (DB IASPs) have a separate replication role from SYSBAS. During normal operation, the role of an active DB IASP matches the replication role of SYSBAS. If an IASP is varied off on either node, the replication role of the IASP is adjusted separately from the SYSBAS replication role.
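The relationship between replication roles and replication states can be summarized in a few lines of Python. This is a sketch of the rules as they are described in this section, not a Db2 Mirror interface; the function names and the string constants are chosen for illustration only.

```python
def states_after_suspend(roles):
    """Map each node to its state when active replication is suspended.

    roles maps a node name to "PRIMARY" or "SECONDARY".
    """
    if sorted(roles.values()) != ["PRIMARY", "SECONDARY"]:
        raise ValueError("exactly one primary and one secondary are expected")
    return {
        node: "TRACKING" if role == "PRIMARY" else "BLOCKED"
        for node, role in roles.items()
    }


def swap_roles(roles):
    """Model an administrator changing the primary and secondary roles."""
    return {
        node: "SECONDARY" if role == "PRIMARY" else "PRIMARY"
        for node, role in roles.items()
    }


def is_allowed(state, operation, replicated):
    """A BLOCKED node may query replicated objects but not change them."""
    if state == "BLOCKED" and replicated and operation == "change":
        return False
    return True


roles = {"NODE1": "PRIMARY", "NODE2": "SECONDARY"}
states = states_after_suspend(roles)
swapped_states = states_after_suspend(swap_roles(roles))
```

Because a DB IASP has its own replication role, the same functions could be applied once for SYSBAS and once for each IASP.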

Object tracking list

The object tracking list (OTL) is the mechanism that is used to track changes that are made on replicated objects when the replication state is TRACKING. Because IASPs might have a separate state from SYSBAS, there is an OTL for SYSBAS and a separate OTL for each IASP. Each OTL on a node contains the changes that must be sent to the other node during the resynchronization process. It is possible to define priorities on objects during resynchronization by using the GUI or Db2 Mirror SQL services. For more information, see 2.5, “Object tracking list suggested priorities” on page 29.
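One way to picture an OTL with priorities is as a priority queue per storage pool. The following Python sketch assumes that a lower number means an earlier resynchronization and that entries with equal priority are processed in the order in which they were tracked. Both rules, and all of the names, are assumptions for illustration and are not taken from the product.

```python
import heapq
from itertools import count


class ObjectTrackingList:
    """Hypothetical OTL model: tracked changes, drained in priority order."""

    def __init__(self, pool):
        self.pool = pool              # "SYSBAS" or an IASP name
        self._heap = []
        self._order = count()         # keeps equal priorities in arrival order

    def track(self, object_name, priority=5):
        heapq.heappush(self._heap, (priority, next(self._order), object_name))

    def drain(self):
        """Yield tracked objects in the order they would be resynchronized."""
        while self._heap:
            yield heapq.heappop(self._heap)[2]


# One OTL for SYSBAS and one for each IASP, as described in the text.
otls = {pool: ObjectTrackingList(pool) for pool in ("SYSBAS", "IASP1")}
otls["SYSBAS"].track("APPLIB/HISTORY", priority=9)
otls["SYSBAS"].track("APPLIB/ORDERS", priority=1)
otls["SYSBAS"].track("APPLIB/CUSTOMERS", priority=1)
resync_order = list(otls["SYSBAS"].drain())
```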

1.3.5 Replication criteria list

The replication criteria list (RCL) is a rules engine that is used by Db2 Mirror. It consists of a set of rules that identify groups of objects that should or should not be replicated. The rules, combined with the default inclusion state (whether the entire SYSBAS is included or not) that is selected during initial setup and with system rules, provide a concise process to determine the replication status of any existing or future object.

Because Db2 Mirror supports objects in SYSBAS and in IASPs, an independent RCL exists for each IASP that is registered as a DB IASP with Db2 Mirror.
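The interplay of rules and the default inclusion state can be sketched as a small rules engine. The following Python example is a simplified illustration under stated assumptions: the `Rule` fields, the `*ALL` wildcard handling, and the "most specific matching rule wins" precedence are chosen for this example, and system rules are not modeled.

```python
from dataclasses import dataclass

ALL = "*ALL"


@dataclass(frozen=True)
class Rule:
    library: str        # library that the rule applies to
    object_name: str    # a specific object name, or ALL
    object_type: str    # a specific object type such as "*FILE", or ALL
    include: bool       # True = replicate, False = do not replicate


def _specificity(rule):
    # A rule that names an object or a type is more specific than a wildcard.
    return (rule.object_name != ALL) + (rule.object_type != ALL)


def is_replicated(rules, default_include, library, name, object_type):
    """Decide the replication status of one existing or future object."""
    matches = [
        rule for rule in rules
        if rule.library == library
        and rule.object_name in (ALL, name)
        and rule.object_type in (ALL, object_type)
    ]
    if not matches:
        return default_include          # fall back to the default inclusion state
    return max(matches, key=_specificity).include   # assumed precedence


rules = [
    Rule("APPLIB", ALL, ALL, include=True),             # replicate the library
    Rule("APPLIB", "WORKFILE", "*FILE", include=False),  # except one work file
]
```

With these two rules and a default inclusion state of "exclude", every object in APPLIB except WORKFILE is treated as replicated, and objects in other libraries are not. An independent rule list of this kind would exist for SYSBAS and for each DB IASP.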

Replication-eligible objects

The Db2 Mirror environment supports replication of the object types that are updated in user applications. For more information, see 2.3, “Objects eligible for replication” on page 25.

1.3.6 Database IASP versus IFS IASP

IBM Db2 Mirror for i allows existing IASPs to be registered as either DB IASPs or IFS IASPs that are simultaneously accessible from both nodes:

Database IASPs: Similar to SYSBAS object replication by Db2 Mirror, a DB IASP supports replication of all objects that are eligible for Db2 Mirror replication, as described in 2.3, “Objects eligible for replication” on page 25. As in non-Db2 Mirror environments, DB IASPs can be configured to provide different database name spaces for workload isolation or for extending Db2 Mirror by using an HA/DR solution with remote replication. Although it is simultaneously available on both Db2 Mirror nodes, a DB IASP is not switchable between the two local nodes.

IFS IASPs: An IFS IASP makes IFS objects simultaneously available on both Db2 Mirror nodes. It is used to reference IFS objects only, and at any time it is attached to only one of the two Db2 Mirror nodes. It also must be switchable between both nodes by using PowerHA, but it is not replicated by Db2 Mirror. Any database or other non-IFS objects that are included in an IFS IASP are accessible only from the local node where the IASP is varied on, not from both nodes.

Note: An existing IASP with both database files and IFS files must be split into two IASPs (one for database and one for IFS) to make both the database objects and the IFS files accessible from both nodes.

The different concepts for simultaneous data access among Db2 Mirror nodes for database and IFS IASPs are shown in Figure 1-4.

Figure 1-4 Db2 Mirror IASP support

Simultaneous IFS access from both nodes is implemented by using an IFS client/server technology that uses a mutable file system architecture like the one that is shown in Figure 1-5.

Figure 1-5 Db2 Mirror IFS support

The IFS IASP is accessible for reads and writes from both nodes. Requests to the IFS client node are forwarded to the IFS server node where the IFS IASP is varied on. The IFS IASP must be switchable either by using PowerHA logical unit number (LUN)-level switching or IASP replication. The client/server roles change automatically when the storage is switched.
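The client/server forwarding can be illustrated with a short Python sketch. It only models the routing decision that is described above (local access on the server node, forwarded access from the client node). The class and function names are hypothetical, and the actual IFS client/server protocol is not represented.

```python
class IfsIasp:
    """Hypothetical model of an IFS IASP that is varied on at one node."""

    def __init__(self, name, server_node):
        self.name = name
        self.server_node = server_node   # node where the IASP is varied on
        self.files = {}

    def switch_to(self, node):
        # Models a switch of the IASP; the server role moves with the storage.
        self.server_node = node


def write_file(iasp, from_node, path, data):
    """Write a file and report how the request reached the storage."""
    if from_node == iasp.server_node:
        route = "local"
    else:
        route = f"forwarded to {iasp.server_node}"
    iasp.files[path] = data
    return route


iasp = IfsIasp("IFSIASP", server_node="NODE1")
route_from_client = write_file(iasp, "NODE2", "/app/config.txt", "v1")
route_from_server = write_file(iasp, "NODE1", "/app/config.txt", "v2")
iasp.switch_to("NODE2")
route_after_switch = write_file(iasp, "NODE2", "/app/config.txt", "v3")
```

In this model, a write always succeeds from either node, and only the route changes when the IASP is switched.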

1.4 High availability and disaster recovery solutions

Db2 Mirror by design provides a local continuous availability solution within a data center with a maximum supported distance between two Db2 Mirror nodes of 100 meters, or 200 meters when using an Ethernet switch.

Many customers also require an HA or DR solution to protect them against local or regional outages. For this purpose, a Db2 Mirror local continuous availability solution can be combined with existing HA/DR replication technologies.

For a combination of Db2 Mirror with HA/DR replication technologies, the following considerations apply:

•Remote replication for DR can be implemented either by storage-based replication or ISV logical replication solutions.

•Any IFS IASP must remain switchable between both local Db2 Mirror nodes by choosing a DR topology that is supported by PowerHA.

•Any DB IASP is available on both local nodes (no switch between local nodes).

A DB IASP is not required for local Db2 Mirror database replication, but might be preferred for implementing a remote replication solution with shorter recovery times compared to SYSBAS replication.

•For a complete business continuity solution at the DR site, a remote Db2 Mirror node pair can be configured for a 4-node Db2 Mirror PowerHA cluster configuration. Both IFS IASPs and DB IASPs must be registered with the remote Db2 Mirror pair (by using the SHADOW option for the DB IASP to maintain its Db2 Mirror configuration data like default inclusion state and RCL).

Within the following sections, we provide some examples of how a local Db2 Mirror environment might be extended by available HA/DR technologies.

1.4.1 Db2 Mirror and full-system Metro or Global Mirror

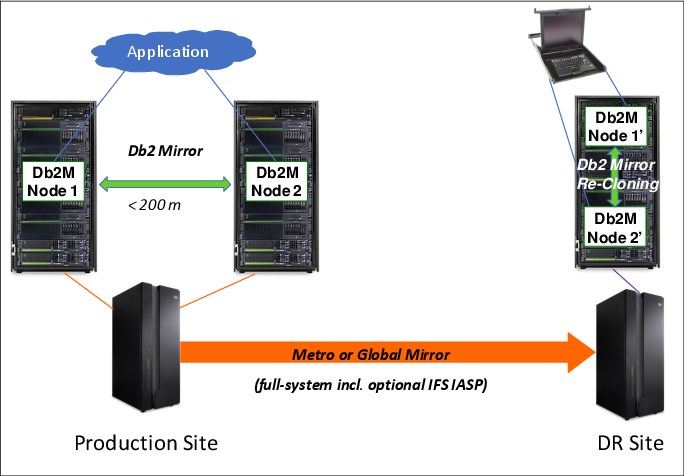

Db2 Mirror can be combined with storage-based full-system replication, as shown in Figure 1-6.

Figure 1-6 Db2 Mirror combined with full-system replication

The following considerations apply for combining Db2 Mirror with full-system replication as a DR solution:

•Full-system storage-based replication of Db2 Mirror is considered a DR-only solution, and it should not be used for regular site swaps.

•Replication can be either synchronous like with IBM Metro Mirror or asynchronous with consistency like with IBM Global Mirror.

•The Db2 Mirror node state (active, tracking, or blocked) is replicated.

•Users typically must start both DR nodes if they are in doubt about the prior node state.

•If Db2 Mirror on the DR site is wanted, Db2 Mirror reconfiguration and recloning are required.

1.4.2 Db2 Mirror and full-system HyperSwap

Db2 Mirror combined with full-system replication (described in 1.4.1, “Db2 Mirror and full-system Metro or Global Mirror” on page 10) also can be combined with IBM Storage Metro Mirror HyperSwap® to provide extra protection against local storage outages without switching to the DR site, as shown in Figure 1-7.

Figure 1-7 Db2 Mirror combined with full-system HyperSwap

1.4.3 Db2 Mirror and PowerHA IASP replication

Combining Db2 Mirror with PowerHA IASP replication, as shown in Figure 1-8, instead of full-system replication provides a higher level of availability for DR with a shorter RTO because PowerHA enables and manages the IASP failover to the DR site.

Figure 1-8 Db2 Mirror combined with PowerHA IASP replication

The following considerations apply for this solution:

•The PowerHA cluster includes two Db2 Mirror node pairs.

•All data to be replicated between the Db2 Mirror production pair and the Db2 Mirror DR pair should be included in DB IASPs (production) and the IFS IASPs (DR) with their remote replication managed by PowerHA.

PowerHA support for a container cluster resource group (CRG) can be used for simultaneously switching DB IASPs and IFS IASPs as though they were one CRG.

•The system environment, like user profiles, is kept in sync between both Db2 Mirror pairs by the PowerHA cluster admin domain.

•A planned site-switch of DB IASPs preserves their replication state.

•Recloning of the DB IASPs might be required after an unplanned PowerHA failover.

To provide even higher redundancy and availability from a storage perspective, this solution also can be implemented with a separate IBM System Storage DS8000® storage system for each local Db2 Mirror node, as shown in Figure 1-9.

Figure 1-9 Db2 Mirror combined with PowerHA IASP replication with three DS8000 systems

In this example, the DB IASPs are replicated by using Metro Mirror or Global Mirror from the production to the DR site, and the IFS IASPs, which must remain switchable by PowerHA, are replicated by the PowerHA-supported Metro Mirror HyperSwap + Global Mirror 3-site configuration.

1.4.4 Db2 Mirror and ISV logical replication

Db2 Mirror also can be combined with an ISV logical replication solution. With this configuration, you can move the source for the logical replication between the nodes of the production Db2 Mirror pair and replicate to either a single-node or a 2-node DR configuration, as shown in Figure 1-10.

Figure 1-10 Db2 Mirror combined with ISV logical replication

When using a 2-node DR configuration, the DR nodes also should be added to the Db2 Mirror cluster so that the quorum data, including the node status, is replicated outside the Db2 Mirror production node pair.

1.4.5 Db2 Mirror and Live Partition Mobility

For planned maintenance or migration without downtime, you can migrate a Db2 Mirror node to another Power Systems server by using LPM.

The following requirements apply for using LPM with Db2 Mirror:

•IBM i 7.4 TR1 or later.

•IBM POWER9 processor-based servers with firmware level 940 or later.

•Single root I/O virtualization (SR-IOV) logical ports are used for migrating the partition instead of a physical RoCE adapter.

•The restricted I/O attribute is set for LPM in the partitions’ profile on the Hardware Management Console (HMC).

Using LPM in a Db2 Mirror environment requires special considerations for removing and adding the SR-IOV logical ports, which are described in 5.1, “Planned outages” on page 70.
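The LPM requirements lend themselves to a simple pre-check. The following Python sketch encodes the four requirements from the list above as a checklist function. The `PartitionFacts` fields are hypothetical inputs that an administrator would have to fill in by hand, the function does not query a real system or HMC, and treating processor generations later than POWER9 as acceptable is an assumption.

```python
from dataclasses import dataclass


@dataclass
class PartitionFacts:
    ibm_i_release: tuple            # for example (7, 4)
    technology_refresh: int         # for example 1 for TR1
    processor_generation: int       # 9 for POWER9
    firmware_level: int             # for example 940
    uses_sriov_logical_ports: bool  # RoCE through SR-IOV logical ports
    restricted_io: bool             # restricted I/O attribute in the profile


def unmet_lpm_requirements(facts):
    """Return a list of the requirements that the partition does not meet."""
    problems = []
    if (facts.ibm_i_release, facts.technology_refresh) < ((7, 4), 1):
        problems.append("IBM i 7.4 TR1 or later is required")
    # Assumption: generations later than POWER9 are treated as acceptable.
    if facts.processor_generation < 9 or facts.firmware_level < 940:
        problems.append("POWER9 with firmware level 940 or later is required")
    if not facts.uses_sriov_logical_ports:
        problems.append("SR-IOV logical ports are required instead of a physical RoCE adapter")
    if not facts.restricted_io:
        problems.append("the restricted I/O attribute must be set in the partition profile")
    return problems


ready = PartitionFacts((7, 4), 1, 9, 940, True, True)
not_ready = PartitionFacts((7, 4), 0, 9, 930, True, False)
```

An empty result means that none of the listed requirements is violated. It does not replace the procedure for removing and adding the SR-IOV logical ports.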

Note: IBM PowerVM® Simplified Remote Restart technology, which is used by some automation tools for remote restart recovery in case of unplanned outages, requires a virtual I/O only configuration for the partition, so it cannot be used for a Db2 Mirror node.

1.5 Interfaces

Db2 Mirror can be configured and managed by the Db2 Mirror GUI and by various SQL services that consist of SQL procedures, functions, and views. The initial setup of Db2 Mirror can be performed by either using the GUI or a combination of SQL services and the Db2 Mirror setup tool db2mtool, which is available as a Qshell command.

This section provides a brief overview of the available interfaces to set up and manage a Db2 Mirror for i environment.

1.5.1 Graphical user interface

The Db2 Mirror GUI is a web-based GUI that is part of the *BASE option of the 5770-DBM licensed program. The *BASE option does not require a Db2 Mirror license, and the GUI runs on the IBM i HTTP Apache web server. The GUI can be run from either the Db2 Mirror primary or secondary node for managing and monitoring. However, it is a best practice to run it from a third management node, which is required for the initial setup of a Db2 Mirror pair configuration and also can be used for a Db2 Mirror quorum, as described in 2.7, “Quorum” on page 31.

Figure 1-11 shows an example of one of the Db2 Mirror GUI’s initial setup windows.

Figure 1-11 Db2 Mirror GUI initial setup window

Figure 1-12 shows an example of the GUI for managing an existing Db2 Mirror environment with the menu open for OTL tasks.

Figure 1-12 Db2 Mirror GUI managing an existing environment

1.5.2 SQL services

As an alternative to setting up or managing Db2 Mirror by using the GUI, there are various SQL services in the form of procedures, functions, and views that are available for the following categories:

•Communication services

•Product services

•Replication services

•Resynchronization services

•Serviceability services

A complete list of available Db2 Mirror SQL services can be obtained by querying the SQL SERVICES_INFO view, as shown in Example 1-1.

Example 1-1 Available Db2 Mirror SQL services

SELECT * FROM QSYS2.SERVICES_INFO WHERE SERVICE_CATEGORY LIKE 'MIRROR%';

In 4.2, “SQL services” on page 59, we show some examples of using Db2 Mirror SQL services from the 5250 command line and from IBM i Access Client Solutions.

For more information about the available Db2 Mirror SQL services, see IBM Knowledge Center.

1.5.3 Db2 Mirror setup tool

The Db2 Mirror setup tool is available as the Qshell command db2mtool and is required for some configuration and validation tasks of an initial Db2 Mirror setup if the GUI is not used.

This tool has different action parameters for working with Db2 Mirror JSON configuration files, performing validation and cloning operations, and configuring clustering.

Note: The db2mtool is required only if the GUI is not used for the initial setup or if PowerHA is not used for managing the Db2 Mirror cluster.

Db2 Mirror uses the following two kinds of JSON configuration files, which describe the setup for a new Db2 Mirror environment, including the setup source node, setup copy node, and storage configurations:

clone_info.json file: Contains the storage and cloning attributes that are used by Db2 Mirror to automatically perform the storage cloning actions.

meta_data.json file: Contains the setup copy node configuration settings that are used by Db2 Mirror to automatically configure the setup copy node during the first IPL after the storage clone.

If you use the GUI with its setup wizard to set up Db2 Mirror, you do not need to work with these configuration files directly because they are automatically created, populated with the configuration information that you specify, and uploaded to the setup source node.

Example 1-2 shows how to use the Db2 Mirror setup tool to interactively create and populate a new JSON configuration file for a Db2 Mirror setup (the tool prompts the user for all required information) and how to use it to change a cluster IP interface.

Example 1-2 The db2mtool usage examples

db2mtool action=new jsonType=[clone_info | meta_data] jsonFile=/home/xxx

db2mtool action=cluster cl=chgcluinterface node=<nodename> ip1=<current_IP_address> newip1=<new_IP_address>

For more information about the Db2 Mirror tool, see IBM Knowledge Center.
