High-Level Architecture and Design of a Decision Engine for Marine Safety and Security1

Piper Jackson∗, Uwe Glässer∗, Hamed Yaghoubi Shahir∗ and Hans Wehn†, ∗Software Technology Lab, School of Computing Science, Simon Fraser University, Burnaby, British Columbia, Canada, †Research & Development, MDA Corporation, Richmond, British Columbia, Canada

Chapter Outline

5.2.3 Fundamental Planning Elements

5.2.4 Hierarchical Task Networks

5.3.2 System Architecture and Design

5.4.3 Decision Engine ASM Specifications

5.6 Conclusions and Future Work

5.1 Introduction

By their very nature, some application domains are unpredictable. While we strive to develop the best technologies and practices in order to solve the problems we face, we are unable to assume that our actions will proceed without interruption. Equipment can fail and people can make mistakes. Despite the best planning, wrong decisions can be made. This is especially true in critical situations, when time is limited and the penalties for failure are severe. When we face the possibility of interference by neutral or adversarial actors, things become even more difficult. However, we are able to deal with this reality in everyday life because we are able to dynamically react to the world around us. We learn from failure and avoid problems when they become apparent. For an automated system to perform well in real-world situations, it must also be capable of doing these things.

Planning is the process of generating a series of actions that accomplish a goal. In computing, classical approaches to planning focus on theoretical properties and general algorithms. Over the last several decades, classical planning has been well studied [1]. However, a number of simplifying assumptions are made for this kind of research. Most commonly, only systems that are static, finite, deterministic, and fully observable are considered. These assumptions are appropriate only for some real-world applications, such as manufacturing, but for others they do not apply. Marine safety and security is a domain for which automated planning would be highly useful. Related operations include a mixture of routine and emergency activities, rapidly changing conditions, and complex resource requirements. Canada, with the longest coastline in the world [2], has a particular interest in innovations in this field. For automated planning to be able to perform in such a domain, it must be more flexible than a classical model allows. There are uncontrollable events and entities, imperfect knowledge and nondeterminism, i.e. actions may have unexpected results. Replanning, the dynamic generation of new plans, is a strategy that can help to address these issues.

Consider this example: A 911 call comes into a maritime services command center, initiating a search and rescue mission. A plan is put together that has the best chance of finding the capsized ship as well as any survivors within the given time window. Since we are dealing with unknowns, this plan cannot account for all eventualities. Several things can happen that could require rapid alteration of the plan. For example, new information (such as eyewitness reports) could add or remove areas of interest from the search. Another possibility is resource failure, either in the form of actual resource loss or due to situational reduction of capabilities. Bad weather is a prime cause of this, either by directly causing damage to equipment, or by interfering with movement or sensors (including vision). Finally, it is possible that a decision outlined in the plan may fail. In this case, it is a critical decision whether or not to repeat the failed action, try something else, or abort the current mission. Searching for survivors in open water during bad weather may not proceed in a textbook manner. It may be necessary to try a variety of sensing technologies or solutions, and it is possible the search attempt may become too dangerous to continue.

We propose here a comprehensive architectural model and algorithmic framework of continual planning using formal methods to represent the model at an abstract operational level, capturing the behavior of the system as a whole. Specifically, we use the Abstract State Machine (ASM) method [3] to express and delineate the responsibilities of the system components. The Decision Engine is designed to have a plug-in architecture, allowing various planning paradigms to be used within its components. For example, the set of available planning algorithms should match the needs of the domain model as represented by INFORM Lab [4], an industrial simulation platform for developing decision support systems in the marine safety and security domain (see Section 5.2.4 for more details). We also present a planning paradigm appropriate to the application domain. This design is built so that as new techniques and relevant technology are developed, they can be swapped in without difficulty. When complete, it is meant to act as a guide for future implementations of a continual planning layer.

The rest of this chapter is organized as follows: Section 5.2 discusses background knowledge and concepts related to the project; Section 5.3 presents Decision Engine design; Section 5.4 proposes a formal representation approach for the Decision Engine based on the Abstract State Machine method with some examples; Section 5.5 describes the application context in which the Decision Engine was developed; finally, Section 5.6 concludes the chapter with a discussion of future work and other possible applications of this research.

5.2 Background

In this section, we discuss several topics that are fundamental to our research. The OODA loop is the operational framework within which the system exists. Automated planning is the academic discipline that governs this research topic. In the Fundamental Planning Elements subsection, we discuss several ideas and entities of importance to the focus of our research. Next is a general explanation of Hierarchical Task Networks, since they are of particular interest to this project. Finally a short description of other related research is given.

5.2.1 The OODA Loop

The OODA loop (Figure 5.1), developed by the United States Air Force Colonel John Boyd, is a model of how an intelligent, sensing agent interacts with a dynamic environment, well established in both business and military contexts. It consists of four main steps: Observe the environment to discover what is happening; Orient the evidence observed, to establish its meaning in terms of one’s own situation and interests; Decide what to do based on what was understood during orientation; and, finally, Act on that decision. This is an iterative loop, with the results of the Act phase feeding into the next Observe phase. By continually following the OODA loop, an individual agent or group of agents are able to act in accordance to the situation they are in. Here, the OODA loop is employed as a definitive model of how both directed and reactive behavior can be broken down into their constituent elements, and how those elements interact with each other. For this reason, the four steps will be used throughout this text to refer to any process or algorithm that achieves their purpose within the context of a real-time adaptive system.

Figure 5.1 John Boyd’s OODA loop.

5.2.2 Automated Planning

Planning is the process of finding a set of actions that accomplish one or more goals. Automated planning is performed computationally in order to control automated systems or aid human operators in their decision making. Automated planning has great potential in dealing with the complexity of managing systems that use many resources. As a field, automated planning has provided multiple methods that can be used to decide on how to act when given goals and an environment in which to act. However, planning algorithms are brittle on their own, since conditions can change after a plan is constructed. Most have been formulated assuming a closed and predictable environment [1]. There is even greater complexity for domains that interact with the world at large, since this means that there will be many factors that are difficult or impossible to control. Classical approaches to planning are well understood, but do not deal well with incomplete knowledge or the unexpected. Continual planning systems adapt dynamically in order to handle challenges encountered during execution. Continual planning deals with the challenges of complex conditions by allowing the planning system to react to feedback from the environment and replan when necessary. Characteristics that enable effective continual planning are outlined in Refs [5,6]. These include: the ability to monitor changes in the environment and in execution of a plan; being able to maintain multiple plans and choose which to commit to; elaboration of a plan as appropriate to current knowledge and available resources; and the capacity for auditing plans and altering them as necessary. The design proposed in this chapter aims to embody these characteristics.

5.2.3 Fundamental Planning Elements

There are four classes of objects that are fundamental to planning. These are: goals, plans, decisions, and actions. Goals are high-level statements that the system aims to fulfill. A mission, such as search and rescue, is a prime example of a goal. Solving a problem that is interfering with system activities is also a goal, although in this case it would be generated dynamically rather than being introduced by system operators. Goals are achieved through the performance of appropriate actions.

Actions are straightforward: they are the external actions performed by a system as it interacts with its environment during execution. They are closely linked to decisions, which are their logical counterpart. In this way, it is possible to represent both the intention to do something (the decision) and the act of doing it (the action). This distinction is vital when the results of an action are not completely predictable. Actions can fail or never have an opportunity to begin. They may succeed partially or have unintended consequences. In fact, it is precisely the difference between decision and action that a system must evaluate in order to determine an appropriate reaction to an unforeseen result. This distinction enables robust planning behavior in a dynamic environment with uncertainty.

Plans are the intermediary objects necessary to generate decisions appropriate for a given goal. They are used to construct and organize a set of decisions that are likely to result in the fulfillment of that goal. A plan may contain decision points (conditionals) and constraints that limit when the plan can be enacted, such as preconditions that must be fulfilled before beginning (including which other decisions must precede it in terms of execution).

5.2.4 Hierarchical Task Networks

The Hierarchical Task Network (HTN) paradigm is an approach to automated planning that takes advantage of domain knowledge to reduce the search space when developing a solution to a planning problem. Traditional approaches to planning attempt to transform an initial state to a goal state by applying available actions in a specific order. This requires considering many possible actions at every decision point when building a solution, so the search space for such a planner is immense: without severe restrictions in representation or syntax, it is of exponential computational complexity [1]. Instead of looking at every possible action, HTNs use methods to incrementally break down an initial abstract goal task into progressively more specific tasks (referred to as decisions in the context of our project). For example, a rescue mission to save a capsized boat would be broken down into three high-level tasks: Search, Extract (personnel), and Secure (the boat). In turn, each of these would need to be further refined into specific tasks related to moving resources, using equipment, etc. The methods are developed using expert knowledge specific to the domain that the planner will be used for. This requires more effort in construction than a general planner, but this effort results in a quicker and more useful planner at execution time.

HTN methods express domain knowledge in a structured and formal manner. Since HTN methods are created by human experts from the field that the planner is used in, this makes the resulting plans more useful for human users in a number of ways. First of all, the plans are easier to understand, since they are similar to a plan formulated by a human. Secondly, since the overall structure of a plan matches one that a human expert would come up with, this means that a system user will be able to use a generated plan as part of their planning process without needing to rely on it completely. They can be confident in their ability to alter or correct parts of the plan based on their own expertise or knowledge of things not represented in the simulation environment. Since every computer simulation is necessarily an approximation of the real world, this situation is unavoidable. It is important to recognize the role of a Decision Support System (DSS) like INFORM Lab. It is able to perform difficult calculations and consider a vast number of items much more effectively than a human being is able to. However, it is limited to the knowledge represented in it, and is unable to innovate. Thus, the output of a DSS must be in a form that a human user is still able to work with. A planning system that produces plans in an unexplainable or arcane form will not be as useful, because it will be very difficult for human operators to verify that the plans are indeed worth implementing. These are the two big advantages of HTNs: a search space tractable under normal execution conditions, and an approach that mimics human experts and can therefore be understood and managed by them.

The HTN approach has been implemented in numerous programming languages, including C, Java, Prolog, and Golog [7–10]. HTNs are also appropriate for planning problems that are dynamic in nature, and studies have shown how they can be used in conjunction with replanning and plan repair [11]. One group at Toshiba Research have produced a number of articles looking at using HTNs in systems with replanning [9,12,13]. Their research is particularly interested in identifying which actions may be canceled, and what effects a cancellation has. HTNs are considered to be a general and useful approach to planning by many researchers, including planning for dynamic environments with uncertainty.

5.2.5 Other Related Research

A comprehensive overview of the field of automated planning is found in the eponymous text by Ghallab, Nau, and Traverso [1]. The bulk of the text focuses on well-established classical planning techniques that must be done offline. However, even in the introduction, the issues related to real-world planning applications, including continual planning, are outlined clearly. A more expansive description of the difficulties and potential of distributed, continual planning is found in Ref. [14]. One of the authors of that article, Edmund Durfee, thoroughly outlines distributed planning in his canonical article, which has been updated several times, the most recent of which is Ref. [15]. One issue in general with distributed planning is the reliability of other agents. If agents working together on a plan are unable to trust each other completely, a great deal of effort must be spent on tracking the behavior and reputations of other agents in order to determine who can be trusted. This is not an issue for multi-agent systems aimed at fulfilling the goals of a single organization, such as in safety and security operations. All agents can safely be assumed to be willing to cooperate in order to achieve system-wide goals. While this may seem obvious, it significantly reduces the complexity of distributed planning when compared to the more general case.

Several examples of systems similar in scope to INFORM Lab that integrate both planning and simulation exist. They highlight the benefits of planning for safety and security scenarios. I-GLOBE simulates emergency responses; in particular, it simulates the construction of temporary medical facilities in response to an island-based disaster. It illustrates how distributed planning can be implemented in a continual manner [16]. EKEMAS is a continual planning simulation with an emphasis on geographical considerations. It employs both spatial reasoning and geo-simulation for natural disaster response [17]. It uses forest fire response as the example domain of application. MADGS is a US Air Force project that is a comprehensive simulation system incorporating several legacy components. It puts particular emphasis on satisfying resource requirements [18]. These examples illustrate different solutions for these kinds of challenges. A goal of our project is the development of a general approach that draws upon existing techniques and may be more widely applied.

5.3 Conceptual Design

In this section we outline the requirements of a comprehensive decision-making component of an OODA-based system operating in a complex, dynamic environment. We describe the design of the Decision Engine and explain how it satisfies these fundamental concerns.

5.3.1 Requirements

Eliciting and capturing the requirements of a software system is one of the first fundamental steps in software and systems engineering. Since designing and implementing an extensible system is complex, it is important to clearly establish the requirements. Here we list the high-level and high-priority requirements of the Decision Engine:

• Appropriate output: Produce a set of decisions when provided with a goal. These decisions must be likely to accomplish that goal.

• Continuous updating: Update ongoing goals, plans, and decisions based on situation evidence provided by an orienting process.

• Flexible scheduling: Be able to do scheduling as appropriate to the current state of the system. When time is available, scheduling should be done as part of the planning process. In this situation, the system should take advantage of any optimizing scheduling algorithms that are available to it. When time is limited, scheduling should simply consist of finding appropriate resources that are available with regards to goal priority, and assigning the resources in a first-come, first-served manner.

• Robustness: The Decision Engine should be able to repair a problematic plan (see Section 5.3.4 for a detailed explanation), and be able to retry, reassign or replan a failed decision, as appropriate.

• Extensibility: The system must be able to include other algorithms as subplanners and schedulers within the Plan Management component.

• Generality: The system needs to be a general model of decision making for two reasons. Firstly, since it is part of a research simulation environment, it should be readily comprehensible so that other research groups can use it and modify it as part of their own work. Secondly, it should be able to integrate new technologies as they mature and become a growing consideration within the domain of study.

5.3.2 System Architecture and Design

The Decision Engine is a state machine model, consisting of four active entities: Evaluation, Goal Management, Plan Management, and Decision Management (see Figure 5.2). Each behaves as a concurrently operating autonomous entity, interacting with each other asynchronously within their operational environment (the Decision Engine). The management components are responsible for maintaining and updating their respective pools of objects (goals for Goal Management, etc.). The Evaluation component monitors progress and current conditions in order to recognize when replanning is necessary. The process of generating decisions begins when the Decision Engine is sent goals and/or evidence from the parent system (in our case, INFORM Lab, see Section 5.5). Plans are constructed to address goals; decisions are then derived from plans to provide concrete means of achieving those goals. In order to support these efforts, the Decision Engine is capable of querying the parent system for relevant information, such as resource status or current weather conditions. This information is used to track the progress and results of activities under way, and corrective action is taken if corresponding expectations are not met. In this way, the Decision Engine as a whole aims to provide decisions for the parent system to use in assigning resources to various activities. We now describe the four main components in more detail, as well as technological considerations and requirements relevant to our design.

Figure 5.2 Conceptual design of the Decision Engine.

Goal management

The Goal Management component is responsible for updating and processing the goals held in the goal pool, which is the set of active goals in the system. Like the other management components, it regularly checks the progress of the objects in its pool, performing management tasks when necessary and reacting to problems when they arise. The Goal Management component accepts new goals when they are inserted into the Decision Engine and maintains the list of plans associated with that goal. The potential for having multiple plans for a single goal provides greater robustness since the criteria for selecting which plan to act upon can change depending on current priorities and/or environmental conditions. Hierarchical goal analysis is used whenever possible to break down goals into independent subgoals in order to simplify planning.

Plan management

The Plan Management component creates, builds, and maintains plans within the system. For each goal, one or more plans are created, depending on the number of viable options available. If there is more than one plan, the relative cost of each will determine which is chosen to be the active plan for a goal and set into motion. Plans are completed when possible: a lack of information may prevent a plan from being completed before starting. Placeholder decisions can be used as a method of dealing with this challenge, as outlined in Ref. [19]. The history of choices made when developing a plan is also maintained, in order to allow backtracking when repairing a plan. The planning component turns the high-level requirements of a goal into decisions of a level practical enough that they can be acted upon. A variety of algorithms can be used to accomplish this process. This can be done as a single atomic process or incrementally, gradually refining an incomplete plan as required information becomes available. A plan must maintain the history of its construction process so that it may backtrack and look through alternate courses of action. This may occur during initial plan construction or as part of a replanning attempt to repair a plan that has become problematic during execution.

It is important to note that planning and scheduling are not modeled as separate processes at the highest level. This is due to the fact that the division between the two is not clear, and perhaps even arbitrary. They exist along a continuum of searching through the decision space. In general, planning can be considered to be the aspect of decision making related to choosing the actions to perform, while scheduling deals with the assignment of resources to be used. The two aspects are rarely independent, since the availability of resources can affect which actions can be performed. A given planning implementation may choose to optimize resource assignments by integrating planning and scheduling at each step, or it may largely disregard scheduling so that performance is optimized for timely reaction to critical situations. The current INFORM Lab implementation of planning is separated into three phases: Capability Planning (choosing actions), Mode Planning (choosing type of platforms), and Scheduling (deciding on specific platforms to assign). Any of these configurations of planning and scheduling are valid, and a high-level design of the decision process should be able to encompass them all. Indeed, considering the mixture of routine and emergency activity experienced in marine operations, it is beneficial to be able to do quick planning with simple scheduling when necessary and optimization when time is available. The Decision Engine accomplishes this by allowing either the Plan Management process (optimizing) or the Decision Management process (quick) to make resource assignments. Furthermore, an interim plan can be quickly proposed and begun while the Planning process continues to look for an improved alternate plan. If time is available and the change is worthwhile, the system can switch over to the alternate plan. By modeling the internal steps of the Decision Engine as processes and supporting alternate plans, this kind of robust, flexible behavior is possible.

Decision management

The Decision Management component handles the decisions that are currently active in the system. This includes both decisions that are executing, and thus are paired to an action being undertaken by the system, and decisions that are waiting for execution. Management of these decisions includes ensuring that decisions have resources assigned to them, recognizing when a decision should begin execution, as well as monitoring and reacting to status updates. For example, it may be necessary to interrupt the execution of a decision due to a failed execution, or a change in weather conditions, or because it was forced to release its current resource to a higher priority decision. Thus, it is necessary for Decision Management to monitor changes in the current status of a decision. This is in order to recognize information that relates to the progress of decisions under execution. Primarily, this is with regard to deviation from expected behavior. For example, a resource may not be moving as quickly as expected towards a target location, or a necessary sensory capability may be compromised, either due to weather or equipment failure. If possible, such problems should be solved at the decision management or scheduling level before resorting to replanning. In this way, the Decision Management component handles waiting decisions as well as those whose corresponding action is currently under execution.

Evaluation

The remaining part of the Decision Engine is the Evaluation component. It is responsible for deciding whether the current state of the world necessitates replanning. Evaluation receives the input to the Decision Engine, in the form of goals and situation evidence. Goals are passed along to the Goal Management component to be added to the goal pool. Situation Evidence is the information generated through an Orientation process that relates specifically to the goals, plans, and decisions that are currently active in the system. This includes things such as estimation of time or resources required to complete a decision, and forecasting the results of current activity. Evaluation is necessary for continual planning since it is the process that identifies when the current plan needs to change. Comparison of original expectations or requirements against current conditions or forecasts helps to identify when a problem has appeared, such as when a vehicle is moving too slowly to get to its destination by the time it is expected there. This could result in the adjustment of dependent decisions, or even the abandonment of the current mission in the extreme case. This can result in a change of status for a goal, plan, or decision, which can include cancellation. In many cases, no change would be required. It may also be necessary to create a new goal to handle some new set of conditions. The ability to reason about and react to change, provided here by the Evaluation component, is necessary for success in a dynamic environment [6].

Communication mechanism

The Decision Engine employs a message-passing paradigm as part of its foundation. The information being considered includes anything that is related to currently executing decisions. Such information needs to be modeled as coming from sensor data, like the other data already being collected in the simulation. Sensors that monitor those factors that affect decision execution, such as engine status, sensory capabilities, etc., are considered to be internal sensors. This is in contrast to external sensors, such as visibility and radar, which monitor the state of the world as encountered by a resource.

The first benefit of this is that status updates to goals, plans, decisions, and actions are all processed first as situation evidence by the Evaluation component. There is no direct, automatic updating done when an action completes, for example. The reasoning behind this is that the results of an action must be observed by sensors (internal or external) in order to be accepted as true. In other words, since we cannot fully predict the results of any action taken, we can only know for sure what has happened through observation. Another aspect of a message-based system is that we are able to model each of the components within the planning system as a software service agent, allowing the flexible use of resources to match current priorities. Similarly, multiple plans can be supported for a single goal, with the decision of which to use based on current conditions. Since each service agent acts upon shared data in a different manner, synchronization is not an issue. Furthermore, as a continual planning system, its fundamental purpose is to recognize and fix problematic situations.

The second benefit of employing message passing is that it allows the components to operate as asynchronous services. The model is more general since even a synchronous implementation will be a valid expression of the model. Also, it can improve flexibility during execution as, for example, the various processes could be running simultaneously but only use computing power proportional to their current needs. This could mean that the Planning process may be busy when first coming up with plans, and less busy afterwards. It may become busy again when it is feasible to spend resources on improving the existing set of plans. We can envision this kind of behavior as an OODA loop with multiple concentric rings. The results of some actions are immediately observable, and the Decision Engine has the potential to react to them swiftly, allowing for agile behavior in the system. Other processes take place over a long period of time, and the corresponding interactions between components, and thus their underlying OODA loop, are of a greater scale. By employing the message-passing paradigm to model the system, we are able to support this natural spectrum of dynamic behavior.

5.3.3 Hybrid HTN Framework

The Decision Engine model is designed to incorporate both current and upcoming technologies for managing plans. This is achieved in part by using an HTN planner as a general approach to planning that can call upon more specialized planners where appropriate. For example, an HTN planner may identify that a Move decision is necessary to get a resource into the right place. In this case, it is more appropriate and efficient to have a Path Planner to calculate the actual trajectories and spatial issues (such as collision detection and avoidance). Since HTNs only consider decisions as operators that change the world state, it would be difficult and inefficient to try to represent all decisions related to movement through space as HTN decisions. In this way, the HTN planner acts as the central mechanism in an automated planner, binding together a family of more specialized planners. This allows a varied group of decisions that require different algorithms for computing their plans to be used together to achieve a goal. For example, a search and rescue mission will require the coordination of sensing, communication, and movement of appropriate resources (such as rescue vehicles) just to set up the actual rescue attempt. Each of these activities involves different algorithms to compute, such as Path Finding for movement. Since HTNs break down abstract, high-level goals, an HTN planner can act as a framework, distributing more specific decisions to the appropriate subplanners when applicable. This use of HTNs as a framework can be considered to be the logical extension of the concept of critics within HTNs [20].

Figure 5.3 illustrates an example of how the Hybrid HTN planning architecture works. Given a goal to detect smuggling operations in a given area, the HTN planner begins with a high-level abstract decision that directly corresponds with this goal: Detect Smuggling. This decision possesses parameters that detail the specifics of this particular mission, e.g. the area that should be observed. The HTN planner then iteratively uses methods to break down the highest level decision into more specific ones. For example, Move to Area of Interest is refined with Fly because this is the best method for reaching the area under consideration. However, the next level of refinement uses a geographical navigation subplanner in order to generate a Flight Plan for this flight. The subplanner in question is specialized for dealing with geographical objects and issues. Other subplanners are used to refine other decisions. For example, a subplanner which can calculate search patterns and consider sensor capabilities is used to generate the Flight Plan for the Fly Search Pattern decision. HTN methods are used to select which subplanners to use. In this simplified example, the lowest level of decisions (the “leaves”) would be the output of the entire planning process, being passed along as decisions to the Decision Manager in the case where this plan is chosen for activation.

Figure 5.3 Example plan generated by the proposed Hybrid HTN approach.

5.3.4 Plan Robustness

Fundamentally, the model of planning employed here accepts the existence of failure and surprises, and deals with them in a proactive manner when able. An Evaluation component is used to analyze situation evidence generated both through agent activity as well as sensing of the environment. In this way, immediate problems are recognized as conflicts with constraints of decisions in the decision pool. Potential future problems are highlighted by comparing what is expected with current progress, e.g. expected time of arrival against current velocity. Success, problems, and failure are noted as status changes in decisions, plans, and goals. Failure is not an absolute state: it may allow for the retrying of an action as is, or with alternative methods or resources; it could also mean the need for replanning, and only result in the abandonment of a mission in a worst-case scenario. All planning is done in a manner such that relevant costs are minimized, including time and monetary expense. Replanning also considers the costs associated with changing a course of action, and the current plan is maintained as much as possible in order to support this. Scheduling of resources is done according to availability and the priority of a given decision. The continual planning model proposed in this project includes these current planning strategies in order to allow intelligent and dynamic behavior for automated agents in a complex environment.

The proposed system allows us to employ the most efficient approach when it comes to plan repair, in this order of preference:

1. Retry: If the planned action has failed but the plan as a whole is still valid, i.e. there are still resources and time available to perform it and its operational constraints still hold, then the most straightforward choice is to continue with the existing decision. This is undertaken in Decision Management.

2. Reassign: If resources assigned to the decision have become lost or ineffective, assign new resources to the decision. This is the obvious reaction to the failure of an action due to a resource problem (e.g. engine failure) or changes in the environment that interfere with capabilities of the current resources (e.g. severe weather). This option is also undertaken in Decision Management.

3. Replan: Employ a replanning algorithm to repair the plan. If possible, alterations to the plan should be minimal, in order to avoid difficulty in adjusting to the newly repaired plan. In this case, the response to the issue is taken care of in Plan Management.

Since the solution to the problem is taken care of in Decision Management when possible, this approach is the online analog to using a backtracking algorithm to fix a generated plan.

5.3.5 Emerging Technologies

Automated planning is an active area of research, and several new technologies and paradigms have become prominent recently. One example is plan merging, which is concerned with merging the actions of plans together in order to limit redundancy. For example, rather than sending two packages by separate couriers, it makes more sense to use one courier for both packages, if time and capacity constraints can still be met. It is important that any general design for a system that includes planning is able to integrate these new technologies as they start to mature. Plan merging is still a developing research topic, and as such it is not mature enough to include a plan merging algorithm within the Decision Engine yet. However, the general concepts of plan merging have been considered in this design so that it may more easily be integrated at a later date.

We do not outline the manner of performing planning in a distributed manner here, since the focus of this phase of our research is the complex dynamic behavior of a single agent. However, a method for dealing with this that is directly applicable to the proposed system is described in Ref. [21].

5.4 Formal Representation

A primary goal of this research is to capture the central issues of continual planning in a manner that is fundamentally mathematical in nature. The comprehensive high-level design presented here uses formal methods to express the model at an operational level, capturing the behavior of the system as a whole. By using formal methods to analyze and design the proposed model, later development and implementation should be straightforward. Specifically, we use the Abstract State Machine (ASM) method to delineate the responsibilities of the system components.

5.4.1 Abstract State Machines

The ASM method is a formalism that is particularly suited to applied systems engineering. ASMs combine first-order structures, in order to represent states as arbitrary data structures, with state transition systems, in order to capture the dynamic behavior of a target system. They are capable of capturing concepts naturally and formally, such that the essential characteristics of the target system are expressed clearly. This clarity is useful when working in conjunction with domain experts, since it is easier for them to participate in the construction and validation of a system model. The ASM method includes a stepwise refinement process, allowing any part of the model to be defined at an appropriate level of detail or abstraction. ASMs are executable in principle, so may also be used in an empirical manner, such as by testing a model experimentally. In this way, they act as a bridge between specification methods and computational modeling. An authoritative explanation of ASMs is contained in Ref. [3].

5.4.2 CoreASM Environment

CoreASM is an executable specification language and tool environment that allows experimental analysis of ASM specifications. Its plug-in based architecture provides an extensible framework that can be augmented in a modular manner in order to meet the needs of the current application [22]. In our research, CoreASM is employed to test the Decision Engine ASM specifications through execution on example scenarios. A secondary outcome of pursuing this line of development with the Decision Engine is a method for using CoreASM in conjunction with existing Java code. Previously, only one-way communication from CoreASM to Java was possible. Now, CoreASM can be accessed from Java, allowing two-way interaction. This in turn makes it possible to run the Decision Engine as an ASM specification in conjunction with an existing system (in this case INFORM Lab, running in Java). This enables a “hardware-in-the-loop” type of development process, where high-level design decisions can be immediately tested through interaction with previously existing code.

5.4.3 Decision Engine ASM Specifications

An Abstract State Machine specification of the Decision Engine has been developed at a high level of abstraction. First, the domains, or central element types and sets, are listed in Figure 5.4.

Figure 5.4 ASM specification of the system domains.

Next, the rules that govern Goal Management are shown in Figure 5.5.

Figure 5.5 ASM specification of the Goal Management component.

In Figure 5.6, the primary rules related to the Plan Management component are presented. Note that the main Plan Management rule considers all of the goals in the goalPool (the currently active set of goals) in order to act upon (or create) the plans related to those goals; it also considers all of the plans in the planPool (the currently active set of plans) individually for appropriate updates.

Figure 5.6 ASM specification of the Plan Management component.

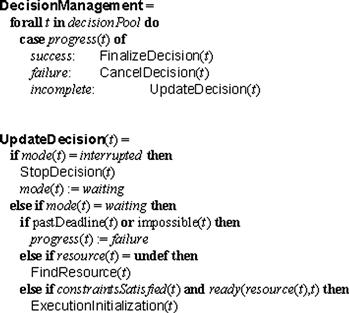

The Decision Management component in Figure 5.7 largely follows the rule for task management presented in Ref. [23]. Finally, a general description of the Evaluation component is contained in Figure 5.8. This component is the newest conceptually, and further development would be beneficial. While specific criteria for evaluating progress are not difficult to establish, such as comparing current location against the target destination in the case of movement, a general algorithm for evaluating progress and reacting to unexpected developments is still an open problem. In particular, it is challenging to determine which reaction to a partial failure is best for a specific situation, such as whether replanning is necessary or simply waiting will be sufficient to deal with the problem. Thus, the current specification for this component outlines the requirements for this component, and we consider a further refinement as an open (and interesting) problem.

Figure 5.7 ASM specification of the Decision Management component.

Figure 5.8 ASM specification of the Evaluation component.

5.5 Application

The target system for implementation, INFORM Lab, combines higher-level distributed dynamic information fusion, distributed dynamic resource management, communication strategies, and configuration management in a marine safety and security simulation environment. In particular, INFORM Lab simulates the directed actions of assets allocated to the Marine Security Operations Centre based at Esquimalt, British Columbia. For example, the system is capable of investigating and identifying suspicious behavior that may be smuggling in the environs of the Strait of Georgia, including Vancouver Island and the British Columbia mainland coast. It was designed and developed at MDA Corporation, Richmond, B.C., Canada. It has served as a source for establishing the requirements of a continual planning layer, and has shown such a layer could fit into a larger simulation engine or decision support system. In the future, INFORM Lab can act as a test bed for testing the implementation of the continual planning layer in its role as a Decision Engine. This will be accomplished through an interface that bridges the two systems and allows for the interchange of information. The eventual goal of this project is for a well-tested implementation of the Decision Engine to act as an improved decision processor for INFORM Lab. Our research is also motivated by the potential future use of an application like INFORM Lab being used by human operators in a decision support role for real-world activities.

5.6 Conclusions and Future Work

This chapter has presented a conceptual design for a Decision Engine that can work within the context of an OODA-based control loop. Such an engine can be used for determining and controlling agent actions in any application for which making decisions is complex and must be done in an online manner. This fulfills the requirements of a continual planning system for marine safety and security operations, where critical decisions must be made quickly in a complex environment and with imperfect knowledge. However, many other activities that do not take place in an isolated environment (e.g. a factory) fall into the same category. Thus, we argue that it is appropriate for numerous real-world operations, including situations with autonomous agents (as a controller for robots or in simulations) and/or human beings (when used in a decision support role). Currently, this Decision Engine is being used in conjunction with INFORM Lab for research purposes, and we expect that it will widen the range of possible situations that can be represented and handled within that simulation environment.

One of the main benefits of the approach presented in this chapter is the use of the Hybrid HTN framework to deal with online and real-time situations. By developing a framework in CoreASM that can handle different planners, subplanners, and schedulers, it will be possible to integrate different implemented planners (including JSHOP2 for HTN) and INFORM Lab. In this way, a varied group of decisions that require different algorithms for computing their plans can be processed together in order to satisfy a goal. The system proposed here explicitly captures, at a high level, the qualities that a continual planner needs to possess in order to succeed in real-world operations in a dynamic environment with the presence of uncertainty, and it is meant to be suitable as a blueprint in the future for applications in similar simulation environments.

Acknowledgments

The work presented here has been funded by MDA Corporation and MITACS through the MITACS-Accelerate internship program.

References

1. Ghallab M, Nau D, Traverso P. Automated Planning: Theory and Practice. first ed. Morgan Kaufmann 2004.

2. Natural Resources Canada. The Atlas of Canada: Coastline and Shoreline. (online). <http://atlas.nrcan.gc.ca/site/english/learningresources/facts/coastline.html> (accessed 01.09.11).

3. Börger E, Stärk R. Abstract State Machines: A Method for High-Level System Design and Analysis. Springer 2003.

4. Li Z, Leung H, Valin P, Wehn H. High level data fusion system for CanCoastWatch. Quebec City, Canada: Proc. 10th Int. Conf. on Information Fusion; 2007.

5. M. Pollack, J. Horty, There’s more to life than making plans: Plan management in dynamic, multiagent environments, AI Mag. 20 (4) (19990 71–83.

6. Hunter A, Happe J, Wei W, et al. Execution Management and Plan Adaptation. Final Report, DRDC Toronto, CR 2008-123 2008.

7. Nau D, Ilghami O, Kuter U, Murdock J, Wu D, Yaman F. SHOP2: An HTN planning system. J Artif Intell Res. 2003;20:379–404.

8. Ilghami O. Documentation for JSHOP2. Department of Computer Science, University of Maryland, CS-TR-4694 2005.

9. Hayashi H, Tokura S, Ozaki F. Towards real-world HTN planning agents. Knowl Process Decis Mak Agent Syst. 2009;170:13–41.

10. Goldman R. A semantics for HTN methods. Thessaloniki, Greece: Proc. 19th Int. Conf. on Automated Planning and Scheduling; 2009.

11. Ayan N, Kuter U, Yaman F, Goldman R. HOTRiDE: Hierarchical Ordered Task Replanning in Dynamic Environments. Proc. of the ICAPS Workshop on Planning and Plan Execution for Real-World Systems: Principles and Practices for Planning in Execution 2007.

12. Hayashi H, Cho K, Ohsuga A. Speculative computation and action execution in multi-agent systems. Electron Notes Theor Comput Sci. 2002;70(5):153–166.

13. Hayashi H, Cho K, Ohsuga A. A new HTN planning framework for agents in dynamic environments. Comput Log Multi Agent Syst. 2005;3259:55–56.

14. desJardins M, Durfee E, Ortiz C, Wolverton M. A survey of research in distributed, continual planning. AI Magaz. 1999;20(4):13–22.

15. Durfee E. Distributed problem solving and planning. Multi Agent Syst Appl. 2006;2086:118–149.

16. Komenda A, Vokřínek J, Pěchouček M, Wickler G, Dalton J, Tate A. Distributed planning and coordination in non-deterministic environments. Budapest, Hungary: Proc. 8th Int. Conf. on Autonomous Agents and Multiagent Systems (AAMAS); 2009.

17. Sahli N, Moulin B. EKEMAS, an agent-based geo-simulation framework to support continual planning in the real-word. Appl Intell. 2009;31(2):188–209.

18. Santos E, DeLoach S, Cox M. Achieving dynamic, multi-commander, multi-mission planning and execution. Appl Intell. 2006;25(3):335–357.

19. Brenner M, Nebel B. Continual planning and acting in dynamic multiagent environments. Auton Agents Multi Agent Syst. 2009;19(3):297–331.

20. Erol K, Hendler J, Nau D. Semantics for hierarchical task-network planning. University of Maryland Computer Science Technical Report, CS-TR-3239 1994.

21. Glässer U, Jackson P, Khalili Araghi A, Yaghoubi Shahir H, Wehn H. A collaborative decision support model for marine safety and security operations. Brisbane, Australia: Proc. 3rd IFIP TC 10 Int. Conf. on Biologically-Inspired Collaborative Computing (BICC); 2010.

22. Farahbod R, Glässer U. The CoreASM modeling framework, Software: Practice and Experience. Special Issue: Tool Building in Formal Methods. 2011;41(2):167–178.

23. Glässer U, Jackson P, Khalili Araghi A, Yaghoubi Shahir H. Intelligent decision support for marine safety and security operations. Vancouver, Canada: Proc. IEEE Int. Conf. on Intelligence and Security Informatics (ISI); 2010.

1Based on: P. Jackson, U. Glässer, H. Yaghoubi Shahir, and H. Wehn. An Extensible Decision Engine for Marine Safety and Security. IEEE Int. Conf. on Intelligence and Security Informatics (ISI), 2011: 54–59, with permission.This work has been supported in part by MDA Corporation and MITACS through the MITACS-Accelerate internship program.